CSC/ECE 506 Spring 2012/10b sr: Difference between revisions

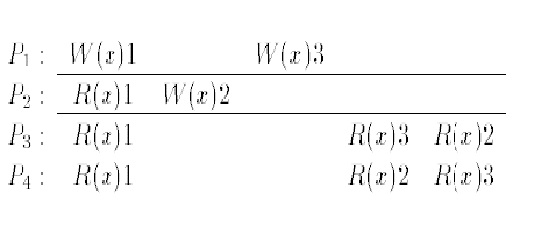

Revision as of 14:11, 4 April 2012

Use of consistency models in current multiprocessors

The memory consistency model of a shared memory system determines the order in which memory operations appear to the programmer to execute. This article describes how consistency is used in multiprocessors today and then examines the details of the popular consistency models currently in use. The impact of these models on multiprocessor performance is also discussed. The article closes with a discussion of how the consistency models perform on larger multiprocessors.

Introduction

Many modern computer systems and most multicore chips support shared memory in hardware. In a shared memory system, each of the processor cores may read and write to a single shared address space. These designs seek various goodness properties, such as high performance, low power, and low cost. Of course, it is not valuable to provide these goodness properties without first providing correctness. Correct shared memory seems intuitive at a hand-wave level, but there are subtle issues in even defining what it means for a shared memory system to be correct, as well as many subtle corner cases in designing a correct shared memory implementation. Moreover, these subtleties must be mastered in hardware implementations, where bug fixes are expensive.

It is the job of consistency to define shared memory correctness. Consistency definitions provide rules about loads and stores (or memory reads and writes) and how they act upon memory. Ideally, consistency definitions would be simple and easy to understand. However, defining what it means for shared memory to behave correctly is more subtle than defining the correct behavior of, for example, a single-threaded processor core. The correctness criterion for a single processor core partitions behavior between one correct result and many incorrect alternatives. This is because the processor’s architecture mandates that the execution of a thread transforms a given input state into a single well-defined output state, even on an out-of-order core. Shared memory consistency models, however, concern the loads and stores of multiple threads and usually allow many correct executions while disallowing many incorrect ones. The possibility of multiple correct executions is due to the ISA allowing multiple threads to execute concurrently, often with many possible legal interleavings of instructions from different threads. The multitude of correct executions complicates the erstwhile simple challenge of determining whether an execution is correct. Nevertheless, consistency must be mastered to implement shared memory and, in some cases, to write correct programs that use it.

Consistency in current-day multiprocessors

Consistency models used

Address translation aware memory consistency

These memory consistency models define the behavior of operations (loads, stores, memory barriers, etc.) on physical addresses and virtual addresses. The two levels of memory consistency that are aware of address translation (AT) are described below:

Physical address memory consistency (PAMC)

Correct PAMC is required for unmapped code to work correctly. Unmapped software, including the boot code and the part of the system software that manages AT, relies upon PAMC. Implementing PAMC is the responsibility of the hardware, and its behavior is specified precisely in the architecture manual. It is not too difficult to adapt an AT-oblivious consistency model as the specification of PAMC.

Example:

The PAMC model could be sequential consistency (SC). In such a case, the interface would specify that (i) there must exist a total order of all loads and stores to physical addresses that respects the program order of each thread and (ii) the value of each load is equal to the value of the most recent store to that physical address in the total order.
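The two SC conditions above can be checked mechanically for very small histories. The following is a minimal sketch, not a practical verifier: it tries every permutation of the operations, so it is only usable for a handful of operations. The function name, the tuple encoding of operations, and the assumption that memory initially holds 0 are all illustrative choices, not part of any architecture manual.

```python
from itertools import permutations

def is_sequentially_consistent(threads, initial=0):
    """Brute-force SC check for a tiny history.

    threads: list of per-thread operation lists, each in program order.
    Each operation is ("W", addr, value_written) or ("R", addr, value_observed).
    """
    # Tag every operation with its thread id and its position in that thread.
    ops = [(t, i, op) for t, thread in enumerate(threads) for i, op in enumerate(thread)]
    for order in permutations(ops):
        next_index = [0] * len(threads)  # next program-order position per thread
        memory = {}
        legal = True
        for t, i, (kind, addr, value) in order:
            # Condition (i): the total order must respect program order.
            if i != next_index[t]:
                legal = False
                break
            next_index[t] += 1
            if kind == "W":
                memory[addr] = value
            elif memory.get(addr, initial) != value:
                # Condition (ii): a load returns the most recent store.
                legal = False
                break
        if legal:
            return True
    return False

# Both threads store, then load the other location and see the old value.
# No total order can explain this outcome, so it is not SC.
forbidden = [[("W", "x", 1), ("R", "y", 0)],
             [("W", "y", 1), ("R", "x", 0)]]
allowed = [[("W", "x", 1), ("R", "y", 0)],
           [("W", "y", 1), ("R", "x", 1)]]
print(is_sequentially_consistent(forbidden))  # False
print(is_sequentially_consistent(allowed))    # True
```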

Virtual address memory consistency (VAMC)

Correct VAMC is required for mapped code to work correctly.

Although adapting an AT-oblivious consistency model for PAMC is straightforward, there are a few challenges when adapting an AT-oblivious consistency model for VAMC:

- synonyms - Multiple virtual addresses may map to the same physical address. Suppose two virtual addresses VA1 and VA2 map to the same physical address PA. SC requires that the value of a load is equal to the value of the most recent store to the same address. A naive definition of VAMC that ignores the level of indirection introduced by AT would treat VA1 and VA2 as different addresses, so a load from VA2 would not be required to return the value of the most recent store to VA1. To overcome this challenge, we re-formulate AT-oblivious consistency models for VAMC by applying the model to synonym sets of virtual addresses rather than individual addresses. For instance, we can define SC for VAMC as follows: there must exist a total order of all loads and stores to virtual addresses that respects program order and in which each load gets the value of the most recent store to any virtual address in the same virtual address synonym set. Incorporating synonyms explicitly in the consistency model allows programmers to reason about the ordering of accesses to virtual addresses.

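- mapping and permission changes - Another challenge is that the set of memory operations at the VAMC level is richer than at the PAMC level: besides loads and stores, it includes operations that change address mappings and access permissions.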

- load/store side effects - Yet another challenge in specifying VAMC is that loads and stores to virtual addresses have certain side effects. The AT system includes status bits, such as the accessed and dirty bits, for each page table entry. These status bits are part of the architectural state, and the ordering of updates to those bits must thus be specified in VAMC. To achieve this, we add two new operations to the specification tables: Ld-sb (load’s impact on status bits) and St-sb (store’s impact on status bits).
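The synonym problem from the first item can be illustrated with a small sketch. It executes one fixed total order of operations twice: once keyed by virtual address (the naive view) and once keyed by synonym set. The page table contents, the address names, and the helper functions are hypothetical and only serve the illustration; mapping changes and status bits are not modeled.

```python
# Hypothetical page table: VA1 and VA2 are synonyms for the same physical address.
PAGE_TABLE = {"VA1": "PA", "VA2": "PA", "VA3": "PB"}

def run(ops, key_of, initial=0):
    """Execute ops in the given total order and return the values the loads observe."""
    memory, loaded = {}, []
    for kind, va, value in ops:
        key = key_of(va)
        if kind == "St":
            memory[key] = value
        else:  # "Ld"
            loaded.append(memory.get(key, initial))
    return loaded

def naive_key(va):
    # Naive VAMC: every virtual address is treated as an independent location.
    return va

def synonym_key(va):
    # Synonym-aware VAMC: all VAs that map to the same PA form one synonym set.
    return PAGE_TABLE[va]

history = [("St", "VA1", 7), ("Ld", "VA2", None)]
print(run(history, naive_key))    # [0]  the store through VA1 is invisible via VA2
print(run(history, synonym_key))  # [7]  the load sees the store to its synonym
```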

Causal consistency

Hutto and Ahamad <ref>P.W. Hutto and M. Ahamad. Slow memory: Weakening consistency to enhance concurrency in distributed shared memories. In Proceedings of the 10th International Conference on Distributed Computing Systems, pages 302–311, May 1990.</ref> introduced causal consistency (CC). Lamport <ref>Leslie Lamport. Time, clocks, and the ordering of events in a distributed system. Communications of the ACM, 21(7):558–565, 1978.</ref> defined the notion of potential causality to capture the flow of information in a distributed system. This notion can be applied to a memory system by interpreting a write as a message-send event and a read as a message-read event. A memory is causally consistent if all processors agree on the order of causally related events. Causally unrelated events (concurrent events) can be observed in different orders.

The example shown here is a legal execution history under CC but not under SC. Note that W(x)1 and W(x)2 are causally related as P2 observed the first write by P1. Furthermore, P3 and P4 observe the accesses W(x)2 and W(x)3 in different orders, which would not be legal in SC.
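One common way to decide whether two writes are causally related or concurrent is to attach vector clocks to events. The sketch below applies that technique to a history assumed to match the example: P1 performs W(x)1 and later W(x)3, while P2 reads 1 and then performs W(x)2. The exact history in the figure is not reproduced in the text, so which processor issues W(x)3 is an assumption, as are all function names.

```python
def tick(clock, pid):
    """Advance the component of `clock` that belongs to processor `pid`."""
    c = list(clock)
    c[pid] += 1
    return tuple(c)

def merge(a, b):
    """Component-wise maximum, applied when a read observes a write."""
    return tuple(max(x, y) for x, y in zip(a, b))

def happened_before(a, b):
    """True if the event with clock a causally precedes the event with clock b."""
    return all(x <= y for x, y in zip(a, b)) and a != b

def concurrent(a, b):
    return not happened_before(a, b) and not happened_before(b, a)

P1, P2 = 0, 1
w_x1 = tick((0, 0), P1)               # P1: W(x)1 -> (1, 0)
w_x3 = tick(w_x1, P1)                 # P1: W(x)3 -> (2, 0)
r_x1 = tick(merge((0, 0), w_x1), P2)  # P2: R(x)1 -> (1, 1), observes W(x)1
w_x2 = tick(r_x1, P2)                 # P2: W(x)2 -> (1, 2)

print(happened_before(w_x1, w_x2))  # True: every processor must see 1 before 2
print(concurrent(w_x2, w_x3))       # True: P3 and P4 may see 2 and 3 in either order
```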

Delta consistency

The delta consistency model states that after a fixed time period δ, an update is propagated through the system and all replicas will be consistent. In other words, barring a short bounded interval after a modification, the result of any read operation is consistent with a read on the original copy of an object. If an object is modified, the read will not be consistent during the short period of time following its modification. Once the fixed time period elapses, the modification is propagated and the read is now consistent.
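The bounded staleness described above can be sketched with a logical clock. This toy model assumes the worst case, in which a write becomes visible at the replica exactly δ time units after it is applied to the original copy, and it assumes writes are recorded in increasing time order. The class and method names are hypothetical.

```python
class DeltaStore:
    """Original copy plus one replica that lags behind by `delta` time units."""

    def __init__(self, delta, initial=0):
        self.delta = delta
        # (write time, value) pairs in time order; the initial value is always visible.
        self.log = [(float("-inf"), initial)]

    def write(self, time, value):
        self.log.append((time, value))

    def read_original(self, time):
        # The original copy reflects every write made up to `time`.
        return [v for t, v in self.log if t <= time][-1]

    def read_replica(self, time):
        # The replica reflects only writes that are at least `delta` old.
        return [v for t, v in self.log if t + self.delta <= time][-1]

store = DeltaStore(delta=5)
store.write(10, 42)
print(store.read_original(12))  # 42
print(store.read_replica(12))   # 0   still inside the inconsistency window
print(store.read_replica(15))   # 42  delta has elapsed, replica is consistent
```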

Entry consistency

This consistency model has been designed to be used with critical sections. Here, the programmer needs to use acquire and release at the start and end of each critical section, just as in both variants of release consistency. However, it also requires every ordinary shared variable to be associated with a synchronization variable such as a lock or a barrier. If the elements of an array need to be accessed independently in parallel, then each element of the array must be associated with its own lock. When an acquire is done on a synchronization variable, only those ordinary shared variables guarded by that synchronization variable are made consistent. Release consistency does not associate shared variables with locks or barriers, and at acquire time it has to determine empirically which variables it needs. This is where entry consistency differs from release consistency.

Formally, a memory exhibits entry consistency if the following conditions are met:

- An acquire access of a synchronization variable is not allowed to perform with respect to a process until all updates to the guarded shared data have been performed with respect to that process.

- Before an exclusive mode access to a synchronization variable by a process is allowed to perform with respect to that process, no other process may hold the synchronization variable, not even in nonexclusive mode.

- After an exclusive mode access to a synchronization variable has been performed, any other process's next nonexclusive mode access to that synchronization variable may not be performed until it has performed with respect to that variable's owner.
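The association between synchronization variables and the data they guard can be sketched as follows. This is a single-threaded toy model that covers exclusive mode only; nonexclusive accesses and real concurrency are left out. The `SyncVar` and `Process` classes and their methods are hypothetical names, not an existing API.

```python
class SyncVar:
    """Synchronization variable that carries the latest values of the data it guards."""

    def __init__(self, guarded):
        self.guarded = set(guarded)
        self.values = {}   # most recently released values of the guarded variables
        self.owner = None

class Process:
    def __init__(self, name):
        self.name = name
        self.local = {}    # this process's local copies of shared variables

    def acquire(self, sync):
        # Exclusive mode: no other process may hold the synchronization variable.
        assert sync.owner is None
        sync.owner = self
        # Only the variables guarded by `sync` are made consistent.
        self.local.update(sync.values)

    def write(self, sync, var, value):
        assert sync.owner is self and var in sync.guarded
        self.local[var] = value

    def release(self, sync):
        assert sync.owner is self
        # Publish the guarded variables so that the next acquirer sees them.
        sync.values = {v: self.local[v] for v in sync.guarded if v in self.local}
        sync.owner = None

lock_a, lock_b = SyncVar(["a"]), SyncVar(["b"])
p1, p2 = Process("P1"), Process("P2")

for lock, var, value in [(lock_a, "a", 1), (lock_b, "b", 2)]:
    p1.acquire(lock)
    p1.write(lock, var, value)
    p1.release(lock)

p2.acquire(lock_a)
print(p2.local)  # {'a': 1}  b is not updated because P2 never acquired lock_b
```

Carrying the guarded values with the synchronization variable is one simple way to make the acquire sufficient on its own; an actual implementation could instead fetch the data from the previous owner.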

Eventual consistency

Given a sufficiently long period of time over which no changes are sent, all updates can be expected to propagate eventually through the system. Thus, all the replicas will be consistent. Because consistency is reached only eventually, conflicts are likely and have to be resolved. There are three types of resolution:

- Read repair: The correction is done when a read finds an inconsistency. This slows down the read operation.

- Write repair: The correction is done when a write operation finds an inconsistency, slowing down the write operation.

- Asynchronous repair: The correction is not part of a read or write operation.
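Read repair, the first of the three options, can be sketched as below. The sketch assumes that each replica stores a (version, value) pair per key, that the highest version wins, and that at least one replica holds the key. These conventions and the function name are assumptions made for illustration; real systems use a variety of conflict resolution schemes.

```python
def read_with_repair(replicas, key):
    """Read `key` from every replica, return the newest value, and fix stale replicas."""
    # Each replica is a dict mapping key -> (version, value); a higher version is newer.
    newest = max((r[key] for r in replicas if key in r), key=lambda entry: entry[0])
    for r in replicas:
        if r.get(key) != newest:
            # The correction happens on the read path, which is why the read is slower.
            r[key] = newest
    return newest[1]

replicas = [{"k": (2, "new")}, {"k": (1, "old")}, {}]
print(read_with_repair(replicas, "k"))  # new
print(replicas)  # every replica now holds (2, 'new') for "k"
```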

Linearizability

(also known as strict or atomic consistency)

One-copy serializability

PRAM consistency

(also known as FIFO consistency)