CSC/ECE 506 Spring 2012/9a mm


Revision as of 16:00, 2 April 2012

Reducing Locking Overhead

Thread synchronization in a multiprocessor environment seeks to ensure memory consistency during concurrent execution. Several synchronization mechanisms exist, and most of them rest on providing atomicity. Atomicity is provided when a set of operations performed by one thread appears instantaneous to other threads and to the rest of the system. The operations are of course not physically instantaneous; they rely on locking other processes out of protected memory locations while a single thread performs its critical accesses. The overhead of thread synchronization can be significant, and this article discusses atomicity via locks and the optimizations that improve locking performance.

Locks

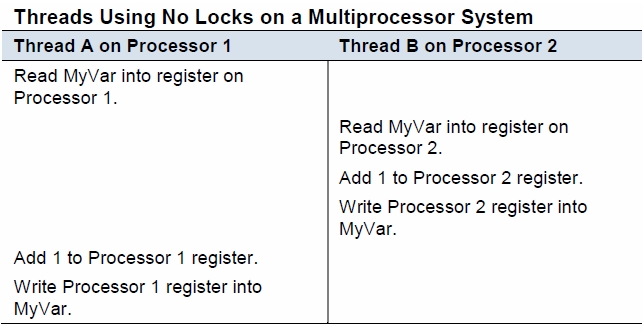

Locks are a mechanism for providing concurrency control among concurrently executing processes. They are needed whenever processes share data in anything other than a read-only manner, and whenever a set of operations executed by a process must complete atomically. A multiprocessor environment that fails to provide concurrency control can suffer correctness problems, as the figure demonstrates.

Consider two processes that update a shared variable MyVar with three processor instructions: read MyVar into a register, increment the register, and write the new value back to the memory location. The diagram shows two threads attempting to increment MyVar concurrently and the incorrect result that can follow when the three operations are not executed atomically. In the execution sequence shown, only Thread A's update is preserved, because it overwrites the result of Thread B.
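The lost update described above can be replayed deterministically in a single thread. The following is only a sketch: the names `reg_a`, `reg_b`, `replay_lost_update`, and `replay_serialized` are illustrative and do not come from the figure, and the local variables merely stand in for each thread's private register.

```c
/* Replays one problematic interleaving by hand, in a single thread.
   reg_a and reg_b stand in for the private registers of Thread A and
   Thread B (hypothetical names, chosen for this sketch). */
int replay_lost_update(int initial)
{
    int my_var = initial;   /* the shared variable MyVar        */

    int reg_a = my_var;     /* A: read MyVar into a register    */
    int reg_b = my_var;     /* B: read MyVar (same old value)   */
    reg_b = reg_b + 1;      /* B: increment its register        */
    my_var = reg_b;         /* B: write back                    */
    reg_a = reg_a + 1;      /* A: increment its stale copy      */
    my_var = reg_a;         /* A: write back, overwriting B     */

    return my_var;          /* initial + 1, not initial + 2     */
}

/* The same two updates when each read-increment-write sequence runs
   to completion before the other starts, as a lock would enforce. */
int replay_serialized(int initial)
{
    int my_var = initial;

    int reg_a = my_var;     /* Thread A: read      */
    reg_a = reg_a + 1;      /* Thread A: increment */
    my_var = reg_a;         /* Thread A: write     */

    int reg_b = my_var;     /* Thread B: read (sees A's update) */
    reg_b = reg_b + 1;      /* Thread B: increment */
    my_var = reg_b;         /* Thread B: write     */

    return my_var;          /* initial + 2 */
}
```

Starting from 0, the first function yields 1 and the second yields 2; the only difference between them is the order in which the six steps are allowed to interleave.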

Locks are usually constructed with hardware support, although methods such as Peterson's or Dekker's algorithms have been developed for environments where hardware primitives are not available.
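As an illustration of a software-only lock, here is a minimal sketch of Peterson's algorithm for exactly two threads. It assumes C11 `<stdatomic.h>` and uses its default sequentially consistent loads and stores only to keep the compiler and processor from reordering the accesses; no read-modify-write primitive is involved. The type and function names are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Peterson's lock for two threads, identified as 0 and 1. */
typedef struct {
    atomic_bool wants[2];   /* wants[i]: thread i wishes to enter   */
    atomic_int  turn;       /* which thread is let through on a tie */
} peterson_lock;

void peterson_init(peterson_lock *p)
{
    atomic_init(&p->wants[0], false);
    atomic_init(&p->wants[1], false);
    atomic_init(&p->turn, 0);
}

void peterson_acquire(peterson_lock *p, int self)   /* self is 0 or 1 */
{
    int other = 1 - self;
    atomic_store(&p->wants[self], true);    /* announce interest        */
    atomic_store(&p->turn, other);          /* let the other go first   */
    while (atomic_load(&p->wants[other]) &&
           atomic_load(&p->turn) == other) {
        /* spin until the other thread leaves or yields the turn */
    }
}

void peterson_release(peterson_lock *p, int self)
{
    atomic_store(&p->wants[self], false);   /* no longer interested */
}
```

Each thread would call `peterson_acquire` with its own index before touching the shared data and `peterson_release` afterwards. The sketch does not generalize beyond two threads.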

Atomic Primitives

Atomic primitives are instructions used directly by compilers and operating systems, and also inside library functions that implement locking in higher-level languages. They include operations such as atomic read-write, atomic swap, test-and-set, fetch-and-add, compare-and-swap, and Load-Link/Store-Conditional (LL/SC). For example, a C function that expresses the logic of compare-and-swap might look like this:

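    /* Logic only: nothing here makes the read, compare, and write atomic. */
    int compare_and_swap(int *reg, int oldval, int newval)
    {
        int old_reg_val = *reg;        /* read the current contents           */
        if (old_reg_val == oldval)     /* unchanged since the caller read it? */
            *reg = newval;             /* then store the new value            */
        return old_reg_val;            /* caller compares this against oldval */
    }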

This function shows the logic of the swap but does not by itself guarantee atomicity, so it does not yet address correctness. Compare-and-swap takes three parameters: the address of a memory location (reg), a value previously read from that location and remembered (oldval), and a new value calculated from the old one (newval). The memory location is tested, and if it still contains oldval, the new value is swapped in. The function returns the value it found, so the caller can tell whether the swap took place.
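One way to obtain the missing atomicity in portable C is the standard `<stdatomic.h>` header introduced in C11. The sketch below wraps `atomic_compare_exchange_strong` so that it returns the previous value, as the function above does, and then uses it in a retry loop. The names `atomic_cas` and `atomic_increment` are hypothetical; only the `<stdatomic.h>` calls are part of the standard library.

```c
#include <stdatomic.h>

/* Atomic counterpart of compare_and_swap: returns the value found in
   *reg, which equals oldval exactly when the swap took place. */
int atomic_cas(atomic_int *reg, int oldval, int newval)
{
    int expected = oldval;
    /* On success *reg becomes newval and expected is left unchanged.
       On failure *reg is untouched and expected receives the value
       that was actually found there. */
    atomic_compare_exchange_strong(reg, &expected, newval);
    return expected;
}

/* Lock-free increment built on atomic_cas: retry until no other
   thread has changed the counter between our read and our swap. */
int atomic_increment(atomic_int *counter)
{
    int seen = atomic_load(counter);
    for (;;) {
        int found = atomic_cas(counter, seen, seen + 1);
        if (found == seen)
            return seen + 1;    /* our update went in          */
        seen = found;           /* someone else won; try again */
    }
}
```

The retry loop is the usual pattern for applying compare-and-swap to a read-modify-write update: a failed swap means another thread intervened, so the new value is recomputed from what was actually found.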

Monitors

Some languages have mechanisms for synchronizing entire methods or collections of methods. Monitors are objects or modules designed to be used safely in a multiprogramming environment because they guarantee mutual exclusion: at any given time, only a single thread may be executing any of a monitor object's methods. Many languages support monitors, including Java, C#, Python and Ruby.

Monitors in Java

Monitors in Java are conceptual spaces that can be occupied by only one thread at a time. Entering the monitor space is termed entering the monitor. The monitor space has a conceptual inner region, and entering that region is called acquiring the monitor. Occupying the inner region is called owning the monitor, leaving it is called releasing the monitor, and leaving the monitor space altogether is called exiting the monitor. No more than one thread can own the monitor at any given time. The inner region typically contains the data and code that support the two types of synchronization available in Java: mutual exclusion and cooperation.

A monitor can contain many code regions, related or not, each of which must execute with a guarantee of atomicity with respect to other executing threads. Each code region has its own entry set, so a monitor has multiple points of entry, each associated with a particular region of code that must be synchronized for correctness. When a thread, in the course of its execution, arrives at a region controlled by the monitor, it is placed in the entry set of that region. If the monitor is not occupied, the thread acquires and then occupies the monitor and continues executing that region. If the monitor is occupied, the thread is forced to wait, along with any other waiting threads, for the occupying thread to release the monitor. All waiting threads then compete for the opportunity to acquire the monitor.

Mutual exclusion is guaranteed by the monitor because no more than one thread is allowed in any region of a particular monitor. Cooperation between threads can be orchestrated with a "Wait and Notify" (or "Signal and Continue") monitor. In this type of monitor, a thread that occupies the monitor can execute a wait command, which causes it to release the monitor and enter a wait set. The thread stays suspended in the wait set until it receives a notify command and is then able to occupy the monitor again according to the protocol already described. A thread that executes a notify command (the signal) continues to occupy the monitor in its normal course of execution until it releases the monitor or suspends itself with a wait command.
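C has no built-in monitors, but the wait-and-notify protocol described above can be approximated with a POSIX mutex and condition variable. This is a substitute technique for illustration, not the Java mechanism itself: the mutex plays the role of monitor ownership and the condition variable plays the role of the wait set. The `mailbox` type and its functions are hypothetical names.

```c
#include <pthread.h>
#include <stdbool.h>

/* A one-slot mailbox guarded in the style of a monitor. */
typedef struct {
    pthread_mutex_t lock;     /* holding this = owning the monitor */
    pthread_cond_t  changed;  /* stands in for the wait set        */
    bool            full;
    int             value;
} mailbox;

void mailbox_init(mailbox *m)
{
    pthread_mutex_init(&m->lock, NULL);
    pthread_cond_init(&m->changed, NULL);
    m->full = false;
    m->value = 0;
}

void mailbox_put(mailbox *m, int v)
{
    pthread_mutex_lock(&m->lock);               /* acquire the monitor */
    while (m->full)                             /* wait: give up the   */
        pthread_cond_wait(&m->changed, &m->lock); /* monitor and sleep */
    m->value = v;
    m->full = true;
    pthread_cond_broadcast(&m->changed);        /* notify, then continue */
    pthread_mutex_unlock(&m->lock);             /* release the monitor   */
}

int mailbox_take(mailbox *m)
{
    pthread_mutex_lock(&m->lock);
    while (!m->full)                            /* re-check after waking */
        pthread_cond_wait(&m->changed, &m->lock);
    int v = m->value;
    m->full = false;
    pthread_cond_broadcast(&m->changed);
    pthread_mutex_unlock(&m->lock);
    return v;
}
```

The notifying thread keeps the mutex after `pthread_cond_broadcast` and gives it up only at `pthread_mutex_unlock`, which matches the "Signal and Continue" behavior. Because a woken thread must compete for the mutex again, each wait sits inside a `while` loop that re-checks its condition.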

The figure on the right shows the monitor as the space inclusive of the inner region, which includes protected data and code, as well as the entry and wait sets. The diagram also numbers the different entry and exit opportunities and the names associated with each status change.

Locking Objects

In the JVM all objects and classes are logically associated with a monitor. Associated monitors protect an object's instance variables and a class's class variables. The JVM associates a lock with each object and class.

Problems with Locks

Thin Locks

Biased Locks

One of the techniques introduced earlier for testing and capturing a lock is compare-and-swap (CAS). Such atomic instructions are expensive and consume many bus cycles; on their own they can account for a significant percentage of a processor's computing time and resources. (The effect depends on the processor architecture as well as on the VM implementation; IBM's Java VM, for instance, is less prone to it than Oracle's. Recent processors, such as the Intel Core family, have also been optimized for this task.) Because of its architecture and the way it is used, Java relies on a certain amount of locking, and that amount has a large effect on the running time of every program. Because of the way spinning behaves, it is not uncommon (ref) for the threaded execution of a program to be less efficient than the serial one, with latencies growing exponentially with the number of threads.

One proposed mitigation builds on an observation about how programs distribute their lock acquisitions: in most cases, because of the way programs are structured, one thread acquires a given lock far more often than the others, sometimes by several orders of magnitude. So why not make acquisition easier for that thread? The idea behind biased locks is not to release the lock once that thread has finished its task. If, as expected, the same thread comes back for the same lock, it finds the lock ready and avoids the expensive CAS operation.

The synchronization process has four steps, which normally repeat in a cycle:

- Remainder, where a thread runs outside the critical section until it finds that it must enter one and prepares to do so.

- Acquire, where a thread competes for and obtains a lock using an atomic instruction such as CAS, and/or barriers.

- Critical Section, where a thread executes some functions with mutual exclusion.

- Release, where the thread releases the lock for others to acquire.
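The four steps can be sketched with a simple spin lock whose Acquire step uses CAS. This is a minimal illustration assuming C11 `<stdatomic.h>`; it is an ordinary spin lock, not a biased lock, and the names `spinlock`, `spin_acquire`, `spin_release`, and `locked_add` are hypothetical.

```c
#include <stdatomic.h>

typedef struct {
    atomic_int held;    /* 0 = free, 1 = held */
} spinlock;

void spin_init(spinlock *l)
{
    atomic_init(&l->held, 0);
}

/* Acquire: spin until the CAS changes held from 0 to 1. */
void spin_acquire(spinlock *l)
{
    int expected = 0;
    while (!atomic_compare_exchange_weak(&l->held, &expected, 1))
        expected = 0;   /* a failed CAS stored the observed value here */
}

/* Release: a plain atomic store is enough to hand the lock back. */
void spin_release(spinlock *l)
{
    atomic_store(&l->held, 0);
}

/* One pass through the cycle. The Remainder step is whatever code
   the caller runs before and after this function. */
int locked_add(spinlock *l, int *shared, int delta)
{
    spin_acquire(l);            /* Acquire          */
    *shared += delta;           /* Critical Section */
    int result = *shared;
    spin_release(l);            /* Release          */
    return result;
}
```

Every call to `spin_acquire` pays for at least one CAS even when no other thread is competing, which is the cost that biased locking tries to avoid for the thread that takes the lock most often.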

See Also

References

- Thin Locks: Featherweight Synchronization for Java. Bacon et al: http://www.research.ibm.com/people/d/dfb/papers/Bacon98Thin.pdf

- David's Dice Weblog: Biased Locking in HotSpot. Dice: https://blogs.oracle.com/dave/entry/biased_locking_in_hotspot

- Java Locks: Analysis and Acceleration (Thesis). Kiyokuni Kawachiya: http://www.research.ibm.com/trl/people/kawatiya/Kawachiya05phd.pdf

- Reducing Biased Lock Revocation By Learning. Rogers, Iyengar: http://www.cs.man.ac.uk/%7Eirogers/Reducing_Biased_Lock_Revocation_By_Learning.pdf

- Quickly Reacquirable Locks. Dice, Moir and Scherer: http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf

- Implementing fast java[tm] monitors with relaxed-locks. Dice: http://static.usenix.org/event/jvm01/full_papers/dice/dice.pdf

Quiz

- Which one of these actions is not part of the locking process?

- Acquire()

- Spinning()

- Release()