CSC/ECE 506 Spring 2012/9a mm: Difference between revisions


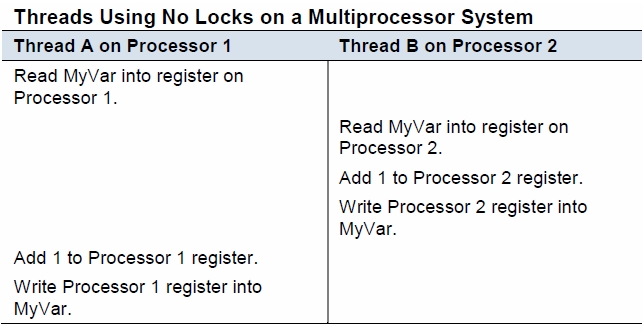

[[File:correctness.jpg|400px|thumb|left|No concurrency control]] Consider two processes that access and write a shared variable MyVar with three processor instructions: Read MyVar into a register, increment MyVar, and write the new value back to the memory location. The diagram shows two processes attempting to concurrently increment MyVar and the incorrectness can follow when the three operations are not executed atomically. Only the Thread A update is preserved in the execution sequence shown as it overwrites the results of Thread B. | [[File:correctness.jpg|400px|thumb|left|No concurrency control]] Consider two processes that access and write a shared variable MyVar with three processor instructions: Read MyVar into a register, increment MyVar, and write the new value back to the memory location. The diagram shows two processes attempting to concurrently increment MyVar and the incorrectness can follow when the three operations are not executed atomically. Only the Thread A update is preserved in the execution sequence shown as it overwrites the results of Thread B. | ||

Locks are typically constructed with hardware support although methods such as Peterson's or Dekker's algorithms have been developed | Locks are typically constructed with hardware support although methods such as Peterson's or Dekker's algorithms have been developed for environments where hardware primitives are not available. | ||

===Monitors=== | ===Monitors=== | ||

===Thin Locks=== | ===Thin Locks=== | ||

Revision as of 13:41, 1 April 2012

Reducing Locking Overhead

Thread synchronization in a multiprocessor environment seeks to ensure memory consistency during concurrent execution. Several mechanisms exist to address synchronization, and central to most of them is the provision of atomicity. Atomicity has been provided when a set of operations performed by one thread appears instantaneous to other threads and to the rest of the system. The operations are of course not physically instantaneous; instead, other processes are locked out of the protected memory locations while a single thread performs its critical accesses. The overhead of providing thread synchronization can be significant, and the aim of this article is to discuss atomicity via locks and optimizations that improve locking performance.

Locks

Locks are a mechanism for providing concurrency control among concurrently executing processes. They are needed when multiple processes share data that at least one of them writes (read-only sharing needs no lock), and when a set of operations executed by a process must be performed atomically. A multiprocessor environment that fails to provide concurrency control can suffer correctness problems, as demonstrated in the figure below.

[Figure: No concurrency control]

Consider two threads that update a shared variable MyVar using three processor instructions: read MyVar into a register, increment the register, and write the new value back to the memory location. The diagram shows both threads attempting to increment MyVar concurrently, and the incorrect result that can follow when the three operations are not executed atomically. In the execution sequence shown, only Thread A's update is preserved, because its write overwrites the result of Thread B.
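The lost update described above can be reproduced deterministically. The following is a minimal sketch, not the article's own code: `lost_update`, `locked_increment`, and the two schedules are hypothetical names introduced for illustration. The first function replays the read, increment, and write steps of Thread A and Thread B in a chosen order; the second shows the same increment protected by a lock from Python's standard `threading` module.

```python
import threading

def lost_update(schedule):
    """Replay the read/increment/write steps in the given thread order.

    `schedule` lists which thread executes its next step; each thread
    must appear exactly three times (hypothetical helper for illustration).
    """
    memory = {"MyVar": 0}
    registers = {"A": 0, "B": 0}
    next_step = {"A": 0, "B": 0}
    for thread in schedule:
        step = next_step[thread]
        if step == 0:
            registers[thread] = memory["MyVar"]   # read MyVar into a register
        elif step == 1:
            registers[thread] += 1                # increment the register
        else:
            memory["MyVar"] = registers[thread]   # write the value back
        next_step[thread] = step + 1
    return memory["MyVar"]

# Both threads read 0; Thread B writes 1, then Thread A overwrites it with 1.
INTERLEAVED = ["A", "B", "A", "B", "B", "A"]
# Thread A finishes all three steps before Thread B starts.
SERIAL = ["A", "A", "A", "B", "B", "B"]

def locked_increment(n_threads=2, iterations=1000):
    """Increment a shared counter from several threads under one lock."""
    lock = threading.Lock()
    shared = {"MyVar": 0}

    def worker():
        for _ in range(iterations):
            with lock:                 # the three steps run as one atomic unit
                shared["MyVar"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["MyVar"]
```

With the interleaved schedule one of the two increments is lost, while the serial schedule and the locked version both account for every increment.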

Locks are typically constructed with hardware support, although software-only methods such as Peterson's or Dekker's algorithms have been developed for environments where hardware primitives are not available.
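As an illustration of a lock built without hardware primitives, here is a minimal sketch of Peterson's algorithm for two threads. The class and function names (`PetersonLock`, `run_peterson_demo`) are hypothetical. The sketch assumes a standard CPython interpreter, where the global interpreter lock makes the reads and writes below appear in program order. On real multiprocessor hardware that reorders memory operations, the algorithm would additionally need memory fences.

```python
import threading
import time

class PetersonLock:
    """Two-thread mutual exclusion using only shared reads and writes."""

    def __init__(self):
        self.flag = [False, False]  # flag[i] is True while thread i wants in
        self.turn = 0               # which thread waits if both want in

    def acquire(self, me):
        other = 1 - me
        self.flag[me] = True        # announce intent to enter
        self.turn = other           # defer to the other thread
        while self.flag[other] and self.turn == other:
            time.sleep(0)           # busy-wait, yielding the interpreter

    def release(self, me):
        self.flag[me] = False       # leave the critical section


def run_peterson_demo(iterations=500):
    """Two threads increment a shared counter under the Peterson lock."""
    lock = PetersonLock()
    shared = {"MyVar": 0}

    def worker(me):
        for _ in range(iterations):
            lock.acquire(me)
            shared["MyVar"] += 1    # critical section
            lock.release(me)

    threads = [threading.Thread(target=worker, args=(i,)) for i in (0, 1)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["MyVar"]
```

The thread identifier passed to `acquire` and `release` must be 0 or 1. The algorithm as sketched here supports exactly two participants, which is one reason hardware-supported locks are usually preferred.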