CSC/ECE 506 Spring 2012/6b pa


[[Image:writethrough_1.png|frame|thumb|Figure 4. Example of ASO]]


Revision as of 18:27, 5 March 2012

Introduction

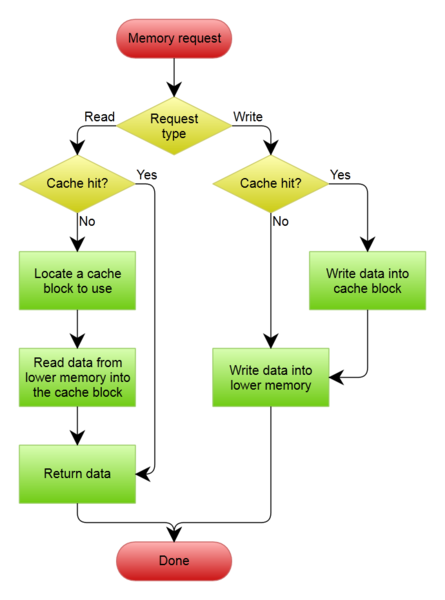

Ideally, on a write miss the processor does not need to wait until the write completes, because it is not asking for any data. Hence, instead of stalling the processor, we can perform the write in the background: the data is written into a write buffer, and the write buffer takes responsibility for performing the write to the memory hierarchy. Not stalling the processor on a write miss works well except when the write miss is followed by a read request to the same block while the write is still pending in the write buffer. The solution is to check the write buffer for any pending writes to the requested block before requesting the data block from the next level of the memory hierarchy. The following sections describe how to solve this issue when processors share a common memory, so that other processors can notice that the line is in fact in the write buffer and not in memory.
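The read-after-write check described above can be sketched in a few lines. This is a minimal single-processor model, not a description of any real design; the class name `WriteBuffer` and its methods are hypothetical, and a plain dictionary stands in for the next level of the memory hierarchy.

```python
from collections import OrderedDict

class WriteBuffer:
    """Hypothetical write buffer keyed by block address (illustration only)."""

    def __init__(self, memory):
        self.memory = memory          # dict: block address -> value (next level)
        self.pending = OrderedDict()  # writes not yet sent to memory, oldest first

    def write(self, addr, value):
        # The processor does not stall: the write is only queued here.
        # A later write to the same block overwrites the queued value.
        self.pending[addr] = value

    def read(self, addr):
        # Check pending writes first so a read never returns stale memory data.
        if addr in self.pending:
            return self.pending[addr]
        return self.memory.get(addr, 0)

    def drain_one(self):
        # Background step: retire the oldest pending write to memory.
        if self.pending:
            addr, value = self.pending.popitem(last=False)
            self.memory[addr] = value

memory = {0x40: 1}
wb = WriteBuffer(memory)
wb.write(0x40, 7)
value_before_drain = wb.read(0x40)  # forwarded from the write buffer
stale_in_memory = memory[0x40]      # memory still holds the old value
wb.drain_one()
value_after_drain = wb.read(0x40)   # now served from memory
```

Without the lookup in `read`, the load issued before `drain_one` would have returned the old value held in memory.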

Issue with Write Buffer

Software Coherency through Write Policies

Write-Through

In a write-through cache, the memory is updated as the cache is updated with a write. This type of update allows invalidation of cache lines to be done with no stalls because the memory already has all the updated values. However, this causes more traffic because memory is being updated every time there is a change.

Writeback

In a writeback cache, the cache is updated with a write but memory is not. Because of this, memory can contain stale values. When a block is replaced in the cache, memory is updated accordingly. This policy causes more stalls than the write-through policy above: when an invalidation needs to occur, memory might not have the most up-to-date values, so a cache flush operation occurs in which every line in the cache is written to memory. Writeback therefore has more traffic during invalidation than write-through. Also, the processor whose cache/buffer has the latest value needs to be tracked. This is an additional overhead that write-through does not have.
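To make the traffic difference between the two policies concrete, the following toy model counts how many writes reach memory for a short trace of stores. It assumes a cache with a single line and treats the end of the trace as a flush; the function name `memory_writes` and the trace are made up for illustration.

```python
def memory_writes(policy, accesses):
    """Count writes that reach memory for a one-line cache (toy model)."""
    cached_block, dirty, traffic = None, False, 0
    for block in accesses:            # every access is a store to `block`
        if block != cached_block:
            if policy == "writeback" and dirty:
                traffic += 1          # the evicted dirty line is written to memory
            cached_block, dirty = block, False
        if policy == "write-through":
            traffic += 1              # every store also updates memory
        else:
            dirty = True              # memory is now stale for this block
    if policy == "writeback" and dirty:
        traffic += 1                  # final flush, as required on invalidation
    return traffic

trace = ["A", "A", "A", "B", "B", "A"]
wt_traffic = memory_writes("write-through", trace)
wb_traffic = memory_writes("writeback", trace)
```

In this trace write-through sends every store to memory, while writeback only writes on eviction and on the final flush. The flush is the extra work that writeback must do at invalidation time.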

Implementation with Write Buffer

Write-Through with No Buffering

Write-Through with Buffering

Write-Through with Write-Back Write Buffer

Write-Through Write-Allocate with Writeback Write Buffer

Writeback

Comparisons

Sequential Coherency

Strong Ordering

Weak Ordering

Modified Weak Ordering

Total Store Ordering

Relaxed Memory Ordering

Atomic Ordering

Modified Weak Ordering

Atomic Sequence Ordering<ref>http://www.eecg.toronto.edu/~moshovos/research/store-wait-free.pdf</ref>

Another cause of a store delay is ordering constraints that stall the execution until in-flight stores have completed. Ordering stalls can be eliminated through atomic sequence ordering (ASO). Instead of enforcing ordering constraints on every memory access, it enforces ordering at a higher granularity. This access sequence will appear as an atomic sequence to other processors. A data race will expose an ordering violation and ASO will recover to the beginning of the nearest atomic sequence using a checkpoint-based rollback.

Function of ASO

ASO groups memory accesses into atomic sequences. As long as an atomic sequence appears atomic to other processors, the order of accesses in the sequence does not matter as it is not observable by other processors. An atomic sequence executes and either commits or is discarded because of a race condition. A data race exposes an ordering violation but is very rare. In this way, ASO eliminates unnecessary ordering stalls.

When an instruction would be stalled due to an ordering constraint, the system starts ASO. Figure 4 shows an example of ASO for two atomic sequences. An atomic sequence passes through three states (accumulate, await permission and commit), as shown in the diagram and described in the steps below.

Step 1. A checkpoint is created when a new atomic sequence starts. At this point the atomic sequence is in an accumulate state. The memory accesses retire with speculative values ignoring ordering constraints. As this happens, the memory access instructions are added onto the atomic sequence. A write request is then sent for the cache blocks to be updated by the instructions in the sequence.

Step 2. Once an atomic sequence is of a certain predetermined size, a new checkpoint and atomic sequence are created. At this point, the old atomic sequence is in await permission state as it is waiting for all its write permissions.

Step 3. When an atomic sequence receives all its write permissions, it transitions into the commit state. At this point, it proceeds to update the memory system with its write values. All other processors' writes are stalled at this point to ensure commit atomicity.

Step 4. A sequence can transition directly from accumulate to commit state if it already has its write permissions. This is shown by the second sequence in the figure.
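The state transitions in the steps above can be sketched as a small state machine. This is only an illustration of the three states named in the text; the class `AtomicSequence`, its method names, and the use of string labels for cache lines are assumptions, and checkpoints and actual memory updates are not modeled.

```python
from enum import Enum

class State(Enum):
    ACCUMULATE = "accumulate"
    AWAIT_PERMISSION = "await permission"
    COMMIT = "commit"

class AtomicSequence:
    """Hypothetical model of one atomic sequence (states only)."""

    def __init__(self):
        self.state = State.ACCUMULATE
        self.lines = set()    # cache lines written by the sequence
        self.granted = set()  # lines whose write permission has arrived

    def retire_store(self, line, already_writable=False):
        if self.state is not State.ACCUMULATE:
            raise RuntimeError("sequence is closed to new accesses")
        self.lines.add(line)
        if already_writable:
            self.granted.add(line)

    def close(self):
        # Step 2, or Step 4 when every permission is already held.
        if self.granted == self.lines:
            self.state = State.COMMIT
        else:
            self.state = State.AWAIT_PERMISSION

    def grant(self, line):
        if line in self.lines:
            self.granted.add(line)
        # Step 3: the last permission moves the sequence to commit.
        if self.state is State.AWAIT_PERMISSION and self.granted == self.lines:
            self.state = State.COMMIT

first = AtomicSequence()
first.retire_store("A")
first.retire_store("B")
first.close()
state_after_close = first.state      # still waiting for write permissions
first.grant("A")
first.grant("B")

second = AtomicSequence()
second.retire_store("C", already_writable=True)
second.close()                       # goes straight to commit (Step 4)
```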

Hardware Implementation

Tracking Atomic Sequences

As mentioned earlier, ASO is initiated once an instruction is unable to retire because of an outstanding store that is not yet complete. An Atomic Sequence Table (AST) keeps track of all the atomic sequences. For each atomic sequence, the AST holds information about which instructions are in the sequence, a Lines counter that counts the cache lines that will be written by the instructions in the sequence, and a Filled counter that counts the lines for which write permission has been obtained. When Filled equals Lines, all the permissions for that sequence have arrived and the sequence may commit. In the L1 cache, each line has an SW (speculatively written) bit for each sequence in the AST. The bit indicates which atomic sequence's Filled counter to update when the line arrives. A new atomic sequence is created whenever the current sequence's Lines count reaches a certain number, in this case, 16.
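A rough sketch of the bookkeeping follows, assuming the Lines and Filled fields are simple counts and that the SW bits can be modeled as a set of sequence indices per line. The names `ASTEntry`, `retire_store` and `permission_arrives` are hypothetical, and the list of instructions kept per sequence is omitted.

```python
MAX_LINES = 16  # threshold quoted in the text

class ASTEntry:
    """One row of a hypothetical Atomic Sequence Table."""

    def __init__(self):
        self.lines = 0   # distinct cache lines the sequence will write
        self.filled = 0  # lines whose write permission has arrived

    def can_commit(self):
        return self.filled == self.lines

ast = [ASTEntry()]  # the last entry is the sequence still accumulating
sw_bits = {}        # line address -> set of sequence indices (one SW bit each)

def retire_store(line):
    seq = len(ast) - 1
    owners = sw_bits.setdefault(line, set())
    if seq in owners:
        return seq                 # line already counted for this sequence
    if ast[seq].lines == MAX_LINES:
        ast.append(ASTEntry())     # current sequence is full: start a new one
        seq += 1
    owners.add(seq)
    ast[seq].lines += 1
    return seq

def permission_arrives(line):
    # The SW bits say which sequences' Filled counters to update.
    for seq in sw_bits.get(line, ()):
        ast[seq].filled += 1

for n in range(17):                # 17 distinct lines: the 17th opens a new sequence
    retire_store(n)
for n in range(16):                # permissions arrive for the first 16 lines
    permission_arrives(n)
```

After these calls the first sequence has Filled equal to Lines and may commit, while the second still waits for one permission.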

Detecting Atomicity Violations

To keep track of speculative reads in an atomic sequence, each line in the L1 and L2 cache is augmented with an SR (speculative read) bit for each sequence. If an invalidation matches a speculative line, then the atomic sequence must roll back accordingly. This must also occur if a line is evicted from L2 cache.

Committing Atomic Sequences

When a sequence has received all its permissions, it may commit its values into the L2 cache. During this draining of stores, other requests to access memory are stalled. The SR and SW bits corresponding to the sequence are then cleared. It is easier to clear these bits if they are implemented using a specialized array rather than by augmenting the existing caches. This causes very little storage overhead for the caches.

Rollback on Violation

To roll back in case of a violation, a checkpointing mechanism must be implemented. There must be one checkpoint for each atomic sequence present in the AST. A checkpoint must contain all register state and the TLB/MMU (translation lookaside buffer/memory management unit) state. When a rollback occurs, all lines with a high SW bit are invalidated and the discarded sequences are removed from the AST.
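Violation detection and rollback can be illustrated together with a toy core that holds a single atomic sequence. This is a simplified sketch under several assumptions: the checkpoint captures only a register dictionary (TLB/MMU state is left out), the SR and SW bits are sets of line names, and the class `Core` and its methods are hypothetical.

```python
import copy

class Core:
    """Toy core with one in-flight atomic sequence (illustration only)."""

    def __init__(self, l1):
        self.registers = {"r1": 0}
        self.l1 = dict(l1)     # line -> value
        self.sr = set()        # lines with the SR bit set
        self.sw = set()        # lines with the SW bit set
        self.checkpoint = None

    def begin_sequence(self):
        # Checkpoint taken when the atomic sequence starts.
        self.checkpoint = copy.deepcopy(self.registers)

    def speculative_load(self, reg, line):
        self.sr.add(line)
        self.registers[reg] = self.l1.get(line, 0)

    def speculative_store(self, line, value):
        self.sw.add(line)
        self.l1[line] = value

    def on_invalidation(self, line):
        if line not in self.sr:
            return False                      # no speculative read matched
        self.registers = copy.deepcopy(self.checkpoint)
        for written in self.sw:
            self.l1.pop(written, None)        # invalidate speculatively written lines
        self.sr.clear()
        self.sw.clear()
        return True

core = Core({"X": 5})
core.begin_sequence()
core.speculative_load("r1", "X")
core.speculative_store("Y", 9)
ignored = core.on_invalidation("Z")      # unrelated line: nothing happens
rolled_back = core.on_invalidation("X")  # matches a speculative read: roll back
```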

Conclusion

ASO removes ordering constraints and therefore reduces their penalties. Figure 5 shows the results of adding ASO to an execution with SSB. It has improved performance by 8%. ASO combined with SSB outperforms even the RMO execution shown in Figure 3.

Write Buffer Implementations

Universal Read Write Buffer<ref>http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=342629&tag=1</ref>

Motivation

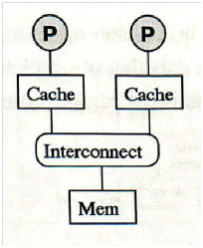

The uniform memory access class of MIMD (Multiple Instruction, Multiple Data) parallel computers employs snoopy cache protocols (namely MSI, MESI and MOESI), mainly to reduce bus traffic and the average memory access time of shared memory systems. Figure 1 depicts the shared memory system.

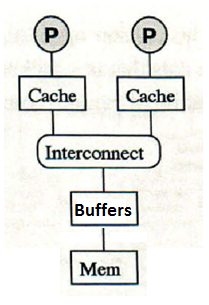

The main memory bandwidth of a shared memory system is an important factor in its performance, because off-chip bandwidth is low. A large number of writes to main memory can slow the system, since each write may wait hundreds of cycles until the slow main memory subsystem finishes the whole write access. Giving priority to reads over writes can also improve the performance of the system as a whole. The universal read/write buffer supports multiprocessor cache coherence under the different cache coherence protocols; it reduces the bandwidth demand on the memory bus and allows faster access than main memory for both reads and writes. It is located between the CPU’s local bus and the main memory. Figure 2 depicts the architecture of the universal read/write buffer scheme.

Hardware Description

Functionally, the buffer consists of two parts, the data buffer and the address buffer.

- The Data Buffer consists of three FIFOs: the Non_block Write FIFO, the Write-back FIFO and the Read FIFO.

- The Address Buffer consists of a four-deep FIFO for the non-block write cycle, a write-back FIFO for the eviction cycle, a line-fill FIFO for the line-fill cycle, and the snoop and byte-gathering logic.

Data Buffer

The Non_block Write FIFO (FIFO1) is a four-deep FIFO used whenever there is a write to an address range not specified in the memory map. This process does not require any block transfer (burst transfer) because there is no eviction or line-fill cycle. As a result, the Non_block Write FIFO provides a temporary storage area so that the CPU can start the next cycle immediately. The depth of the FIFO is arbitrary, though a deeper FIFO is less likely to become full.

The Write-back FIFO (FIFO2) is an eight-deep FIFO used for eviction cycles. If there is a cache miss and an eviction to main memory is required, the whole cache line can be stored in burst mode (data transmitted on every clock). Right after this transaction, the new data can be read from main memory into the cache (line-fill cycle), after which the data in the Write-back FIFO is written back to main memory. By doing so, the time required to write back a line of data is hidden from the CPU, and the new data is available to the CPU earlier. The depth of FIFO2 can be arbitrary.

The Read FIFO is used for storing data from main memory or from the Non_block Write/Write-back FIFOs. When data from main memory, FIFO1 or FIFO2 is ready but the local bus is busy, the data is temporarily stored in this FIFO until the local bus is free. MUX-A and MUX-B provide a data path between the FIFOs and the memory bus (or local bus). Latches and tri-state buffers hold data whenever the memory bus (or local bus) is still busy and not ready for new data.

Address Buffer

The four-deep FIFO for the non-block write cycle stores the address of each non-block write cycle and provides it to main memory when the system bus is available. The depth of this FIFO should be the same as that of the corresponding FIFO in the data buffer.

The write-back FIFO stores the starting address of an eviction cycle and provides the address to the system bus when the bus is free. It is single level because the memory controller can provide (or predict) the next addresses within the line boundary, so the starting address of the line is the only information needed for an eviction cycle.

The line-fill FIFO stores the starting address of a line-fill cycle and provides the address to the system bus when the bus is free. It is single level because the memory controller can provide (or predict) the next addresses within the line boundary, so the starting address of the line is the only information needed for a line-fill transfer.

Whenever there is a read to a line that requires access to main memory, the snoop logic is activated to compare the addresses in the Write-back Address FIFO and the Line-fill Address FIFO with the read address. If any of the addresses matches, the HIT flag is set and the data in the FIFO is read back to the Read FIFO through an internal bypass path. By doing so, stale data will not be read and the CPU does not have to wait out the lengthy memory latency.
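The address comparison performed by the snoop logic can be sketched as follows. This is a software analogy only: the FIFOs are modeled as lists of `(line start address, data)` pairs, the 32-byte line size is an assumption, and the function name `snoop_read` is hypothetical.

```python
LINE_SIZE = 32  # bytes per cache line (assumed for this sketch)

def snoop_read(read_addr, writeback_fifo, linefill_fifo, main_memory):
    """Return (hit, data) for a read that would otherwise go to main memory."""
    line_start = read_addr - (read_addr % LINE_SIZE)
    # Compare the read address with the line addresses held in both FIFOs.
    for fifo in (writeback_fifo, linefill_fifo):
        for start, data in fifo:
            if start == line_start:
                return True, data             # HIT: bypass to the Read FIFO
    return False, main_memory[line_start]     # no match: go to main memory

main_memory = {0x000: "old line 0", 0x020: "line 1"}
writeback_fifo = [(0x000, "new line 0")]      # evicted line not yet in memory
linefill_fifo = []

hit, data = snoop_read(0x004, writeback_fifo, linefill_fifo, main_memory)
miss, mem_data = snoop_read(0x024, writeback_fifo, linefill_fifo, main_memory)
```

The first read falls in a line that is still waiting in the write-back FIFO, so it is served from the buffer instead of returning the stale copy in memory.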

The snoop logic block also includes byte-gathering logic. The byte-gathering logic compares the incoming address and Byte-Enable (BE#) bits of a non-block write cycle with those of the previous non-block write cycle in the non-block address FIFO. If the addresses match and no Byte-Enables overlap, the pointer of FIFO1 does not advance. The incoming data is therefore stored in the same level of FIFO1, which prevents FIFO1 from filling up quickly.
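The byte-gathering rule can be expressed as a short check on the most recent FIFO entry. In this sketch the byte enables are modeled as an active-high bit mask for readability (the BE# signals in hardware are typically active low), and the entry layout and the function name `post_write` are assumptions.

```python
def post_write(fifo, addr, byte_enable, data):
    """Queue a non-block write; return True if the FIFO pointer advanced.

    fifo: list of [addr, byte_enable_mask, {byte_lane: value}] entries.
    """
    if fifo:
        last = fifo[-1]
        # Same address and no overlapping byte lanes: merge into the last entry.
        if last[0] == addr and (last[1] & byte_enable) == 0:
            last[1] |= byte_enable
            last[2].update(data)
            return False
    fifo.append([addr, byte_enable, dict(data)])
    return True

fifo1 = []
first = post_write(fifo1, 0x100, 0b0011, {0: 0xAA, 1: 0xBB})   # new entry
second = post_write(fifo1, 0x100, 0b1100, {2: 0xCC, 3: 0xDD})  # gathered
third = post_write(fifo1, 0x100, 0b0001, {0: 0x11})            # overlap: new entry
```

Three writes occupy only two FIFO levels here, because the second write was gathered into the first entry.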

Since a read cycle has priority over a write cycle, the read address is provided directly to the memory bus whenever a read cycle is issued and the memory bus is available. If the memory bus is not free, the read address can be temporarily stored in REG until the bus becomes free.

Conclusion

The buffers function as temporary storage for written data so that the lengthy main memory access time can be hidden. The CPU does not need to wait for the main memory cycle to finish before it can start another cycle. The timing simulation results show a 45% improvement in the write cycle. In a normal write process, it takes 11 clock cycles to complete the entire write, and the CPU is effectively busy for those 11 clock cycles; it cannot proceed to another operation until the write completes. After adding the read/write buffer, the originally required 11 clock cycles are reduced to 6 cycles. Therefore, the read/write buffer saves 5 cycles per write, that is, (11 - 6)/11 x 100% ≈ 45%. This saving helps overall system performance: even for a CPU that only occasionally writes to main memory, the read/write buffer can save thousands of clock cycles and free up the CPU much sooner. In fact, the benefit is larger than this figure suggests, because the read buffer has not been taken into account in the above discussion. There are many reads from main memory to the CPU during system operation, so the read buffer reduces access time and improves performance further.

Scalable Store Buffers<ref>http://www.eecg.toronto.edu/~moshovos/research/store-wait-free.pdf</ref>

A store buffer is a storage containing retired store values prior to their release to memory. One cause of a store delay is insufficient store buffer capacity. A capacity stall is when a store instruction is not able to retire because all the store buffer entries are full. A scalable store buffer (SSB) helps eliminate these store buffer capacity stalls.

A store buffer capacity is “limited because every load must associatively search the buffer for a matching store, and this search is on the load’s critical path.” With SSB, there is no need for this associative search because speculative values are forwarded to loads straight from the L1 cache and committed values are maintained in the L2 cache. The SSB has stores in a FIFO order making it unnecessary to search through it associatively. However, it still allows an ordered commit to L2.

Functionality of SSB

In a conventional store buffer, the store buffer forwards values to the corresponding loads and it maintains the order among the stores. A load must search through the store buffer for a matching store. In contrast, an SSB forwards values to the loads through an L1 cache eliminating the need for this search. To be consistent in removing the need for this search, the stores in the SSB are in FIFO order which does not support an associative search. Stores commit in order from the SSB to L2 cache. Thus, L1 will contain speculative values and L2 will contain the committed values as mentioned above.

This allows the SSB capacity to equal L1’s capacity. This capacity is greater than that of a conventional store buffer. However, if an application requires a greater capacity, the SSB will be able to stall and wait for stores to drain just as a conventional store buffer does when its maximum capacity is reached.

Hardware Implementation

When a store retires, it updates the L1 cache and is added to the SSB. Valid bits are added to each L1 line, one bit per word. These valid bits allow the data in L1 to be used while a store miss is outstanding and allow merging with new data when the line arrives. The SSB contains the address, value and size of all stores in a FIFO structure (circular buffer). The size of the SSB can be the same as the size of the L1 cache because there is no need for it to be associatively searched.
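The division of labor between L1, L2 and the FIFO can be sketched as below. This is a simplified model under stated assumptions: dictionaries stand in for the caches, per-word valid bits and store sizes are omitted, and the class name `ScalableStoreBuffer` and its methods are hypothetical.

```python
from collections import deque

class ScalableStoreBuffer:
    """Toy SSB: L1 holds speculative values, L2 holds committed values."""

    def __init__(self):
        self.l1 = {}         # address -> newest (speculative) value
        self.l2 = {}         # address -> committed value
        self.fifo = deque()  # (address, value) in program order

    def retire_store(self, addr, value):
        self.l1[addr] = value            # forwarding copy for later loads
        self.fifo.append((addr, value))  # ordered record for commit

    def load(self, addr):
        # Loads read L1 directly; the FIFO is never searched associatively.
        if addr in self.l1:
            return self.l1[addr]
        return self.l2.get(addr, 0)

    def commit_oldest(self):
        # Stores drain to L2 strictly in FIFO order.
        if self.fifo:
            addr, value = self.fifo.popleft()
            self.l2[addr] = value

ssb = ScalableStoreBuffer()
ssb.retire_store("A", 1)
ssb.retire_store("A", 2)
ssb.retire_store("B", 3)
forwarded = ssb.load("A")            # newest value, taken from L1
committed_before = dict(ssb.l2)      # nothing committed yet
ssb.commit_oldest()
after_first_commit = dict(ssb.l2)    # only the oldest store has reached L2
ssb.commit_oldest()
ssb.commit_oldest()
```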

Invalidations

When two processors are trying to write to the same cache block at the same time, the cached block will be partially invalidated if the valid bit corresponding to that block is high. This means that the uncommitted stores in the SSB will be preserved while part of the global cache line in L2 will be invalidated. The valid bits in the line will be cleared and a request for write permission as well as the updated value is issued. When the updated line is received, the SSB "reconstructs the line by traversing the entire [SSB] to replay stores to the affected line, setting per-word valid bits as appropriate". This is an inefficient process but there is not much performance loss because invalidations tend to be very rare.
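The replay step quoted above can be illustrated with a small function. It assumes a four-word line, SSB entries of the form `(line address, word index, value)` ordered oldest first, and a hypothetical function name `reconstruct_line`; none of these details come from the source.

```python
def reconstruct_line(updated_line, ssb_entries, line_addr):
    """Replay buffered stores onto a freshly received line.

    updated_line: list of word values received with write permission.
    ssb_entries:  iterable of (line_addr, word_index, value), oldest first.
    Returns the merged line and its per-word valid bits.
    """
    line = list(updated_line)
    valid = [False] * len(line)
    for entry_line, word, value in ssb_entries:  # traverse the entire SSB
        if entry_line == line_addr:
            line[word] = value                   # younger stores overwrite older ones
            valid[word] = True
    return line, valid

received = [10, 11, 12, 13]
ssb_entries = [
    (0x40, 1, 99),
    (0x80, 0, 5),     # store to a different line: skipped
    (0x40, 3, 77),
    (0x40, 1, 100),   # later store to the same word wins
]
merged, valid_bits = reconstruct_line(received, ssb_entries, 0x40)
```

The full traversal is what makes the process inefficient, which is acceptable only because invalidations are rare.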

Sub-word writes

The design of the SSB is such that there is a valid bit for every word and not every byte. This means that writes of smaller than a word cannot take place unless all valid bits for that line are high/valid. In case of a sub-word miss, the sub-word is placed in a mini store buffer and waits until the line is filled. When multiple sub-word entries for the same word exist in the mini store buffer, an individual entry is created for the store in the SSB.

Conclusion

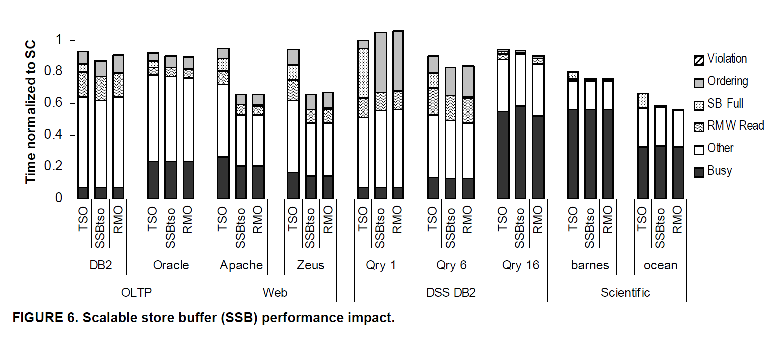

Conventional store buffers cannot eliminate store buffer capacity stalls. An SSB provides more capacity than most applications require. Figure 3 shows the impact of using a scalable store buffer.

Three types of execution are studied: SC, TSO and RMO. SC and RMO are the two extremes, and TSO is the most applicable to our study. The figure shows that when an SSB is added to a TSO execution, all capacity stalls are eliminated and the results are similar to those of RMO.

References

<references/>