CSC/ECE 506 Spring 2011/ch6a ep: Difference between revisions

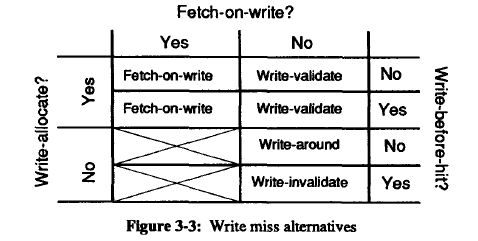

Revision as of 02:29, 1 March 2011

CSC/ECE 506 Spring 2011/ch6a ep

Recent Architectures and their Cache Characteristics

Multicore processor architectures with both shared and private caches are common in desktop and server platforms from a variety of manufacturers. The table below illustrates the variety of core and cache designs in multiprocessors from four major manufacturers:

Table 1: Recent Architectures and their Cache Characteristics [1][2][3][4]

| Company | Processor | Cores | L1 Cache | L2 Cache | L3 Cache |
|---|---|---|---|---|---|
| AMD | Athlon 64 X2 | 2 | 128 KB x 2 | 512 KB x 2 or 1 MB x 2 | |
| AMD | Athlon 64 FX | 2 | 128 KB x 2 | 1 MB x 2 | |
| AMD | Athlon X2 | 2 | 128 KB x 2 | 512 KB x 2 | |
| AMD | Phenom X3 | 3 | 128 KB x 3 | 512 KB x 3 | 2 MB |
| AMD | Phenom X4 | 4 | 128 KB x 4 | 512 KB x 4 | 2 MB |
| AMD | Athlon II X2 | 2 | 128 KB x 2 | 512 KB x 2 or 1 MB x 2 | |
| AMD | Athlon II X3 | 3 | 128 KB x 3 | 512 KB x 3 | |
| AMD | Athlon II X4 | 4 | 128 KB x 4 | 512 KB x 4 | |
| AMD | Phenom II X2 | 2 | 128 KB x 2 | 512 KB x 2 | 6 MB |
| AMD | Phenom II X3 | 3 | 128 KB x 3 | 512 KB x 3 | 6 MB |
| AMD | Phenom II X4 | 4 | 128 KB x 4 | 512 KB x 4 | 4-6 MB |
| AMD | Phenom II X6 | 6 | 128 KB x 6 | 512 KB x 6 | 6 MB |
| Intel | Pentium D | 2 | 12+16 KB x 2 | 2 MB | |
| Intel | Celeron E | 2 | 32 KB x 2 | 512 KB - 1 MB | |
| Intel | Pentium D | 2 | 32 KB x 2 | 1 MB | |
| Intel | Core 2 Duo | 2 | 32 KB x 2 | 2-4 MB | |
| Intel | Core 2 Quad | 4 | 32 KB x 2 | 8 MB | |
| Intel | Atom 330 | 2 | 32+24 KB x 2 | 512 KB x 2 | |
| Intel | Pentium E | 2 | 32 KB x 2 | 2 MB | |
| Intel | Core 2 Duo E | 2 | 32 KB x 2 | 3-6 MB | |
| Intel | Core 2 Quad | 4 | 32 KB x 4 | 2-6 MB x 2 | |
| Intel | Core i3 | 2 | 32+32 KB x 2 | 256 KB x 2 | 4 MB |
| Intel | Core i5 - 6 Series | 2 | 32+32 KB x 2 | 256 KB x 2 | 4 MB |
| Intel | Core i5 - 7 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 8 MB |
| Intel | Core i5 - 2400 Series, Core i5 - 2500 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 6 MB |
| Intel | Core i7 - 8 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 8 MB |
| Intel | Core i7 - 9 Series | 4 | 32+32 KB x 4 | 256 KB x 4 | 8 MB |
| Intel | Core i7 - 970 | 6 | 32+32 KB x 6 | 256 KB x 6 | 12 MB |
| Sun | UltraSPARC T1 | 8 | 8 KB x 8 inst., 16 KB x 8 data | 3 MB | |
| Sun | SPARC64 VI | 2 | 128 KB x 2 inst., 128 KB x 2 data | 5 MB | |
| Sun | UltraSPARC T2 | 8 | 8 KB x 4 inst., 16 KB x 4 data | 4 MB | |
| Sun | SPARC64 VII | 4 | 64 KB x 4 inst., 64 KB x 4 data | 6 MB | |
| Sun | SPARC T3 | 8 | 8 KB x 8 inst., 16 KB x 8 data | 6 MB | |
| Sun | SPARC64 VII+ | 2 | 128 KB x 2 inst., 128 KB x 2 data | 12 MB | |
| IBM | Power5 | 2 | 64 KB x 2 inst., 64 KB x 2 data | 4 MB x 2 | 32 MB |
| IBM | Power7 | 4, 6, or 8 (C) | 32+32 KB x C | 256 KB x C | 4-32 MB x C |

Cache Write Policies[5]

In section 6.2.3, cache write hit policies and write miss policies were explored. The write hit policies, write-through and write-back, determine when the data written in the local cache is propagated to lower-level memory. The write miss policies, write-allocate and no-write-allocate, determine if a memory block is stored in a cache line after the write occurs. Note that since a miss occurred on write, the block is not already in a cache line.
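The difference between the two write-hit policies can be sketched in a few lines of Python. This is a minimal illustration, not a model of any real cache: the class `TinyCache`, its one-value-per-address layout, and the dictionary standing in for lower-level memory are all assumptions made for the example.

```python
class TinyCache:
    """Hypothetical one-level cache in front of a dict acting as lower-level memory."""

    def __init__(self, memory, write_back):
        self.memory = memory          # lower-level memory: address -> value
        self.write_back = write_back  # True: write-back, False: write-through
        self.lines = {}               # cached address -> value
        self.dirty = set()            # addresses modified but not yet propagated

    def write_hit(self, addr, value):
        """Handle a write to an address that is already cached."""
        if addr not in self.lines:
            raise KeyError("this sketch only models write hits")
        self.lines[addr] = value
        if self.write_back:
            self.dirty.add(addr)       # defer the update to lower-level memory
        else:
            self.memory[addr] = value  # propagate the update immediately

    def evict(self, addr):
        """Remove a line; a write-back cache propagates dirty data at this point."""
        value = self.lines.pop(addr)
        if addr in self.dirty:
            self.memory[addr] = value
            self.dirty.discard(addr)


# The same write hit under each policy.
mem_wt = {0x10: 1}
wt = TinyCache(mem_wt, write_back=False)
wt.lines[0x10] = 1       # pretend the block is already cached
wt.write_hit(0x10, 7)    # write-through: memory is updated right away

mem_wb = {0x10: 1}
wb = TinyCache(mem_wb, write_back=True)
wb.lines[0x10] = 1
wb.write_hit(0x10, 7)    # write-back: memory still holds the old value
stale = mem_wb[0x10]
wb.evict(0x10)           # eviction propagates the dirty line
```

After the write hit, `mem_wt` already holds the new value, while `mem_wb` keeps the old one until the line is evicted.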

Write-miss policies can also be expressed in terms of write-allocate, fetch-on-write, and write-before-hit. These can be combined with the same write-through and write-back hit policies to define the complete cache write policy.

Fetch-on-Write

On a write miss, the memory block is not found in the cache, and the memory block containing the address to be written is fetched from the lower level memory hierarchy before the write proceeds. Note that this is different from write-allocate. Fetch-on-write determines if the block is fetched from memory, and write-allocate determines if the memory block is stored in a cache line. Certain write policies may allocate a line in cache for data that is written by the CPU without retrieving it from memory first.

No-Fetch-on-Write

On a write miss, the memory block is not fetched first from the lower level memory hierarchy. Therefore, the write can proceed without having to wait for the memory block to be returned.

Write-Before-Hit

On a write, the data is written into the cache before the cache has determined whether a hit or a miss occurred. In this scenario, the tag can be checked while the data is being written.

No-Write-Before-Hit

On a write, the write waits until the cache has determined whether the block being written to is in the cache.

Write-Miss Policy Combinations

In practice, these policies are used in combination to provide an overall write policy. Four combinations of these three write-miss policies are relevant, and are expressed in the table below:

The two combinations of fetch-on-write and no-write-allocate are blocked out in the above diagram. They are considered unproductive since the data is fetched from lower level memory but is not stored in the cache. Temporal and spatial locality suggest that this data will be used again, and it would therefore need to be retrieved a second time.
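As a rough aid, the eight possible settings of the three write-miss choices can be enumerated and labeled. The function `classify` is a hypothetical helper written for this page. The names 'fetch-on-write', 'write-validate', and 'write-around' follow the text; the name 'write-invalidate' for the remaining combination is an assumption based on the terminology in [5] and is not defined in this section.

```python
from itertools import product


def classify(fetch_on_write, write_allocate, write_before_hit):
    """Label one combination of the three write-miss choices (illustrative only)."""
    if fetch_on_write and not write_allocate:
        return "unproductive"      # block is fetched but never stored in the cache
    if fetch_on_write:
        return "fetch-on-write"    # fetched and allocated
    if write_allocate:
        return "write-validate"    # allocated without fetching
    if write_before_hit:
        return "write-invalidate"  # assumed name, taken from [5]
    return "write-around"          # no fetch, no allocate, no write-before-hit


# Map every (fetch_on_write, write_allocate, write_before_hit) tuple to its label.
table = {combo: classify(*combo) for combo in product((True, False), repeat=3)}

for combo, name in table.items():
    print(combo, name)
```

Two of the eight tuples come out as unproductive, matching the two blocked-out combinations, and the remaining six collapse into four named policies.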

Write-Validate

The combination of no-fetch-on-write and write-allocate is referred to as 'write-validate'. It requires additional overhead to track which bytes in the cache line have been written, as well as lower level memories that can process partial lines. The author of [5] contends that this overhead is 'significant'.
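The bookkeeping that write-validate needs can be sketched as a cache line with one valid bit per byte. The class `ValidatedLine`, the 8-byte line size, and the method names are assumptions chosen for illustration; real hardware may track validity at a coarser granularity.

```python
LINE_SIZE = 8  # bytes per line; an arbitrary size chosen for this sketch


class ValidatedLine:
    """Hypothetical cache line allocated on a write miss without fetching the block."""

    def __init__(self, tag):
        self.tag = tag
        self.data = bytearray(LINE_SIZE)
        self.valid = [False] * LINE_SIZE  # per-byte valid bits, all clear at allocation

    def write(self, offset, payload):
        """Store the written bytes and mark only those bytes valid."""
        for i, byte in enumerate(payload):
            self.data[offset + i] = byte
            self.valid[offset + i] = True

    def read(self, offset):
        """Return the byte if valid, else None to signal that memory must be consulted."""
        return self.data[offset] if self.valid[offset] else None

    def partial_writeback(self):
        """List (offset, byte) pairs that lower-level memory must accept as a partial line."""
        return [(i, self.data[i]) for i in range(LINE_SIZE) if self.valid[i]]


line = ValidatedLine(tag=0x2A)
line.write(2, b"\xAA\xBB")           # write miss: allocate and write two bytes
written = line.read(2)               # valid byte written by the CPU
untouched = line.read(0)             # never written, so not valid
pending = line.partial_writeback()   # only the two valid bytes go to memory
```

The valid-bit array and the partial-line writeback correspond to the two sources of overhead named above.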

Write-Around

The combination of no-fetch-on-write, no-write-allocate, and no-write-before-hit is referred to as 'write-around', since it bypasses the higher level cache, writes directly to the lower level memory, and leaves the existing line in the cache unaltered. This strategy shows performance improvements when the data that is written will not be reread soon.
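A minimal sketch of write-around on a tiny direct-mapped cache follows. The class `WriteAroundCache`, the four-line cache, the one-value-per-line layout, and the use of the full address as the tag are all simplifying assumptions. The sketch also assumes a write-through hit policy so that every write reaches lower-level memory.

```python
NUM_LINES = 4  # assumed number of lines in the direct-mapped cache


class WriteAroundCache:
    """Hypothetical direct-mapped cache with a write-around miss policy."""

    def __init__(self, memory):
        self.memory = memory             # lower-level memory: address -> value
        self.tags = [None] * NUM_LINES   # full address used as the tag for simplicity
        self.data = [None] * NUM_LINES

    def read(self, addr):
        index = addr % NUM_LINES
        if self.tags[index] != addr:     # read miss: fetch and allocate
            self.tags[index] = addr
            self.data[index] = self.memory[addr]
        return self.data[index]

    def write(self, addr, value):
        index = addr % NUM_LINES
        self.memory[addr] = value        # the write always reaches lower-level memory
        if self.tags[index] == addr:     # hit, known only after the tag check
            self.data[index] = value
        # On a miss nothing is fetched or allocated; the resident line is untouched.


memory = {0: 10, 4: 40}
cache = WriteAroundCache(memory)
first = cache.read(0)    # brings address 0 into line 0
cache.write(4, 99)       # address 4 also maps to line 0, so this is a write miss
```

After the write miss, `memory[4]` holds the new value while line 0 still contains the block for address 0.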

Prefetching in Contemporary Parallel Processors

Prefetching is a technique that can be used in addition to write-hit and write-miss policies to improve the utilization of the cache. Microprocessors, such as those listed in Table 1, support prefetching logic directly in the chip.

Intel Core i7 [6]

The Intel 64 Architecture, including the Intel Core i7, includes both instruction and data prefetching directly on the chip.

The Data Cache Unit prefetcher is a streaming prefetcher for L1 caches that detects ascending access to data that has been loaded very recently. The processor assumes that this ascending access will continue, and prefetches the next line.
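The next-line behavior described above can be approximated with a very small model. The class `NextLinePrefetcher`, the 64-byte line size, and the rule "prefetch when the current load touches the line directly after the previous one" are assumptions made for illustration and are not taken from [6].

```python
LINE_BYTES = 64  # assumed cache line size


class NextLinePrefetcher:
    """Hypothetical streaming prefetcher that reacts to ascending line accesses."""

    def __init__(self):
        self.last_line = None  # line number touched by the most recent load
        self.prefetched = []   # log of line numbers requested ahead of demand

    def load(self, addr):
        line = addr // LINE_BYTES
        # Ascending access to the line right after the last one: assume it continues.
        if self.last_line is not None and line == self.last_line + 1:
            self.prefetched.append(line + 1)
        self.last_line = line


p = NextLinePrefetcher()
for addr in (0, 64, 128, 4096):
    p.load(addr)
```

In this run the loads at addresses 64 and 128 each extend the ascending pattern and trigger a prefetch of the following line, while the jump to 4096 does not.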

The data prefetch logic (DPL) maintains two arrays to track recent accesses to memory: one for upstreams, with 12 entries, and one for downstreams, with 4 entries. As pages are accessed, their addresses are tracked in these arrays. When the DPL detects an access to a page that is sequential to an existing entry, it assumes this stream will continue and prefetches the next cache line from memory.
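The two tracking arrays can be sketched as bounded tables, one per direction. Only the sizes 12 and 4 come from the text. The classes `StreamTracker` and `DataPrefetchLogic`, the reading of upstream as ascending and downstream as descending, and the tracking of line numbers instead of page addresses are assumptions made to keep the example short.

```python
from collections import deque


class StreamTracker:
    """Bounded table of recently seen line numbers for one stream direction."""

    def __init__(self, capacity, step):
        self.recent = deque(maxlen=capacity)  # oldest entry drops out when full
        self.step = step                      # +1 for ascending, -1 for descending

    def observe(self, line):
        """Return the line to prefetch if `line` extends a tracked stream, else None."""
        previous = line - self.step
        if previous in self.recent:
            self.recent.remove(previous)      # advance the existing entry
            self.recent.append(line)
            return line + self.step
        return None

    def record(self, line):
        self.recent.append(line)


class DataPrefetchLogic:
    """Hypothetical model with 12 upstream and 4 downstream entries."""

    def __init__(self):
        self.up = StreamTracker(capacity=12, step=+1)
        self.down = StreamTracker(capacity=4, step=-1)

    def access(self, line):
        for tracker in (self.up, self.down):
            target = tracker.observe(line)
            if target is not None:
                return target
        # No stream matched: start tracking this line in both directions.
        self.up.record(line)
        self.down.record(line)
        return None


dpl = DataPrefetchLogic()
results = [dpl.access(n) for n in (10, 11, 12, 50, 49)]
```

The first three accesses form an ascending stream and the last two a descending one, so a prefetch target is returned once the second access of each stream is seen.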

AMD [7]

The AMD Family 10h processors use a stream-detection strategy similar to the Intel approach described above to trigger prefetching of the next sequential memory location into the L1 cache. (Previous AMD processors prefetched into the L2 cache, which introduced the access latency of the L2 cache and hindered performance.)

The AMD Family 10h can prefetch more than just the next sequential block when a stream is detected, though. When this 'unit-stride' prefetcher detects misses to sequential blocks, it can trigger a preset number of prefetch requests from memory. For example, if the preset value is two, the next two blocks of memory will be prefetched when a sequential access is detected. Subsequent detection of misses of sequential blocks may only prefetch a single block. This maintains the 'unit-stride' of two blocks in the cache ahead of the next anticipated read.
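The "burst first, then one block at a time" behavior can be sketched as follows. The class `UnitStridePrefetcher` and its `frontier` field are hypothetical, and for simplicity the sketch reacts to every sequential demand access instead of modeling cache misses. The default of two blocks mirrors the example in the text.

```python
class UnitStridePrefetcher:
    """Hypothetical prefetcher that keeps `preset` blocks ahead of a sequential stream."""

    def __init__(self, preset=2):
        self.preset = preset
        self.last_block = None
        self.frontier = None  # highest block already requested for the current stream

    def access(self, block):
        """Return the list of blocks to prefetch in response to this demand access."""
        issued = []
        if self.last_block is not None and block == self.last_block + 1:
            if self.frontier is None:
                # Stream just detected: request `preset` blocks at once.
                start = block + 1
            else:
                # Stream continuing: top up so `preset` blocks stay ahead.
                start = self.frontier + 1
            issued = list(range(start, block + 1 + self.preset))
            if issued:
                self.frontier = issued[-1]
        else:
            self.frontier = None  # sequence broken, forget the stream
        self.last_block = block
        return issued


pf = UnitStridePrefetcher(preset=2)
trace = [pf.access(b) for b in (100, 101, 102, 103, 200)]
```

In this trace the access to block 101 triggers two prefetches, each later sequential access triggers one, and the jump to block 200 resets the stream.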

AMD contends that this is beneficial for applications that work through large data sets sequentially and consume all of the data in the stream. In these cases, a larger unit-stride populates the cache with blocks that will ultimately be processed by the CPU. AMD suggests that the optimal number of blocks to prefetch is between 4 and 8, and that fetching too many blocks or fetching too soon has impaired performance in empirical testing.

The AMD Family 10h also includes 'Adaptive Prefetching', a hardware optimization that is triggered when the demand stream catches up to the prefetch stream. In this case, the unit-stride is increased to ensure that the prefetcher is fetching at a rate sufficient to continuously provide data to the processor.
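One way to picture the adaptive step is as a rule that widens the prefetch distance whenever demand reaches the last prefetched block. The function `adapt_distance`, its increment of one block, and the cap of 8 are assumptions for illustration only; [7] does not specify the mechanism in this form.

```python
def adapt_distance(demand_block, prefetch_frontier, distance, max_distance=8):
    """Return the new prefetch distance (hypothetical rule, not AMD's actual logic).

    demand_block:      block the processor is asking for now
    prefetch_frontier: furthest block the prefetcher has already requested
    distance:          current number of blocks kept ahead of demand
    """
    caught_up = demand_block >= prefetch_frontier
    if caught_up and distance < max_distance:
        return distance + 1  # prefetcher is too slow: run further ahead
    return distance          # still ahead of demand, or already at the cap


ahead = adapt_distance(demand_block=10, prefetch_frontier=12, distance=2)
caught = adapt_distance(demand_block=12, prefetch_frontier=12, distance=2)
capped = adapt_distance(demand_block=20, prefetch_frontier=12, distance=8)
```

The distance stays the same while the prefetcher is ahead, grows by one when demand catches up, and stops growing at the assumed cap.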

References

[1]: http://www.techarp.com/showarticle.aspx?artno=337

[2]: http://en.wikipedia.org/wiki/SPARC

[3]: http://en.wikipedia.org/wiki/POWER7

[4]: http://en.wikipedia.org/wiki/POWER5

[5]: “Cache write policies and performance,” Norman Jouppi, Proc. 20th International Symposium on Computer Architecture (ACM Computer Architecture News 21:2), May 1993, pp. 191–201. http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-91-12.pdf

[6]: "Intel® 64 and IA-32 Architectures Optimization Reference Manual", Intel Corporation, 1997-2011. http://www.intel.com/Assets/PDF/manual/248966.pdf

[7]: "Software Optimization Guide for AMD Family 10h and 12h Processors", Advanced Micro Devices, Inc., 2006–201. http://support.amd.com/us/Processor_TechDocs/40546.pdf