CSC/ECE 506 Spring 2011/ch2a mc: Difference between revisions

| Line 278: | Line 278: | ||

==CUDA Threads== | ==CUDA Threads== | ||

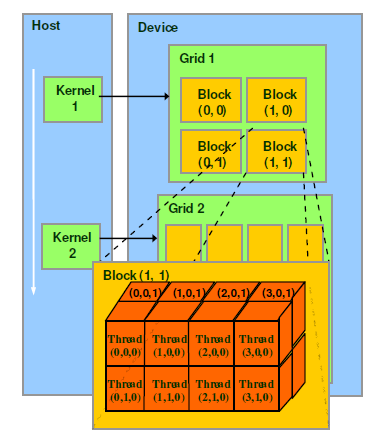

In CUDA programming, serial operations are still handled by the host CPU (main processor) while parallelizable kernels are handed off to the GPU for processing. It is important to understand the layout of the CUDA architecture and memory. Figure 6[[#References|<sup> | In CUDA programming, serial operations are still handled by the host CPU (main processor) while parallelizable kernels are handed off to the GPU for processing. It is important to understand the layout of the CUDA architecture and memory. Figure 6[[#References|<sup>6</sup>]] shows a simplified block diagram of a typical CUDA thread model | ||

[[Image:Cuda_Fig2.png|frame|center|<b>Figure 6:</b> CUDA Thread Model]] | [[Image:Cuda_Fig2.png|frame|center|<b>Figure 6:</b> CUDA Thread Model]] | ||

Revision as of 03:37, 12 February 2011

Introduction

The Graphical Processing Unit (GPU) is a dedicated super-threaded, massively data parallel co-processor. Unlike the CPU, GPUs are designed to be highly parallel, have a high computational throughput, and high memory throughput. CPUs are architected to perform well for single and multi-threaded applications. A number of programming APIs have been introduced to allow programmers to harness the power of the GPU to perform parallel tasks. Two such architectures are OpenCL and Compute Unified Device Architecture (CUDA).

OpenCL

Programming Model

As of version 1.1 of the OpenCL specification1, the programming model of OpenCL is a hybrid of task parallelism and data parallelism. We will first describe the key concepts of OpenCL:

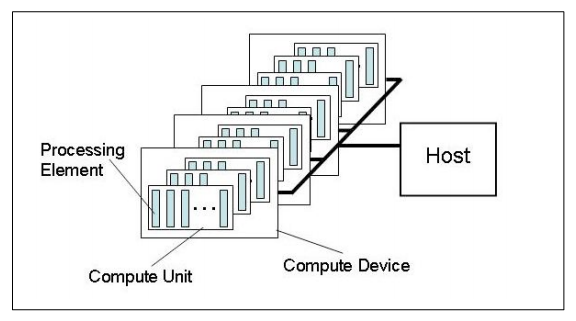

Platform Model

As shown in Figure 1, the platform for running OpenCL programs somewhat resembles a network of computer clusters. The parallel is relatively close: each Compute Device behaves like a cluster, which consists of computers (in the form of Compute Unit - CU), and each Compute Unit can contain multiple Processing Element(PE), much like a computer can contain multiple CPUs.

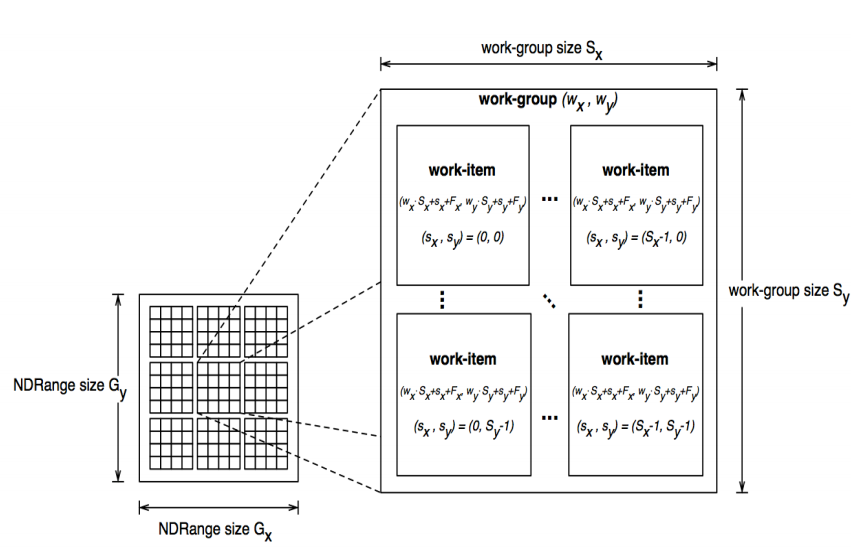

Execution Model

Figure 2 depicts the conceptual model of execution. There is host program executes on the host and manages the execution of multiple compute kernels. Each compute kernel can execute in parallel, on separate work-item. Work-items close together can then be grouped into work-group. This execution model closely matches the platform model described above, as each PE often executes one kernel, and one workgroup is often handled by one CU.

Memory Model

The memory model of OpenCL reflects the design of the Platform Model and Execution Model. Specifically, there are four levels of memory, as shown in the table below:

| Memory Regions | Accessible by |

|---|---|

| Global Memory | All work-items in all work-groups |

| Constant Memory | All work-items in all work-groups |

| Local Memory | All work-items in a work-group |

| Private Memory | Private to a work-item |

Programming Model

To take advantage of OpenCL architecture, two programming models can be used. The first one is data parallel programming model and the other one is task parallel programming model. In the data parallel programming model, every computer kernel executes the same block of code, but on different data. Therefore, conceptually there is one global program counter for all computer kernels. The programmer can choose to manually partition the kernels into group, or delegate this task to the OpenCL middleware. Beside the data parallel programming model, programmer can choose to use the task parallel programming model. In this case, each PE will execute a separate compute kernel and parallelism is achieved by running multiple kernels on separate work-item.

Implementation

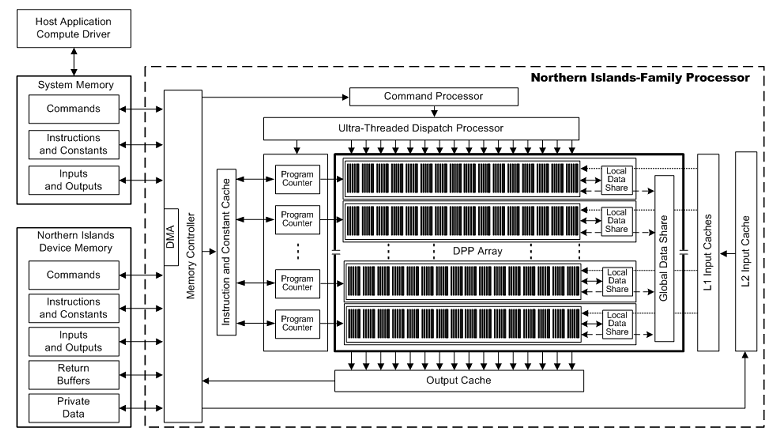

AMD Implementation

The first is GPU generation from AMD that supports OpenCL 1.1 is the Radeon HD 5000 series. Latest generations also support OpenCL 1.1, at the same time significantly improve performance due to architectural refinements. The table below will summarize key characteristics of Radeon HD 6000 GPUs

| Model | Clock rate | Config core | Memory | TDP (W) | Double-precision FP | ||||

|---|---|---|---|---|---|---|---|---|---|

| Core (MHz) | Memory (MHz) | Bandwidth (GB/s) | Bus type | Bus width (bit) | Idle | Max. | |||

| Radeon HD 6450 | 625-750 | 533-800 800-900 | 160:8:4 | 8.5-12.8 25.6-28.8 | DDR3, GDDR5 | 64 | ? | 31 | No |

| Radeon HD 6570 | 650 | 900 1000 | 480:24:8 | 28.8 64 | GDDR3 GDDR5 | 128 | ? | ? | No |

| Radeon HD 6670 | 800 | 1000 | 480:24:8 | 64 | GDDR5 | 128 | ? | 63 | No |

| Radeon HD 6750 | 720:36:16 | up to 73.6 | GDDR5 | 128 | 16 | 86 | No | ||

| Radeon HD 6770 | 800:40:16 | up to 76.8 | GDDR5 | 128 | 18 | 108 | No | ||

| Radeon HD 6850 | 775 | 1000 | 960:48:32 | 128 | GDDR5 | 256 | 19 | 127 | No |

| Radeon HD 6870 | 900 | 1050 | 1120:56:32 | 134.4 | GDDR5 | 256 | 19 | 151 | No |

| Radeon HD 6950 | 800 | 1250 | 1408:88:32 | 160 | GDDR5 | 256 | 20 | 200 | 563 |

| Radeon HD 6970 | 880 | 1375 | 1536:96:32 | 176 | GDDR5 | 256 | 20 | 250 | 675 |

| Radeon HD 6990 | ? | ? | 3072:?:? | ? | GDDR5 | 2x 256 | ? | ~300 | Yes |

As can be seen from table one, GPUs can varied of multiple parameters, which lead to difference in performance. Top-of-the-line model like Radeon HD 6970 is equipped with more cores (PE), wider memory bus bandwidth and faster clock rate. As a result, it is much faster than a low end model with significantly reduced configurations.

According to specification published by AMD3, Radeon HD GPU organization has significant similarity with OpenCL platform model described above. The similarity can be seen from the figure below.

This is, however, not by chance, but by design as the closeness between the conceptual model and the implementation assists in the development process and improves performance.

NVidia Implementation

The first NVidia GPU generation to support OpenCL 1.1 is GeForce 400 series. Their architecture is shown in the following figure:

[[Image:OpenCL_Fig4.png|frame|center|Figure 4: NVidia Fermi Architecture4

As shown in figure four, NVidia Implementation follows almost the same design, with slightly different variations. Each Core is essentially a PE; which are grouped into Streaming Multiprocessor (SM). Cores have access to two level caches and a register file, similar to AMD’s design. The differences apparently are in terminology and are not fundamental.

Sample Application

OpenCL applications are mainly implemented in C; a C++ wrapper built on top of C is also available. According to the OpenCL 1.1 specification, the OpenCL C language is based on ISO C99, with extensions designed for parallel computing1. The following is sample application to compute a Fast Fourier Transformation (FFT)5:

// create a compute context with GPU device context = clCreateContextFromType(NULL, CL_DEVICE_TYPE_GPU, NULL, NULL, NULL); // create a command queue queue = clCreateCommandQueue(context, NULL, 0, NULL); // allocate the buffer memory objects memobjs[0] = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR, sizeof(float)*2*num_entries, srcA, NULL); memobjs[1] = clCreateBuffer(context, CL_MEM_READ_WRITE, sizeof(float)*2*num_entries, NULL, NULL); // create the compute program program = clCreateProgramWithSource(context, 1, &fft1D_1024_kernel_src, NULL, NULL); // build the compute program executable clBuildProgram(program, 0, NULL, NULL, NULL, NULL); // create the compute kernel kernel = clCreateKernel(program, "fft1D_1024", NULL); // set the args values clSetKernelArg(kernel, 0, sizeof(cl_mem), (void *)&memobjs[0]); clSetKernelArg(kernel, 1, sizeof(cl_mem), (void *)&memobjs[1]); clSetKernelArg(kernel, 2, sizeof(float)*(local_work_size[0]+1)*16, NULL); clSetKernelArg(kernel, 3, sizeof(float)*(local_work_size[0]+1)*16, NULL); // create N-D range object with work-item dimensions and execute kernel global_work_size[0] = num_entries; local_work_size[0] = 64; clEnqueueNDRangeKernel(queue, kernel, 1, NULL, global_work_size, local_work_size, 0, NULL, NULL);

The compute kernel is specified in the variable fft1D_1024_kernel_src, its source code is provided below:

// This kernel computes FFT of length 1024. The 1024 length FFT is decomposed into

// calls to a radix 16 function, another radix 16 function and then a radix 4 function

__kernel void fft1D_1024 (__global float2 *in, __global float2 *out,

__local float *sMemx, __local float *sMemy) {

int tid = get_local_id(0);

int blockIdx = get_group_id(0) * 1024 + tid;

float2 data[16];

// starting index of data to/from global memory

in = in + blockIdx; out = out + blockIdx;

globalLoads(data, in, 64); // coalesced global reads

fftRadix16Pass(data); // in-place radix-16 pass

twiddleFactorMul(data, tid, 1024, 0);

// local shuffle using local memory

localShuffle(data, sMemx, sMemy, tid, (((tid & 15) * 65) + (tid >> 4)));

fftRadix16Pass(data); // in-place radix-16 pass

twiddleFactorMul(data, tid, 64, 4); // twiddle factor multiplication

localShuffle(data, sMemx, sMemy, tid, (((tid >> 4) * 64) + (tid & 15)));

// four radix-4 function calls

fftRadix4Pass(data); // radix-4 function number 1

fftRadix4Pass(data + 4); // radix-4 function number 2

fftRadix4Pass(data + 8); // radix-4 function number 3

fftRadix4Pass(data + 12); // radix-4 function number 4

// coalesced global writes

globalStores(data, out, 64);

}

As can be seen from the above example, OpenCL C program has a clear control flow. The host program first creates a global share memory area and initializes the environment. It then instructs the driver to compile the compute kernel from OpenCL C source code to machine code specific to the hardware PEs. 3 The compute kernels are then enqueued to run on the Compute Device. When the results are available the host program will be notified. Developing a compute kernel is a relatively straightforward process. Data is first loaded from global memory to local memory. The kernels will then perform computation on this chunk of data. The result will be written back to global memory.

Compute Unified Device Architecture (CUDA)

CUDA is one of the parallel architectures available to modern GPUs. CUDA a proprietary architecture developed by NVIDIA. CUDA was introduced with NVIDIA’s GeForce 8, February 20076, series of video cards. This architecture gives programmers access to the GPUs multicore processor for performing math intensive operations. These operations include physics modeling (PhysX), physical modeling, image processing, matrix algebra, etc. These GPUs are specifically design to perform many floating point and integer operations simultaneously. CUDA is capable of handling millions of threads simultaneously with little overhead to manage this large number of threads.

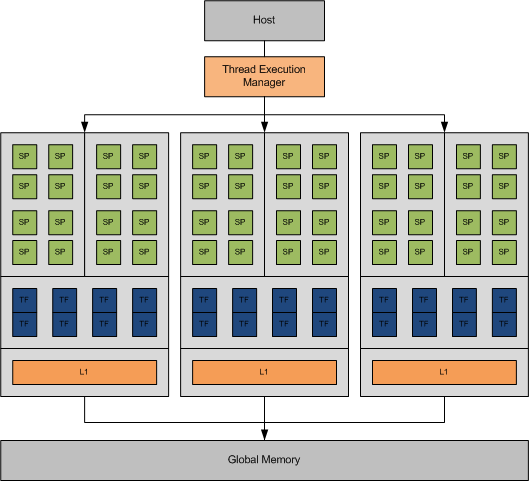

CUDA Architecture

Figure 56 shows the typical arrangement on for a GPU multiprocessor. This figure shows the general flow path of data through the GPU. Data flows from the host to the thread execution manager, which spawns and schedules the threads to each stream processor (SP). Each multi-processor, in this figure, contains eight stream processors. Each stream processor has its own memory, texture filter (TF). Each pair of processors has a shared L1 cache. Global memory is a shared memory is shared amongst all the stream processors.

CUDA Threads

In CUDA programming, serial operations are still handled by the host CPU (main processor) while parallelizable kernels are handed off to the GPU for processing. It is important to understand the layout of the CUDA architecture and memory. Figure 66 shows a simplified block diagram of a typical CUDA thread model

Each kernel is assigned a grid. Each grid contains a number of blocks. Each block contains threads (512 maximum per block).

CUDA Programming

Programming using CUDA is accomplished via language extensions or wrappers. These extensions are available for a number of common programming langauages such as:

Coding using CUDA is fairly straightforward. The listing below8 shows a simple program that will square each value in a matrix.

#include "stdafx.h"

#include <stdio.h>

#include <cuda.h>

// Kernel that executes on the CUDA device

__global__ void square_array(float *a, int N)

{

int idx = blockIdx.x * blockDim.x + threadIdx.x;

if (idx<N) a[idx] = a[idx] * a[idx];

}

// main routine that executes on the host

int main(void)

{

float *a_h, *a_d; // Pointer to host & device arrays

const int N = 10; // Number of elements in arrays

size_t size = N * sizeof(float);

a_h = (float *)malloc(size); // Allocate array on host

cudaMalloc((void **) &a_d, size); // Allocate array on device

// Initialize host array and copy it to CUDA device

for (int i=0; i<N; i++)

a_h[i] = (float)i;

cudaMemcpy(a_d, a_h, size, cudaMemcpyHostToDevice);

// Do calculation on device:

int block_size = 4;

int n_blocks = N/block_size + (N%block_size == 0 ? 0:1);

square_array <<< n_blocks, block_size >>> (a_d, N);

// Retrieve result from device and store it in host array

cudaMemcpy(a_h, a_d, sizeof(float)*N, cudaMemcpyDeviceToHost);

// Print results

for (int i=0; i<N; i++)

printf("%d %f\n", i, a_h[i]);

// Cleanup

free(a_h);

cudaFree(a_d);

}

References

- http://www.khronos.org/registry/cl/specs/opencl-1.1.pdf

- http://en.wikipedia.org/wiki/Comparison_of_AMD_graphics_processing_units#Northern_Islands_.28HD_6xxx.29_series

- http://developer.amd.com/gpu/AMDAPPSDK/assets/AMD_HD_6900_Series_Instruction_Set_Architecture.pdf

- http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf

- http://en.wikipedia.org/wiki/Opencl

- http://en.wikipedia.org/wiki/CUDA

- http://courses.engr.illinois.edu/ece498/al

- http://llpanorama.wordpress.com/2008/05/21/my-first-cuda-program