CSC/ECE 506 Spring 2010/ch 9

Revision as of 23:02, 28 April 2010

In addition to a proper cache coherency model, it is also important for a multiprocessor system to provide support at the hardware level for synchronization. The most common types of synchronization are locks and barriers, which are discussed in this chapter.

Lock Implementations

Locks are an important concept when programming for multicore systems. A lock protects the code inside a critical section: while thread X is inside the critical section, no other thread Y may also be inside it and possibly modifying shared values. If thread Y tries to enter while X holds the lock, Y must wait until X has exited. This can be accomplished in a variety of ways that differ in complexity and performance.

Performance Evaluation

The Solihin text gives four criteria for evaluating the performance of a lock implementation.

- Acquisition Latency - How much time does it take to acquire the lock?

- Traffic - How much bus traffic is generated by threads attempting to acquire the lock?

- Fairness - Are threads granted the lock in the order in which they requested it (FIFO), or does acquisition depend on luck?

- Storage - How much storage is needed compared to the number of threads?

Atomic Instructions

Since a multiprocessor system cannot rely on disabling interrupts as an effective way to execute a piece of code atomically, there must be hardware support for atomic operations. [1]

Being able to execute an atomic instruction is a requirement for most lock implementations. When a processor attempts to set a lock, either the lock is fully set and the thread is able to enter the critical section, or the lock is not set and it appears that none of the instructions required to set the lock have executed.

In the x86 instruction set, the CMPXCHG (compare and exchange) instruction can be used in a lock implementation to guarantee atomicity. The instruction takes a destination operand and a source operand. The accumulator is compared with the destination; if they are equal, the destination is loaded with the source. If they are NOT equal, the accumulator is loaded with the destination value. To ensure that the instruction executes atomically, it must be issued with the LOCK prefix. This is useful in implementing some locks, such as ticket locks. [2]
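
The compare-and-exchange behavior described above can be modeled in a few lines of Python. This is only a sketch of the semantics, not real x86 code: the `Memory` class, the `cmpxchg` function, and the `acquire`/`release` helpers are hypothetical names, and an internal `threading.Lock` stands in for the atomicity that the LOCK prefix would provide in hardware.

```python
import threading
import time

class Memory:
    """Hypothetical one-word memory cell used as the CMPXCHG destination."""
    def __init__(self, value=0):
        self.value = value
        self._bus = threading.Lock()  # assumption: models the LOCK prefix

def cmpxchg(dest, accumulator, source):
    """Model of LOCK CMPXCHG. Returns (zero_flag, new_accumulator)."""
    with dest._bus:
        if dest.value == accumulator:
            dest.value = source           # equal: destination <- source
            return True, accumulator
        return False, dest.value          # not equal: accumulator <- destination

def acquire(lock_word):
    # Spin until we atomically swap 0 (free) for 1 (held).
    while True:
        swapped, _ = cmpxchg(lock_word, 0, 1)
        if swapped:
            return
        time.sleep(0)                     # yield to other Python threads

def release(lock_word):
    lock_word.value = 0                   # a plain store frees the lock

def demo(num_threads=4, increments=200):
    """Increment a shared counter under the spin lock and return its final value."""
    lock_word = Memory(0)
    counter = [0]

    def worker():
        for _ in range(increments):
            acquire(lock_word)
            counter[0] += 1               # critical section
            release(lock_word)

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter[0]
```

Calling `demo(4, 200)` runs four threads that each enter the critical section 200 times. The lock built here is a simple test-and-set style spin lock, chosen because it is the shortest illustration; it is not the ticket lock mentioned above.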

Hand-off Lock

Another type of lock, which is not discussed in the text, is known as the "hand-off" lock. The first thread to arrive acquires the lock, since no other thread holds it. When another thread attempts to acquire the lock, it sees that the lock is in use and adds itself to a queue. It can then sleep until it is woken by the thread that holds the lock. When the holder is finished, it passes the lock directly to the next thread in the queue. [www.cs.duke.edu/~chase/cps110/slides/threads3.ppt]
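
A minimal sketch of the hand-off idea, under some assumptions not found in the source: the class name `HandoffLock`, the use of one `threading.Event` per sleeping thread, and a small internal guard lock protecting the queue are all illustrative choices.

```python
import threading
import time
from collections import deque

class HandoffLock:
    """Sketch of a hand-off lock: waiters queue up and are woken in FIFO order."""

    def __init__(self):
        self._guard = threading.Lock()    # protects _held and _waiters
        self._held = False
        self._waiters = deque()           # one Event per sleeping thread

    def acquire(self):
        with self._guard:
            if not self._held:            # lock is free: take it immediately
                self._held = True
                return
            ticket = threading.Event()
            self._waiters.append(ticket)  # lock in use: join the queue
        ticket.wait()                     # sleep until the holder hands off

    def release(self):
        with self._guard:
            if self._waiters:
                # Ownership passes directly to the next thread; _held stays True.
                self._waiters.popleft().set()
            else:
                self._held = False

def demo(num_threads=4):
    """Queue workers in a known order and return the order they got the lock."""
    lock = HandoffLock()
    order = []

    def worker(i):
        lock.acquire()
        order.append(i)
        lock.release()

    lock.acquire()                        # main holds the lock so workers must queue
    threads = []
    for i in range(num_threads):
        t = threading.Thread(target=worker, args=(i,))
        t.start()
        while len(lock._waiters) <= i:    # wait until worker i is in the queue
            time.sleep(0.001)
        threads.append(t)
    lock.release()                        # hand off to the first queued worker
    for t in threads:
        t.join()
    return order
```

Because the lock is never marked free while threads are queued, a late arrival cannot jump ahead of a sleeping thread, which is what gives this design its FIFO fairness.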

Avoiding Locks

Locks can cause a number of problems, so a programmer should try to write programs in a way that avoids them where possible. [3]

One of the most well-known issues is deadlock. This can occur when threads are waiting to acquire a lock that will never be released. For example, if two threads are both spinning on a lock that is locked, they will continue to spin forever, as each thread 'thinks' that the other is inside the critical section.

Another problem with locks is that performance is not optimal, because a lock is often used when there is only a chance of conflict. This approach yields slower performance than might be possible with other methods. It also raises the question of granularity, that is, how much of the code should be protected by a single critical section. The programmer must decide between many small (fine-grained) locks and fewer, more encompassing locks. This decision can greatly affect the performance of the program. [4]

Barrier Implementations

In many ways, barriers are simpler than locks. A barrier is simply a point in a program that all participating threads must reach before the parallel program is allowed to continue. When using barriers, a programmer does not have to be concerned with advanced topics such as fairness, which must be considered when programming with locks.

Performance Evaluation

The Solihin text gives two metrics by which the performance of a barrier implementation should be evaluated.

- Latency - The time required to enter and exit a barrier.

- Traffic - Communications overhead required by the barrier.

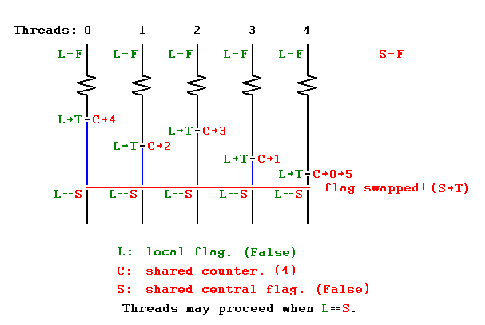

Sense-Reversal Barrier

This is a centralized barrier in which a single count variable, protected by a lock, is shared among all the threads. Each thread, on reaching the barrier, increments the count and waits until the count has reached the number of threads for which the barrier was set up. The last thread to arrive resets the count and toggles a shared sense flag, which releases the waiting threads and allows the barrier to be reused.

Since all the threads spin on a single variable, the miss rate scales quadratically with the number of processors.

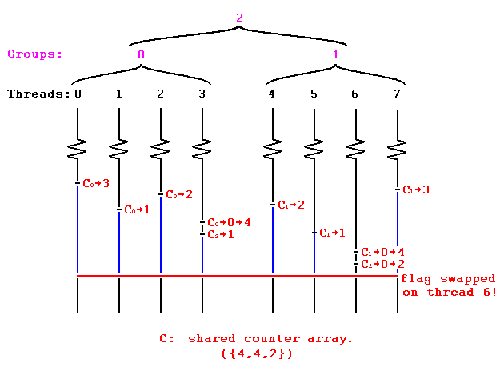
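
The centralized barrier described above can be sketched as follows. The class name `SenseReversalBarrier`, the per-thread sense kept in `threading.local`, and the `demo` harness are assumptions made for illustration; a real implementation would spin on a cached memory location rather than yield inside a Python loop.

```python
import threading
import time

class SenseReversalBarrier:
    """Centralized barrier: one lock-protected count plus a shared sense flag."""

    def __init__(self, num_threads):
        self.num_threads = num_threads
        self.count = 0
        self.sense = False                  # shared flag that all waiters spin on
        self._lock = threading.Lock()       # protects count
        self._local = threading.local()     # each thread's private sense

    def wait(self):
        # Flip this thread's private sense for the new barrier episode.
        my_sense = not getattr(self._local, "sense", False)
        self._local.sense = my_sense
        with self._lock:
            self.count += 1
            if self.count == self.num_threads:
                self.count = 0              # reset for the next episode
                self.sense = my_sense       # toggling the flag releases the waiters
        while self.sense != my_sense:       # every waiter spins on the same flag
            time.sleep(0)                   # yield so other Python threads can run

def demo(num_threads=4, phases=5):
    """Return, for every barrier exit, how many threads had arrived in that phase."""
    barrier = SenseReversalBarrier(num_threads)
    arrived = [0] * phases
    guard = threading.Lock()
    observed = []

    def worker():
        for p in range(phases):
            with guard:
                arrived[p] += 1
            barrier.wait()
            observed.append(arrived[p])     # equals num_threads if the barrier held

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return observed
```

Every value returned by `demo` equals the thread count only if no thread left a barrier before all threads had entered it. Reversing the sense each episode is what lets the same count and flag be reused without a separate reset phase.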

Combining Tree Barrier

This is a distributed barrier in which groups of processors form clusters, and each cluster updates its own local variable. When a local variable reaches a value equal to the number of threads updating it, the barrier proceeds to increment another variable higher in the combining-tree hierarchy. When the variable at the highest level of the hierarchy reaches its maximum value, all the threads are considered to have reached the barrier and synchronization is complete.

Since the threads update local variables in smaller groups, the miss rate is not as high as in the sense-reversal barrier.

The diagram below shows the operation of a combining tree barrier with the threads divided into two groups. The variables C0 and C1 are local to each group, and C2 is the variable at the higher level of the hierarchy in the tree.

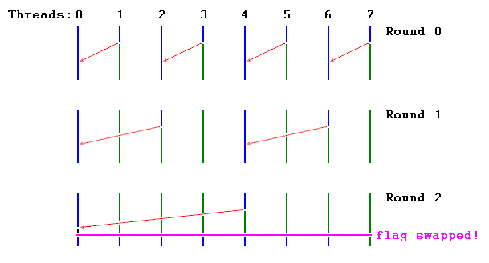
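
A two-level combining tree like the one in the diagram can be sketched as below. The names `Node` and `CombiningTreeBarrier`, the `group_size` parameter, and the single global release flag are assumptions of this sketch; in particular, releasing all threads through one flag is a simplification, and other designs propagate the wake-up back down the tree.

```python
import threading
import time

class Node:
    """One counter in the tree, e.g. C0, C1 (leaves) or C2 (root)."""
    def __init__(self, expected, parent=None):
        self.expected = expected          # number of arrivals that complete this node
        self.parent = parent
        self.count = 0
        self.lock = threading.Lock()

class CombiningTreeBarrier:
    def __init__(self, num_threads, group_size=2):
        self.group_size = group_size
        num_groups = (num_threads + group_size - 1) // group_size
        self.root = Node(num_groups)
        self.leaves = [
            Node(min(group_size, num_threads - g * group_size), self.root)
            for g in range(num_groups)
        ]
        self.sense = False                # global release flag (simplification)
        self._local = threading.local()

    def wait(self, thread_id):
        my_sense = not getattr(self._local, "sense", False)
        self._local.sense = my_sense
        node = self.leaves[thread_id // self.group_size]
        while node is not None:
            with node.lock:
                node.count += 1
                last = node.count == node.expected
                if last:
                    node.count = 0        # reset for the next episode
            if not last:
                break                     # someone else will finish this node
            node = node.parent            # last arrival climbs one level
        else:
            self.sense = my_sense         # last arrival at the root releases everyone
        while self.sense != my_sense:
            time.sleep(0)                 # yield so other Python threads can run

def demo(num_threads=4, phases=5, group_size=2):
    """Return, for every barrier exit, how many threads had arrived in that phase."""
    barrier = CombiningTreeBarrier(num_threads, group_size)
    arrived = [0] * phases
    guard = threading.Lock()
    observed = []

    def worker(i):
        for p in range(phases):
            with guard:
                arrived[p] += 1
            barrier.wait(i)
            observed.append(arrived[p])

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return observed
```

With four threads and `group_size=2`, threads 0 and 1 contend only on the first leaf and threads 2 and 3 only on the second, so at most two threads ever update the same counter.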

Tournament Barrier

In a tournament barrier, the threads are considered to be the leaves of a binary tree, and each node represents a flag. Two threads compete with each other, and the loser is allowed to set the flag, move to the next level up, and compete to lose against the loser from the other section of the binary tree. Thus the thread that completes last is able to set the highest flag in the binary tree. Setting this flag indicates to all the threads that the barrier has been completed, and thus synchronization is achieved.

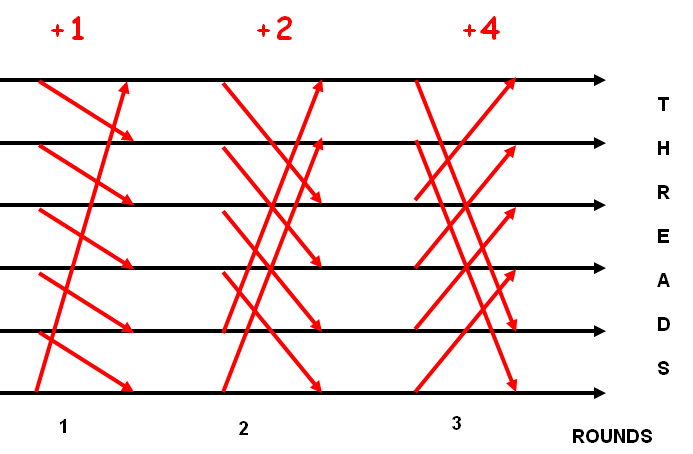
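
The sketch below uses the statically paired formulation of the tournament barrier, in which the thread that advances from each match is fixed by thread ID rather than decided by arrival order as in the description above. That substitution, the class name `TournamentBarrier`, and the single global release flag are assumptions of this sketch and are not taken from the source.

```python
import threading
import time

class TournamentBarrier:
    """Statically paired tournament: in round k, thread i plays thread i + 2**k."""

    def __init__(self, n):
        self.n = n
        self.rounds = max(1, (n - 1).bit_length())   # ceil(log2 n), at least 1
        # arrive[i][k] is written only by thread i when it drops out in round k.
        self.arrive = [[False] * self.rounds for _ in range(n)]
        self.release = False                          # set by the champion
        self.local_sense = [False] * n

    def wait(self, i):
        sense = not self.local_sense[i]
        self.local_sense[i] = sense
        for k in range(self.rounds):
            step = 1 << k
            if i % (2 * step) == 0:
                # This thread advances; wait for its opponent (if any) to arrive.
                partner = i + step
                if partner < self.n:
                    while self.arrive[partner][k] != sense:
                        time.sleep(0)
            else:
                # This thread drops out: signal the opponent and stop climbing.
                self.arrive[i][k] = sense
                break
        else:
            self.release = sense          # thread 0 reached the top: release all
        while self.release != sense:
            time.sleep(0)                 # yield so other Python threads can run

def demo(num_threads=4, phases=5):
    """Return, for every barrier exit, how many threads had arrived in that phase."""
    barrier = TournamentBarrier(num_threads)
    arrived = [0] * phases
    guard = threading.Lock()
    observed = []

    def worker(i):
        for p in range(phases):
            with guard:
                arrived[p] += 1
            barrier.wait(i)
            observed.append(arrived[p])

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return observed
```

Each flag in this version has exactly one writer and one reader, so no lock is needed on the way up the tree; only the final release flag is read by every thread.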

Disseminating Barrier

In this barrier, each thread maintains a record of the activity of the other threads. In every round i (starting from 0) with n threads, thread A notifies thread (A + 2^i) mod n. Thus after ⌈log2 n⌉ rounds, every thread knows that every other thread has reached the barrier.
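
The notification pattern can be sketched as follows, with thread A signaling thread (A + 2^i) mod n in round i. The names `partners` and `DisseminationBarrier` are hypothetical, and the use of a per-thread episode counter to make the flags reusable is an assumption of this sketch rather than something described in the source.

```python
import threading
import time

def partners(a, n):
    """Threads that thread a notifies in rounds 0, 1, ... of an n-thread barrier."""
    rounds = (n - 1).bit_length()         # ceil(log2 n)
    return [(a + (1 << r)) % n for r in range(rounds)]

class DisseminationBarrier:
    def __init__(self, n):
        self.n = n
        self.rounds = (n - 1).bit_length()
        # flags[t][r] holds the latest episode in which t was notified in round r.
        # Each flag has exactly one writer: thread (t - 2**r) mod n.
        self.flags = [[0] * self.rounds for _ in range(n)]
        self.episode = [0] * n            # how many barriers each thread has entered

    def wait(self, a):
        self.episode[a] += 1
        e = self.episode[a]
        for r, partner in enumerate(partners(a, self.n)):
            self.flags[partner][r] = e    # notify the partner for this round
            while self.flags[a][r] < e:   # wait for our own notification
                time.sleep(0)             # yield so other Python threads can run

def demo(num_threads=4, phases=5):
    """Return, for every barrier exit, how many threads had arrived in that phase."""
    barrier = DisseminationBarrier(num_threads)
    arrived = [0] * phases
    guard = threading.Lock()
    observed = []

    def worker(i):
        for p in range(phases):
            with guard:
                arrived[p] += 1
            barrier.wait(i)
            observed.append(arrived[p])

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return observed
```

For example, `partners(0, 4)` gives `[1, 2]`: thread 0 notifies thread 1 in round 0 and thread 2 in round 1. There is no central counter and no designated releasing thread, so every thread does the same amount of work.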

Performance Comparison

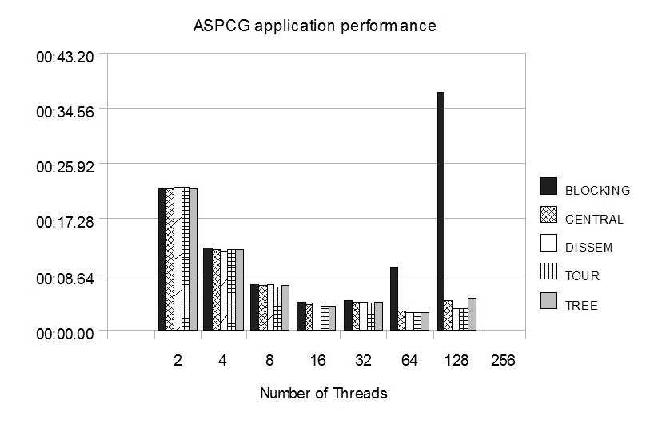

Additive Schwarz Preconditioned Conjugate Gradient (ASPCG) kernel on Altix System

The figure shows the timings of the ASPCG kernel using the different barrier implementations. The blocking barrier does not scale with the number of threads, because contention increases as the number of threads increases. [9]

EPCC Microbenchmark

The figure shows the barrier timings for the EPCC Microbenchmark using the different barrier implementations. The centralized blocking barrier does not scale with the number of threads, because contention increases as the number of threads increases. [10]

References

- Yan Solihin, Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems, Solihin Books, August 2009.

- CMPXCHG - Compare and Exchange [11]

- Lock (computer science) [12]

- www.cs.duke.edu/~chase/cps110/slides/threads3.ppt

- Deadlock [13]

- Barrier Synchronization in Java, Carwyn Ball and Mark Bull [14]

- www.cs.brown.edu/courses/cs176/barrier.ppt [15]

- Scalability evaluation of barrier algorithms for OpenMP [16]