CSC/ECE 506 Spring 2010/ch1 lm

<li>[http://www4.ncsu.edu/~fmeng/papers/supercomputer_archi.pdf Current Trend of Supercomputer Architecture]</li>

<li>[http://www.top500.org TOP500 Supercomputers]</li>

<li>[http://royal.pingdom.com/2009/06/24/the-triumph-of-linux-as-a-supercomputer-os/ The triumph of Linux as a supercomputer OS]</li>

</ol>

Revision as of 21:53, 29 January 2010

"Look through the www.top500.org site, and any other relevant material you can find, and write about supercomputer trends since the beginning of top500.org. Specifically, look at how the architectures, operating systems, and programming models have changed. What models were dominant, say, for each generation, or five-year interval? What technological trends caused the changes? Please write an integrated description. You can link to other Web sites, but your description should be self-contained."

A supercomputer is a computer at the frontline of current processing capacity, particularly speed of calculation. Supercomputers were introduced in the 1960s, designed primarily by Seymour Cray at Control Data Corporation (CDC), which led the market into the 1970s until Cray left to form his own company, Cray Research. Cray then took over the supercomputer market with his new designs, holding the top spot in supercomputing for five years (1985–1990). In the 1980s a large number of smaller competitors entered the market, paralleling the creation of the minicomputer market a decade earlier, but many of these disappeared in the mid-1990s "supercomputer market crash".

Today, supercomputers are typically one-of-a-kind custom designs produced by "traditional" companies such as Cray, IBM and Hewlett-Packard, which had purchased many of the 1980s companies to gain their experience. As of the November 2009 TOP500 list, the Cray Jaguar is the fastest supercomputer in the world.

The term supercomputer itself is rather fluid: today's supercomputer tends to become tomorrow's ordinary computer. CDC's early machines were simply very fast scalar processors, some ten times the speed of the fastest machines offered by other companies. In the 1970s most supercomputers were built around vector processors, and many of the newer players developed their own such processors at a lower price to enter the market. In the early and mid-1980s, machines with a modest number of vector processors working in parallel, typically four to sixteen, became the standard. In the later 1980s and 1990s, attention turned from vector processors to massively parallel processing (MPP) systems with thousands of "ordinary" CPUs, some off-the-shelf units and others custom designs. Today, parallel designs are based on "off the shelf" server-class microprocessors such as the PowerPC, Opteron, or Xeon, and most modern supercomputers are highly tuned computer clusters that combine commodity processors with custom interconnects.

Supercomputers are used for highly calculation-intensive tasks such as problems in quantum physics, weather forecasting, climate research, molecular modeling (computing the structures and properties of chemical compounds, biological macromolecules, polymers, and crystals), and physical simulations (such as simulating airplanes in wind tunnels, simulating the detonation of nuclear weapons, and research into nuclear fusion). One particular class, known as Grand Challenge problems, consists of problems whose full solution requires effectively unlimited ("semi-infinite") computing resources.

Relevant here is the distinction between capability computing and capacity computing, as defined by Graham et al. Capability computing typically means using the maximum computing power to solve a single large problem in the shortest amount of time; often a capability system can solve a problem of a size or complexity that no other computer can. Capacity computing, in contrast, typically means using efficient, cost-effective computing power to solve moderately large problems or many small problems, or to prepare for a run on a capability system.

Timeline of supercomputers

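This is a list of the record holders for fastest general-purpose supercomputer in the world, and the year each one set the record. For entries prior to 1993, the list draws on various sources, including the CDC timeline at the Computer History Museum. From 1993 to the present, the list reflects the TOP500 rankings (the TOP500 directory page gives results for each list since June 1993), and the "Peak speed" column gives the TOP500 "Rmax" rating, i.e. the best performance measured on the LINPACK benchmark rather than the machine's theoretical peak (Rpeak). The earliest entries are rated in operations per second (OPS) rather than floating-point operations per second (FLOPS), so the two units are not directly comparable.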

| Year | Supercomputer | Peak speed (Rmax) | Location |
|---|---|---|---|
| 1938 | Z1 | 1 OPS | Konrad Zuse, Berlin, Germany |
| 1941 | Z3 | 20 OPS | Konrad Zuse, Berlin, Germany |
| 1943 | Colossus 1 | 5 kOPS | Post Office Research Station, Bletchley Park, UK |
| 1944 | Colossus 2 (Single Processor) | 25 kOPS | Post Office Research Station, Bletchley Park, UK |
| 1946 | Colossus 2 (Parallel Processor) | 50 kOPS | Post Office Research Station, Bletchley Park, UK |
| 1946 | UPenn ENIAC (before 1948+ modifications) | 5 kOPS | Department of War, Aberdeen Proving Ground, Maryland, USA |
| 1954 | NORC | 67 kOPS | Department of Defense, U.S. Naval Proving Ground, Dahlgren, Virginia, USA |
| 1956 | MIT TX-0 | 83 kOPS | Massachusetts Inst. of Technology, Lexington, Massachusetts, USA |
| 1958 | IBM AN/FSQ-7 | 400 kOPS | U.S. Air Force sites across the continental USA and 1 site in Canada (52 computers) |
| 1960 | LARC | 250 kFLOPS | Atomic Energy Commission (AEC), Lawrence Livermore National Laboratory, California, USA |
| 1961 | IBM 7030 "Stretch" | 1.2 MFLOPS | AEC-Los Alamos National Laboratory, New Mexico, USA |
| 1964 | CDC 6600 | 3 MFLOPS | AEC-Lawrence Livermore National Laboratory, California, USA |
| 1969 | CDC 7600 | 36 MFLOPS | |
| 1974 | CDC STAR-100 | 100 MFLOPS | |
| 1975 | Burroughs ILLIAC IV | 150 MFLOPS | USA |
| 1976 | Cray-1 | 250 MFLOPS | USA (80+ sold worldwide) |
| 1981 | CDC Cyber 205 | 400 MFLOPS | (~40 systems worldwide) |
| 1983 | Cray X-MP/4 | 941 MFLOPS | U.S. Department of Energy (DoE) Los Alamos National Laboratory; Lawrence Livermore National Laboratory; Battelle; Boeing |
| 1984 | M-13 | 2.4 GFLOPS | USSR |
| 1985 | Cray-2/8 | 3.9 GFLOPS | DoE-Lawrence Livermore National Laboratory, California, USA |
| 1989 | ETA10-G/8 | 10.3 GFLOPS | USA |
| 1990 | NEC SX-3/44R | 23.2 GFLOPS | NEC Fuchu Plant, Fuchū, Tokyo, Japan |
| 1993 | CM-5/1024 | 59.7 GFLOPS | DoE-Los Alamos National Laboratory; National Security Agency |
| | Fujitsu Numerical Wind Tunnel | 124.50 GFLOPS | National Aerospace Laboratory, Tokyo, Japan |
| | Paragon XP/S 140 | 143.40 GFLOPS | DoE-Sandia National Laboratories, New Mexico, USA |
| 1994 | Fujitsu Numerical Wind Tunnel | 170.40 GFLOPS | National Aerospace Laboratory, Tokyo, Japan |
| 1996 | Hitachi SR2201/1024 | 220.4 GFLOPS | University of Tokyo, Japan |
| | Hitachi/Tsukuba CP-PACS/2048 | 368.2 GFLOPS | Center for Computational Physics, University of Tsukuba, Tsukuba, Japan |
| 1997 | Intel ASCI Red/9152 | 1.338 TFLOPS | DoE-Sandia National Laboratories, New Mexico, USA |
| 1999 | Intel ASCI Red/9632 | 2.3796 TFLOPS | |
| 2000 | IBM ASCI White | 7.226 TFLOPS | DoE-Lawrence Livermore National Laboratory, California, USA |
| 2002 | NEC Earth Simulator | 35.86 TFLOPS | Earth Simulator Center, Yokohama, Japan |
| 2004 | IBM Blue Gene/L | 70.72 TFLOPS | DoE/IBM Rochester, Minnesota, USA |
| 2005 | | 136.8 TFLOPS | DoE/U.S. National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| | | 280.6 TFLOPS | |
| 2007 | | 478.2 TFLOPS | |
| 2008 | IBM Roadrunner | 1.026 PFLOPS | DoE-Los Alamos National Laboratory, New Mexico, USA |
| | | 1.105 PFLOPS | |
| 2009 | Jaguar | 1.759 PFLOPS | DoE-Oak Ridge National Laboratory, Tennessee, USA |

A blank Year or Supercomputer cell repeats the value from the row above.
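The Rmax figures in the timeline make it possible to estimate how quickly the top of the list has grown. The sketch below is a back-of-the-envelope calculation, not a TOP500 methodology: it assumes smooth exponential growth between just two data points from the table, the CM-5/1024 (59.7 GFLOPS, 1993) and Jaguar (1.759 PFLOPS, 2009). The names `growth_stats`, `START`, and `END` are hypothetical.

```python
import math

# (year, Rmax in GFLOPS) for two record holders from the timeline:
# the CM-5/1024 in 1993 and Jaguar in 2009.
START = (1993, 59.7)          # CM-5/1024, 59.7 GFLOPS
END = (2009, 1_759_000.0)     # Jaguar, 1.759 PFLOPS = 1,759,000 GFLOPS


def growth_stats(start, end):
    """Return (overall speedup, annual growth factor, doubling time in years).

    Assumes (hypothetically) that performance grew as a smooth exponential
    between the two data points, which real TOP500 data only approximates.
    """
    (y0, r0), (y1, r1) = start, end
    years = y1 - y0
    speedup = r1 / r0
    annual_factor = speedup ** (1.0 / years)
    # Solve 2 ** (years / T) == speedup for the doubling time T.
    doubling_years = years * math.log(2) / math.log(speedup)
    return speedup, annual_factor, doubling_years


if __name__ == "__main__":
    speedup, factor, doubling = growth_stats(START, END)
    print(f"speedup {speedup:,.0f}x, x{factor:.2f} per year, "
          f"doubling every {doubling * 12:.1f} months")
```

Under that assumption the record Rmax grew roughly 29,000-fold over 16 years, about 1.9x per year, or a doubling roughly every 13 months. Choosing different endpoints from the table shifts these numbers, so they are best read as an order-of-magnitude trend.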

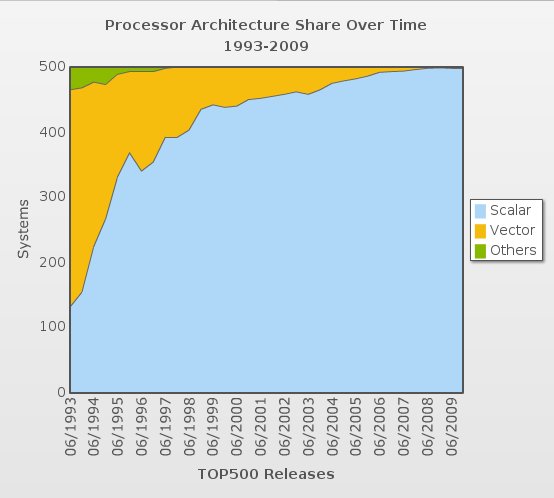

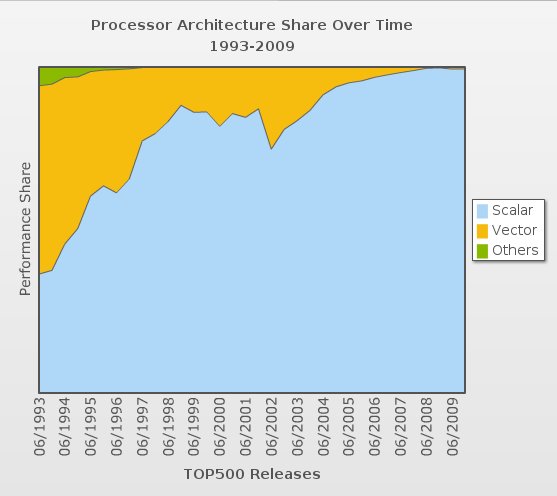

Processors

Processor Architecture

Processor Family

Number of Processors

Operating Systems

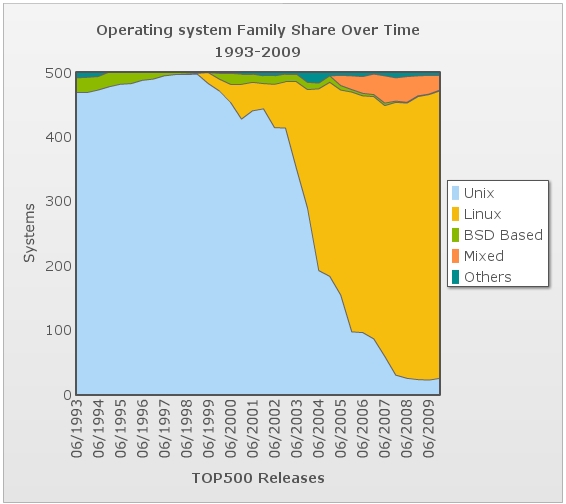

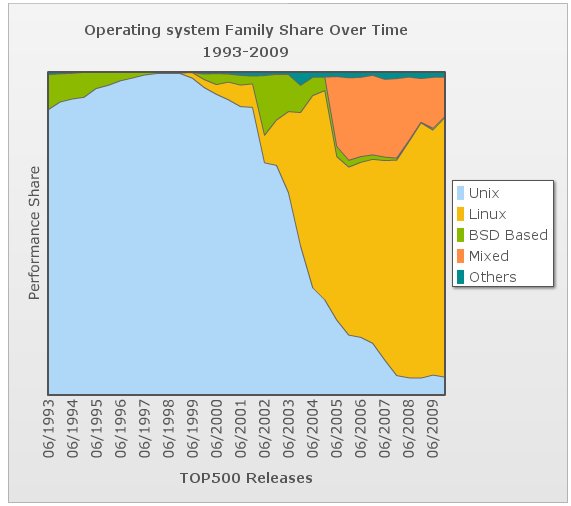

Operating Systems Family

Supercomputers use a variety of operating systems, and the operating system of a given supercomputer largely depends on its vendor. Until the early-to-mid-1980s, supercomputers usually sacrificed instruction set compatibility and code portability for performance (processing and memory access speed). For the most part, supercomputers up to this time (unlike high-end mainframes) had vastly different operating systems. The Cray-1 alone had at least six different proprietary operating systems, largely unknown to the general computing community. Similarly, different and incompatible vectorizing and parallelizing compilers for Fortran existed. This trend would have continued with the ETA-10 were it not for the initial instruction set compatibility between the Cray-1 and the Cray X-MP, and the adoption of operating systems such as Cray's UNICOS, or Linux.

According to TOP500 statistics, before 2000 almost all listed systems ran an operating system from the Unix family, while after 2000 Linux distributions were adopted by a steadily growing share of supercomputers. In the November 2009 list, 446 of the 500 systems (89.2%) ran some distribution of Linux, often one customized by the vendor. Among the top 20 systems, Linux is even more dominant:

19 of the top 20 supercomputers in the world are running some form of Linux.

Among the top 10, every system runs Linux. The list makes it clear that prominent supercomputer vendors such as Cray, IBM and SGI have wholeheartedly embraced Linux. In a few cases Linux coexists with a lightweight kernel running on the compute nodes (the part of the supercomputer that performs the actual calculations), but often even these lightweight kernels are based on Linux. Cray, for example, uses a modified version of Linux that it calls CNL (Compute Node Linux).

Operating Systems Trend -- Why Linux?

Architecture

In supercomputer design, the emerging trend is that most new systems are minor variations on a single theme: clusters of RISC-based symmetric multiprocessing (SMP) nodes connected by a fast network. This can be seen as a natural architectural evolution. The availability of relatively low-cost (RISC) processors and network products to connect them, together with standardised communication software, has stimulated the building of home-brew cluster computers as an alternative to complete systems offered by vendors.
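A cluster of SMP nodes naturally suggests a two-level division of work: first across nodes, which exchange data over the network, then across the cores inside each node, which share that node's memory. The following is a minimal Python sketch of such a block decomposition, not taken from any particular system; the functions `block_range` and `two_level_decomposition` and the 4-node, 2-core machine are hypothetical.

```python
def block_range(n, parts, index):
    """Return the half-open range [start, stop) of block `index` when n items
    are split into `parts` nearly equal contiguous blocks."""
    base, extra = divmod(n, parts)
    # The first `extra` blocks each receive one additional item.
    start = index * base + min(index, extra)
    stop = start + base + (1 if index < extra else 0)
    return start, stop


def two_level_decomposition(n, num_nodes, cores_per_node):
    """Map each (node, core) pair to the slice of a global index space it owns.

    Level 1 splits the work across SMP nodes (the level where data would
    travel over the network); level 2 splits each node's share across its
    cores, which share that node's memory.
    """
    layout = {}
    for node in range(num_nodes):
        node_start, node_stop = block_range(n, num_nodes, node)
        node_n = node_stop - node_start
        for core in range(cores_per_node):
            s, e = block_range(node_n, cores_per_node, core)
            layout[(node, core)] = (node_start + s, node_start + e)
    return layout


if __name__ == "__main__":
    # Hypothetical machine: 4 nodes x 2 cores, 18 work items.
    layout = two_level_decomposition(18, 4, 2)
    for (node, core), (start, stop) in sorted(layout.items()):
        print(f"node {node} core {core}: items [{start}, {stop})")
```

In a real cluster the node level would typically be handled by a message-passing library such as MPI and the core level by threads (for example OpenMP); this sketch only computes the index ranges and performs no communication.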

Interconnect

Application Area

According to TOP500 statistics, supercomputers are used in a variety of application areas, such as finance, defense, and research.