CSC/ECE 517 Fall 2009/wiki2 14 conc patterns: Difference between revisions

| Line 3: | Line 3: | ||

Within a particular realm, design patterns represent solutions to the problems that are encountered during the development phase of software development life cycle(SDLC). These patterns help in reusing of successful software architectures and designs. There are various different patterns as shown in adjacent figure. | Within a particular realm, design patterns represent solutions to the problems that are encountered during the development phase of software development life cycle(SDLC). These patterns help in reusing of successful software architectures and designs. There are various different patterns as shown in adjacent figure. | ||

==Thread-Safe Programming== | ==Thread-Safe Programming== | ||

Revision as of 09:58, 9 October 2009

Thread-Safe Programming and Concurrency Patterns

Introduction

Within a particular realm, design patterns represent solutions to the problems that are encountered during the development phase of software development life cycle(SDLC). These patterns help in reusing of successful software architectures and designs. There are various different patterns as shown in adjacent figure.

Thread-Safe Programming

In computer programming, a thread is an instance of the program running on behalf of some user or process. Thread-safe describes a program portion or routine that functions correctly during simultaneous execution by multiple threads. A thread-safe piece of code can be called from multiple threads simultaneously without "clobbering" shared data or creating "race" conditions.

For example, suppose that an application creates several threads, each of which makes a call to the same library routine which, in turn, accesses/modifies a global structure or location in memory. As each thread calls this routine, it is possible that they may try to modify this global structure/memory location at the same time. If the routine does not employ some sort of synchronization constructs to prevent data corruption, then it is not thread-safe. This is depicted in the adjacent figure.

In order to ensure that a routine is thread-safe, make sure that concurrent threads use synchronized algorithms that cooperate with each other and confine the address of a shared object to one thread whenever an unsynchronized algorithm is active.

Consider the following method in Java:

Concurrent Design Patterns

Read/Write access

This is the most common type of design pattern used to implement concurrency. This pattern allows concurrent read access to an object. However to write to an object it enforces eclusive access.

Consider a program to implement reliability features offerred by TCP/IP protocol in UDP protocol. When an acknowledgement is received from recipient then _ack_count, a global variable counter, is incremented by one. Consider that each time an UDP packet is sent from sender, a new thread is started to monitor if acknowledgement for that packet is received. If acknowledgement is not received, packet is retransmitted. This arrangement leads to a situation to resolve concurrent increment of _ack_count.

We can resolve this using Read/Write access mechanism. Here is the code in Java that implements this design pattern. Suppose there is a class called SpinLocks that has methods s_read_lock(), s_write_lock(), s_done().

int _ack_count=0;

public class Reliable_UDP {

privte SpinLocks lock_manager = new SpinLocks();

public int get_increment_ack_count{

lock_manager.s_read_lock();

int _ack_count = this.Reliable_UDP;

lock_manger.s_done();

return _ack_count;

}

public int set_increment_ack_count{

lock_manager.s_write_lock();

_ack_count++;

lock_manger.s_done();

}

}

As can be observed from above code sample, the methods of the Reliable_UDP class use a SpinLocks object to coordinate concurrent calls. They begin by calling the appropriate lock method before getting or setting values. When finished, they call the SpinLocks object's done method to release the lock.

The Read/Write Lock pattern is a specialized form of the Scheduler pattern.

Double-checked locking pattern

The Double-checked locking design pattern is used when an application has one or more critical sections of code that must execute sequentially; when multiple threads can potentially attempt to execute the critical section simultaneously; when the critical section is executed just once and when acquiring a lock on every access to the critical section causes excessive overhead.

Many familiar design patterns such as Singleton that work well for sequential programs contain subtle assumptions that do not apply in the context of concurrency. Consider the following implementation of the Singleton pattern:

class Singleton

{

public:

static Singleton *instance (void)

{

if (instance_ == 0)

// Critical section.

instance_ = new Singleton;

return instance_;

}

void method (void);

// Other methods and members omitted.

private:

static Singleton *instance_;

};

// ...

Singleton::instance ()->method ();

// ...

The above implementation does not work in the presence of preemptive multi-tasking or true parallelism. For instance, if multiple threads executing on a parallel machine invoke Singleton::instance simultaneously before it is initialized, the Singleton constructor can be called multiple times This violates the properties of a critical section.

The Solution: Double-Checked Locking

A common way to implement a critical section is to add a static Mutex to the class which ensures that the allocation and initialization of the Singleton occurs atomically. A Guard class is used to ensure that every access to Singleton::instance will automatically acquire and release the lock as follows:

class Singleton

{

public:

static Singleton *instance (void)

{

// First check

if (instance_ == 0)

{

// Ensure serialization (guard

// constructor acquires lock_).

Guard<Mutex> guard (lock_);

// Double check.

if (instance_ == 0)

instance_ = new Singleton;

}

return instance_;

// guard destructor releases lock_.

}

private:

static Mutex lock_;

static Singleton *instance_;

};

The first thread that acquires the lock will construct Singleton and assign the pointer to instance_. All threads that subsequently call instance will find instance_!= 0 and skip the initialization step. The second check prevents a race condition if multiple threads try to initialize the Singleton simultaneously. Thus, the Double-Checked Locking pattern provides advantages of minimized locking and prevention of race conditions.

Thread-Specific Storage pattern

The Thread-Specific Storage pattern alleviates several problems with multi-threading performance such as the overhead of acquiring and releasing locks and programming complexity. It improves performance and simplifies multithreaded applications by allowing multiple threads to use one logically global access point to retrieve thread-specific data without incurring locking overhead for each access. This pattern should be applied to multi-threaded applications that frequently access objects that are logically global but physically specific to each thread.

For instance, when an error occurs in a system call, operating systems like UNIX and Win32 use errno to report the problem. Consider the following typical C code fragment that receives buffers from a non-blocking TCP socket:

// One global errno per-process.

extern int errno;

void *worker (SOCKET socket)

{

// Read from the network connection

// and process the data until the connection

// is closed.

for (;;) {

char buffer[BUFSIZ];

int result = recv (socket, buffer, BUFSIZ, 0);

// Check to see if the recv() call failed.

if (result == -1) {

if (errno != EWOULDBLOCK)

// Record error result in thread-specific data.

printf ("recv failed, errno = %d", errno);

} else

// Perform the work on success.

process_buffer (buffer);

}

}

If recv returns -1 the code checks that errno != EWOULDBLOCK and prints an error message if this is not the case (e.g., if errno == EINTR), otherwise it processes the buffer it receives.

When the “global error variable” approach shown above is used in multi-threaded systems, race conditions can cause an errno value set by a method in one thread to be interpreted erroneously by applications in other threads.

Solution: Thread-Specific Storage

The Thread-specific storage pattern allows sequential methods within a thread to access thread-specific objects atomically without incurring locking overhead for each access. Also, system developers can make the use of thread-specific storage completely transparent at the source-code level via data abstraction or macros.It is also highly portable. For example, the following code illustrates how errno is defined on Solaris 2.x:

// A thread-specific errno definition (typically

// defined in <sys/errno.h>).

#if defined (_REENTRANT)

// The _errno() function returns the

// thread-specific value of errno.

#define errno (*_errno())

#else

// Non-MT behavior is unchanged.

extern int errno;

#endif /* REENTRANT */

void *worker (SOCKET socket)

{

// Exactly the same implementation shown above.

}

When the REENTRANT flag is enabled the errno symbol is defined as a macro that invokes a helper function called errno, which returns a pointer to the thread-specific value of errno. This pointer is dereferenced by the macro so that it can appear on either the left or right side of an assignment operator.

Balking Pattern

Suppose a call is made to an object's method when the object is not in a state to properly execute the method. If the method handles the situation by returning without performing its normal function, we say that the method balked.

Consider there is a class called AirPlane in Java. And it can be operated in autopilot mode as well as normal mode. Now when it is in normal mode we don't want autopilot functions to work. This arrangement raises some concurrency issues to resolve.

We have to decide when the air plane is in autopilot mode then if some action is performed manually by pilot that function should be ignored. We can resolve the concurrency issue in Java as shown below:

public class AirPlane{

private boolean _auto_pilot_mode = false;

public void _perform_some_action(){

synchronized(this){

if(_auto_pilot_mode){

return;

}

}

//Code to execute the action goes here

}

void _auto_pilot_mode_off() {

_auto_pilot_mode = false;

}

}

If the _perform_some_action is called while a action is in progress, the method balks.

Please note the use of the synchronized statement in the _perform_some_action method. It is there to ensure that if mutiple calls to the _perform_some_action method occur at the same time, the result is predictable. Exactly one of the calls will proceed normally and others will balk. Without synchronized statement, multiple calls could proceed normally at the same time.

And _auto_pilot_mode_off method is not synchronized. This is because there is never a time when setting the _auto_pilot_mode variable to false causes a problem. Since the _auto_pilot_mode_off method does not modify any other state information, concurrent calls to the _auto_pilot_mode_off are safe. However, there never should be concurrent calls to the _auto_pilot_mode_off method, because the nature of the auto pilot mechanism makes it physically impossible for two actions to complete at the same time.

Guarded Suspension and Single Threaded Execution design patterns provide an alternate way to handle method calls to objects that are not in an appropriate state to execute the method call.

Producer/Consumer

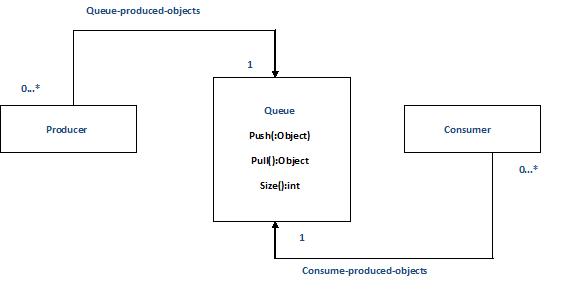

This type of design pattern can be used when the objects are produced and consumed asynchronously. Due to asynchronous behaviour, the objects needs coordination among themselves.

For example there are numerous online websites, that provide facility "Talk to a Specialist", which in turn starts new chat session with online representative.

Any number of people may attempt to initiate the chat session at any given time. There will be multiple representatives online. If the representative is not busy when a chat session starts, the representative responds to users. Otherwise, users are placed in a queue, where the user waites its turn to be seen by a online representative.

Consider that we have a class called User, that pushes a user in Queue & class Dispatcher, that pulls out a user when online representative is free to begin the chat session.

And, suppose that maximum size of queue is 50. If queue is full and User class tries to push one more user to queue then we can enforce the producer thread to wait until a consumer thread removes an object from the queue.

The above example can be implemented in Java using core Java API that includes the classes java.io.PipedInputStream and java.io.PipedOutputStream. Together, they implement a variant of the Producer-Consumer pattern called the Pipe pattern. This pattern includes only one Producer and Consumer object. The Pipe pattern usually refers to the Producer object as a data source and the Consumer object as a data sink.

public class Specialist implements Runnable {

private Queue queue_users;

...

public Specialist(Queue queue_users){

this.queue_users = queue_users;

...

}

public void run(){

User new_user = null;

..

queue_users.push(new_user);

}

public class Dispatcher implements Runnable{

private Queue queue_users;

...

public Dispatcher(Queue queue_users){

this.queue_users = queue_users;

...

}

...

public void run(){

User new_user = queue_users.pull();

...

}

...

}

And, final "Queue" class listing.

public class Queue{

private ArrayList data = new ArrayList();

...

synchronized public void push(User new_user){

data.add(new_user)

notify();

}

...

synchronized public User pull(){

while(data.size()==0){

try {

wait();

} catch(InterruptedException e) {

}

User new_user = (User)data.get(0);

data.remove(0);

return new_user;

}

public int size(){

return data.size();

}

}

In the above listing, Specialist class is a producer. And Specialist class supply objects that are used by Consumer objects. Specialist class produce objects asynchronously of threads that consume them. This means that sometimes a Producer object will produce an object when all Consumer objects are busy processing other objects. When Consumer is busy, objects are pushed in the queue.

Queue act as a buffer for objects produced by Specialist class. They remain there until a Consumer object pulls them out of queue.

Consumer use objects produced by Producer objects. They get the objects from queue and if queue is empty, consumer object waits until producer adds new object to the queue.

The relationship between Producer-Consumer and Queue is shown in the adjacent diagram.