CSC/ECE 517 Fall 2009/wiki2 14 conc patterns


Revision as of 06:31, 9 October 2009

Thread-Safe Programming and Concurrency Patterns

Introduction

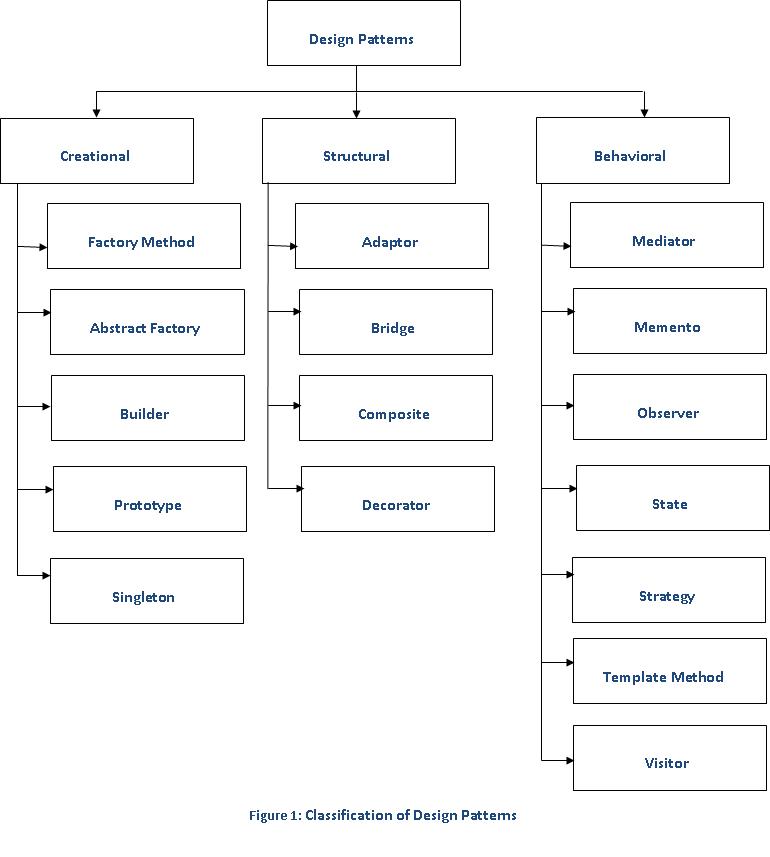

Within a particular context, a design pattern represents a proven solution to a problem encountered during the development phase of the software development life cycle (SDLC). Patterns help developers reuse successful software architectures and designs. There are several categories of patterns, as shown in the adjacent figure.

Concurrent Design Patterns

Read/Write access

This is one of the most common design patterns used to implement concurrency. It allows concurrent read access to an object but enforces exclusive access for writes.

Consider a program that implements the reliability features offered by TCP on top of UDP. When an acknowledgement is received from the recipient, _ack_count, a global counter variable, is incremented by one. Each time the sender sends a UDP packet, a new thread is started to monitor whether the acknowledgement for that packet arrives; if it does not, the packet is retransmitted. Because many monitoring threads may update _ack_count at the same time, concurrent increments of the counter must be coordinated.

We can resolve this with the Read/Write access pattern. The following Java code implements it, assuming a class called SpinLocks that provides the methods s_read_lock(), s_write_lock() and s_done().

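```java
// SpinLocks is assumed to exist, with s_read_lock(), s_write_lock() and s_done().
public class Reliable_UDP {

    // Shared acknowledgement counter (Java has no true globals, so it is a field).
    private int _ack_count = 0;

    private final SpinLocks lock_manager = new SpinLocks();

    // Read access: several threads may read the counter at the same time.
    public int get_increment_ack_count() {
        lock_manager.s_read_lock();
        try {
            return _ack_count;
        } finally {
            lock_manager.s_done();    // release the read lock
        }
    }

    // Write access: only one thread at a time may increment the counter.
    public void set_increment_ack_count() {
        lock_manager.s_write_lock();
        try {
            _ack_count++;
        } finally {
            lock_manager.s_done();    // release the write lock
        }
    }
}
```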

As the code shows, the methods of the Reliable_UDP class use a SpinLocks object to coordinate concurrent calls. Each method first calls the appropriate lock method (read or write) before reading or updating the counter, and when finished calls the SpinLocks object's s_done() method to release the lock.
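SpinLocks is not a standard Java class, so the following is only a hypothetical sketch of how it might be written, under the assumption that "spin" means busy-waiting on an atomic variable. A single AtomicInteger encodes the lock state: -1 means a writer holds the lock, and any value n >= 0 means n readers hold it. Readers can enter together while no writer is present; a writer must wait until the state is exactly 0.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical SpinLocks: the original text only names s_read_lock(), s_write_lock() and s_done().
// state == -1: one writer holds the lock; state == n >= 0: n readers hold it.
class SpinLocks {
    private final AtomicInteger state = new AtomicInteger(0);

    public void s_read_lock() {
        while (true) {
            int s = state.get();
            // A reader may enter whenever no writer holds the lock.
            if (s >= 0 && state.compareAndSet(s, s + 1)) {
                return;
            }
            Thread.onSpinWait(); // busy-wait hint to the CPU (Java 9+)
        }
    }

    public void s_write_lock() {
        // A writer needs exclusive access: no readers and no other writer.
        while (!state.compareAndSet(0, -1)) {
            Thread.onSpinWait();
        }
    }

    // Releases whichever lock the caller holds.
    public void s_done() {
        int s;
        do {
            s = state.get();
            // A writer releases by returning the state to 0; a reader decrements the count.
        } while (!state.compareAndSet(s, s == -1 ? 0 : s - 1));
    }
}
```

This sketch makes no fairness guarantee: under a steady stream of readers, a writer may spin indefinitely. Spinning also only pays off when critical sections are very short, as with the single increment in Reliable_UDP.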

The Read/Write Lock pattern is a specialized form of the Scheduler pattern.
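As a point of comparison, Java's standard library already provides this pattern as java.util.concurrent.locks.ReentrantReadWriteLock, and its fairness flag is a scheduling decision of exactly the kind the Scheduler pattern describes: constructed with true, waiting threads acquire the lock in approximately arrival order. The sketch below reworks the acknowledgement counter on top of it; the class name AckCounter is hypothetical.

```java
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical counter class: same idea as Reliable_UDP, but using the JDK read/write lock.
class AckCounter {
    // true = fair mode: waiting threads get the lock in approximately arrival order.
    private final ReadWriteLock rw = new ReentrantReadWriteLock(true);
    private int ackCount = 0;

    // Any number of threads may hold the read lock at once.
    int get() {
        rw.readLock().lock();
        try {
            return ackCount;
        } finally {
            rw.readLock().unlock();
        }
    }

    // The write lock is exclusive: no readers or other writers while incrementing.
    void increment() {
        rw.writeLock().lock();
        try {
            ackCount++;
        } finally {
            rw.writeLock().unlock();
        }
    }
}
```

Unlike the spinning sketch, a blocked thread here is parked rather than busy-waiting, which suits longer critical sections.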

Double-checked locking

The Double-Checked Locking design pattern is used when an application has one or more critical sections of code that must execute sequentially, when multiple threads may attempt to execute a critical section simultaneously, when the critical section needs to execute only once, and when acquiring a lock on every access to the critical section would cause excessive overhead.

Many familiar design patterns, such as Singleton, work well in sequential programs but rest on subtle assumptions that do not hold once concurrency is involved. Consider the following implementation of the Singleton pattern:

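```cpp
class Singleton
{
public:
  static Singleton *instance (void)
  {
    if (instance_ == 0)
      // Critical section: unprotected, so two threads can both see 0 here.
      instance_ = new Singleton;
    return instance_;
  }

  void method (void);
  // Other methods and members omitted.

private:
  static Singleton *instance_;
};

// Definition of the static member, initially null.
Singleton *Singleton::instance_ = 0;

// ...
Singleton::instance ()->method ();
// ...
```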

The implementation above does not work in the presence of preemptive multitasking or true parallelism. For instance, if several threads on a parallel machine call Singleton::instance simultaneously before the Singleton has been initialized, each may see instance_ == 0, so the Singleton constructor can be called more than once. This violates the requirement that the critical section execute only once.
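For contrast, the simplest thread-safe lazy Singleton holds a lock for the entire call. The sketch below uses Java rather than C++, and the class name LockedSingleton is hypothetical. It is correct, but every caller acquires the class's monitor even long after the instance exists, which is the overhead Double-Checked Locking sets out to remove.

```java
// Hypothetical class: lazy Singleton that locks on every call.
final class LockedSingleton {
    private static LockedSingleton instance;

    private LockedSingleton() { }

    // synchronized serializes every caller on LockedSingleton.class,
    // so the check and the construction happen atomically.
    static synchronized LockedSingleton getInstance() {
        if (instance == null) {
            instance = new LockedSingleton();
        }
        return instance;
    }
}
```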

The Solution: Double-Checked Locking

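A common way to implement the critical section is to add a static Mutex to the class, which ensures that the allocation and initialization of the Singleton occur atomically; a Guard object acquires the lock in its constructor and releases it in its destructor. Acquiring the lock on every call to Singleton::instance, however, adds overhead even after the Singleton exists. Double-Checked Locking avoids this by testing instance_ first without the lock, acquiring the lock only when instance_ is still 0, and then testing again while holding it: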

```cpp
class Singleton
{
public:
  static Singleton *instance (void)
  {
    // First check (no lock held).
    if (instance_ == 0)
    {
      // Ensure serialization (guard
      // constructor acquires lock_).
      Guard<Mutex> guard (lock_);

      // Double check.
      if (instance_ == 0)
        instance_ = new Singleton;
    } // guard destructor releases lock_ here.

    return instance_;
  }

private:
  static Mutex lock_;
  static Singleton *instance_;
};
```

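The first thread to acquire the lock constructs the Singleton and assigns the pointer to instance_. Any thread that calls instance afterwards finds instance_ != 0 at the first check and returns without locking. The second check handles threads that passed the first check while the first thread was still initializing: once such a thread acquires the lock, it finds instance_ != 0 and does not create a second Singleton. The pattern therefore locks only during initialization while still preventing the race condition. This reasoning assumes that the write to instance_ cannot become visible to other threads before the Singleton's construction has completed; because compilers and multiprocessors may reorder those operations, a portable implementation must make instance_ atomic (for example, std::atomic in C++11) or, in Java 5 and later, declare it volatile.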
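A minimal Java sketch of the same pattern, written to be correct under the Java 5+ memory model: instance_ is volatile, so a thread that sees a non-null reference also sees the fully constructed object. The lock object lock_ plays the role of the C++ Mutex, and a synchronized block replaces the Guard. Reading the volatile field into a local variable means the common, already-initialized path performs only one volatile read.

```java
// Double-checked locking in Java (correct since Java 5 because instance_ is volatile).
final class Singleton {
    // volatile: publishing the reference also publishes the constructed object.
    private static volatile Singleton instance_;
    private static final Object lock_ = new Object();

    private Singleton() { }

    static Singleton instance() {
        Singleton result = instance_;          // first check, no lock
        if (result == null) {
            synchronized (lock_) {             // serialize would-be initializers
                result = instance_;
                if (result == null) {          // double check under the lock
                    result = new Singleton();
                    instance_ = result;
                }
            }
        }
        return result;
    }
}
```

Without volatile, this code would have the same reordering problem as the unsynchronized C++ version above.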