CSC/ECE 517 Spring 2023 - NTNX-3. Refactor models to keep profiles (software, compute, network, etc) as optional and use default if not specified


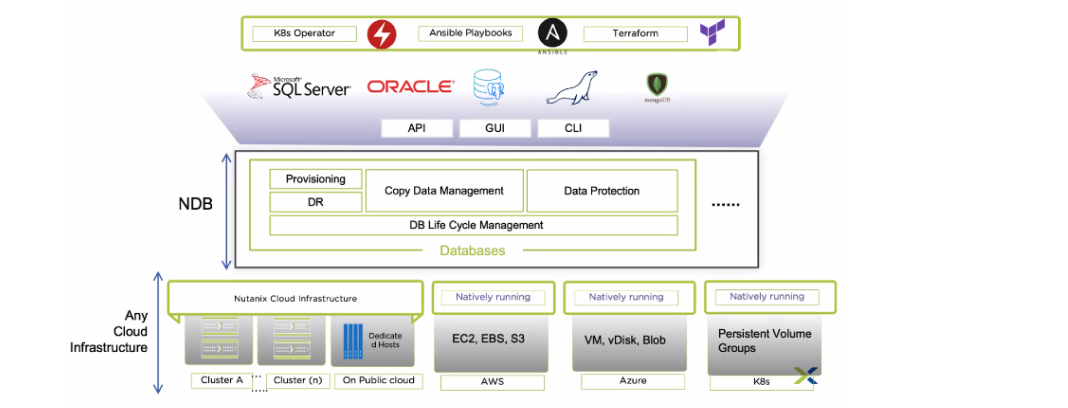


Revision as of 02:20, 26 March 2023

Background

Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Developers use it to distribute and control containerized applications across a cluster of servers. It follows a declarative model: users describe the desired state of an application, and Kubernetes continuously manages the containerized components so that the actual state matches it. Kubernetes runs on public or private cloud infrastructure as well as in on-premises data centers, and it offers a wide range of functionality for managing containerized applications, such as automatic scaling, rolling updates, self-healing, service discovery, and load balancing.

Nutanix Database Service

Nutanix Database Service (NDB) is a hybrid multi-cloud database-as-a-service for Microsoft SQL Server, Oracle Database, PostgreSQL, MongoDB, and MySQL, among other databases. It supports the efficient management of hundreds to thousands of databases, the quick creation of new ones, and the automation of time-consuming administration tasks such as patching and backups. Users can also choose specific operating systems, database versions, and extensions to satisfy application and compliance requirements. Customers around the world have used Nutanix Database Service to optimize their databases across numerous locations and to speed up software development.

Features offered by NDB Service:

- Nutanix NDB is a distributed NoSQL database service that is part of the Nutanix platform. Some of the key features of NDB include highly scalable architecture, distributed data storage, support for multiple data models, consistent data, fast data access, automatic sharding, real-time analytics, high availability and fault tolerance, and strong security features.

- Because the number of nodes in a cluster can be scaled up or down without any downtime, Nutanix NDB provides a highly scalable architecture. Its distributed architecture ensures high availability and fault tolerance, while its support for multiple data models makes it a versatile database service for a wide range of use cases. Additionally, NDB supports strong consistency, and it speeds up data access by caching frequently accessed data in memory, which reduces the number of disk reads and improves query performance.

- NDB also provides automatic sharding, which helps ensure that your database can handle large amounts of data. You can use graph queries to analyze relationships between data in real-time, which can help you make more informed decisions. Furthermore, NDB offers high availability and fault tolerance through its distributed architecture and replication features. Lastly, NDB provides strong security features, including role-based access control, data encryption at rest, and network security features.

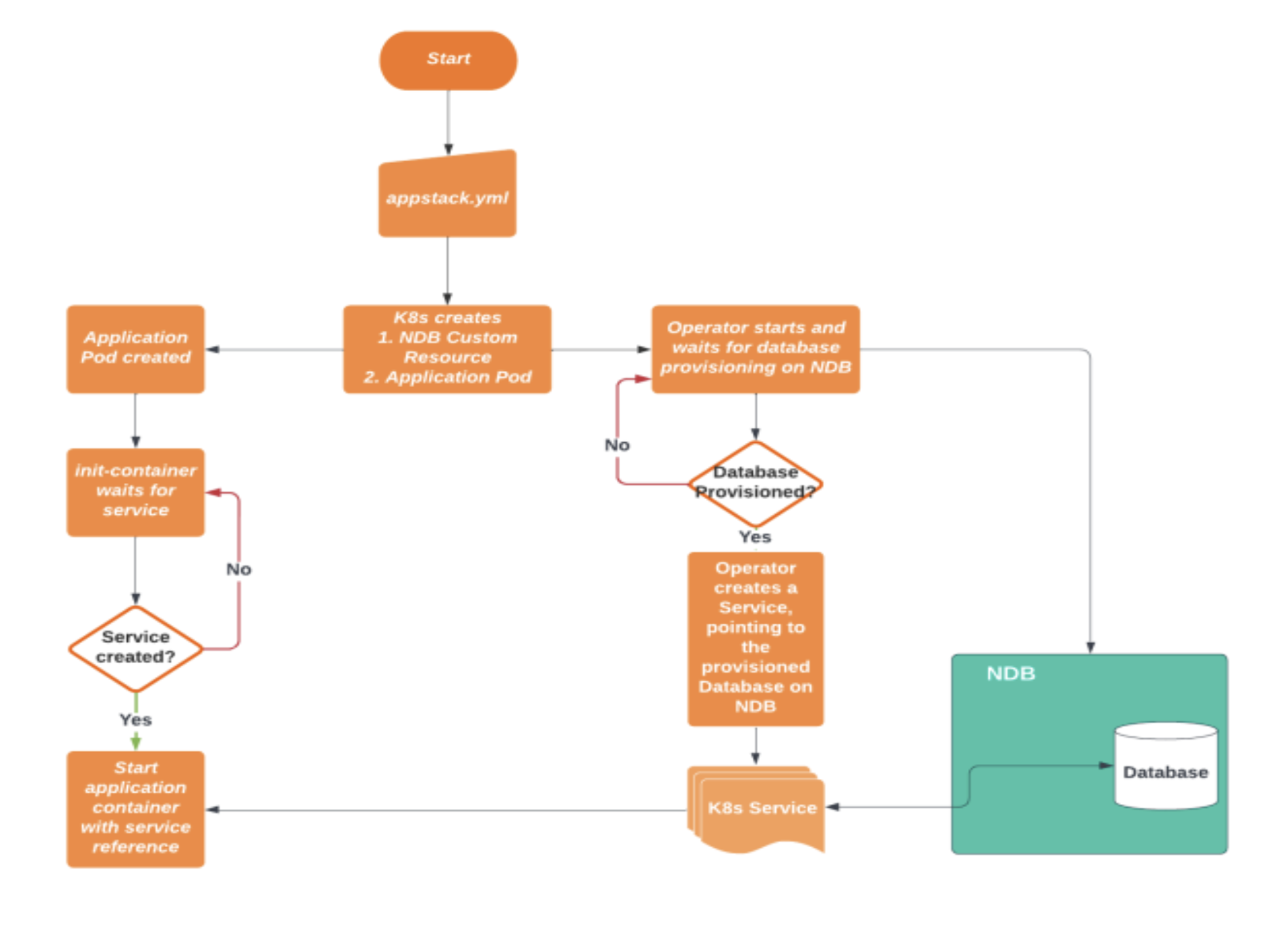

NDB Kubernetes Operator

The NDB Kubernetes Operator is a tool that makes it easier to deploy and administer open-source databases on Kubernetes. It enables users to declaratively deploy and manage well-known databases such as MySQL, PostgreSQL, and MariaDB on Kubernetes, and it is one way of using the NDB service. Kubernetes is designed primarily to manage stateless objects, whereas NDB databases must be handled in a very specific manner, so an operator is needed.

The NDB Kubernetes Operator makes it simpler to manage databases in a Kubernetes environment by automating database deployment, scaling, backup, recovery, and monitoring. To automate the deployment and management of databases, it also integrates with well-known DevOps tools such as Ansible, Jenkins, and Terraform. It lets users benefit from Kubernetes features such as load balancing, service discovery, self-healing, rolling updates, and automatic scaling.

With the NDB Kubernetes Operator, developers and DevOps teams can concentrate on the high-level features of their applications rather than the low-level intricacies of managing databases, which makes application deployment and maintenance more scalable and dependable.

Existing Architecture and Problem Statement

Problem Statement: Refactor models to keep profiles (software, compute, network, etc) as optional and use default if not specified

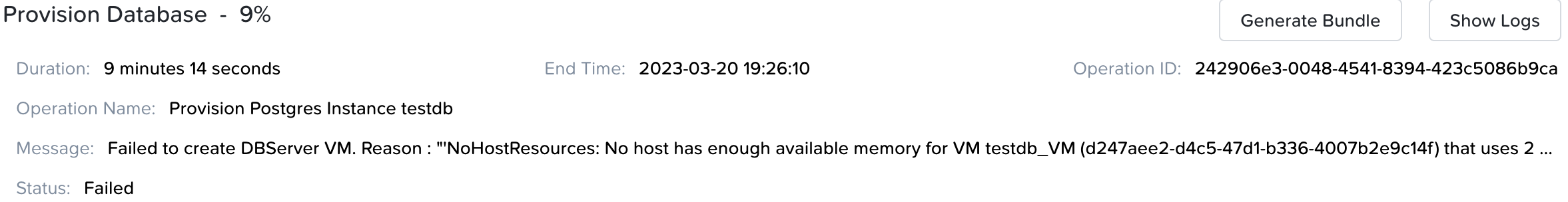

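The NDB Kubernetes operator currently uses the default compute, network, and OS software profiles when provisioning a database. The goal is to refactor this module so that the profiles become optional fields: a profile supplied by the user is used as given, and the operator falls back to the default only when the field is absent.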
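The fallback rule can be sketched in a few lines of Go. This is a minimal illustration rather than the operator's actual model: the `Profiles` type, its field names, the `WithDefaults` method, and the profile IDs are all hypothetical, and an empty string is assumed to mean "not specified".

```go
package main

import "fmt"

// Profiles holds the optional profile IDs of a database spec.
// The type and field names are illustrative, not the operator's real model.
// An empty string means the user did not specify that profile.
type Profiles struct {
	Software string
	Compute  string
	Network  string
}

// WithDefaults returns a copy of p in which every unset field is
// filled in from defaults; fields the user set are kept as given.
func (p Profiles) WithDefaults(defaults Profiles) Profiles {
	if p.Software == "" {
		p.Software = defaults.Software
	}
	if p.Compute == "" {
		p.Compute = defaults.Compute
	}
	if p.Network == "" {
		p.Network = defaults.Network
	}
	return p
}

func main() {
	defaults := Profiles{
		Software: "default-software",
		Compute:  "default-compute",
		Network:  "default-network",
	}
	// Only the compute profile is specified; the other two fall back.
	user := Profiles{Compute: "custom-compute"}
	fmt.Printf("%+v\n", user.WithDefaults(defaults))
}
```

Because `WithDefaults` uses a value receiver, the user's original spec is left unchanged and the resolved profiles are returned as a new value.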

NDB Architecture

The distributed architecture of the Nutanix Database Service provides high availability, scalability, and performance for databases such as Microsoft SQL Server, Oracle Database, PostgreSQL, MySQL, and MongoDB. The architecture is built on the Nutanix hyper-converged infrastructure, which offers a scalable and adaptable platform for handling enterprise workloads.

The architecture of the Nutanix Database Service consists of several layers. The Nutanix hyper-converged infrastructure is the base layer; it provides the storage, computing, and networking resources needed to run the databases. The Nutanix Acropolis operating system, which offers the essential virtualization and administration features, sits on top of this layer.

The Nutanix Era layer, which is located above the Nutanix Acropolis layer, gives the Nutanix Database Service the ability to manage databases throughout their lifecycle. This layer includes the Nutanix Era Manager, a centralized management console that offers a single point of access for controlling the databases across several clouds and data centers.

The Nutanix Era Orchestrator, which is in charge of automating the provisioning, scaling, patching, and backup of the databases, is another component of the Nutanix Era layer. The Orchestrator offers a declarative approach for specifying the desired state of the databases and is built to work with a variety of databases.

The Nutanix Era Application, a web-based interface that enables database administrators and developers to quickly provision and administer the databases, is the final component of the top layer. A self-service interface for installing databases as well as a number of tools for tracking and troubleshooting database performance are offered by the Era Application.

Design & Workflow

The Nutanix NDB NoSQL database is highly scalable, fault-tolerant, and consistent, and it can handle large amounts of data. It is a distributed database designed to be deployed across several cluster nodes. Each node in the cluster stores a portion of the data, and the data is replicated across several nodes to guarantee high availability.

Configure your Nutanix cluster: Configure the cluster to support NDB. This includes setting up the storage and network configurations, configuring the NDB nodes, and defining the replication factor.

Create a table: Create a table in NDB to store your data. This includes defining the schema, specifying the replication factor, and configuring any other options you need.

Write your code: Write the code that interacts with the NDB cluster. This includes inserting and retrieving data, as well as performing more complex operations such as querying, indexing, and data aggregation.

Test your code: Test the code to ensure that it works as expected. This includes testing basic operations such as creating and retrieving data, as well as more complex operations such as queries and data aggregation.

Monitor your cluster: Monitor the NDB cluster to ensure that it is performing as expected. This includes monitoring resource usage, handling errors and exceptions, and optimizing performance.

Optimize your cluster: Optimize the NDB cluster over time so that it continues to meet your needs. This includes tuning the configuration, optimizing queries, and scaling the cluster as needed.

Backup and recovery: Establish backup and recovery procedures to protect your data against loss or corruption. This includes regularly backing up your data, testing your backups, and establishing procedures for recovering data in case of a disaster.

Potential Design Patterns, Principles, and Code Refactoring strategies

The codebase could be restructured in an object-oriented style. In addition, here are some of the design patterns and principles we could use:

Builder: Objects could be constructed step by step through method chaining, rather than by initializing every field in a single constructor call.
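A minimal Go sketch of the Builder idea follows. The `ProvisionRequest` type, its fields, and the `With...` methods are hypothetical names chosen for illustration; they are not taken from the operator's code.

```go
package main

import "fmt"

// ProvisionRequest is a simplified, hypothetical provisioning payload.
type ProvisionRequest struct {
	Name         string
	DatabaseType string
	ComputeID    string
	NetworkID    string
}

// RequestBuilder accumulates settings through chained calls.
type RequestBuilder struct {
	req ProvisionRequest
}

// NewRequestBuilder starts a builder with the two mandatory fields.
func NewRequestBuilder(name, dbType string) *RequestBuilder {
	return &RequestBuilder{req: ProvisionRequest{Name: name, DatabaseType: dbType}}
}

// WithCompute sets the optional compute profile and returns the builder for chaining.
func (b *RequestBuilder) WithCompute(id string) *RequestBuilder {
	b.req.ComputeID = id
	return b
}

// WithNetwork sets the optional network profile and returns the builder for chaining.
func (b *RequestBuilder) WithNetwork(id string) *RequestBuilder {
	b.req.NetworkID = id
	return b
}

// Build returns the assembled request.
func (b *RequestBuilder) Build() ProvisionRequest {
	return b.req
}

func main() {
	req := NewRequestBuilder("orders", "postgres").
		WithCompute("custom-compute").
		Build()
	fmt.Printf("%+v\n", req)
}
```

Optional settings that are never chained simply keep their zero value, which fits a model in which profiles are optional.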

Factory: Instead of instantiating an object directly (for example with the ‘new’ keyword), a factory method creates it. This pattern fits if we create a superclass for provisioning databases and subclasses for provisioning the different kinds of databases (MongoDB, MySQL, etc). If new databases were created through conditional checks, the code would get messier each time another kind of database is added to the project. A factory method can instead create the right object for each database class (or module, in our case) in one place.
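One way to sketch such a factory in Go is a registry that maps a database type to a constructor, so supporting a new database means adding one entry instead of another conditional branch. The `Provisioner` interface, the concrete types, and the registry are assumptions made for this example; the port numbers are the well-known defaults of each database.

```go
package main

import "fmt"

// Provisioner is a hypothetical interface shared by all database kinds.
type Provisioner interface {
	DefaultPort() int
}

type postgresProvisioner struct{}
type mysqlProvisioner struct{}
type mongoProvisioner struct{}

func (postgresProvisioner) DefaultPort() int { return 5432 }
func (mysqlProvisioner) DefaultPort() int    { return 3306 }
func (mongoProvisioner) DefaultPort() int    { return 27017 }

// registry maps a database type to its constructor.
var registry = map[string]func() Provisioner{
	"postgres": func() Provisioner { return postgresProvisioner{} },
	"mysql":    func() Provisioner { return mysqlProvisioner{} },
	"mongodb":  func() Provisioner { return mongoProvisioner{} },
}

// NewProvisioner is the factory: callers name a type and receive
// a ready Provisioner, or an error for an unknown type.
func NewProvisioner(dbType string) (Provisioner, error) {
	constructor, ok := registry[dbType]
	if !ok {
		return nil, fmt.Errorf("unsupported database type %q", dbType)
	}
	return constructor(), nil
}

func main() {
	for _, dbType := range []string{"postgres", "mysql", "mongodb", "oracle"} {
		p, err := NewProvisioner(dbType)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("%s uses port %d\n", dbType, p.DefaultPort())
	}
}
```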

Facade: This pattern could be used for masking the complicated provisioning payloads.
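As a rough illustration of the Facade idea, the sketch below hides the assembly of a nested payload behind a single function. The payload shape, the JSON field names, and the argument names (`database_names`, `db_password`) are placeholders for this example and are not claimed to match the real NDB API.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ActionArgument and ProvisionPayload form a hypothetical nested payload.
type ActionArgument struct {
	Name  string `json:"name"`
	Value string `json:"value"`
}

type ProvisionPayload struct {
	DatabaseType    string           `json:"databaseType"`
	Name            string           `json:"name"`
	ActionArguments []ActionArgument `json:"actionArguments"`
}

// BuildProvisionPayload is the facade: callers pass three plain values
// and never deal with the nested structure themselves.
func BuildProvisionPayload(name, dbType, password string) ProvisionPayload {
	return ProvisionPayload{
		DatabaseType: dbType,
		Name:         name,
		ActionArguments: []ActionArgument{
			{Name: "database_names", Value: name},
			{Name: "db_password", Value: password},
		},
	}
}

func main() {
	payload := BuildProvisionPayload("orders", "postgres", "example-password")
	body, err := json.MarshalIndent(payload, "", "  ")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(string(body))
}
```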

Open/Closed principle: As per this principle, a software entity should be open for extension but closed for modification. We could define an interface with provisioning and deprovisioning methods, and each kind of database could provide its own implementation of those methods without changing the existing code.

Adapter: This pattern could also be added to adapt the provisioning logic to different databases.

DRY (Don’t Repeat Yourself): The DRY principle can be applied in our project in several ways:

- Reusing the provisioning function

- Using constants instead of variables

- Extracting all common functionality into reusable modules and functions.
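The points above can be sketched in Go as follows. The constant names and the `IsSupported` helper are illustrative assumptions; the idea is that each database type string is written once and every check reuses the same list and function.

```go
package main

import "fmt"

// Database type names are declared once and reused everywhere,
// instead of repeating string literals across the codebase.
const (
	DatabaseTypePostgres = "postgres"
	DatabaseTypeMySQL    = "mysql"
	DatabaseTypeMongoDB  = "mongodb"
)

// supportedTypes is the single list that all checks share.
var supportedTypes = []string{
	DatabaseTypePostgres,
	DatabaseTypeMySQL,
	DatabaseTypeMongoDB,
}

// IsSupported is a common helper extracted into one reusable function.
func IsSupported(dbType string) bool {
	for _, t := range supportedTypes {
		if t == dbType {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(IsSupported(DatabaseTypeMySQL))
	fmt.Println(IsSupported("oracle"))
}
```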

Demo

Here is the demo video for the developed infra:

https://www.youtube.com/watch?v=lUarCdA8RP0

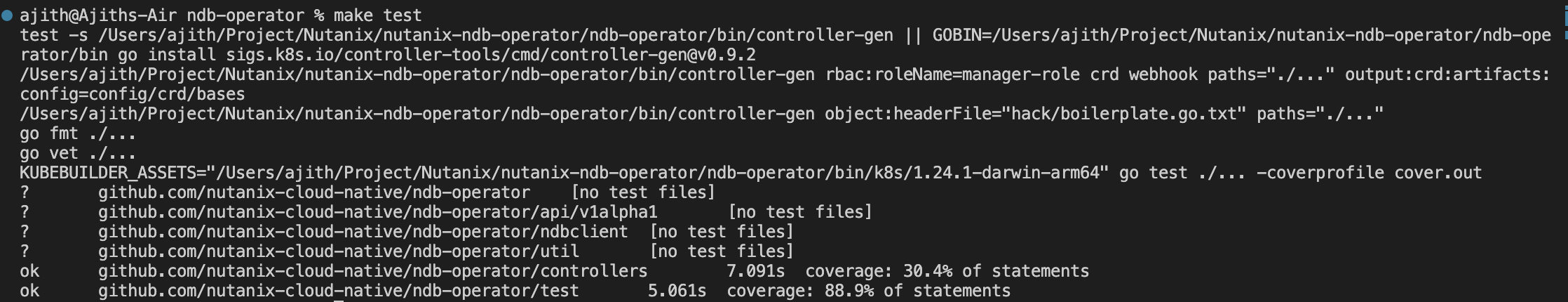

Tests

Here is the video for test execution:

https://www.youtube.com/watch?v=O9Z9ypvQJDM

Here’s an example test for GenerateProvisioningRequest.

The purpose of the test is to verify that the function generates a valid provisioning request for different database types. The test sets up a test server using the GetServerTestHelper function, creates an ndbclient object, and then iterates over a list of database types (PostgreSQL, MySQL, and MongoDB).

For each database type, the test creates a dbSpec object that defines the desired state of the database instance. It then creates a reqData map that specifies additional provisioning request parameters such as the password and SSH public key. The GenerateProvisioningRequest function is called with the ndbclient, dbSpec, and reqData arguments to generate the actual provisioning request.

The test then checks that the generated request is valid by asserting that the DatabaseType field matches the expected value for the given database type. It also checks that the SoftwareProfileId, SoftwareProfileVersionId, ComputeProfileId, NetworkProfileId, and DbParameterProfileId fields are not empty, and that the TimeMachineInfo.SlaId field is set to NONE_SLA_ID. If any of the assertions fail, the test logs an error message using the t.Errorf or t.Logf functions, indicating which specific assertion failed.
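The assertions described above can be sketched as a small standalone checker. This is not the project's test code: the `Request` type is a simplified stand-in for the real provisioning request, `CheckRequest` is a hypothetical helper, and the value given to `noneSLAID` is a placeholder because the real value of NONE_SLA_ID is not shown here.

```go
package main

import "fmt"

// noneSLAID is a placeholder; the real NONE_SLA_ID value is not shown in this document.
const noneSLAID = "none-sla-id"

// Request is a simplified, hypothetical stand-in for the provisioning request.
type Request struct {
	DatabaseType      string
	SoftwareProfileID string
	ComputeProfileID  string
	NetworkProfileID  string
	SLAID             string
}

// CheckRequest returns one message per failed assertion, so an empty
// result means the request passed every check.
func CheckRequest(req Request, wantType string) []string {
	var failures []string
	if req.DatabaseType != wantType {
		failures = append(failures,
			fmt.Sprintf("DatabaseType: got %q, want %q", req.DatabaseType, wantType))
	}
	required := []struct{ name, value string }{
		{"SoftwareProfileID", req.SoftwareProfileID},
		{"ComputeProfileID", req.ComputeProfileID},
		{"NetworkProfileID", req.NetworkProfileID},
	}
	for _, field := range required {
		if field.value == "" {
			failures = append(failures, field.name+" is empty")
		}
	}
	if req.SLAID != noneSLAID {
		failures = append(failures,
			fmt.Sprintf("SLAID: got %q, want %q", req.SLAID, noneSLAID))
	}
	return failures
}

func main() {
	for _, dbType := range []string{"postgres", "mysql", "mongodb"} {
		// A hand-built request stands in for the output of the function under test.
		req := Request{
			DatabaseType:      dbType,
			SoftwareProfileID: "sw",
			ComputeProfileID:  "cp",
			NetworkProfileID:  "net",
			SLAID:             noneSLAID,
		}
		fmt.Println(dbType, "failures:", CheckRequest(req, dbType))
	}
}
```

In a real Go test, each returned message would typically be reported with `t.Errorf` inside the loop over database types.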

Many other tests cover specific edge cases in generating the provisioning request. One example is TestGenerateProvisioningRequestReturnsErrorIfDBPasswordIsEmpty, which ensures that the function returns an error when the database password parameter is empty. The test sets up an HTTP server, creates an instance of an ndbclient, and then generates a provisioning request with an empty database password for three different database types: postgres, mysql, and mongodb. If GenerateProvisioningRequest returns no error, the test fails with an error message.
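The behavior this test expects can be illustrated with a small validation function. The sketch assumes the request data is a map with a `password` key; that key name, `ValidateRequestData`, and `ErrEmptyPassword` are hypothetical and only mirror the rule that an empty password must produce an error.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrEmptyPassword is a sentinel error so callers and tests can
// check for this specific failure with errors.Is.
var ErrEmptyPassword = errors.New("database password must not be empty")

// ValidateRequestData rejects request data whose password is missing or empty.
// The "password" key is an assumption made for this sketch.
func ValidateRequestData(reqData map[string]string) error {
	if reqData["password"] == "" {
		return ErrEmptyPassword
	}
	return nil
}

func main() {
	for _, dbType := range []string{"postgres", "mysql", "mongodb"} {
		err := ValidateRequestData(map[string]string{"password": ""})
		fmt.Printf("%s: rejected=%t\n", dbType, errors.Is(err, ErrEmptyPassword))
	}
}
```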

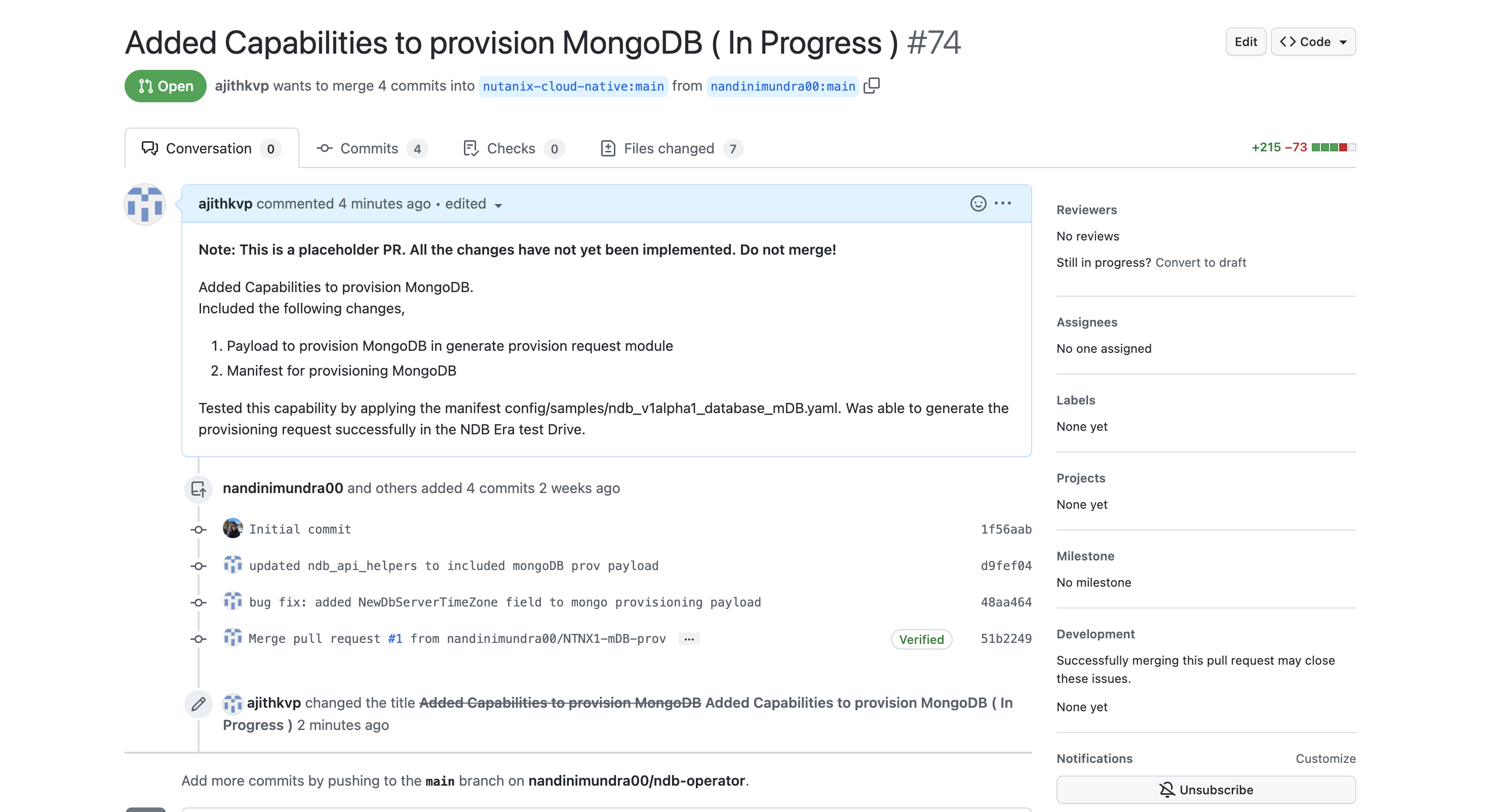

GitHub

- Repo: https://github.com/karan-47/ndb-operator/tree/feature/ntnx_3

- Pull Request: https://github.com/nutanix-cloud-native/ndb-operator/pull/74

Mentors

- Prof. Edward F. Gehringer

- Krunal Jhaveri

- Manav Rajvanshi

- Krishna Saurabh Vankadaru

- Kartiki Bhandakkar

Contributors

- Karan Pradeep Gala (kgala2)

- Ashish Joshi (ajoshi24)

- Tilak Satra (trsatra)
