CSC/ECE 517 Fall 2021 - E2150. Integrate suggestion detection algorithm


Revision as of 00:06, 3 November 2021

Problem Definition

Peer-review systems like Expertiza rely heavily on students' input to evaluate each other's performance. At the same time, students learn from the reviews they receive and use them to improve their own work. For this to work, every reviewer should give high-quality, specific reviews rather than generic ones. Expertiza currently has a few classifiers that detect useful features of review comments, such as whether they contain suggestions. The suggestion-detection algorithm has been implemented as a web service, and other detection algorithms, such as problem detection and sentiment analysis, are also available as newer web services. This project aims to make the UI more intuitive by letting users view the feedback for specific review comments, and to refactor the code to remove redundancy in keeping with the DRY principle.

Previous Implementation

Overview

The previous implementation added the following features:

- Set up a configuration file, 'review_metric.yml', in which the instructor can select which review metrics to display for the current assignments.

- API calls (sentiment, problem, suggestion) are made, and a table displaying feedback on the review comments is rendered below.

- The total time taken by the API calls was also displayed.

UI Screenshots

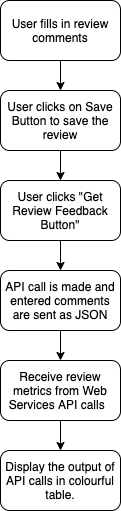

The following image shows how a reviewer interacts with the system to get feedback on the review comments.

Control Flow

Issues with Previous Work

With the previous implementation of this project, students can write comments and request feedback on them. However, several issues with that implementation need to be addressed:

- The criteria are numbered in the view, and those numbers do not correspond to anything on the rubric form. If the rubric is long, it is difficult for the reviewer to tell which piece of automated feedback refers to which comment.

- Too much metric-specific information is hard-coded. While some of it is in configuration files, the help text for the info buttons is in the code.

- There are many repetitive blocks of code. For example, in _response_analysis.html, getReviewFeedback() repeats the API call for each type of tag (sentiment, suggestion, etc.); only the API URL differs between them.
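To illustrate the DRY fix, here is a minimal Python sketch (the real code is JavaScript in _response_analysis.html, which is not reproduced on this page). It assumes every metric endpoint accepts the same request body, so the per-metric copies collapse into one function that differs only in the URL it looks up. The names `METRIC_ENDPOINTS`, `get_review_feedback`, and the injected `post` callable are hypothetical; injecting the transport keeps the logic testable without network access.

```python
from typing import Callable, Dict, Iterable

# Hypothetical mapping from metric name to endpoint URL; in a refactored
# version this would be read from configuration instead of hard-coded.
METRIC_ENDPOINTS: Dict[str, str] = {
    "sentiment": "https://peer-reviews-nlp.herokuapp.com/sentiment",
    "suggestions": "https://peer-reviews-nlp.herokuapp.com/suggestions",
    "volume": "https://peer-reviews-nlp.herokuapp.com/volume",
}

# A transport takes (url, payload) and returns the decoded JSON response.
Post = Callable[[str, dict], dict]


def get_review_feedback(
    comment: str,
    metrics: Iterable[str],
    post: Post,
    endpoints: Dict[str, str] = METRIC_ENDPOINTS,
) -> Dict[str, dict]:
    """Call every requested metric through a single code path.

    Only the URL varies between metrics, so one loop replaces the
    repeated per-metric blocks.
    """
    results: Dict[str, dict] = {}
    for metric in metrics:
        if metric not in endpoints:
            raise KeyError(f"no endpoint configured for metric {metric!r}")
        results[metric] = post(endpoints[metric], {"text": comment})
    return results
```

Adding a new metric then means adding one entry to the mapping rather than copying another block of request code.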

Comments on Prior Team's Implementation

- The criteria are numbered in the view, and those numbers do not correspond to anything on the rubric form.

- If the rubric is long, it would be quite difficult for the reviewer to figure out which automated feedback refers to which comment.

- There is too much specific information on metrics that is encoded into the text.

- While some of the info is in configuration files, the help text for the info buttons is in the code. Also, the calls for each metric are programmed into the code, though, depending on how diverse the metrics are, this is perhaps unavoidable.

- The code can be more modularized.

- Currently there are many repetitive blocks of code. For example, in _response_analysis.html, getReviewFeedback() repeats the API call for each type of tag (sentiment, suggestion, etc.); only the API link differs. It might therefore be better to store the API link in the config file and use that variable in the function.
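A sketch of the config-driven approach these comments suggest: endpoint URLs and info-button help text live together in one configuration structure, and the code only reads from it. The real file is review_metric.yml, whose schema is not shown on this page; to keep the example runnable with the Python standard library, the configuration is written as JSON, and every key (`metrics`, `url`, `help_text`, `enabled`) and all help strings are hypothetical.

```python
import json
from typing import List, NamedTuple

# Hypothetical configuration; the real review_metric.yml is YAML and its
# actual keys may differ.
CONFIG_TEXT = """
{
  "metrics": {
    "sentiment": {
      "url": "https://peer-reviews-nlp.herokuapp.com/sentiment",
      "help_text": "Estimated tone of the comment.",
      "enabled": true
    },
    "suggestions": {
      "url": "https://peer-reviews-nlp.herokuapp.com/suggestions",
      "help_text": "Whether the comment contains a suggestion.",
      "enabled": true
    },
    "volume": {
      "url": "https://peer-reviews-nlp.herokuapp.com/volume",
      "help_text": "Number of words in the comment.",
      "enabled": false
    }
  }
}
"""


class Metric(NamedTuple):
    name: str
    url: str
    help_text: str


def load_enabled_metrics(config_text: str) -> List[Metric]:
    """Return only the metrics the instructor enabled, in config order."""
    config = json.loads(config_text)
    return [
        Metric(name, entry["url"], entry["help_text"])
        for name, entry in config["metrics"].items()
        if entry.get("enabled", False)
    ]
```

The view would then render one info button per returned `Metric`, using its `help_text`, so changing the wording or adding a metric requires no code change.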

API Endpoints

- https://peer-reviews-nlp.herokuapp.com/all for all metrics at once

- https://peer-reviews-nlp.herokuapp.com/volume for volume metrics only

- https://peer-reviews-nlp.herokuapp.com/sentiment for sentiment metrics only

- https://peer-reviews-nlp.herokuapp.com/suggestions for suggestions metrics only
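The endpoints share one base URL and differ only in the final path segment. This page does not state the HTTP method; since each endpoint takes a JSON body, the sketch below assumes a POST with a `Content-Type: application/json` header. It builds a `urllib.request.Request` without sending it, so it can be inspected offline. `BASE_URL`, `ENDPOINTS`, and `build_request` are illustrative names.

```python
import json
import urllib.request

BASE_URL = "https://peer-reviews-nlp.herokuapp.com"
# Path segments listed above; "all" returns every metric at once.
ENDPOINTS = ("all", "volume", "sentiment", "suggestions")


def build_request(metric: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one metric endpoint."""
    if metric not in ENDPOINTS:
        raise ValueError(f"unknown metric endpoint: {metric!r}")
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/{metric}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",  # assumed; the method is not documented here
    )


# Passing the result to urllib.request.urlopen(...) would send the request.
```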

JSON Formatting

- Input text is passed in the following JSON format:

{
  "text": "This is an excellent project. Keep up the great work"
}

- Output is returned in the following JSON format:

{
  "sentiment_score": 0.9,
  "sentiment_tone": "Positive",
  "suggestions": "absent",
  "suggestions_chances": 10.17,
  "text": "This is an excellent project. Keep up the great work",
  "total_volume": 10,
  "volume_without_stopwords": 6
}
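The output fields above are enough to drive the feedback table. The sketch below parses a response in that format and maps each documented field to a display label. The labels and the function name `summarize_feedback` are hypothetical, values are passed through unchanged because the page does not state units (for example, for `suggestions_chances`), and it assumes the single-metric endpoints return a subset of these fields, so missing keys are simply skipped.

```python
import json
from typing import Dict

# Response fields documented on this page, mapped to hypothetical
# column labels for the feedback table.
FIELD_LABELS: Dict[str, str] = {
    "sentiment_tone": "Sentiment",
    "sentiment_score": "Sentiment score",
    "suggestions": "Suggestions",
    "suggestions_chances": "Suggestion chances",
    "total_volume": "Word count",
    "volume_without_stopwords": "Word count (no stopwords)",
}


def summarize_feedback(raw_json: str) -> Dict[str, object]:
    """Map a response to labelled values, skipping fields that are absent."""
    response = json.loads(raw_json)
    return {
        label: response[field]
        for field, label in FIELD_LABELS.items()
        if field in response
    }
```

Driving the table from one field-to-label mapping keeps the view free of per-metric rendering code, in line with the DRY goal above.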

Team

- Prashan Sengo (psengo)

- Griffin Brookshire ()

- Divyang Doshi (ddoshi2)

- Srujan (sponnur)