CSC/ECE 517 Fall 2020 - E2080. Track the time students look at other submissions

No edit summary |

|||

| Line 23: | Line 23: | ||

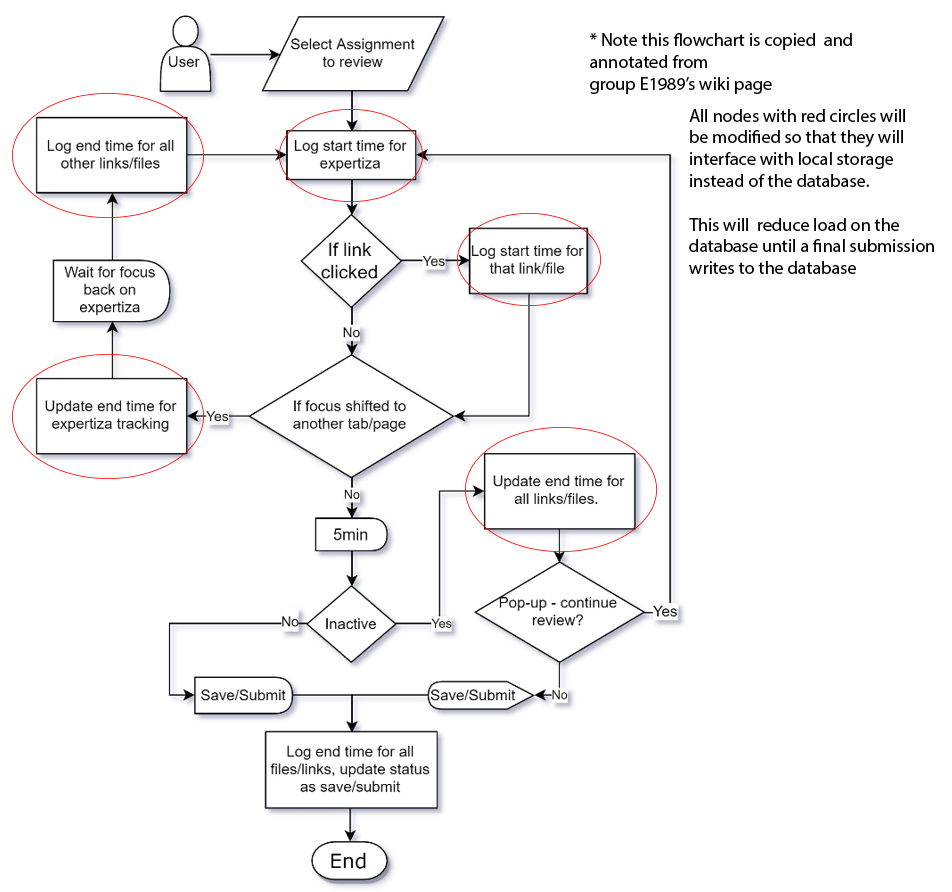

The following is the flowchart presented by E1989 that does a good job outlining the logic for tracking the time students spend reviewing: | The following is the flowchart presented by E1989 that does a good job outlining the logic for tracking the time students spend reviewing: | ||

[[File:E1989_Student_flowchart_modified.png | center]] | |||

== ''' Code Changes ''' == | == ''' Code Changes ''' == | ||

Revision as of 03:34, 23 October 2020

Introduction

The Expertiza project takes advantage of peer review among students to allow them to learn from each other. Tracking the time that a student spends on each submitted resource is meaningful to instructors, who can use it to study and improve the teaching experience. Unfortunately, most peer assessment systems do not manage the content of students' submissions within the system. They usually allow authors to submit external links to the submission (e.g. GitHub code or a deployed application), which makes it difficult for the system to track the time that reviewers spend on the submissions.

Problem Statement

Expertiza allows students to peer review the work of other students in their course. To ensure the quality of peer reviews, instructors would like to be able to track the time a student spends on a peer review. These metrics need to be tracked and displayed in a way that lets the instructor gain valuable insight into the quality of a review or set of reviews.

Various metrics will be tracked, including:

- The time spent on the primary review page

- The time spent on secondary/external links and downloadable files
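A minimal sketch of how these two metrics could be derived from recorded start and end times. It is written in Python as a language-neutral model, not as code from Expertiza itself (a Ruby on Rails application). The `ViewingInterval` record, the `PRIMARY` identifier, and both helper functions are hypothetical names chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical identifier for the primary review page; any other resource
# (external link, downloadable file) counts as secondary.
PRIMARY = "review_page"


@dataclass(frozen=True)
class ViewingInterval:
    resource: str      # hypothetical: review page, link URL, or file name
    start: datetime
    end: datetime


def time_per_resource(intervals):
    """Sum the viewing time of each resource over all of its intervals."""
    totals = defaultdict(timedelta)
    for iv in intervals:
        if iv.end < iv.start:
            raise ValueError(f"interval for {iv.resource} ends before it starts")
        totals[iv.resource] += iv.end - iv.start
    return dict(totals)


def split_primary_secondary(totals):
    """Return (time on the primary review page, total time on all other resources).

    Time is summed per resource, so if a link is open while the review page is
    also open, that overlap counts toward both figures.
    """
    primary = totals.get(PRIMARY, timedelta())
    secondary = sum((t for r, t in totals.items() if r != PRIMARY), timedelta())
    return primary, secondary


# Usage: the review page is viewed twice and one external link once.
t0 = datetime(2020, 10, 23, 10, 0, 0)
intervals = [
    ViewingInterval(PRIMARY, t0, t0 + timedelta(minutes=5)),
    ViewingInterval("https://github.com/example/repo",
                    t0 + timedelta(minutes=1), t0 + timedelta(minutes=3)),
    ViewingInterval(PRIMARY, t0 + timedelta(minutes=10), t0 + timedelta(minutes=12)),
]
totals = time_per_resource(intervals)
primary, secondary = split_primary_secondary(totals)
print(primary, secondary)  # 0:07:00 0:02:00
```

Whether overlapping time should be counted once or twice is a reporting decision; this sketch simply reports per-resource totals.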

Previous Implementations

- E1791 implemented a mechanism for recording the time spent viewing submissions in the form of links and downloadable files. The time spent on links was tracked by recording when a popup window was opened and closed. Downloadable text and image files were displayed on a new HTML page so that the time spent viewing them could be tracked. Each time the reviewer clicks a submission link or downloadable file, a new record is created in the database. This causes a large number of database operations, which degrades the application's performance. In addition, the results are not displayed in a user-friendly way. Apart from these issues, the team provided a good implementation of the feature.

- E1872 started with E1791's implementation as their base and tried to display the results in a tabular format. The table included useful statistics, but it is displayed to the right of the review report rather than within it, so it does not blend in with the review report table. The table is also hard to read because a lot of information is presented in a cluttered manner, and it is hard to map each statistic to its corresponding review. Furthermore, the team did not include any tests.

- E1989 is the most recent implementation. Built on earlier designs, it features a solid UI and thorough tracking of review time across the board. The team started with project E1791 as their base and focused on displaying the results in a user-friendly manner; to avoid clutter, the results are displayed in a new window. The issue of excessive database operations remained, and the team left it as future work.

Proposed Solution

- Following the suggestions of the previous team, E1989, we plan to improve their implementation by reducing the frequency of database queries and insertions. In E1989's current implementation, a new database entry is created every time a start time is logged for an Expertiza page, link, or file. As a result, the submission_viewing_event table grows very rapidly, since it stores start and end times for each link every time such an event occurs. Our solution is to store these entries locally on the user's system and save them to the database only once the user presses Save or Submit.

- Secondly, E1989's implementation has a decent UI that effectively displays the necessary information to the user. We plan to make minor improvements to their UI to improve usability.

- The previous team also mentioned other issues involving the "Save review after 60 seconds" checkbox feature, which may be looked into if time permits.
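A minimal sketch of the proposed batching, again as a Python model of logic that would run in the reviewer's browser. `LocalViewingBuffer`, its methods, and the `write_row` callback are hypothetical names; `write_row` stands in for whatever request would actually persist data to the submission_viewing_event table. The sketch assumes that a resource still open at Save time (such as the review page itself) should keep being timed afterwards.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable, Dict


@dataclass
class LocalViewingBuffer:
    """Hypothetical client-side buffer: viewing time is accumulated in memory
    and written to the database only when the reviewer presses Save or Submit."""
    open_since: Dict[str, datetime] = field(default_factory=dict)  # resource -> start of open interval
    pending: Dict[str, timedelta] = field(default_factory=dict)    # resource -> unsaved time

    def start(self, resource: str, at: datetime) -> None:
        # A repeated start for an already-open resource is ignored.
        self.open_since.setdefault(resource, at)

    def stop(self, resource: str, at: datetime) -> None:
        started = self.open_since.pop(resource, None)
        if started is not None:
            self.pending[resource] = self.pending.get(resource, timedelta()) + (at - started)

    def flush(self, at: datetime, write_row: Callable[[str, timedelta], None]) -> int:
        """On Save/Submit: credit open intervals up to `at`, write one row per
        resource through `write_row`, and clear the buffer. Returns rows written."""
        for resource, started in list(self.open_since.items()):
            self.pending[resource] = self.pending.get(resource, timedelta()) + (at - started)
            self.open_since[resource] = at  # still open: timing resumes from the flush
        for resource, spent in self.pending.items():
            write_row(resource, spent)  # stands in for a single database write
        rows = len(self.pending)
        self.pending.clear()
        return rows


# Usage: the review page stays open while one link is opened three times.
LINK = "https://github.com/example/repo"
t0 = datetime(2020, 10, 23, 10, 0, 0)
saved = []
buf = LocalViewingBuffer()
buf.start("review_page", t0)
for i in range(3):
    buf.start(LINK, t0 + timedelta(minutes=2 * i))
    buf.stop(LINK, t0 + timedelta(minutes=2 * i + 1))
rows = buf.flush(t0 + timedelta(minutes=10), lambda r, t: saved.append((r, t)))
print(rows)  # 2
```

In this run the reviewer logs four start times (one for the review page, three for the link), which under a one-entry-per-start scheme would mean four new entries; the buffer writes two rows, one per resource. In a browser, the buffer could be kept in `window.localStorage` so that unsaved time survives a page reload, but time that is buffered and never saved is still lost if the reviewer leaves without pressing Save or Submit. How repeated saves are merged on the server (adding to an existing row or inserting a new one) is left open here.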

Design pattern

Flowchart

The following flowchart, presented by E1989, clearly outlines the logic for tracking the time students spend reviewing:

[Figure: E1989_Student_flowchart_modified.png]

Code Changes

TBD

Test Plan

Automated Testing Using RSpec

TBD

Manual UI Testing

TBD

Helpful Links

Identified Issues

TBD

Team Information

- Luke McConnaughey (lcmcconn)

- Pedro Benitez (pbenite)

- Rohit Nair (rnair2)

- Surbhi Jha (sjha6)

Mentor: Sanket Pai (sgpai2)

Professor: Dr. Edward F. Gehringer (efg)