E1929 Visualizations for Instructors

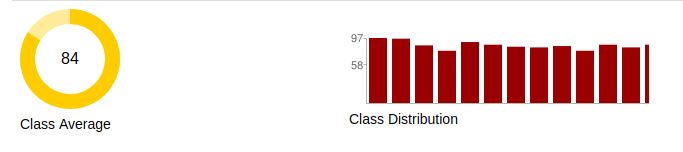

Figure 1: Existing assignment grade page header. The existing assignment grade page header has both the average grade for the assignment and the grade distribution for the assignment. The left side shows the average as the percentage of a circle. The right side of the header shows the grade distribution for the assignment. The grade distribution shows the minimum and maximum grade for the assignment. Under the header is a list of the grades for each student.

Revision as of 10:58, 8 April 2019

Introduction

Expertiza is an online assignment and grading platform for courses run by instructors. Each instructor creates courses and assignments associated with those courses. For each assignment, students peer review other students' assignments via questionnaires or rubrics. After each round of rubrics, students can submit changes to their assignment based on these rubrics. Each submission and rubric is considered a round. Hence, assignments have multiple rounds, each associated with a rubric.

Instructors use the association between the assignment and the rubrics to understand which subjects need more focus. For example, a low average on a particular question, or criterion, on a rubric can indicate that the class has issues with a particular part of the assignment. Currently, the information about assignment grades is on one page, while the information on rubrics is on a second page. This project intends to make the visualization of assignment grades and rubric scores easier by showing rubric statistics on the assignment grade page.

Proposed Changes

To help instructors understand the relationship between assignment scores and rubric scores, this project proposes two types of visualizations. The first type of visualization allows instructors to examine the rubric statistics for a single assignment. The second type of visualization allows instructors to compare the rubric statistics between two different assignments when the criteria for each assignment are the same.

Existing Views

In both cases, the project will add rubric statistics to the assignment grade view. The current assignment grade view consists of a header containing grade statistics followed by a table of the individual grades. In the assignment grade view header, the left side of the header shows the average assignment grade as a circle, while the right side shows the assignment grade distribution. Figure 1 shows the current header in the assignment grade view page.

The changes proposed by this project add rubric statistics to this assignment grade view. Specifically, the project proposes to integrate rubric statistics visualization both within a single assignment and between multiple assignments.

Proposed Changes for Single Assignment

The first visualization change proposed by this project is the integration of rubric statistic visualization within a single assignment. The rubric statistics are integrated into the assignment grade view header. The following HTML mock-up shows the proposed changes to the header. The list of student grades is not changed and therefore not shown.

https://jwarren3.github.io/expertiza/single.html

The rubric statistics are shown in the upper middle section of the header, while the options used to filter the statistics are shown in the lower middle section of the header. The left and right sides of the header are not changed.

In the mock-up of the rubric statistic visualization within a single assignment, there are two rounds of rubrics for the students to fill out. The rubric in round 1 consists of five criteria, while the rubric in round 2 consists of four criteria. The drop-down menu on the left side selects the round of interest. The radio buttons next to each criterion select the bar graphs shown. The bar graphs represent the percentage score of the selected criterion. Finally, the drop-down menu on the right side selects the statistic shown. This project proposes that the rubric statistic be limited to either the mean of the score or the median of the score. Hovering the mouse over each of the bars in the bar graph shows the numerical value of the chosen statistic.
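The choice between the two proposed statistics can be illustrated with a minimal sketch. The function names and the sample scores are assumptions made for illustration and are not taken from the Expertiza code base.

```javascript
// Hypothetical helpers; names and data are illustrative assumptions.

// Arithmetic mean of a non-empty array of numeric scores.
function mean(scores) {
  return scores.reduce((sum, s) => sum + s, 0) / scores.length;
}

// Median of a non-empty array of numeric scores.
function median(scores) {
  const sorted = [...scores].sort((a, b) => a - b); // copy, then sort numerically
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Returns the statistic chosen in the drop-down ("mean" or "median"),
// or null when a criterion has no scores yet.
function selectStatistic(scores, statistic) {
  if (scores.length === 0) return null;
  return statistic === 'median' ? median(scores) : mean(scores);
}

const sampleScores = [3, 4, 5, 5]; // made-up scores for one criterion
console.log(selectStatistic(sampleScores, 'mean'));
console.log(selectStatistic(sampleScores, 'median'));
```

The median is less sensitive than the mean to a few unusually low or high reviews, which is one reason an instructor might switch between the two.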

Proposed Changes for Multiple Assignments

Along with the integration of the rubric statistics within a single assignment, this project also proposes integrating rubric statistic visualizations between multiple assignments. The rubric statistics are integrated into the assignment grade view header. The following HTML mock-up shows the proposed changes to the header. The list of student grades is not changed and therefore not shown.

https://jwarren3.github.io/expertiza/tabs.html

The upper part of the rubric statistic visualization displays a set of tabs that allow the instructor to analyze the rubric within a single assignment or to compare the rubric statistics between multiple assignments. The middle part of the rubric statistic visualization displays the statistics for each selected criterion as a bar graph. The heights of the bars are shown as a percentage of the maximum value for each criterion. The bottom part of the rubric statistic visualization displays a set of options that allow the instructor to filter the statistics shown in the bar graph. The left side (assignment average) and right side (assignment distribution) of the assignment grade view header are not changed.

In the mock-up of the rubric statistic visualization within a single assignment, there are two rounds of rubrics for the students to fill out. The rubric in round 1 consists of five criteria, while the rubric in round 2 consists of four criteria. The round of interest is selected using the drop-down menu on the left side. The criteria shown in the bar graph are selected using radio buttons next to each criterion. Finally, the type of statistic shown is selected using a drop-down menu on the right side of the options. Currently, the statistic is limited to either mean or median. Hovering the mouse over each of the bars in the bar graph shows the numerical value of the chosen statistic.

Visualizing the rubric statistics within a single assignment starts by selecting the left tab titled "Analyze Assignment". The default displays the mean of all criteria from the first round. The round, the type of statistic, and the particular criteria are controlled from the drop-down menus and radio buttons below the bar graph.
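Scaling each bar to its criterion's maximum can be sketched as follows. This is only an illustration: the function name `toBarHeights` and the sample values are assumptions, not part of the project code.

```javascript
// Hypothetical helper: converts each criterion's statistic into a bar height
// expressed as a percentage of that criterion's maximum score.
function toBarHeights(values, maxScores) {
  return values.map((value, i) => {
    if (value === null || value === undefined) return null; // no data for this criterion
    return (value * 100) / maxScores[i];
  });
}

// Made-up example: three criteria, the third scored out of 10 instead of 5.
const heights = toBarHeights([4, 2.5, 8], [5, 5, 10]);
console.log(heights);
```

Normalizing in this way lets criteria with different score ranges share a single 0 to 100 axis.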

Visualizing the rubric statistics between assignments starts by selecting the right tab titled "Compare Assignments". The default displays the comparisons between round one and all criteria of an assignment in the same course. The default assignment is the chronologically earliest assignment. The current assignment is shown in red while the rubric being compared is shown in blue.
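One possible way to pair the two assignments for the comparison view is sketched below. The function name, the row shape, and the plain CSS color names are assumptions for illustration; the sketch relies only on the stated requirement that both assignments use the same criteria.

```javascript
// Hypothetical helper: pairs the per-criterion statistics of the current
// assignment with those of the assignment it is compared against.
function buildComparisonRows(current, compared) {
  if (current.length !== compared.length) {
    throw new Error('Assignments must have the same number of criteria');
  }
  return current.map((value, i) => ({
    criterion: i + 1, // 1-based criterion number
    bars: [
      { value: value, color: 'red' },        // current assignment
      { value: compared[i], color: 'blue' }, // assignment being compared
    ],
  }));
}

// Made-up statistics for two assignments with two shared criteria.
const comparisonRows = buildComparisonRows([4.1, 3.2], [3.9, 3.6]);
console.log(JSON.stringify(comparisonRows, null, 2));
```

Rejecting mismatched criteria counts up front keeps the chart from silently comparing unrelated criteria.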

Project Design

The changes made to the Expertiza project will primarily include HTML/ERB changes to the view files to accommodate the added charts on the page, along with the JavaScript needed for a responsive design. Minor controller modifications will be made to filter the database records that supply the displayed data.

Design Flow

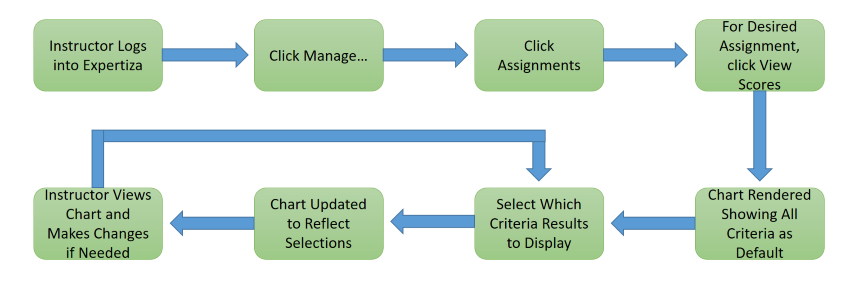

Instructor wants to view student scores on individual rubric criteria:

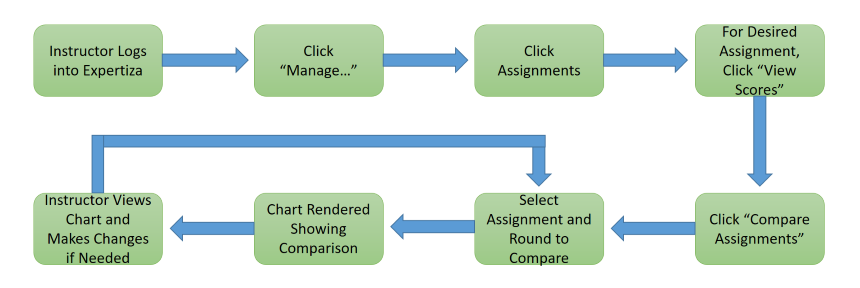

Instructor wants to compare student scores on individual rubric criteria between two compatible rounds of two different assignments:

Tools and Design Choice

We have used the lightweight Google Charts library to display the chart data on the page, with standard HTML for all of the options and drop-downs used for option selection. Google Charts was chosen because of its high compatibility, full option set, and graphical quality comparable to the rest of Expertiza, while keeping a small JavaScript footprint, which should help keep the page responsive.

Visualization

The following graph shows the 'view scores' page after the modifications. The instructor selects the subset of rubric criteria for which they want to know how the class performed in a particular round. A bar graph of the class average score for that subset of criteria is displayed.

The above graph shows the average score of the class for 10 rubric criteria in Round 1 of the assignment "OSS project/Writing assignment 2", as selected by the instructor. A live demo with randomly generated data can be found on JSFiddle.

Test Plan

The team plans to perform automated tests using the RSpec and Capybara frameworks. In addition, we will perform manual tests of the user interface (UI), using the app.

RSpec Framework Tests

The RSpec testing framework will be used for automated verification of the models behind the Expertiza Rails web application feature set. Since this feature deals with visualizations (charts) that are closely tied to Active Record models, we will seed the testing database with known data via RSpec. These changes will be automatically rolled back once the testing is complete.

Capybara Tests

Another automated testing framework that will be used is Capybara. Capybara is a browser-style testing framework that simulates a user clicking through the site. We will use it to verify that our charting object is present on the page and contains the seeded data that we loaded.

UI Tests

In addition to the automated tests above, we will also perform manual testing of the newly added features, including:

- Chart is displaying correctly

- Bars are showing up where expected

- Bar annotations are showing the expected value

- Criteria labels are for the correct bar and displaying correct values

- Hover text is displaying the correct values

- Null values are not present on the chart

- Correct colors are used for the multi-round view

- Show Labels checkbox works as expected

- Round Criteria is displaying correctly

- Round dropdown menu shows all rounds for the assignment

- Selecting a round changes the criteria checkboxes

- All checkboxes are displayed with appropriate text

- Checkboxes correctly remove or add criterion bars to the chart
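Several of the checks above (null values absent, checkboxes adding or removing bars, annotations matching values) come down to one filtering rule. The sketch below illustrates that rule under stated assumptions: the function name `visibleRows` and the row shape are hypothetical, and null is compared explicitly so that a legitimate score of 0 is still drawn.

```javascript
// Hypothetical helper: builds the rows to draw for one round, skipping
// criteria that are unchecked or have no score.
function visibleRows(roundScores, selected, showLabels) {
  const rows = [];
  roundScores.forEach((score, i) => {
    if (!selected[i] || score === null || score === undefined) return;
    rows.push({
      position: rows.length + 1, // bars are packed left to right
      criterion: i + 1,          // original criterion number, usable as the tick label
      value: score,
      annotation: showLabels ? score.toFixed(1) : '', // one decimal place, or hidden
    });
  });
  return rows;
}

// Made-up round: criterion 2 has no data and criterion 3 is unchecked.
const drawnRows = visibleRows([4.2, null, 3, 0], [true, true, false, true], true);
console.log(drawnRows);
```

A manual or automated test can compare the rows produced for known seeded data against the bars actually shown on the page.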

Files Involved

We have added a new partial, criteria_charts, which is rendered from the team_charts partial and displays the bar graph on the view page using existing data collected by the grades controller methods.

Modified files:

- app/controllers/grades_controller.rb

- app/views/grades/_teams.html.erb

- app/views/grades/_criteria_charts.html.erb

- app/views/grades/_team_charts.html.erb

app/views/grades/_criteria_charts.html.erb

<html>

<head>

<%= content_tag :div, class: "chartdata_information", data: {chartdata: @chartdata} do %>

<% end %>

<%= content_tag :div, class: "text_information", data: {text: @text} do %>

<% end %>

<%= content_tag :div, class: "minmax_information", data: {minmax: @minmax} do %>

<% end %>

<script type="text/javascript" src="https://www.gstatic.com/charts/loader.js"></script>

<script type="text/javascript">

google.charts.load('current', {packages: ['corechart', 'bar']});

//Display Options

var showLabels = true;

var barColors = [ // Other colors generated from the expertiza base red

'#A90201', // using paletton.com

'#018701',

'#016565',

'#A94D01'

];

function getData(){ //Loads all chart data from the page

chartData = $('.chartdata_information').data('chartdata');

chartText = $('.text_information').data('text');

chartRange = $('.minmax_information').data('minmax');

for (var i = 0; i < chartData.length; i++){ //Set all the criteriaSelected to true

var criteria = [];

for (var j = 0; j < chartData[i].length; j++){

criteria.push(true);

}

criteriaSelected.push(criteria);

}

}

function generateData() { //Generates random data for testing

var rounds = 3;

for(var i = 0; i < rounds; i++) {

var criteriaNum = Math.floor(Math.random() * 5 + 5); //Random number of criteria

var round = [];

var criteria = [];

for(var j = 0; j < criteriaNum; j++) {

round.push(Math.floor(Math.random() * 101)); //Random score for each criterion

criteria.push(true); //Everything starts out true

}

chartData.push(round);

criteriaSelected.push(criteria);

chartOptions = { //Render options for the chart

title: 'Class Average on Criteria',

titleTextStyle: {

fontName: 'arial',

fontSize: 18,

italic: false,

bold: true

chart = new google.visualization.ColumnChart(document.getElementById('chart_div'));

var checkBox = document.getElementById("labelCheck");

checkBox.checked = true;

checkBox.style.display = "inline";

updateChart(currentRound);

loadRounds(); }

function updateChart(roundNum) { //Updates the chart with a new round number and renders

currentRound = roundNum;

renderChart();

loadCriteria();

function renderChart() { //Renders the chart if changes have been made

var data = loadData();

chartOptions.vAxis.viewWindow.max = 5;

chartOptions.vAxis.viewWindow.min = 0;

if (chartRange[currentRound]) { //Set axis ranges if they exist

if (chartRange[currentRound][1])

chartOptions.vAxis.viewWindow.max = chartRange[currentRound][1];

if (chartRange[currentRound][0])

chartOptions.vAxis.viewWindow.min = chartRange[currentRound][0];

}

chart.draw(data, chartOptions);

}

chartOptions.hAxis.ticks = [];

var rowCount = 1;

for(var i = 0; i < chartData[currentRound].length; i++) { //Add a chart row for each criterion if not null

if (criteriaSelected[currentRound][i] && chartData[currentRound][i]) {

data.addRow([rowCount, chartData[currentRound][i], barColors[0], (showLabels) ? chartData[currentRound][i].toFixed(1).toString() : ""]);

chartOptions.hAxis.ticks.push({v: rowCount++, f: (i+1).toString()});

}

}

var data = new google.visualization.DataTable();

data.addColumn('number', 'Criterion');

var i;

for(i = 0; i < roundNum; i++) { //Add all columns for the data

data.addColumn('number', 'Round ' + (i+1).toString());

data.addColumn({type: 'string', role: 'style'}); //column for specifying the bar color

data.addColumn({type: 'string', role: 'annotation'});

var newRow = [];

var elementsAdded = false;

newRow.push(rowCount);

for(var j = 0; j < roundNum; j++) { //If the round has the criterion, add it

if (chartData[j][i]) {

newRow.push(chartData[j][i]);

elementsAdded = true;

} else {

newRow.push(null);

}

newRow.push(barColors[j % barColors.length]); //Add column color

if (chartData[j] && chartData[j][i] && showLabels)

newRow.push(chartData[j][i].toFixed(1).toString()); //Add column annotations

else

newRow.push("");

}

function loadCriteria() { //Creates the criteria check boxes

var form = document.getElementById("chartCriteria");

while (form.firstChild) //Clear out the old check boxes

form.removeChild(form.firstChild);

if (currentRound == -1) //Don't show criteria for 'all rounds'

return;

chartData[currentRound].forEach(function(dat, i) {

var checkbox = document.createElement('input');

checkbox.type = "checkbox";

checkbox.id = "checkboxoption" + i;

checkbox.onclick = function() { //Register callback to toggle the criterion

checkboxUpdate(i);

}

var label = document.createElement('label')

<form id="chartOptions" name="chartOptions">

<select id="chartRounds" name="rounds"

onChange="updateChart(document.chartOptions.chartRounds.options[document.chartOptions.chartRounds.options.selectedIndex].value)" style = "display: none">

</select>

<label><input type="checkbox" id = "labelCheck" checked="checked" style="display: none" onclick="showLabels = !showLabels; renderChart();">Show

Labels</label>

</form>

app/controllers/grades_controller.rb

def action_allowed?

case params[:action]

when 'view_my_scores'

['Instructor',

'Teaching Assistant',

'Administrator',

'Super-Administrator',

'Student'].include? current_role_name and

are_needed_authorizations_present?(params[:id], "reader", "reviewer") and

check_self_review_status

when 'view_team'

if ['Student'].include? current_role_name # students can only see the heat map for their own team

participant = AssignmentParticipant.find(params[:id])

session[:user].id == participant.user_id

else

true

end

else

['Instructor',

'Teaching Assistant',

'Administrator',

'Super-Administrator'].include? current_role_name

end

end

# collects the question text for display on the chart

# Added as part of E1859

def assign_chart_text

@text = []

(1..@assignment.num_review_rounds).to_a.each do |round|

question = @questions[('review' + round.to_s).to_sym]

@text[round - 1] = []

next if question.nil?

(0..(question.length - 1)).to_a.each do |q|

@text[round - 1][q] = question[q].txt

end

end

end

# find the maximum and minimum scores for each questionnaire round

# Added as part of E1859

def assign_minmax(questionnaires)

@minmax = []

questionnaires.each do |questionnaire|

next if questionnaire.symbol != :review

round = AssignmentQuestionnaire.where(assignment_id: @assignment.id, questionnaire_id: questionnaire.id).first.used_in_round

next if round.nil?

@minmax[round - 1] = []

@minmax[round - 1][0] = if !questionnaire.min_question_score.nil? and questionnaire.min_question_score < 0

questionnaire.min_question_score

else

0

end

@minmax[round - 1][1] = if !questionnaire.max_question_score.nil?

questionnaire.max_question_score

else

5

end

end

end

# this method collects and averages all the review scores across teams

# Added as part of E1859

def assign_chart_data

@rounds = @assignment.num_review_rounds

@chartdata = []

(1..@rounds).to_a.each do |round|

@teams = AssignmentTeam.where(parent_id: @assignment.id)

@teamids = []

@result = []

@responseids = []

@scoreviews = []

(0..(@teams.length - 1)).to_a.each do |t|

@teamids[t] = @teams[t].id

@result[t] = ResponseMap.find_by_sql ["SELECT id FROM response_maps

WHERE type = 'ReviewResponseMap' AND reviewee_id = ?", @teamids[t]]

@responseids[t] = []

@scoreviews[t] = []

(0..(@result[t].length - 1)).to_a.each do |r|

@responseids[t][r] = Response.find_by_sql ["SELECT id FROM responses

WHERE round = ? AND map_id = ?", round, @result[t][r]]

@scoreviews[t][r] = Answer.where(response_id: @responseids[t][r][0]) unless @responseids[t][r].empty?

end

end

@chartdata[round - 1] = []

# because the nth first elements could be nil

# iterate until a non-nil value is found or move to next round

t = 0

r = 0

t += 1 while @scoreviews[t].nil?

while t < @scoreviews.length and @scoreviews[t][r].nil?

if r < @scoreviews[t].length - 1

r += 1

else

def assign_chart_data

end

end

next if t >= @scoreviews.length

(0..(@scoreviews[t][r].length - 1)).to_a.each do |q|

sum = 0

counter = 0

def retrieve_questions(questionnaires)

questionnaires.each do |questionnaire|

round = AssignmentQuestionnaire.where(assignment_id: @assignment.id, questionnaire_id: questionnaire.id).first.used_in_round

questionnaire_symbol = if !round.nil?

(questionnaire.symbol.to_s + round.to_s).to_sym

else

questionnaire.symbol

end

@questions[questionnaire_symbol] = questionnaire.questions

end

end

def update

if format("%.2f", total_score) != params[:participant][:grade]

participant.update_attribute(:grade, params[:participant][:grade])

message = if participant.grade.nil?

"The computed score will be used for " + participant.user.name + "."

else

"A score of " + params[:participant][:grade] + "% has been saved for " + participant.user.name + "."

end

end

flash[:note] = message

redirect_to action: 'edit', id: params[:id]

app/views/grades/_team_charts.html.erb

<br> <br>

<%= render :partial => 'criteria_charts' %>

<br>

<a href="#" name='team-chartLink' onClick="toggleElement('team-chart', 'stats');return false;">Hide stats</a>

<br>

<TR style ="background-color: white;" class="team" id="team-chart">

<th>

<div class="circle" id="average-score">

</div>

Class Average

</th>

<TH COLSPAN="8">

<img src="<%= @average_chart %>" ><br>

Class Distribution

<br>

</th>

<TH WIDTH="9"> </th>

</TR>

<script type="text/javascript">

var myCircle = Circles.create({

id: 'average-score',

radius: 50,

value: <%= @avg_of_avg.to_i %>,

maxValue: 100,

width: 15,

text: '<%=@avg_of_avg.to_i%>',

colors: ['#FFEB99', '#FFCC00'],

duration: 700,

textClass: 'circles-final'

});

</script>

<style>

.circles-final{

font-size: 16px !important;

}

</style>

Test Results

The team ran both automated tests, using the RSpec framework, and manual tests of the user interface (UI), using the app. The automated tests helped to ensure that the basic functionality of the app still worked, while the UI tests ensured that the visualizations were correct.

RSpec Test Results

To ensure that our changes did not affect the basic functionality of the app, we made sure that all tests that passed before our changes also passed after our changes. TODO: add statistics on the tests before our changes and matching statistics after our changes.

UI Tests

To validate all functionality of the chart when adding new features or fixing old ones, the following criteria were tested manually for expected functionality:

- Chart is displaying correctly

- Bars are showing up where expected

- Bar annotations are showing the expected value

- Criteria labels are for the correct bar and displaying correct values

- Hover text is displaying the correct values

- Null values are not present on the chart

- Correct colors are used for the multi-round view

- Show Labels checkbox works as expected

- Round Criteria is displaying correctly

- Round dropdown menu shows all rounds for the assignment

- Selecting a round changes the criteria checkboxes

- All checkboxes are displayed with appropriate text

- Checkboxes correctly remove or add criterion bars to the chart