CSC/ECE 517 Spring 2016/E1738 Integrate Simicheck Web Service

Revision as of 06:18, 29 April 2017

Team BEND - Bradford Ingersoll, Erika Eill, Nephi Grant, David Gutierrez

Problem Statement

Given that submissions to Expertiza are digital in nature, the opportunity exists to use tools that automate plagiarism validation. One such tool is SimiCheck. SimiCheck has a web service API that can be used to compare documents and check them for plagiarism. The goal of this project is to integrate the SimiCheck API into Expertiza so that an automated plagiarism check takes place once a submission is closed.

Background

This project has been worked on in previous semesters. However, the outcomes of those projects did not clearly demonstrate successful integration with SimiCheck from Expertiza and were not deemed production worthy. As a result, part of our project involves researching the previous submission and learning from it. In addition, based on the feedback so far, we have decided to start our development from scratch and use the previous project as a source of lessons learned.

Requirements

- Create a scheduled background task that starts a configurable amount of time after the due date of an assignment has passed

- The scheduled task should do the following:

  - Fetch the submission content using links provided by the student in Expertiza from only these sources:

    - External website or MediaWiki

      - GET request to the URL, then strip HTML from the content

    - Google Doc (not Sheets or Slides)

      - Google Drive API

    - GitHub project (not pull requests)

      - GitHub API; will only use the student or group’s changes

  - Categorize the submission content as either a text submission or source code

  - Convert the submission content to raw text format to facilitate comparison

  - Use the SimiCheck API to check similarity among the assignment’s submissions

  - Notify the instructor that a comparison has started

  - Send the content of the submissions for each submission category

    - We will experiment with how many documents to send at a time

    - Note that each file is limited in size to 1 MB by SimiCheck

  - Wait for the SimiCheck comparison process to complete

    - We will provide SimiCheck with a callback URL to notify when the comparison is complete; however, if this doesn’t work well, we will revert to polling

    - We will also provide an “Update Status” link to manually poll comparison status

  - Notify the assignment’s instructor that a comparison has been completed

- Visualize the results in a report

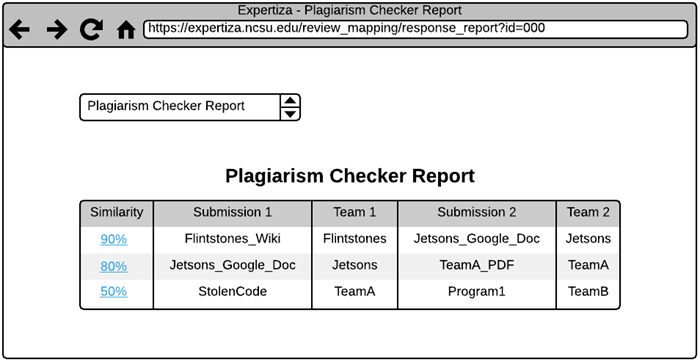

- We will edit view/review_mapping/response_report.html.haml to include a new PlagiarismCheckerReport type and point to the plagiarism_checker_report partial.

- Since SimiCheck has already implemented file diffs, links will be provided in the Expertiza view that lead to the SimiCheck website for each file combination

- Comparison results for each category will be displayed within Expertiza in a table

  - Each row is a file combination with similarity, file names, team names, and diff link

  - Sorted in descending similarity

  - Uses available data from the SimiCheck API’s summary report
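As a rough illustration of the report table described above, the following sketch orders comparison rows by descending similarity before display. The `ComparisonRow` struct, its field names, and the example URLs are assumptions made for illustration; they are not the project's actual schema or SimiCheck's response format.

```ruby
# Hypothetical row structure for the report table; field names are assumptions.
ComparisonRow = Struct.new(:similarity, :file1, :file2, :team1, :team2, :diff_url)

# Returns the rows ordered from most similar to least similar.
def rows_for_report(rows)
  rows.sort_by { |row| -row.similarity }
end

rows = [
  ComparisonRow.new(12.5, "a.txt", "b.txt", "Team 1", "Team 2", "https://example.com/diff/1"),
  ComparisonRow.new(87.0, "a.txt", "c.txt", "Team 1", "Team 3", "https://example.com/diff/2"),
  ComparisonRow.new(40.0, "b.txt", "c.txt", "Team 2", "Team 3", "https://example.com/diff/3")
]

# Print one line per file combination, highest similarity first.
rows_for_report(rows).each do |row|
  puts format("%5.1f%%  %s (%s) vs %s (%s)  %s",
              row.similarity, row.file1, row.team1, row.file2, row.team2, row.diff_url)
end
```

In the actual view, the same ordering could equally be done in the database query; sorting in Ruby is shown here only to keep the sketch self-contained.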

Expertiza Modifications

Because the majority of the updates will be handled in a new background task, there won't be many modifications to existing files in Expertiza. The current assignment configuration view will need to be expanded to add a configuration parameter, and the Assignment model will need to be expanded to include a SimiCheck Delay parameter. The Plagiarism Comparison Report will be added to the "Review Report" UI's select box for a selected assignment.

Design

Pattern(s)

Given that this project revolves around integration with several web services, our team plans to follow the facade design pattern so that Expertiza can make REST requests to several APIs, including SimiCheck, GitHub, and Google Drive. This pattern is commonly used to abstract complex functionality behind a simpler interface and to encapsulate API changes, so that application code does not need to be updated if a service changes or a different one is used. We feel this is appropriate based on the requirements because we can create an easy-to-use interface within Expertiza that hides the actual API integration. With this in place, current and future Expertiza developers can use our simplified functionality without needing to understand the minuscule details of each API’s operation.
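A minimal sketch of what such a facade might look like is given here. The class names (`SubmissionContentFacade`, `StubFetcher`), the `raw_text_for` method, and the host-based routing rule are all hypothetical; the stub fetchers stand in for real API clients so the example runs without network access.

```ruby
require "uri"

# Hypothetical facade: callers ask for raw text and never see which API is used.
class SubmissionContentFacade
  # fetchers: hash mapping a source type (:github, :google_doc, :web) to an
  # object that responds to #fetch(url) and returns raw text.
  def initialize(fetchers)
    @fetchers = fetchers
  end

  # Single entry point for the rest of the application.
  def raw_text_for(url)
    @fetchers.fetch(source_type(url)).fetch(url)
  end

  private

  # Assumed routing rule: choose the fetcher from the link's host name.
  def source_type(url)
    host = URI.parse(url).host.to_s
    return :github if host == "github.com"
    return :google_doc if host == "docs.google.com"
    :web
  end
end

# Stub standing in for a real API client (assumption for illustration only).
class StubFetcher
  def initialize(label)
    @label = label
  end

  def fetch(url)
    "#{@label} content for #{url}"
  end
end

fetchers = {
  github: StubFetcher.new("GitHub"),
  google_doc: StubFetcher.new("Google Doc"),
  web: StubFetcher.new("Web page")
}
facade = SubmissionContentFacade.new(fetchers)

puts facade.raw_text_for("https://github.com/expertiza/expertiza")
```

Because callers depend only on `raw_text_for`, swapping one service client for another would only require changing the hash passed to the facade.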

Model(s)

There is no need to store the raw content sent to SimiCheck.

Assignment

To store the number of hours to wait after the assignment due date before executing the Plagiarism Checking Comparison task, we added a "simicheck" property to the existing Assignment model. "Simicheck" will be -1 if there is no Plagiarism Checking, and between 0 and 100 (hours) if the assignment is to have a Plagiarism Checker Report.
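The meaning of the "simicheck" value can be illustrated with a small helper. This is only a sketch under the rules stated above (-1 disables checking, 0 to 100 is a delay in hours); the method name `plagiarism_check_time` is hypothetical and is not part of the Assignment model.

```ruby
# Returns the Time at which the comparison task should run, or nil when
# plagiarism checking is disabled for the assignment (simicheck == -1).
def plagiarism_check_time(due_date, simicheck_hours)
  return nil if simicheck_hours == -1
  unless (0..100).cover?(simicheck_hours)
    raise ArgumentError, "simicheck must be -1 or between 0 and 100"
  end
  due_date + simicheck_hours * 3600 # Time + seconds
end

due = Time.utc(2017, 4, 29, 12, 0)
puts plagiarism_check_time(due, 24)         # 24 hours after the due date
puts plagiarism_check_time(due, -1).inspect # checking disabled
```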

PlagiarismCheckerComparison

This model belongs_to the PlagiarismCheckerAssignmentSubmission model. It stores the file IDs returned from SimiCheck, the percent similarity between them, and a URL to a detailed comparison (diff).

PlagiarismCheckerAssignmentSubmission

This model has_many PlagiarismCheckerComparison models. It represents the results of the comparison among submissions for the assignment. As such it will contain all of the relevant fields that are shown in the view as described in the requirements.

Diagram

Typical overall system operation is shown here:

User Interface

To configure how long to wait before running the comparison task, the current assignment configuration UI will be modified to contain another field where an integer can be entered.

After the results have been aggregated, they can be viewed on a results report page. This report will include the submissions, their corresponding teams, and the similarity results.

Here is a mock up of our proposed UI:

Task Triggering

The majority of this project is implemented as a background task, so we can think of it as a separate application or process apart from Expertiza. Ideally this process will run in another thread to allow for concurrency. During development we will explore different options for handling the task with respect to languages and trigger methodologies. For languages we will explore Ruby, Python, Node, and potentially others. For triggering we will explore both polling and event-based approaches.

Code Sample(s)

Coming soon...

Potential Hurdles

GitHub has rate limits on how many requests we are allowed to make per auth token per hour. According to GitHub's documentation, we are allowed 5000 authenticated requests per hour, with an additional 60 requests per hour for unauthenticated requests. This documentation can be found here. Because this is a background task and immediate results are not required (it will likely be an overnight task), if we would otherwise exceed 5000 requests per hour we will delay the subsequent requests until the next hour. This should allow the required checks to complete within a reasonable time frame.
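One way the "delay until the next hour" idea might be sketched is with a simple request budget. The `HourlyRequestBudget` class and its `reserve` method are hypothetical, and the sketch assumes a fixed one-hour window that starts when the budget is created, which is a simplification of how GitHub actually reports its reset time.

```ruby
# Hypothetical helper that tracks requests within a fixed one-hour window.
class HourlyRequestBudget
  WINDOW_SECONDS = 3600

  def initialize(limit, now)
    @limit = limit
    @window_start = now
    @used = 0
  end

  # Tries to reserve one request at time `now`.
  # Returns 0 when the request may be sent immediately (and counts it),
  # otherwise the number of seconds to wait until the window resets.
  def reserve(now)
    if now - @window_start >= WINDOW_SECONDS
      @window_start = now # a new window begins
      @used = 0
    end
    if @used < @limit
      @used += 1
      0
    else
      WINDOW_SECONDS - (now - @window_start)
    end
  end
end

budget = HourlyRequestBudget.new(5000, Time.now)
wait = budget.reserve(Time.now)
puts wait.zero? ? "send request now" : "retry in #{wait.ceil} seconds"
```

A caller that receives a non-zero value would wait that long and then call `reserve` again before sending the request.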

Testing Strategy

API Testing during Development

API testing can be done outside of our code using applications like Postman. We will use this to determine which API calls to use and what output to expect. In addition, this will lay the groundwork for testing, within Rails, the direct API calls that the Expertiza server will make.

Automated Testing within Rails

We plan on writing unit tests for any controllers and models that we create. High-level testing of the controllers, models, and views together will be done with Capybara. The tests we write will sit alongside the existing test framework in Expertiza.