CSC517 Fall 2017 OSS M1705: Difference between revisions


Revision as of 01:51, 13 April 2017

M1705 - Automatically report new contributors to all git repositories

Introduction

Servo<ref>https://en.wikipedia.org/wiki/Servo_(layout_engine)</ref> is a browser layout engine developed by Mozilla<ref>https://en.wikipedia.org/wiki/Mozilla</ref>. It aims to create a parallel environment in which different components can be handled by small, separate tasks.

New contributors are currently listed for the servo/servo repository only. We have to solve the problem of tracking this information across all the repositories in the servo organization. The purpose of this work is to build a system that uses the GitHub API to determine this information on a regular basis.

Scope - Initial Phase

High Level Overview

The scope of the project was to complete the following steps:

- create a GitHub organization with several repositories that can be used for manual tests

- create a tool that initializes a JSON file with the known authors for a local git repository

- create a tool that clones every git repository in a given GitHub organization (use the GitHub API to retrieve this information)

More details about it can be found directly on the Project Description page.

Detailed Overview

The initial target of our project required us to get familiar with the GitHub API and JSON. We did not have an existing code base to start from, so we had to decide which language and tools to use for the initial tasks. The rationale behind our choices can be found in the Design section below.

Tool # 1: JSON Author File

Create a tool that initializes a JSON file with the known authors for a local git repository

- First, it fetches the author name from all the commits in a repository

- Then, it adds them to a set. We use a set to avoid duplicate entries for an author who has made more than one commit.

- Finally, it saves the set in a JSON file.
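The three steps above can be sketched as follows. This is a minimal illustration, not the code from the Tool Repository: it assumes the author names arrive as the text output of `git log --format=%an` (one name per line), and the names `parseAuthors` and `toAuthorJson` and the `{ authors: [...] }` file shape are hypothetical.

```javascript
// Hypothetical helper: parse the output of `git log --format=%an`
// (one author name per line) into a sorted list of unique names.
function parseAuthors(gitLogOutput) {
  const authors = new Set();
  for (const line of gitLogOutput.split("\n")) {
    const name = line.trim();
    if (name.length > 0) {
      authors.add(name); // a Set silently ignores names it already holds
    }
  }
  return Array.from(authors).sort();
}

// Serialize the author list. The { authors: [...] } shape is an assumption.
function toAuthorJson(authors) {
  return JSON.stringify({ authors: authors }, null, 2);
}

// Made-up sample input with repeated names and a blank line.
const sampleLog = "alice\nbob\nalice\n\ncarol\nbob\n";
const authorList = parseAuthors(sampleLog);
console.log(toAuthorJson(authorList));
```

Writing the resulting string to disk would be a single `fs.writeFileSync` call; it is left out so the sketch has no side effects.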

Tool # 2: Clone Repositories Tool

Create a tool that clones every git repository in a given GitHub organization (use the GitHub API to retrieve this information)

- `package.json` lists the dependencies needed to run both tools.

- `gitApi.js` clones all the repositories in the given organization into the `./tmp` folder.

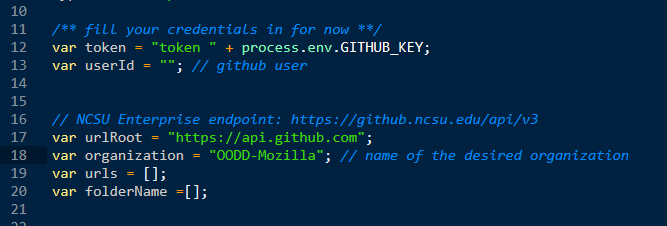

- On line 12, define the GitHub API token as the environment variable `GITHUB_KEY` (**check the references below for the token**).

- On line 13, define the username of the Git account user.

- On line 18, define the organization whose repositories need to be cloned.

- `git clone https://github.com/OODD-Mozilla/ToolRepository.git`

- `cd ToolRepository`

- `npm install`
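As a rough sketch of the cloning step: the GitHub API endpoint that lists an organization's repositories returns objects with `name` and `clone_url` fields, which is enough to decide what to clone and where. The function `planClones` below is hypothetical and is not part of `gitApi.js`; it only builds the commands and performs no network access.

```javascript
// Sketch only: turn a "list organization repositories" response into the
// git commands that would clone each repository under a target folder.
// `planClones` is a hypothetical name, not a function in gitApi.js.
function planClones(repos, targetDir) {
  return repos.map(function (repo) {
    return "git clone " + repo.clone_url + " " + targetDir + "/" + repo.name;
  });
}

// Trimmed-down example of the objects returned by GET /orgs/:org/repos.
const sampleRepos = [
  {
    name: "ToolRepository",
    clone_url: "https://github.com/OODD-Mozilla/ToolRepository.git",
  },
];

planClones(sampleRepos, "./tmp").forEach(function (command) {
  console.log(command);
});
```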

Scope: Post-initial Phase

Now that we are familiar with the GitHub API, we are ready to move on to the subsequent steps listed on the wiki page<ref>https://github.com/servo/servo/wiki/Report-new-contributors-project</ref>. We have three main tasks. We will move back and forth between them rather than completing them strictly in sequence, especially the testing task.

Task 1

First of all, we will fetch all of the closed pull requests for an organization during a time period.

- We will be looking through a repository's logs in order to get this information. <ref>http://www.commandlinefu.com/commands/view/4519/list-all-authors-of-a-particular-git-project</ref>
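One way to picture the time-period filter, assuming the pull requests have already been retrieved as GitHub API objects (which carry `state` and `closed_at` fields). The helper name `closedBetween` and the sample data are made up for illustration.

```javascript
// Hypothetical helper: keep pull requests that were closed inside the
// half-open interval [start, end). `state` and `closed_at` are fields of
// the pull request objects returned by the GitHub API.
function closedBetween(pullRequests, start, end) {
  return pullRequests.filter(function (pr) {
    if (pr.state !== "closed" || !pr.closed_at) {
      return false;
    }
    const closedAt = new Date(pr.closed_at);
    return closedAt >= start && closedAt < end;
  });
}

// Made-up sample data.
const samplePulls = [
  { number: 1, state: "closed", closed_at: "2017-03-15T10:00:00Z" },
  { number: 2, state: "open", closed_at: null },
  { number: 3, state: "closed", closed_at: "2017-04-20T08:30:00Z" },
];

const marchPulls = closedBetween(
  samplePulls,
  new Date("2017-03-01T00:00:00Z"),
  new Date("2017-04-01T00:00:00Z")
);
console.log(marchPulls.map(function (pr) { return pr.number; }));
```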

Task 2

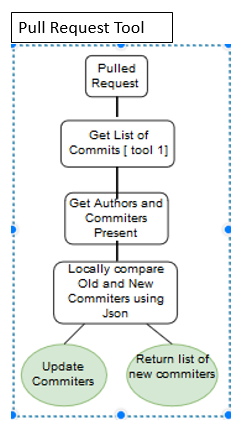

(Tool #3 - Pull Request Tool) Create a tool that processes all of the closed pull requests for a GitHub organization during a particular time period

- For each pull request, we will get its list of commits.

- For each commit, we will retrieve its author and committer.

- Each author or committer is checked against the list of known authors and committers. Anyone who is new is added to a list of new contributors. This list is returned along with links to the contributors' profiles.

- Update the JSON file containing the known authors with the new authors.
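The comparison at the heart of these steps might look like the sketch below. It assumes a simplified data shape in which each pull request carries a `commits` array whose entries have `author` and `committer` login names; the function name `findNewContributors` and the returned object shape are hypothetical, not the planned tool's actual interface.

```javascript
// Hypothetical sketch of the comparison step in the pull request tool.
// Assumed shape: pullRequests = [{ commits: [{ author, committer }] }].
function findNewContributors(pullRequests, knownAuthors) {
  const known = new Set(knownAuthors);
  const fresh = new Set(); // preserves first-seen order, drops repeats
  for (const pr of pullRequests) {
    for (const commit of pr.commits) {
      for (const login of [commit.author, commit.committer]) {
        if (login && !known.has(login)) {
          fresh.add(login);
        }
      }
    }
  }
  const newContributors = Array.from(fresh).map(function (login) {
    return { login: login, profile: "https://github.com/" + login };
  });
  return {
    newContributors: newContributors,
    // The caller would write this list back to the known-authors JSON file.
    updatedAuthors: knownAuthors.concat(Array.from(fresh)),
  };
}

// Made-up sample data.
const report = findNewContributors(
  [{ commits: [{ author: "bob", committer: "alice" }, { author: "bob", committer: "carol" }] }],
  ["alice"]
);
console.log(report.newContributors);
```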

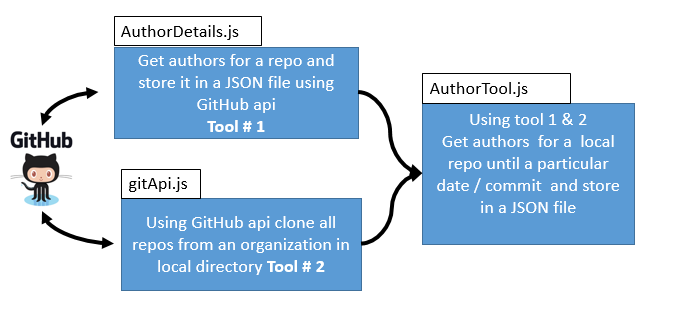

Using the GitHub web API to get the commits has limitations. By default, a single request returns only the 30 most recent commits. However, for organizations like servo, the number of commits can be much higher. Hence, we first need to clone all the repositories in an organization (using tool #2) and then fetch the list of commits (with a modified version of tool #1) in order to build our tool #3. The images below task 3 show how these tools come together, along with a sketch plan for the pull request tool.

Task 3

Test the tools we have created.

- Create unit tests that mock the GitHub API usage to validate the tools. See the Test Plan section on this page.

- Black-box test cases can also be found in the Test Plan section.

Design

Design Patterns

One opportunity we came across is that the AuthorTool and PullRequestTool both require access to the author list file, and if left separate the tools would contain duplicate code. To solve this, we plan to use the dependency injection design pattern (see this blog post). We will create an AuthorListUtils module that will be passed to both tools when they are created. This will provide a common interface to the author file and lead to DRYer code.
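A minimal sketch of that injection is shown here. Only the names AuthorTool, PullRequestTool and AuthorListUtils come from the plan above; the methods (`getAuthors`, `addAuthors`, `initialize`, `findNew`) are assumptions, and an in-memory array stands in for the JSON file.

```javascript
// Shared module: the only place that knows how the author list is stored.
// An in-memory array stands in for the JSON file in this sketch.
function createAuthorListUtils(initialAuthors) {
  const authors = initialAuthors.slice();
  return {
    getAuthors: function () {
      return authors.slice(); // return a copy so callers cannot mutate state
    },
    addAuthors: function (names) {
      for (const name of names) {
        if (!authors.includes(name)) {
          authors.push(name);
        }
      }
    },
  };
}

// Both tools receive the utils object instead of creating their own.
function AuthorTool(authorListUtils) {
  this.initialize = function (commitAuthors) {
    authorListUtils.addAuthors(commitAuthors);
  };
}

function PullRequestTool(authorListUtils) {
  this.findNew = function (candidates) {
    const known = authorListUtils.getAuthors();
    const fresh = candidates.filter(function (name) {
      return !known.includes(name);
    });
    authorListUtils.addAuthors(fresh);
    return fresh;
  };
}

const sharedUtils = createAuthorListUtils([]);
const authorTool = new AuthorTool(sharedUtils);
const pullRequestTool = new PullRequestTool(sharedUtils);
authorTool.initialize(["alice", "bob"]);
console.log(pullRequestTool.findNew(["bob", "carol"]));
```

Because the tools only see the injected object, a test can hand them a stub with the same two methods and never touch the file system.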

Design Choices

Since we were not working on an existing code base, we had to make design and tool choices when we started the project. We picked NodeJS since it is a lightweight runtime environment based on JavaScript. It also has a rich collection of third-party, open source packages that can easily be added as dependencies and managed with the npm package manager. Since we were working with the GitHub API, we used two libraries to help us wrap the calls: nodegit and request.

We rely heavily on the callbacks that JavaScript supports, since many of the function calls are asynchronous. The callback() idea falls under API Patterns. Promises are another JavaScript feature that will help us when we need to manage the sequence of these asynchronous calls.
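To illustrate the difference, the sketch below wraps a callback-style function in a promise with Node's built-in `util.promisify` and then waits for several calls at once with `Promise.all`. The function `listCommitAuthors` is a made-up stand-in for an asynchronous call, not part of nodegit or request.

```javascript
const util = require("util");

// Stand-in for an asynchronous, callback-style call (hypothetical).
// It follows the Node convention: callback(error, result).
function listCommitAuthors(repoName, callback) {
  setImmediate(function () {
    callback(null, [repoName + "-author"]);
  });
}

// Same operation, but returning a promise instead of taking a callback.
const listCommitAuthorsAsync = util.promisify(listCommitAuthors);

// Run the lookups for all repositories and flatten the results
// into one list, in the same order as repoNames.
function collectAuthors(repoNames) {
  const pending = repoNames.map(function (name) {
    return listCommitAuthorsAsync(name);
  });
  return Promise.all(pending).then(function (lists) {
    return [].concat.apply([], lists);
  });
}

collectAuthors(["repo-a", "repo-b"]).then(function (authors) {
  console.log(authors);
});
```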

Security Concerns

To access and make requests to the GitHub API, a user must be properly authenticated. Details about basic authentication, along with an example, are provided on this developer's manual page. Any user can generate a personal OAuth token for their account from the settings page. When placed inside a source file, this randomly generated token authorizes the user and allows the program to place API requests. Exposing this token in a source file stored in a version control system is the same as hard-coding a password, which introduces a security risk for the user. One way to avoid this is to generate the token and store it in a local environment variable. That way, the program reads the token from the environment variable at run time, and the token's contents stay out of the source files that are pushed and pulled.

Please check the references section in our README.md, which links to instructions on (i) how to generate the token on GitHub and (ii) how to add it as an environment variable.
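A small sketch of this approach follows. `GITHUB_KEY` is the variable name used by this project; the helper name `buildAuthHeaders` and the `User-Agent` value are made up for illustration.

```javascript
// Hypothetical helper: read the token from the environment instead of
// hard-coding it in the source file.
function buildAuthHeaders(env) {
  const token = env.GITHUB_KEY;
  if (!token) {
    throw new Error("GITHUB_KEY is not set; generate a token and export it first");
  }
  return {
    "Authorization": "token " + token,
    // The GitHub API requires a User-Agent header; this value is made up.
    "User-Agent": "contributor-report-tool",
  };
}

// In the real tool the argument would be process.env; a literal object is
// used here so the sketch runs without any environment setup.
const demoHeaders = buildAuthHeaders({ GITHUB_KEY: "example-token" });
console.log(Object.keys(demoHeaders));
```

Failing fast when the variable is missing avoids sending unauthenticated requests by accident.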

Test Plan

Initial Phase

In the initial phase, we have not fully explored any testing frameworks. However, we have a test plan in place and very basic test cases implemented in the main class of both tools. To see the test plan and how to run these tests, please check the README.md file in our Tool Repository. Unit testing will be part of the next phase, which will follow a Test Driven Development (TDD) model.

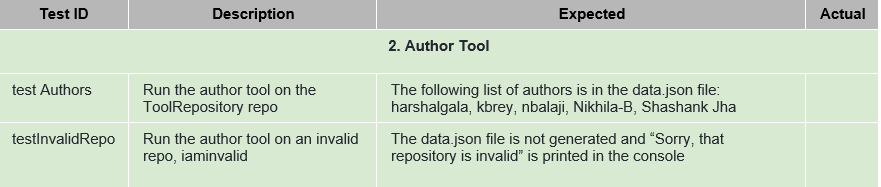

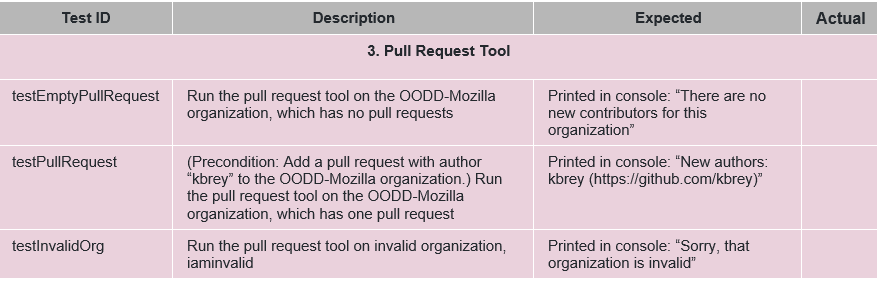

Post-initial Phase

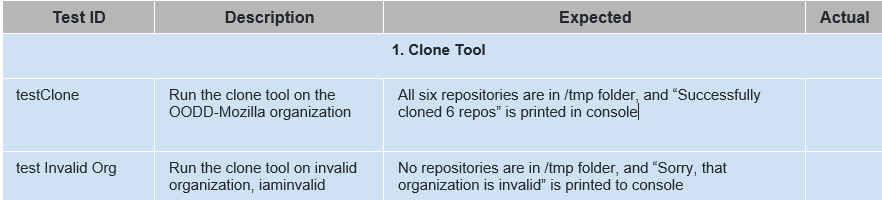

To test our tools we will use both white-box and black-box testing. For white-box testing we will use the Mocha framework with Chai as the assertion library. Additionally, we will use the Nock library to mock our GitHub API requests. We intend to unit test all of our code, following the methodology presented by Sandi Metz in her “Magic Tricks of Testing” lecture. Our black-box test plan is shown in Figure 1 below.

1. White-box testing: uses Mocha, Chai, and Nock. The test suite can be found in the `./test` directory of our repo.

2. Black-box testing: the tables below outline test cases for each of the tools.

Conclusion

After studying the GitHub API and how JSON objects can be used to read and store data about new contributors to a repository, we have found that the process can be automated by following the steps described in this article. Once all the tools are written and tested, they can be easily integrated for the dev-servo organization if approved by the Mozilla dev-servo community.

References

<references/>