CSC/ECE 506 Fall 2007/wiki4 7 jp07

Revision as of 02:30, 29 November 2007

Helper Threads

One of the problems with parallel machines is that they are often asked to execute purely sequential code. In that case, much of the benefit of being able to run multiple threads simultaneously is lost. This is true of many multithreading paradigms, including Simultaneous Multithreading (SMT) systems, Symmetric Multiprocessors (SMPs), and Chip Multiprocessors (CMPs).

The natural solution, it seems, would be to rewrite or recompile programs to make use of parallel execution. In some cases, however, this may be too time-consuming or even infeasible due to the nature of the program. There is therefore a middle ground in which the program is not truly parallelized, but the machine's multithreading capabilities are still used to improve execution time. This technique is known as helper threads.

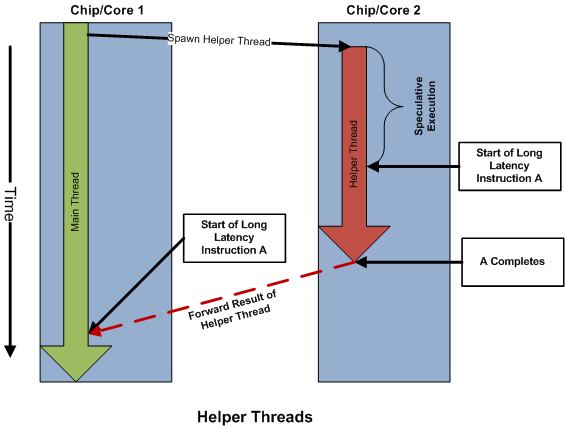

Helper threads run in parallel with the main thread and do work on its behalf to improve its performance [Olukotun]. Typically these threads execute parts of the program "ahead" of the main thread, in an attempt to resolve branches and/or compute values before the main thread needs them. This is done to hide the penalty of long-latency instructions. The accompanying figure illustrates the basic concepts of helper thread execution.

Note that in a CMP the two contexts would be separate processor cores on the same chip, while in an SMT they would be separate hardware thread contexts within one core. Through prior training (execution history) or compiler analysis, the system knows that a potentially long-latency instruction, such as a load that misses in the cache, is upcoming. Using that knowledge, the helper thread runs ahead of the main thread, executing only the instructions that the long-latency instruction depends on. Because it skips everything else, the helper thread reaches and completes the long-latency instruction early, so that when the main thread finally reaches it, the helper thread can forward the computed result.
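
The timing argument above can be made concrete with a small model. The following is only an illustrative sketch, not a description of any real hardware: the names `Program`, `cycles_without_helper`, and `cycles_with_helper` are hypothetical, all latencies are made-up cycle counts, and the model assumes the helper thread starts at cycle 0 and that forwarding the result to the main thread is free.

```python
from dataclasses import dataclass


@dataclass
class Program:
    """Hypothetical single-miss program; all fields are cycle counts."""
    work_before_load: int  # main-thread work before it reaches the long-latency load
    slice_length: int      # only the instructions the load depends on (run by the helper)
    miss_latency: int      # time for the cache miss to return its data
    work_after_load: int   # main-thread work that needs the loaded value


def cycles_without_helper(p: Program) -> int:
    # The main thread reaches the load, then stalls for the full miss latency.
    return p.work_before_load + p.miss_latency + p.work_after_load


def cycles_with_helper(p: Program) -> int:
    # Assumption: the helper starts at cycle 0, executes only the slice,
    # and issues the long-latency load as soon as the slice is done.
    data_ready = p.slice_length + p.miss_latency
    main_arrives = p.work_before_load
    # The main thread stalls only for whatever part of the miss is still outstanding.
    stall = max(0, data_ready - main_arrives)
    return main_arrives + stall + p.work_after_load


# Case 1: enough independent work to hide the miss completely.
hidden = Program(work_before_load=300, slice_length=40,
                 miss_latency=200, work_after_load=100)

# Case 2: the helper has too little head start, so the miss is only partly hidden.
partial = Program(work_before_load=100, slice_length=40,
                  miss_latency=200, work_after_load=100)
```

With these assumed numbers, `cycles_without_helper(hidden)` is 600 and `cycles_with_helper(hidden)` is 400, so the miss is fully hidden. For `partial` the totals are 400 and 340: the helper thread still helps, but the main thread arrives before the data is ready and stalls for the remaining 140 cycles. The benefit therefore depends on how much shorter the slice is than the main thread's path to the same instruction.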