CSC/ECE 506 Spring 2014/8a at: Difference between revisions


Revision as of 20:18, 22 March 2014

Introduction

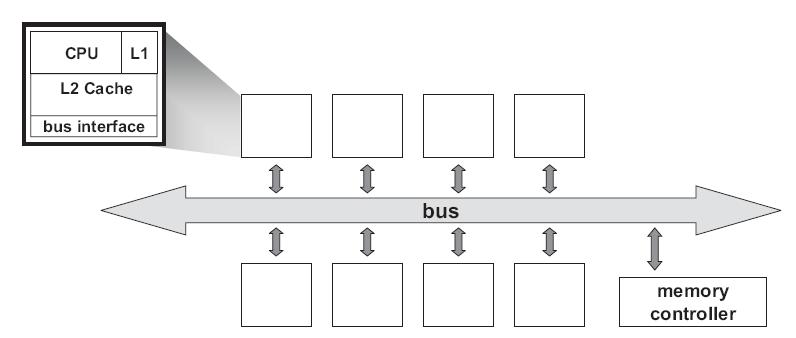

Symmetric multiprocessing (SMP) involves a multiprocessor computer hardware architecture in which two or more identical processors are connected to a single shared main memory via a system bus. An SMP provides symmetric access to all of main memory from any processor and is the building block for larger parallel systems.

If each processor has a cache that reflects the state of various parts of memory, it is possible that two or more caches may have copies of the same line. It is also possible that a given line may contain more than one lockable data item. If two threads make appropriately serialized changes to those data items, the result could be that both caches end up with different, incorrect versions of the line of memory. In other words, the system's state is no longer coherent, because the system contains two different versions of what is supposed to be the content of a specific area of memory. Various protocols have been devised to address the cache coherence problem, such as the MSI, MESI, MOESI, MERSI, MESIF, Synapse, Berkeley, Firefly and Dragon protocols. This wiki article discusses implementations of the MSI, MESI, MESIF, MOESI and Synapse protocols on real architectures.<ref>Cache Coherence</ref>

Synapse Protocol

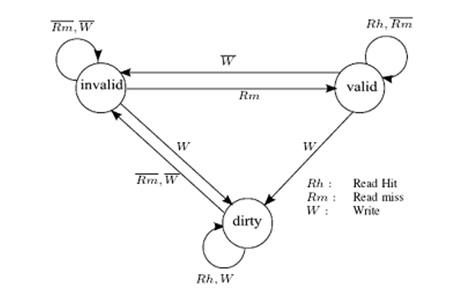

In Synapse protocol, cache blocks are in one of the following states:

- Invalid: Indicates that the block is invalid.

- Valid: Indicates that the cache block is unmodified and possibly shared.

- Dirty: Indicates that the cache block is modified and there are no other copies.

Only blocks in state Dirty are written back when replaced. Any cache with a copy of a block in state Dirty is called the owner of that block. If no Dirty copy exists, main memory is the owner.
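The ownership rule above can be illustrated with a minimal Python sketch. The names `SynapseState`, `owner_of` and `needs_writeback_on_replace` are hypothetical and chosen only for illustration; they do not come from any Synapse documentation.

```python
from enum import Enum


class SynapseState(Enum):
    INVALID = "Invalid"
    VALID = "Valid"
    DIRTY = "Dirty"


def owner_of(block_states):
    """Return the owner of a block.

    block_states maps a (hypothetical) cache id to the state that cache
    holds for the block. A cache holding the block Dirty is the owner;
    if no Dirty copy exists, main memory is the owner.
    """
    for cache_id, state in block_states.items():
        if state is SynapseState.DIRTY:
            return cache_id
    return "memory"


def needs_writeback_on_replace(state):
    """Only Dirty blocks are written back when they are replaced."""
    return state is SynapseState.DIRTY
```

For example, `owner_of({"c0": SynapseState.INVALID, "c1": SynapseState.DIRTY})` yields `"c1"`, while a block held only in Valid state is owned by memory.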

Synapse N+1 multiprocessor

Synapse protocol was used in the Synapse N + 1, a multiprocessor for fault-tolerant transaction processing. The N + 1 differs from other shared bus designs considered in that it has two system buses. The added bandwidth of the extra bus allows the system to be expanded to a maximum of 28 processors. A single-bit tag is included with each cache block in main memory, indicating whether main memory is to respond to a miss on that block. If a cache has a modified copy of the block, the bit tells the memory that it need not respond. Thus if the bit is set, an access to the block fails until the data is written back to memory by the cache with the exclusive copy and the bit is reset. This prevents a possible race condition if a cache does not respond quickly enough to inhibit main memory from responding.<ref>Cache Coherence Protocols: Evaluation Using a Multiprocessor Simulation Model</ref>
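The role of the single-bit memory tag can be sketched as follows. This is a simplified model under stated assumptions: the class `TaggedMemory` and its method names are hypothetical, a failed access is modelled as a `(False, None)` result that the requester would retry, and unwritten blocks are assumed to read as 0.

```python
class TaggedMemory:
    """Main memory with one tag bit per block (illustrative model only)."""

    def __init__(self):
        self.data = {}            # block address -> value
        self.cache_owned = set()  # blocks whose tag bit is set

    def grant_exclusive(self, addr):
        # A cache takes a modified (exclusive) copy: set the tag bit.
        self.cache_owned.add(addr)

    def read(self, addr):
        """Return (ok, value). ok is False while the tag bit is set,
        meaning memory does not respond and the access must be retried."""
        if addr in self.cache_owned:
            return (False, None)
        return (True, self.data.get(addr, 0))

    def write_back(self, addr, value):
        # The owning cache writes the block back; the tag bit is reset.
        self.data[addr] = value
        self.cache_owned.discard(addr)
```

Because memory itself refuses to answer while the bit is set, correctness does not depend on the owning cache inhibiting memory in time, which is the race the tag bit is meant to avoid.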

Implementation Complexities

The Synapse Expansion Bus includes an ownership-level protocol between processor caches. The caches employ a non-write-through algorithm to minimize the bandwidth used between cache and shared memory and so reduce memory contention. This protocol does not require a great deal of hardware complexity. However, since an extra bit is added to main memory to indicate whether a cache has an exclusive (Dirty) copy of the block, this bit must be implemented correctly to prevent the protocol from malfunctioning.<ref>Synapse tightly coupled multiprocessors: a new approach to solve old problems</ref>

MSI Protocol

The MSI protocol is a three-state write-back invalidation protocol and is one of the simplest and earliest-used snooping-based cache coherence protocols.

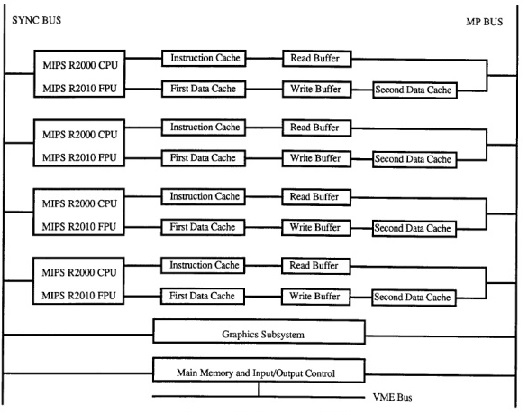

SGI 4D MP

The MSI protocol was first used in the SGI IRIS 4D series. SGI produced a broad range of MIPS-based (Microprocessor without Interlocked Pipeline Stages) workstations and servers during the 1990s, running SGI's version of UNIX System V, now called IRIX. The 4D-MP graphics superworkstation delivered 40 MIPS (million instructions per second) of computing performance.

Such a high degree of computing and graphics processing was made possible by an intelligent computing system architecture. The sync bus provides the required synchronization among the main processors of the system (four in this system). Processor buses provide full-speed access to the L1 instruction and data caches. Each of the L1 caches is 64 KB in size, giving a total L1 cache size of 512 KB. The L2 cache accounts for another 512 KB of memory in the system and is made up of four individual 64 KB caches. One important highlight of the 4D-MP is that the memory hierarchy is inclusive, i.e., the contents of the L1 cache are a subset of the contents of the L2 cache. The multiprocessor (MP) bus used in the 4D-MP graphics superworkstation is a pipelined, block-transfer bus that supports the cache coherence protocol and provides 64 megabytes per second of sustained data bandwidth between the processors, the memory and I/O system, and the graphics subsystem.

Every transaction on the MP bus is monitored by the L2 cache, which maintains the state of each cache line. Through a tag-matching mechanism, the L2 cache checks whether a transaction involves data in its storage and changes the state of the affected cache lines accordingly (the state will be M, S or I depending on the request). Write propagation is achieved through invalidation, and write serialization is achieved through the MP bus. Consistency is guaranteed by the inclusion property of the memory hierarchy.

With the simple rules of MSI protocol enforced by hardware, efficient synchronization and efficient data sharing are achieved in a simple shared memory model of parallel processing in the 4D-MP graphics superworkstation.<ref>4D-MP graphics superworkstation</ref>

Implementation complexities

In the MSI system, an explicit upgrade message is required for a read followed by a write, even if there are no other sharers. When a processor reads in and modifies a data item, two bus transactions are generated in this protocol even in the absence of sharers. The first is a BusRd that gets the memory block in the S state, and the second is a BusRdX (or BusUpgr) that converts the block from the S state to the M state. In this protocol, the complexity of the mechanism that determines the exclusiveness of the block is an aspect that needs attention. Also, in snoop-based cache coherence protocols, the overall set of actions for a memory operation is not atomic. This can lead to race conditions, and issues such as deadlock and serialization make the protocol harder to implement.<ref>Snoop-based Multiprocessor Design</ref>
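The read-then-write cost described above can be made concrete with a minimal sketch of one cache's view of the MSI protocol. The class `MSICache` is hypothetical; it models only processor-side reads and writes from a single cache and ignores snooped requests from other caches.

```python
class MSICache:
    """Processor-side MSI transitions for one cache (illustrative only)."""

    def __init__(self):
        self.state = {}    # block address -> "M", "S" or "I"
        self.bus_log = []  # bus transactions issued by this cache

    def read(self, addr):
        if self.state.get(addr, "I") == "I":
            # Read miss: fetch the block in the Shared state.
            self.bus_log.append("BusRd")
            self.state[addr] = "S"
        # Reads that hit in S or M need no bus transaction.

    def write(self, addr):
        current = self.state.get(addr, "I")
        if current == "I":
            # Write miss: fetch the block with intent to modify.
            self.bus_log.append("BusRdX")
        elif current == "S":
            # Upgrade is required even if no other cache shares the block.
            self.bus_log.append("BusUpgr")
        self.state[addr] = "M"


cache = MSICache()
cache.read(0x40)
cache.write(0x40)
print(cache.bus_log)
```

Even though no other cache is involved, the log contains two transactions, a `BusRd` followed by a `BusUpgr`, because MSI has no way to record that the block was obtained without sharers.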

MESI Protocol

The MESI protocol is a four-state protocol in which a cache block can be in an Exclusive state in addition to the Modified, Shared and Invalid states of the MSI protocol.

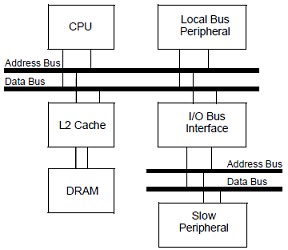

AM486

The AM486 processor implements a 32-bit architecture, encompassing the complete 486 microprocessor instruction set with several extensions. The AM486 also uses a modified MESI cache coherence protocol with write-back and write-through and read-allocation. Caches in the AM486 follow the Pseudo-LRU block replacement policy.

The AM486 introduces the concept of a multi-master environment, allowing it to reduce unnecessary bus traffic through dynamic identification of shared blocks. This multi-master environment, together with the MESI cache coherence model, allows the system to appear as a single unified memory structure, accommodating even programs written without cache support.

The modified MESI protocol in the AM486 differs from traditional MESI in one respect. When a block is hit, either by the processor that currently has the block or by an external master (another processor), main memory is updated. However, when the modified block is requested by a processor other than the one holding the block, the request is first cancelled and the modified block is then flushed to main memory. The requester must then resend its request, this time to main memory, for the block in question.

ARM MPCore

The ARM11 MPCore and Cortex-A9 MPCore processors support the MESI cache coherency protocol.<ref>ARM</ref> ARM MPCore defines the states of the MESI protocol it implements as:

| Cache Line State | Modified | Exclusive | Shared | Invalid |
| --- | --- | --- | --- | --- |
| Copies in other caches | NO | NO | YES | - |
| Clean or Dirty | DIRTY | CLEAN | CLEAN | - |

The coherency protocol is implemented and managed by the Snoop Control Unit (SCU) in the ARM MPCore, which monitors the traffic between the local L1 data caches and the next level of the memory hierarchy. At boot time, each core can choose whether or not to take part in the coherency domain. Unless explicit system calls bind a task to a specific core (processor affinity), there is a high chance that a task will at some point migrate to a different core, along with its data as it is used. Migration of tasks is not handled efficiently by a literal MESI implementation, so the ARM MPCore offers two optimizations that preserve MESI compliance while supporting task migration. With Direct Data Intervention (DDI), the SCU keeps a copy of the tag RAMs of all cores' caches, which enables it to detect efficiently whether a cache line requested by one core is held by another core in the coherency domain before looking for it in the next level of the memory hierarchy. With Cache-to-cache Migration, if the SCU finds that the cache line requested by one CPU is present in another core, it will either copy it (if clean) or move it (if dirty) from the other CPU directly into the requesting one, without interacting with external memory. These optimizations reduce memory traffic in and out of the L1 cache subsystem by eliminating interaction with external memories, which in effect reduces the overall load on the interconnect and the overall power consumption.<ref>ARM</ref>
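A rough sketch of how duplicated tags let the SCU service a miss without touching external memory is given below. The class `SnoopControlUnit` and its method names are hypothetical. The sketch assumes that a copied clean line ends up Shared in both caches and that a moved dirty line stays Modified in the requester; the text above states only "copy if clean, move if dirty", so these resulting states are assumptions.

```python
class SnoopControlUnit:
    """Toy SCU holding a duplicate of every core's L1 tags (illustrative)."""

    def __init__(self, n_cores):
        # tags[core] maps a line address to "M", "E", "S" or "I".
        self.tags = [dict() for _ in range(n_cores)]
        self.external_reads = 0

    def read_miss(self, core, addr):
        """Service a read miss from `core`; return how it was satisfied."""
        for other, tags in enumerate(self.tags):
            if other == core or tags.get(addr, "I") == "I":
                continue
            if tags[addr] == "M":
                # Dirty line: move it to the requester (assumed to stay M).
                tags[addr] = "I"
                self.tags[core][addr] = "M"
                return "moved"
            # Clean line: copy it, leaving both copies Shared (assumption).
            tags[addr] = "S"
            self.tags[core][addr] = "S"
            return "copied"
        # No core in the coherency domain holds the line: go to memory.
        self.external_reads += 1
        self.tags[core][addr] = "E"
        return "external"
```

Only the first access to a line increments `external_reads` in this model; later misses from other cores are satisfied from a peer cache, which is the traffic reduction the optimizations aim for.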

Intel Xeon Phi

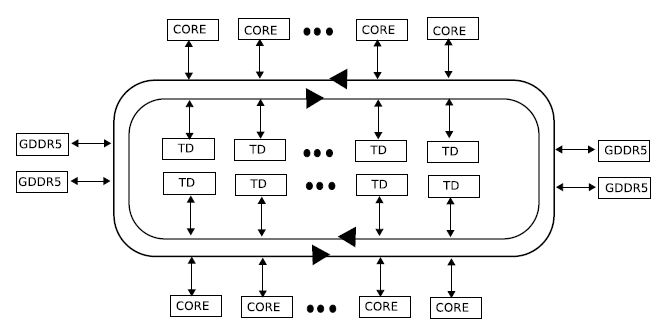

The Xeon Phi is composed of 60 cores, each running at 1065 MHz with hyperthreading, allowing for up to 4 threads per core. Each core contains a 64-byte register for supporting Intel’s proprietary instruction set, the Intel Initial Many Core Instructions (IMCI), and contains a 32KB L1 Data Cache, a 32KB L1 Instruction Cache and a 512KB L2 Unified Cache. The L2 Unified Cache utilizes a distributed tag directory (DTD) system to ensure coherency. Cores also have access to 4 channels of global GDDR5 memory supporting up to 8GB in total.

The architecture of the Xeon Phi is designed in such a way that sharing of resources is intuitive, which improves its scalability. Processors are laid out around a bi-directional tri-ring bus system. Each direction of the bus is composed of 3 rings: a 64-byte data ring, an address ring, and an acknowledgement ring. The transfer of data occurs on the data ring, allowing one entire register block to be transferred at a time, while the address ring carries both read and write commands to memory structures. Lastly, the acknowledgement ring facilitates flow control and coherency, allowing the Xeon Phi to support MESI-based bus transactions to maintain cache coherency among its 60 cores.

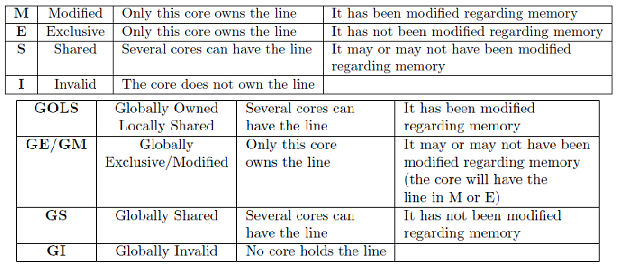

The Xeon Phi implements an extended MESI protocol utilizing Globally Owned Locally Shared (GOLS) to simulate an owned state. This additional feature allows modified blocks to be shared between processors to avoid as many write-backs to main memory as possible. Each processor’s cache maintains the state of its blocks according to the MESI protocol, while the tag directories maintain the global (GOLS) state of each block. Upon a cache miss, a request is sent to the DTD, and the response is based on the current GOLS state of the requested block. Should another processor have the block, the DTD forwards the request to that processor, and the servicing processor replies with an acknowledgement to the DTD. When the requesting processor has received the block, it also sends an acknowledgement to the DTD. The DTD then updates the current state of the block (see table). The DTD also intervenes during the eviction of blocks: a processor wishing to evict a block must send the request to the DTD, and the same acknowledgement exchange occurs.

Implementation Complexities

During replacement of a cache block, some MESI implementations require a message to be sent to memory when a cache line is flushed (an E to I transition), as the line was held exclusively in one cache before it was removed. In an alternative implementation, this replacement message can be avoided if the system is designed so that the flush of a modified or exclusive line requires an acknowledgement from memory. However, this requires the flush to be held in a 'write-back' buffer until the reply arrives, to ensure the change is successfully propagated to memory.

Depending on the application, there is a bandwidth trade-off between these two implementations. The MESI protocol has greater complexity in terms of block states and transitions. It requires a priority scheme for cache-to-cache transfers to determine which cache should supply the data when the block is in the Shared state. In commercial implementations, memory is usually allowed to supply the data. As in the MSI protocol, serialization, handshaking and deadlock avoidance add complexity. Also, the implementation of write-backs necessitates a write buffer, and the bus transactions relevant to buffered blocks must be handled carefully.<ref>Discussion of cache coherence protocol implementation</ref>

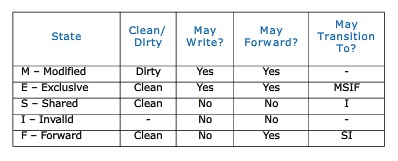

MESIF Protocol

The MESIF protocol is a cache coherence and memory coherence protocol developed by Intel for cache-coherent non-uniform memory architectures. The protocol consists of five states: Modified (M), Exclusive (E), Shared (S), Invalid (I) and Forward (F).

The M, E, S and I states are the same as in the MESI protocol. When a processor requests a cache line that is stored in multiple locations, every location might respond with the data. However, the requesting processor only needs a single copy of the data, so the system is wasting a bit of bandwidth. Intel's solution to this issue is rather elegant. They adapted the standard MESI protocol to include an additional state, the Forwarding (F) state, and changed the role of the Shared (S) state. In the MESIF protocol, only a single instance of a cache line may be in the F state and that instance is the only one that may be duplicated. Other caches may hold the data, but it will be in the shared state and cannot be copied. In other words, the cache line in the F state is used to respond to any read requests, while the S state cache lines are now silent. This makes the line in the F state a first amongst equals, when responding to snoop requests. By designating a single cache line to respond to requests, coherency traffic is substantially reduced when multiple copies of the data exist.

When a cache line in the F state is copied, the F state migrates to the newer copy, while the older one drops back to S. This has two advantages over pinning the F state to the original copy of the cache line. First, because the newest copy of the cache line is always in the F state, it is very unlikely that the line in the F state will be evicted from the caches. In essence, this takes advantage of the temporal locality of the request. The second advantage is that if a particular cache line is in high demand due to spatial locality, the bandwidth used to transmit that data will be spread across several nodes.
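The migration of the F state can be sketched for clean lines as follows. The function `mesif_read` is hypothetical, and several behaviours are assumptions not stated in the text above: a line held in E is treated like F when it is first shared, a line with only S copies (for example after the F copy was evicted) is supplied by memory and the requester still receives F, and modified lines are left out of the sketch entirely.

```python
def mesif_read(states, requester):
    """Handle a read miss for one clean line.

    states maps a (hypothetical) cache id to "M", "E", "S", "I" or "F"
    and is updated in place. Returns the id of the responder, which is
    a cache id or the string "memory".
    """
    if "M" in states.values():
        raise ValueError("modified lines are outside the scope of this sketch")

    holders = [c for c, s in states.items() if s != "I"]
    forwarders = [c for c in holders if states[c] in ("F", "E")]

    if forwarders:
        # The single designated copy answers; S copies stay silent.
        responder = forwarders[0]
        states[responder] = "S"   # old forwarder drops back to S
        states[requester] = "F"   # newest copy becomes the forwarder
    elif holders:
        # Only silent S copies exist, so memory answers (assumption).
        responder = "memory"
        states[requester] = "F"
    else:
        # Nobody holds the line: the requester gets it Exclusive.
        responder = "memory"
        states[requester] = "E"
    return responder
```

Starting from `{"c0": "F", "c1": "S", "c2": "I"}`, a read by `c2` is answered by `c0` alone and leaves `c2` as the only cache in the F state, so at most one cache ever responds to a read of a shared line.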

The table below summarizes the MESIF cache states:

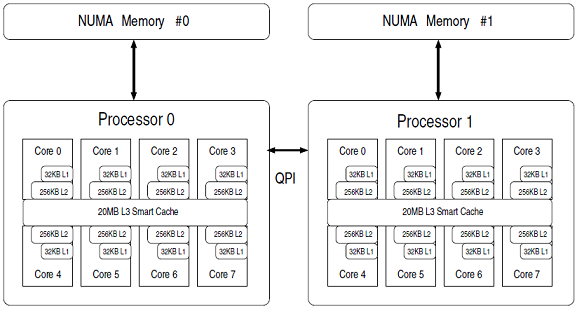

Intel Sandy Bridge Xeon

The Sandy Bridge multi-core system contains 8 cores, each with HyperThreading, allowing for up to 2 threads per core. The Sandy Bridge also utilizes QPI (Intel QuickPath Interconnect) for point-to-point communication at a rate of 8 GT/s. Each core runs at 2.60GHz and contains a 32KB L1 Data cache and a 32KB L1 Instruction cache. Each core also has its own 256KB unified L2 cache. In addition, there is a shared 20MB unified L3 cache divided into 8 sections, each of which can be accessed by any processor. Like the Xeon Phi, the Sandy Bridge also uses a ring bus, but with 4 lines: a data line, a request line, an acknowledgement line, and a snoop line.

The Intel Xeon Sandy Bridge uses the MESIF cache coherence protocol (see table). The M, E, S and I states behave as in MESI, while the additional forwarding (F) state indicates which processor will answer a new request for the block in question. Once a requester receives a block from a processor that has the block in the forwarding state, the requester becomes the new “forwarder” and the previous forwarder moves its copy of the block to the shared state (S). The goal of the forwarding state is to keep a block available in one of the processors so that future requests for the block do not have to access main memory. <ref>Ramos Hoefler CC Modeling</ref>

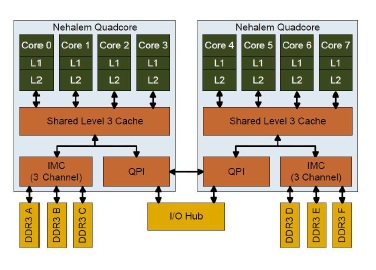

MESIF in Intel Nehalem Computer

The Intel Nehalem architecture uses the MESIF protocol. In the Nehalem architecture, each core has its own L1 and L2 cache. Nehalem also has a shared cache, implemented as the L3 cache. This cache is shared among all cores and is relatively large. It is inclusive, meaning that it duplicates all data stored in each individual L1 and L2 cache. This duplication greatly improves inter-core communication efficiency because a core does not have to search another core’s cache for data. If the requested data is not found in the L3 cache, the core knows the data is also not present in any other core’s cache. To ensure coherency across all caches, the L3 cache has additional flags that keep track of which core the data came from. If the data is modified in the L3 cache, the L3 cache knows whether the data came from a different core than last time, and that the first core needs its L1/L2 copies updated with the new data. This greatly reduces the amount of traditional “snooping” coherency traffic between cores.<ref>Comparing Cache Organization and Memory Management of the Intel Nehalem Computer Architecture</ref>
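The way an inclusive L3 with per-core flags filters snoop traffic can be sketched as below. The class `InclusiveL3` and its methods are hypothetical, and the model tracks only which cores may hold a line, not the data or the MESIF state.

```python
class InclusiveL3:
    """Toy inclusive L3 that records which cores may hold each line."""

    def __init__(self):
        self.core_flags = {}  # line address -> set of core ids

    def fill(self, core, addr):
        # A line brought into a core's L1/L2 is also placed in the L3,
        # and the flag for that core is set.
        self.core_flags.setdefault(addr, set()).add(core)

    def lookup(self, requester, addr):
        """Return (hit, cores_to_snoop) for a request from `requester`.

        An L3 miss means no core holds the line, so nothing is snooped.
        On a hit, only cores whose flag is set need to be contacted.
        """
        if addr not in self.core_flags:
            return (False, set())
        return (True, self.core_flags[addr] - {requester})
```

With eight cores, a line filled by core 3 and then requested by core 0 results in a snoop of core 3 only, and a request for a line absent from the L3 goes straight to memory with no snoops at all.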

The cache organization of an 8-core Intel Nehalem Processor is shown below:

Implementation Complexities

In comparison with the MESI protocol, the addition of the Forward state in the MESIF protocol reduces complexity by reducing the amount of communication between cores. This reduction comes from designating a single cache to respond to all read requests for shared data. As with the MESI protocol, the implementation of write-backs necessitates a write buffer, and the bus transactions relevant to buffered blocks require careful handling. Though the inclusion of an additional state does increase design complexity, the accompanying decrease in communication between cores leaves the overall implementation complexity similar to that of the MESI protocol.

MOESI Protocol

MOESI is a five-state cache coherence protocol with the states Modified (M), Owned (O), Exclusive (E), Shared (S) and Invalid (I).

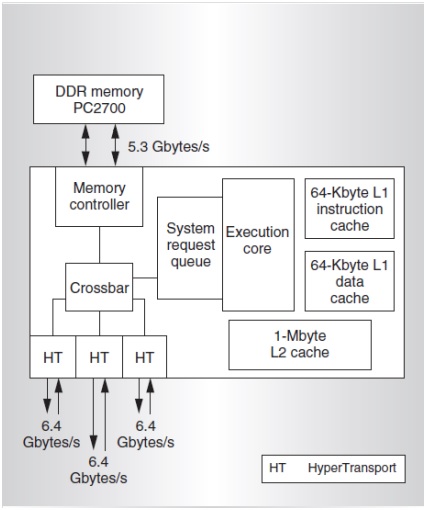

AMD Opteron

Advanced Micro Devices’ Opteron (successor to AMD's Athlon) processor is a 64-bit, x86-based microprocessor supported by large on-chip level-1 (L1) and level-2 (L2) caches. The AMD Opteron implements the MOESI cache coherence protocol.

Opteron has separate L1 data and instruction caches of 64 KB each, amounting to a 128 KB first-level cache. They are 2-way set-associative, linearly indexed, and physically tagged, with a cache line size of 64 bytes. Furthermore, there is a large 1 MB L2 cache, whose data is mutually exclusive with respect to the data in the L1 caches. The L2 cache is 16-way set-associative. The instruction and data caches each have independent L1 and L2 translation look-aside buffers (TLBs). The on-chip memory controller resides in a separate on-chip unit called the Northbridge, which takes care of cache coherence. Other functions of the Northbridge include the processing of I/O direct-mapped access, memory-mapped I/O, peripheral component interconnect (PCI) configuration requests and transaction ordering. Opteron employs a broadcast cache coherence protocol to maintain memory coherence, as opposed to a directory-based scheme. This results in lower serialization delays and also allows the processor caches to be snooped concurrently with DRAM access.

Implementation Complexities

When the MOESI protocol is implemented on a real architecture such as the AMD K10 series, modifications and optimizations are made to the protocol to allow more efficient operation for specific program patterns. For example, the AMD Phenom family of microprocessors (Family 0x10), which is AMD’s first generation to incorporate 4 distinct cores on a single die and the first to have a cache shared by all the cores, uses the MOESI protocol with some optimization techniques incorporated.

One such optimization targets a small subset of compute problems that behave like producer and consumer programs. In such a problem, a thread running on one core produces data, which is consumed by a thread running on a separate core. With such programs, it is desirable for the two cores to communicate through the shared cache, avoiding round trips to and from main memory. However, the MOESI protocol that the AMD Phenom cache uses for cache coherence can itself limit bandwidth. Keeping the cache line in the ‘M’ state for such computing problems gives better performance, as explained below.

When the producer thread writes a new entry, it allocates cache lines in the modified (M) state. Eventually, these M-marked cache lines will start to fill the L3 cache. When the consumer reads a cache line, the MOESI protocol changes the state of the cache line to owned (O) in the L3 cache and pulls down a shared (S) copy for the consumer's own use. The producer thread then circles the ring buffer and arrives back at the same cache line it had previously written. However, when the producer attempts to write new data to the owned (marked ‘O’) cache line, it finds that it cannot, since a cache line marked ‘O’ by the previous consumer read does not have sufficient permission for a write request in the MOESI protocol. To maintain coherence, the memory controller must initiate probes in the other caches (to handle any other S copies that may exist). This slows down the process.

Thus, it is preferable to keep the cache line in the ‘M’ state in the L3 cache. In such a situation, when the producer comes back around the ring buffer, it finds the previously written cache line still marked ‘M’, to which it is safe to write without coherence concerns. Thus better performance can be achieved by such optimization techniques to standard protocols when implemented in real machines.
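The effect of the line state on a producer/consumer ring buffer can be illustrated with a small counting model. The function `count_probes` and its parameters are hypothetical. It tracks only the L3 state of each ring-buffer slot and counts one probe whenever the producer writes to a line left in the O state; it does not model how the hardware or software actually keeps the line in M.

```python
def count_probes(passes, slots, keep_modified):
    """Count coherence probes over several laps of a ring buffer.

    passes        -- number of times the producer circles the buffer
    slots         -- number of cache lines in the ring buffer
    keep_modified -- if True, a consumer read leaves the line in M;
                     if False, the read demotes the line to O
    """
    l3_state = {}
    probes = 0
    for _ in range(passes):
        for slot in range(slots):
            # Producer write: an O line lacks write permission, so other
            # caches must be probed before the write can proceed.
            if l3_state.get(slot) == "O":
                probes += 1
            l3_state[slot] = "M"
            # Consumer read of the freshly written line.
            if not keep_modified:
                l3_state[slot] = "O"
    return probes


print(count_probes(passes=3, slots=4, keep_modified=False))
print(count_probes(passes=3, slots=4, keep_modified=True))
```

In this model the standard behaviour costs one probe per slot on every lap after the first, while keeping the line in M costs none, which matches the qualitative argument above.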

More information on how this is implemented, along with various other optimization techniques, can be found in the Software Optimization Guide for AMD 10h Processors.

References

<references/>