CSC 456 Fall 2013/1b ra: Difference between revisions


Revision as of 10:45, 24 September 2013

Sector Cache

Sectored caching is an early CPU cache organization whose popularity has risen and fallen over the years. It divides the cache into sectors, allowing faster searching and more efficient use of space than some other cache organizations.

Background

IBM Model 85

Sectored caching was first used on the IBM System/360 Model 85, the earliest commercial system to use a CPU data cache of any kind. Sectored caching allowed for smaller tags, which made searching the cache easier and quicker without requiring excessively long cache lines, and it was easier to build than other cache organizations with the circuit technology of the time.<ref name="Rothman">Rothman, Jeffrey B. and Alan Jay Smith. Sector Cache Design and Performance. http://www.eecs.berkeley.edu/Pubs/TechRpts/1999/CSD-99-1034.pdf</ref> Released in 1968, the Model 85 was one of the first computers to include a CPU data cache, known at the time as "buffer storage", as a standard feature. As with most caches, the Model 85 attempted to fetch data from the cache before fetching from main memory. This allowed for much faster data fetching and storage, reducing the average system storage cycle time to about one third or one quarter of what it would be using main memory alone.<ref name="IBM">IBM. IBM System/360 Model 85 Functional Characteristics. http://www.bitsavers.org/pdf/ibm/360/funcChar/A22-6916-1_360-85_funcChar_Jun68.pdf</ref> This new type of cache organization represented a significant departure from the storage organizations used previously in the IBM System/360 line of computers. In testing during the design of the Model 85, the cache was found to run at 81% of the maximum ideal efficiency calculated by IBM's designers.<ref name="Liptay">Liptay, J. S. Structural Aspects of the System/360 Model 85, Part II: The Cache. http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5388402&isnumber=5388400</ref> After the release of the Model 85, however, it soon became clear that sectored caching was less effective than set-associative caching for the computer and chip architectures of the time, and it fell out of common use.<ref name="Rothman"/>

Reappearance

In a 1999 article, Jeffrey B. Rothman and Alan Jay Smith revisited sectored caching to determine whether changes in technology since 1968 had made it useful again. They examined whether using sectored caching in multi-level cache architectures would yield any performance gain.

One early cache organization technique was sector cache. It was used on the IBM 360/85, which was one of the earliest commercial CPUs<ref name="rot99">Rothman, Jeffrey B. and Alan Jay Smith. Sector Cache Design and Performance</ref>. Sector cache allowed for smaller tag sizes, which made searching in cache easier and quicker, without requiring cache lines to be excessively long.<ref name="rot99"/> It was also easier to build with the circuit technology of the time.<ref name="rot99"/> In testing during the design of the IBM 360/85, it was found to run at 81% of the maximum ideal efficiency calculated by IBM's designers.<ref name="lip68">Liptay, J. S. Structural Aspects of the System/360 Model 85, Part II: The Cache.</ref> Sector caches were later replaced by set associative caches, which were found to be more efficient.<ref name="rot99"/>

[Old work]

Organization

[New stuff here]

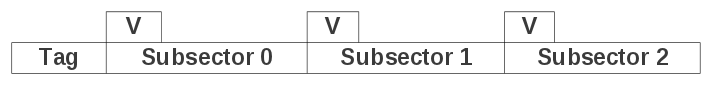

The cache is divided into sectors, which correspond to logical sectors on the main storage device.<ref name="lip68"/> When a sector is needed, it is not loaded into the cache all at once, but in smaller pieces known as subsectors.<ref name="lip68"/> Subsectors are similar to the lines in a direct-mapped cache, and each is loaded into the cache only when needed. This prevents large amounts of data from having to be transferred for every memory reference. Each subsector has a validity bit that indicates whether it is loaded with data or not.<ref name="lip68"/>
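The organization described above can be sketched as a small data structure. This is only an illustration, not the Model 85's actual design: the names `split_address` and `CacheSector` are hypothetical, and the sizes (64-byte subsectors, 16 subsectors per sector) are assumptions chosen for the example.

```python
from dataclasses import dataclass, field

# Assumed sizes, chosen only for illustration.
SUBSECTOR_BYTES = 64
SUBSECTORS_PER_SECTOR = 16
SECTOR_BYTES = SUBSECTOR_BYTES * SUBSECTORS_PER_SECTOR


def split_address(addr):
    """Split a byte address into (sector tag, subsector index, byte offset)."""
    tag = addr // SECTOR_BYTES
    subsector = (addr % SECTOR_BYTES) // SUBSECTOR_BYTES
    offset = addr % SUBSECTOR_BYTES
    return tag, subsector, offset


@dataclass
class CacheSector:
    """One cache sector: a single tag shared by all of its subsectors."""
    tag: int = -1  # -1 marks a cache sector that has not been assigned yet
    # One validity bit per subsector; all subsectors start out unloaded.
    valid: list = field(default_factory=lambda: [False] * SUBSECTORS_PER_SECTOR)


print(split_address(0x1234))  # (4, 8, 52)
```

Because one tag covers a whole sector, the tag store stays small, while the per-subsector validity bits let data be transferred in subsector-sized pieces.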

[Old work]

Implementation

[New stuff here]

Advantages/Disadvantages

[New stuff here]

Miss Handling

[New stuff here]

When a process requests data from a main storage sector that is not in the cache, a cache sector is assigned to that main storage sector.<ref name="lip68"/> The subsector containing the requested data is then loaded into the cache, and its validity bit is set to show that it has been loaded from main storage.<ref name="lip68"/> When data from other subsectors within a loaded sector is requested, the system loads those subsectors into the cache sector and sets their validity bits.<ref name="lip68"/> Sectors are not removed from the cache until the system needs to reclaim the space to process another request.<ref name="lip68"/>
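The miss-handling steps above can be sketched as a small simulation. This is a simplified model under stated assumptions, not a description of the real hardware: the class `SectorCache`, the sizes, and the choice to reclaim the least recently used sector are all assumptions made for the example.

```python
# Assumed sizes, chosen only for illustration.
SUBSECTOR_BYTES = 64
SUBSECTORS_PER_SECTOR = 16
SECTOR_BYTES = SUBSECTOR_BYTES * SUBSECTORS_PER_SECTOR


class SectorCache:
    """Hypothetical sector cache that tracks only tags and validity bits."""

    def __init__(self, num_sectors):
        self.num_sectors = num_sectors
        # Maps sector tag -> list of validity bits. Dict insertion order is
        # used as the recency order (least recently used sector first).
        self.sectors = {}

    def access(self, addr):
        """Return 'hit', 'subsector miss', or 'sector miss' for a byte address."""
        tag = addr // SECTOR_BYTES
        sub = (addr % SECTOR_BYTES) // SUBSECTOR_BYTES
        if tag in self.sectors:
            # Sector is present: pop it so it is re-inserted as most recent.
            valid = self.sectors.pop(tag)
            result = "hit" if valid[sub] else "subsector miss"
        else:
            # Sector is absent: reclaim space only if the cache is full
            # (assumed policy: drop the least recently used sector).
            if len(self.sectors) >= self.num_sectors:
                oldest = next(iter(self.sectors))
                del self.sectors[oldest]
            valid = [False] * SUBSECTORS_PER_SECTOR
            result = "sector miss"
        valid[sub] = True  # the requested subsector is now loaded
        self.sectors[tag] = valid
        return result


cache = SectorCache(num_sectors=2)
print(cache.access(0))     # sector miss
print(cache.access(8))     # hit (same subsector)
print(cache.access(64))    # subsector miss (same sector, new subsector)
print(cache.access(1024))  # sector miss
print(cache.access(2048))  # sector miss (reclaims the sector holding address 0)
print(cache.access(0))     # sector miss
```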

[Old work]

Architectures

[New stuff here]

Parameters

[New stuff here]

Evolution

[New stuff here]

Victim Cache

Background

Victim caches were first proposed by Norman P. Jouppi in 1990. Victim caching places a small, fully-associative cache between a direct-mapped L1 cache and the next level of memory. Lines evicted from the L1 cache get a “second chance” by being loaded into the victim cache, which decreases the overall conflict miss rate (Jouppi).

Direct-mapped caches benefit especially from victim caching because of their high miss rates. Victim caching lets designers keep the speed of a direct-mapped cache while reducing its miss rate below that found in set-associative caches (Jouppi).

[Old work]


Victim caches were first proposed by Norman P. Jouppi in 1990 at the 17th Annual International Symposium on Computer Architecture. At the time, processor performance was increasing drastically, but memory hierarchies had not advanced at the same pace. Jouppi observed that if this trend continued, newer machines could easily lose half of their potential performance to the shortcomings of the memory hierarchy of the time. This is illustrated in the figure below, taken from Jouppi's 1990 paper, which shows how the cost of cache misses was increasing dramatically.

Organization

Victim Cache

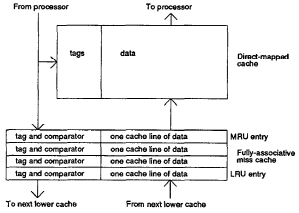

To use a victim cache, a system needs a direct-mapped cache. Victim caching is a technique for optimizing the use of a miss cache (Jouppi). A miss cache is a small on-chip cache between the first-level cache and the access point to the second-level cache (Jouppi). The miss cache typically stores two to five lines. When a miss occurs, the data is loaded not only into the direct-mapped cache but also into the miss cache, where it replaces the least recently used item (Jouppi). While this reduces the number of conflict misses, it uses the small cache poorly, because many of its lines duplicate lines already held in the direct-mapped cache. The organization of the miss cache is shown below in Figure 1.2, taken from Jouppi's 1990 paper.
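The miss cache behaviour described above can be sketched as follows. This is a simplified model, not Jouppi's hardware design: the class `MissCachedDM` and its parameters are hypothetical, addresses are treated as whole line numbers, and reloading the direct-mapped cache on a miss cache hit is an assumption about how the lookup completes.

```python
from collections import OrderedDict


class MissCachedDM:
    """Hypothetical direct-mapped cache backed by a small LRU miss cache."""

    def __init__(self, num_lines, miss_entries):
        self.num_lines = num_lines
        self.lines = [None] * num_lines  # line address stored at each index
        self.miss_cache = OrderedDict()  # line address -> True, LRU first
        self.miss_entries = miss_entries

    def access(self, line_addr):
        index = line_addr % self.num_lines
        if self.lines[index] == line_addr:
            return "hit"
        if line_addr in self.miss_cache:
            # Assumed behaviour: reload the direct-mapped cache from the
            # miss cache and mark the entry as most recently used.
            self.miss_cache.move_to_end(line_addr)
            self.lines[index] = line_addr
            return "miss cache hit"
        # Full miss: the line goes into BOTH caches, replacing the least
        # recently used miss cache entry when the miss cache is full.
        self.lines[index] = line_addr
        if len(self.miss_cache) >= self.miss_entries:
            self.miss_cache.popitem(last=False)
        self.miss_cache[line_addr] = True
        return "miss"


cache = MissCachedDM(num_lines=4, miss_entries=2)
for addr in (0, 4, 0, 4):  # lines 0 and 4 conflict at index 0
    print(addr, cache.access(addr))
# After these accesses, the line at index 0 is also held in the miss cache,
# which is the duplication the paragraph above describes.
```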

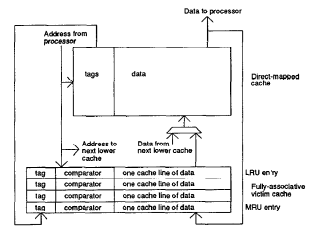

Jouppi improved on the miss cache by using a victim caching organization instead. The victim cache uses the same hardware as the miss cache but a different replacement algorithm, which ensures that no line is held in both the direct-mapped cache and the victim cache. When a system using victim caching encounters a miss, the new line is not placed in both caches as it is with a miss cache. It is placed in the direct-mapped cache only, and the line it displaces from the direct-mapped cache replaces the least recently used line in the victim cache (Jouppi). When a later access misses in the direct-mapped cache but hits in the victim cache, the line in the victim cache is swapped with the conflicting line in the direct-mapped cache (Jouppi). Because the miss cache duplicates lines already held in the direct-mapped cache, while the victim cache holds only evicted lines, this technique is always more efficient than the miss cache. The figure below, from Jouppi's 1990 paper, details the organization of the victim cache.
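The victim cache replacement scheme described above can be sketched as a small simulation. This is a minimal model under stated assumptions rather than Jouppi's implementation: the class `VictimCachedDM` and its parameters are hypothetical, addresses are treated as whole line numbers, and the victim cache is assumed to replace its least recently used entry.

```python
from collections import OrderedDict


class VictimCachedDM:
    """Hypothetical direct-mapped cache backed by a small victim cache."""

    def __init__(self, num_lines, victim_entries):
        self.num_lines = num_lines
        self.lines = [None] * num_lines  # line address stored at each index
        self.victims = OrderedDict()     # line address -> True, LRU first
        self.victim_entries = victim_entries

    def _add_victim(self, line_addr):
        """Store an evicted line, dropping the oldest victim if full."""
        if line_addr is None:
            return  # nothing was evicted from an empty slot
        if len(self.victims) >= self.victim_entries:
            self.victims.popitem(last=False)
        self.victims[line_addr] = True

    def access(self, line_addr):
        index = line_addr % self.num_lines
        evicted = self.lines[index]
        if evicted == line_addr:
            return "hit"
        if line_addr in self.victims:
            # Swap: the requested line moves up into the direct-mapped
            # cache and the line it displaces becomes the newest victim.
            del self.victims[line_addr]
            self.lines[index] = line_addr
            self._add_victim(evicted)
            return "victim hit"
        # Full miss: the new line goes into the direct-mapped cache ONLY;
        # the displaced line goes into the victim cache.
        self.lines[index] = line_addr
        self._add_victim(evicted)
        return "miss"


cache = VictimCachedDM(num_lines=4, victim_entries=2)
for addr in (0, 4, 0, 4):  # lines 0 and 4 conflict at index 0
    print(addr, cache.access(addr))
```

In this sketch no line address ever appears in both `lines` and `victims`, which reflects the lack of duplication that distinguishes a victim cache from a miss cache.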

Selective Victim Cache

Implementation

Advantages/Disadvantages

Architectures

Victim caching can be found in AMD's Opteron processor series produced specifically for servers and workstations. <ref>http://en.wikipedia.org/wiki/Opteron</ref> Opteron processors use a victim cache that is capable of holding eight victim blocks. <ref> Hennessy, John L., and David A. Patterson. Computer Architecture: A Quantitative Approach. Elsevier, Inc., 2012, p. B-14.</ref>

Parameters

Evolution

References

<references />

Topic 1b: Sectored Caches & Victim Caches

Sectored Cache:

Links: Wikipedia page saying IBM 360/85 --Taolande 11:31, 3 September 2013 (EDT)