CSC/ECE 506 Fall 2007/wiki3 1 ncdt

Revision as of 03:44, 25 October 2007

Introduction

In a multiprocessor system with a snoopy coherence protocol, overall cache performance is determined by two factors:

- Uniprocessor cache miss traffic

- The traffic caused by the communication, which generates invalidations and subsequent cache misses.

Uniprocessor misses are categorized into the three C’s (capacity, compulsory, and conflict misses; see Computer Architecture: A Quantitative Approach, Appendix C). Misses arising from interprocessor communication, however, are called coherence misses and can be divided into two types:

- True sharing misses: These misses are caused by the communication of data through the cache coherence mechanism. For example, in an invalidation-based protocol, the first write by a processor to a shared cache block causes an invalidation to establish ownership of that block and writes a particular word in that block. Subsequently, when another processor attempts to read the same modified word in the same cache block, a miss occurs and the corresponding block is transferred.

- False sharing misses: These misses arise from the use of an invalidation-based coherence protocol with a single valid bit per cache block. False sharing occurs when a block is invalidated, and a subsequent reference to it misses, because some word in the block other than the one being read was written by another processor.

The effect of coherence misses becomes significant for tightly coupled applications that share a significant amount of data, such as commercial workloads.

Effect of false sharing

If one processor writes a word in a particular block, the shared copy of that block in another processor's cache is invalidated. If the second processor later reads that same word, the reference is a true sharing reference and would cause a miss independent of the block size. If, however, the word being written and the word being read are different, the invalidation does not cause a new value to be communicated but only causes an extra cache miss; this is a false sharing miss. As the cache block size increases, false sharing misses tend to increase as well. Although large blocks exploit locality and can decrease the effective memory access time, a processor usually needs only part of a block rather than every word in it. In general, previous work has shown that the miss rate of the data cache changes less predictably with increasing block size in multiprocessors than in uniprocessors.
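The distinction above can be illustrated with a small trace-driven sketch. It is not taken from any of the cited papers: the function name `classify_misses`, the trace format, and the assumption of infinite caches (so that there are no capacity or conflict misses) are all hypothetical choices made for illustration. A miss on a previously held block is counted as true sharing only if the word being accessed was written by another processor since the block was invalidated; this is a simplification of the classifications used in the literature.

```python
from collections import defaultdict

def classify_misses(trace, words_per_block):
    """Classify misses in a trace of (processor, op, word_address) tuples.

    Assumptions (illustrative only): write-invalidate protocol, infinite
    caches, and a miss is judged solely by the word that triggers it.
    """
    valid = defaultdict(set)   # processor -> blocks currently valid in its cache
    seen = defaultdict(set)    # processor -> blocks it has ever cached
    stale = defaultdict(set)   # (processor, block) -> words others wrote since invalidation
    counts = {"hit": 0, "cold": 0, "true": 0, "false": 0}

    for proc, op, word in trace:
        block = word // words_per_block
        if block in valid[proc]:
            counts["hit"] += 1
        else:
            if block not in seen[proc]:
                counts["cold"] += 1
            elif word in stale[(proc, block)]:
                counts["true"] += 1    # the missing word carries a new value
            else:
                counts["false"] += 1   # only a neighbouring word was written
            valid[proc].add(block)
            seen[proc].add(block)
            stale[(proc, block)] = set()
        if op == "W":
            # Invalidate every other copy and remember which word changed.
            for other in list(seen):
                if other != proc and block in seen[other]:
                    valid[other].discard(block)
                    stale[(other, block)].add(word)
    return counts

# Two processors: P0 mostly uses word 0, P1 mostly uses word 1.
trace = [(0, "R", 1), (1, "W", 1), (0, "R", 1),
         (0, "W", 0), (1, "W", 1), (0, "W", 0)]
small = classify_misses(trace, words_per_block=1)
large = classify_misses(trace, words_per_block=2)
print(small)
print(large)
```

With one word per block the two words never share a block, so the only coherence miss is the true sharing miss on word 1. With two words per block the same true sharing miss remains, and the alternating writes to different words add false sharing misses.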

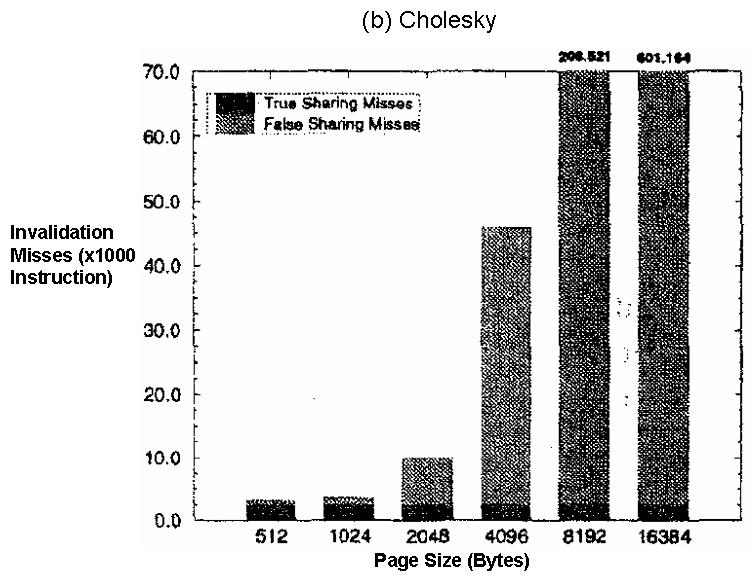

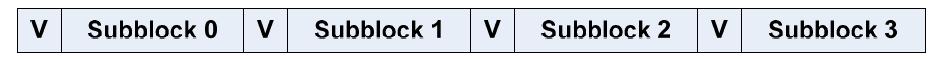

Figure 1 and Figure 2 (source) depict the impact of false sharing for different parallel applications using 32 processors:

Figure 1: The number of false sharing misses and true sharing misses during execution of the parallel application Barnes. The Barnes-Hut simulation is an algorithm for performing an N-body simulation in O(n log n) time.

Figure 2: The number of false sharing misses and true sharing misses during execution of the parallel application Cholesky (Cholesky decomposition).

False sharing misses not only increase memory access latency but also generate traffic between processors and memory. As the block size increases, each miss produces a higher volume of traffic. Moreover, between two consecutive misses on a given block, a processor usually references only a very small number of distinct words in that block.

Techniques to reduce false sharing misses

Various hardware and software techniques have been proposed to reduce false sharing misses. The following are some of the widely accepted techniques:

I. Data placement optimizations (reference): This method reduces false sharing misses on shared data by improving the spatial locality of that data. The placement of data structures in cache blocks is optimized using local changes that are programmer-transparent and generally applicable. This approach is partly motivated by the fact that cache misses on shared data are often concentrated in small sections of the shared data address space. Therefore, local actions involving relatively few bytes may yield most of the desired effects.

Four optimizations of the data layout have been suggested (reference) to reduce false sharing cache misses in a multiprocessor environment:

- Split Scalar: Place scalar variables that cause false sharing in different blocks. Given a cache block with scalar variables where the increase in misses due to prefetching exceeds 0.5% of the program misses, allocate each of them to an empty cache block.

- Heap Allocate: Allocate shared space from different heap regions according to which processor requests the space. It is common for a slave process to access the shared space that it requests itself. If no action is taken, the space allocated by different processes may share the same cache block and lead to false sharing.

- Expand Record: Expand records in an array (padding with dummy words) to reduce the sharing of a cache block by different records. While successful prefetching may occur within a record or across records, false sharing usually occurs across records, when more than one of them share the same cache block. If the multi-word simulation indicates that there is much false sharing and little gain in prefetching, then consider expansion. If the reverse is true, optimization is not applied.

- Align Record: Choose a layout for arrays of records that minimizes the number of blocks the average record spans. This optimization maximizes prefetching of the rest of the record when one word of a record is accessed, and may also reduce false sharing. This optimization is possible when the number of words in the record and the number of words in the cache block have a greatest common divisor (GCD) larger than one. The array is laid out at a distance from a block boundary equal to 0 or a multiple of the GCD, whichever wastes less space.
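The arithmetic behind Expand Record and Align Record can be sketched as follows. The helper names (`padded_record_words`, `avg_blocks_spanned`, `best_aligned_offset`) are hypothetical, all sizes are in words, and rounding a record up to a whole number of blocks is only one possible padding policy, assumed here for simplicity.

```python
from math import gcd

def padded_record_words(record_words, block_words):
    """Expand Record (assumed policy): pad each record with dummy words
    up to the next multiple of the block size, so no two records share a block."""
    blocks = -(-record_words // block_words)   # ceiling division
    return blocks * block_words

def avg_blocks_spanned(record_words, block_words, offset, n_records):
    """Average number of cache blocks touched by one record when the array
    starts `offset` words past a block boundary."""
    total = 0
    for i in range(n_records):
        start = offset + i * record_words
        first = start // block_words
        last = (start + record_words - 1) // block_words
        total += last - first + 1
    return total / n_records

def best_aligned_offset(record_words, block_words, n_records):
    """Align Record: try offset 0 and multiples of the GCD, and keep the one
    with the fewest blocks per record (ties go to the smaller offset)."""
    g = gcd(record_words, block_words)
    candidates = range(0, block_words, g)
    return min(candidates,
               key=lambda d: avg_blocks_spanned(record_words, block_words, d, n_records))

# Example: 6-word records in 8-word blocks (GCD = 2).
print(padded_record_words(6, 8))        # padded size in words
print(avg_blocks_spanned(6, 8, 0, 4))   # array starts on a block boundary
print(avg_blocks_spanned(6, 8, 1, 4))   # array starts one word off
print(best_aligned_offset(6, 8, 4))
```

In this example, starting the array at an offset that is a multiple of the GCD lets half of the records fit in a single block, whereas an odd offset leaves most records straddling two blocks.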

II. Dynamic cache sub-block design to reduce false sharing (reference): In this method, false sharing is minimized by dynamically locating the point of false reference. Sharing traffic is minimized by maintaining coherence on smaller blocks (sub-blocks) that are truly shared, whereas larger blocks are used as the basic units of transfer.

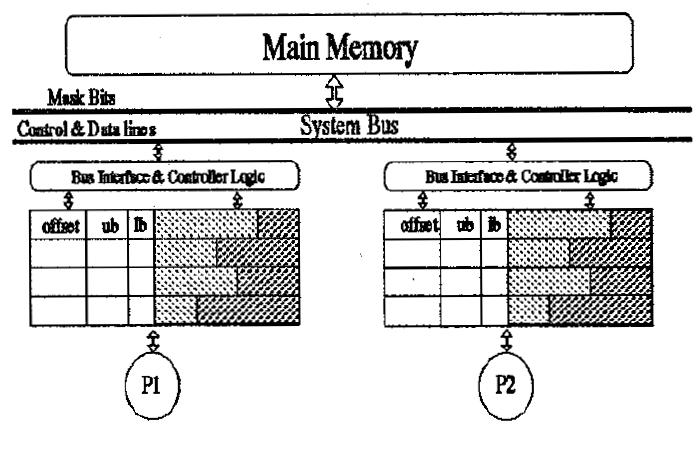

The sub-block sizes are determined dynamically, with the objective of maximizing the size of a truly shared sub-block. The size settles to the value most suitable for the application being run, which also minimizes the invalidation misses caused by false partitioning of truly shared blocks. Larger blocks exploit locality, while coherence is maintained on sub-blocks, which minimizes bus traffic due to sharing misses. Figure 3 shows the cache organization that supports dynamic cache sub-block design.

Figure 3: New architecture for the dynamic sub-block coherence scheme

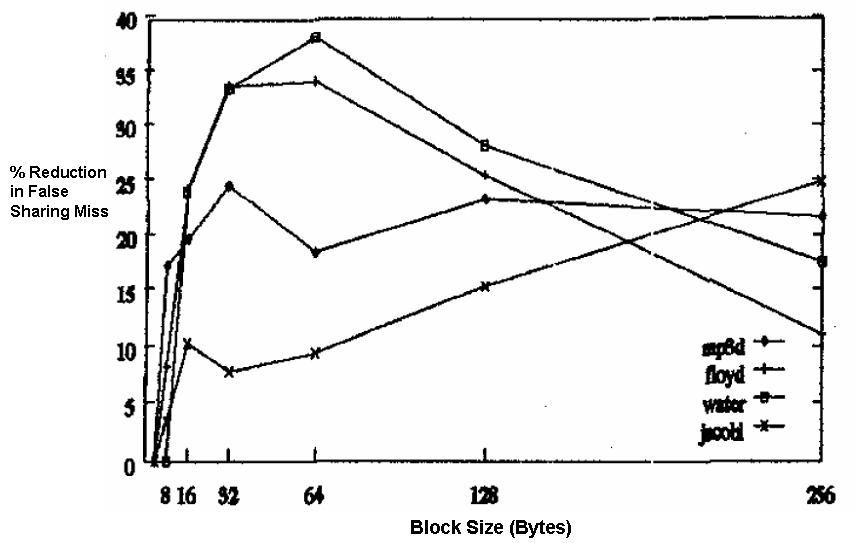

The simulation results indicate that the dynamic sub-block (DSB) protocol reduces false sharing misses by 20 to 90 percent relative to the fixed sub-block (FSB) scheme (Figure 4).

Figure 4: The percentage reduction in false sharing miss rate in the DSB scheme compared to the FSB scheme for MP3D, Floyd, Water and Jacobi, as a function of block size.

More about the benchmarks used:

- MP3D

- Floyd’s algorithm finds the least-expensive paths between all pairs of vertices in a graph. It does this by operating on a matrix representing the costs of edges between vertices.

- Water

- The Jacobi method is an algorithm in linear algebra for solving a system of linear equations in which the largest absolute value in each row and column is the diagonal element.

Techniques to reduce true sharing misses

True sharing requires the processors involved to explicitly synchronize with each other to ensure program correctness. A computation is said to have temporal locality if it re-uses much of the data it has been accessing; programs with high temporal locality tend to have less true sharing. The amount of true sharing in a program is a critical factor for performance on multiprocessors. High levels of true sharing and synchronization can easily overwhelm the advantage of parallelism.

True sharing misses are, in fact, required for the correct execution of the program. Techniques for true sharing therefore mostly aim to reduce the latency and bus traffic caused by these misses.

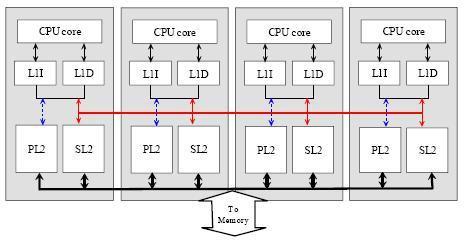

- One proposed technique is to give each processor a private L1 cache and to share an L2 cache among all the processors (also called the SPS2 system). Figure 5 shows one possible implementation of a private L1 cache and shared L2 cache architecture.

Figure 5: A possible SPS2 cache architecture to reduce true sharing latency.

The shared L2 is a multi-banked, multi-ported cache that all the processors can access directly over the bus. Data in the private L1 and L2 caches are exclusive, but the private L1 and the shared L2 can be inclusive. Unlike a unified L2 cache structure, the SPS2 system, with its split private and shared L2 caches, can be designed flexibly and individually according to demand. SPS2 reduces access latency and contention between shared data and private data. L2 hit latency is low because most private data should be found in the local L2, while shared data is placed in the shared L2, which provides high storage capacity to help reduce off-chip accesses.

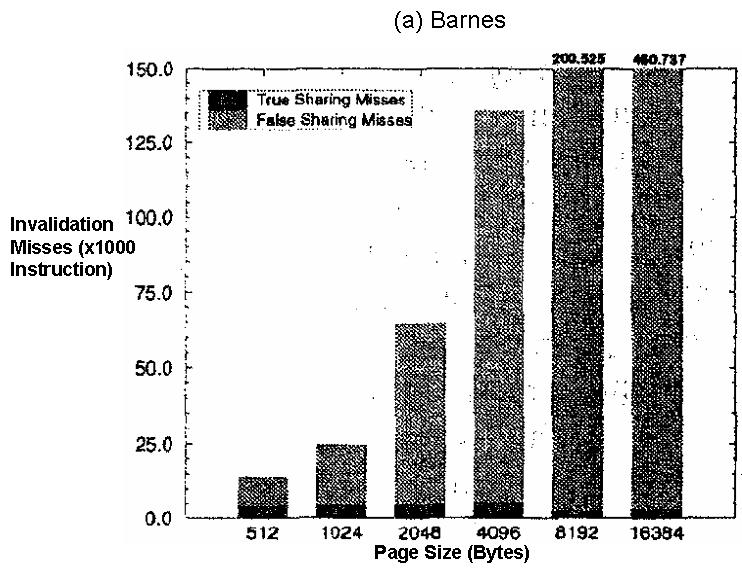

- Sub-blocking in the cache can also help reduce the bus communication caused by true sharing misses. Figure 6 shows sub-blocking in a cache.

Figure 6: Sub-blocking in a cache

A valid bit is associated with each sub-block in the cache line. A sub-block may be as small as the single word that a processor reads or writes. When a true sharing miss occurs, only the specific word written by the processor needs to be sent across the bus, not the whole cache line.
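A minimal sketch of per-sub-block valid bits, under stated assumptions, is shown below. The class `SubBlockedLine` and its methods are hypothetical and model only one cache line in one processor's cache: a remote write clears the valid bit of the sub-block it touches, and a local read reports how many words must cross the bus.

```python
class SubBlockedLine:
    """One cache line with a valid bit per sub-block (illustrative model)."""

    def __init__(self, words_per_line, words_per_sub_block=1):
        if words_per_line % words_per_sub_block != 0:
            raise ValueError("line size must be a multiple of the sub-block size")
        self.words_per_sub_block = words_per_sub_block
        # All sub-blocks start out valid, as if the line had just been fetched.
        self.valid = [True] * (words_per_line // words_per_sub_block)

    def remote_write(self, word):
        """Another processor wrote `word`: invalidate only its sub-block."""
        self.valid[word // self.words_per_sub_block] = False

    def read(self, word):
        """Local read; returns the number of words transferred over the bus."""
        index = word // self.words_per_sub_block
        if self.valid[index]:
            return 0                      # hit: nothing crosses the bus
        self.valid[index] = True          # refetch just this sub-block
        return self.words_per_sub_block

# Word-sized sub-blocks: a remote write to word 3 leaves word 2 readable.
fine = SubBlockedLine(words_per_line=8, words_per_sub_block=1)
fine.remote_write(3)
print(fine.read(2), fine.read(3))

# A single valid bit per line: the same write invalidates all 8 words.
coarse = SubBlockedLine(words_per_line=8, words_per_sub_block=8)
coarse.remote_write(3)
print(coarse.read(3))
```

In this model the true sharing miss on word 3 still occurs with word-sized sub-blocks, but it moves one word instead of eight, and the read of word 2 does not miss at all.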

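Bibliography

3. Jaswinder Pal Singh, Parallel Computer Architecture lecture notes. http://www.cs.princeton.edu/~jps/

4. S. J. Eggers and R. H. Katz, “The effect of sharing on the cache and bus performance of parallel programs,” in Proc. 3rd Int. Conf. Architectural Support for Programming Lang. and Operating Syst., Apr. 1989, pp. 257-270. http://www.eecs.berkeley.edu/Pubs/TechRpts/1988/6056.html

5. Rothman et al., Analysis of shared memory misses and reference patterns. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=878285

6. Tor E. Jeremiassen and Susan J. Eggers, Reducing False Sharing on Shared Memory Multiprocessors through Compile Time Data Transformations. http://citeseer.ist.psu.edu/160021.html

7. J. Lee and Y. Cho, “An Effective Shared Memory Allocator for Reducing False Sharing in NUMA Multiprocessors”, in Algorithms and Architectures for Parallel Processing, 1996. parasol.tamu.edu/~rwerger/Courses/689/spring2002/day-3-ParMemAlloc/papers/lee96effective.pdf

8. Josep Torrellas, Monica S. Lam, and John L. Hennessy, Shared Data Placement Optimizations to Reduce Multiprocessor Cache Miss Rates. In Proceedings of the 1990 International Conference on Parallel Processing, volume II (Software), pages 266-270.

9. Murali Kadiyala and Laxmi N. Bhuyan, “A Dynamic Cache sub-block Design to Reduce False Sharing”, IEEE International Conference on Computer Design, 1995. http://citeseer.ist.psu.edu/kadiyala95dynamic.html

10. Xuemei Zhao, Karl Sammut, Fangpo He, and Shaowen Qin, Split Private and Shared L2 Cache Architecture for Snooping-based CMP. www.virtutech.com/about/research/pdf/zhao-ICIS-L2cache.pdf

11. http://suif.stanford.edu/papers/anderson95/node2.html

12. False sharing in threaded programming environment and potential solutions. http://www.intel.com/cd/ids/developer/asmo-na/eng/43813.htm

13. HP article. http://docs.hp.com/en/B3909-90003/ch13s02.html

14. Effect of false sharing. http://www.usenix.org/publications/library/proceedings/als00/2000papers/papers/full_papers/thomas/thomas_html/node10.html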