|

|


| '''Prefetching and Memory Consistency Models''' | | ='''Prefetching and Memory Consistency Models'''= |

|

| |

|

| == '''Overview''' ==

| |

| This wiki article covers two topics: sequential prefetching and memory consistency models. It first describes prefetching and explains its main variants, such as fixed and adaptive sequential prefetching, in detail. It then covers memory consistency models, including sequential consistency and relaxed consistency, and illustrates them with examples and commentary from authors and researchers.

| |

|

| |

|

| = '''Prefetching''' = | | == '''Cache Misses'''== |

| | Cache misses can be classified into four categories: conflict, compulsory, capacity [3], and coherence. <b>Conflict misses</b> are misses that would not occur if the cache were fully associative with LRU replacement. <b>Compulsory misses</b> are misses required in any cache organization because they are the first references to an instruction or piece of data. <b>Capacity misses</b> occur when the cache is too small to hold data between references. <b>Coherence misses</b> are misses that result from invalidations issued to preserve multiprocessor cache consistency. The number of compulsory and capacity misses can be reduced by prefetching, for example by increasing the cache line size or by prefetching blocks ahead of time. |
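The classification above can be illustrated with a small trace-driven sketch. It is only an illustration under stated assumptions: the function name `classify_misses`, the direct-mapped organization, and the modulo index function are hypothetical choices, not taken from the cited work. Coherence misses are left out because the trace models a single processor.

```python
from collections import OrderedDict

def classify_misses(block_trace, num_lines):
    """Classify the misses of a direct-mapped cache with num_lines lines.

    A miss is 'compulsory' on the first reference to a block, 'conflict' if a
    fully-associative LRU cache of the same size would have hit, and
    'capacity' otherwise. (Illustrative model; names are assumptions.)
    """
    direct = {}             # line index -> block currently stored there
    fully = OrderedDict()   # reference model: fully-associative LRU cache
    seen = set()            # blocks referenced at least once
    counts = {"hit": 0, "compulsory": 0, "capacity": 0, "conflict": 0}

    for block in block_trace:
        # Update the fully-associative LRU reference model.
        fa_hit = block in fully
        if fa_hit:
            fully.move_to_end(block)
        else:
            if len(fully) >= num_lines:
                fully.popitem(last=False)   # evict the least recently used
            fully[block] = True

        # Look up the direct-mapped cache being classified.
        index = block % num_lines
        if direct.get(index) == block:
            counts["hit"] += 1
        elif block not in seen:
            counts["compulsory"] += 1
        elif fa_hit:
            counts["conflict"] += 1
        else:
            counts["capacity"] += 1

        direct[index] = block
        seen.add(block)
    return counts
```

For example, with four lines the trace `[0, 4, 0, 4]` maps both blocks to the same line, so the two re-references are conflict misses even though the cache is mostly empty.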

| Sequential prefetching is a simple hardware-controlled prefetching technique that automatically prefetches the consecutive blocks following the block that misses in the cache, thus exploiting spatial locality. In its simplest form, the number of prefetched blocks on each miss is fixed throughout the execution<ref>http://129.16.20.23/~pers/pub/j5.pdf</ref>.

| |

| | |

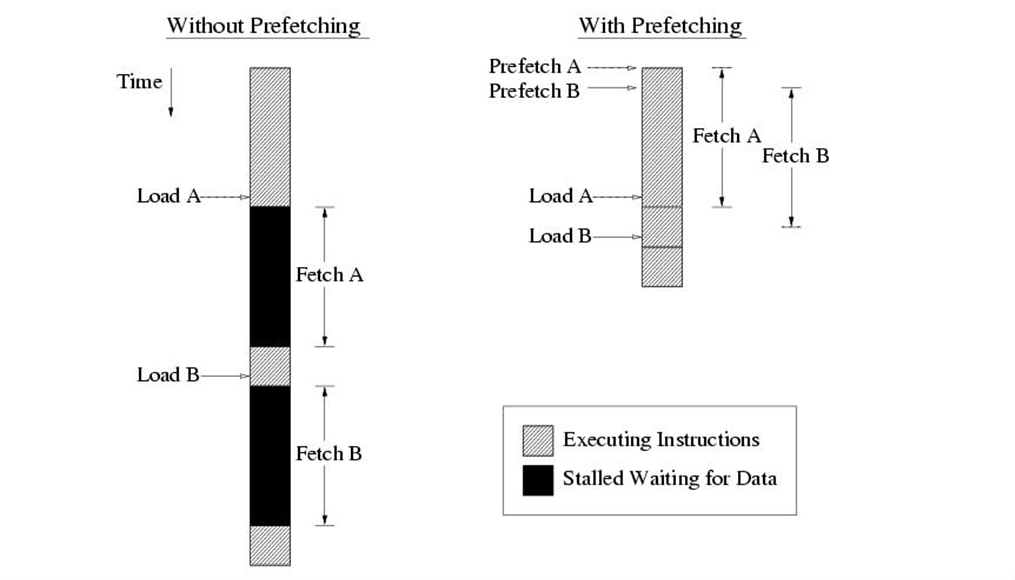

| Prefetching is a common technique to reduce the read miss penalty. Prefetching relies on predicting which blocks currently missing in the cache will be read in the future and on bringing these blocks into the cache prior to the reference triggering the miss. Prefetching approaches proposed in the literature are software or hardware based.

| |

| | |

| Software-controlled prefetching schemes rely on the programmer or compiler to insert prefetch instructions ahead of the instructions that would trigger a miss. In addition, both the processor and the memory system must support prefetch instructions, which can increase the code size and the run-time overhead. By contrast, hardware-controlled prefetching relieves the programmer and compiler of the burden of deciding what and when to prefetch. Usually, these schemes take advantage of the regularity of data accesses in scientific computations by dynamically detecting access strides.

| |

| | |

| There are two basic sequential prefetching techniques:

| |

| | |

| 1. Fixed sequential prefetching

| |

| | |

| 2. Adaptive sequential prefetching

| |

| | |

| These are discussed in more detail in the following sections, along with other prefetching models:

| |

| | |

| == Simulated processing Node Architecture ==

| |

| According to Fig. 2.1, the processing node consists of a processor, a first-level cache (FLC), a second-level cache (SLC), a first- and second-level write buffer (FLWB and SLWB), a local bus, a network interface controller, and a memory module. The FLC is a direct-mapped write-through cache with no allocation of blocks on write misses and is blocking on read misses. Writes and read miss requests are buffered in the FLWB. The second-level cache (SLC) is a direct-mapped write-back cache. For both prefetching techniques, we only prefetch into the SLC. In addition, a second-level write buffer (SLWB) keeps track of outstanding requests (SLC read miss, prefetch, and write requests). No more than one request to the same block is allowed to be issued to the system; others are just kept in the SLWB while waiting for the pending request to that block to complete. Moreover, a read miss request may bypass write requests if they are for different blocks.

| |

| [[File:Procenv.png|thumb|center|upright|350px|Figure 2.1. Processor environment and simulated architecture]]

| |

| | |

| ==Fixed Sequential Prefetching<ref>http://www.springerlink.com/content/lu0755310187318n/</ref>==

| |

| By fixed sequential prefetching we mean that K consecutive blocks are prefetched into the SLC on a reference to a block, i.e., blocks n + 1 ... n + K are prefetched upon a reference to block n if they are not present in the cache. Sequential prefetching has been extensively studied in the context of uniprocessors but, to our knowledge, has never been considered for general applications on multiprocessors. Although many sequential strategies have been proposed for uniprocessors, we have restricted ourselves to prefetching on a miss in the SLC. When a reference misses in the SLC, the miss request is sent to memory, and the cache is searched for the K consecutive blocks directly following the missing block in the address space. Those of the K consecutive blocks that are not present in the SLC and have no pending requests in the SLWB are prefetched. We refer to K as the degree of prefetching.

| |

| [[File:untitled1.png|thumb|center|upright|350px|Figure 2.2. The fixed sequential prefetching mechanism]]

| |

| | |

| Fig. 2.2 shows the mechanism of the fixed sequential prefetching scheme. As a cache lookup is made for block address n, the next block address (n + 1) is calculated. On a read miss, a read request is issued to the memory system and is kept in the SLWB. In the next cache cycle, the calculated address (n + 1) is directed to the cache, and a cache lookup is made. If the block is not present in the cache, a prefetch request is issued and is kept in the SLWB. During that time, the subsequent block address (n + 2) is calculated. The number of iterations is determined by the degree of prefetching. The processor is blocked only during the time it takes to handle the first read miss. Since the prefetch requests are issued one at a time and are pipelined in the memory system, they can be overlapped with the original read request. Besides the simple extensions in the SLC to incorporate fixed sequential prefetching, the memory system must be able to handle three new network commands: a prefetch request and two reply messages denoted PreData and PreNeg. Whereas PreData carries the prefetched block, PreNeg tells the cache that the prefetch request cannot be satisfied because the memory copy is in a transient state, i.e., some other cache is reading or writing to it.
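The block-selection step of fixed sequential prefetching can be sketched as follows. This is a minimal illustration, not the simulated hardware: the function name and its parameters (`cached_blocks` for the SLC contents, `pending_blocks` for requests outstanding in the SLWB) are hypothetical, and the one-lookup-per-cycle pipelining is reduced to a simple loop.

```python
def fixed_sequential_prefetch(miss_block, cached_blocks, pending_blocks, degree):
    """Return the block addresses to prefetch after a read miss on miss_block.

    Candidates are the `degree` (K) blocks that directly follow the missing
    block. A candidate is skipped if it is already in the cache or already
    has a pending request (assumed to be tracked by the SLWB).
    """
    to_prefetch = []
    for candidate in range(miss_block + 1, miss_block + degree + 1):
        if candidate in cached_blocks or candidate in pending_blocks:
            continue   # already present or already requested
        to_prefetch.append(candidate)
    return to_prefetch
```

With a degree of 4, a miss on block 10, block 11 already cached, and block 13 already pending, the sketch selects blocks 12 and 14 for prefetching.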

| |

| | |

| ==Adaptive Sequential Prefetching<ref>http://web.cecs.pdx.edu/~walpole/papers/mmcn1998b.pdf</ref>==

| |

| The mechanism behind the adaptive scheme is basically the same as that of fixed sequential prefetching. For example, prefetching is activated by a read miss and blocks are prefetched into the SLC. In contrast to fixed sequential prefetching, however, the degree of prefetching is not fixed; rather, it is controlled by a register, the LookaheadCounter. The adaptive sequential prefetching scheme adjusts the degree of prefetching (the value of the LookaheadCounter) dynamically by counting the useful prefetches, i.e., prefetched blocks that are actually referenced during their lifetime in the cache. To explain how this is achieved, we first focus on how the algorithm measures the prefetch efficiency and then on how the LookaheadCounter is adjusted to a certain prefetch efficiency. The mechanisms needed to achieve these tasks (two bits per cache line and three counters per cache) appear in Table 1.

| |

| | |

{|class="wikitable"
|+ Table 1
! Mechanism !! Purpose
|-
|PrefetchBit (per cache line)
|Used to detect useful prefetches (needed when prefetching is turned on)
|-
|ZeroBit (per cache line)
|Used to detect when a prefetch would have been useful (needed when prefetching is turned off)
|-
|LookaheadCounter (per cache)
|The current degree of prefetching
|-
|PrefetchCounter (per cache)
|Counts the number of prefetches that have been returned after each read miss
|-
|UsefulCounter (per cache)
|Counts the number of useful prefetches
|}

| |

| Conceptually, the algorithm measures the prefetch efficiency by counting the fraction of prefetched blocks that are referenced by the processors. If this fraction exceeds a preset threshold, the degree of prefetching is increased and, if it is below another preset threshold, the degree of prefetching is decreased.

| |

| | |

| The basic mechanisms used to measure the prefetch efficiency consist of two counters (the PrefetchCounter and the UsefulCounter) and a PrefetchBit per cache line, all of which are cleared at the start. The fraction of useful prefetches is the ratio of the UsefulCounter to the PrefetchCounter, established as follows. The number of prefetched blocks is counted by incrementing the PrefetchCounter whenever a prefetch acknowledgment is received from the memory system, regardless of whether the memory system accepted the prefetch (PreData) or rejected it (PreNeg), e.g., because the memory block was in a transient state, neither clean nor dirty. To count the number of prefetched blocks that are referenced, the PrefetchBit of a prefetched block is set; when a block is accessed with its PrefetchBit set, the UsefulCounter is incremented and the PrefetchBit is cleared.

| |

| | |

| Every time the PrefetchCounter reaches its maximum (i.e., it wraps around), the value of the UsefulCounter is compared against two preset thresholds to determine whether the LookaheadCounter, which is initially set to one, should be changed. If the UsefulCounter exceeds the upper threshold, we are in a phase of execution where the program could benefit from a higher degree of prefetching, and therefore the LookaheadCounter is incremented. If the UsefulCounter is lower than the lower threshold, the amount of prefetching is too high and the LookaheadCounter is decremented. Finally, if the UsefulCounter has a value between the two thresholds, the LookaheadCounter is not affected. In all cases, the UsefulCounter is cleared. In our evaluation, we have considered counters modulo 16 (4 bits).
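The counter-based adjustment described above can be sketched as a small controller. This is an illustration only: the class and method names are hypothetical, and the threshold values (`upper=12`, `lower=4`), the upper bound `max_degree`, and the saturating UsefulCounter are assumptions, since the text gives only the counter width (modulo 16).

```python
class AdaptivePrefetchController:
    """Sketch of the LookaheadCounter adjustment (assumed parameter values)."""

    def __init__(self, counter_max=16, upper=12, lower=4, max_degree=8):
        self.counter_max = counter_max   # counters are modulo 16 (4 bits)
        self.upper = upper               # assumed upper threshold
        self.lower = lower               # assumed lower threshold
        self.max_degree = max_degree     # assumed cap, not stated in the text
        self.lookahead = 1               # LookaheadCounter, initially one
        self.prefetch_counter = 0        # PrefetchCounter
        self.useful_counter = 0          # UsefulCounter

    def on_prefetch_ack(self):
        """Call for every prefetch reply, whether PreData or PreNeg."""
        self.prefetch_counter = (self.prefetch_counter + 1) % self.counter_max
        if self.prefetch_counter == 0:   # the counter wrapped around
            self._adjust()

    def on_useful_prefetch(self):
        """Call when a block is accessed with its PrefetchBit set."""
        # Saturate instead of wrapping (an assumption of this sketch).
        self.useful_counter = min(self.useful_counter + 1, self.counter_max - 1)

    def _adjust(self):
        if self.useful_counter > self.upper:
            self.lookahead = min(self.lookahead + 1, self.max_degree)
        elif self.useful_counter < self.lower:
            self.lookahead = max(self.lookahead - 1, 0)
        self.useful_counter = 0          # cleared in all cases
```

With these assumed thresholds, a window of 16 prefetch replies in which 14 prefetched blocks were referenced raises the degree from 1 to 2, while a window with no useful prefetches lowers it by one.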

| |

| | |

| When the LookaheadCounter reaches zero, prefetching is turned off. To turn it back on, we use the following mechanism. When a block is received on a read miss and prefetching is turned off, the ZeroBit in the corresponding SLC block frame, which is initially cleared, is set to indicate that the following block in the address space could have been prefetched, and the PrefetchCounter is incremented. On a read miss, a cache lookup is made for the previous block (by address); if it hits and the ZeroBit is set, the UsefulCounter is incremented and the ZeroBit is cleared. The ZeroBit of a block is also cleared when the block is accessed and the LookaheadCounter is not zero, to keep the number of previously set ZeroBits to a minimum.
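The ZeroBit bookkeeping used while prefetching is turned off can be sketched as below. The class name, the dictionary standing in for the SLC block frames, and the absence of evictions are all simplifying assumptions of this illustration. The wrap-around check that compares the UsefulCounter against the thresholds is not repeated here.

```python
class ZeroBitTracker:
    """Sketch of ZeroBit handling (no evictions modeled; names are assumed)."""

    def __init__(self):
        self.zero_bit = {}          # resident block -> ZeroBit value
        self.prefetch_counter = 0   # PrefetchCounter
        self.useful_counter = 0     # UsefulCounter

    def on_read_miss(self, block, lookahead):
        """Handle a read miss on `block` given the current LookaheadCounter."""
        if lookahead != 0:
            # Prefetching is on: the block is filled with its ZeroBit cleared.
            self.zero_bit[block] = False
            return
        # Prefetching is off: check whether the previous block's ZeroBit
        # shows that a prefetch of this block would have been useful.
        previous = block - 1
        if self.zero_bit.get(previous, False):
            self.useful_counter += 1
            self.zero_bit[previous] = False
        # The returned block marks that its successor could have been prefetched.
        self.zero_bit[block] = True
        self.prefetch_counter += 1

    def on_access(self, block, lookahead):
        """Clear a stale ZeroBit when the block is accessed with prefetching on."""
        if lookahead != 0 and block in self.zero_bit:
            self.zero_bit[block] = False
```

For a sequential miss stream on blocks 5, 6, and 7 with the LookaheadCounter at zero, the sketch counts three would-be prefetches and two useful ones, which is the kind of evidence the scheme uses to turn prefetching back on.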

| |

| | |

| ==Chip Multiprocessing Prefetching (CMP)==

| |

| [[File:PrefetchImp.png|thumb|right|upright|300px|Figure 2.3. Prefetch Implementation]]

| |

| Prefetching the lowest miss address stream in the cache hierarchy has many advantages, particularly in a CMP system. First, in a CMP, the L2 cache is often shared by all processors on the chip. Consequently, prefetching the L2 miss address stream allows the processors to share prefetch history, resulting in larger history tables. Second, prefetching L2 miss addresses reduces contention on the cache ports, which is becoming increasingly important as the number of processors per chip grows. Before a prefetch is sent to the memory subsystem, it must access the L2 directory. Since the L2 miss address stream has the fewest memory references, it generates fewer prefetches and accesses the cache ports less often. Last, prefetching into the L1 is relatively insignificant, since modern out-of-order processors can tolerate most L1 data cache misses with relatively little performance degradation. Prefetching in a CMP is more difficult than in a uniprocessor system. In addition to limited bandwidth and increased latency (as described earlier), cache coherency protocols play an important role in CMP prefetching.

| |

| | |

| ==Disadvantages of Prefetching==

| |

| | |

| 1. Increased complexity and overhead of handling the prefetching algorithms. Performance must improve significantly to counterbalance this overhead and complexity, or the effort is not worthwhile.

| |

| | |

| 2. With multiple cores, prefetch requests can originate from a variety of different cores. This puts additional stress on memory, which must handle not only regular prefetch requests but also prefetches from different sources, greatly increasing the overhead and complexity of the logic.

| |

| | |

| 3. If prefetched data is stored in the data cache, then cache conflicts or cache pollution can become a significant burden.

| |

|

| |

|

| | == '''Prefetching'''== |

| | Prefetching is a common technique to reduce the read miss penalty. Prefetching relies on predicting which blocks currently missing in the cache will be read in the future and on bringing these blocks into the cache prior to the reference triggering the miss. Prefetching approaches proposed in the literature are software or hardware based. |

| | [[File:Prefetching.png]] |

|

| |

|

| =References= | | =References= |

| <references/> | | <references/> |