CSC/ECE 506 Spring 2013/4a aj: Difference between revisions

Revision as of 20:34, 9 February 2013

Introduction<ref>http://en.wikipedia.org/wiki/Supercomputer</ref>

A supercomputer is generally considered to be at the cutting edge of processing capacity (number crunching) and computational speed at the time it is built, but with the pace of development, yesterday's supercomputers have become today's regular servers. This wiki article was directed by Wiki Topics for Chapters 3 and 4.

Identification <ref>http://www.bukisa.com/articles/13059_supercomputer-evolution</ref>

The United States government has played the key role in the development and use of supercomputers. During World War II, the US Army paid for the construction of the Electronic Numerical Integrator And Computer (ENIAC) in order to speed the calculation of artillery tables. In the 30 years after World War II, the US government used high-performance computers to design nuclear weapons, break codes, and perform other security-related applications.

The most powerful supercomputers introduced in the 1960s were designed primarily by Seymour Cray at Control Data Corporation (CDC). They led the market into the 1970s until Cray left to form his own company, Cray Research.

With Moore’s Law still holding after more than thirty years, the rate at which future mass-market technologies overtake today’s cutting-edge super-duper wonders continues to accelerate. The effects of this are manifest in the abrupt about-face we have witnessed in the underlying philosophy of building supercomputers.

During the 1970s and all the way through the mid-1980s, supercomputers were built using specialized custom vector processors working in parallel. Typically, this meant anywhere between four and sixteen CPUs. The next phase of supercomputer evolution saw the introduction of massively parallel processing and a drift away from vector-only processors. However, the processors used in this generation of supercomputers were still primarily highly specialized, purpose-specific, custom-designed and custom-fabricated units.

That is no longer true. No longer is silicon fabricated into the incredibly expensive highly specialized purpose-specific customized microprocessor units to serve as the heart and mind of supercomputers. Advances in mainstream technologies and economies of scale now dictate that “off-the-shelf” multicore server-class CPUs are assembled into great conglomerates, combined with mind-boggling quantities of storage (RAM and HDD), and interconnected using light-speed transports.

So we now find that instead of using specialized custom-built processors in their design, supercomputers are based on "off the shelf" server-class multicore microprocessors, such as the IBM PowerPC, Intel Itanium, or AMD x86-64. The modern supercomputer is firmly based around massively parallel processing by clustering very large numbers of commodity processors combined with a custom interconnect.

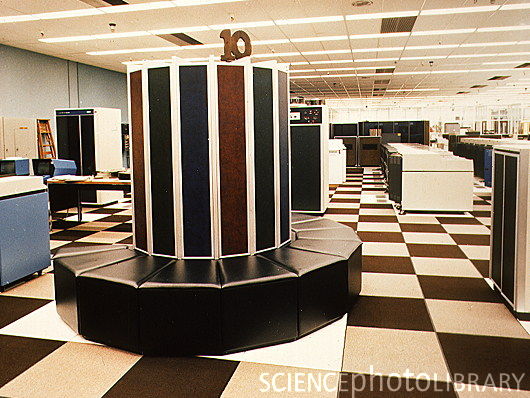

Currently, the K computer is the world's fastest supercomputer at 10.51 petaFLOPS. K was built by the Japanese computer firm Fujitsu and is installed at the RIKEN Advanced Institute for Computational Science in Kobe. It consists of about 88,000 SPARC64 VIIIfx CPUs and spans 864 server racks. In November 2011, the power consumption was reported to be 12659.89 kW<ref>http://www.top500.org/list/2011/11/100</ref>. When K first took the top spot in June 2011, it consisted of 672 cabinets stuffed with circuit boards, which its creators planned to increase to 800 in the following months. Its performance at that time was reported as equivalent to one million linked desktop computers, more than its five closest competitors combined. It used enough energy to power nearly 10,000 homes and cost $10 million (£6.2 million) annually to run<ref>http://www.telegraph.co.uk/technology/news/8586655/Japanese-supercomputer-K-is-worlds-fastest.html</ref>.

Some of the companies that build supercomputers are Silicon Graphics, Intel, IBM, Cray, Orion, and Aspen Systems, among others.

Here is a list of the top 10 supercomputers as of November 2011.

Techniques <ref>http://www.cray.com/Assets/PDF/about/CrayTimeline.pdf</ref>

In 1993 Fujitsu surprised the world by announcing a Vector Parallel Processor (VPP) series designed to reach hundreds of Gflop/s. At the core of the system is a combined Ga-As/Bi-CMOS processor, based largely on the original design of the VP-200. The gate delay of the processor chips was made as low as 60 ps in the Ga-As chips by using the most advanced hardware technology available. The resulting cycle time was 9.5 ns. The processor has four independent pipelines, each capable of executing two multiply-add instructions in parallel, resulting in a peak speed of 1.7 Gflop/s per processor. Each processor board is equipped with 256 megabytes of central memory.
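The quoted peak speed can be checked from the pipeline count and the cycle time. The sketch below is illustrative only: the function name `peak_gflops` is hypothetical, and it assumes that each multiply-add counts as two floating-point operations, which the text does not state explicitly.

```python
def peak_gflops(cycle_time_ns, pipelines, ops_per_pipeline, flops_per_op=2):
    """Theoretical peak rate of one processor in Gflop/s.

    flops_per_op=2 is an assumption: a multiply-add is counted as
    one multiplication plus one addition.
    """
    flops_per_cycle = pipelines * ops_per_pipeline * flops_per_op
    # Flops per nanosecond is numerically the same as Gflop/s.
    return flops_per_cycle / cycle_time_ns


# VPP figures from the text: 4 pipelines, 2 multiply-adds each, 9.5 ns cycle.
vpp_peak = peak_gflops(cycle_time_ns=9.5, pipelines=4, ops_per_pipeline=2)
print(f"{vpp_peak:.2f} Gflop/s")  # about 1.68, which rounds to the quoted 1.7
```

Under these assumptions the processor completes 16 floating-point operations per 9.5 ns cycle, which agrees with the 1.7 Gflop/s figure.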

Static<ref>http://www.versionone.com/Agile101/Methodologies.asp </ref>

In the beginning there were only a few Cray-1s installed in Japan, and until 1983 no Japanese company produced supercomputers. The first models were announced in 1983. Naturally there had been prototypes earlier like the Fujitsu F230-75 APU produced in two copies in 1978, but Fujitsu's VP-200 and Hitachi's S-810 were the first officially announced versions. NEC announced its SX series slightly later.

The last decade has rather been a surprise. About three generations of machines have been produced by each of the domestic manufacturers. During the last ten years about 300 supercomputer systems have been shipped and installed in Japan, and a whole infrastructure of supercomputing has been established. All major universities, many of the large industrial companies and research centers have supercomputers.

In 1992 NEC announced the SX-3R with a couple of improvements compared to the first version. The clock cycle was further reduced to 2.5 ns, so that the peak performance increased to 6.4 Gflop/s per processor.

Speculative <ref>http://www.netlib.org/benchmark/top500/reports/report94/Japan/node5.html</ref>

In 1977 Fujitsu produced the first supercomputer prototype, called the F230-75 APU, which was a pipelined vector processor added to a scalar processor. This attached processor was installed at the Japan Atomic Energy Research Institute (JAERI) and the National Aerospace Laboratory (NAL).

Dynamic


Interactive


Polytope Model


Pitfalls

Hitachi has been producing supercomputers since 1983 but differs from other manufacturers by not exporting them. For this reason, its supercomputers are less well known in the West. After going through two generations of supercomputers, the S-810 series started in 1983 and the S-820 series in 1988, Hitachi leapfrogged NEC in 1992 by announcing the most powerful vector supercomputer ever. The top S-820 model consisted of one processor with a 4 ns cycle time and contained four vector pipelines and two independent floating-point units. This corresponded to a peak performance of 2 Gflop/s. Hitachi put great emphasis on fast memory, although this meant limiting its size to a maximum of 512 MB. The memory bandwidth of 2 words per pipe per vector cycle, giving a peak rate of 16 GB/s, was a respectable achievement, but it was not enough to keep all functional units busy.
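The bandwidth figure and the remark about the functional units can be reproduced with a little arithmetic. The following is a rough sketch rather than a description of the actual hardware: the helper names are hypothetical, the 8-byte word size is an assumption, and the vector-triad comparison at the end is only one plausible reading of why the memory could not keep the units busy.

```python
BYTES_PER_WORD = 8  # assumption: 64-bit words


def memory_bandwidth_gb_s(pipes, words_per_pipe_per_cycle, cycle_time_ns):
    """Peak memory bandwidth in GB/s (bytes per nanosecond)."""
    bytes_per_cycle = pipes * words_per_pipe_per_cycle * BYTES_PER_WORD
    return bytes_per_cycle / cycle_time_ns


def words_per_flop(bandwidth_gb_s, peak_gflops):
    """Memory words that can be delivered per floating-point operation."""
    return bandwidth_gb_s / BYTES_PER_WORD / peak_gflops


# S-820 figures from the text: 4 pipes, 2 words per pipe per cycle, 4 ns cycle.
bandwidth = memory_bandwidth_gb_s(pipes=4, words_per_pipe_per_cycle=2,
                                  cycle_time_ns=4.0)
balance = words_per_flop(bandwidth, peak_gflops=2.0)
print(bandwidth)  # 16.0 GB/s, matching the quoted peak rate
print(balance)    # 1.0 word per flop

# Illustrative comparison (an assumption, not from the source): a vector
# triad a[i] = b[i] + s * c[i] moves 3 words for every 2 flops.
triad_words_per_flop = 3 / 2
print(balance < triad_words_per_flop)  # True: such a loop is memory bound
```

With one word available per floating-point operation, any loop that needs more than one memory operand per operation would leave the arithmetic units waiting.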

The S-3800 was announced in 1992 and is comparable to NEC's SX-3R in its features. It has up to four scalar processors, each with a vector processing unit. These vector units in turn have up to four independent pipelines and two floating-point units that can each perform a multiply/add operation per cycle. With a cycle time of 2.0 ns, the whole system achieves a peak performance level of 32 Gflop/s.

The S-3600 systems can be seen as the design of the S-820 recast in more modern technology. The system consists of a single scalar processor with an attached vector processor. The 4 models in the range correspond to a successive reduction of the number of pipelines and floating-point units installed. See also the list of the top 500 supercomputers and the top 500 supercomputer statistics.

Limitations

In the early 1950s, IBM built their first scientific computer, the IBM 701. The IBM 704 and other high-end systems appeared in the 1950s and 1960s, but by today's standards, these early machines were little more than oversized calculators. After going through a rough patch, IBM re-emerged as a leader in supercomputing research and development in the mid-1990s, creating several systems for the U.S. Government's Accelerated Strategic Computing Initiative (ASCI). These computers boast approximately 100 times as much computational power as supercomputers of just ten years ago.

Sequoia is a petascale Blue Gene/Q supercomputer being constructed by IBM for the National Nuclear Security Administration as part of the Advanced Simulation and Computing Program (ASC). It is scheduled to be delivered to the Lawrence Livermore National Laboratory in 2011 and fully deployed in 2012.

Sequoia was revealed in February 2009; the targeted performance of 20 petaflops was more than the combined performance of the top 500 supercomputers in the world and about 20 times faster than Roadrunner, the reigning champion of the time. It will be twice as fast as the current record-holding K computer and also twice as fast as the intended future performance of Pleiades.

IBM has also built a smaller prototype called "Dawn," capable of 500 teraflops, using the Blue Gene/P design, to evaluate the Sequoia design. This system was delivered in April 2009 and entered the Top500 list at 9th place in June 2009.

Supercomputer speeds are advancing rapidly as manufacturers latch on to new techniques and cheaper prices for computer chips. The first machine to break the teraflop barrier - a trillion calculations per second - was only built in 1996. Two years ago a $59m machine from Sun Microsystems, called Constellation, attempted to take the crown of world's fastest with operating speeds of 421 teraflops. Just two years later, Sequoia was able to achieve nearly 50 times the computing power.

Examples<ref>http://www.top500.org</ref>

Power Fortran Analyzer

blah blah blah

Polaris

blah blah blah

Stanford University Intermediate Format (SUIF 1,2)

blah blah blah

Intel C and C++ Compilers

blah blah blah

Legend

- Vendor – The manufacturer of the platform and hardware.

- Rmax – The highest score measured using the LINPACK benchmark suite. This is the number that is used to rank the computers. Measured in quadrillions of floating-point operations per second, i.e. petaflops (Pflops).

- Rpeak – This is the theoretical peak performance of the system. Measured in Pflops.

- Processor cores – The number of active processor cores used.
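The relationship between Rmax and Rpeak can be made concrete with a short calculation. This is a minimal sketch using the K-computer core count, clock rate, and 8.16 Pflops LINPACK result quoted elsewhere in this article; the function names are hypothetical, and the value of 8 floating-point operations per cycle per core is an assumption about the SPARC64 VIIIfx that does not appear in the source.

```python
def rpeak_pflops(cores, clock_ghz, flops_per_cycle_per_core):
    """Theoretical peak in Pflops: cores x clock rate x flops per cycle."""
    gflops = cores * clock_ghz * flops_per_cycle_per_core
    return gflops / 1e6  # 1 Pflops = 1,000,000 Gflops


def linpack_efficiency(rmax, rpeak):
    """Fraction of the theoretical peak achieved on LINPACK."""
    return rmax / rpeak


# K-computer, June 2011: 548,352 cores at 2.0 GHz.
# Assumption: each core can complete 8 floating-point operations per cycle.
k_rpeak = rpeak_pflops(cores=548_352, clock_ghz=2.0,
                       flops_per_cycle_per_core=8)
k_efficiency = linpack_efficiency(rmax=8.16, rpeak=k_rpeak)

print(f"Rpeak = {k_rpeak:.2f} Pflops")       # Rpeak = 8.77 Pflops
print(f"Efficiency = {k_efficiency:.0%}")    # Efficiency = 93%
```

Rmax is always below Rpeak because a real benchmark run spends some time on communication and memory access rather than arithmetic.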

Top 10 supercomputers of today<ref>http://www.junauza.com/2011/07/top-10-fastest-linux-based.html</ref>

Below are the top 10 supercomputers in the world (as of June 2011). An effort has been made to compare the architectural features of these supercomputers.

1. K-computer:

- K-computer is currently the world's fastest supercomputer. It was developed by Fujitsu and is installed at the RIKEN Advanced Institute for Computational Science campus in Kobe, Japan.

- On the LINPACK benchmark, K-computer delivered a mind-blowing 8.16 petaflops, toppling Tianhe-1A from the number one spot.

- This supercomputer uses 68,544 2.0 GHz 8-core SPARC64 VIIIfx processors packed in 672 cabinets, for a total of 548,352 cores. In layman's terms, K-computer's performance is almost equivalent to that of 1 million desktop computers.

- The file system used here is an optimized parallel file system based on Lustre, called Fujitsu Exabyte File System.

- One disadvantage of this high performer is that it consumes about 9.8 MW of power, enough to light 10,000 houses. Compared with its closest competitor, the Tianhe-1A, the K-computer is miles ahead, and it is highly unlikely to lose its number 1 spot any time soon.
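The figures in the list above can be cross-checked and combined into a rough energy-efficiency number. The sketch below uses only values quoted in the list; the function names are hypothetical, and the per-house figure simply divides the two numbers given in the text.

```python
def total_cores(processors, cores_per_processor):
    """Core count implied by the processor count."""
    return processors * cores_per_processor


def gflops_per_watt(rmax_pflops, power_mw):
    """Energy efficiency: LINPACK Gflops delivered per watt consumed."""
    gflops = rmax_pflops * 1e6   # 1 Pflops = 1,000,000 Gflops
    watts = power_mw * 1e6       # 1 MW = 1,000,000 W
    return gflops / watts


# Values quoted in the list above.
cores = total_cores(processors=68_544, cores_per_processor=8)
efficiency = gflops_per_watt(rmax_pflops=8.16, power_mw=9.8)
watts_per_house = 9.8e6 / 10_000

print(cores)                           # 548352, matching the quoted total
print(f"{efficiency:.2f} Gflops/W")    # 0.83 Gflops/W
print(f"{watts_per_house:.0f} W per house")  # 980 W per house
```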

Supercomputer Programming Models<ref>http://books.google.com/books?id=tDxNyGSXg5IC&pg=PA4&lpg=PA4&dq=evolution+of+supercomputers&source=bl&ots=I1NZtZyCTD&sig=Ma2fHyp336BSp4Yv2ERmfrpeo4&hl=en&ei=IAReS4WbM8eUtgf2u8GnAg&sa=X&oi=book_result&ct=result&resnum=5&ved=0CB4Q6AEwBA#v=onepage&q=evolution%20of%20supercomputers&f=false</ref>

The parallel architectures of supercomputers often dictate the use of special programming techniques to exploit their speed. The base language of supercomputer code is, in general, Fortran or C, using special libraries to share data between nodes. Now environments such as PVM and MPI for loosely connected clusters and OpenMP for tightly coordinated shared memory machines are used. Significant effort is required to optimize a problem for the interconnect characteristics of the machine it is run on. The aim is to prevent any of the CPUs from wasting time waiting on data from other nodes.
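The message-passing style used on clusters can be illustrated with a small sketch. It does not use PVM or MPI: Python threads and a standard-library queue stand in for nodes and the interconnect, and the names `worker` and `distributed_sum` are hypothetical. The point is only the pattern, in which each worker owns its own chunk of the data and communicates solely by sending a message.

```python
import queue
import threading


def worker(rank, chunk, outbox):
    """Compute on locally owned data, then send one message with the result."""
    outbox.put((rank, sum(chunk)))


def distributed_sum(data, n_workers=4):
    """Scatter data across workers, then gather their partial sums."""
    outbox = queue.Queue()  # stands in for the interconnect
    # Scatter: worker r receives elements r, r + n_workers, r + 2*n_workers, ...
    chunks = [data[r::n_workers] for r in range(n_workers)]
    threads = [
        threading.Thread(target=worker, args=(r, chunks[r], outbox))
        for r in range(n_workers)
    ]
    for t in threads:
        t.start()
    # Gather: receive exactly one message per worker, in arrival order.
    partials = dict(outbox.get() for _ in range(n_workers))
    for t in threads:
        t.join()
    return sum(partials.values())


print(distributed_sum(list(range(1, 101))))  # 5050
```

In a real cluster code the scatter and gather steps would be library calls, and the chunk sizes would be chosen so that no node sits idle waiting for data from another.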

The languages and environments mentioned above are discussed briefly below.

1) Fortran (previously written FORTRAN) is a general-purpose, procedural, imperative programming language that is especially suited to numeric and scientific computing. It was originally developed by IBM in the 1950s for scientific and engineering applications and became dominant in this area early on. It has been in use for over half a century in computationally intensive areas such as numerical weather prediction, finite element analysis, computational fluid dynamics (CFD), computational physics, and computational chemistry. It is one of the most popular languages in high-performance computing and is the language used for programs that benchmark and rank the world's fastest supercomputers.

Fortran (a blend derived from "The IBM Mathematical Formula Translating System") encompasses a lineage of versions, each of which evolved to add extensions to the language while usually retaining compatibility with previous versions. Successive versions have added support for processing of character-based data (FORTRAN 77), array programming, modular programming and object-based programming (Fortran 90 / 95), and object-oriented and generic programming (Fortran 2003).

2) C is a general-purpose computer programming language developed in 1972 by Dennis Ritchie at Bell Telephone Laboratories for use with the Unix operating system. It is also widely used for developing portable application software. C is one of the most popular programming languages, and there are very few computer architectures for which a C compiler does not exist. C has greatly influenced many other popular programming languages, most notably C++, which originally began as an extension to C.

It was designed to be compiled using a relatively straightforward compiler, to provide low-level access to memory, to provide language constructs that map efficiently to machine instructions, and to require minimal run-time support. The language was designed to encourage machine-independent programming. It has been used on a very wide range of platforms, from embedded microcontrollers to supercomputers.

3) The Parallel Virtual Machine (PVM) is a software tool used for parallel networking of computers. It is designed to allow a network of heterogeneous Unix and/or Windows machines to be used as a single distributed parallel processor. Thus large and complex computational problems can be solved more cost effectively by using the combined memory and power of many computers. The software is very portable and has been compiled on everything from laptops to Crays.

PVM enables users to exploit their existing computer hardware to solve complex problems at minimal additional cost. PVM has also been used as an educational tool to teach parallel programming and to solve important practical problems. It was developed by the University of Tennessee, Oak Ridge National Laboratory and Emory University. The first version was written at ORNL in 1989, and after being rewritten by the University of Tennessee, version 2 was released in March 1991. Version 3 was released in March 1993 and supported fault tolerance and better portability. User programs written in C, C++, or Fortran can access PVM through provided library routines.

4) OpenMP (Open Multi-Processing) is an application programming interface (API) that supports multi-platform shared memory multiprocessing programming in C, C++ and Fortran on many architectures, including Unix and Microsoft Windows platforms. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior.

It was developed by a group of major computer hardware and software vendors. OpenMP is a portable, scalable model that gives programmers a simple and flexible interface for developing parallel applications for platforms ranging from the desktop to the supercomputer. An application built with the hybrid model of parallel programming can run on a computer cluster using both OpenMP and Message Passing Interface (MPI), or more transparently through the use of OpenMP extensions for non-shared memory systems.

The OpenMP Architecture Review Board (ARB) published its first API specification, OpenMP for Fortran 1.0, in October 1997. In October of the following year it released the C/C++ standard. The year 2000 saw version 2.0 of the Fortran specification, with version 2.0 of the C/C++ specification following in 2002. Version 2.5 is a combined C/C++/Fortran specification that was released in 2005.

Version 3.0 was released in May 2008. Its new features include the concept of tasks and the task construct. More information about OpenMP can be found in the OpenMP 3.0 specifications.
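The shared-memory, fork-join pattern that OpenMP expresses with directives can be sketched as follows. This is an analogy only and not OpenMP itself: Python's `concurrent.futures` thread pool stands in for the OpenMP runtime, the function name `parallel_dot` is hypothetical, and in C the same loop would typically carry the directive `#pragma omp parallel for reduction(+:sum)`. Python threads also share one interpreter lock, so this sketch shows the structure rather than a speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def parallel_dot(x, y, n_threads=4):
    """Dot product with the loop iterations shared among threads."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")

    def partial_dot(thread_id):
        # Each thread reads the shared arrays x and y directly and
        # handles iterations thread_id, thread_id + n_threads, ...
        return sum(x[i] * y[i] for i in range(thread_id, len(x), n_threads))

    # Fork: start the threads. Join: the with-block waits for all of them.
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        partial_sums = pool.map(partial_dot, range(n_threads))
        # Reduction: combine the per-thread partial sums.
        return sum(partial_sums)


print(parallel_dot([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]))  # 32.0
```

Unlike the message-passing style, no data is copied between workers here; every thread reads the same arrays, which is what makes the model suited to tightly coupled shared-memory machines.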

External links

3. Top500 - The supercomputer website

5. Supercomputers to "see" black holes

6. Supercomputer simulates stellar evolution

7. Encyclopedia on supercomputer

10. Water-cooling System Enables Supercomputers to Heat Buildings

13. UC-Irvine Supercomputer Project Aims to Predict Earth's Environmental Future

14. Wikipedia

15. Parallel programming in C with MPI and OpenMP by Michael Jay Quinn

19. SuperComputers for One-Fifth the Price

References

<references/>