CSC/ECE 506 Fall 2007/wiki3 7 qaz: Difference between revisions


Revision as of 20:16, 19 October 2007

Wiki: True and false sharing. In Lectures 9 and 10, we covered performance results for true- and false-sharing misses. The results showed that some applications experienced degradation due to false sharing, and that this problem was greater with larger cache lines. But these data are at least 9 years old, and for multiprocessors that are smaller than those in use today. Comb the ACM Digital Library, IEEE Xplore, and the Web for more up-to-date results. What strategies have proven successful in combating false sharing? Is there any research into ways of diminishing true-sharing misses, e.g., by locating communicating processes on the same processor? Wouldn't this diminish parallelism and thus hurt performance?

False Sharing Miss

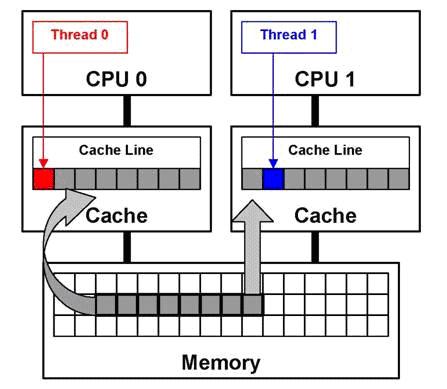

In a multiprocessor system, it is important to keep data coherent across all processors. Vendors such as Intel use the MESI protocol to maintain cache coherence. When a block is loaded into a processor's cache for the first time and no other cache holds a copy, MESI places it in the Exclusive state. The block moves to the Shared state when another processor reads the same block. A subsequent store by any one processor changes the state of its copy from Shared to Modified and invalidates the corresponding copies in the other processors' caches. Figure 1 demonstrates how two distinct variables placed adjacent to each other in system memory can end up in the same cache line in two or more processors' caches, so that the whole line is marked Shared and a store to either variable invalidates the line in every other cache.
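The state changes described above can be sketched as a small state machine for a single cache line. This is only an illustrative model, not a description of any vendor's implementation: the names `State`, `read`, and `write` are hypothetical, and bus transactions and write-backs are not modelled.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"


def read(states, cpu):
    """Apply a load by `cpu` to one cache line; `states` holds one State per CPU."""
    if states[cpu] is not State.INVALID:
        return  # read hit: no state change
    holders = [i for i, s in enumerate(states) if i != cpu and s is not State.INVALID]
    for i in holders:
        states[i] = State.SHARED  # an M or E holder drops to S (write-back not modelled)
    states[cpu] = State.SHARED if holders else State.EXCLUSIVE


def write(states, cpu):
    """Apply a store by `cpu`: its copy becomes Modified, all other copies Invalid."""
    for i in range(len(states)):
        states[i] = State.MODIFIED if i == cpu else State.INVALID


line = [State.INVALID, State.INVALID]
read(line, 0)    # first load, no other copy: Exclusive
read(line, 1)    # second CPU reads the same line: both Shared
write(line, 0)   # store by CPU 0: Modified, CPU 1's copy is invalidated
print([s.value for s in line])
```

The model tracks state per line rather than per word, which is why a store to one variable affects a neighbouring variable that happens to live in the same line.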

False sharing arises when multiple processes or threads share the same address space and access different words that happen to lie in the same cache line; it is very common on multiprocessor systems. MESI, which is an invalidation-based coherence protocol, invalidates the copies of a shared cache line in other processors' caches on every store to any element of that line. Figure 2 demonstrates this. Even though CPU 0 writes a different word in the cache line from the one CPU 1 uses, the entire line in CPU 1's cache is invalidated. A false sharing miss occurs when CPU 1 next accesses its own word in that line.
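The CPU 0 / CPU 1 scenario can be replayed with a toy miss classifier. This is a rough sketch under stated assumptions: caches are infinite, the protocol is write-invalidate, and a miss is labelled by a simplified rule (true sharing if the missed word itself was written by another CPU since the copy was invalidated, false sharing otherwise). The function name `classify_misses` and the trace format are hypothetical.

```python
def classify_misses(trace, words_per_line):
    """Classify misses for a trace of (cpu, op, word_addr) tuples, op in {"r", "w"}."""
    valid = set()  # (cpu, line) pairs that currently hold a valid copy
    stale = {}     # (cpu, line) -> words written by other CPUs since the copy was lost
    counts = {"cold": 0, "true_sharing": 0, "false_sharing": 0}
    cpus = {cpu for cpu, _, _ in trace}
    for cpu, op, addr in trace:
        line = addr // words_per_line
        key = (cpu, line)
        if key not in valid:
            if key not in stale:
                counts["cold"] += 1           # first touch of this line by this CPU
            elif addr in stale[key]:
                counts["true_sharing"] += 1   # the missed word really was modified
            else:
                counts["false_sharing"] += 1  # only a neighbouring word was modified
            valid.add(key)
            stale[key] = set()
        if op == "w":
            # A store invalidates every other CPU's copy of the whole line.
            for other in cpus - {cpu}:
                okey = (other, line)
                if okey in stale:  # the other CPU has loaded this line before
                    valid.discard(okey)
                    stale[okey].add(addr)
    return counts


trace = [
    (0, "r", 0), (1, "r", 1),  # both CPUs load the line (cold misses)
    (0, "w", 0),               # CPU 0 writes word 0, invalidating CPU 1's copy
    (1, "r", 1),               # CPU 1 re-reads word 1: false sharing miss
    (0, "w", 0),
    (1, "r", 0),               # CPU 1 reads the word CPU 0 wrote: true sharing miss
]
print(classify_misses(trace, words_per_line=8))
```

In this trace CPU 1 never needs the value CPU 0 writes until the final access, yet it still pays a miss on its fourth access simply because both words share a line.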

Block Size and False Sharing Miss

In a multiprocessor environment, the cache performance of shared data suffers more from sharing misses than from any other type of miss. It has been observed that shared data is responsible for the majority of cache misses, and that its miss rate is less predictable. In a uniprocessor the miss rate generally goes down as the block size increases, but in a multiprocessor it often goes up with block size, because a larger block is more likely to hold unrelated words used by different processors (although this is not always the case).
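Both trends can be illustrated with a small simulation. The sketch below assumes infinite caches and a write-invalidate protocol, and the two traces are synthetic examples invented for illustration, not measurements from any of the cited studies; `count_misses`, `scan`, and `ping_pong` are hypothetical names.

```python
def count_misses(trace, words_per_line):
    """Return (cold, coherence) miss counts for a trace of (cpu, op, word_addr)."""
    valid = set()  # (cpu, line) pairs that currently hold a valid copy
    seen = set()   # (cpu, line) pairs that were loaded at least once
    cold = coherence = 0
    for cpu, op, addr in trace:
        line = addr // words_per_line
        key = (cpu, line)
        if key not in valid:
            if key in seen:
                coherence += 1  # the copy was lost to another CPU's store
            else:
                cold += 1
                seen.add(key)
            valid.add(key)
        if op == "w":
            # A store invalidates every other CPU's copy of the line.
            valid = {k for k in valid if k[1] != line or k[0] == cpu}
    return cold, coherence


# One CPU scanning 64 consecutive words (uniprocessor-style spatial locality).
scan = [(0, "r", a) for a in range(64)]
# Two CPUs alternately updating private counters located at words 0 and 4.
ping_pong = [(cpu, "w", cpu * 4) for _ in range(100) for cpu in (0, 1)]

for size in (1, 2, 4, 8, 16):
    print(size, count_misses(scan, size), count_misses(ping_pong, size))
```

For the scan, cold misses shrink as the block grows. For the two counters, coherence misses stay at zero until the block is large enough to hold both words, after which every update misses.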