CSC/ECE 506 Spring 2012/6b am: Difference between revisions

Revision as of 14:20, 3 March 2012

Introduction

With processor speeds increasing at a much faster rate than memory speeds, data transactions between the processor and the memory system must be managed so that slow memory does not limit processor performance. A read requires the processor to wait for the data before resuming execution, but a write has no such requirement. This is where a write buffer (WB) comes into the picture: the processor hands the write to the buffer and continues its operation while the write buffer takes complete responsibility for executing the write.

Write Buffers in Uni-processors

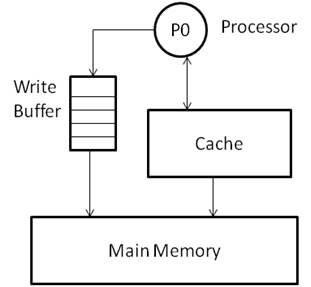

A write to be performed is placed in a buffer implemented as a FIFO queue, so that writes are performed in the order in which they were issued. In a uniprocessor, where Instruction Level Parallelism (ILP) can be exploited, writes may also be issued out of order, provided hardware or software mechanisms check the writes for any dependences in the instruction stream. The figure above shows a cache-based single-processor system with a write buffer.

Write Buffer Issues in Multiprocessors

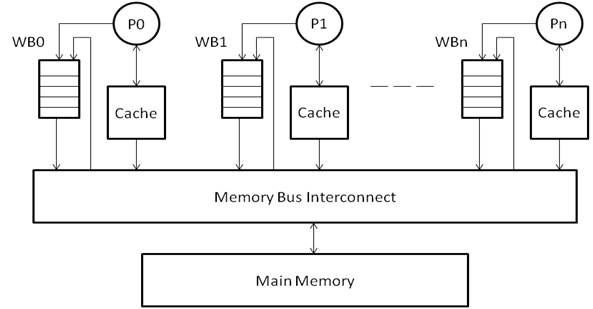

In a multiprocessor system, the same design can be extended, as shown in the figure. In this design, each processor has its own private cache and a write buffer corresponding to that cache. The caches are connected by means of an interconnect with each other as well as with the main memory.

As can be seen in the figure above, each processor makes a write by pushing it into the write buffer, and the write buffer completes the task of performing the write to the cache or main memory. If the processor issues another write to the same address as an earlier pending write, the earlier value is overwritten in the write buffer with the new one. Similarly, if the processor issues a read to that address, it reads the value from the write buffer rather than going to the cache or main memory.
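The merging and forwarding behaviour described above can be sketched in a few lines of Python. This is only an illustrative model, not a description of any particular hardware: the class name `WriteBuffer`, its methods, and the use of a dictionary as the backing store are all assumptions made for the example.

```python
from collections import OrderedDict


class WriteBuffer:
    """Illustrative FIFO write buffer with write merging and read forwarding."""

    def __init__(self, memory):
        self.memory = memory            # backing store: dict of address -> value
        self.pending = OrderedDict()    # address -> value, oldest entry first

    def write(self, addr, value):
        # A later write to the same address overwrites the buffered value;
        # the entry keeps its original position in the queue.
        self.pending[addr] = value

    def read(self, addr):
        # Forward from the buffer if a write to this address is pending,
        # otherwise fall through to the backing store.
        if addr in self.pending:
            return self.pending[addr]
        return self.memory.get(addr, 0)

    def drain_one(self):
        # Retire the oldest pending write to the backing store.
        if self.pending:
            addr, value = self.pending.popitem(last=False)
            self.memory[addr] = value


memory = {}
wb = WriteBuffer(memory)
wb.write(0x10, 1)
wb.write(0x20, 7)
wb.write(0x10, 2)          # merges with the earlier write to 0x10
forwarded = wb.read(0x10)  # served from the buffer; memory is still untouched
wb.drain_one()             # retires the oldest entry, which is address 0x10
```

Here the read returns the merged value before anything has reached memory, and only the merged value is ever written back.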

The Coherence Problem

Consider the case where a write (ST_A) has been issued by processor I into WB_I and is waiting to be executed. Since the write has not yet been performed to the cache or the main memory, the other processors have no knowledge of the change made to address A. As a result, a read of address A by another processor takes its value from that processor's own write buffer, its own cache, or the main memory (depending on whether it hits or misses in the cache), and may therefore return a stale value. It is the job of the designer to employ protocols that maintain the sequential ordering of the instructions and ensure that writes made to any one of the caches are propagated to all of the processor caches.
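A toy model makes the problem concrete. The sketch below assumes two processors with private caches and write buffers and no coherence mechanism at all; the `Processor` class and its methods are hypothetical names chosen for the illustration.

```python
class Processor:
    """Processor with a private cache and write buffer, and no coherence."""

    def __init__(self, memory):
        self.memory = memory      # shared main memory (dict)
        self.cache = {}           # private cache
        self.write_buffer = []    # pending (address, value) pairs, FIFO

    def store(self, addr, value):
        self.write_buffer.append((addr, value))

    def load(self, addr):
        # The newest matching entry in the local write buffer wins.
        for a, v in reversed(self.write_buffer):
            if a == addr:
                return v
        if addr not in self.cache:
            self.cache[addr] = self.memory.get(addr, 0)   # fill on a miss
        return self.cache[addr]

    def drain(self):
        # Perform all pending writes to the local cache and main memory.
        for addr, value in self.write_buffer:
            self.cache[addr] = value
            self.memory[addr] = value
        self.write_buffer.clear()


memory = {"A": 0}
p1, p2 = Processor(memory), Processor(memory)
p1.store("A", 5)
seen_by_p1 = p1.load("A")    # forwarded from P1's own write buffer
seen_by_p2 = p2.load("A")    # P2 cannot see the buffered write
p1.drain()
after_drain = p2.load("A")   # P2 still hits its stale cached copy
```

Even after the write has drained to memory, the second processor keeps reading its stale cached copy, which is why both the write buffer and the cache need a coherence mechanism.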

Sequential Consistency

When a processor operates as part of a multiprocessor, it is not enough to check dependencies between writes only at the local level. Because data is shared and events in one processor may affect outcomes in another, the sequence of writes and the data dependencies must be preserved across all the processors. “A system is said to be sequentially consistent if the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operations of each individual processor appear in this sequence in the order specified by its program.” There are different approaches to this requirement, based on the expected outcome of the program. The condition of sequential consistency and logical ordering may be relaxed according to the requirements of the program being parallelized.

Strong Ordering

The requirements for strong ordering are as follows:

1) All memory operations appear to execute one at a time.

2) All memory operations from a single CPU appear to execute in-order.

3) All memory operations from different processors are “cleanly” interleaved with each other (serialization)

Total Store Ordering

Requirements are as follows:

1) Relaxed consistency: stores must complete in order, but a store need not complete before a later read of a given location takes place.

2) Reads are allowed to bypass pending writes, but writes MUST exit the store buffer in FIFO order.

Partial Store Ordering

Requirement is as follows:

Even more relaxed consistency: stores to any given memory location complete in order, but stores to different locations may complete out of order, and stores need not complete before a read of a given location takes place.

Weak Ordering

Requirement is as follows:

Very relaxed consistency: anything goes except at barrier synchronization points. Global memory state must be completely settled at each synchronization point, and between synchronization points the memory state may correspond to any ordering of reads and writes.

Example

Example for sequential consistency: in the following program, the variables “a”, “b”, “flag1” and “flag2” are initialized to 0, and both processors (CPU1 and CPU2) share all the variables.

a = b = flag1 = flag2 = 0; // initial value

| CPU1 | CPU2 |
| --- | --- |
| flag1 = 1; | flag2 = 1; |
| a = 1; | a = 2; |
| r1 = a; | r3 = a; |
| r2 = flag2; | r4 = flag1; |

The SPARC V8 architecture follows the Total Store Ordering model and allows a write followed by a read to complete out of program order. One possible result is r1 = 1, r3 = 2, r2 = r4 = 0. Strong ordering, by contrast, enforces strict atomicity, so the result depends only on how the two processors’ instructions are interleaved, and at least one of r2 and r4 must then be 1.
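The difference between the two models in the example above can be checked mechanically. The following Python sketch enumerates every sequentially consistent interleaving of the two instruction streams, then runs one Total Store Ordering schedule in which both processors finish before any store leaves its buffer. The encoding of the program as tuples and the function names are assumptions made for this illustration; the TSO part models only a single schedule, not the full SPARC V8 memory model.

```python
from itertools import combinations

# Each operation is (kind, location, value-or-destination-register).
CPU1 = [("st", "flag1", 1), ("st", "a", 1), ("ld", "a", "r1"), ("ld", "flag2", "r2")]
CPU2 = [("st", "flag2", 1), ("st", "a", 2), ("ld", "a", "r3"), ("ld", "flag1", "r4")]


def run_sc(order):
    """Execute one interleaving directly on shared memory (no buffers)."""
    mem = {"a": 0, "flag1": 0, "flag2": 0}
    regs = {}
    progs, idx = [CPU1, CPU2], [0, 0]
    for cpu in order:
        op, loc, arg = progs[cpu][idx[cpu]]
        idx[cpu] += 1
        if op == "st":
            mem[loc] = arg
        else:
            regs[arg] = mem[loc]
    return (regs["r1"], regs["r2"], regs["r3"], regs["r4"])


def sc_outcomes():
    """Collect (r1, r2, r3, r4) over all program-order-preserving interleavings."""
    results = set()
    n = len(CPU1) + len(CPU2)
    for slots in combinations(range(n), len(CPU1)):
        order = [0 if i in slots else 1 for i in range(n)]
        results.add(run_sc(order))
    return results


def run_tso_all_buffered():
    """One TSO schedule: both CPUs finish before any store leaves its buffer."""
    mem = {"a": 0, "flag1": 0, "flag2": 0}
    regs, buffers = {}, []
    for prog in (CPU1, CPU2):
        buf = []  # FIFO store buffer of (location, value)
        for op, loc, arg in prog:
            if op == "st":
                buf.append((loc, arg))
            else:
                # A load sees the newest buffered store to the location, else memory.
                hits = [v for buffered_loc, v in buf if buffered_loc == loc]
                regs[arg] = hits[-1] if hits else mem[loc]
        buffers.append(buf)
    for buf in buffers:              # stores drain in FIFO order afterwards
        for loc, value in buf:
            mem[loc] = value
    return (regs["r1"], regs["r2"], regs["r3"], regs["r4"])


sc = sc_outcomes()
tso = run_tso_all_buffered()
```

The tuple `tso` is (r1, r2, r3, r4) = (1, 0, 2, 0), and that tuple does not appear in `sc`: with no store buffers, whichever processor reads the other's flag last must see it set.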

Cache Coherence Models

The two prominent models for maintaining cache coherence are the snoopy-bus protocol and the directory-based coherence protocol. The directory-based protocol is used for distributed memory systems, in which a directory entry keeps track of where each block of data is being stored. In the snoopy-bus protocol, every processor monitors the reads and writes that are being serviced by the memory bus. Read and write requests are broadcast on the bus, and each processor responds depending on whether or not it holds that data in its cache. We will be looking at shared memory systems in this article.

Write-Update

In this approach, a write request is broadcast to all the processors, and each processor updates its local cache with the new value of the data. If a read misses in a processor's local cache, it can be serviced by any other cache that holds the block, because the copy has been updated in all caches. In terms of writes, only one broadcast is needed for all the caches to be up to date with the newest value of the data, which can save bus bandwidth when the data is read often by other processors.

Write-Invalidate

This approach is common in systems where a data block is written by one processor but read by multiple processors. Whenever a write is made to shared data, an invalidate signal is sent to all the caches, asking for that data block to be made invalid because their copy no longer holds the latest value. This may cause additional traffic on the bus; as a result, a separate bus for invalidation requests may be included in the design.
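The two policies can be compared side by side with a small sketch. Caches are modelled as plain dictionaries, and `bus_write` is a hypothetical helper that applies one write under either policy and reports how many remote caches had to act on the broadcast. Real protocols track per-line states, which this sketch leaves out.

```python
def bus_write(caches, writer, addr, value, policy):
    """Apply one write under 'update' or 'invalidate'; return remote caches touched."""
    caches[writer][addr] = value
    touched = 0
    for i, cache in enumerate(caches):
        if i == writer or addr not in cache:
            continue
        if policy == "update":
            cache[addr] = value      # refresh the remote copy in place
        else:
            del cache[addr]          # drop the stale remote copy
        touched += 1
    return touched


def fresh_caches():
    # Three caches that all start with a copy of block "A".
    return [{"A": 0}, {"A": 0}, {"A": 0}]


upd = fresh_caches()
first_upd = bus_write(upd, 0, "A", 1, "update")
second_upd = bus_write(upd, 0, "A", 2, "update")

inv = fresh_caches()
first_inv = bus_write(inv, 0, "A", 1, "invalidate")
second_inv = bus_write(inv, 0, "A", 2, "invalidate")
```

Under write-update, every write reaches both remote copies and all caches end up holding the newest value. Under write-invalidate, the first write removes the remote copies and the second write finds none left to touch, but the other processors must fetch the block again before they can read it.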

Coherence in Write Buffers

Software-Based Coherence

The software technique relies on the compiler to ensure that no dependencies exist between the STORE/LOAD accesses carried out by different processors on the shared memory. The compiler, based on indications from the programmer, makes sure that there are no incoherent accesses to shared memory. It is also possible to have a READ/WRITE buffer shared between all processors for accessing the main memory.

Hardware-Based Coherence

In hardware-based protocols, accesses to the shared memory are communicated using hardware invalidate signals that are broadcast to all the processor memories. Hardware support may also be required for a LOAD to be broadcast to all the caches, so that a read can be performed directly from a remote memory. There are two approaches to maintaining coherence in write buffers and caches for multiprocessor systems:

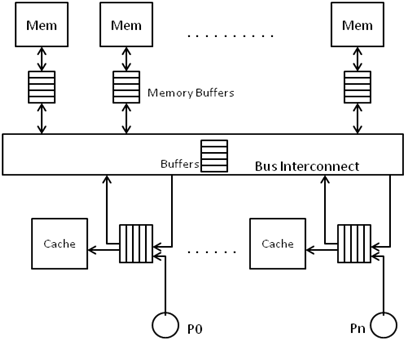

Unique Buffer per Processor

In this configuration, every processor has its own unique buffer that holds the loads and stores of the local processor as well as the invalidates and loads of remote processors wanting access to the shared data. A store request in the local cache results in the invalidation of that data block in all other caches and main memory. A STORE is said to have been performed when

- (1) the request is serviced in the buffer and the cache is updated on a local hit and

- (2) the request is issued to the buffer and an invalidate request has been sent to all other processors sharing the data.

This can be achieved in two ways, depending on the topology of the connection between the caches:

- (1) For a bus-based MP system, the LOADs that miss in the local cache and the STOREs that need invalidation in other caches are broadcast over the bus to all processor caches and memory. The STORE request is then considered performed with respect to all processors when the invalidation signals have been sent out to the private buffers of all the caches.

- (2) For non-bus MP systems, the caches are connected in a point-to-point mesh or ring topology. When a store is encountered in one of the processors, the shared data is first locked in the shared memory to ensure atomic access, and the invalidate signal is then propagated along the point-to-point interconnect in a predetermined order. The STORE to shared memory is performed only when the invalidation has been propagated to all the processor caches and buffers. If any processor issues a LOAD to the address that is being STOREd, the LOAD request has to be rejected or buffered to be serviced at a later time, to maintain atomic access to the shared data block.
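The bus-based case can be illustrated with a toy model in which each node has one buffer holding both local stores and remote invalidates. The `Node` class, the tuple layout of buffer entries, and the `bus_store` helper are assumptions made for the sketch; it only shows the point at which the STORE counts as performed, namely once the invalidate sits in every remote buffer.

```python
from collections import deque


class Node:
    """Processor node with a cache and a single buffer for all requests."""

    def __init__(self):
        self.cache = {}
        self.buffer = deque()   # entries are (kind, address, value)

    def process_one(self):
        # Service the oldest buffered request.
        kind, addr, value = self.buffer.popleft()
        if kind == "store":
            self.cache[addr] = value
        elif kind == "invalidate":
            self.cache.pop(addr, None)


def bus_store(nodes, writer, addr, value):
    """Broadcast a store; return True once every remote buffer holds the invalidate."""
    nodes[writer].buffer.append(("store", addr, value))
    for i, node in enumerate(nodes):
        if i != writer:
            node.buffer.append(("invalidate", addr, None))
    return all(("invalidate", addr, None) in node.buffer
               for i, node in enumerate(nodes) if i != writer)


nodes = [Node(), Node(), Node()]
for node in nodes:
    node.cache["A"] = 0

performed = bus_store(nodes, 0, "A", 9)
stale_before = nodes[1].cache.get("A")   # remote copy has not been dropped yet
for node in nodes:
    node.process_one()
```

Under this definition the STORE is performed as soon as the broadcast has reached the buffers, even though the remote copies disappear only when each node services its buffer.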

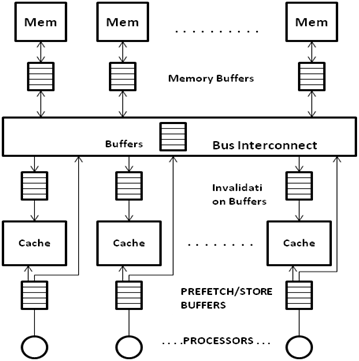

Separate Buffers for Local and Remote Accesses

Another approach to maintaining coherence is for every processor to have separate buffers for local data requests and remote data requests. As seen in the figure, every processor has a Local-buffer, which queues the local LOADs and STOREs, and a Remote-buffer (also termed the Invalidate buffer), which stores the invalidation requests and LOADs coming in from other processors. In a bus-based system, this approach makes it difficult to maintain write atomicity, because different invalidate buffers may hold different numbers of invalidation requests, which makes the time at which the concerned data block is invalidated in each cache uncertain. In non-bus-based systems, however, this approach is successful in maintaining strong coherence, provided the invalidate signal makes sure that the data is invalidated at a cache before moving on to the next processor (rather than just pushing the invalidate request into the buffer and moving on).

Universal read/write Buffer

In this approach, a shared bus controller resides between the local bus and the memory bus, where the local bus is connected to the private caches of all the processors. This technique supports multiple processors with the same or different coherence protocols, such as MSI, MESI and MOESI. The shared bus controller consists of the local bus controller, the data buffer and the address buffer. The description and functional details of each of these blocks are given below.

Data Buffer

The data buffer consists of three FIFOs:

- The non_block write-FIFO receives non-cacheable memory access data from the CPU. These requests do not require any cache eviction or line-fill cycles.

- The write-back FIFO is used for eviction cycles. If there is a cache miss and an eviction is required, the missing data is read from memory into the cache (line-fill cycle) just after the evicted data has been moved to the FIFO. The contents of this FIFO are written back to memory later on, so the CPU gets the new data while the eviction time is hidden from it.

- The read-FIFO is used for storing data from the non_block write FIFO, the write-back FIFO or memory.

Address Buffer

The address buffer also consists of three FIFOs:

- Non_block write FIFO - stores the addresses of non-blocking accesses.

- Write-back FIFO - stores the starting address of the eviction cycle.

- Line-fill FIFO - stores the starting address of the line-fill cycle.

The snoop logic is started whenever there is a read cycle to main memory. It compares the read request address with the entries of both the write-back FIFO and the line-fill FIFO. If there is a match, the HIT flag is set and the CPU gets the data immediately through an internal bypass path. As a result, stale data is never read and the CPU does not have to wait out the memory latency.
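The address comparison performed by the snoop logic can be sketched as follows. The 32-byte line size, the function names, and the representation of each address FIFO as a list of line starting addresses are assumptions for the illustration; the source does not specify them.

```python
LINE_SIZE = 32  # bytes per cache line (assumed for this sketch)


def line_start(addr):
    """Return the starting address of the cache line containing addr."""
    return addr - (addr % LINE_SIZE)


def snoop(read_addr, write_back_fifo, line_fill_fifo):
    """Return (hit, source) for a read request checked against both address FIFOs."""
    target = line_start(read_addr)
    if target in write_back_fifo:
        return True, "write-back"    # data can be bypassed from the write-back FIFO
    if target in line_fill_fifo:
        return True, "line-fill"     # data can be bypassed from the line-fill path
    return False, None               # no match: the read goes to main memory


hit_wb = snoop(0x104, [0x100], [])
hit_lf = snoop(0x240, [], [0x240])
miss = snoop(0x300, [0x100], [0x240])
```

A hit corresponds to the HIT flag being set, and the second element indicates which FIFO would supply the data over the bypass path.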

Local Bus Controller

The controller monitors the local bus for the status bits (M, O, E, S, I) and the INTERVENE# and SHARE# bits, and determines whether a cycle needs to access main memory or a private cache. For a main memory access, the controller informs the buffer using the WB (Write Back), LF (Line Fill) or O3 (owned) status signal.

The above outputs can be written in Boolean equations as shown below:

WB = RD * MOESI * INTV + WR * [STATUS=M]

LF = INTV

O3 = RD * MOESI * INTV

MOESI means the cycle is initiated by a cache using the MOESI protocol.

INTV is the INTERVENE signal, which is asserted when any cache provides valid data to another cache.

Algorithms

MOESI

The algorithm followed for the MOESI protocol is as follows:

- A cache in the M state provides data to another cache on a read hit initiated by that cache, and changes its state from M to O.

- If the same data is hit again, the cache in the O state is responsible for providing the data to the requesting cache.

- A write cycle needs a line fill if there is a cache miss and no INTERVENE.

MSI and MESI

The algorithms for the MSI and MESI protocols are as follows (the MSI and MESI protocols have no O state):

- A cache in the M state of the MSI or MESI protocol provides data to the other cache and to main memory, and changes its state from M to S.

- The local bus controller is responsible for changing the state from S to O for the MOESI protocol.

- The M state of MSI or MESI still changes to S.

- If a write cycle is initiated by another CPU (MSI or MESI):

  - If the MOESI cache has a valid line with M or O status, it sends the data line out onto the local bus and changes its state to I, because the line will be written by the other cache and become outdated.

  - If the status is E or S, main memory provides the data and no cache is involved in the data transfer. However, the cache with the MOESI protocol will be the owner of that particular line.

  - Force the status bit to be O instead of S.
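The snoop-side state changes listed above can be summarised as two small functions. This is a simplified sketch, not the controller's actual logic: the function names are hypothetical, the E to S downgrade on a remote read and the silent invalidation of clean copies on a remote write are standard-protocol assumptions that the text does not spell out, and the forcing of O instead of S by the local bus controller in mixed-protocol systems is not modelled.

```python
def on_remote_read(state, protocol):
    """Return (next_state, supplies_data) for a cache that snoops a read hit."""
    if protocol == "MOESI" and state in ("M", "O"):
        return "O", True          # the owner supplies the line and stays owner
    if protocol in ("MSI", "MESI") and state == "M":
        return "S", True          # data goes to the requester and main memory
    if state == "E":
        return "S", False         # assumption: standard E -> S downgrade
    return state, False           # S and I are unchanged; memory supplies data


def on_remote_write(state):
    """Return (next_state, supplies_data) for a cache that snoops a write."""
    if state in ("M", "O"):
        return "I", True          # send the line out on the bus, then invalidate
    return "I", False             # assumption: clean copies are simply dropped


moesi_first = on_remote_read("M", "MOESI")
moesi_again = on_remote_read("O", "MOESI")
mesi_read = on_remote_read("M", "MESI")
owner_written = on_remote_write("O")
```

The first two calls show the M to O transition followed by the O-state cache continuing to supply the data, while the MESI cache falls back to S after supplying the line.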

References

Memory Access Buffering In Multiprocessors, Michel Dubois, Christoph Scheurich, Faye Briggs

Multiprocessor Consistency and Coherence Memory System Architecture, Philip Koopman

Write buffer design for cache-coherent shared-memory multiprocessors, Fernaz Mounes-Toussi, David J. Lilja