CSC 456 Spring 2012/ch7 MN: Difference between revisions

Revision as of 18:31, 27 February 2012

Introduction

Although the move from uniprocessor to multiprocessor systems is not new, the world of parallel computers continues to change. Parallel computers, which started as high-end supercomputing systems for carrying out huge calculations, are now ubiquitous and are present in all mainstream architectures for servers, desktops, and embedded systems. Designing parallel architectures that meet programmers' needs and expectations more closely poses exciting and challenging problems. The three main areas under study today are cache coherence, memory consistency, and synchronization.

Cache Coherence Problem

In a single-processor (single-core) system, maintaining cache coherence is simple, but in a multiprocessor system it is not. Data can be present in any processor's cache, and the coherence protocol must ensure that every cached copy of a datum holds the same value. If it cannot keep all copies identical, it must flag the stale cache line to indicate that it is not up to date.

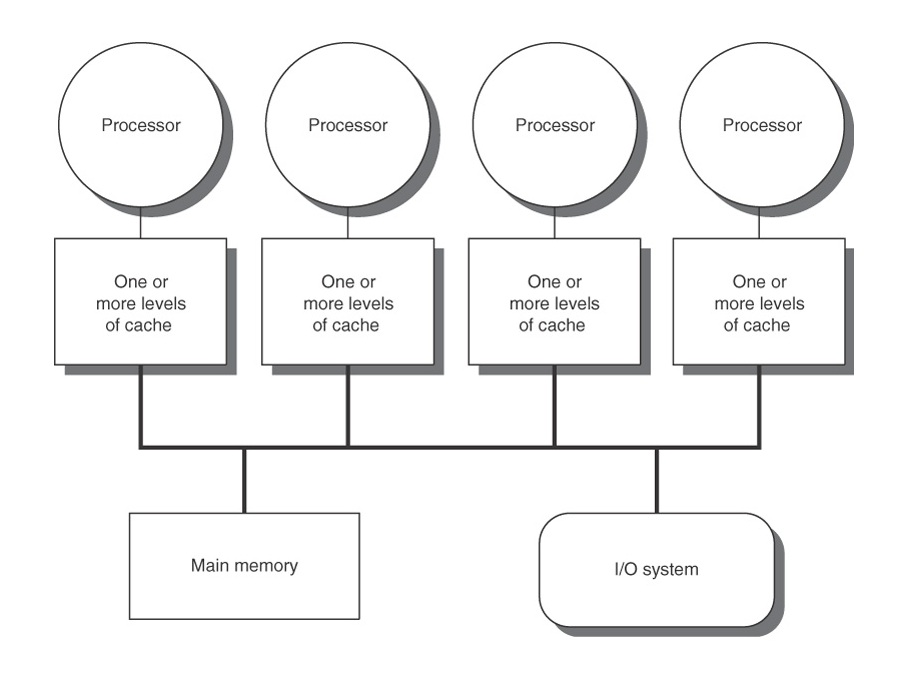

The figure shows a 4-processor shared-memory system in which each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. If P2 then reads the same memory location, M1 is also stored in P2's cache. Now, if P1 changes the value of M1, the two copies of the same data, residing in different caches, become different. When P2 next operates on M1, it uses the stale value stored in its cache. It is the responsibility of the cache coherence protocol to prevent this. Hardware support is needed to provide a coherent view of data in multiple caches; the requirement that a change made in one cache reach the other caches is known as the write propagation requirement.
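The scenario above can be sketched as a toy model. This is only an illustration under stated assumptions: the `Cache` class, the `memory` dictionary, and the values 10 and 42 are hypothetical, and the caches deliberately have no coherence protocol at all.

```python
# Toy model of private caches WITHOUT any coherence protocol.
# All names and values here are illustrative assumptions.
memory = {"M1": 10}

class Cache:
    """A private cache that never hears about writes made by other caches."""

    def __init__(self):
        self.lines = {}

    def read(self, addr):
        if addr not in self.lines:           # miss: fetch from main memory
            self.lines[addr] = memory[addr]
        return self.lines[addr]              # hit: return the local copy

    def write(self, addr, value):
        self.lines[addr] = value             # only the local copy changes

p1, p2 = Cache(), Cache()
p1.read("M1")             # P1 caches M1
p2.read("M1")             # P2 caches M1
p1.write("M1", 42)        # P1 changes its copy of M1
stale = p2.read("M1")     # P2 hits on its old copy
print(p1.read("M1"), stale)   # prints "42 10"
```

P2 never learns about P1's write, which is exactly the gap that write propagation is meant to close.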

One might think that the cache write policy can provide cache coherence, but it cannot. The write policy only controls how a change to a cached value is propagated to a lower-level cache or to main memory. It is not responsible for propagating changes to other caches.

Cache Coherence Protocols

The two basic methods to utilize the inter-core bus to notify other cores when a core changes something in its cache are update and invalidate. In the update method, if variable 'x' is modified by core 1, core 1 has to send the updated value of 'x' onto the inter-core bus. Each cache listens to the inter-core bus and if a cache sees a variable on the bus which it has a copy of, it will read the updated value. This ensures that all caches have the most up-to-date value of the variable. [2]

In the invalidate method, an invalidation message is sent onto the inter-core bus when a variable is changed. Each of the other caches reads this invalidation signal and marks its copy invalid; if its core later attempts to access that variable, the access results in a cache miss and the variable is read from main memory.

The update method results in a significant amount of traffic on the inter-core bus, because the update signal is sent onto the bus every time the variable is updated. The invalidation method only requires that an invalidation signal be sent the first time a variable is altered; this is why the invalidation method is the preferred method.
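The traffic difference can be made concrete with a rough count. The sketch below assumes the simplest case, in which one core writes the same variable repeatedly and no other core reads it in between. The function name `bus_messages` is hypothetical, and real protocols generate additional traffic (for example, misses after an invalidation) that is ignored here.

```python
def bus_messages(protocol, num_writes):
    """Count bus messages when one core writes x num_writes times.

    Simplifying assumption: the other cores hold a copy of x at the start
    and do not read x again between the writes.
    """
    others_have_copy = True
    messages = 0
    for _ in range(num_writes):
        if protocol == "update":
            messages += 1                 # every write broadcasts the new value
        elif protocol == "invalidate":
            if others_have_copy:
                messages += 1             # only the first write invalidates
                others_have_copy = False
        else:
            raise ValueError(f"unknown protocol: {protocol}")
    return messages

print(bus_messages("update", 100))       # prints 100
print(bus_messages("invalidate", 100))   # prints 1
```

If other cores re-read the variable between writes, each re-read costs the invalidation method a cache miss, so the gap narrows for data that is shared heavily.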

In order to improve cache coherency performance over the years, the following protocols were proposed:

- MSI

MSI stands for Modified, Shared, and Invalid, based on the three states that a line of cache can be in. The Modified state means that a variable in the cache has been modified and therefore has a different value than that found in main memory; the cache is responsible for writing the variable back to main memory. The Shared state means that the variable exists in at least one cache and is not modified; the cache can evict the variable without writing it back to the main memory. The Invalid state means that the value of the variable has been modified by another cache and this value is invalid; the cache must read a new value from main memory (or another cache).
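One common way to describe MSI is as a state machine per cache line. The sketch below is a simplified version under stated assumptions: the event names (`PrRd`, `PrWr` for the local processor's reads and writes; `BusRd`, `BusRdX` for requests snooped from other caches) and the `Flush` action are conventional textbook labels that do not appear in the text above, and real implementations differ in detail.

```python
# (current state, event) -> (next state, bus action or None)
# Event and action names are illustrative assumptions.
MSI = {
    ("I", "PrRd"):   ("S", "BusRd"),    # read miss: fetch a shared copy
    ("I", "PrWr"):   ("M", "BusRdX"),   # write miss: fetch and invalidate others
    ("I", "BusRd"):  ("I", None),
    ("I", "BusRdX"): ("I", None),
    ("S", "PrRd"):   ("S", None),       # read hit
    ("S", "PrWr"):   ("M", "BusRdX"),   # must invalidate the other copies first
    ("S", "BusRd"):  ("S", None),
    ("S", "BusRdX"): ("I", None),       # another cache is about to write
    ("M", "PrRd"):   ("M", None),
    ("M", "PrWr"):   ("M", None),       # write hit, no bus traffic
    ("M", "BusRd"):  ("S", "Flush"),    # supply the dirty data, keep a shared copy
    ("M", "BusRdX"): ("I", "Flush"),    # supply the dirty data, then invalidate
}

def run(state, events):
    """Apply a sequence of events to one cache line and record each step."""
    trace = []
    for event in events:
        state, action = MSI[(state, event)]
        trace.append((event, state, action))
    return state, trace

final, trace = run("I", ["PrRd", "PrWr", "BusRd", "BusRdX"])
print(final)   # prints I
```

The example line goes from Invalid to Shared on a read, to Modified on a write, back to Shared when another cache reads it, and to Invalid when another cache writes it.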

- MESI

MESI stands for Modified, Exclusive, Shared, and Invalid. The Modified and Invalid states are the same for this protocol as they are for the MSI protocol. This protocol introduces a new state: the Exclusive state. The Exclusive state means that the variable is in only this cache and its value matches the value in main memory. As a result, the Shared state now indicates that the variable may be held in more than one cache.
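The practical benefit of the Exclusive state shows up when a core reads a variable and then writes it while no other cache holds a copy. The following sketch counts bus transactions for that pattern. The function name and the transaction labels `BusRd` and `BusRdX` are assumptions used for illustration, and the model ignores implementation variants such as a separate upgrade transaction.

```python
def read_then_write_transactions(protocol, other_sharers):
    """Bus transactions for a read miss followed by a write to the same line."""
    if protocol not in ("MSI", "MESI"):
        raise ValueError(f"unknown protocol: {protocol}")
    transactions = ["BusRd"]                  # the read miss always uses the bus
    if protocol == "MESI" and not other_sharers:
        state = "E"                           # sole clean copy
    else:
        state = "S"                           # MSI cannot tell if it is alone
    if state == "S":
        transactions.append("BusRdX")         # must invalidate possible sharers
    # From E, the write moves the line to M without any bus transaction.
    return transactions

print(len(read_then_write_transactions("MSI", other_sharers=False)))    # prints 2
print(len(read_then_write_transactions("MESI", other_sharers=False)))   # prints 1
```

When other caches do hold the line, MESI behaves like MSI for this pattern, so the saving applies mainly to data that is private in practice.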

- MOSI

The MOSI protocol is identical to the MSI protocol except that it adds an Owned state. The Owned state means that the processor "owns" the variable and will provide the current value to other caches when requested (or at least will decide whether to provide it when asked). This is useful because another cache does not have to read the value from main memory and instead receives it from the owning cache much faster.

- MOESI

The MOESI protocol is a combination of the MESI and MOSI protocols: it has all five states, Modified, Owned, Exclusive, Shared, and Invalid.
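The five states can be summarized by a few properties of a cache line. The table below is a simplified sketch; the `LineState` class and its field names are hypothetical, and specific processors define the states with more nuance.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LineState:
    valid: bool                       # the line holds usable data
    responsible_for_writeback: bool   # this cache must write the data back on eviction
    others_may_hold_copy: bool        # other caches may also hold the line

# Simplified property table; field names are illustrative assumptions.
MOESI = {
    "M": LineState(valid=True,  responsible_for_writeback=True,  others_may_hold_copy=False),
    "O": LineState(valid=True,  responsible_for_writeback=True,  others_may_hold_copy=True),
    "E": LineState(valid=True,  responsible_for_writeback=False, others_may_hold_copy=False),
    "S": LineState(valid=True,  responsible_for_writeback=False, others_may_hold_copy=True),
    "I": LineState(valid=False, responsible_for_writeback=False, others_may_hold_copy=False),
}

def can_write_silently(state):
    """A write needs no bus transaction only if no other cache can hold a copy."""
    s = MOESI[state]
    return s.valid and not s.others_may_hold_copy

print([name for name in MOESI if can_write_silently(name)])   # prints ['M', 'E']
```

Read this way, Exclusive removes bus traffic for private data, while Owned lets a dirty line be shared without first writing it back to main memory.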

Memory Consistency Problem

Memory consistency deals with the ordering of memory operations (loads and stores) to different memory locations. In a single-processor system, code executes correctly if the compiler preserves the order of accesses to synchronization variables and other dependent variables. But in a shared-memory model with multiple processors, two threads could access the same shared data (such as a synchronization variable), and the output of the threads could change depending on which thread reaches the shared data first. If this happens, the program's output on a uniprocessor system and on a multiprocessor system can differ.

Maintaining program order is very important for memory consistency, but it comes at a cost in performance. The various memory consistency models trade off performance against ease of programming.
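To see how the result can depend on which thread reaches the shared data first, the sketch below enumerates every interleaving of two threads that each load a shared counter and then store the loaded value plus one. The thread names, the helper functions, and the counter itself are hypothetical; the model simply replays each schedule one operation at a time.

```python
def interleavings(a, b):
    """Yield every merge of lists a and b that keeps each list's own order."""
    if not a or not b:
        yield list(a) + list(b)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest

def run(schedule):
    """Replay one schedule and return the final value of the shared counter."""
    shared = {"counter": 0}
    regs = {"T1": 0, "T2": 0}
    for thread, op in schedule:
        if op == "load":
            regs[thread] = shared["counter"]
        else:  # "store": write back the previously loaded value plus one
            shared["counter"] = regs[thread] + 1
    return shared["counter"]

t1 = [("T1", "load"), ("T1", "store")]
t2 = [("T2", "load"), ("T2", "store")]
outcomes = {run(s) for s in interleavings(t1, t2)}
print(sorted(outcomes))   # prints [1, 2]
```

Run back to back, as on a uniprocessor without preemption between the load and the store, the two threads always produce 2; an interleaved schedule can lose one of the increments and produce 1.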

Memory Consistency Models

The memory consistency model of a shared-memory multiprocessor is a formal specification of how the memory system appears to the programmer. It eliminates the gap between the behavior expected by the programmer and the actual behavior supported by a system. Effectively, the consistency model places restrictions on the values that can be returned by a read in a shared-memory program execution.

In a single-processor system, maintaining memory consistency requires only that the compiler preserve program order when accessing synchronization variables. In a multiprocessor system, however, the accesses of one processor must appear to all other processors to execute in program order, at least partially.

Memory semantics in Uniprocessor systems

Uniprocessor languages use simple sequential semantics for memory operations, which allow the programmer to assume that all memory operations will occur one at a time in the sequential order specified by the program. Thus, the programmer can expect a read to return the value of the last write to the same location before it by the sequential program order. It is sufficient to only maintain uniprocessor data and control dependences. The compiler and hardware can freely reorder operations to different locations if the uniprocessor data and control dependences are respected. This enables compiler optimizations such as register allocation, code motion, and loop transformations, and hardware optimizations, such as pipelining, multiple issue, write buffer bypassing and forwarding, and lockup-free caches, all of which lead to overlapping and reordering of memory operations. [4]
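The reordering rule can be expressed as a small check on two adjacent memory operations. This is a deliberately narrow sketch: it considers only data dependences through memory, ignores control dependences and register dependences, and the `(kind, address)` encoding and the function name are assumptions made for illustration.

```python
def may_reorder(op1, op2):
    """True if swapping two adjacent memory operations preserves
    uniprocessor data dependences through memory.

    Each operation is a (kind, address) pair with kind "load" or "store".
    """
    kind1, addr1 = op1
    kind2, addr2 = op2
    if addr1 != addr2:
        return True                     # different locations: free to reorder
    # Same location: only two loads can be swapped safely.
    return kind1 == "load" and kind2 == "load"

print(may_reorder(("store", "a"), ("load", "b")))   # prints True
print(may_reorder(("store", "a"), ("load", "a")))   # prints False
```

The first case is the one that write buffer bypassing exploits: a later load to a different location may complete before an earlier store.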

Memory semantics in multiprocessor systems

The programmer's implicit expectations are:

- Memory accesses in a processor take place in program order.

- Each memory access is performed atomically.
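These two expectations together describe what is usually called sequential consistency. A small experiment shows what they rule out. In the sketch below, processor P1 runs `x = 1; r1 = y` and P2 runs `y = 1; r2 = x`, with `x` and `y` initially 0. The program, the tuple encoding of operations, and the helper functions are all hypothetical. Every interleaving that keeps each processor's program order is replayed with atomic accesses.

```python
def interleavings(a, b):
    """Yield every merge of lists a and b that keeps each list's own order."""
    if not a or not b:
        yield list(a) + list(b)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest

def run(schedule):
    """Replay a schedule with atomic accesses; return the pair (r1, r2)."""
    mem = {"x": 0, "y": 0}
    regs = {}
    for op, target, source in schedule:
        if op == "store":
            mem[target] = source          # source is a constant value
        else:  # "load"
            regs[target] = mem[source]    # source is a memory location
    return regs["r1"], regs["r2"]

p1 = [("store", "x", 1), ("load", "r1", "y")]
p2 = [("store", "y", 1), ("load", "r2", "x")]
sc_outcomes = {run(s) for s in interleavings(p1, p2)}
print(sorted(sc_outcomes))   # prints [(0, 1), (1, 0), (1, 1)]
```

The outcome `(0, 0)` never appears: if both expectations hold, at least one processor must observe the other's store.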

A strong consistency model that attempts uniprocessor-like consistency could cause a global bottleneck, costing performance. Thus, weak consistency models are deployed to improve performance. The advantages of such models are:

- They support out-of-order execution within individual CPUs

- They relax latency issues with near-simultaneous accesses by different CPUs

The following are the various consistency models; the programmer must take the memory consistency model into account to create correct software:
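The price of these advantages is that more outcomes become visible to the programmer. The sketch below uses a toy relaxation, not a model of any particular architecture: each processor may issue its two accesses in either order, which is legal from a uniprocessor point of view because they touch different locations. The program (P1: `x = 1; r1 = y`, P2: `y = 1; r2 = x`, both variables initially 0) and all helper names are hypothetical.

```python
from itertools import permutations

def interleavings(a, b):
    """Yield every merge of lists a and b that keeps each list's own order."""
    if not a or not b:
        yield list(a) + list(b)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest

def run(schedule):
    """Replay a schedule with atomic accesses; return the pair (r1, r2)."""
    mem = {"x": 0, "y": 0}
    regs = {}
    for op, target, source in schedule:
        if op == "store":
            mem[target] = source
        else:  # "load"
            regs[target] = mem[source]
    return regs["r1"], regs["r2"]

def relaxed_outcomes(p1, p2):
    """Outcomes when each processor may reorder its own independent accesses."""
    outcomes = set()
    for order1 in permutations(p1):
        for order2 in permutations(p2):
            for schedule in interleavings(list(order1), list(order2)):
                outcomes.add(run(schedule))
    return outcomes

p1 = [("store", "x", 1), ("load", "r1", "y")]
p2 = [("store", "y", 1), ("load", "r2", "x")]
relaxed = relaxed_outcomes(p1, p2)
print((0, 0) in relaxed)   # prints True
```

With the reordering allowed, both loads can run before either store, so both processors can read 0. A programmer who needs to exclude that outcome has to use the synchronization that the chosen consistency model provides.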

- linearizability (also known as strict or atomic consistency)

- sequential consistency

- causal consistency

- release consistency

- eventual consistency

- delta consistency

- PRAM consistency (also known as FIFO consistency)

- weak consistency

- vector-field consistency

- fork consistency

- serializability

- one-copy serializability

- entry consistency

Resources

1. http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2011/ch7_jp#Memory_Consistency_Problem

2. http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2011/ch7_ss#Cache_Coherence