CSC/ECE 506 Spring 2011/ch4a zz


== Introduction ==

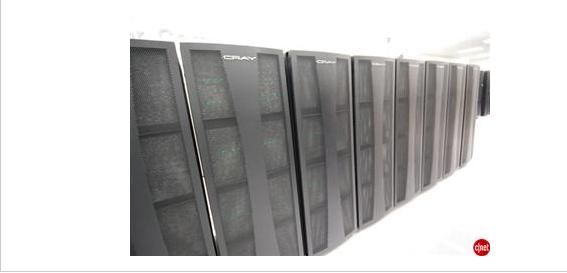

[[Image:Comp4.jpg|thumb|right|400px|The Cray X1E supercomputer at the Oak Ridge National Laboratory requires a separate array of discs to handle its storage needs. Here are some of those discs, which are arranged all around the supercomputer.]]

Revision as of 21:29, 13 February 2011


A supercomputer is generally considered to be at the cutting edge of processing capacity (number crunching) and computational speed at the time it is built, but given the pace of development, yesterday's supercomputers become today's regular servers. A state-of-the-art supercomputer is an extremely powerful computer capable of manipulating massive amounts of data in a relatively short amount of time. Supercomputers are very expensive and are deployed for specialized scientific and engineering applications that must handle very large databases or perform a great amount of computation, among them meteorology, animated graphics, fluid dynamics calculations, nuclear energy research, weapons simulation, and petroleum exploration.

The United States government has played a key role in the development and use of supercomputers. During World War II, the US Army paid for the construction of ENIAC in order to speed up the calculation of artillery tables. In the 30 years after World War II, the US government used high-performance computers to design nuclear weapons, break codes, and run other security-related applications.

The most powerful supercomputers introduced in the 1960s were designed primarily by Seymour Cray at Control Data Corporation (CDC). They led the market into the 1970s, until Cray left to form his own company, Cray Research. With Moore's Law still holding after more than thirty years, the rate at which mass-market technologies overtake today's cutting-edge machines continues to accelerate. The effects of this are manifest in the abrupt about-face we have witnessed in the underlying philosophy of building supercomputers.

During the 1970s and all the way through the mid-1980s, supercomputers were built using specialized custom vector processors working in parallel. Typically, this meant anywhere between four and sixteen CPUs. The next phase of supercomputer evolution saw the introduction of massively parallel processing and a drift away from vector-only processors. However, the processors used in this generation of supercomputers were still primarily highly specialized, purpose-specific units that were custom designed and fabricated.

That is no longer true. Silicon is no longer fabricated into incredibly expensive, highly specialized, purpose-specific microprocessors to serve as the heart and mind of supercomputers. Advances in mainstream technologies and economies of scale now dictate that “off-the-shelf” multicore server-class CPUs are assembled into large clusters, combined with very large quantities of storage (RAM and HDD), and connected by high-speed interconnects.

Instead of using specialized custom-built processors, supercomputers are now based on "off-the-shelf" server-class multicore microprocessors, such as the IBM PowerPC, Intel Itanium, or AMD x86-64. The modern supercomputer is firmly based on massively parallel processing: very large numbers of commodity processors are clustered and combined with a custom interconnect.

Until 2008 the fastest supercomputer in the world was the Blue Gene/L, completed at Lawrence Livermore National Laboratory in 2005 and upgraded in 2007. After the upgrade it uses 212,992 processors to execute potentially as many as 596 trillion mathematical operations per second. The computer is used for nuclear weapons safety and reliability analysis. A prototype of Blue Gene/L demonstrated in 2003 was air-cooled, unlike many high-performance machines that rely on water cooling and refrigeration, and used no more power than the average home. Also in 2003, scientists at Virginia Tech assembled a relatively low-cost supercomputer from 1,100 dual-processor Apple Macintoshes; at the time it was ranked as the third-fastest machine in the world.
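To put the Blue Gene/L figures in perspective, the quoted peak rate can be divided by the processor count to get a rough average per processor. The sketch below uses only the two numbers given in the text; the function name `per_processor_rate` is a hypothetical helper introduced for illustration, and the result is a back-of-the-envelope average of the peak figure, not a measured benchmark.

```python
# Figures quoted in the text for Blue Gene/L after its 2007 upgrade.
PROCESSORS = 212_992
PEAK_OPS_PER_SECOND = 596e12  # 596 trillion operations per second (peak)


def per_processor_rate(total_ops_per_second: float, processors: int) -> float:
    """Average operations per second contributed by a single processor.

    Hypothetical helper for illustration: a plain division that ignores
    interconnect overhead, memory limits, and load imbalance.
    """
    if processors <= 0:
        raise ValueError("processors must be positive")
    return total_ops_per_second / processors


rate = per_processor_rate(PEAK_OPS_PER_SECOND, PROCESSORS)
print(f"{rate / 1e9:.2f} billion operations per second per processor")
```

The result is roughly 2.8 billion operations per second per processor, which is in the range of an ordinary commodity CPU of that period. The machine's speed comes from the number of processors working in parallel rather than from exceptionally fast individual processors.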

Companies that build supercomputers include Silicon Graphics, Intel, IBM, Cray, Orion, and Aspen Systems.

Here is a list of the top 10 supercomputers as of June 2009.