CSC/ECE 506 Fall 2007/wiki1 4 a1: Difference between revisions

Revision as of 01:09, 6 September 2007

Architectural Trends

Feature size, the minimum size of a transistor or of a wire connecting transistors and other circuit components, characterizes an integrated circuit process. Feature sizes decreased from 10 microns in 1971 to 0.18 microns in 2001, and 0.13-micron processes are now in use. These advanced processes allow one billion transistors to be integrated on a single chip. This dramatic advance has enabled more complex and faster microprocessor architectures, which have evolved toward increasing parallelism, both instruction-level (ILP) and thread-level (TLP). As superscalar processors became prevalent, several architectures were proposed to exploit this parallelism: advanced superscalar, VLIW, superspeculative, simultaneous multithreading, chip multiprocessors, and so on. These techniques try to overcome the control and data hazards that grow with deep pipelining and multiple issue, and to maximize computing throughput through TLP.

[Fig. 1] MIPS R10000 Block Diagram (From Fig. 2 of [])

VLIW (Very Long Instruction Word)

VLIW is one way to exploit ILP through multiple issue. Multiple-issue processors come in two basic flavors: superscalar and VLIW. Superscalar processors issue a varying number of instructions per clock and are either statically or dynamically scheduled.

In contrast to superscalar, VLIW relies on static scheduling performed by the compiler. The compiler analyzes the program's instructions and groups multiple independent instructions into one large package. The first multiple-issue processors that required the instruction stream to be explicitly organized to avoid dependences used wide instructions with multiple operations per instruction. A VLIW processor issues a fixed number of instructions, formatted either as one large instruction or as a fixed instruction packet, with the parallelism among them explicitly indicated by the instruction itself. For example, consider a VLIW machine with two integer functional units, as in the MIPS R10000 (which is itself a superscalar rather than a VLIW design), and three operands per operation. The compiler can then generate one instruction that contains as many independent integer operations as there are integer units, each with its own operands.

E.g. Trimedia, i860
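
The grouping step described above can be sketched roughly as follows. This is a minimal illustration, not how any particular VLIW compiler works: the function name `pack_bundles`, the string form of the operations, and the hand-written dependence table are all assumptions made for the example. It greedily fills fixed-width bundles with operations whose inputs were produced in earlier bundles and pads unused slots with explicit no-ops.

```python
from typing import Dict, List, Set


def pack_bundles(ops: List[str], deps: Dict[str, Set[str]], width: int) -> List[List[str]]:
    """Greedily pack operations into fixed-width bundles (hypothetical helper).

    An operation may enter a bundle only if every operation it depends on
    was already placed in an EARLIER bundle, so all operations inside one
    bundle are independent of each other.
    """
    done: Set[str] = set()          # operations placed in earlier bundles
    remaining = list(ops)           # operations still waiting, in program order
    bundles: List[List[str]] = []
    while remaining:
        bundle: List[str] = []
        for op in remaining:
            if len(bundle) < width and deps.get(op, set()) <= done:
                bundle.append(op)
        if not bundle:
            raise ValueError("cyclic dependence among operations")
        done.update(bundle)
        remaining = [op for op in remaining if op not in bundle]
        # Unused issue slots are filled with explicit no-ops.
        bundles.append(bundle + ["nop"] * (width - len(bundle)))
    return bundles


# Illustrative input: "c" needs the results of "a" and "b".
ops = ["a = x + y", "b = z * 2", "c = a - b", "d = w + 1"]
deps = {"c = a - b": {"a = x + y", "b = z * 2"}}

for bundle in pack_bundles(ops, deps, width=2):
    print(" | ".join(bundle))
```

With a width of 2 the four operations fit into two full bundles; widening to 3 still needs two bundles because of the dependence, and the second bundle is mostly no-ops, which hints at why code density can suffer on wide VLIW machines.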

Multi-threading

Multi-threading exploits thread-level parallelism (TLP) within a single processor. It allows multiple threads to share the functional units of one processor in an overlapping manner. To make this sharing possible, the processor has to keep a separate copy of each thread's state: register file, PC, page table, and so on. For example, when a thread has to fetch data from slow system memory, a multithreaded processor switches to another program or thread that is ready to execute instead of stalling while the first thread waits for its data. Though this does not speed up any particular program or thread, it increases overall system throughput by reducing CPU idle time.

Simultaneous multithreading (SMT) is a form of multithreading that uses the resources of a multiple-issue, dynamically scheduled processor to exploit TLP at the same time as it exploits ILP, filling the issue slots of a single clock cycle with instructions from more than one thread.

[Fig. 2] Four different approaches to using issue slots in a superscalar processor (From Fig. 6.44 of [1])
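
The throughput argument for switching threads on a memory stall can be illustrated with a toy simulation. Everything here is an assumption made for the sketch: threads are strings of single-cycle instructions where `c` is ordinary computation and `m` is a load that misses, the miss latency is a made-up number, the core issues one instruction per cycle, and a thread switch costs nothing.

```python
from typing import List


def run_blocking(threads: List[str], miss_latency: int) -> int:
    """Cycles used when threads run one after another and the core stalls on every miss."""
    return sum(len(t) + t.count("m") * miss_latency for t in threads)


def run_switch_on_miss(threads: List[str], miss_latency: int) -> int:
    """Cycles used when the core issues from another ready thread while one waits on a miss."""
    cycle = 0
    pc = [0] * len(threads)         # next instruction index per thread
    ready_at = [0] * len(threads)   # first cycle at which each thread may issue again
    unfinished = sum(1 for t in threads if t)
    while unfinished:
        for tid, trace in enumerate(threads):
            if pc[tid] < len(trace) and ready_at[tid] <= cycle:
                op = trace[pc[tid]]
                pc[tid] += 1
                if op == "m":
                    # The thread cannot issue again until its data has arrived.
                    ready_at[tid] = cycle + 1 + miss_latency
                if pc[tid] == len(trace):
                    unfinished -= 1
                break               # at most one instruction issues per cycle
        cycle += 1
    # A trailing miss still has to complete before the work counts as done.
    return max([cycle] + ready_at)


threads = ["cmcc", "ccmc"]          # illustrative instruction traces
print(run_blocking(threads, miss_latency=3))
print(run_switch_on_miss(threads, miss_latency=3))
```

In this toy model neither thread finishes sooner than it would alone, but the core spends fewer cycles idle, which is the throughput effect described above.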

Multi-core

Multi-core CPUs have multiple CPU cores on a single die, connected to each other through a shared L2 or L3 cache, an on-die bus, or an on-die crossbar switch. All the cores on the die share the interconnect components that interface to other processors and to the rest of the system. These components include an FSB (Front Side Bus) interface, a memory controller, a cache-coherent link to other processors, and a non-coherent link to the southbridge and I/O devices. Multi-core chips do more work per clock cycle than their single-core counterparts and can therefore be designed to operate at lower frequencies. Since dynamic power consumption rises at least in proportion to clock frequency, multi-core architecture gives engineers a means to address the problem of runaway power and cooling requirements.

[Fig. 3] Intel® Pentium® processor Extreme Edition processor die (From [])
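
The power argument can be made concrete with the standard first-order model of dynamic power, P = C * V^2 * f per core. The sketch below is only illustrative: the frequencies and supply voltages are invented numbers, the capacitance is normalized to 1, static (leakage) power is ignored, and the workload is assumed to parallelize perfectly across cores.

```python
import math


def dynamic_power(cores: int, freq_ghz: float, volts: float, cap: float = 1.0) -> float:
    """First-order dynamic power of a chip: cores * C * V^2 * f (arbitrary units)."""
    return cores * cap * volts ** 2 * freq_ghz


def ideal_throughput(cores: int, freq_ghz: float) -> float:
    """Aggregate work rate, assuming perfect scaling across cores (an assumption)."""
    return cores * freq_ghz


# Hypothetical operating points, chosen only for illustration.
single = {"cores": 1, "freq_ghz": 3.0, "volts": 1.2}
dual = {"cores": 2, "freq_ghz": 1.5, "volts": 1.0}

for name, cfg in (("single-core", single), ("dual-core", dual)):
    power = dynamic_power(**cfg)
    work = ideal_throughput(cfg["cores"], cfg["freq_ghz"])
    print(f"{name}: throughput={work:.2f}, dynamic power={power:.2f}")
```

Under these assumptions both chips deliver the same aggregate throughput, but the dual-core design runs at a lower voltage and so uses less dynamic power. Real workloads rarely scale perfectly, so the benefit in practice depends on how much thread-level parallelism is available.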

Speculative Execution

As processors try to extract more ILP, managing control dependences becomes both more important and more of a burden. To avoid pipeline stalls, branch prediction is applied at the instruction fetch stage. However, for a processor that executes multiple instructions per clock, accurate prediction alone is not sufficient. A wide-issue processor may need to execute a branch every clock cycle to attain maximum performance. Under speculative execution, instructions are fetched, issued, and executed as if branch predictions were always correct. When a misprediction occurs, a recovery mechanism discards the speculative work and restores the correct state.

E.g. PowerPC 603/604/G3/G4, MIPS R10000/R12000, Intel Pentium II/III/4, Alpha 21264, AMD K5/K6/Athlon

One problem with an instruction pipeline is that there is a class of instructions that must make their way entirely through the pipeline before execution can continue. In particular, a conditional branch needs the result of some prior instruction before it is known which side of the branch to run. For instance, an instruction that says "if x is larger than 5 then do this, otherwise do that" has to wait for the value of x to be known before the processor knows whether to fetch the instructions for "this" or for "that".

For a small four-deep pipeline this means a delay of up to three cycles, since the decode can still happen. But as clock speeds increase, pipeline depth increases with them, and modern processors may have 20 stages or more. In this case the CPU is stalled for the vast majority of its cycles every time one of these instructions is encountered.
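
The cost of these stalls can be estimated with simple arithmetic. The sketch below assumes a base rate of one instruction per cycle, that every conditional branch stalls the pipeline for depth minus one cycles, and that one instruction in five is a branch; the branch fraction is an assumed figure, not one taken from the text above.

```python
import math


def effective_cpi(branch_fraction: float, stall_cycles: int, base_cpi: float = 1.0) -> float:
    """Average cycles per instruction when every branch stalls for `stall_cycles`."""
    return base_cpi + branch_fraction * stall_cycles


def stalled_share(branch_fraction: float, stall_cycles: int) -> float:
    """Fraction of all cycles that are spent stalled on branches."""
    stalls = branch_fraction * stall_cycles
    return stalls / effective_cpi(branch_fraction, stall_cycles)


BRANCH_FRACTION = 0.2  # assumption: one instruction in five is a conditional branch

for depth in (4, 20):
    stall = depth - 1  # cycles lost waiting for the branch to resolve
    print(depth, effective_cpi(BRANCH_FRACTION, stall), stalled_share(BRANCH_FRACTION, stall))
```

With these assumed numbers, the 20-stage pipeline spends most of its cycles waiting on branches when it has no prediction at all, which is the motivation for the techniques that follow.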

The solution, or one of them, is speculative execution driven by branch prediction. In practice, one side of a branch is usually taken much more often than the other, so it is often correct to simply go ahead and say "x will likely be smaller than five, start processing that". If the prediction turns out to be correct, a large amount of time is saved; if it is wrong, the speculatively executed work is discarded. Modern designs have rather complex prediction systems, which watch the results of past branches to predict future ones with greater accuracy.
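
One simple way to "watch the results of past branches" is a two-bit saturating counter, a textbook scheme shown here only as an illustration. The class name, the starting state, and the loop-style outcome trace are assumptions for this sketch, and nothing here is meant to describe the predictor of any processor listed above.

```python
from typing import List


class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self) -> None:
        self.counter = 2  # assumed starting state: weakly taken

    def predict(self) -> bool:
        return self.counter >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def accuracy(outcomes: List[bool]) -> float:
    """Fraction of branch outcomes that the predictor guesses correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)


# A loop branch: taken nine times, then falls through once; the loop runs three times.
loop_trace = ([True] * 9 + [False]) * 3
print(accuracy(loop_trace))
```

Because a single fall-through only moves the counter from strongly to weakly taken, the predictor mispredicts just the loop exit each time and is right on every other iteration.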

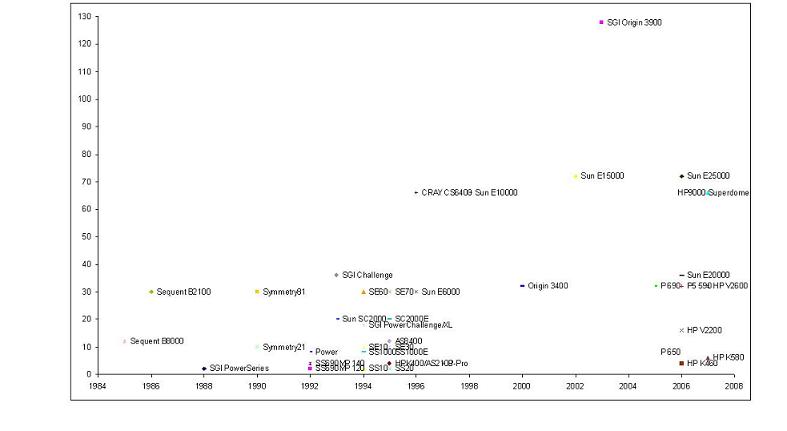

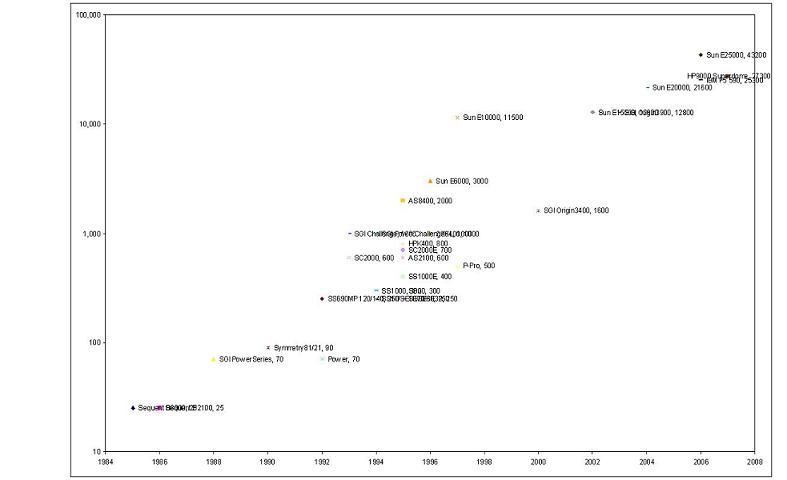

Updated Figure 1.8 & Figure 1.9

References

[1] John L. Hennessy, David A. Patterson, "Computer Architecture: A Quantitative Approach", 3rd Ed., Morgan Kaufmann, CA, USA

[2] C.E. Kozyrakis, D.A. Patterson, "A New Direction for Computer Architecture Research", Computer, Volume 31, Issue 11, IEEE, Nov. 1998, pp. 24-32

[3] K.C. Yeager, "The MIPS R10000 Superscalar Microprocessor", IEEE Micro, Volume 16, Issue 2, Apr. 1996, pp. 28-41

[4] Geoff Koch, "Discovering Multi-Core: Extending the Benefits of Moore's Law", Technology@Intel Magazine, Jul. 2005, pp. 1-6

[5] Richard Low, "Microprocessor Trends: Multicore, Memory, and Power Developments", Embedded Computing Design, Sep. 2005

[6] Artur Klauser, "Trends in High-Performance Microprocessor Design", Telematik 1, 2001