E1929 Visualizations for Instructors


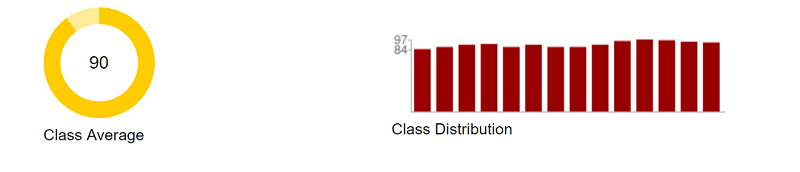

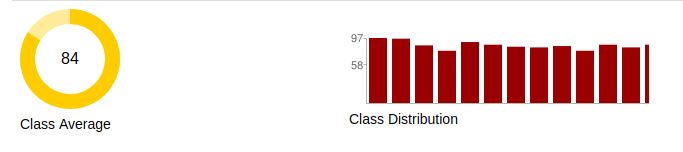

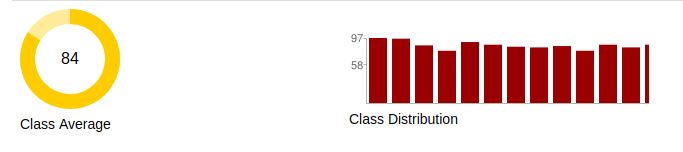

Figure 1: Existing assignment grade charts. The existing charts show the average grade for the assignment and the grade distribution. During this project, we aimed to add a third chart showing the distribution for specific rubric criteria within the assignment.


Revision as of 01:24, 24 April 2019

Introduction

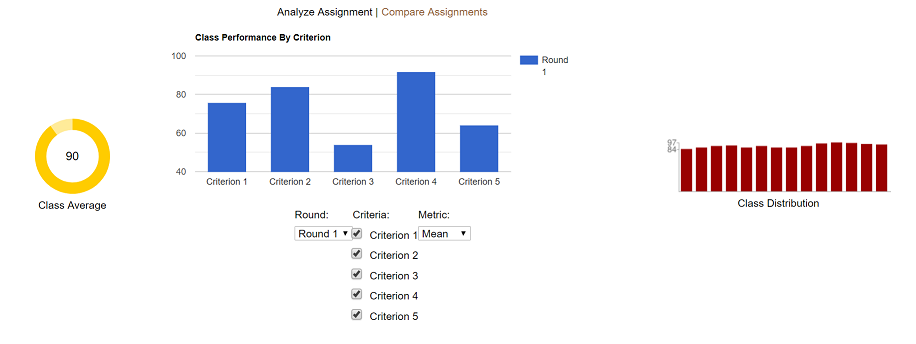

This Expertiza project, completed in spring 2019, aimed to improve the charts instructors use to view grade statistics for a given assignment. Two charts already existed: one showing the grade distribution across all teams, and another showing the class average grade. Our goal was to add a third chart, built with Google Charts, showing grade statistics for each rubric criterion within that assignment. The chart is interactive, so the instructor can toggle which rubric criteria, and which statistics, to display.
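
The per-criterion statistics behind such a chart can be sketched in Ruby, the language of the Expertiza codebase. This is a minimal illustration under stated assumptions, not the project's actual code: the input shape (a hash from a criterion label to an array of scores, with `nil` for a missing score) and the method name `criterion_stats` are hypothetical.

```ruby
# Hypothetical input: criterion label => array of scores (nil marks a missing score).
def criterion_stats(scores_by_criterion)
  scores_by_criterion.each_with_object({}) do |(criterion, scores), stats|
    values = scores.compact.sort            # ignore missing scores so no null bars appear
    next if values.empty?                   # a criterion with no scores gets no entry
    mid = values.length / 2
    median = values.length.odd? ? values[mid].to_f : (values[mid - 1] + values[mid]) / 2.0
    stats[criterion] = { mean: values.sum.to_f / values.length, median: median }
  end
end

scores = {
  "Organization" => [4, 5, 3, nil],
  "Clarity"      => [2, 3, 3, 4],
  "Style"        => [nil]
}
criterion_stats(scores).each do |criterion, s|
  puts "#{criterion}: mean #{s[:mean]}, median #{s[:median]}"
end
```

Dropping `nil` scores before computing keeps criteria with no data off the chart entirely rather than drawing an empty bar.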

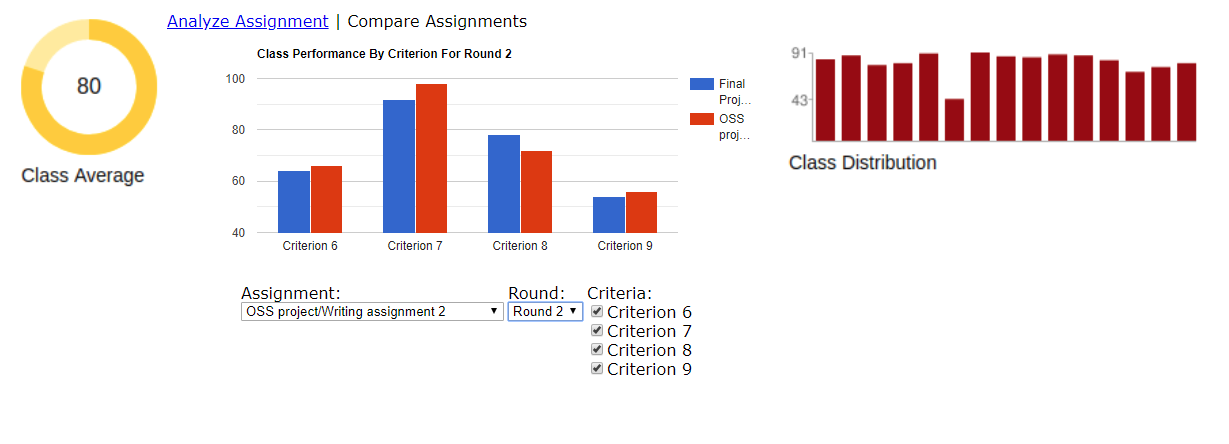

Along with displaying the rubric criteria for a single assignment, we aimed to add a feature that lets instructors compare compatible rubric grades from two different assignments. This functionality is also interactive, allowing the instructor to choose which statistics appear in the chart.
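
A comparison like this only makes sense for criteria that both assignments share. The sketch below assumes, hypothetically, that two criteria are compatible when their text matches exactly; `compare_assignments`, `mean`, and the sample data are illustrative names, not Expertiza's.

```ruby
# Mean of the non-missing scores, or nil when there are none.
def mean(values)
  present = values.compact
  present.empty? ? nil : present.sum.to_f / present.length
end

# Assumption: criteria are "compatible" when their text matches exactly.
# Returns [criterion, current mean, other mean] rows in the current assignment's order.
def compare_assignments(current, other)
  shared = current.keys & other.keys
  shared.map { |criterion| [criterion, mean(current[criterion]), mean(other[criterion])] }
end

final_report = { "Design" => [4, 5], "Testing" => [3, 3], "Demo" => [5] }
preliminary  = { "Design" => [3, 3], "Testing" => [2, 4] }

compare_assignments(final_report, preliminary).each do |criterion, now, before|
  puts "#{criterion}: #{now} vs #{before}"
end
```

Criteria that appear in only one assignment ("Demo" here) are left out, since there is nothing to compare them against.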

By viewing our new visualization of rubric grades, an instructor can better judge which aspects of the course are well understood and which may need more attention.

Problem Statement

E1929 - Visualizations for Instructors - Class performance on specific rubrics

An interactive visualization or table that shows how a class performed on selected rubric criteria would be immensely helpful. It would show the instructor which areas need more attention. For example, could you create a graph showing the range and clustering of scores for the 5 main rubric criteria? And, if these same 5 criteria are used in the preliminary and final assignments, it would be nice to be able to compare performance between assignments in a visualization that shows the class results on 3 separate artifacts.
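
One simple way to show the range and clustering of scores is to report each criterion's minimum and maximum together with a count of how often each score was given. The sketch below uses that frequency count in place of a formal clustering algorithm; `range_and_clusters` is a hypothetical helper, and `tally` needs Ruby 2.7 or later.

```ruby
# Summarize one criterion: the score range plus how many times each score was given.
# A frequency count stands in for "clustering" here; it is not a clustering algorithm.
def range_and_clusters(scores)
  values = scores.compact
  return nil if values.empty?

  { min: values.min, max: values.max, counts: values.tally.sort.to_h }
end

summary = range_and_clusters([5, 4, 4, 3, nil, 5, 4])
puts "range #{summary[:min]}..#{summary[:max]}"
summary[:counts].each { |score, n| puts "#{score}: #{'#' * n}" }
```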

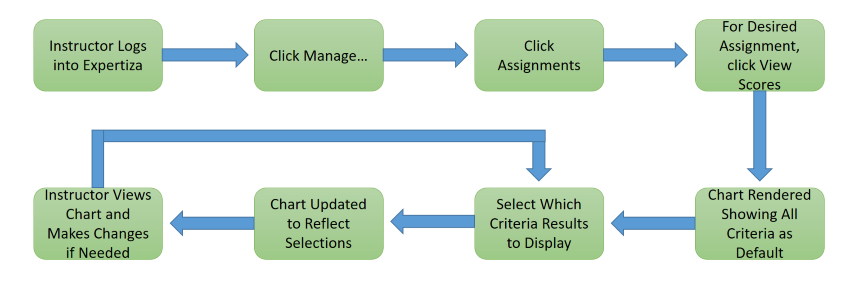

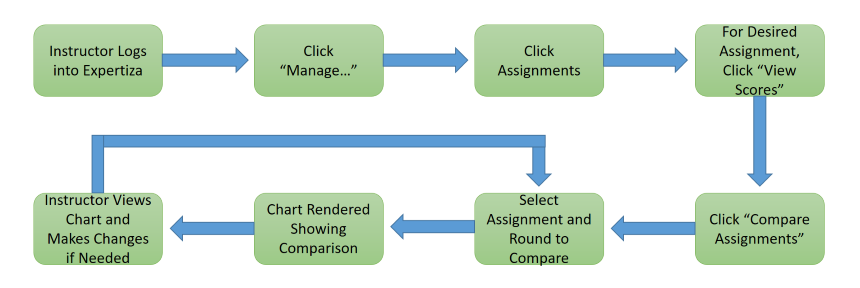

Webpage: log in as instructor -> Manage -> Assignments -> View scores

Old Charts

Accomplished

Executed on Plans

Extra Issues Fixed

Testing

We performed automated tests using the RSpec framework and Capybara. In addition, we performed manual tests of the user interface (UI) by using the app.

RSpec Framework Tests

The RSpec testing framework was used for automated verification of the models in the Expertiza web application feature set. Since this feature deals with visualizations (charts) that are closely tied to Active Record models, we seeded the test database with known data via RSpec. These changes were automatically rolled back once testing was complete.

Capybara Tests

Another automated testing framework we used is Capybara, which runs browser-style tests that simulate a user clicking through the site. We used it to verify that our chart object was present on the page and contained the seeded data we had loaded.

UI Tests

In addition to the automated tests above, we also manually tested the newly added features, including:


- Chart is displaying correctly

- Bars are showing up where expected

- Bar annotations are showing the expected value

- Criteria labels belong to the correct bar and display the correct values

- Hover text is displaying the correct values

- Null values are not present on the chart

- Correct colors are used for the multi-round view

- Show Labels checkbox works as expected

- Round criteria are displayed correctly

- Round dropdown menu shows all rounds for the assignment

- Selecting a round changes the criteria checkboxes

- All checkboxes are displayed with appropriate text

- Checkboxes correctly remove or add criterion bars to the chart

Shortcomings

Decisions

Documentation

Team

Mentor: Akshay Ravichandran

Team members:

- Chris Adkins

- Bobby DeMarco

- George Hugh

- John Warren

Old Introduction

It is the desire of all instructors to emphasize course material that students have trouble understanding and de-emphasize the material that students readily grasp. One method instructors use is the creation of questionnaires, or rubrics. A rubric consists of a number of questions, or criteria, that give instructors a glimpse into student understanding of course materials. Using rubrics and associating them with a course assignment allows instructors to fine-tune their lesson plans to their students' understanding.

Creating assignments and their associated rubrics can be a daunting task, one that online platforms can make easier. Expertiza is one such online assignment grading platform. Within Expertiza, instructors create courses and assignments. For each assignment, instructors create one or more peer review rubrics for students to complete. After each round of rubrics, students can submit changes to their assignment based on those rubrics; each submission and its rubric together are considered a round. Hence, an assignment can have multiple rounds, each associated with a rubric.
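
The relationships described above (an assignment has rounds, and each round is tied to a rubric made of criteria) can be modelled with plain Ruby Structs. These Structs are hypothetical stand-ins for illustration only and do not reflect Expertiza's actual ActiveRecord models or schema.

```ruby
# Hypothetical, simplified stand-ins for the relationships described above.
# These are not Expertiza's actual ActiveRecord models.
Criterion  = Struct.new(:text, :max_score)
Rubric     = Struct.new(:name, :criteria)
Round      = Struct.new(:number, :rubric)
Assignment = Struct.new(:name, :rounds) do
  # Criteria reviewed in the given round, or an empty list if that round does not exist.
  def criteria_for(round_number)
    round = rounds.find { |r| r.number == round_number }
    round ? round.rubric.criteria : []
  end
end

round1_rubric = Rubric.new("Round 1 review", [Criterion.new("Organization", 5), Criterion.new("Clarity", 5)])
round2_rubric = Rubric.new("Round 2 review", [Criterion.new("Revisions made", 5)])
project = Assignment.new("Program 1", [Round.new(1, round1_rubric), Round.new(2, round2_rubric)])

puts project.criteria_for(1).map(&:text).join(", ")
```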

Instructors use the association between an assignment and its submitted rubric(s) to understand which subjects need more focus. For example, a low average score on a particular rubric question, or criterion, can indicate that the class has trouble with a particular part of the assignment. Currently, instructors using Expertiza view assignment scores on one page but must visit a completely separate page to view rubric scores. This separation hinders the instructor's ability to fully understand the associations between assignment scores and rubric scores, which in turn reduces the likelihood that instructors will use this information to fine-tune their course material.
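
As an illustration of that reasoning, the sketch below flags criteria whose average falls below a fraction of the maximum score. The 70% default threshold and the helper name `low_criteria` are assumptions chosen for the example, not values used by Expertiza.

```ruby
# Assumption: a criterion needs attention when its mean is below `threshold` times the maximum score.
def low_criteria(scores_by_criterion, max_score:, threshold: 0.7)
  cutoff = threshold * max_score
  flagged = scores_by_criterion.select do |_criterion, scores|
    values = scores.compact
    !values.empty? && values.sum.to_f / values.length < cutoff
  end
  flagged.keys
end

scores = { "Organization" => [5, 4, 5], "Testing" => [2, 3, 3], "Style" => [nil] }
puts low_criteria(scores, max_score: 5).inspect
```

Criteria with no scores are never flagged, since an empty average says nothing about student understanding.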

This project proposes to overcome this weakness in Expertiza by integrating the display of assignment scores and rubric scores onto a single page. This integration will allow instructors to more easily associate low average rubric scores with assignment scores. Being able to visualize assignment scores and rubric scores in a single location will reduce instructors' workload when analyzing the scores. This, in turn, will increase the likelihood that instructors will adjust their lesson plans to reflect the students' understanding of the course material.

Old Proposed Changes

To help instructors understand the relationship between assignment scores and rubric scores, this project proposes two types of visualizations. The first type allows instructors to examine the rubric statistics for a single assignment. The second type allows instructors to compare the rubric statistics between two different assignments when the criteria for each assignment are the same.

Existing Views

In both cases, the project will add rubric statistics to the assignment grade view. The current assignment grade view consists of a header containing grade statistics followed by a table of the individual grades. The left side of the header shows the average assignment grade as a circle, while the right side shows the assignment grade distribution. Figure 1 shows the current header on the assignment grade view page.

The changes proposed by this project integrate rubric statistics into the above assignment grade view. This integration allows two types of visualizations. The first allows the instructor to display rubric statistics within a single assignment. The second allows the instructor to compare rubric statistics between different assignments in the same course.

Proposed Assignment Grade Visualization

This project proposes the integration of rubric statistic visualization for both single assignments and multiple assignments into the assignment grade view. The integration occurs with a single change to the header of the assignment grade view, and retains the list of student grades. The following HTML mock-up shows the proposed changes to the header. The list of student grades is not changed and therefore not shown.

Interactive Example

https://jwarren3.github.io/expertiza/tabs.html

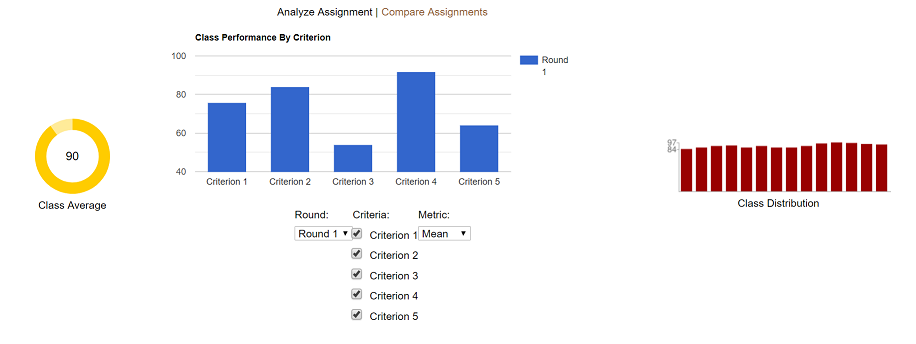

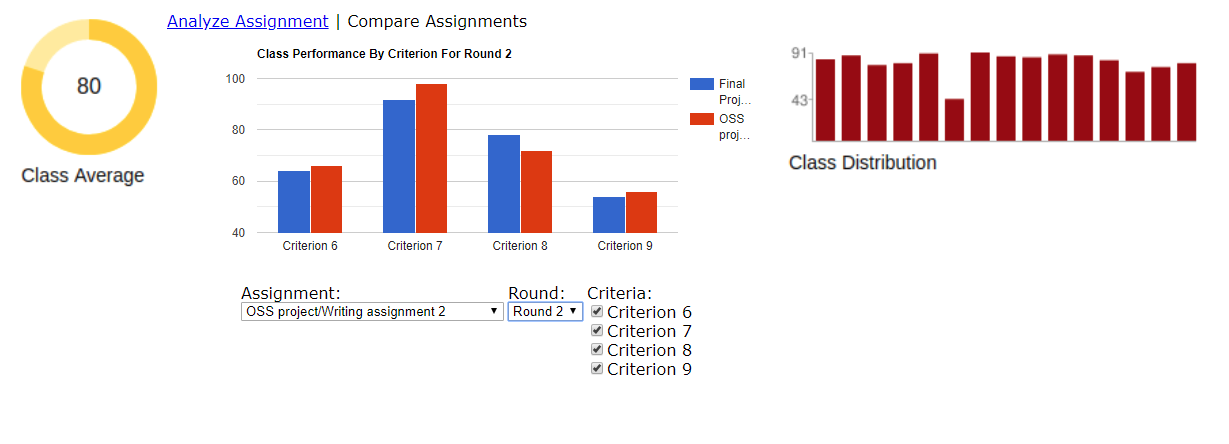

The upper part of the rubric statistic visualization displays a set of tabs that allow the instructor to analyze the rubric within a single assignment or to compare rubric statistics between multiple assignments. The middle part displays the statistics for each selected criterion as a bar graph, with each bar's height shown as a percentage of that criterion's maximum value. The bottom part displays a set of options that allow the instructor to filter the statistics shown in the bar graph. The left side (assignment average) and right side (assignment distribution) of the assignment grade view header are unchanged.
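
Normalizing to a percentage matters when criteria have different maximum scores: a mean of 3.5 on a 5-point criterion and a mean of 7 on a 10-point criterion both become 70%. A minimal sketch of that conversion, with `bar_height_percent` as a hypothetical helper:

```ruby
# Bar height as a percentage of the criterion's maximum score,
# so criteria with different scales can share one axis.
def bar_height_percent(value, max_score)
  raise ArgumentError, "max_score must be positive" unless max_score.positive?

  (100.0 * value / max_score).round(1)
end

p bar_height_percent(3.5, 5)  # 70.0
p bar_height_percent(7, 10)   # 70.0
```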

In the single-assignment mock-up, there are two rounds of rubrics for the students to fill out. The rubric in round 1 consists of five criteria, while the rubric in round 2 consists of four criteria. The round of interest is selected using the drop-down menu on the left side. The criteria shown in the bar graph are selected using radio buttons next to each criterion. Finally, the type of statistic shown is selected using a drop-down menu on the right side of the options. Currently, the statistic is limited to either mean or median. Hovering the mouse over a bar in the bar graph shows the numerical value of the chosen statistic.
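
The filtering the mock-up describes (pick a round, keep only the selected criteria, then apply the chosen statistic) can be sketched as follows. The data shape, `chart_values`, and the `STATISTICS` lookup are assumptions for illustration. Since the design favors client-side rendering, the real filtering would likely happen in the browser; this Ruby version only illustrates the logic. `filter_map` needs Ruby 2.7 or later.

```ruby
# Hypothetical statistic helpers selectable from the "statistic" drop-down.
def mean(values)
  values.sum.to_f / values.length
end

def median(values)
  sorted = values.sort
  mid = sorted.length / 2
  sorted.length.odd? ? sorted[mid].to_f : (sorted[mid - 1] + sorted[mid]) / 2.0
end

STATISTICS = { "mean" => method(:mean), "median" => method(:median) }.freeze

# Returns [criterion, value] pairs for the chosen round, limited to the selected
# criteria and skipping criteria with no scores (so no null bars are drawn).
def chart_values(scores_by_round, round:, selected:, statistic:)
  stat = STATISTICS.fetch(statistic)
  (scores_by_round[round] || {}).filter_map do |criterion, scores|
    values = scores.compact
    next if values.empty? || !selected.include?(criterion)

    [criterion, stat.call(values)]
  end
end

scores_by_round = {
  1 => { "Organization" => [4, 5, 3], "Clarity" => [2, 4, nil], "Style" => [5, 5, 4] },
  2 => { "Revisions made" => [3, 4] }
}
p chart_values(scores_by_round, round: 1, selected: %w[Organization Clarity], statistic: "median")
```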

Visualizing the rubric statistics within a single assignment starts by selecting the left tab titled "Analyze Assignment". By default, the chart displays the mean of all criteria from the first round. The round, the type of statistic, and the particular criteria are controlled by the drop-down menus and radio buttons below the bar graph.

Visualizing the rubric statistics between assignments starts by selecting the right tab titled "Compare Assignments". By default, the chart compares all round-one criteria of the current assignment with those of another assignment in the same course; the default assignment for comparison is the chronologically earliest one. The current assignment is shown in red, while the assignment it is compared against is shown in blue.
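
Choosing that default can be sketched as taking the earliest assignment in the course other than the one being viewed. Both the `created_at` ordering key and the exclusion of the current assignment are assumptions; the description above does not say which date field defines "chronologically earliest". `CourseAssignment` and `default_comparison` are hypothetical names.

```ruby
require "date"

# Hypothetical record for an assignment in the same course; `created_at` is an assumed ordering key.
CourseAssignment = Struct.new(:name, :created_at)

# Series colors as described above.
SERIES_COLORS = { current: "red", compared: "blue" }.freeze

# Default comparison target: the earliest assignment other than the one being viewed
# (nil when the course has no other assignment).
def default_comparison(current, course_assignments)
  course_assignments.reject { |a| a.name == current.name }.min_by(&:created_at)
end

program1 = CourseAssignment.new("Program 1", Date.new(2019, 1, 15))
program2 = CourseAssignment.new("Program 2", Date.new(2019, 2, 20))
final    = CourseAssignment.new("Final project", Date.new(2019, 4, 1))
course   = [final, program2, program1]

target = default_comparison(final, course)
puts "#{final.name} (#{SERIES_COLORS[:current]}) vs #{target.name} (#{SERIES_COLORS[:compared]})"
```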

New View Examples

Old Project Design

During the integration of rubric statistics and assignment grade statistics, this project expects to change controller files, view files and JavaScript files. For methods that are modified by this project, the team will also ensure that Code Climate (an automated code quality checker) issues are resolved. This project will also refactor changed methods to reflect common Ruby and Rails practices.

Proposed Code Changes

The number of methods and files to be changed during the integration of rubric statistics and assignment grade statistics is minimal. The team proposes changing a controller file, a view file, a JavaScript file, and an RSpec file. The team does not expect to change the database or the model files. Other files will be changed as required to support the integration. A preliminary list of files to be changed is given below:

- grades_controller.rb

- grading.js

- _participant_charts.html.erb

- grades_controllers_spec.rb

Design Flow

Tools and Design Choices

This project proposes using a third-party JavaScript library for rendering the rubric statistics, with two requirements for that library. The first requirement is that it performs client-side rendering. A client-side rendering library minimizes the number of server interactions as the instructor changes which rubric statistics to display, which in turn keeps Expertiza's response time low.
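
One way to keep interactions client-side is for the server to compute all statistics once and hand them to the page as JSON, in the header-row-plus-data-rows array shape that Google Charts' `google.visualization.arrayToDataTable` accepts. The sketch below shows only the serialization step in Ruby; `chart_rows` and the stats hash format are hypothetical.

```ruby
require "json"

# Build the 2-D array shape accepted by google.visualization.arrayToDataTable:
# a header row of column labels followed by one row per criterion.
# The stats hash format is a hypothetical assumption.
def chart_rows(stats)
  header = ["Criterion", "Mean", "Median"]
  rows = stats.map { |criterion, s| [criterion, s[:mean], s[:median]] }
  [header, *rows]
end

stats = {
  "Organization" => { mean: 4.0, median: 4.0 },
  "Clarity"      => { mean: 3.0, median: 3.0 }
}
payload = chart_rows(stats).to_json
puts payload
```

Embedding such a payload once when the page loads would let criterion and statistic toggles redraw the chart in the browser without another request to the server.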

The second requirement for the visualization library is compatibility with current Expertiza graphics. The compatibility requirement implies that the visualization library is one that is already being used in Expertiza or a visualization library that could be used to render the current graphics.

Examples of client-side visualization libraries are given below:

- Highcharts

- RaphaelJS

- dygraphs

- Protovis

- Grafico

- Chartkick

- VanCharts

- Google Charts

Old Test Plan

The team plans to perform automated tests using the RSpec and Capybara frameworks. In addition, we will perform manual tests of the user interface (UI) by using the app.

RSpec Framework Tests

The RSpec testing framework will be used for automated verification of the models in the Expertiza Rails web application feature set. Since this feature deals with visualizations (charts) that are closely tied to Active Record models, we will seed the test database with known data via RSpec. These changes will be automatically rolled back once testing is complete.

Capybara Tests

Another automated testing framework that will be used is Capybara, which runs browser-style tests that simulate a user clicking through the site. We will use it to verify that our chart object is present on the page and contains the seeded data we have loaded.

UI Tests

In addition to the automated tests above, we will also manually test the newly added features, including:

- Chart is displaying correctly

- Bars are showing up where expected

- Bar annotations are showing the expected value

- Criteria labels belong to the correct bar and display the correct values

- Hover text is displaying the correct values

- Null values are not present on the chart

- Correct colors are used for the multi-round view

- Show Labels checkbox works as expected

- Round criteria are displayed correctly

- Round dropdown menu shows all rounds for the assignment

- Selecting a round changes the criteria checkboxes

- All checkboxes are displayed with appropriate text

- Checkboxes correctly remove or add criterion bars to the chart