M1900: Implement missing WebAudio node support

| Line 214: | Line 214: | ||

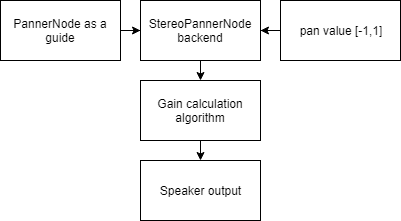

* Implement the backend for StereoPannerNode in the media crate by creating a new node implementation using PannerNode as a guide. The processing algorithm is described in the specification. Create a runnable example based on the example for PannerNode. | * Implement the backend for StereoPannerNode in the media crate by creating a new node implementation using PannerNode as a guide. The processing algorithm is described in the specification. Create a runnable example based on the example for PannerNode. | ||

** The goal for the StereoPannerNode implementation is that the user should be able to specify a pan value between -1 (full left pan) and 1 (full right pan) and the StereoPannerNode will adjust the gain on each of the two speakers accordingly. | ** The goal for the StereoPannerNode implementation is that the user should be able to specify a pan value between -1 (full left pan) and 1 (full right pan) and the StereoPannerNode will adjust the gain on each of the two speakers accordingly. | ||

** We will follow the example in servo/media/examples/panner.rs to create our runnable example. | |||

| Line 219: | Line 220: | ||

* Create the DOM interface for StereoPannerNode and implement the createStereoPannerNode API for BaseAudioContext. | * Create the DOM interface for StereoPannerNode and implement the createStereoPannerNode API for BaseAudioContext. | ||

** We already created the DOM interface for ConstantSourceNode and implemented the createConstantSourceNode API for BaseAudioContext, so we will follow the same procedure when creating the DOM interface for StereoPannerNode and implementing the createStereoPannerNode API. | ** We already created the DOM interface for ConstantSourceNode and implemented the createConstantSourceNode API for BaseAudioContext, so we will follow the same procedure when creating the DOM interface for StereoPannerNode and implementing the createStereoPannerNode API. | ||

Revision as of 01:41, 13 April 2019

Introduction

Major browsers support the WebAudio standard, which can be used to create complex media playback applications from low-level building blocks. Servo is a new, experimental browser that supports some of these building blocks (called audio nodes). The goal of this project is to improve compatibility with web content that relies on the WebAudio API by implementing the missing pieces of incomplete node types (OscillatorNode) along with node types that are missing entirely (ConstantSourceNode and StereoPannerNode).

Although the GitHub description for our project is titled "Implement JS support for several missing WebAudio node types", we are not actually programming in JavaScript for this project. The project involves writing Rust code that implements the backend functionality of the WebAudio API, which end users can then call from their own JavaScript code. Therefore, the code snippets on this page are entirely in Rust. The only portions of our project written in JavaScript/HTML are the tests; however, the tests are not the main focus of this project (feature implementation is).

Tracking Issues

Useful Resources

- Setup for modifying the standalone media backend implementation

- Implementation of audio node processing algorithms

- Runnable examples of audio node processing algorithms

- Example pull request implementing a missing node type in Servo (another example)

- Example pull request implementing the processing backend for a missing node type

- Setup for making changes to Servo's code

- Documentation for introducing new WebIDL-based DOM interfaces to Servo

- Documentation for integrating a version of servo-media that contains your local changes into your local Servo build

Initial Steps (OSS Project)

- Email the mozilla.dev.servo mailing list (be sure to subscribe to it first!) introducing your group and asking any necessary questions.

- Create the DOM interface for ConstantSourceNode and implement the createConstantSource API for BaseAudioContext.

- Connect the DOM interface to the underlying backend node by processing the unimplemented message type.

- Update the expected test results for the relevant tests.

Subsequent Steps (Final Project)

- Implement an audio node message that is specific to OscillatorNode (use BiquadFilterNode as a model) which updates the node's oscillator type.

- Implement the type attribute setter for the OscillatorNode interface which uses the new oscillator node message.

- Implement the backend for StereoPannerNode in the media crate by creating a new node implementation using PannerNode as a guide. The processing algorithm is described in the specification. Create a runnable example based on the example for PannerNode.

- Create the DOM interface for StereoPannerNode and implement the createStereoPannerNode API for BaseAudioContext.
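The first two steps above describe a message that carries a new oscillator type to the backend node. The following is a minimal sketch of that idea, not Servo's actual code: OscillatorNodeMessage, SetOscillatorType, handle_message, and the simplified OscillatorNode struct are all hypothetical names chosen for illustration. The waveform names follow the WebAudio OscillatorType enum, with the "custom" type left out.

```rust
/// Waveform shapes named by the WebAudio OscillatorType enum
/// (the "custom" type is omitted from this sketch).
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum OscillatorType {
    Sine,
    Square,
    Sawtooth,
    Triangle,
}

/// Hypothetical node-specific message; the real message type in the
/// media crate may be named and shaped differently.
pub enum OscillatorNodeMessage {
    SetOscillatorType(OscillatorType),
}

/// Hypothetical, heavily simplified backend node state.
pub struct OscillatorNode {
    oscillator_type: OscillatorType,
}

impl OscillatorNode {
    /// Starts with a sine wave, the default in the WebAudio specification.
    pub fn new() -> Self {
        Self {
            oscillator_type: OscillatorType::Sine,
        }
    }

    /// Applies a message sent from the DOM side to the backend node.
    pub fn handle_message(&mut self, message: OscillatorNodeMessage) {
        match message {
            OscillatorNodeMessage::SetOscillatorType(new_type) => {
                self.oscillator_type = new_type;
            }
        }
    }

    /// Returns the waveform currently in use.
    pub fn oscillator_type(&self) -> OscillatorType {
        self.oscillator_type
    }
}
```

In this sketch, the type attribute setter on the DOM side would build a SetOscillatorType message and send it to the backend, which then updates its state in handle_message.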

Design Choices

Our project did not involve any design patterns since we were just implementing methods.

However, we had several options for how to create and implement the ConstantSourceNode. Initially, we tried to model it on the OscillatorNode implementation, but this produced a build error.

let node_options = options.parent.unwrap_or(2, ChannelCountMode::Max, ChannelInterpretation::Speakers);

We eventually learned that the ConstantSourceNode should not have a parent field because it does not inherit from anything.

let node_options = options.unwrap_or(2, ChannelCountMode::Max, ChannelInterpretation::Speakers);

We also had to adjust other parts that we had carried over from the OscillatorNode implementation. For example, the following import produced errors.

use servo_media::audio::constant_source_node::ConstantSourceNodeOptions;

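We then realized that ConstantSourceNodeOptions needed to be imported under an alias in order to work correctly. The as keyword in a use declaration renames the type within the current module; it does not cast it to a different type.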

use servo_media::audio::constant_source_node::ConstantSourceNodeOptions as ServoMediaConstantSourceOptions;
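To illustrate what the aliased import does, here is a small self-contained sketch. The modules dom_bindings and media_backend are hypothetical stand-ins for Servo's generated bindings and the servo-media crate, and the to_backend helper is invented for this example. The point is only that the alias changes the local name, while the actual conversion lives in a separate From implementation.

```rust
// Hypothetical stand-ins for the two crates; these are not Servo's
// actual definitions.
mod dom_bindings {
    /// Options as they arrive from the WebIDL-generated DOM bindings.
    pub struct ConstantSourceOptions {
        pub offset: f32,
    }
}

mod media_backend {
    /// Options as the audio backend expects them.
    #[derive(Debug, PartialEq)]
    pub struct ConstantSourceNodeOptions {
        pub offset: f32,
    }
}

use dom_bindings::ConstantSourceOptions;
// `as` gives the backend type a distinct local name; no value is converted.
use media_backend::ConstantSourceNodeOptions as ServoMediaConstantSourceOptions;

// The conversion between the two option types is a separate `From` impl.
impl<'a> From<&'a ConstantSourceOptions> for ServoMediaConstantSourceOptions {
    fn from(options: &'a ConstantSourceOptions) -> Self {
        Self {
            offset: options.offset,
        }
    }
}

/// Hypothetical helper: converts DOM options into backend options.
fn to_backend(options: &ConstantSourceOptions) -> ServoMediaConstantSourceOptions {
    options.into()
}
```

With the alias in place, the two similarly named option types are easy to tell apart at each use site.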

Completed Work

We created the DOM interface for ConstantSourceNode and implemented the createConstantSource API for BaseAudioContext. The connection between the DOM interface and the underlying backend node had already been implemented by other contributors before we started the project.

DOM implementation:

use crate::dom::audioparam::AudioParam;
use crate::dom::audioscheduledsourcenode::AudioScheduledSourceNode;
use crate::dom::baseaudiocontext::BaseAudioContext;
use crate::dom::bindings::codegen::Bindings::AudioNodeBinding::{
    ChannelCountMode, ChannelInterpretation,
};
use crate::dom::bindings::codegen::Bindings::AudioParamBinding::AutomationRate;
use crate::dom::bindings::codegen::Bindings::ConstantSourceNodeBinding::ConstantSourceNodeMethods;
use crate::dom::bindings::codegen::Bindings::ConstantSourceNodeBinding::{
    self, ConstantSourceOptions,
};
use crate::dom::bindings::error::Fallible;
use crate::dom::bindings::reflector::reflect_dom_object;
use crate::dom::bindings::root::{Dom, DomRoot};
use crate::dom::window::Window;
use dom_struct::dom_struct;
use servo_media::audio::node::AudioNodeInit;
use servo_media::audio::constant_source_node::ConstantSourceNodeOptions as ServoMediaConstantSourceOptions;
use servo_media::audio::param::ParamType;
use std::f32;

#[dom_struct]
pub struct ConstantSourceNode {
    source_node: AudioScheduledSourceNode,
    offset: Dom<AudioParam>,
}

impl ConstantSourceNode {
    #[allow(unrooted_must_root)]
    pub fn new_inherited(
        window: &Window,
        context: &BaseAudioContext,
        options: &ConstantSourceOptions,
    ) -> Fallible<ConstantSourceNode> {
        let node_options =
            options
                .parent
                .unwrap_or(2, ChannelCountMode::Max, ChannelInterpretation::Speakers);
        let source_node = AudioScheduledSourceNode::new_inherited(
            AudioNodeInit::ConstantSourceNode(options.into()),
            context,
            node_options,
            0, /* inputs */
            1, /* outputs */
        )?;
        let node_id = source_node.node().node_id();
        let offset = AudioParam::new(
            window,
            context,
            node_id,
            ParamType::Offset,
            AutomationRate::A_rate,
            1.,
            f32::MIN,
            f32::MAX,
        );
        Ok(ConstantSourceNode {
            source_node,
            offset: Dom::from_ref(&offset),
        })
    }

    #[allow(unrooted_must_root)]
    pub fn new(
        window: &Window,
        context: &BaseAudioContext,
        options: &ConstantSourceOptions,
    ) -> Fallible<DomRoot<ConstantSourceNode>> {
        let node = ConstantSourceNode::new_inherited(window, context, options)?;
        Ok(reflect_dom_object(
            Box::new(node),
            window,
            ConstantSourceNodeBinding::Wrap,
        ))
    }

    pub fn Constructor(
        window: &Window,
        context: &BaseAudioContext,
        options: &ConstantSourceOptions,
    ) -> Fallible<DomRoot<ConstantSourceNode>> {
        ConstantSourceNode::new(window, context, options)
    }
}

impl ConstantSourceNodeMethods for ConstantSourceNode {
    fn Offset(&self) -> DomRoot<AudioParam> {
        DomRoot::from_ref(&self.offset)
    }
}

impl<'a> From<&'a ConstantSourceOptions> for ServoMediaConstantSourceOptions {
    fn from(options: &'a ConstantSourceOptions) -> Self {
        Self {
            offset: *options.offset,
        }
    }
}

ConstantSourceNode WebIDL:

dictionary ConstantSourceOptions: AudioNodeOptions {
    float offset = 1;
};

[Exposed=Window, Constructor(BaseAudioContext context, optional ConstantSourceOptions options)]
interface ConstantSourceNode : AudioScheduledSourceNode {
    readonly attribute AudioParam offset;
};

createConstantSource API for BaseAudioContext:

use crate::dom::constantsourcenode::ConstantSourceNode;

fn CreateConstantSource(&self) -> Fallible<DomRoot<ConstantSourceNode>> {
    ConstantSourceNode::new(
        &self.global().as_window(),
        &self,
        &ConstantSourceOptions::empty(),
    )
}

Connection between the DOM interface and the underlying backend node (implemented by other contributors):

AudioNodeInit::ConstantSourceNode(options) => {
    Box::new(ConstantSourceNode::new(options, ch))
}
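The match arm above is a fragment of a larger dispatch. As a rough, self-contained sketch of the pattern (and not the servo-media implementation), the following uses simplified, hypothetical versions of AudioNodeInit, ConstantSourceNode, and an AudioNodeEngine trait. The create_node function and the process signature are assumptions made for this example, and the channel information argument (ch) from the real code is omitted.

```rust
/// Hypothetical, simplified backend options.
pub struct ConstantSourceNodeOptions {
    pub offset: f32,
}

/// Hypothetical trait shared by all backend nodes in this sketch.
pub trait AudioNodeEngine {
    /// Produces `frames` samples of output.
    fn process(&mut self, frames: usize) -> Vec<f32>;
}

/// Simplified backend node that emits a constant value.
pub struct ConstantSourceNode {
    offset: f32,
}

impl ConstantSourceNode {
    pub fn new(options: ConstantSourceNodeOptions) -> Self {
        Self {
            offset: options.offset,
        }
    }
}

impl AudioNodeEngine for ConstantSourceNode {
    fn process(&mut self, frames: usize) -> Vec<f32> {
        // Every output sample equals the configured offset.
        vec![self.offset; frames]
    }
}

/// Initialization request sent from the DOM side (one variant shown).
pub enum AudioNodeInit {
    ConstantSourceNode(ConstantSourceNodeOptions),
}

/// Maps an initialization request to a boxed backend node.
pub fn create_node(init: AudioNodeInit) -> Box<dyn AudioNodeEngine> {
    match init {
        AudioNodeInit::ConstantSourceNode(options) => {
            Box::new(ConstantSourceNode::new(options))
        }
    }
}
```

Returning a boxed trait object lets the audio graph hold nodes of different concrete types behind one interface, which is why each new node type needs its own arm in the match.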

Planned Work (Design Document)

As we continue our project, it will likely not involve any design patterns since we will just be implementing methods. Here is what we must do and our plan for how to do it:

- Implement an audio node message that is specific to OscillatorNode (use BiquadFilterNode as a model) which updates the node's oscillator type.

  - The audio node message specification is in servo/media/audio/src/node.rs. We will use this specification for guidance.

- Implement the type attribute setter for the OscillatorNode interface which uses the new oscillator node message.

  - We will accomplish this by modifying servo/components/script/dom/webidls/OscillatorNode.webidl.

- Implement the backend for StereoPannerNode in the media crate by creating a new node implementation using PannerNode as a guide. The processing algorithm is described in the specification. Create a runnable example based on the example for PannerNode.

  - The goal for the StereoPannerNode implementation is that the user should be able to specify a pan value between -1 (full left pan) and 1 (full right pan) and the StereoPannerNode will adjust the gain on each of the two speakers accordingly.

  - We will follow the example in servo/media/examples/panner.rs to create our runnable example.

- Create the DOM interface for StereoPannerNode and implement the createStereoPannerNode API for BaseAudioContext.

  - We already created the DOM interface for ConstantSourceNode and implemented the createConstantSource API for BaseAudioContext, so we will follow the same procedure when creating the DOM interface for StereoPannerNode and implementing the createStereoPannerNode API.
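The pan-to-gain behavior planned for StereoPannerNode can be sketched as follows. This is a minimal per-sample illustration of the equal-power panning formulas from the WebAudio specification, not the planned servo-media node. The function names pan_mono and pan_stereo are hypothetical, and a real node would process whole blocks of samples and read pan from an AudioParam.

```rust
use std::f32::consts::FRAC_PI_2;

/// Pans one mono sample; returns (left, right).
/// `pan` is clamped to [-1, 1], where -1 is full left and 1 is full right.
pub fn pan_mono(input: f32, pan: f32) -> (f32, f32) {
    let pan = pan.clamp(-1.0, 1.0);
    // Map [-1, 1] onto [0, 1] before applying the equal-power curve.
    let x = (pan + 1.0) / 2.0;
    let gain_l = (x * FRAC_PI_2).cos();
    let gain_r = (x * FRAC_PI_2).sin();
    (input * gain_l, input * gain_r)
}

/// Pans one stereo sample pair; returns (left, right).
pub fn pan_stereo(in_l: f32, in_r: f32, pan: f32) -> (f32, f32) {
    let pan = pan.clamp(-1.0, 1.0);
    // For stereo input, each half of the pan range maps onto [0, 1].
    let x = if pan <= 0.0 { pan + 1.0 } else { pan };
    let gain_l = (x * FRAC_PI_2).cos();
    let gain_r = (x * FRAC_PI_2).sin();
    if pan <= 0.0 {
        // Panning left: the right channel is gradually moved into the left.
        (in_l + in_r * gain_l, in_r * gain_r)
    } else {
        // Panning right: the left channel is gradually moved into the right.
        (in_l * gain_l, in_r + in_l * gain_r)
    }
}
```

With a pan of 0, a stereo signal passes through essentially unchanged, while a mono signal is split equally between the two speakers at a gain of about 0.707 each.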

Testing

Testing from Command Line

After following the setup instructions in the Servo readme, cloning the repository, and changing directories into it, you can build the project with the following command from the terminal (the readme includes the different build commands to use depending on your OS). Note that it may take a long time to build the project.

./mach build --release

After building, you can run the relevant tests with the following command.

./mach test-wpt tests/wpt/web-platform-tests/webaudio/the-audio-api/the-constantsourcenode-interface/

You will notice that all tests pass as expected.

Testing from UI

Our project cannot be tested from a UI because it only adds backend functionality to the Servo project.