E1929 Visualizations for Instructors

Revision as of 11:31, 8 April 2019

Introduction

Expertiza is an online assignment and grading platform for courses associated with instructors. Each instructor creates courses and the assignments associated with those courses. For each assignment, students peer review other students' submissions via questionnaires, or rubrics. After each round of reviews, students can submit changes to their assignment based on the rubric feedback. Each submission and its rubric are considered a round. Hence, an assignment can have multiple rounds, each associated with a rubric.

Instructors use the association between an assignment and its rubrics to understand which subjects need more focus. For example, a low average on a particular rubric question, or criterion, can indicate that the class has trouble with a particular part of the assignment. Currently, the information about assignment grades is on one page, while the information on rubrics is on a second page. This project intends to make the visualization of assignment grades and rubric scores easier by showing rubric statistics on the assignment grade page.

Proposed Changes

To help instructors understand the relationship between assignment scores and rubric scores, this project proposes two types of visualizations. The first type allows instructors to examine the rubric statistics for a single assignment. The second type allows instructors to compare the rubric statistics between two different assignments when the criteria for each assignment are the same.

Existing Views

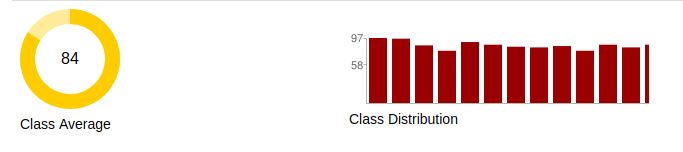

In both cases, the project will add rubric statistics to the assignment grade view. The current assignment grade view consists of a header containing grade statistics followed by a table of the individual grades. The left side of the header shows the average assignment grade as a circle, while the right side shows the assignment grade distribution. Figure 1 shows the current header in the assignment grade view page.

The changes proposed by this project integrate rubric statistics into the above assignment grade view. This integration allows two types of visualizations. The first visualization allows the instructor to display rubric statistics within a single assignment. The second visualization allows the instructor to compare rubric statistics between different assignments in the same course.

Proposed Assignment Grade Visualization

This project proposes the integration of rubric statistic visualization for both single assignments and multiple assignments into the assignment grade view. The integration occurs with a single change to the header of the assignment grade view, and retains the list of student grades. The following HTML mock-up shows the proposed changes to the header. The list of student grades is not changed and therefore not shown.

https://jwarren3.github.io/expertiza/tabs.html

The upper part of the rubric statistic visualization displays a set of tabs that allow the instructor to analyze the rubric within a single assignment or to compare the rubric statistics between multiple assignments. The middle part displays the statistic for each selected criterion as a bar graph. The height of each bar is shown as a percentage of the maximum value for that criterion. The bottom part displays a set of options that allow the instructor to filter the statistics shown in the bar graph. The left side (assignment average) and right side (assignment distribution) of the assignment grade view header are not changed.

In the mock-up of the rubric statistic visualization within a single assignment, there are two rounds of rubrics for the students to fill out. The rubric in round 1 consists of five criteria, while the rubric in round 2 consists of four criteria. The round of interest is selected using the drop-down menu on the left side. The criteria shown in the bar graph are selected using radio buttons next to each criterion. Finally, the type of statistic shown is selected using a drop-down menu on the right side of the options. Currently, the statistic is limited to either mean or median. Hovering the mouse over a bar in the bar graph shows the numerical value of the chosen statistic.
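The statistic and bar-height calculation described above can be sketched in plain Ruby. This is a minimal illustration under stated assumptions, not the project's implementation: the method names `criterion_statistic` and `bar_for`, the hash layout, and the sample scores are all hypothetical, and skipping unanswered (nil) scores is an assumption.

```ruby
# Hypothetical helper: compute the chosen statistic (:mean or :median)
# for one criterion's scores. Nil scores are assumed to mean "unanswered"
# and are ignored.
def criterion_statistic(scores, statistic = :mean)
  values = scores.compact.sort
  return nil if values.empty?

  case statistic
  when :mean
    values.sum.to_f / values.size
  when :median
    mid = values.size / 2
    values.size.odd? ? values[mid].to_f : (values[mid - 1] + values[mid]) / 2.0
  else
    raise ArgumentError, "unsupported statistic: #{statistic}"
  end
end

# Hypothetical helper: describe one bar. The height is the statistic as a
# percentage of the criterion's maximum score; the raw value is what a hover
# tooltip would display.
def bar_for(label, scores, max_score, statistic = :mean)
  value = criterion_statistic(scores, statistic)
  return nil if value.nil?

  {
    label: label,
    value: value.round(2),
    height_percent: (100.0 * value / max_score).round(1)
  }
end

# Made-up sample data, for illustration only.
bar = bar_for('Clarity', [3, 4, nil, 5, 4], 5, :median)
puts "#{bar[:label]}: #{bar[:value]} (#{bar[:height_percent]}% of maximum)"
```

Keeping the raw value next to the percentage means the same structure could feed both the bar height and the hover text.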

Visualizing the rubric statistics within a single assignment starts by selecting the left tab titled "Analyze Assignment". The default view displays the mean of all criteria from the first round. The round, the type of statistic, and the criteria shown are controlled from the drop-down menus and radio buttons below the bar graph.

Visualizing the rubric statistics between assignments starts by selecting the right tab titled "Compare Assignments". The default view compares all criteria of round one against another assignment in the same course. The default comparison assignment is the chronologically earliest assignment. The current assignment is shown in red, while the rubric being compared is shown in blue.
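The comparison described above could be prepared along the following lines. This is a sketch only: the method names, the hash-of-scores layout, and the rule that two rounds are comparable when their criteria match exactly and in the same order are assumptions, since the text only requires that the criteria be the same.

```ruby
# Hypothetical helper: mean of the non-nil scores, rounded for display.
def mean(scores)
  values = scores.compact
  values.empty? ? nil : (values.sum.to_f / values.size).round(2)
end

# Assumed compatibility rule: both rounds list the same criteria in the
# same order.
def comparable?(current, other)
  current.keys == other.keys
end

# Hypothetical helper: pair each criterion of the current assignment
# (drawn in red) with the same criterion of the compared assignment
# (drawn in blue).
def comparison_series(current, other)
  raise ArgumentError, 'rubric criteria differ' unless comparable?(current, other)

  current.keys.map do |criterion|
    {
      criterion: criterion,
      current: { color: 'red', mean: mean(current[criterion]) },
      compared: { color: 'blue', mean: mean(other[criterion]) }
    }
  end
end

# Made-up sample data, for illustration only.
current_round = { 'Clarity' => [4, 5, 3], 'Testing' => [2, 3, 4] }
earlier_round = { 'Clarity' => [3, 3, 4], 'Testing' => [4, 4, 5] }

comparison_series(current_round, earlier_round).each do |pair|
  puts "#{pair[:criterion]}: current #{pair[:current][:mean]}, compared #{pair[:compared][:mean]}"
end
```

Raising on mismatched criteria is one possible design. The interface could instead hide incompatible assignments from the comparison menu.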

Project Design

This project expects to change controller files, view files, JavaScript files, and RSpec files. This project does not expect to change any model files, though models will be changed if model data needs to be reformatted. This project does not expect to change the database.

Design Flow

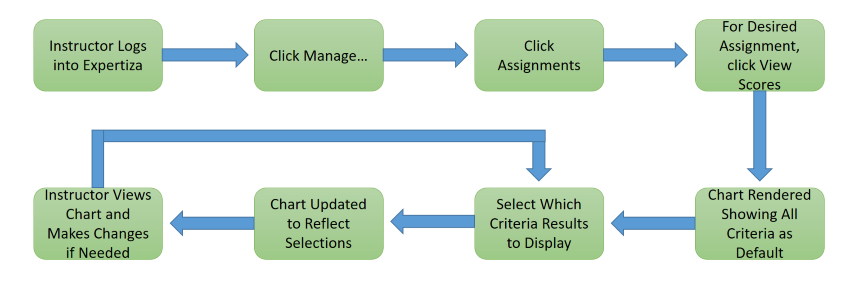

Instructor wants to view student scores on individual rubric criteria:

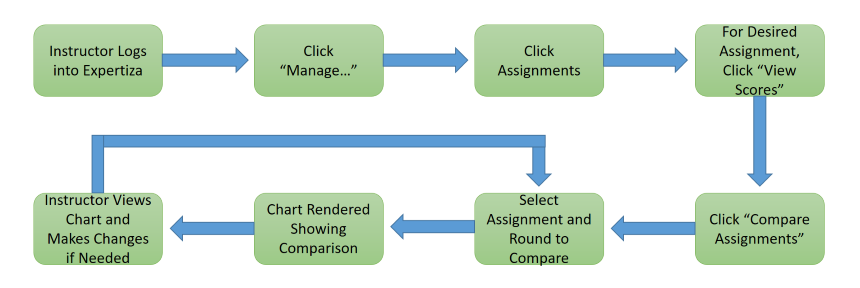

Instructor wants to compare student scores on individual rubric criteria between two compatible rounds of two different assignments:

Tools and Design Choice

This project proposes using a third-party library for displaying the rubric statistics. The criteria for this third-party library will include TBS.

Test Plan

The team plans to perform automated tests using the RSpec and Capybara frameworks. In addition, we will perform manual tests of the user interface (UI) using the app.

RSpec Framework Tests

The RSpec testing framework will be used for automated verification of the models behind this feature of the Expertiza Rails web application. Since this feature deals with visualizations (charts) that are closely tied to Active Record models, we will seed the test database with known data via RSpec. These changes will be rolled back automatically once testing is complete.

Capybara Tests

Capybara is the other automated testing framework that will be used. Capybara runs browser-style tests that simulate a user clicking through the site. We will use it to verify that the chart object is present on the page and contains the seeded data that we loaded.

UI Tests

In addition to the automated tests above, we will also perform manual testing of the newly added features, checking the following:

- Chart is displaying correctly

- Bars are showing up where expected

- Bar annotations are showing the expected value

- Criteria labels are for the correct bar and displaying correct values

- Hover text is displaying the correct values

- Null values are not present on the chart

- Correct colors are used for the multi-round view

- Show Labels checkbox works as expected

- Round Criteria is displaying correctly

- Round dropdown menu shows all rounds for the assignment

- Selecting a round changes the criteria checkboxes

- All checkboxes are displayed with appropriate text

- Checkboxes correctly remove or add criterion bars to the chart
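Two of the checks above (null values are absent from the chart, and checkboxes add or remove criterion bars) concern the data behind the chart, so they could also be examined before rendering. The following Ruby sketch illustrates the idea. The `visible_bars` helper and the bar hash layout are hypothetical and are not taken from the Expertiza code base.

```ruby
# Hypothetical helper: keep only the bars whose criterion is checked and
# whose value is present, mirroring the checkbox and null-value checks.
def visible_bars(bars, selected_labels)
  bars.select do |bar|
    selected_labels.include?(bar[:label]) && !bar[:value].nil?
  end
end

# Made-up sample data, for illustration only.
bars = [
  { label: 'Clarity', value: 4.2 },
  { label: 'Testing', value: nil },
  { label: 'Design',  value: 3.5 }
]

shown = visible_bars(bars, ['Clarity', 'Testing'])
puts shown.map { |bar| bar[:label] }.inspect # prints ["Clarity"]
```

Here 'Design' is dropped because it is unchecked, and 'Testing' is dropped because it has no value.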

Files Involved

Test Results

The team ran both automated tests, using the RSpec framework, and manual tests of the user interface (UI), using the app. The automated tests helped to ensure that the basic functionality of the app still worked, while the UI tests ensured that the visualizations were correct.