CSC/ECE 517 Fall 2015 E1580 Text metrics

Revision as of 21:35, 4 December 2015

Introduction

Expertiza is an open-source, peer-review-based web application that supports incremental learning. Students can submit learning objects such as articles, wiki pages, and repository links, and then improve them with the help of peer reviews. The project has been developed using the Ruby on Rails framework and is supported by the National Science Foundation.

Project

Purpose

The current version of the Expertiza project has an automated meta-review system in which, whenever a review is submitted, the reviewer receives an e-mail containing various metrics of the review, such as relevance, plagiarism, and number of words. The purpose of this project is to give students metrics on the content of their reviews when automated meta-reviews are disabled. This also includes adding new, relevant metrics that help reviewers and instructors gain insight into the reviews.

Scope

The scope of the project is limited to creating a system where reviewers and instructors can view metrics for submitted reviews. The user or instructor needs to visit the link manually to view the metrics. The instructor can view the metrics for every available assignment, whereas a user can view only the metrics of assignments related to him or her. The scope does not include any automated viewing of metric reports. Since the project mainly reports on existing data, we will not modify the results saved by the actual peer-review mechanism. The scope also excludes any change to the actual peer-review process, i.e. submitting a review of an assignment or adding an assignment to a user profile.

Task Description

The project requires completion of the following tasks

- Create a database table to record all the metrics.

- Create code to calculate the values of the metrics, and ensure that it runs fast enough to return results within 5 seconds, since the current automated text metrics functionality is very slow and degrades the user experience.

- Create partials for both students and instructors that show, for each assignment:

  - Total number of words

  - Average number of words across all the reviews for the assignment in a particular round

  - Whether each reviewer's review contains suggestions

  - The percentage of peer reviews that offer any suggestions

  - Whether the reviews point out problems or errors

  - The percentage of peer reviews that point out problems in this assignment in this round

  - Whether any offensive language is used

  - The percentage of peer reviews containing offensive language

  - Number of distinct words in a particular reviewer’s review

  - Number of questions answered with complete sentences

- Make the code work for assignments both with and without the "vary rubric by rounds" feature.

- Create tests that increase the test coverage.
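
A minimal sketch of how the per-assignment aggregates listed above might be computed, assuming each review's metrics are already available as a plain Ruby Hash. The keys (:round, :total_words, :suggestions, :problems, :offensive), the ReviewSummary struct, and summarize_by_round are hypothetical names, not existing Expertiza code. Grouping by :round, with :round set to nil for every review when the assignment does not vary its rubric by round, is one way to handle both cases with a single code path.

```ruby
# Hypothetical sketch: aggregates shown in the metrics partial, per round.
# Assumed input: an Array of Hashes with :round, :total_words,
# :suggestions, :problems and :offensive (counts per review).
ReviewSummary = Struct.new(:round, :average_words, :pct_suggestions,
                           :pct_problems, :pct_offensive)

# Percentage rounded to one decimal place; 0.0 when there are no reviews.
def percentage(count, total)
  total.zero? ? 0.0 : (100.0 * count / total).round(1)
end

def summarize_by_round(reviews)
  # Without "vary rubric by rounds", every review has round nil,
  # so all reviews fall into a single group.
  reviews.group_by { |r| r[:round] }.map do |round, group|
    n = group.size
    ReviewSummary.new(
      round,
      (group.sum { |r| r[:total_words] }.to_f / n).round(1),
      percentage(group.count { |r| r[:suggestions] > 0 }, n),
      percentage(group.count { |r| r[:problems] > 0 }, n),
      percentage(group.count { |r| r[:offensive] > 0 }, n)
    )
  end
end

# Example with two rounds of (made-up) review metrics.
example = [
  { round: 1, total_words: 120, suggestions: 2, problems: 1, offensive: 0 },
  { round: 1, total_words: 80,  suggestions: 0, problems: 0, offensive: 0 },
  { round: 2, total_words: 50,  suggestions: 1, problems: 0, offensive: 1 }
]
summarize_by_round(example).each { |s| p s.to_h }
```

Computing the percentages from counts of reviews with at least one hit, rather than from raw totals, matches the wording of the task list ("percentage of peer reviews that offer any suggestions").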

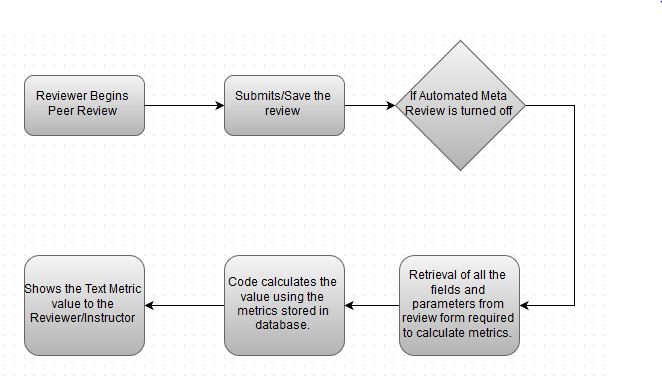

Workflow

Design

Mockups

Reviewer Panel

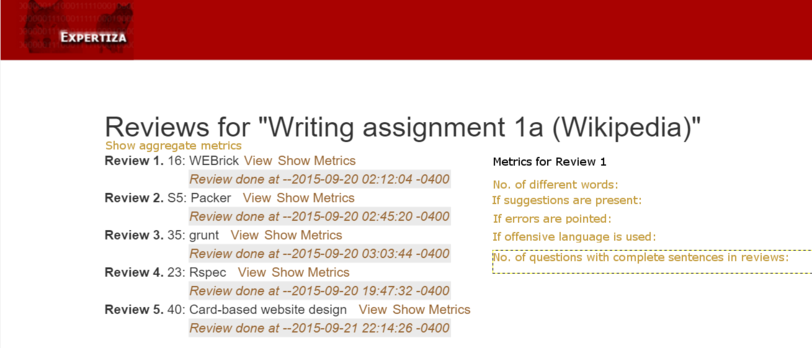

The diagrams below show the proposed changes from the perspective of a reviewer. The reviewer can click the Show aggregate metrics link to view the aggregate metrics.

The reviewer can also click the Show metrics link for each individual review to view the specific metrics for that review.

Instructor Panel

The image represents the instructor's view of the Review report after our modifications. The instructor can click the Show aggregate metrics link to view the aggregate metrics for each team for a given assignment.

Design Patterns

Iterator Pattern<ref>https://en.wikipedia.org/wiki/Iterator_pattern</ref>: The iterator design pattern uses an iterator to traverse a container and access its elements. Our implementation needs to show the review metrics for each response, so we can use the iterator design pattern to iterate over the calculated metric values.
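
In Ruby, the iterator pattern is usually expressed by defining #each on a collection and including the standard Enumerable module, which then supplies map, select, count and similar methods. The sketch below is illustrative only: ReviewMetricsCollection and the response_id-to-Hash layout are hypothetical, not existing Expertiza code.

```ruby
# Hypothetical collection of calculated metrics, keyed by response_id.
# Defining #each and including Enumerable is Ruby's idiomatic iterator.
class ReviewMetricsCollection
  include Enumerable

  def initialize(metrics_by_response)
    @metrics_by_response = metrics_by_response # { response_id => Hash }
  end

  # Yields each (response_id, metrics) pair without exposing the Hash;
  # returns an Enumerator when called without a block.
  def each
    return enum_for(:each) unless block_given?
    @metrics_by_response.each { |id, metrics| yield id, metrics }
  end
end

collection = ReviewMetricsCollection.new({ 101 => { total_words: 120 },
                                           102 => { total_words: 80 } })
collection.each { |id, m| puts "Response #{id}: #{m[:total_words]} words" }
```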

Flyweight Pattern<ref>https://en.wikipedia.org/wiki/Flyweight_pattern</ref>: The flyweight design pattern reduces memory footprint by storing and reusing existing similar objects, creating a new object only when there is no match. In our case, we can use the same metrics partials for both students and instructors.

Database Table Design

The image shows the schema for the new table which will be created to store the calculated values of the metrics. Its attributes are explained below:

- response_id: This attribute is generated automatically when a reviewer submits a review. It can be used to link the metrics to a particular reviewer-response map.

- word_count: This attribute contains the number of distinct words in a particular review.

- suggestions_count: This column holds the number of suggestions given in each review.

- error_count: This column holds the number of comments that point out problems or errors in the reviewed work.

- offensive_count: This attribute contains the number of comments containing offensive words.

- comp_reviews: This contains the number of comments that are written in complete sentences.

Use Cases

- View Reviews Text Metrics as Reviewer: A reviewer can see the text metrics of individual reviews, as well as aggregate metrics for all the reviews done for an assignment/project.

- View Reviews Text Metrics as Reviewee: A reviewee can see the text metrics of individual reviews received from reviewers, as well as aggregate metrics for all the reviews received for an assignment/project.

- View Reviews Text Metrics as Instructor: An instructor can see the text metrics of reviews received by any team for a particular project/assignment. The instructor can also see the text metrics of the reviews done by any reviewer.
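
The visibility rules in these use cases (and in the Scope section) can be sketched as a simple filter. This assumes each metrics row knows the reviewer's user id and the reviewed team's id; MetricRow, visible_metrics and these field names are hypothetical and are not columns of the review_metrics table.

```ruby
# Hypothetical row: which review it is, who wrote it, which team received it.
MetricRow = Struct.new(:response_id, :reviewer_id, :reviewee_team_id)

# Instructors see every row. A student sees rows for reviews he or she
# wrote (reviewer use case) or that his or her team received (reviewee).
def visible_metrics(rows, user_id: nil, team_id: nil, instructor: false)
  return rows if instructor
  rows.select { |r| r.reviewer_id == user_id || r.reviewee_team_id == team_id }
end

rows = [MetricRow.new(1, 10, 200), MetricRow.new(2, 11, 201), MetricRow.new(3, 12, 200)]
p visible_metrics(rows, user_id: 10, team_id: 201).map(&:response_id)
```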

Test Plan

- For use case 1, test whether the text metrics table has entries populated for each type of metric (number of words, number of offensive words, etc.) once the reviewer submits a review.

- For use case 2, test whether the reviewee can see the text metrics of individual reviews received from all reviewers.

- For use case 3, test whether the instructor can see the text metrics of reviews received by each team for a project/assignment. Also, test whether the instructor can see the text metrics of reviews done by any reviewer.

Details of Requirements

Hardware requirements

- Computing Power: Same as the current Expertiza system.

- Memory: Same as the current Expertiza system.

- Disk Storage: Same as the current Expertiza system.

- Peripherals: Same as the current Expertiza system.

- Network: Same as the current Expertiza system.

Software requirements

- Operating system environment: Windows/UNIX/OS X based OS

- Networking environment: Same as the current Expertiza system.

- Tools: Git, Interactive Ruby (irb)

Implementation

Task Description

The project requires completion of the following tasks:

1. Create a database table to record all the metrics

A table called review_metrics has been added to the project. It has the following columns to record data for each response, i.e. each submitted review.

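    # Migration creating the review_metrics table: one row per submitted response
    class CreateReviewMetrics < ActiveRecord::Migration
      def change
        create_table :review_metrics do |t|
          t.integer :response_id        # the review (response) these metrics describe
          t.integer :total_word_count   # total number of words in the review
          t.integer :diff_word_count    # number of distinct words in the review
          t.integer :suggestion_count   # comments that offer suggestions
          t.integer :error_count        # comments that point out problems or errors
          t.integer :offensive_count    # comments containing offensive language
          t.integer :complete_count     # answers written in complete sentences
          t.timestamps null: false
        end
        # Ensure response_id refers to an existing row in the responses table
        add_foreign_key :review_metrics, :responses
      end
    end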
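
The migration only stores the counts; the detection rules are not specified on this page. The following plain-Ruby sketch shows one way a row's values could be derived from the comment text of each answer in a response. The keyword lists, the complete-sentence heuristic and review_metrics_for are hypothetical placeholders, and simple keyword matching is a deliberately crude stand-in for real suggestion, problem or offensive-language detection.

```ruby
# Hypothetical keyword lists (placeholders, not Expertiza's actual rules).
SUGGESTION_WORDS = %w[should could suggest consider recommend].freeze
PROBLEM_WORDS    = %w[error bug problem incorrect missing fails].freeze
OFFENSIVE_WORDS  = %w[stupid idiotic garbage].freeze

# Lowercase word tokens made of letters, digits and apostrophes.
def words(text)
  text.downcase.scan(/[a-z0-9']+/)
end

# Assumed heuristic: starts with a capital letter, ends with . ! or ?,
# and has at least three words.
def complete_sentence?(text)
  t = text.strip
  t.match?(/\A[A-Z].*[.!?]\z/m) && words(t).size >= 3
end

# Returns a Hash keyed by the review_metrics column names, computed from
# the comment text of each answer in one response.
def review_metrics_for(comments)
  all_words = comments.flat_map { |c| words(c) }
  # Number of comments containing at least one word from the list.
  hits = ->(list) { comments.count { |c| (words(c) & list).any? } }
  {
    total_word_count: all_words.size,
    diff_word_count:  all_words.uniq.size,
    suggestion_count: hits.call(SUGGESTION_WORDS),
    error_count:      hits.call(PROBLEM_WORDS),
    offensive_count:  hits.call(OFFENSIVE_WORDS),
    complete_count:   comments.count { |c| complete_sentence?(c) }
  }
end

p review_metrics_for(["The code is well structured and easy to follow.",
                      "You should add tests; there is a bug in the login form.",
                      "missing docs"])
```

Because the Hash uses the migration's column names, in the Rails app it could be merged with a response_id and passed to a create call on the model backing this table, assuming a ReviewMetric model exists.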

Reference

<references/>