User:Mrohatg2: Difference between revisions

No edit summary |

|||

| (20 intermediate revisions by 2 users not shown) | |||

| Line 18: | Line 18: | ||

== Solution == | == Solution == | ||

One solution to the above mentioned problem is to have a common schema which can capture the essence of all the reviews in a peer-review system to its entirety. Moreover, this schema should not capture any information specific to a particular peer-review system. Once such a schema standard is in place the different peer-review systems can export the data in their database to this new standard and the data can be easily shared across different peer-review systems. | One solution to the above mentioned problem is to have a common schema which can capture the essence of all the reviews in a peer-review system to its entirety. Moreover, this schema should not capture any information specific to a particular peer-review system. Once such a schema standard is in place the different peer-review systems can export the data in their database to this new standard and the data can be easily shared across different peer-review systems. | ||

=== PRML === | |||

Peer Review Markup Language is such a standard schema which can capture information about different reviews to its entirety. | |||

== Scope of the Project == | |||

The scope of this project is to export the data from the Expertiza database to PRML format. | |||

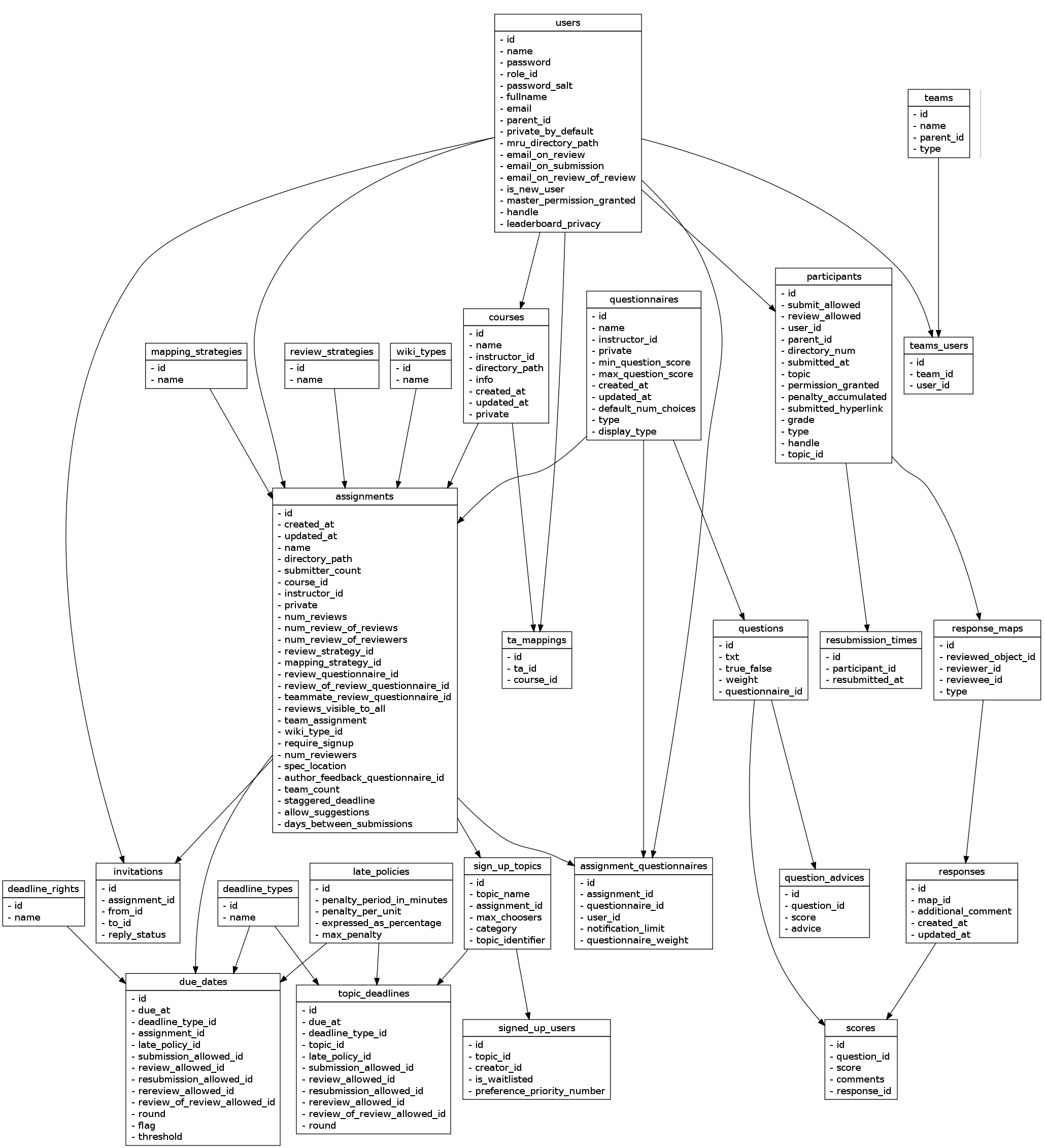

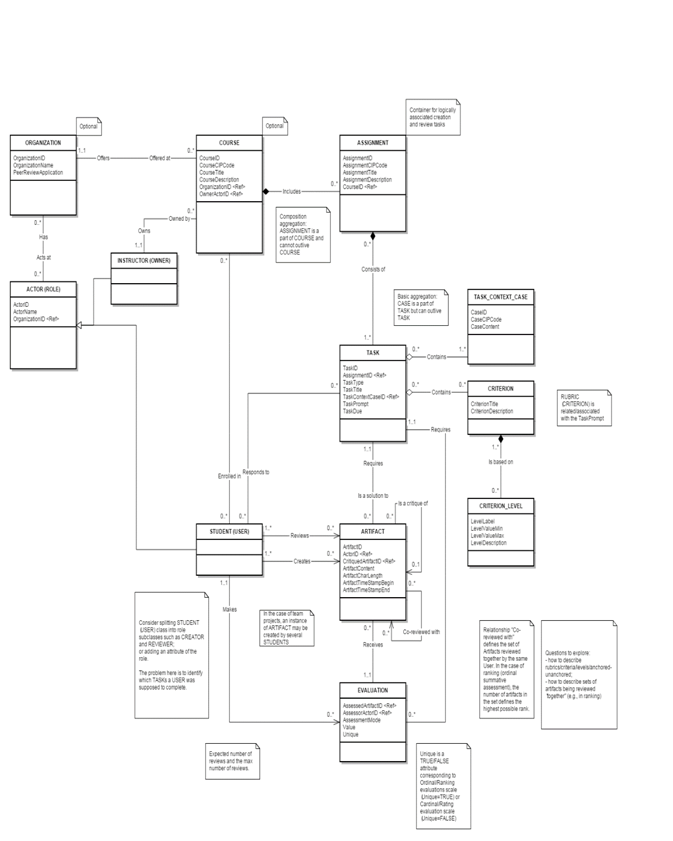

== Expertiza Design Document == | |||

[[File:Pg_development.png]] | |||

== Expertiza Database Diagram == | |||

[[File:PRML.png]] | |||

== Expertiza database schema to PRML database schema map == | |||

{| class="wikitable" | |||

|- | |||

! Table Name | |||

! Courses | |||

! Course | |||

|- | |||

!Attributes | |||

| id | |||

| CourseId | |||

|- | |||

| | |||

|null | |||

|CourseCIPCode | |||

|- | |||

| | |||

|name | |||

|CourseTitle | |||

|- | |||

| | |||

|info | |||

|CourseDescription | |||

|- | |||

| | |||

|null | |||

|CourseLevelID | |||

|- | |||

| | |||

|created_at | |||

|CourseCreated | |||

|- | |||

| | |||

|null | |||

|CourseStarted | |||

|- | |||

| | |||

|null | |||

|CourseEnded | |||

|- | |||

! Table Name | |||

! Assignments | |||

! Assignment | |||

|- | |||

!Attributes | |||

| id | |||

| AssignmentId | |||

|- | |||

| | |||

|null | |||

|AssignmentCIPCode | |||

|- | |||

| | |||

|name | |||

|AssignmentTitle | |||

|- | |||

| | |||

|spec_location | |||

|AssignmentDescription | |||

|- | |||

| | |||

|course_id | |||

|CourseID | |||

|- | |||

!TABLE NAME | |||

!due_dates, deadline_types | |||

!Task | |||

|- | |||

!ATTRIBUTES | |||

|due_dates.id | |||

|TaskID | |||

|- | |||

| | |||

|due_dates.deadline_type_id | |||

|TaskTypeID | |||

|- | |||

| | |||

|deadline_types.name | |||

|TaskTitle | |||

|- | |||

| | |||

|due_dates.description_url | |||

|TaskDescription | |||

|- | |||

| | |||

|NULL | |||

|TaskOpen | |||

|- | |||

| | |||

|due_dates.due_at | |||

|TaskDue | |||

|- | |||

!TABLE NAME | |||

!questions, questionnaires | |||

!criterion | |||

|- | |||

!ATTRIBUTES | |||

|questions.id | |||

|CriterionID | |||

|- | |||

| | |||

|questions.txt | |||

|CriterionTitle | |||

|- | |||

| | |||

|NULL | |||

|CriterionDescription | |||

| | |||

|- | |||

| | |||

|questions.type | |||

|Type | |||

|- | |||

| | |||

|questionnaires.max_question_score | |||

|MaxLabel | |||

|- | |||

| | |||

|questionnaires.min_question_score | |||

|MinLabel | |||

|- | |||

!TABLE NAME | |||

!question_advices | |||

!Level | |||

|- | |||

!ATTRIBUTES | |||

|id | |||

|LevelID | |||

|- | |||

| | |||

|score | |||

|LevelLabel | |||

|- | |||

| | |||

|advice | |||

|LevelDescription | |||

|- | |||

!TABLE NAME | |||

!questions | |||

!Rubric | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|TaskID | |||

|- | |||

| | |||

|questions.id | |||

|CriterionID | |||

|- | |||

!TABLE NAME | |||

!teams | |||

!Actor | |||

|- | |||

!ATTRIBUTES | |||

|id | |||

|ActorID | |||

|- | |||

| | |||

|type | |||

| | |||

|- | |||

| | |||

|parent_id | |||

| | |||

|- | |||

!TABLE NAME | |||

!participants | |||

!Actor | |||

|- | |||

| | |||

|id | |||

|ActorID | |||

|- | |||

| | |||

|type | |||

|- | |||

| | |||

|parent_id | |||

|- | |||

! | |||

! | |||

!ActorTask | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|ActorID | |||

|- | |||

| | |||

| | |||

|TaskID | |||

|- | |||

| | |||

!teams_users | |||

!Participant | |||

|- | |||

| | |||

|user_id | |||

|ParticipantID | |||

|- | |||

| | |||

|team_id | |||

| | |||

|- | |||

| | |||

| | |||

|AppID | |||

|- | |||

!TABLE NAME | |||

!participants | |||

!Participant | |||

|- | |||

!ATTRIBUTES | |||

|user_id | |||

|ParticipantID | |||

|- | |||

| | |||

|id | |||

| | |||

|- | |||

| | |||

| | |||

|AppID | |||

|- | |||

!TABLE NAME | |||

| | |||

!ActorParticipant | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|ActorID | |||

|- | |||

| | |||

| | |||

|ParticipantID | |||

|- | |||

| | |||

| | |||

|RoleID | |||

|- | |||

!TABLE NAME | |||

| | |||

!Enrollment | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|ParticipantID | |||

|- | |||

| | |||

| | |||

|AppID | |||

|- | |||

| | |||

| | |||

|CourseID | |||

|- | |||

!TABLE NAME | |||

!Teams | |||

!Artifact | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|ActorID | |||

|- | |||

| | |||

| | |||

|TaskID | |||

|- | |||

| | |||

|name | |||

|ArtifactContent | |||

|- | |||

| | |||

|NULL | |||

|ArtifactCharLength | |||

|- | |||

| | |||

|NULL | |||

|ArtifactOpen | |||

|- | |||

| | |||

|NULL | |||

|ArtifactEnd | |||

|- | |||

!TABLE NAME | |||

| | |||

!Item | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|ItemContent | |||

|- | |||

| | |||

| | |||

|ArtifactId | |||

|- | |||

!TABLE NAME | |||

| | |||

!Review | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|AssessorActorID | |||

|- | |||

| | |||

| | |||

|TaskID | |||

|- | |||

| | |||

| | |||

|AssessedArtifactID | |||

|- | |||

| | |||

| | |||

|CritiqueArtifactID | |||

|- | |||

!TABLE NAME | |||

| | |||

!ReviewCriterionLevel | |||

|- | |||

!ATTRIBUTES | |||

| | |||

|AssessorActorID | |||

|- | |||

| | |||

| | |||

|TaskID | |||

|- | |||

| | |||

| | |||

|AssessedArtifactID | |||

|- | |||

| | |||

| | |||

|CriterionID | |||

|- | |||

| | |||

| | |||

|CritiqueArtifactID | |||

|- | |||

| | |||

| | |||

|EvalMode | |||

|- | |||

| | |||

| | |||

|EvalLevelID | |||

|- | |||

| | |||

| | |||

|ValueOrdinal | |||

|- | |||

| | |||

| | |||

|ValueCardinal | |||

|- | |||

| | |||

| | |||

|Comment | |||

|} | |||

== Implementation == | |||

We have used an Extract, Transform and Load [https://en.wikipedia.org/wiki/Extract,_transform,_load ETL] tool, [http://community.pentaho.com/projects/data-integration/ Pentaho Kettle] to perform the data migration from Expertiza to PRML database. | |||

[[File:diagram.png]] | |||

The Pentaho Kettle tool extracts data from the expertise schema, performs transformation on it and load the data in the PRML format. | |||

The tables are mapped as shown in the schema map above. | |||

The operations performed by the ETL tool: | |||

'''Extraction''' | |||

The data is extracted from the expertise database and brought into the staging area by this operation of the ETL tool. It is the staging area in which all the transformation are done. | |||

'''Transformation''' | |||

Many operations are performed on the data in the staging area so that the expertise schema can be converted to the PRML schema. This is called transformation of the data. An example of transformation is while mapping of participants table in the expertiza database to actors table in the PRML database. Each value of the id field is incremented by 1000 while inserting in the participants table. | |||

'''Loading:''' | |||

The transformed data when moved from the staging area to the PRML database is called as loading. There are three types of loading implemented on the tables depending on the size of the table – initial load,partial loading and full loading. | |||

Partial Loading: Only the new or updated entries in the expertiza table are transformed and loaded in the corresponding PRML table. This is generally done for table with very large number of entries. Eg: Reviews | |||

Partial loading is implemented with the help of timestamps and checksums. | |||

Timestamp: One way to perform partial loading is to have a 'modified' time stamp in the source table. The destination table should mirror this 'modified' time stamp. Then while loading the destination table we extract only those records from the source table which has a 'modified' time stamp value greater than the latest 'modified' time stamp value in the destination table. The advantage is that we need not fetch all records from the source table. Only those records which needs to be updated in the destination table will be retrieved. (refer load_prml_ModifiedTimeStampTable transformation) | |||

Checksum: Another way to perform partial loading is using checksum. A checksum is computed for each record, on selected field values, of the source table and added to the destination table while initial loading. Loading which follow would again compute the checksum for source table records and if there is no matching checksum in the destination table an update or an insert would be performed. (refer load_prml_checksumtable transformation) | |||

Full Loading: All the entries from the expertiza table are transformed and loaded in the corresponding PRML table. This is generally done for tables with less number of entries. | |||

Eg: Courses | |||

'''Expertiza tables which have been implemented''' | |||

Assignments Table - Full Load | |||

Course Table - Full Load | |||

ModifiedTimeStamp Table - Partial Load | |||

Task Table | |||

Criteria Table | |||

Due_Dates Table | |||

Questionnaire Table | |||

Teams Table | |||

Jobs are being performed in a sequential order in the current project. If the tables do not depend on one another they can be performed in a parallel way to reduce time. | |||

Initially the entries in the table are buffered so that only around 8000 rows are processed at a time. | |||

== Testing == | |||

We have tested our conversion by using a set of sample expertiza data. We run the extract, transform and load methodology on it. Queries are run on the PRML database to check if it gives the same results as the expertiza database. | |||

Though the testing is not through, this is the only way the project can be tested. | |||

==Running the Project== | |||

'''Required Software:''' | |||

*Pentaho’s Data Integration (Kettle). | |||

*MySQL | |||

*JAVA | |||

'''Setup:''' | |||

*Download and Install MySQL and JAVA | |||

*Import to MySQL expertiza database dump file. | |||

*Import to MySQL PRML database dump file. | |||

*Download Pentaho's Data Integration(Kettle) Community Edition (its a copy deployment hence no installation is required). | |||

*Download MySql Connector jar file and copy to <pentaho_root_directory\data-integration\lib> | |||

*Start Spoon.bat if you are using a windows machine or Spoon.sh if you using Linux (pentaho_root_directory\data-integration) | |||

*Download the transformations and jobs attached | |||

*You can open a kettle transformation (.ktr file) using Spoon and run it (Make sure proper MySql DB connections are configured) | |||

*You may also open the kettle job (.kjb file) using spoon and run it (Make sure the path for transformations in the job are correct) | |||

Latest revision as of 20:12, 18 December 2015

Problem Statement

Educational peer-review systems like Expertiza contains a huge volume of data accumulated during the course of its use. Each peer-review system has a different database design. The relational model is so diverse that, it is often difficult to share the data across different peer-review systems.

In order to migrate a student’s data from one peer-review system to another, one will have to do the following:

- Understand the database design/schema of the source peer-review system

- Understand the database design/schema of the destination peer-review system

- Find the equivalent tables and relations in the destination peer-review system for the source peer-review system

- Retrieve the data from the source peer-review system

- Modify it to match the schema of the destination peer-review system

- Insert the data to the destination peer-review system database.

This is a very tedious job. Moreover, with such a diverse schema it is quite difficult to leverage the power of this large structured data and run any sort of analytics on it.

Solution

One solution to the above mentioned problem is to have a common schema which can capture the essence of all the reviews in a peer-review system to its entirety. Moreover, this schema should not capture any information specific to a particular peer-review system. Once such a schema standard is in place the different peer-review systems can export the data in their database to this new standard and the data can be easily shared across different peer-review systems.

PRML

Peer Review Markup Language is such a standard schema which can capture information about different reviews to its entirety.

Scope of the Project

The scope of this project is to export the data from the Expertiza database to PRML format.

Expertiza Design Document

Expertiza Database Diagram

Expertiza database schema to PRML database schema map

| Table Name | Courses | Course | |

|---|---|---|---|

| Attributes | id | CourseId | |

| null | CourseCIPCode | ||

| name | CourseTitle | ||

| info | CourseDescription | ||

| null | CourseLevelID | ||

| created_at | CourseCreated | ||

| null | CourseStarted | ||

| null | CourseEnded | ||

| Table Name | Assignments | Assignment | |

| Attributes | id | AssignmentId | |

| null | AssignmentCIPCode | ||

| name | AssignmentTitle | ||

| spec_location | AssignmentDescription | ||

| course_id | CourseID | ||

| TABLE NAME | due_dates, deadline_types | Task | |

| ATTRIBUTES | due_dates.id | TaskID | |

| due_dates.deadline_type_id | TaskTypeID | ||

| deadline_types.name | TaskTitle | ||

| due_dates.description_url | TaskDescription | ||

| NULL | TaskOpen | ||

| due_dates.due_at | TaskDue | ||

| TABLE NAME | questions, questionnaires | criterion | |

| ATTRIBUTES | questions.id | CriterionID | |

| questions.txt | CriterionTitle | ||

| NULL | CriterionDescription | ||

| questions.type | Type | ||

| questionnaires.max_question_score | MaxLabel | ||

| questionnaires.min_question_score | MinLabel | ||

| TABLE NAME | question_advices | Level | |

| ATTRIBUTES | id | LevelID | |

| score | LevelLabel | ||

| advice | LevelDescription | ||

| TABLE NAME | questions | Rubric | |

| ATTRIBUTES | TaskID | ||

| questions.id | CriterionID | ||

| TABLE NAME | teams | Actor | |

| ATTRIBUTES | id | ActorID | |

| type | |||

| parent_id | |||

| TABLE NAME | participants | Actor | |

| id | ActorID | ||

| type | |||

| parent_id | |||

| ActorTask | |||

| ATTRIBUTES | ActorID | ||

| TaskID | |||

| teams_users | Participant | ||

| user_id | ParticipantID | ||

| team_id | |||

| AppID | |||

| TABLE NAME | participants | Participant | |

| ATTRIBUTES | user_id | ParticipantID | |

| id | |||

| AppID | |||

| TABLE NAME | ActorParticipant | ||

| ATTRIBUTES | ActorID | ||

| ParticipantID | |||

| RoleID | |||

| TABLE NAME | Enrollment | ||

| ATTRIBUTES | ParticipantID | ||

| AppID | |||

| CourseID | |||

| TABLE NAME | Teams | Artifact | |

| ATTRIBUTES | ActorID | ||

| TaskID | |||

| name | ArtifactContent | ||

| NULL | ArtifactCharLength | ||

| NULL | ArtifactOpen | ||

| NULL | ArtifactEnd | ||

| TABLE NAME | Item | ||

| ATTRIBUTES | ItemContent | ||

| ArtifactId | |||

| TABLE NAME | Review | ||

| ATTRIBUTES | AssessorActorID | ||

| TaskID | |||

| AssessedArtifactID | |||

| CritiqueArtifactID | |||

| TABLE NAME | ReviewCriterionLevel | ||

| ATTRIBUTES | AssessorActorID | ||

| TaskID | |||

| AssessedArtifactID | |||

| CriterionID | |||

| CritiqueArtifactID | |||

| EvalMode | |||

| EvalLevelID | |||

| ValueOrdinal | |||

| ValueCardinal | |||

| Comment |

Implementation

We have used an Extract, Transform and Load ETL tool, Pentaho Kettle to perform the data migration from Expertiza to PRML database.

The Pentaho Kettle tool extracts data from the expertise schema, performs transformation on it and load the data in the PRML format. The tables are mapped as shown in the schema map above. The operations performed by the ETL tool:

Extraction The data is extracted from the expertise database and brought into the staging area by this operation of the ETL tool. It is the staging area in which all the transformation are done.

Transformation Many operations are performed on the data in the staging area so that the expertise schema can be converted to the PRML schema. This is called transformation of the data. An example of transformation is while mapping of participants table in the expertiza database to actors table in the PRML database. Each value of the id field is incremented by 1000 while inserting in the participants table.

Loading: The transformed data when moved from the staging area to the PRML database is called as loading. There are three types of loading implemented on the tables depending on the size of the table – initial load,partial loading and full loading. Partial Loading: Only the new or updated entries in the expertiza table are transformed and loaded in the corresponding PRML table. This is generally done for table with very large number of entries. Eg: Reviews

Partial loading is implemented with the help of timestamps and checksums.

Timestamp: One way to perform partial loading is to have a 'modified' time stamp in the source table. The destination table should mirror this 'modified' time stamp. Then while loading the destination table we extract only those records from the source table which has a 'modified' time stamp value greater than the latest 'modified' time stamp value in the destination table. The advantage is that we need not fetch all records from the source table. Only those records which needs to be updated in the destination table will be retrieved. (refer load_prml_ModifiedTimeStampTable transformation)

Checksum: Another way to perform partial loading is using checksum. A checksum is computed for each record, on selected field values, of the source table and added to the destination table while initial loading. Loading which follow would again compute the checksum for source table records and if there is no matching checksum in the destination table an update or an insert would be performed. (refer load_prml_checksumtable transformation)

Full Loading: All the entries from the expertiza table are transformed and loaded in the corresponding PRML table. This is generally done for tables with less number of entries. Eg: Courses

Expertiza tables which have been implemented

Assignments Table - Full Load

Course Table - Full Load

ModifiedTimeStamp Table - Partial Load

Task Table

Criteria Table

Due_Dates Table

Questionnaire Table

Teams Table

Jobs are being performed in a sequential order in the current project. If the tables do not depend on one another they can be performed in a parallel way to reduce time. Initially the entries in the table are buffered so that only around 8000 rows are processed at a time.

Testing

We have tested our conversion by using a set of sample expertiza data. We run the extract, transform and load methodology on it. Queries are run on the PRML database to check if it gives the same results as the expertiza database. Though the testing is not through, this is the only way the project can be tested.

Running the Project

Required Software:

- Pentaho’s Data Integration (Kettle).

- MySQL

- JAVA

Setup:

- Download and Install MySQL and JAVA

- Import to MySQL expertiza database dump file.

- Import to MySQL PRML database dump file.

- Download Pentaho's Data Integration(Kettle) Community Edition (its a copy deployment hence no installation is required).

- Download MySql Connector jar file and copy to <pentaho_root_directory\data-integration\lib>

- Start Spoon.bat if you are using a windows machine or Spoon.sh if you using Linux (pentaho_root_directory\data-integration)

- Download the transformations and jobs attached

- You can open a kettle transformation (.ktr file) using Spoon and run it (Make sure proper MySql DB connections are configured)

- You may also open the kettle job (.kjb file) using spoon and run it (Make sure the path for transformations in the job are correct)