CSC/ECE 506 Spring 2015/5b SA: Difference between revisions

| (129 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

[http://courses.ncsu.edu/csc506/lec/001/homework/ch5_6.html Problem Description] | |||

==Introduction== | ==Introduction== | ||

Parallelization of computations in the presence of dynamic data structures has shown immense potential. Here we discuss | Parallelization of computations in the presence of dynamic data structures has shown immense potential. Here we discuss | ||

how can we parallelize linked data structures [[#References|<sup>[7]<sup>]]. Efficiently exploiting parallelism is fundamental in parallel computing even in the context of | how can we parallelize linked data structures [[#References|<sup>[7]<sup>]]. Efficiently exploiting parallelism is fundamental in parallel computing even in the context of linked data structures. Some amount of '''speculation''' is needed to figure out how to exploit it the best sometimes based on the expected structure of the data. | ||

The idea is to introduce '''concurrency'''[[#References|<sup>[6]</sup>]] in the existing data structures to enable '''multi-threading''' and hence parallelism. We need to handle synchronization since we need concurrency. We discuss the major | The idea is to introduce '''concurrency'''[[#References|<sup>[6]</sup>]] in the existing data structures to enable '''multi-threading''' and hence parallelism. We need to handle synchronization since we need concurrency. We discuss the major approaches in parallelizing data structures and then discuss some algorithms existing in the literature to gain more insight into the process. | ||

==Overview== | ==Overview== | ||

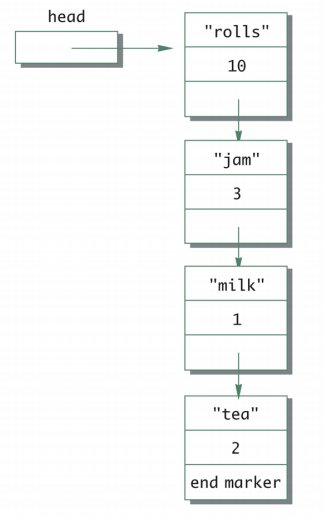

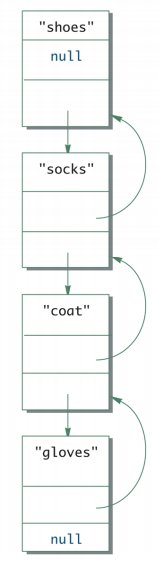

The simplest linked data structure we can think of is a linked list. A single linked list with one pointer pointing to the next node in the list or double linked list where nodes have two pointers pointing to the adjacent nodes to its left and right in the linked list are the most commonly used linked data structures. Following figures are some examples of the same. The first shows the head node to a singly linked list and the second shows a | The simplest linked data structure we can think of is a linked list. A single linked list with one pointer pointing to the next node in the list or double linked list where nodes have two pointers pointing to the adjacent nodes to its left and right in the linked list are the most commonly used linked data structures. Following figures are some examples of the same. The first shows the head node to a singly linked list and the second shows a doubly linked list. | ||

[[File:linked-list2.jpg | [[File:linked-list2.jpg|thumbnail|left|upright=1.9|600px|A linked List]] [http://idb.csie.ncku.edu.tw/tsengsm/COURSE/Java/Slides/Java-15-Linked_Data_Structure.pdf] [[File:linked-list1.jpg|thumbnail|center|Linked List Traversal]] | ||

| Line 16: | Line 18: | ||

Linearizability is an important property of operations that may be executed concurrently | Linearizability is an important property of operations that may be executed concurrently | ||

[Herlihy and Wing(1990)][[#References|<sup>[3]</sup>]][http://dl.acm.org/citation.cfm?id=78972]. An operation | [Herlihy and Wing (1990)][[#References|<sup>[3]</sup>]][http://dl.acm.org/citation.cfm?id=78972]. An operation is linearizable if the rest of the system observes the corresponding system state change as if it occurred | ||

the rest of the system observes the corresponding system state change as if it occurred | |||

instantaneously at a single point in time after the operation started and before it finished. | instantaneously at a single point in time after the operation started and before it finished. | ||

This property ensures that all the linearizable operations in the program have a mutual | This property ensures that all the linearizable operations in the program have a mutual | ||

order observed in the same way by the entire system. | order observed in the same way by the entire system. Basically linearizability is the converse of serializability. It is an | ||

assurance about single operations on single objects. Linearizability helps in maintaining consistency for parallel or distributed systems dealing with concurrent objects. [[#References|<sup>[16]</sup>]] | |||

As mentioned before concurrency needs to be enabled for parallel linked data structures. In that context, linearizability is a useful property to have for concurrent operations. It can identify a single instruction or sub-operation which | As mentioned before concurrency needs to be enabled for parallel linked data structures. In that context, linearizability is a useful property to have for concurrent operations. It can identify a single instruction or sub-operation which | ||

| Line 29: | Line 32: | ||

Unlike data structures like arrays, linked lists are unsuitable for naive parallelization methods which employ divide and | Unlike data structures like arrays, linked lists are unsuitable for naive parallelization methods which employ divide and | ||

conquer policy | conquer policy. They split the input and assign them to multiple workers for parallel processing. Splitting an arbitrary linked list requires traversing half of its elements. For too many operation instances such an O(n) splitting is | ||

unacceptable. Hence, intuitively, it is more efficient to convert the linked list into an array and then use splitters | unacceptable. Hence, intuitively, it is more efficient to convert the linked list into an array and then use splitters | ||

on the array. One thing to be noticed is that these data structures does not retain information about the relative order of elements, hence the operators applied to their data-parallel operations need to be '''commutative'''. | on the array. One thing to be noticed is that these data structures does not retain information about the relative order of elements, hence the operators applied to their data-parallel operations need to be '''commutative'''. | ||

The major challenges of linked data structure parallelization are: | |||

# Loosely coupled data, dynamically created, static partitioning ahead will not help | |||

# Scatter, gather, map, sort are some basic parallel techniques used for parallelizing static data structures like arrays. Since, node traversal is costly and list keeps changing with time, static allocation of nodes to threads is incorrect here. | |||

# Unlike arrays, search in linked list needs to be optimized, since searching in linked list is costlier than arrays which has indices. Thus search optimization is a concern in parallelization of linked data structures. | |||

Linearizability property as mentioned before guarantees that the entire system is in a consistent state during concurrent operations. This property helps in parallelizing dynamic data structures. '''The way to achieve linearizability is to run atomic operations in critical sections where multiple threads concurrently content to work upon'''. In the following examples we shall see how CAS (compare and set) are used those atomic operations and locks are used to achieve linearizability. | |||

As mentioned before, a linked list data structure consists of a set of nodes such that each node points to | As mentioned before, a linked list data structure consists of a set of nodes such that each node points to | ||

| Line 39: | Line 51: | ||

=== ''Example 1 Parallelized Linked List'' === | === ''Example 1 LOCK FREE Parallelized Linked List'' === | ||

The following code snippet shows a parallelized linked list. [http://axel22.github.io/resources/docs/my_thesis.pdf] | The following code snippet shows a parallelized linked list. [http://axel22.github.io/resources/docs/my_thesis.pdf] | ||

| Line 79: | Line 91: | ||

</code> | </code> | ||

[[#References | <sup>[11]<sup>]] and [[#References | <sup>[12]<sup>]] discuss mutex/lock free parallelization of linked data structures which are more in vogue in the recent times. | |||

In the above code snippet, the linked list itself is considered as a stack of elements. In the concrete example above the immutable Lists from the '''Scala standard library''' has been used. Since, multiple workers will work on the elements of this list, we need to have atomic read and '''Compare-and-Set''' or CAS operations. | In the above code snippet, the linked list itself is considered as a stack of elements. In the concrete example above the immutable Lists from the '''Scala standard library''' has been used. Since, multiple workers will work on the elements of this list, we need to have atomic read and '''Compare-and-Set''' or CAS operations. | ||

| Line 84: | Line 98: | ||

The JVM defines volatile variables the writes to which are immediately visible to | The JVM defines volatile variables the writes to which are immediately visible to | ||

all other threads. In Java these variables are defined with the volatile keyword | all other threads. In Java these variables are defined with the volatile keyword | ||

before the declaration, and in Scala with the ''@volatile'' annotation. Whenever | before the declaration, and in Scala with the ''@volatile'' annotation. Whenever a volatile variable x is read, the notation READ(x) is used, to make it explicit that this read is atomic, i.e. it can represent a | ||

linearization point. When writing the value v to the volatile variable, the notation WRITE(x, v) is used. | linearization point. When writing the value v to the volatile variable, the notation WRITE(x, v) is used. | ||

A special compare-and-set or CAS instruction atomically checks if the target memory | A special compare-and-set or CAS instruction atomically checks if the target memory | ||

location is equal to the specified expected value and, if it is, writes the new value, returning | location is equal to the specified expected value and, if it is, writes the new value, returning | ||

true. Otherwise, it returns false. | true. Otherwise, it returns false. | ||

The stack is manipulated using atomic READ and CAS operations to ensure that every element is assigned to exactly one worker. After reading the stack field in line '''''val stack = inv.READ''''' , every worker checks whether it is empty, indicating | The stack is manipulated using atomic READ and CAS operations to ensure that every element is assigned to exactly one worker. After reading the stack field in line '''''val stack = inv.READ''''' , every worker checks whether it is empty, indicating | ||

there is no more work. If the list is non-empty, every worker attempts to replace the | there is no more work. If the list is non-empty, every worker attempts to replace the | ||

current list by its tail with the CAS line '''''if (inv.CAS(stack, tail)) workOn(stack)'''''. Success means that only that worker replaced, so he gains ownership of that element – he must call workOn to process the element. If the CAS is not successful, the worker retries by calling the tail-recursive compute again. | current list by its tail with the CAS line '''''if (inv.CAS(stack, tail)) workOn(stack)'''''. Success means that only that worker replaced, so he gains ownership of that element – he must call workOn to process the element. If the CAS is not successful, the worker retries by calling the tail-recursive compute again. | ||

The algorithm is correct – a successful CAS | The algorithm is correct – a successful CAS guarantees that the stack field has | ||

not changed its value since | not changed its value since it has been READ. No linked list suffix will ever appear | ||

more than once in the field stack because there are no more loops in the linked list | more than once in the field stack because there are no more loops in the linked list | ||

when following the tail pointer. Furthermore, every linked list node will appear in the | when following the tail pointer. Furthermore, every linked list node will appear in the | ||

stack field at least once since the list is connected. Together, these properties ensure | stack field at least once since the list is connected. Together, these properties ensure | ||

that every linked list element is assigned to exactly one worker. | that every linked list element is assigned to exactly one worker. | ||

Thus as we see CAS writes a new value to a location based on the comparison with a supplied old value. This is the atomic | |||

operation used to achieve linearizability here. | |||

The algorithm shown in above is '''''lock-free''''' – although many threads compete to | The algorithm shown in above is '''''lock-free''''' – although many threads compete to | ||

| Line 107: | Line 122: | ||

on the size of the data structure or the number of competing workers, but in this case | on the size of the data structure or the number of competing workers, but in this case | ||

the number of steps is constant and equals the number of instructions between two | the number of steps is constant and equals the number of instructions between two | ||

consecutive CAS calls. In the next example [[#Example 2 Parallelized Queue Linked List]] we show parallelization through '''mutex locks'''. | consecutive CAS calls. In the next example [[#Example 2 LOCK based Parallelized Queue Linked List]] we show parallelization through '''mutex locks'''. | ||

=== ''Example 2 Parallelized Queue Linked List'' === | === ''Example 2 LOCK based Parallelized Queue Linked List'' === | ||

Now, lets consider the queue data structure based on single linked list. The following code snippet explains | Now, lets consider the queue data structure based on single linked list. The following code snippet explains | ||

| Line 117: | Line 132: | ||

<code> #include <pthread.h> </code> | <code> #include <pthread.h> </code> | ||

<code> #include <list.h> | <code> #include <list.h> </code> | ||

<code> | <code> | ||

| Line 138: | Line 153: | ||

pthread_mutex_t _lock; | pthread_mutex_t _lock; | ||

} | } | ||

}; | }; | ||

</code> | </code> | ||

| Line 182: | Line 198: | ||

<code> | <code> | ||

template <typename T> | template <typename T> | ||

class Queue { | class Queue { | ||

public: | public: | ||

| Line 223: | Line 240: | ||

Now, as we discussed the mutex lock based approach, it possesses some problems: | Now, as we discussed the mutex lock based approach, it possesses some problems: | ||

# Waiting on mutexes consumes considerable time. This delay though have negative effects on system scalability. | # Waiting on mutexes consumes considerable time. This delay though have negative effects on system scalability. | ||

# Lower-priority threads could acquire a mutex, thus halting higher-priority threads that require the same mutex to proceed. This problem is known as the famous priority inversion. | # Lower-priority threads could acquire a mutex, thus halting higher-priority threads that require the same mutex to proceed. This problem is known as the famous priority inversion.[[#References | <sup>[8]<sup>]] | ||

# A thread holding a mutex can be de-scheduled, perhaps because it was the end of its time-slice. For other threads waiting on the same mutex, this has a negative effect, because the wait time is now even longer. This problem is known as lock convoying. | # A thread holding a mutex can be de-scheduled, perhaps because it was the end of its time-slice. For other threads waiting on the same mutex, this has a negative effect, because the wait time is now even longer. This problem is known as lock convoying. [[#References | <sup>[9]<sup>]] | ||

Thus, issues with mutexes has compelled developers to come up with solutions that donot use mutexes like [[#Example 1 Parallelized Linked List]]. | Thus, issues with mutexes has compelled developers to come up with solutions that donot use mutexes like the Compare and swap (CAS) synchronization primitive used in [[#Example 1 LOCK FREE Parallelized Linked List]]. | ||

Although | Although mutex locks are tricky to use, they are definitely worth the attention for better performance. As apparent, locks are naive ways to achieve linearizability which enable '''blocking synchronization''' since it prevents other threads from updating or writing when one thread is in the critical section. This is different from '''directly using atomic operations''' and hence suffers from significant overheads of lock contention. | ||

This section provides some insights to the designing of parallelized linked data structures that are amenable to concurrent access. As seen, the design choices could be mutex based or lock-free. Either way, both require ways of thinking that go beyond the traditional functionality of these data structures. We need to be aware of preemption and how the thread resumes when it is rescheduled. At this juncture the solution on the lock-free side of things are more platform/compiler oriented. The Boost library for an implementation of threads and locks and John Valois's paper on lock-free linked lists provide | |||

ways to implement in a lock-free way. [[#References | <sup>[11]<sup>]] [[#References | <sup>[12]<sup>]] can be referred for more pointers. | |||

==Algorithms== | ==Algorithms== | ||

| Line 233: | Line 254: | ||

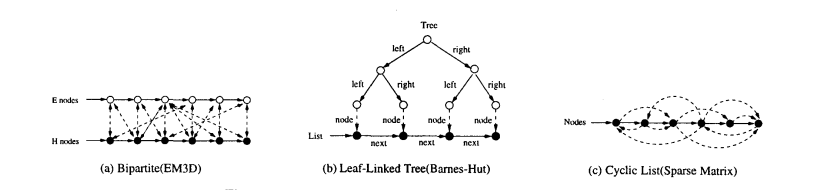

There are several parallelization techniques possible for linked lists. But, each data structure needs to be reviewed on a per-case basis. This means a programmer needs to assess the codes carefully before deciding on which parallelization strategy is best applicable. | There are several parallelization techniques possible for linked lists. But, each data structure needs to be reviewed on a per-case basis. This means a programmer needs to assess the codes carefully before deciding on which parallelization strategy is best applicable. | ||

After parallelism is identified, programs can be parallelized and then executed on parallel multiprocessor systems to achieve good performance. | After parallelism is identified, programs can be parallelized and then executed on parallel multiprocessor systems to achieve good performance. [http://en.wikipedia.org/wiki/Automatic_parallelization_tool Good compiler analysis techniques] can only analyze parallelism in the traversal operations in programs with dynamic pointer-linked data structures. As a result, only the traversal operations will be parallelized while the construction operations will be executed in sequential. Although the traversal phase of such programs, which usually account for over 90% of total execution times, can be parallelized, the rest of programs that accounts for less than 10% will dominate the execution on multiprocessor systems.[[#References|<sup>[13]</sup>]][http://www.iis.sinica.edu.tw/PARALLEL/cthpc/7th/18CTHPC01.pdf] | ||

Let us now look at a few algorithms for achieving parallelism in different Linked Data Structures: | Let us now look at a few algorithms for achieving parallelism in different Linked Data Structures: | ||

| Line 240: | Line 261: | ||

The most easy and intuitive approach to parallelization is to parallelize the traversal of LDS. There are no conflicts between Reads among different threads in a Data Structure. Next, we try to introduce parallelism among the different construction operations – Add and Delete. This means that while different threads can simultaneously operate on two different parts of the Data Structure, no two threads will operate on a single(or adjacent) nodes at the same time. | The most easy and intuitive approach to parallelization is to parallelize the traversal of LDS. There are no conflicts between Reads among different threads in a Data Structure. Next, we try to introduce parallelism among the different construction operations – Add and Delete. This means that while different threads can simultaneously operate on two different parts of the Data Structure, no two threads will operate on a single(or adjacent) nodes at the same time. | ||

This approach gives rise to the implementation of locks. Locks can be used to define a critical section, which can only be accessed by one thread at a time. | This approach gives rise to the implementation of locks. Locks can be used to define a critical section, which can only be accessed by one thread at a time. [http://books.google.com/books/about/Fundamentals_of_Parallel_Computer_Archit.html?id=jPHzPgAACAAJ Fundamentals of Parallel Computer Architecture] very well defines the techniques for implementing a lock on a simple LDS – the linked list. We will not go through the details, as our focus remain on the techniques not introduced before: | ||

'''Readers Lock''' | '''Readers Lock''' | ||

| Line 247: | Line 268: | ||

'''Global Lock''' | '''Global Lock''' | ||

This technique is fairly easy to implement, as it contains only one global lock for the entire Linked Data Structure. As before, threads can traverse the LDS in parallel and identify the node they want to modify. To Modify(add/delete), each thread must acquire a Global Lock. As every thread contends for only one global lock, there is no possibility of deadlocks or livelocks. This approach has enabled us to increase the degree of parallelism by getting rid of Read Locks. However, we still have more scope of parallelism as modification operations can also execute in parallel as long as they are not on the same or adjacent nodes. | |||

'''Fine Grain Lock''' | '''Fine Grain Lock''' | ||

===The Cyclic | This technique implements a Read Lock and Write Lock for each node. A node that is read and must remain valid for other operations to complete must be Read Locked. A node that is to be modified must remain Write Locked. In this approach, multiple nodes may be read and modified in parallel, but each node has locks which allows only one thread to manipulate it at a time. | ||

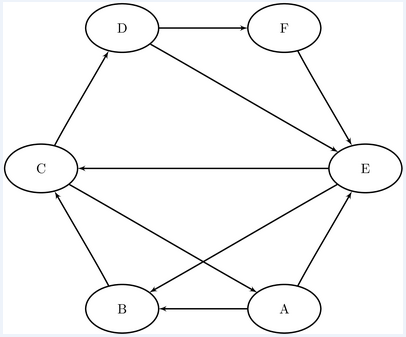

===The Cyclic Graph Parallelization=== | |||

The following section [http://www.iis.sinica.edu.tw/PARALLEL/cthpc/7th/18CTHPC01.pdf] gives an approach that can identify parallelism based on data dependence and shape analysis on programs even with cyclic graphs. If traversal operations on pointer-linked data structures in a program can be executed in any order, it implies the nodes of the traversed data structures can be connected in any order. Parallelism in construction( Modify) operations exists if this condition holds, i.e. nodes of pointer-linked data structures in the program can be connected in any order. One typical example is the list representation of adjacent matrixes, each of which can represented by a list of lists. The lists of such a list of lists can be built independently. | |||

[[File:Cycle1.png|thumbnail|500px|left|An example of a Cyclic Graph]] | |||

[[File:Acyclic1.PNG|thumbnail|900px|center|Examples of Acyclic Graphs]] | |||

The technique can be divided into the following steps: | |||

'''1. Compute definition - use chains''' | |||

Each traversal operation corresponds to a read reference on pointer-linked data structures, while each construction operation corresponds to a write reference. Commonly, people refer to a read reference as '''use''' and to a write reference as '''definition''', the relationships between construction operations and traversal operations of dynamic pointer-linked data structures can be represented by [http://www.cs.rice.edu/~keith/512/2009Lectures/11DUChains.pdf definition-use chains] of the data structures. | |||

'''2. Recognize parallelism in traversal operations ''' | |||

As mentioned above, if we're able to identify parallelism in the traversal operation such that traversal can take place in any order within the Cyclic graphs, then this means that we can modify the graph in any order. However, if there is no parallelism among the traversal techniques, we might have to follow the serial ordering for construction operations. | |||

'''3. Identify parallelism in construction operations''' | |||

With the use of Locks, ensure that each Construction operation can be executed in parallel with respect to another operation. A node may not be allowed to be modified before a thread has completed an operation on it. | |||

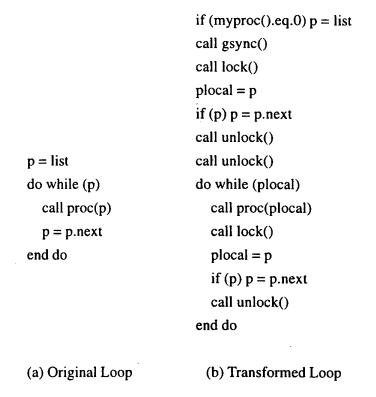

[[File:codexy.PNG|thumbnail|600px|left|Transformation of a Simple Loop Traversal to Parallel Loop Traversal[http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=876120]]] '''Implementation'''[[#References|<sup>[17]</sup>]][http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=876120] | |||

Now let us have a look at the implementation of Parallelism of Cyclic Graphs from a higher level language perspective. The code given below transforms a Simple Loop traversal of a data structure to a Parallel Loop traversal which can be implemented by several threads. | |||

First, notice that '''Part a''' defines the original loop we use for traversing a Data Structure. Here, '''p''' is a Global pointer that is initialized to the list head. The procedure '''call proc(p)''' basically performs the required operation on the given pointer location(here, a Node in the Data Structure). '''p = p.next''' changes the global variable to point to the next node, and '''do while''' keeps on traversing the data structure on valid nodes. Hence, the structure clearly shows that this kind of algorithm is serialized, and no scope of parallelism is present as each node is visited serially. | |||

However, '''part b''' gives an example of how we can parallelize such loops. First notice the use of '''call gsync''' and '''call lock''' at the beginning of the code. '''gsync''' is a type of lock that synchronizes all threads entering into the critical section in order of their PIDs. For example, Thread 0 (or Processor 0) initializes the global pointer '''p''' to the list head and is the first to enter the critical section. Once the critical section is entered, it updates its local pointer variable '''plocal''' to point to the first Node of the Data Structure. Similarly, all threads will enter the critical section and update their '''plocal''' variables to point to a unique node, as determined by the traversal pattern. | |||

Now, each thread can enter the '''do while''' loop in parallel to others and execute the required operation '''call proc''' on its assigned node. So, we see that knowing the traversal pattern in advance helps us in achieving parallelism. Here, the lock and unlock sections are present inside the loop so as the first thread that completes its task can now update its '''plocal''' to the next available '''p''' inside a critical section. | |||

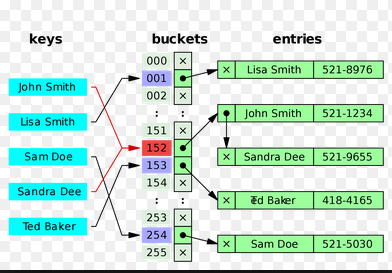

===Hash Tables=== | ===Hash Tables=== | ||

Now let us focus on algorithms for a hash table. Note that a hash table works in key-value pairs as shown below: | |||

[[File:Hash_tab.png|thumbnail|500px|left|An example of a Simple Hash Table]] | |||

Let us begin with the code snippet for implementing Parallelization of a simple fixed Hash Table:[[#References|<sup>[14]</sup>]][http://pages.cs.wisc.edu/~remzi/OSTEP/threads-locks-usage.pdf] | |||

<code> | |||

#define BUCKETS (100) | |||

typedef struct __hash_t { | |||

list_t lists[BUCKETS]; | |||

} hash_t; | |||

void Hash_Init(hash_t *H) { | |||

int i; | |||

for (i = 0; i < BUCKETS; i++) { | |||

List_Init(&H->lists[i]); | |||

} | |||

} | |||

</code> | |||

The above code initializes the fixed data structure. Here, each data structure initialization is assigned to a set of lists. These can later be accessed with the use of keys. Here, each instantiated object can contain upto a hundred elements. | |||

<code> | |||

int Hash_Insert(hash_t *H, int key) { | |||

int bucket = key % BUCKETS; | |||

return List_Insert(&H->lists[bucket], key); | |||

} | |||

int Hash_Lookup(hash_t *H, int key) { | |||

int bucket = key % BUCKETS; | |||

return List_Lookup(&H->lists[bucket], key); | |||

} | |||

</code> | |||

Here, an example of a Modify Operation (Hash_Insert) and a Traversal Operation (Hash_Lookup) is given. Each data structure has a list, whose values can be accessed using a key. | |||

The key point here is that this hash table can be implemented using a concurrent linked list, which we have come across numerous times. An example code of the Linked List is shown below. | |||

<code> | |||

// basic node structure | |||

typedef struct __node_t { | |||

int key; | |||

struct __node_t *next; | |||

} node_t; | |||

// basic list structure (one used per list) | |||

typedef struct __list_t { | |||

node_t *head; | |||

pthread_mutex_t lock; | |||

} list_t; | |||

void List_Init(list_t *L) { | |||

L->head = NULL; | |||

pthread_mutex_init(&L->lock, NULL); | |||

} | |||

int List_Insert(list_t *L, int key) { | |||

pthread_mutex_lock(&L->lock); | |||

node_t *new = malloc(sizeof(node_t)); | |||

if (new == NULL) { | |||

perror("malloc"); | |||

pthread_mutex_unlock(&L->lock); | |||

return -1; // fail | |||

} | |||

new->key = key; | |||

new->next = L->head; | |||

L->head = new; | |||

pthread_mutex_unlock(&L->lock); | |||

return 0; // success | |||

} | |||

int List_Lookup(list_t *L, int key) { | |||

pthread_mutex_lock(&L->lock); | |||

node_t *curr = L->head; | |||

while (curr) { | |||

if (curr->key == key) { | |||

pthread_mutex_unlock(&L->lock); | |||

return 0; // success | |||

} | |||

curr = curr->next; | |||

} | |||

pthread_mutex_unlock(&L->lock); | |||

return -1; // failure | |||

} | |||

</code> | |||

This data structure is parallelized by representing each hash bucket as a list, and using a lock per hash bucket. Hence, implementing a data structure using a LDS has helped create a Concurrent Hash Table. | |||

===PLDS=== | |||

PLDS stands for '''partitioning linked data structures for parallelism'''. PLDS exploits parallelism by dividing a dynamic linked data structure and distributing computations across threads that operate | |||

on that data. The execution flow starts off with creation of a set of threads each of which are bound to a processor core. | |||

After the master thread executes the sequential part of the program, the parallel region is divided via a chosen partitioning strategy to map partitions to threads. Thus, assigned partitions are worked upon by the respective threads skipping or redistributing computations on other partitions. The above execution model of PLDS provides the following entities: | |||

==== Partitioning Support ==== | |||

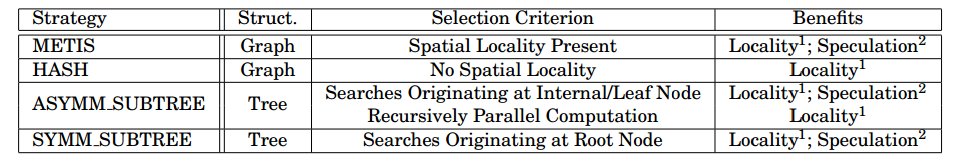

Based on dynamic linked data structures like graphs and trees, PLDS provides METIS and HASH for graphs and SYMM_SUBTREE and ASYMM_SUBTREE for trees along with additional option of customization as partitioning strategies. Following table summarizes | |||

the same: | |||

[[File:table1_plds.jpg]] | |||

For graphs, in the presence of spatial locality METIS is good, in the absence of spatial locality HASH is good. | |||

In METIS, computations are randomly assigned to threads. To improve locality, nodes closer in the graph are grouped into one partition assigned to a thread. Each thread then performs computations on nodes in its own partition. The cache locality | |||

is enhanced. If threads are executed speculatively in parallel, METIS results in low misspeculation rate since it is less likely that two parallel threads will access the same nodes. The following diagrams elucidates the two graph partitioning strategies. | |||

[[File:metis_hash.jpg]] | |||

In HASH strategy, graphs lack spatial locality, nodes are hashed into partitions. Each thread is assigned a partition via hashing and the thread only processes the nodes in its own partition. This works well in load balancing in a BFS (Breadth First Search) kind of graph. | |||

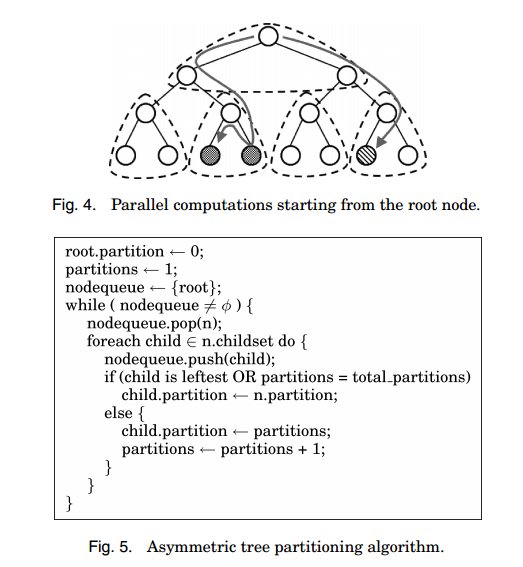

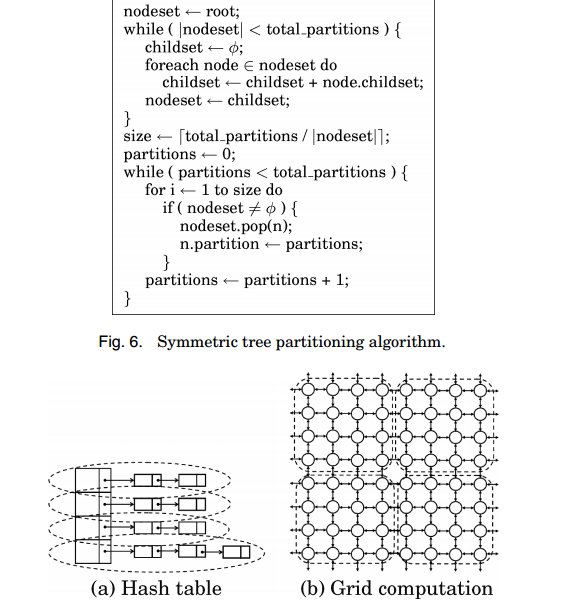

Asymm_Subtree (Figure 4 and 5) is for tree data structures where computation start from internal or leaf nodes and goes recursive across a subtree or towards the root. Symm_Subtree(Figure 6) is for computations starting from the root node moving towards the internal or leaf nodes. Following figures and pseudo code elucidates the same. For further minute details please go through [[#References|<sup>[10]<sup>]]. | |||

[[File:asymm.jpg]] [[File:manual.jpg]] | |||

When programmers know the shape of the data structures, ahead, they can specify strategy like in Figure a) shows hash table with an array of linked list and Figure b) above shows grid computation. | |||

==== Programming Support ==== | |||

The programming support is with OPEN-MP like pragmas. There can be homogeneous, heterogeneous, and speculative kind of | |||

support to incorporate partitioning strategies in applications. Both homogeneous and heterogeneous supports are non speculative approaches. | |||

===== Homogenous Parallel Region ===== | |||

<code> # pragma homo partition (DataType,PartitionType) </code> | |||

<code> # pragma inpartition (partition) </code> | |||

The first pragma marks the code region that is to be executed in parallel and where partitioning is to be applied. The homo clause indicates that homogeneous parallelism is to be used and the partition clause indicates the type of partitioning to be used. The second pragma is used to check in which thread the subsequent computation should be performed. The current performs | |||

the specified computation if the partitiontype is the one which is assigned to it. | |||

===== Heterogeneous Parallel Region ===== | |||

<code> #pragma hetero partition (DataType,PartitionType) </code> | |||

<code> #pragma distribute inpartition (partition) </code> | |||

<code> #pragma join </code> | |||

The first pragma marks the code region that is to be executed | |||

in parallel and where partitioning is to be applied. The hetero clause indicates that | |||

heterogeneous parallelism is to be used and the partition clause indicates the type | |||

of partitioning to be used. The second pragma is used to check in which thread the | |||

subsequent computation should be performed. The distribute clause indicates that | |||

the pragma will try to assign the subsequent computation to another thread to which | |||

the partition belongs so that the subsequent computation can be performed in parallel. The third pragma, join, is used for synchronization between threads. | |||

===== Speculative parallelism ===== | |||

<code> #pragma [conditional] speculate </code> | |||

<code> #pragma repartition </code> | |||

<code> #pragma touchpostpone(partition) </code> | |||

The first pragma is used to mark the code region that requires speculative execution | |||

to enforce atomicity. When theconditionalclause is not specified, standard speculation | |||

mechanism is used, when specified, the conditional speculation mechanism is | |||

used for the region where speculation is only used for computations that dynamically | |||

are determined to require access to multiple threads’ partitions. The second pragma is | |||

used to incrementally assign partitions to newly created nodes. The third pragma is used to assist in conditional speculation. It postpones the execution of current computation if the given partition is not a local partition. The | |||

postponed computation is later executed using standard speculation mechanism. | |||

PLDS prototype is implemented and evaluated with multiple number of threads to see the efficacy. For our focus on parallelized linked data structures, the partitioning and programming strategy gains paramount importance since, it shows some unique heuristics to partition data and do computation on it. | |||

==Use cases and Applications== | ==Use cases and Applications== | ||

Note Below, some references of organizations which implement parallel data structures: | |||

'''[http://www.ibm.com/developerworks/aix/library/au-multithreaded_structures1/ 1. IBM]''' | |||

Implements concurrent data structures in a multithreaded environment. It uses the POSIX and BOOST thread libraries. | |||

'''[https://msdn.microsoft.com/en-us/library/dd460718(v=vs.110).aspx 2. Microsoft]''' | |||

The .NET Framework implements a collection of Concurrent Classes with synchronization primitives. | |||

'''[http://http.developer.nvidia.com/GPUGems2/gpugems2_chapter33.html 3. GPUs]''' | |||

today efficiently make use of data structures to implement 2D Memory arrays, Parallel read/write, Sort/Scan etc. | |||

==Conclusions== | ==Conclusions== | ||

To understand parallelization with data structures, we need to look into what program we're dealing with. Each code can be parallelized based on its data dependencies, code structure and the underlying algorithm. When we use a Data Structure, it defines the basic code structure for the complete program. Hence, to achieve parallelization, the very nature of the basic data structure must have the components for enabling parallelism. We implement this for the simplest linked data structure - the linked lists- by using locks. Then we move to the more complex structures - cyclic graph and then the hash tables. Cyclic graphs can be tricky, in the sense that to achieve concurrency, we have to identify a traversal algorithm which enables threads to access each node within the graph independently. Hash Tables however, when implemented using the basic linked lists (for which parallelism is enabled), inherently give way to parallel implementations. | |||

To summarize, we have seen lock or mutex based approaches to parallelize linked lists which has concerns like priority inversion etc. We have lock free approaches like CAS (compare and set) which is in vogue now. We have also seen in PLDS the unique ways of graph and tree partitioning to parallelize computation based on the structure like root to leaf or leaf to root, has spatial locality or not etc. These ideas definitely gives us some insight into the different ways of handling dynamic data structures in the context of our work. | |||

==References== | ==References== | ||

| Line 281: | Line 572: | ||

9. http://en.wikipedia.org/wiki/Lock_convoy | 9. http://en.wikipedia.org/wiki/Lock_convoy | ||

10. http://dl.acm.org/citation.cfm?id=2086717&dl=ACM&coll=DL - Partitioning linked data structures for parallelism by Min Feng, Changhui Lin, Rajiv Gupta | |||

11. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.41.9506 - Lock-Free Linked Lists Using Compare-and-Swap by John D. Valois | |||

12. http://www.cl.cam.ac.uk/research/srg/netos/lock-free/ - Practical lock-free data structures | |||

13. http://www.iis.sinica.edu.tw/PARALLEL/cthpc/7th/18CTHPC01.pdf | |||

14. http://pages.cs.wisc.edu/~remzi/OSTEP/threads-locks-usage.pdf | |||

15. https://msdn.microsoft.com/en-us/library/dd460718(v=vs.110).aspx | |||

16. http://www.cs.toronto.edu/~christoff/files/Linearizability-ACorrectnessConditionForConcurrentObjects.pdf | |||

17. http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=876120 | |||

Latest revision as of 00:52, 18 March 2015

Introduction

Parallelization of computations in the presence of dynamic data structures has shown immense potential. Here we discuss how can we parallelize linked data structures [7]. Efficiently exploiting parallelism is fundamental in parallel computing even in the context of linked data structures. Some amount of speculation is needed to figure out how to exploit it the best sometimes based on the expected structure of the data. The idea is to introduce concurrency[6] in the existing data structures to enable multi-threading and hence parallelism. We need to handle synchronization since we need concurrency. We discuss the major approaches in parallelizing data structures and then discuss some algorithms existing in the literature to gain more insight into the process.

Overview

The simplest linked data structure we can think of is a linked list. A single linked list with one pointer pointing to the next node in the list or double linked list where nodes have two pointers pointing to the adjacent nodes to its left and right in the linked list are the most commonly used linked data structures. Following figures are some examples of the same. The first shows the head node to a singly linked list and the second shows a doubly linked list.

Linearizability

Linearizability is an important property of operations that may be executed concurrently [Herlihy and Wing (1990)][3][3]. An operation is linearizable if the rest of the system observes the corresponding system state change as if it occurred instantaneously at a single point in time after the operation started and before it finished. This property ensures that all the linearizable operations in the program have a mutual order observed in the same way by the entire system. Basically linearizability is the converse of serializability. It is an assurance about single operations on single objects. Linearizability helps in maintaining consistency for parallel or distributed systems dealing with concurrent objects. [16]

As mentioned before concurrency needs to be enabled for parallel linked data structures. In that context, linearizability is a useful property to have for concurrent operations. It can identify a single instruction or sub-operation which

changes the data structure state and is known to be itself linearizable. In example 1, we

will identify (Compare-and-Set) CAS instructions as linearization points.

Linked Data Structure parallelization

Unlike data structures like arrays, linked lists are unsuitable for naive parallelization methods which employ divide and conquer policy. They split the input and assign them to multiple workers for parallel processing. Splitting an arbitrary linked list requires traversing half of its elements. For too many operation instances such an O(n) splitting is unacceptable. Hence, intuitively, it is more efficient to convert the linked list into an array and then use splitters on the array. One thing to be noticed is that these data structures does not retain information about the relative order of elements, hence the operators applied to their data-parallel operations need to be commutative.

The major challenges of linked data structure parallelization are:

- Loosely coupled data, dynamically created, static partitioning ahead will not help

- Scatter, gather, map, sort are some basic parallel techniques used for parallelizing static data structures like arrays. Since, node traversal is costly and list keeps changing with time, static allocation of nodes to threads is incorrect here.

- Unlike arrays, search in linked list needs to be optimized, since searching in linked list is costlier than arrays which has indices. Thus search optimization is a concern in parallelization of linked data structures.

Linearizability property as mentioned before guarantees that the entire system is in a consistent state during concurrent operations. This property helps in parallelizing dynamic data structures. The way to achieve linearizability is to run atomic operations in critical sections where multiple threads concurrently content to work upon. In the following examples we shall see how CAS (compare and set) are used those atomic operations and locks are used to achieve linearizability.

As mentioned before, a linked list data structure consists of a set of nodes such that each node points to

another node in the set. A special node in this set is considered to be the head of the

linked list. We consider connected linked lists without loops, which means every node can be reached

from the head, no two nodes point to the same node and no node points at the root.

Example 1 LOCK FREE Parallelized Linked List

The following code snippet shows a parallelized linked list. [4]

:class Invocation[T](xs: List[T]) {

::@volatile var stack = xs

::def READ = unsafe.getObjectVolatile(this, OFFSET)

::def CAS(ov: List[T], nv: List[T]) = unsafe.compareAndSwapObject(this, OFFSET, ov, nv)

:}

:abstract class ListTask[T] extends RecursiveTask[T] {

::val inv: Invocation[T]

::def workOn(elem: T): Unit

::def compute() {

::: val stack = inv.READ

::: stack match {

:::: case Nil =>

::::// no more work

::: case head :: tail =>

:::: if (inv.CAS(stack, tail)) workOn(stack)

:::: compute()

:::}

::}

:}

:class ListForeach[T, U](f: T => U, val inv: Invocation[T]) extends ListTask[T] {

:: def workOn(elem: T) = f(elem)

:}

:implicit class ListOps[T](val par: Par[List[T]]) {

::def foreach[U](f: T => U) = {

:::val inv = new Invocation(par.seq)

:::val tasks = for (i <- 0 until P) yield new ListForeach[T](f, inv)

:::for (t <- tasks) t.fork()

:::for (t <- tasks) t.join()

::}

:}

[11] and [12] discuss mutex/lock free parallelization of linked data structures which are more in vogue in the recent times.

In the above code snippet, the linked list itself is considered as a stack of elements. In the concrete example above the immutable Lists from the Scala standard library has been used. Since, multiple workers will work on the elements of this list, we need to have atomic read and Compare-and-Set or CAS operations.

The JVM defines volatile variables the writes to which are immediately visible to all other threads. In Java these variables are defined with the volatile keyword before the declaration, and in Scala with the @volatile annotation. Whenever a volatile variable x is read, the notation READ(x) is used, to make it explicit that this read is atomic, i.e. it can represent a linearization point. When writing the value v to the volatile variable, the notation WRITE(x, v) is used. A special compare-and-set or CAS instruction atomically checks if the target memory location is equal to the specified expected value and, if it is, writes the new value, returning true. Otherwise, it returns false.

The stack is manipulated using atomic READ and CAS operations to ensure that every element is assigned to exactly one worker. After reading the stack field in line val stack = inv.READ , every worker checks whether it is empty, indicating there is no more work. If the list is non-empty, every worker attempts to replace the current list by its tail with the CAS line if (inv.CAS(stack, tail)) workOn(stack). Success means that only that worker replaced, so he gains ownership of that element – he must call workOn to process the element. If the CAS is not successful, the worker retries by calling the tail-recursive compute again. The algorithm is correct – a successful CAS guarantees that the stack field has not changed its value since it has been READ. No linked list suffix will ever appear more than once in the field stack because there are no more loops in the linked list when following the tail pointer. Furthermore, every linked list node will appear in the stack field at least once since the list is connected. Together, these properties ensure that every linked list element is assigned to exactly one worker.

Thus as we see CAS writes a new value to a location based on the comparison with a supplied old value. This is the atomic operation used to achieve linearizability here.

The algorithm shown in above is lock-free – although many threads compete to update the field stack at the same time, after a certain finite number of steps some thread will always complete its operation. In general this number of steps can depend on the size of the data structure or the number of competing workers, but in this case the number of steps is constant and equals the number of instructions between two consecutive CAS calls. In the next example #Example 2 LOCK based Parallelized Queue Linked List we show parallelization through mutex locks.

Example 2 LOCK based Parallelized Queue Linked List

Now, lets consider the queue data structure based on single linked list. The following code snippet explains a parallelized queue. [4] [5]

#include <pthread.h>

#include <list.h>

namespace concurrent {

template <typename T>

class Queue {

public:

Queue( ) {

pthread_mutex_init(&_lock, NULL);

}

~Queue( ) {

pthread_mutex_destroy(&_lock);

}

void push(const T& data);

T pop( );

private:

list<T> _list;

pthread_mutex_t _lock;

}

};

Multiple threads of control can simultaneously try to push data to the queue or remove data, so we need a mutex object to manage the synchronization. The constructor and destructor of the queue class are responsible for the creation and destruction of the mutex, as shown above.

For insertion and deletion of data from a concurrent queue, we need mutex locks.

What happens if multiple threads intend to append data to the queue? The first thread locks the mutex and appends data to the queue, while the other threads wait for their turn. The operating system decides which thread adds the data next in the queue, once the first thread unlocks or releases the mutex. By default, there is no real time priority threads, the thread waiting the longest is the next to wake up, acquire the lock, and append the data to the queue. Below snippet shows insertion:

procedure: Pushing data to the queue

void Queue<T>::push(const T& value ) {

: pthread_mutex_lock(&_lock);

: _list.push_back(value);

: pthread_mutex_unlock(&_lock);

}

The code for popping data out is similar. Below code snippet shows:

Procedure: Popping data from the queue

T Queue<T>::pop( ) {

: if (_list.empty( )) {

: throw ”element not found”;

: }

: pthread_mutex_lock(&_lock);

: T _temp = _list.front( );

: _list.pop_front( );

: pthread_mutex_unlock(&_lock);

: return _temp;

}

As seen from the above snippets, locking is done before pushing and popping and release locks after the operations are done. However, if we have a long queue, and there are significantly more threads reading data out of the queue than those appending data at some point during code execution, the read speed is slowed down as per the above implementation. The reason is we are sharing the same mutex for push and pop operations. The writer threads access the lock, hence reads are inhibited. Can we use two locks? One for the read operation and another for the write should do the trick. Following snippet, shows the modified version of more efficient concurrent queue using two locks instead of one:

template <typename T>

class Queue {

public:

Queue( ) {

pthread_mutex_init(&_rlock, NULL);

pthread_mutex_init(&_wlock, NULL);

}

~Queue( ) {

pthread_mutex_destroy(&_rlock);

pthread_mutex_destroy(&_wlock);

}

void push(const T& data);

T pop( );

private:

list<T> _list;

pthread_mutex_t _rlock, _wlock;

}

Pop and push snippets shown below are modified using two distinct locks, one for read and the other for write:

void Queue<T>::push(const T& value ) {

pthread_mutex_lock(&_wlock);

_list.push_back(value);

pthread_mutex_unlock(&_wlock);

}

T Queue<T>::pop( ) {

if (_list.empty( )) {

throw ”element not found”;

}

pthread_mutex_lock(&_rlock);

T _temp = _list.front( );

_list.pop_front( );

pthread_mutex_unlock(&_rlock);

return _temp;

}

Now, as we discussed the mutex lock based approach, it possesses some problems:

- Waiting on mutexes consumes considerable time. This delay though have negative effects on system scalability.

- Lower-priority threads could acquire a mutex, thus halting higher-priority threads that require the same mutex to proceed. This problem is known as the famous priority inversion. [8]

- A thread holding a mutex can be de-scheduled, perhaps because it was the end of its time-slice. For other threads waiting on the same mutex, this has a negative effect, because the wait time is now even longer. This problem is known as lock convoying. [9]

Thus, issues with mutexes has compelled developers to come up with solutions that donot use mutexes like the Compare and swap (CAS) synchronization primitive used in #Example 1 LOCK FREE Parallelized Linked List. Although mutex locks are tricky to use, they are definitely worth the attention for better performance. As apparent, locks are naive ways to achieve linearizability which enable blocking synchronization since it prevents other threads from updating or writing when one thread is in the critical section. This is different from directly using atomic operations and hence suffers from significant overheads of lock contention.

This section provides some insights to the designing of parallelized linked data structures that are amenable to concurrent access. As seen, the design choices could be mutex based or lock-free. Either way, both require ways of thinking that go beyond the traditional functionality of these data structures. We need to be aware of preemption and how the thread resumes when it is rescheduled. At this juncture the solution on the lock-free side of things are more platform/compiler oriented. The Boost library for an implementation of threads and locks and John Valois's paper on lock-free linked lists provide

ways to implement in a lock-free way. [11] [12] can be referred for more pointers.

Algorithms

There are several parallelization techniques possible for linked lists. But, each data structure needs to be reviewed on a per-case basis. This means a programmer needs to assess the codes carefully before deciding on which parallelization strategy is best applicable.

After parallelism is identified, programs can be parallelized and then executed on parallel multiprocessor systems to achieve good performance. Good compiler analysis techniques can only analyze parallelism in the traversal operations in programs with dynamic pointer-linked data structures. As a result, only the traversal operations will be parallelized while the construction operations will be executed in sequential. Although the traversal phase of such programs, which usually account for over 90% of total execution times, can be parallelized, the rest of programs that accounts for less than 10% will dominate the execution on multiprocessor systems.[13][5]

Let us now look at a few algorithms for achieving parallelism in different Linked Data Structures:

Simple Locks

The most easy and intuitive approach to parallelization is to parallelize the traversal of LDS. There are no conflicts between Reads among different threads in a Data Structure. Next, we try to introduce parallelism among the different construction operations – Add and Delete. This means that while different threads can simultaneously operate on two different parts of the Data Structure, no two threads will operate on a single(or adjacent) nodes at the same time.

This approach gives rise to the implementation of locks. Locks can be used to define a critical section, which can only be accessed by one thread at a time. Fundamentals of Parallel Computer Architecture very well defines the techniques for implementing a lock on a simple LDS – the linked list. We will not go through the details, as our focus remain on the techniques not introduced before:

Readers Lock

In this technique, we implement a Read Lock and a Write Lock. A Traversal Operation must obtain a Read Lock prior to execution, and a Modify Operation must obtain a Write Lock prior to execution. Both must release locks after completion. When a Read Lock is granted to an operation, a request for another Read Lock can be granted, but a request for Write Lock cannot be granted until all Read Locks have been released. Finally, if a Write Lock is granted to an Operation no requests for Read or Write Locks will be granted until the operation has completed execution and released the lock.

Global Lock

This technique is fairly easy to implement, as it contains only one global lock for the entire Linked Data Structure. As before, threads can traverse the LDS in parallel and identify the node they want to modify. To Modify(add/delete), each thread must acquire a Global Lock. As every thread contends for only one global lock, there is no possibility of deadlocks or livelocks. This approach has enabled us to increase the degree of parallelism by getting rid of Read Locks. However, we still have more scope of parallelism as modification operations can also execute in parallel as long as they are not on the same or adjacent nodes.

Fine Grain Lock

This technique implements a Read Lock and Write Lock for each node. A node that is read and must remain valid for other operations to complete must be Read Locked. A node that is to be modified must remain Write Locked. In this approach, multiple nodes may be read and modified in parallel, but each node has locks which allows only one thread to manipulate it at a time.

The Cyclic Graph Parallelization

The following section [6] gives an approach that can identify parallelism based on data dependence and shape analysis on programs even with cyclic graphs. If traversal operations on pointer-linked data structures in a program can be executed in any order, it implies the nodes of the traversed data structures can be connected in any order. Parallelism in construction( Modify) operations exists if this condition holds, i.e. nodes of pointer-linked data structures in the program can be connected in any order. One typical example is the list representation of adjacent matrixes, each of which can represented by a list of lists. The lists of such a list of lists can be built independently.

The technique can be divided into the following steps:

1. Compute definition - use chains

Each traversal operation corresponds to a read reference on pointer-linked data structures, while each construction operation corresponds to a write reference. Commonly, people refer to a read reference as use and to a write reference as definition, the relationships between construction operations and traversal operations of dynamic pointer-linked data structures can be represented by definition-use chains of the data structures.

2. Recognize parallelism in traversal operations

As mentioned above, if we're able to identify parallelism in the traversal operation such that traversal can take place in any order within the Cyclic graphs, then this means that we can modify the graph in any order. However, if there is no parallelism among the traversal techniques, we might have to follow the serial ordering for construction operations.

3. Identify parallelism in construction operations

With the use of Locks, ensure that each Construction operation can be executed in parallel with respect to another operation. A node may not be allowed to be modified before a thread has completed an operation on it.

Now let us have a look at the implementation of Parallelism of Cyclic Graphs from a higher level language perspective. The code given below transforms a Simple Loop traversal of a data structure to a Parallel Loop traversal which can be implemented by several threads.

First, notice that Part a defines the original loop we use for traversing a Data Structure. Here, p is a Global pointer that is initialized to the list head. The procedure call proc(p) basically performs the required operation on the given pointer location(here, a Node in the Data Structure). p = p.next changes the global variable to point to the next node, and do while keeps on traversing the data structure on valid nodes. Hence, the structure clearly shows that this kind of algorithm is serialized, and no scope of parallelism is present as each node is visited serially.

However, part b gives an example of how we can parallelize such loops. First notice the use of call gsync and call lock at the beginning of the code. gsync is a type of lock that synchronizes all threads entering into the critical section in order of their PIDs. For example, Thread 0 (or Processor 0) initializes the global pointer p to the list head and is the first to enter the critical section. Once the critical section is entered, it updates its local pointer variable plocal to point to the first Node of the Data Structure. Similarly, all threads will enter the critical section and update their plocal variables to point to a unique node, as determined by the traversal pattern.

Now, each thread can enter the do while loop in parallel to others and execute the required operation call proc on its assigned node. So, we see that knowing the traversal pattern in advance helps us in achieving parallelism. Here, the lock and unlock sections are present inside the loop so as the first thread that completes its task can now update its plocal to the next available p inside a critical section.

Hash Tables

Now let us focus on algorithms for a hash table. Note that a hash table works in key-value pairs as shown below:

Let us begin with the code snippet for implementing Parallelization of a simple fixed Hash Table:[14][8]

#define BUCKETS (100)

typedef struct __hash_t {

list_t lists[BUCKETS];

} hash_t;

void Hash_Init(hash_t *H) {

int i;

for (i = 0; i < BUCKETS; i++) {

List_Init(&H->lists[i]);

}

}

The above code initializes the fixed data structure. Here, each data structure initialization is assigned to a set of lists. These can later be accessed with the use of keys. Here, each instantiated object can contain upto a hundred elements.

int Hash_Insert(hash_t *H, int key) {

int bucket = key % BUCKETS;

return List_Insert(&H->lists[bucket], key);

}

int Hash_Lookup(hash_t *H, int key) {

int bucket = key % BUCKETS;

return List_Lookup(&H->lists[bucket], key);

}

Here, an example of a Modify Operation (Hash_Insert) and a Traversal Operation (Hash_Lookup) is given. Each data structure has a list, whose values can be accessed using a key.

The key point here is that this hash table can be implemented using a concurrent linked list, which we have come across numerous times. An example code of the Linked List is shown below.

// basic node structure

typedef struct __node_t {

int key;

struct __node_t *next;

} node_t;

// basic list structure (one used per list)

typedef struct __list_t {

node_t *head;

pthread_mutex_t lock;

} list_t;

void List_Init(list_t *L) {

L->head = NULL;

pthread_mutex_init(&L->lock, NULL);

}

int List_Insert(list_t *L, int key) {

pthread_mutex_lock(&L->lock);

node_t *new = malloc(sizeof(node_t));

if (new == NULL) {

perror("malloc");

pthread_mutex_unlock(&L->lock);

return -1; // fail

}

new->key = key;

new->next = L->head;

L->head = new;

pthread_mutex_unlock(&L->lock);

return 0; // success

}

int List_Lookup(list_t *L, int key) {

pthread_mutex_lock(&L->lock);

node_t *curr = L->head;

while (curr) {

if (curr->key == key) {

pthread_mutex_unlock(&L->lock);

return 0; // success

}

curr = curr->next;

}

pthread_mutex_unlock(&L->lock);

return -1; // failure

}

This data structure is parallelized by representing each hash bucket as a list, and using a lock per hash bucket. Hence, implementing a data structure using a LDS has helped create a Concurrent Hash Table.

PLDS

PLDS stands for partitioning linked data structures for parallelism. PLDS exploits parallelism by dividing a dynamic linked data structure and distributing computations across threads that operate on that data. The execution flow starts off with creation of a set of threads each of which are bound to a processor core. After the master thread executes the sequential part of the program, the parallel region is divided via a chosen partitioning strategy to map partitions to threads. Thus, assigned partitions are worked upon by the respective threads skipping or redistributing computations on other partitions. The above execution model of PLDS provides the following entities:

Partitioning Support

Based on dynamic linked data structures like graphs and trees, PLDS provides METIS and HASH for graphs and SYMM_SUBTREE and ASYMM_SUBTREE for trees along with additional option of customization as partitioning strategies. Following table summarizes

the same:

For graphs, in the presence of spatial locality METIS is good, in the absence of spatial locality HASH is good.

In METIS, computations are randomly assigned to threads. To improve locality, nodes closer in the graph are grouped into one partition assigned to a thread. Each thread then performs computations on nodes in its own partition. The cache locality

is enhanced. If threads are executed speculatively in parallel, METIS results in low misspeculation rate since it is less likely that two parallel threads will access the same nodes. The following diagrams elucidates the two graph partitioning strategies.

In HASH strategy, graphs lack spatial locality, nodes are hashed into partitions. Each thread is assigned a partition via hashing and the thread only processes the nodes in its own partition. This works well in load balancing in a BFS (Breadth First Search) kind of graph.

Asymm_Subtree (Figure 4 and 5) is for tree data structures where computation start from internal or leaf nodes and goes recursive across a subtree or towards the root. Symm_Subtree(Figure 6) is for computations starting from the root node moving towards the internal or leaf nodes. Following figures and pseudo code elucidates the same. For further minute details please go through [10].

When programmers know the shape of the data structures, ahead, they can specify strategy like in Figure a) shows hash table with an array of linked list and Figure b) above shows grid computation.

Programming Support

The programming support is with OPEN-MP like pragmas. There can be homogeneous, heterogeneous, and speculative kind of support to incorporate partitioning strategies in applications. Both homogeneous and heterogeneous supports are non speculative approaches.

Homogenous Parallel Region

# pragma homo partition (DataType,PartitionType)

# pragma inpartition (partition)

The first pragma marks the code region that is to be executed in parallel and where partitioning is to be applied. The homo clause indicates that homogeneous parallelism is to be used and the partition clause indicates the type of partitioning to be used. The second pragma is used to check in which thread the subsequent computation should be performed. The current performs the specified computation if the partitiontype is the one which is assigned to it.

Heterogeneous Parallel Region

#pragma hetero partition (DataType,PartitionType)

#pragma distribute inpartition (partition)

#pragma join

The first pragma marks the code region that is to be executed in parallel and where partitioning is to be applied. The hetero clause indicates that heterogeneous parallelism is to be used and the partition clause indicates the type of partitioning to be used. The second pragma is used to check in which thread the subsequent computation should be performed. The distribute clause indicates that the pragma will try to assign the subsequent computation to another thread to which the partition belongs so that the subsequent computation can be performed in parallel. The third pragma, join, is used for synchronization between threads.

Speculative parallelism

#pragma [conditional] speculate

#pragma repartition

#pragma touchpostpone(partition)

The first pragma is used to mark the code region that requires speculative execution to enforce atomicity. When theconditionalclause is not specified, standard speculation mechanism is used, when specified, the conditional speculation mechanism is used for the region where speculation is only used for computations that dynamically are determined to require access to multiple threads’ partitions. The second pragma is used to incrementally assign partitions to newly created nodes. The third pragma is used to assist in conditional speculation. It postpones the execution of current computation if the given partition is not a local partition. The postponed computation is later executed using standard speculation mechanism.

PLDS prototype is implemented and evaluated with multiple number of threads to see the efficacy. For our focus on parallelized linked data structures, the partitioning and programming strategy gains paramount importance since, it shows some unique heuristics to partition data and do computation on it.

Use cases and Applications

Note Below, some references of organizations which implement parallel data structures:

1. IBM Implements concurrent data structures in a multithreaded environment. It uses the POSIX and BOOST thread libraries.

2. Microsoft The .NET Framework implements a collection of Concurrent Classes with synchronization primitives.

3. GPUs today efficiently make use of data structures to implement 2D Memory arrays, Parallel read/write, Sort/Scan etc.

Conclusions

To understand parallelization with data structures, we need to look into what program we're dealing with. Each code can be parallelized based on its data dependencies, code structure and the underlying algorithm. When we use a Data Structure, it defines the basic code structure for the complete program. Hence, to achieve parallelization, the very nature of the basic data structure must have the components for enabling parallelism. We implement this for the simplest linked data structure - the linked lists- by using locks. Then we move to the more complex structures - cyclic graph and then the hash tables. Cyclic graphs can be tricky, in the sense that to achieve concurrency, we have to identify a traversal algorithm which enables threads to access each node within the graph independently. Hash Tables however, when implemented using the basic linked lists (for which parallelism is enabled), inherently give way to parallel implementations.

To summarize, we have seen lock or mutex based approaches to parallelize linked lists which has concerns like priority inversion etc. We have lock free approaches like CAS (compare and set) which is in vogue now. We have also seen in PLDS the unique ways of graph and tree partitioning to parallelize computation based on the structure like root to leaf or leaf to root, has spatial locality or not etc. These ideas definitely gives us some insight into the different ways of handling dynamic data structures in the context of our work.

References

1. http://idb.csie.ncku.edu.tw/tsengsm/COURSE/Java/Slides/Java-15-Linked_Data_Structure.pdf

2. http://axel22.github.io/resources/docs/my_thesis.pdf

3. http://dl.acm.org/citation.cfm?id=78972 Maurice Herlihy and Jeannette M. Wing. Linearizability: A correctness condition for concurrent objects. ACM Transactions on Programming Languages and Systems, 12(3):463–492, July 1990.

4. http://www.ibm.com/developerworks/aix/library/au-multithreaded_structures1/#list2

5. http://www.ibm.com/developerworks/aix/library/au-multithreaded_structures2/

6. http://en.wikipedia.org/wiki/Concurrent_data_structure

7. http://en.wikipedia.org/wiki/Linked_data_structure

8. http://en.wikipedia.org/wiki/Priority_inversion

9. http://en.wikipedia.org/wiki/Lock_convoy

10. http://dl.acm.org/citation.cfm?id=2086717&dl=ACM&coll=DL - Partitioning linked data structures for parallelism by Min Feng, Changhui Lin, Rajiv Gupta

11. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.41.9506 - Lock-Free Linked Lists Using Compare-and-Swap by John D. Valois

12. http://www.cl.cam.ac.uk/research/srg/netos/lock-free/ - Practical lock-free data structures

13. http://www.iis.sinica.edu.tw/PARALLEL/cthpc/7th/18CTHPC01.pdf

14. http://pages.cs.wisc.edu/~remzi/OSTEP/threads-locks-usage.pdf

15. https://msdn.microsoft.com/en-us/library/dd460718(v=vs.110).aspx

17. http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=876120