CSC/ECE 517 Spring 2015/ch1a 7 SA: Difference between revisions

| (50 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

[[File:S3.gif|frame|Source: http://www.w7cloud.com/7-reasons-to-use-amazon-s3-cloud-computing-online-storage/|right]] Amazon Simple Storage Service (Amazon S3) is a remote, scalable, secure, and cost efficient storage space service provided by Amazon. Users are able to access their storage on Amazon S3 from the web via REST <ref> | [[File:S3.gif|frame|Source: http://www.w7cloud.com/7-reasons-to-use-amazon-s3-cloud-computing-online-storage/|right]] Amazon Simple Storage Service (Amazon S3) is a remote, scalable, secure, and cost efficient storage space service provided by Amazon. Users are able to access their storage on Amazon S3 from the web via REST <ref>[http://en.wikipedia.org/wiki/Representational_state_transfer Wikipedia: REST]</ref> HTTP <ref>[http://en.wikipedia.org/wiki/Hypertext_Transfer_Protocol HTTP]</ref>, or SOAP <ref>[http://en.wikipedia.org/wiki/SOAP SOAP]</ref> making their data accessible from virtually anywhere in the world. Amazon S3 implements redundancy across multiple devices on multiple facilities in order to safeguard against application failure ,data loss and minimization of downtime <ref>[http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AmazonS3.html Amazon S3]</ref>. Some of the most prominent users of Amazon S3 include: Netflix, SmugMug, Wetransfer, Pinterest, and NASDAQ <ref>[http://aws.amazon.com/s3/ Amazon S3 Homepage]</ref>. | ||

[https://docs.google.com/a/ncsu.edu/document/d/1TgBtp7flIPKJwkkShgtcIkt--mtHuwVHsQX6Tpzj1rc/edit Writing Assignment 1a] | [https://docs.google.com/a/ncsu.edu/document/d/1TgBtp7flIPKJwkkShgtcIkt--mtHuwVHsQX6Tpzj1rc/edit Writing Assignment 1a] | ||

| Line 6: | Line 6: | ||

==Background== | ==Background== | ||

Amazon S3 launched in March of 2006 in the United States [http://phx.corporate-ir.net/phoenix.zhtml?c=176060&p=irol-newsArticle&ID=830816] and in Europe in November of 2007 [http://phx.corporate-ir.net/phoenix.zhtml?c=176060&p=irol-newsArticle&ID=1072982]. Since its inception, Amazon S3 has reported tremendous growth. Beginning in July of 2006, S3 hosted 800 million objects | Amazon S3 launched in March of 2006 in the United States <ref>[http://phx.corporate-ir.net/phoenix.zhtml?c=176060&p=irol-newsArticle&ID=830816 Amazon Press Release]</ref> and in Europe in November of 2007 <ref>[http://phx.corporate-ir.net/phoenix.zhtml?c=176060&p=irol-newsArticle&ID=1072982 Amazon Press Release]</ref>. Since its inception, Amazon S3 has reported tremendous growth. Beginning in July of 2006, S3 hosted 800 million objects; April of 2007, 5 billion objects; October of 2007, 10 billion; Jan 2008, 14 billion <ref>[http://www.allthingsdistributed.com/2008/03/happy_birthday_amazon_s3.html Happy birthday Amazon S3]</ref>; October 2008, 29 billion <ref>[https://aws.amazon.com/blogs/aws/amazon-s3-now/ amazon s3 now]</ref>; March 2009, 52 billion <ref>[https://aws.amazon.com/blogs/aws/celebrating-s3s-third-birthday-with-an-upload-promotion/ Upload promotion]</ref>; August 2009, 64 billion <ref>[http://www.eweek.com/c/a/Cloud-Computing/Amazons-Head-Start-in-the-Cloud-Pays-Off-584083 Amazons Head Start in the Cloud]</ref>. In April of 2013, S3 now hosts more than 2 trillion objects and on average 1.1 million requests every second! <ref>[https://aws.amazon.com/blogs/aws/amazon-s3-two-trillion-objects-11-million-requests-second/ Two trillion objects]</ref>. While Amazon is a fast paced, growing cloud storage service, there exists many alternatives to Amazon S3. A brief overview of the cost to performance comparison is shown below. | ||

===Design | |||

==Alternatives to S3== | |||

There currently exists alternatives to AmazonS3: | |||

:*[https://cloud.google.com/storage/| Google Cloud Storage] | |||

:*[http://azure.microsoft.com/en-us/ |MSAzure] | |||

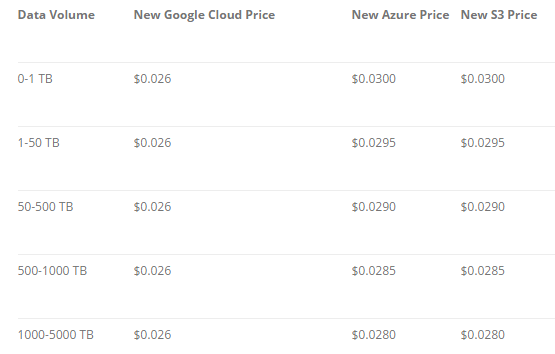

As with any online cloud storage service, the cost of storage is always a concern. The following table shows a cost comparison between them: | |||

[[File:PriceCompare.png |frame |center|Source: http://www.cloudberrylab.com/blog/amazon-s3-azure-and-google-cloud-prices-compare/]] | |||

While all three services are fairly comparable in price, there are some developer support considerations for selecting a service. For instance, if an app is focused around the [https://www.android.com/| Android OS], using the google cloud storage provides you with more tightly coupled APIs for your app. The overhead of development may be much less compared to using S3 to rails APIs for a Google app. Similarly, [http://www.windowsphone.com/en-us/features| Windows Phone OS] would provide more native support for Windows Phone apps and likewise kindle fire apps would have more native support on [https://developer.amazon.com/public/apis| Fire OS]. | |||

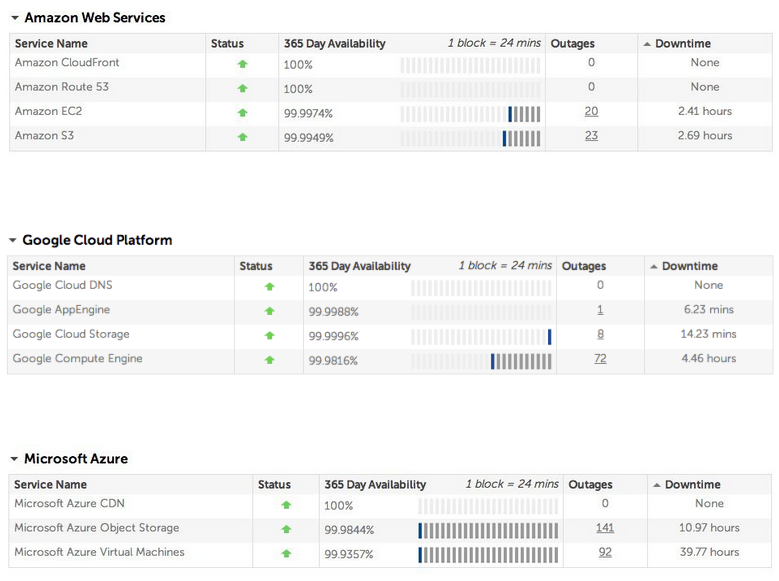

The reliability of a cloud storage service like S3 plays a heavy role in the selection process. Less downtime translates to a more robust and reliable app. In 2014, Amazon S3 registered only 23 outages totaling 2.69 hours of downtime whereas Google registered 8 outages totaling 14.23 hours of downtime. Microsoft Azure totaled 141 outages summing 10.97 hours. <ref>[https://gigaom.com/2015/01/07/amazon-web-services-tops-list-of-most-reliable-public-clouds/ Barb Darrow. Amazon Web Services tops list of most reliable public clouds ]</ref> | |||

[[File:ServiceCompare.png |frame |center|Source: https://gigaom.com/2015/01/07/amazon-web-services-tops-list-of-most-reliable-public-clouds/]] | |||

==Design== | |||

S3 is an example of an object storage and is not like a traditional hierarchical file system. S3 exposes a simple feature set to improve robustness and all data in S3 is accessed in the terms of objects and buckets. | S3 is an example of an object storage and is not like a traditional hierarchical file system. S3 exposes a simple feature set to improve robustness and all data in S3 is accessed in the terms of objects and buckets. | ||

===Objects=== | |||

Objects are the basic units of storage in Amazon S3. Each object is composed of object data and metadata. S3 supports a size of up to 5 Terabytes per object. Each object has | Objects are the basic units of storage in Amazon S3. Each object is composed of object data and metadata. S3 supports a size of up to 5 Terabytes per object. Each object has an associated metadata that is used to identify the object. Metadata is a set of name-value pairs that describe the object like date modified. Custom data about the object can be stored in metadata by the user. Every object is identified by a user defined key and is versioned by default. <ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html S3 Introduction]</ref>. An object consists of the following - Key, Version ID, Value, Metadata, Subresources and Access Control Information. <ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingObjects.html Using Objects]</ref> | ||

===Buckets=== | |||

A bucket is a container for objects and every object must be part of a bucket. Any number of objects can be part of a Bucket. Buckets can be configured to be hosted in a particular region (US, EU, Asia Pacific etc.) in order to optimize latency. S3 limits the number of buckets per account to 100. [http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingBucket.html] | A bucket is a container for objects and every object must be part of a bucket. Any number of objects can be part of a Bucket. Buckets can be configured to be hosted in a particular region (US, EU, Asia Pacific etc.) in order to optimize latency. S3 limits the number of buckets per account to 100. <ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingBucket.html Using Bucket]</ref> | ||

===Keys and Metadata=== | |||

An user specifies a key | An user specifies a key on object creation which is used to uniquely identify the object in the bucket. Keys for the objects can be at most 1024 bytes long. | ||

There are two kinds of metadata for an object - System metadata and Object metadata. System metadata is used by S3 for object management. For eg. - Data, Content-Type etc. are stored as System metadata. Object metadata is optional and can be used by the user to add additional metadata to the objects | There are two kinds of metadata for an object - System metadata and Object metadata. System metadata is used by S3 for object management. For eg. - Data, Content-Type etc. are stored as System metadata. Object metadata is optional and can be used by the user to add additional metadata to the objects during object creation. <ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingMetadata.html Using Metadata]</ref> | ||

===Regions=== | |||

Regions allow a user to specify the geographical region where the buckets will be stored. This can be used to optimize latency and minimizing costs. | Regions allow a user to specify the geographical region where the buckets will be stored. This can be used to optimize latency and minimizing costs. | ||

S3 supports the following regions - US Standard, US West (Oregon) region, US West (N. California) region, EU (Ireland) region, EU (Frankfurt) region, Asia Pacific (Singapore) region, Asia Pacific (Tokyo) region, Asia Pacific (Sydney) region, South America (Sao Paulo) region [http://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html#Regions] | S3 supports the following regions - US Standard, US West (Oregon) region, US West (N. California) region, EU (Ireland) region, EU (Frankfurt) region, Asia Pacific (Singapore) region, Asia Pacific (Tokyo) region, Asia Pacific (Sydney) region, South America (Sao Paulo) region <ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html#Regions Regions]</ref> | ||

====Versioning==== | ====Versioning==== | ||

All objects in S3 are versioned by default and it can be used to retrieve and restore every version of an object in a bucket. Every change to an object(create, modify, delete) results in a separate version of the object which can be later used for restoring or recovery. Versioning is done at the bucket level and not for individual objects. It can be turned off or on per bucket but a versioned-enabled bucket cannot be turned to an unversioned bucket. Versioning can only be paused in these cases. [http://docs.aws.amazon.com/AmazonS3/latest/dev/Versioning.html] | All objects in S3 are versioned by default and it can be used to retrieve and restore every version of an object in a bucket. Every change to an object(create, modify, delete) results in a separate version of the object which can be later used for restoring or recovery. Versioning is done at the bucket level and not for individual objects. It can be turned off or on per bucket but a versioned-enabled bucket cannot be turned to an unversioned bucket. Versioning can only be paused in these cases. <ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/Versioning.html Versioning]</ref> | ||

===Access Permissions=== | |||

All resources (buckets,objects etc) are private in Amazon S3 by default. Only the resource owner can access the resource and can grant access to other users to accesss the resource. There are two types of access policies in S3 - Resource-based and user policies. Resource-based policies are attached to a particular resource and user policies are assigned to a particular user.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/s3-access-control.html Access Control]</ref> | |||

=== | ===Data Protection=== | ||

Amazon Web Services (AWS) provides an SDK | Objects are redundantly stored on multiple devices across multiple facilities within a region for durability. To improve durability, write requests do not return success before storing the data across multiple facilities. Also checksums are used to verify data integrity. If any corruption is detected, it is repaired using redundant data.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/DataDurability.html Data Durability]</ref> | ||

==Ruby and Amazon S3== | |||

Amazon Web Services (AWS) provides an SDK <ref>[http://rubygems.org/gems/aws-sdk| download]</ref> that works with Ruby for many amazon webservices, including Amazon S3. Developers new to the Amazon AWS SDK should begin with version 2 as it includes many built in features such as waiters, automatically paginated responses, and a streamlined plugin style architecture. Version 2 of the SDK has 2 "packages", also referred to as "gems" <ref>[http://en.wikipedia.org/wiki/RubyGems Wikipedia: RubyGems]</ref>: | |||

:* '''aws-sdk-core''' - provides a direct mapping to the AWS APIs including automatic response paging, waiters, parameter validation, and Ruby type support | :* '''aws-sdk-core''' - provides a direct mapping to the AWS APIs including automatic response paging, waiters, parameter validation, and Ruby type support | ||

| Line 43: | Line 68: | ||

It should also be noted that there exists a Version 1 of the aws sdk that lacks some "convenience features" otherwise available in version 2 of the sdk. For more information see the [http://ruby.awsblog.com/post/Tx2OMCYFEZX2I6A/AWS-SDK-for-Ruby-V2-Preview-Release|AWS Ruby Development Blog] | It should also be noted that there exists a Version 1 of the aws sdk that lacks some "convenience features" otherwise available in version 2 of the sdk. For more information see the [http://ruby.awsblog.com/post/Tx2OMCYFEZX2I6A/AWS-SDK-for-Ruby-V2-Preview-Release| AWS Ruby Development Blog]. | ||

==Examples== | ==Examples== | ||

The following section contains examples of ruby interfacing with S3. Sources and documentation for the code are provided. Please observe the copyrights if you choose to use any or all of the posted code. | |||

There are 3 key classes in AWS SDK [http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingTheMPRubyAPI.html] - | |||

'''Note:''' There are 3 key classes in AWS SDK <ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingTheMPRubyAPI.html Using the Ruby API]</ref> - | |||

:* '''AWS::S3''' - Denotes an interface to Amazon S3 for the Ruby SDK. It has the ''#buckets'' instance method for creating new buckets or accessing existing buckets. | :* '''AWS::S3''' - Denotes an interface to Amazon S3 for the Ruby SDK. It has the ''#buckets'' instance method for creating new buckets or accessing existing buckets. | ||

| Line 53: | Line 80: | ||

:* '''AWS::S3::S3Object''' - Denotes an Amazon S3 Object. It provides the method that gives information about the object and also setting access permissions, copying, deleting and uploading objects. | :* '''AWS::S3::S3Object''' - Denotes an Amazon S3 Object. It provides the method that gives information about the object and also setting access permissions, copying, deleting and uploading objects. | ||

===Creating a connection to S3 server=== | |||

Connecting to the S3 server is the essential starting point of accessing your data. The follow example shows how to connect to the server via. SSL <ref>Wikipedia: SSL http://en.wikipedia.org/wiki/SSL</ref> | |||

<pre> | <pre> | ||

AWS::S3::Base.establish_connection!( | AWS::S3::Base.establish_connection!( | ||

| Line 67: | Line 94: | ||

===Listing all buckets you own=== | ===Listing all buckets you own=== | ||

Buckets hold data about the objects you upload. To access any object, you must access the bucket first. This example shows you how to query the S3 server for a list of all the buckets you own. | |||

<pre> | <pre> | ||

AWS::S3::Service.buckets.each do |bucket| | AWS::S3::Service.buckets.each do |bucket| | ||

| Line 82: | Line 110: | ||

===Listing a bucket's contents=== | ===Listing a bucket's contents=== | ||

For a known bucket (see example for listing all buckets you own), you can also query the server for all objects inside. This code shows you how. | |||

<pre> | <pre> | ||

new_bucket = AWS::S3::Bucket.find('my-new-bucket') | new_bucket = AWS::S3::Bucket.find('my-new-bucket') | ||

new_bucket.each do |object| | new_bucket.each do |object| | ||

puts "#{object.key}\t#{object.about['content-length']}\t#{object.about['last-modified']}" | puts "#{object.key}\t#{object.about<ref>['content-length']}\t#{object.about<ref>['last-modified']}" | ||

end | end | ||

</pre> | </pre> | ||

| Line 92: | Line 121: | ||

file1.filex 251262 2011-08-08T21:35:48.000Z | file1.filex 251262 2011-08-08T21:35:48.000Z | ||

file2.filex 262518 2011-08-08T21:38:01.000Z | file2.filex 262518 2011-08-08T21:38:01.000Z | ||

</pre> | |||

:Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | |||

===Deleting an object=== | |||

This example shows you how to delete the object "goodbye.txt". You must specify the bucket as the second parameter of the S3Object.delete function. | |||

<pre> | |||

AWS::S3::S3Object.delete('goodbye.txt', 'my-new-bucket') | |||

</pre> | </pre> | ||

:Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | :Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | ||

===Deleting a bucket=== | ===Deleting a bucket=== | ||

Bucket removal may be necessary when you're trying to reduce the cost of maintaining your data on the S3 servers. This code allows you to delete buckets but only if they're empty. | |||

<pre> | <pre> | ||

AWS::S3::Bucket.delete('my-new-bucket') | AWS::S3::Bucket.delete('my-new-bucket') | ||

| Line 103: | Line 139: | ||

===Forced removal of non-empty buckets=== | ===Forced removal of non-empty buckets=== | ||

If you want to forcibly remove a bucket and dump all of its contents, you can force a deletion as shown below. | |||

<pre> | <pre> | ||

AWS::S3::Bucket.delete('my-new-bucket', :force => true) | AWS::S3::Bucket.delete('my-new-bucket', :force => true) | ||

| Line 109: | Line 146: | ||

===Creating an object=== | ===Creating an object=== | ||

Object creation is important to any program. If your app stores data via objects, you can use the S3 server to host them. In this example we create a text file hello.txt with content "Hello World!" and save it to my-new-bucket. Note that the :content_type is required for the ruby method S3Object.store. | |||

<pre> | <pre> | ||

AWS::S3::S3Object.store( | AWS::S3::S3Object.store( | ||

| Line 120: | Line 158: | ||

===Change an object's ACL (access control list)=== | ===Change an object's ACL (access control list)=== | ||

Securing user data from view by any web user is important if you're keeping sensitive information about users. This example shows you how to open the hello.txt object created earlier in my-new-bucket to the public (anyone can access it). This example also shows how to restrict the secret_plans.txt so that no one can access them. | |||

<pre> | <pre> | ||

policy = AWS::S3::S3Object.acl('hello.txt', 'my-new-bucket') | policy = AWS::S3::S3Object.acl('hello.txt', 'my-new-bucket') | ||

policy.grants = [ AWS::S3::ACL::Grant.grant(:public_read) ] | policy.grants = <ref>[ AWS::S3::ACL::Grant.grant(:public_read) ] | ||

AWS::S3::S3Object.acl('hello.txt', 'my-new-bucket', policy) | AWS::S3::S3Object.acl('hello.txt', 'my-new-bucket', policy) | ||

policy = AWS::S3::S3Object.acl('secret_plans.txt', 'my-new-bucket') | policy = AWS::S3::S3Object.acl('secret_plans.txt', 'my-new-bucket') | ||

policy.grants = [] | policy.grants = <ref>[] | ||

AWS::S3::S3Object.acl('secret_plans.txt', 'my-new-bucket', policy) | AWS::S3::S3Object.acl('secret_plans.txt', 'my-new-bucket', policy) | ||

</pre> | </pre> | ||

:Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | :Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | ||

===Generating object download urls=== | ===Generating object download urls=== | ||

Generating download links for objects on the S3 server can make it easier for you to share your objects with other users. This example shows how to create a download link for the hello.txt object we created earlier. | |||

Note that generating a link requires you to specify the bucket it resides in. Links can be public, as in the case of hello.txt below, or be set to expire on a timer through the expires_in symbol. In the example, the expiration of secret_plans.txt is 1 hour (60s * 60). | |||

<pre> | <pre> | ||

puts AWS::S3::S3Object.url_for( | puts AWS::S3::S3Object.url_for( | ||

| Line 167: | Line 190: | ||

http://objects.dreamhost.com/my-bucket-name/hello.txt | http://objects.dreamhost.com/my-bucket-name/hello.txt | ||

http://objects.dreamhost.com/my-bucket-name/secret_plans.txt?Signature=XXXXXXXXXXXXXXXXXXXXXXXXXXX&Expires=1316027075&AWSAccessKeyId=XXXXXXXXXXXXXXXXXXX | http://objects.dreamhost.com/my-bucket-name/secret_plans.txt?Signature=XXXXXXXXXXXXXXXXXXXXXXXXXXX&Expires=1316027075&AWSAccessKeyId=XXXXXXXXXXXXXXXXXXX | ||

</pre> | |||

:Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | |||

===Download an object to a folder on S3=== | |||

An app may choose to retrieve objects from a server and save these to the S3 server, where they can be accessed later. This example shows how to download the poetry.pdf object to the bucket my-new-bucket. | |||

<pre> | |||

open('/home/larry/documents/poetry.pdf', 'w') do |file| | |||

AWS::S3::S3Object.stream('poetry.pdf', 'my-new-bucket') do |chunk| | |||

file.write(chunk) | |||

end | |||

end | |||

</pre> | </pre> | ||

:Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | :Source: http://ceph.com/docs/master/radosgw/s3/ruby/ | ||

===Upload a file to Amazon S3=== | ===Upload a file to Amazon S3=== | ||

:As per the Apache License v 2.0, the follow code is reproducible and redistributable with the following [http://aws.amazon.com/apache-2-0/ license]. | This example is a combination of many examples shown above. A bucket is created and filled with a local file. The program also generates a url for the upload and allows the option of deleting the local copy of the object at the user's discretion. | ||

:As per the Apache License v 2.0, the follow code is reproducible and redistributable with the following license <ref>[http://aws.amazon.com/apache-2-0/ license]</ref>. | |||

<pre> | <pre> | ||

# Copyright 2011-2013 Amazon.com, Inc. or its affiliates. All Rights Reserved. | # Copyright 2011-2013 Amazon.com, Inc. or its affiliates. All Rights Reserved. | ||

| Line 217: | Line 252: | ||

o.delete | o.delete | ||

</pre> | </pre> | ||

See the following link for the documentation for AWS SDK - <ref>[http://docs.aws.amazon.com/AWSRubySDK/latest/_index.html AWS SDK for Ruby]</ref> | |||

==References== | ==References== | ||

<references/> | <references/> | ||

Latest revision as of 16:46, 2 March 2015

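[[File:S3.gif|frame|Source: http://www.w7cloud.com/7-reasons-to-use-amazon-s3-cloud-computing-online-storage/|right]] Amazon Simple Storage Service (Amazon S3) is a remote, scalable, secure, and cost-efficient storage service provided by Amazon. Users can access their S3 storage from the web through REST<ref>[http://en.wikipedia.org/wiki/Representational_state_transfer Wikipedia: REST]</ref> or SOAP<ref>[http://en.wikipedia.org/wiki/SOAP SOAP]</ref> interfaces over HTTP<ref>[http://en.wikipedia.org/wiki/Hypertext_Transfer_Protocol HTTP]</ref>, which makes their data accessible from virtually anywhere in the world. Amazon S3 stores data redundantly across multiple devices in multiple facilities to guard against application failure and data loss and to minimize downtime<ref>[http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AmazonS3.html Amazon S3]</ref>. Prominent users of Amazon S3 include Netflix, SmugMug, WeTransfer, Pinterest, and NASDAQ<ref>[http://aws.amazon.com/s3/ Amazon S3 Homepage]</ref>.

[https://docs.google.com/a/ncsu.edu/document/d/1TgBtp7flIPKJwkkShgtcIkt--mtHuwVHsQX6Tpzj1rc/edit Writing Assignment 1a]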

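==Background==

Amazon S3 launched in the United States in March 2006<ref>[http://phx.corporate-ir.net/phoenix.zhtml?c=176060&p=irol-newsArticle&ID=830816 Amazon Press Release]</ref> and in Europe in November 2007<ref>[http://phx.corporate-ir.net/phoenix.zhtml?c=176060&p=irol-newsArticle&ID=1072982 Amazon Press Release]</ref>. Since then, it has grown tremendously. S3 hosted 800 million objects in July 2006, 5 billion in April 2007, 10 billion in October 2007, and 14 billion in January 2008<ref>[http://www.allthingsdistributed.com/2008/03/happy_birthday_amazon_s3.html Happy birthday Amazon S3]</ref>; 29 billion in October 2008<ref>[https://aws.amazon.com/blogs/aws/amazon-s3-now/ amazon s3 now]</ref>; 52 billion in March 2009<ref>[https://aws.amazon.com/blogs/aws/celebrating-s3s-third-birthday-with-an-upload-promotion/ Upload promotion]</ref>; and 64 billion in August 2009<ref>[http://www.eweek.com/c/a/Cloud-Computing/Amazons-Head-Start-in-the-Cloud-Pays-Off-584083 Amazon's Head Start in the Cloud]</ref>. By April 2013, S3 hosted more than 2 trillion objects and served 1.1 million requests per second<ref>[https://aws.amazon.com/blogs/aws/amazon-s3-two-trillion-objects-11-million-requests-second/ Two trillion objects]</ref>. Although S3 is a fast-growing cloud storage service, there are many alternatives to it. A brief comparison of their cost and reliability is shown below.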

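==Alternatives to S3==

Alternatives to Amazon S3 include:

:*[https://cloud.google.com/storage/ Google Cloud Storage]
:*[http://azure.microsoft.com/en-us/ Microsoft Azure]

As with any online cloud storage service, the cost of storage is a major concern. The following table compares the prices of the three services:

[[File:PriceCompare.png|frame|center|Source: http://www.cloudberrylab.com/blog/amazon-s3-azure-and-google-cloud-prices-compare/]]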

While the three services are comparably priced, developer support can also influence the choice. For instance, if an app targets the [https://www.android.com/ Android OS], Google Cloud Storage provides more tightly integrated APIs, and the development overhead may be much lower than connecting an Android app to S3 through Rails APIs. Similarly, Microsoft Azure would provide more native support for [http://www.windowsphone.com/en-us/features Windows Phone OS] apps, and Amazon's services would provide more native support for Kindle Fire apps running [https://developer.amazon.com/public/apis Fire OS].

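The reliability of a cloud storage service weighs heavily in the selection process, since less downtime translates into a more robust and reliable app. In 2014, Amazon S3 registered only 23 outages totaling 2.69 hours of downtime, whereas Google registered 8 outages totaling 14.23 hours and Microsoft Azure registered 141 outages totaling 10.97 hours.<ref>[https://gigaom.com/2015/01/07/amazon-web-services-tops-list-of-most-reliable-public-clouds/ Barb Darrow. Amazon Web Services tops list of most reliable public clouds]</ref>

[[File:ServiceCompare.png|frame|center|Source: https://gigaom.com/2015/01/07/amazon-web-services-tops-list-of-most-reliable-public-clouds/]]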

==Design==

S3 is an object store, not a traditional hierarchical file system. It exposes a simple feature set to improve robustness, and all data in S3 is accessed in terms of objects and buckets.

===Objects===

Objects are the basic units of storage in Amazon S3. Each object consists of object data and metadata, and a single object can be up to 5 TB in size. Metadata is a set of name-value pairs that describe the object, such as its last-modified date; users can also store custom data about the object in its metadata. Every object is identified within its bucket by a user-defined key and, if versioning is enabled on the bucket, by a version ID.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html S3 Introduction]</ref> More precisely, an object consists of a key, a version ID, a value, metadata, subresources, and access control information.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingObjects.html Using Objects]</ref>
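To make the list of object components concrete, the sketch below models them as a plain Ruby Struct. It is a hypothetical data structure for illustration only, not the representation used by S3 or by any SDK; the field names are assumptions chosen to mirror the list above.

```ruby
# Illustrative only: the parts of an S3 object, modelled as a Ruby Struct.
# The field names are descriptive and are not taken from any SDK.
S3ObjectParts = Struct.new(
  :key,            # unique name of the object within its bucket
  :version_id,     # "null" for objects stored while versioning is not enabled
  :value,          # the data itself (up to 5 TB)
  :metadata,       # name-value pairs, e.g. { 'Content-Type' => 'text/plain' }
  :subresources,   # object-specific extras (for example, its ACL)
  :access_control, # information about who may access the object
  keyword_init: true
)

hello = S3ObjectParts.new(
  key: 'hello.txt',
  version_id: 'null',
  value: 'Hello World!',
  metadata: { 'Content-Type' => 'text/plain' },
  subresources: {},
  access_control: :private
)

puts hello.key              # prints hello.txt
puts hello.value.bytesize   # prints 12
```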

===Buckets===

A bucket is a container for objects: every object must belong to a bucket, and a bucket can hold any number of objects. A bucket can be created in a particular region (US, EU, Asia Pacific, etc.) to optimize latency. By default, S3 limits each account to 100 buckets.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingBucket.html Using Bucket]</ref>

===Keys and Metadata===

A user specifies a key when creating an object, and the key uniquely identifies the object within its bucket. A key can be at most 1024 bytes long in its UTF-8 encoding.
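Because the 1024-byte limit counts bytes rather than characters, a key made of non-ASCII characters reaches it sooner than its length suggests. The helper below is a hypothetical client-side check (not part of any S3 library); treating an empty key as invalid is an assumption of this sketch.

```ruby
# Hypothetical helper (not part of any S3 library): checks a candidate key
# against the 1024-byte limit. The limit applies to the UTF-8 encoding, so
# multi-byte characters such as 'é' count as more than one byte.
MAX_KEY_BYTES = 1024

def valid_s3_key?(key)
  bytes = key.encode('UTF-8').bytesize
  bytes > 0 && bytes <= MAX_KEY_BYTES   # assumes an empty key is invalid
end

puts valid_s3_key?('photos/2015/cat.jpg')  # prints true
puts valid_s3_key?('a' * 1025)             # prints false
puts valid_s3_key?('é' * 600)              # prints false (1200 bytes)
```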

There are two kinds of object metadata: system metadata and user-defined (object) metadata. S3 uses system metadata, such as Date and Content-Type, to manage objects. User-defined metadata is optional; users can supply it when they create an object to attach additional information to it.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingMetadata.html Using Metadata]</ref>
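Over the REST API, user-defined metadata travels as request headers whose names start with <code>x-amz-meta-</code>, while system metadata such as Content-Type uses ordinary HTTP headers. The sketch below builds such a header set; <code>metadata_headers</code> is a hypothetical helper, and lowercasing the names is a choice of this sketch.

```ruby
# Hypothetical helper: converts user-defined metadata into the request headers
# used by the S3 REST API. User-defined entries travel as x-amz-meta-* headers;
# system metadata such as Content-Type is sent as an ordinary HTTP header.
def metadata_headers(user_metadata, content_type: nil)
  headers = {}
  headers['Content-Type'] = content_type if content_type
  user_metadata.each do |name, value|
    # Lowercase names keep the generated header names consistent.
    headers["x-amz-meta-#{name.to_s.downcase}"] = value.to_s
  end
  headers
end

headers = metadata_headers({ 'Author' => 'larry', reviewed: true },
                           content_type: 'text/plain')
headers.each { |name, value| puts "#{name}: #{value}" }
# Content-Type: text/plain
# x-amz-meta-author: larry
# x-amz-meta-reviewed: true
```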

===Regions===

Regions let a user choose the geographical region where buckets are stored, which can be used to optimize latency and minimize costs. S3 supports the following regions:<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/Introduction.html#Regions Regions]</ref>

:* US Standard
:* US West (Oregon)
:* US West (N. California)
:* EU (Ireland)
:* EU (Frankfurt)
:* Asia Pacific (Singapore)
:* Asia Pacific (Tokyo)
:* Asia Pacific (Sydney)
:* South America (Sao Paulo)
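In code, regions are usually referred to by identifier (for example, the value passed as an SDK's region option) rather than by the display names above. The table below maps each listed region to its identifier; the <code>region_code</code> lookup helper is hypothetical.

```ruby
# Region names from the list above, mapped to the region identifiers used by
# the AWS APIs and SDKs (for example, an SDK client's region option).
S3_REGIONS = {
  'US Standard'               => 'us-east-1',
  'US West (Oregon)'          => 'us-west-2',
  'US West (N. California)'   => 'us-west-1',
  'EU (Ireland)'              => 'eu-west-1',
  'EU (Frankfurt)'            => 'eu-central-1',
  'Asia Pacific (Singapore)'  => 'ap-southeast-1',
  'Asia Pacific (Tokyo)'      => 'ap-northeast-1',
  'Asia Pacific (Sydney)'     => 'ap-southeast-2',
  'South America (Sao Paulo)' => 'sa-east-1'
}.freeze

# Hypothetical lookup helper that fails loudly on an unknown region name.
def region_code(name)
  S3_REGIONS.fetch(name) { raise ArgumentError, "unknown S3 region: #{name}" }
end

puts region_code('EU (Frankfurt)')   # prints eu-central-1
```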

===Versioning===

Versioning keeps multiple versions of an object in the same bucket, so that every version of an object can be retrieved and restored. When versioning is enabled, every change to an object (create, modify, delete) produces a separate version, which can later be used for restoring or recovery; a delete adds a delete marker instead of erasing earlier versions. Versioning is configured per bucket, not per object. Buckets are unversioned by default, and versioning can be turned on for a bucket; however, once enabled, versioning cannot be turned off again, only suspended.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/Versioning.html Versioning]</ref>
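The rules above can be summarized in a toy, in-memory model. <code>VersionedBucket</code> is a hypothetical class, not an S3 or SDK API. It assumes the documented S3 behaviour that writes to an unversioned or suspended bucket use the version ID "null" (replacing any earlier "null" version), and it uses sequential IDs for readability where real S3 version IDs are opaque strings.

```ruby
# Toy, in-memory model of S3 bucket versioning (not an S3 API). It shows that
# buckets start unversioned, that enabled buckets keep every version, that a
# delete only adds a delete marker, and that versioning can be suspended but
# never turned off again.
class VersionedBucket
  Version = Struct.new(:version_id, :data, :delete_marker)

  attr_reader :status

  def initialize
    @status  = :unversioned
    @history = Hash.new { |hash, key| hash[key] = [] }  # key => versions, oldest first
    @counter = 0
  end

  def status=(new_status)
    unless %i[unversioned enabled suspended].include?(new_status)
      raise ArgumentError, "unknown status: #{new_status}"
    end
    if new_status == :unversioned && @status != :unversioned
      raise ArgumentError, 'versioning can only be suspended, not turned off'
    end
    @status = new_status
  end

  # Stores data under key and returns the new version ID.
  def put(key, data)
    add(key, Version.new(next_id, data, false))
  end

  # Unversioned buckets really delete; otherwise a delete marker is added.
  def delete(key)
    if @status == :unversioned
      @history.delete(key)
      nil
    else
      add(key, Version.new(next_id, nil, true))
    end
  end

  # Latest data for key, or the data of a specific version; nil if deleted.
  def get(key, version_id = nil)
    versions = @history.fetch(key, [])
    version = version_id ? versions.find { |v| v.version_id == version_id } : versions.last
    version && !version.delete_marker ? version.data : nil
  end

  def version_ids(key)
    @history.fetch(key, []).map(&:version_id)
  end

  private

  # Enabled buckets assign a new ID per write (sequential here for
  # readability); otherwise the ID is "null".
  def next_id
    return 'null' unless @status == :enabled
    @counter += 1
    "v#{@counter}"
  end

  # A write with the "null" ID replaces any existing "null" version.
  def add(key, version)
    list = @history[key]
    list.reject! { |v| v.version_id == 'null' } if version.version_id == 'null'
    list << version
    version.version_id
  end
end

bucket = VersionedBucket.new
bucket.status = :enabled
first = bucket.put('notes.txt', 'draft')
bucket.put('notes.txt', 'final')
bucket.delete('notes.txt')
p bucket.get('notes.txt')          # prints nil (latest version is a delete marker)
p bucket.get('notes.txt', first)   # prints "draft"
```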

===Access Permissions===

By default, all Amazon S3 resources (buckets, objects, etc.) are private: only the resource owner can access them, and the owner can grant access to other users. S3 supports two types of access policy: resource-based policies, which are attached to a particular resource (bucket policies and access control lists), and user policies, which are attached to a particular user.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/s3-access-control.html Access Control]</ref>
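As an example of a resource-based policy, the sketch below uses Ruby's json library to build a bucket policy that lets anyone read every object in one bucket. The bucket name is a placeholder and <code>public_read_policy</code> is a hypothetical helper; the resulting document could be attached to the bucket through the console or the PutBucketPolicy API. A user policy looks similar but is attached to a user and has no Principal element.

```ruby
require 'json'

# Builds a resource-based policy (a bucket policy) granting public read access
# to every object in the given bucket.
def public_read_policy(bucket)
  {
    'Version'   => '2012-10-17',
    'Statement' => [
      {
        'Sid'       => 'PublicReadGetObject',
        'Effect'    => 'Allow',
        'Principal' => '*',                          # anyone
        'Action'    => 's3:GetObject',               # read objects only
        'Resource'  => "arn:aws:s3:::#{bucket}/*"    # all keys in the bucket
      }
    ]
  }
end

puts JSON.pretty_generate(public_read_policy('my-new-bucket'))
```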

===Data Protection===

For durability, S3 stores objects redundantly on multiple devices across multiple facilities within a region, and a write request does not return success until the data is stored in multiple facilities. S3 also uses checksums to verify data integrity; if it detects corruption, it repairs the data from the redundant copies.<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/DataDurability.html Data Durability]</ref>
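Clients can take part in these integrity checks: an upload may carry a Content-MD5 header (the base64-encoded MD5 digest of the body), and S3 rejects the upload if the bytes it received do not match. The stdlib-only sketch below computes that header and adds a hypothetical download-side check against the ETag, assuming a single-part upload, since multipart uploads and some encryption modes produce ETags that are not a plain MD5.

```ruby
require 'digest'

# Value for the Content-MD5 request header: the base64-encoded (binary, not
# hex) MD5 digest of the body. S3 rejects an upload whose body does not match.
def content_md5(body)
  [Digest::MD5.digest(body)].pack('m0')   # 'm0' = base64 without newlines
end

# Hypothetical download check: for an object uploaded with a single PUT, the
# ETag is typically the hex MD5 of the data (this does not hold for multipart
# uploads or some encryption modes).
def matches_etag?(body, etag)
  Digest::MD5.hexdigest(body) == etag.delete('"')
end

puts content_md5('Hello World!')
```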

==Ruby and Amazon S3==

Amazon Web Services (AWS) provides an SDK<ref>[http://rubygems.org/gems/aws-sdk download]</ref> for Ruby that covers many Amazon web services, including Amazon S3. Developers new to the AWS SDK should begin with version 2, which includes built-in features such as waiters, automatically paginated responses, and a streamlined plugin-style architecture. Version 2 of the SDK consists of two packages, or "gems"<ref>[http://en.wikipedia.org/wiki/RubyGems Wikipedia: RubyGems]</ref>:

:* '''aws-sdk-core''' - provides a direct mapping to the AWS APIs, including automatic response paging, waiters, parameter validation, and Ruby type support
:* '''aws-sdk-resources''' - provides an object-oriented abstraction over the low-level core interfaces to make them simpler to use; resource objects hold references to related objects (such as an Amazon S3 instance) and expose attributes and actions as instance variables and methods

Version 1 of the AWS SDK also exists, but it lacks some of the "convenience features" available in version 2. For more information, see the [http://ruby.awsblog.com/post/Tx2OMCYFEZX2I6A/AWS-SDK-for-Ruby-V2-Preview-Release AWS Ruby Development Blog].
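To illustrate what automatic response paging saves you: S3 returns at most 1,000 keys per list request and says whether the listing is truncated, so a client must keep requesting pages, each starting after the last key it has seen (the marker). The sketch below fakes the list call with an in-memory array of 2,500 keys; <code>list_objects_page</code> and <code>each_key</code> are hypothetical names, not SDK methods.

```ruby
# Hypothetical stand-in for one list request: returns up to max_keys keys that
# come after marker, plus a flag saying whether more results remain.
ALL_KEYS = (1..2500).map { |i| format('photo-%04d.jpg', i) }

def list_objects_page(marker: nil, max_keys: 1000)
  start = marker ? ALL_KEYS.index(marker) + 1 : 0
  keys = ALL_KEYS[start, max_keys] || []
  { keys: keys, truncated: start + keys.size < ALL_KEYS.size }
end

# Automatic paging: keep requesting pages, passing the last key as the next
# marker, until a response is no longer truncated.
def each_key
  return enum_for(:each_key) unless block_given?
  marker = nil
  loop do
    page = list_objects_page(marker: marker)
    page[:keys].each { |key| yield key }
    break unless page[:truncated]
    marker = page[:keys].last
  end
end

puts each_key.count   # prints 2500
```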

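==Examples==

This section contains examples of Ruby code that interacts with S3, along with sources and documentation for the code. Please observe the copyrights if you reuse any of the posted code. The examples taken from the Ceph documentation use the community aws-s3 gem (<code>require 'aws/s3'</code>) rather than the AWS SDK, so their class-method API (for example, <code>AWS::S3::S3Object.store</code>) differs from the SDK classes listed below.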

'''Note:''' Version 1 of the AWS SDK has three key classes<ref>[http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingTheMPRubyAPI.html Using the Ruby API]</ref>:

:* '''AWS::S3''' - represents the interface to Amazon S3 for the Ruby SDK. Its ''#buckets'' instance method creates new buckets or accesses existing ones.
:* '''AWS::S3::Bucket''' - represents an Amazon S3 bucket. Its ''#objects'' instance method accesses existing objects, and other methods return information about the bucket.
:* '''AWS::S3::S3Object''' - represents an Amazon S3 object. It provides methods that return information about the object and that set access permissions and copy, delete, or upload objects.

===Creating a connection to the S3 server===

Connecting to the S3 server is the starting point for accessing your data. The following example connects to the server over SSL<ref>[http://en.wikipedia.org/wiki/SSL Wikipedia: SSL]</ref>:

<pre>
require 'aws/s3'   # provided by the aws-s3 gem

AWS::S3::Base.establish_connection!(
  :server            => 'objects.example.com',
  :use_ssl           => true,
  :access_key_id     => 'my-access-key',
  :secret_access_key => 'my-secret-key'
)
</pre>
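Hard-coding keys in source code risks leaking them. A minimal sketch, assuming the credentials are exported as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY (the variable names the AWS SDKs read) and a made-up S3_SERVER variable for the host; <code>connection_options</code> is a hypothetical helper that builds the same options hash as the example above.

```ruby
# Hypothetical helper: builds the options hash for establish_connection!
# from environment variables instead of hard-coded secrets. S3_SERVER is a
# made-up variable name; fetch raises KeyError if a credential is missing.
def connection_options(env = ENV)
  {
    :server            => env.fetch('S3_SERVER', 'objects.example.com'),
    :use_ssl           => true,
    :access_key_id     => env.fetch('AWS_ACCESS_KEY_ID'),
    :secret_access_key => env.fetch('AWS_SECRET_ACCESS_KEY')
  }
end

# With the aws-s3 gem installed:
#   require 'aws/s3'
#   AWS::S3::Base.establish_connection!(connection_options)
```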

===Listing all buckets you own===

Buckets contain the objects you upload, and every object is accessed through its bucket. This example queries the S3 server for all the buckets you own and prints each bucket's name and creation date:

<pre>
AWS::S3::Service.buckets.each do |bucket|
  puts "#{bucket.name}\t#{bucket.creation_date}"
end
</pre>

Expected output:

<pre>
mybucket1   2011-04-21T18:05:39.000Z
mybucket2   2011-04-21T18:05:48.000Z
mybucket3   2011-04-21T18:07:18.000Z
</pre>

Listing a bucket's contents

For a known bucket (see example for listing all buckets you own), you can also query the server for all objects inside. This code shows you how.

<pre>
new_bucket = AWS::S3::Bucket.find('my-new-bucket')

new_bucket.each do |object|
  puts "#{object.key}\t#{object.about['content-length']}\t#{object.about['last-modified']}"
end
</pre>

Expected output:

<pre>
file1.filex    251262    2011-08-08T21:35:48.000Z
file2.filex    262518    2011-08-08T21:38:01.000Z
</pre>
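Values such as content-length come from HTTP response headers, so they are strings and must be converted before doing arithmetic on them. The sketch below totals the size of a bucket's objects. The listing array is a hypothetical stand-in, built from the sample output above, for the values the loop reads from object.about.

```ruby
# Sketch: total the sizes reported by a bucket listing.
# Hypothetical stand-in for the header values read from object.about.
listing = [
  { 'key' => 'file1.filex', 'content-length' => '251262' },
  { 'key' => 'file2.filex', 'content-length' => '262518' }
]

# Integer() raises on malformed values instead of silently returning 0
def total_bytes(entries)
  entries.sum { |entry| Integer(entry['content-length']) }
end

puts total_bytes(listing) # prints 513780
```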

===Deleting an object===

This example deletes the object goodbye.txt. The name of the bucket that holds the object is passed as the second argument to S3Object.delete.

<pre>
AWS::S3::S3Object.delete('goodbye.txt', 'my-new-bucket')
</pre>

===Deleting a bucket===

You may want to delete buckets you no longer need, for example when cleaning up the data you keep on S3 to reduce costs. The call below deletes a bucket, but only if it is empty.

<pre>
AWS::S3::Bucket.delete('my-new-bucket')
</pre>

===Forced removal of non-empty buckets===

To remove a bucket together with all of its contents, pass :force => true:

<pre>
AWS::S3::Bucket.delete('my-new-bucket', :force => true)
</pre>
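The difference between the two delete calls can be illustrated with a toy in-memory model. This is a hypothetical sketch, not the aws-s3 gem's implementation. S3 itself rejects deleting a non-empty bucket with a BucketNotEmpty error, so a forced delete has to remove the bucket's objects before removing the bucket, and the model mimics that order.

```ruby
# Toy in-memory model of bucket deletion (hypothetical; not the aws-s3 gem).
class BucketNotEmptyError < StandardError; end

class ToyStore
  def initialize
    @buckets = {} # bucket name => { object key => data }
  end

  def create_bucket(name)
    @buckets[name] ||= {}
  end

  # Same argument order as S3Object.store: key, data, bucket
  def store(key, data, bucket)
    @buckets.fetch(bucket)[key] = data
  end

  def bucket_names
    @buckets.keys
  end

  # Without force, a bucket that still holds objects cannot be deleted;
  # with force, its objects are removed first and then the bucket itself.
  def delete_bucket(name, force: false)
    objects = @buckets.fetch(name)
    unless objects.empty?
      raise BucketNotEmptyError, "#{name} still holds #{objects.size} object(s)" unless force
      objects.clear
    end
    @buckets.delete(name)
    true
  end
end

toy = ToyStore.new
toy.create_bucket('my-new-bucket')
toy.store('hello.txt', 'Hello World!', 'my-new-bucket')

begin
  toy.delete_bucket('my-new-bucket')
rescue BucketNotEmptyError => e
  puts "refused: #{e.message}" # prints refused: my-new-bucket still holds 1 object(s)
end

toy.delete_bucket('my-new-bucket', force: true)
p toy.bucket_names # prints []
```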

===Creating an object===

If your application stores data as objects, you can host those objects on the S3 server. This example creates an object named hello.txt with the content "Hello World!" in my-new-bucket. The :content_type option is passed to S3Object.store so that the object is stored with the text/plain MIME type.

<pre>
AWS::S3::S3Object.store(
  'hello.txt',
  'Hello World!',
  'my-new-bucket',
  :content_type => 'text/plain'
)
</pre>
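When objects of several types are stored, the :content_type value can be chosen from each key's file extension. In the sketch below the lookup table is a small hypothetical subset of MIME types, and keys with unknown extensions fall back to the generic binary type application/octet-stream.

```ruby
# Sketch: choose a :content_type for S3Object.store from the key's extension.
# The table is a small hypothetical subset, not a complete MIME database.
CONTENT_TYPES = {
  '.txt'  => 'text/plain',
  '.html' => 'text/html',
  '.json' => 'application/json',
  '.pdf'  => 'application/pdf',
  '.png'  => 'image/png'
}.freeze

def content_type_for(key)
  # File.extname returns '' for keys without an extension
  CONTENT_TYPES.fetch(File.extname(key).downcase, 'application/octet-stream')
end

puts content_type_for('hello.txt')  # prints text/plain
puts content_type_for('poetry.PDF') # prints application/pdf
puts content_type_for('README')     # prints application/octet-stream

# With the aws-s3 gem, the result would be passed as in the example above:
#   AWS::S3::S3Object.store('hello.txt', 'Hello World!', 'my-new-bucket',
#                           :content_type => content_type_for('hello.txt'))
```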

===Changing an object's ACL (access control list)===

If you keep sensitive information about users, you need to keep it hidden from arbitrary web users. This example makes the hello.txt object created earlier in my-new-bucket publicly readable, so that anyone can access it. It then makes secret_plans.txt private by replacing its list of grants with an empty list.

<pre>
# make hello.txt publicly readable
policy = AWS::S3::S3Object.acl('hello.txt', 'my-new-bucket')
policy.grants = [ AWS::S3::ACL::Grant.grant(:public_read) ]
AWS::S3::S3Object.acl('hello.txt', 'my-new-bucket', policy)

# remove all grants from secret_plans.txt
policy = AWS::S3::S3Object.acl('secret_plans.txt', 'my-new-bucket')
policy.grants = []
AWS::S3::S3Object.acl('secret_plans.txt', 'my-new-bucket', policy)
</pre>
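At the protocol level, S3 receives an object's ACL as an XML AccessControlPolicy document in a PUT request to the object's ?acl subresource. The sketch below builds the two documents that correspond to the example above: a single READ grant to the AllUsers group (which is what public read access means in S3) for hello.txt, and an empty grant list for secret_plans.txt. The owner ID and display name are hypothetical placeholders, and the sketch only builds the XML; it sends nothing.

```ruby
# Sketch: XML AccessControlPolicy bodies for the two ACL changes above.
# Owner ID and display name are hypothetical placeholders; nothing is sent.
S3_XMLNS      = 'http://s3.amazonaws.com/doc/2006-03-01/'.freeze
ALL_USERS_URI = 'http://acs.amazonaws.com/groups/global/AllUsers'.freeze

# One grant giving the AllUsers group READ access
PUBLIC_READ_GRANT =
  '<Grant>' \
  '<Grantee xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:type="Group">' \
  "<URI>#{ALL_USERS_URI}</URI>" \
  '</Grantee>' \
  '<Permission>READ</Permission>' \
  '</Grant>'

def acl_policy_xml(owner_id, owner_name, public_read:)
  # String#encode(xml: :text) escapes &, < and > in the owner fields
  id   = owner_id.encode(xml: :text)
  name = owner_name.encode(xml: :text)
  grants = public_read ? PUBLIC_READ_GRANT : '' # '' means no grants at all
  <<~XML
    <AccessControlPolicy xmlns="#{S3_XMLNS}">
      <Owner><ID>#{id}</ID><DisplayName>#{name}</DisplayName></Owner>
      <AccessControlList>#{grants}</AccessControlList>
    </AccessControlPolicy>
  XML
end

puts acl_policy_xml('owner-canonical-id', 'larry', public_read: true)  # hello.txt
puts acl_policy_xml('owner-canonical-id', 'larry', public_read: false) # secret_plans.txt
```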

===Generating object download URLs===

Download links make it easier to share objects on the S3 server with other users. This example generates a link for the hello.txt object created earlier; note that S3Object.url_for requires the name of the bucket the object resides in. A link can be unauthenticated, as for hello.txt (which works only because hello.txt was made publicly readable), or signed so that it expires after a set time given by the :expires_in option. In the example, the link to secret_plans.txt expires after one hour (60 * 60 seconds).

<pre>
puts AWS::S3::S3Object.url_for(
  'hello.txt',
  'my-new-bucket',
  :authenticated => false
)

puts AWS::S3::S3Object.url_for(
  'secret_plans.txt',
  'my-new-bucket',
  :expires_in => 60 * 60
)
</pre>

Expected output:

<pre>
http://objects.dreamhost.com/my-bucket-name/hello.txt
http://objects.dreamhost.com/my-bucket-name/secret_plans.txt?Signature=XXXXXXXXXXXXXXXXXXXXXXXXXXX&Expires=1316027075&AWSAccessKeyId=XXXXXXXXXXXXXXXXXXX
</pre>
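The Signature, Expires and AWSAccessKeyId parameters in the second URL come from S3's legacy query-string authentication (Signature Version 2): the client signs a string containing the HTTP verb, the expiry time as a Unix timestamp and the /bucket/key resource using HMAC-SHA1 with its secret key. A minimal sketch of that computation follows, assuming simple keys that need no URL escaping. The host, key ID and secret are placeholders, and new code should prefer Signature Version 4, which AWS now recommends.

```ruby
require 'openssl'
require 'uri'

# Minimal sketch of legacy S3 query-string authentication (Signature Version 2).
# Assumes a key that needs no URL escaping; host and credentials are placeholders.
def presigned_get_url(host, bucket, key, access_key_id, secret_key, expires_in, now: Time.now)
  # Expires is an absolute Unix timestamp, not a duration
  expires = (now.to_i + expires_in).to_s

  # HTTP verb, Content-MD5 (empty), Content-Type (empty), Expires, resource
  string_to_sign = "GET\n\n\n#{expires}\n/#{bucket}/#{key}"

  # HMAC-SHA1 over the string, then strict Base64 ('m0' adds no newline)
  digest = OpenSSL::HMAC.digest('sha1', secret_key, string_to_sign)
  signature = [digest].pack('m0')

  # encode_www_form escapes the '+', '/' and '=' characters of Base64
  query = URI.encode_www_form(
    'Signature'      => signature,
    'Expires'        => expires,
    'AWSAccessKeyId' => access_key_id
  )
  "http://#{host}/#{bucket}/#{key}?#{query}"
end

puts presigned_get_url('objects.example.com', 'my-new-bucket', 'secret_plans.txt',
                       'my-access-key', 'my-secret-key', 60 * 60)
```

Passing now: explicitly makes the output reproducible; for example, a start time of 1316023475 plus one hour gives the Expires=1316027075 value seen in the sample output.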

===Downloading an object to a local file===

An application may need to retrieve objects from the S3 server and save them locally. This example streams the poetry.pdf object from the bucket my-new-bucket and writes it, chunk by chunk, to /home/larry/documents/poetry.pdf.

<pre>
# 'wb' opens the file for writing in binary mode, which a PDF needs
File.open('/home/larry/documents/poetry.pdf', 'wb') do |file|
  AWS::S3::S3Object.stream('poetry.pdf', 'my-new-bucket') do |chunk|
    file.write(chunk)
  end
end
</pre>
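Writing each chunk as it arrives avoids holding the whole object in memory, and opening the file in binary mode ('wb') keeps the bytes unchanged (in text mode on Windows, newline characters would be translated). The sketch below runs without a network connection by using fake_chunks, a hypothetical stand-in for the chunks that S3Object.stream yields.

```ruby
require 'tmpdir'

# Sketch: save a download chunk by chunk in binary mode and return the
# number of bytes written. `chunks` can be any object that responds to each.
def save_stream(path, chunks)
  bytes = 0
  File.open(path, 'wb') do |file|
    chunks.each do |chunk|
      file.write(chunk)
      bytes += chunk.bytesize
    end
  end
  bytes
end

# Hypothetical stand-in for the chunks the S3Object.stream block receives
fake_chunks = ["%PDF-1.4\n".b, "\x00\xFF binary data".b]

Dir.mktmpdir do |dir|
  path = File.join(dir, 'poetry.pdf')
  written = save_stream(path, fake_chunks)
  puts "wrote #{written} bytes to #{path}"
end
```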

===Upload a file to Amazon S3===

This example combines several of the operations shown above, but uses version 1 of the AWS SDK for Ruby rather than the aws-s3 gem. It creates a bucket, uploads a local file to it, and prints both the object's public URL and a presigned download URL. It then waits for input and deletes the uploaded object from S3; the local file is not touched.

The following code is distributed under the Apache License, Version 2.0, and may be reproduced and redistributed under the terms of that license <ref>license</ref>.

<pre>
# Copyright 2011-2013 Amazon.com, Inc. or its affiliates. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License"). You
# may not use this file except in compliance with the License. A copy of
# the License is located at
#
#     http://aws.amazon.com/apache2.0/
#
# or in the "license" file accompanying this file. This file is
# distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF
# ANY KIND, either express or implied. See the License for the specific
# language governing permissions and limitations under the License.

require 'aws-sdk'

(bucket_name, file_name) = ARGV
unless bucket_name && file_name
  puts "Usage: upload_file.rb <BUCKET_NAME> <FILE_NAME>"
  exit 1
end

# get an instance of the S3 interface using the default configuration
s3 = AWS::S3.new

# create a bucket
b = s3.buckets.create(bucket_name)

# upload a file
basename = File.basename(file_name)
o = b.objects[basename]
o.write(:file => file_name)

puts "Uploaded #{file_name} to:"
puts o.public_url

# generate a presigned URL
puts "\nUse this URL to download the file:"
puts o.url_for(:read)

puts "(press any key to delete the object)"
$stdin.getc

o.delete
</pre>
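The upload script passes bucket_name straight from the command line to s3.buckets.create, so a malformed name only fails once the request reaches S3. The sketch below checks a name against the main DNS-compatible naming rules S3 applies to new buckets: 3 to 63 characters; only lowercase letters, digits, periods and hyphens; a letter or digit at each end; no adjacent periods; and not formatted as an IP address. It is a subset written for illustration, not the complete rule list in the S3 documentation.

```ruby
# Sketch: check the main S3 bucket-naming rules before creating a bucket.
# Covers a subset of the rules only; the S3 documentation has the full list.
def valid_bucket_name?(name)
  return false unless name.length.between?(3, 63)
  # lowercase letters, digits, '.' and '-', starting and ending with a letter or digit
  return false unless name.match?(/\A[a-z0-9][a-z0-9.-]*[a-z0-9]\z/)
  return false if name.include?('..')
  # reject names that look like an IPv4 address, e.g. 192.168.5.4
  return false if name.match?(/\A\d{1,3}(\.\d{1,3}){3}\z/)
  true
end

%w[my-new-bucket My_Bucket ab 192.168.5.4 logs..2015].each do |candidate|
  puts "#{candidate}: #{valid_bucket_name?(candidate)}"
end
```

In the script, such a check could run right after the usage test, exiting with an error message before any request is sent.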

Documentation for the AWS SDK for Ruby is available online <ref>AWS SDK for Ruby</ref>.

==References==

<references/>