CSC/ECE 517 Fall 2014/ch1a 20 kv
Latest revision as of 19:36, 23 September 2014

Scalability in Web Applications

Scalability is the capability to increase resources to yield a linear (ideally) increase in service capacity. The key characteristic of a scalable application is that additional load only requires additional resources rather than extensive modification of the application itself.

Scalability Overview

Scalability must be part of the design process because it is not a discrete feature that you can add later. As with other application capabilities, the decisions you make during the design and early coding phases will largely dictate the scalability of your application.

Application scalability requires a balanced partnership between two distinct domains, software and hardware. You can make great strides that increase the scalability in one domain only to sabotage them by making mistakes in the other. For example, building a load-balanced farm of Web servers will not benefit a Web application that has been designed to run only on a single machine. Likewise, designing a highly scalable application and then deploying it to machines connected to a low-bandwidth network will not handle heavy loads well when traffic saturates the network. Since scalability is not a design concern of stand-alone applications, the applications discussed here are distributed applications. Distributed applications are also a step beyond traditional client-server applications.

Distributed applications are applications that are designed as n-tier applications. Such distributed application architectures promote the design of scalable applications by sharing resources, such as business components and databases.

Scalable Architecture

The two types of scalable architecture are:

Scaling Up (vertical scaling)

Scaling up is the commonly used term for achieving scalability using better, faster, and more expensive hardware. Scaling up includes adding more memory, adding more or faster processors, or simply migrating the application to a more powerful single machine. Typically, this method allows for an increase in capacity without requiring changes to source code. Administratively, things remain the same since there is still only one machine to manage.

Upgrading a hardware component in a machine simply moves the processing capacity limit from one part of the machine to another. For example, a machine that is at 100 percent CPU utilization could increase capacity by adding another CPU. However, the limitation may shift from the CPU to the system memory. Adding CPUs also does not add performance linearly; instead, the performance gain curve slowly tapers off as each additional processor is added. For machines with symmetric multiprocessor (SMP) configurations, each additional processor incurs system overhead. Consequently, a four-processor machine will not achieve four times the capacity of the uniprocessor version.

Once you have upgraded each hardware component to its maximum capacity, you will reach the real limit of the machine's processing capacity. At that point, the next step in scaling up is to move to another machine.
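The diminishing return from extra processors can be made concrete with Amdahl's law, a standard model that the text does not name and that is used here only as an illustration: if a fraction p of the work can run in parallel, n processors give a speedup of 1 / ((1 - p) + p / n). The sketch assumes a hypothetical p = 0.9 and ignores SMP overhead such as memory-bus contention, which would lower the numbers further.

```ruby
# Amdahl's law: speedup on `processors` CPUs when `parallel_fraction`
# of the work can run in parallel. p = 0.9 below is a hypothetical value.
def amdahl_speedup(processors, parallel_fraction)
  serial = 1.0 - parallel_fraction
  1.0 / (serial + parallel_fraction / processors)
end

(1..4).each do |n|
  printf("%d CPU(s): %.2fx\n", n, amdahl_speedup(n, 0.9))
end
```

Under these assumptions, four processors give roughly a 3.08x speedup rather than 4x, and each added processor contributes less than the one before.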

Scaling Out (horizontal scaling)

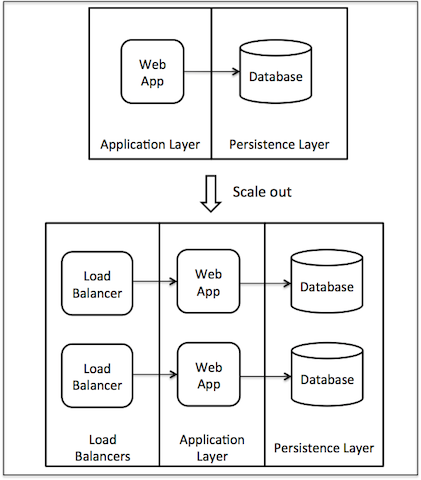

An alternative to scaling up is scaling out. Scaling out leverages the economics of using commodity PC hardware to distribute the processing load across more than one server.

Although scaling out is achieved using many machines, the collection essentially functions as a single machine. By dedicating several machines to a common task, application fault tolerance is increased. Of course, from the administrator's perspective, scaling out also presents a greater management challenge due to the increased number of machines. Developers and administrators use a variety of load balancing techniques to scale out with the Windows platform. Load balancing allows a site to scale out across a cluster of servers, making it easy to add capacity by adding more replicated servers. It also provides redundancy, giving the site failover capabilities so that it remains available to users even if one or more servers fail (or need to be taken down for maintenance). Scaling out provides a method of scalability that is not hampered by hardware limitations. Each additional server provides a near linear increase in scalability.
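Round robin is one common load-balancing technique. The sketch below is a minimal plain-Ruby illustration, not any particular product's algorithm: it rotates requests across replicated servers and skips servers marked as down, which is the failover behavior described above. The server names and the mark_down/mark_up calls are hypothetical stand-ins for real health checks.

```ruby
# A minimal round-robin load balancer with failover (hypothetical names).
class RoundRobinBalancer
  def initialize(servers)
    @servers = servers
    @healthy = servers.map { |s| [s, true] }.to_h
    @next_index = 0
  end

  def mark_down(server)
    @healthy[server] = false
  end

  def mark_up(server)
    @healthy[server] = true
  end

  # Returns the next healthy server in rotation, skipping failed ones.
  def pick
    @servers.size.times do
      server = @servers[@next_index]
      @next_index = (@next_index + 1) % @servers.size
      return server if @healthy[server]
    end
    raise "no healthy servers available"
  end
end

lb = RoundRobinBalancer.new(%w[app1 app2 app3])
p 4.times.map { lb.pick }   # => ["app1", "app2", "app3", "app1"]
lb.mark_down("app2")        # e.g. crashed or taken down for maintenance
p 4.times.map { lb.pick }   # => ["app3", "app1", "app3", "app1"]
```

Adding capacity is just a matter of constructing the balancer with one more replicated server.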

The key to successfully scaling out an application is location transparency. If any of the application code depends on knowing what server is running the code, location transparency has not been achieved and scaling out will be difficult. This situation is called location affinity. Location affinity requires code changes to scale out an application from one server to many, which is seldom an economical option. If you design the application with location transparency in mind, scaling out becomes an easier task. <ref>http://msdn.microsoft.com/en-us/library/aa292203(v=vs.71).aspx</ref>
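Location affinity often creeps in through per-server state such as sessions held in process memory. The following sketch contrasts the two cases using a hypothetical AppServer class, with a plain Hash standing in for a shared store (in practice a database or memcached).

```ruby
# AppServer is a hypothetical request handler; session_store can be any
# object that behaves like a Hash.
class AppServer
  def initialize(session_store)
    @sessions = session_store
  end

  def login(session_id, user)
    @sessions[session_id] = user
  end

  def current_user(session_id)
    @sessions[session_id]
  end
end

# Location affinity: each server keeps sessions in its own memory, so a
# follow-up request routed to another server does not find the session.
app1 = AppServer.new({})
app2 = AppServer.new({})
app1.login("sess-1", "alice")
p app2.current_user("sess-1")   # => nil

# Location transparency: every server uses the same shared store, so any
# server can handle any request.
shared_store = {}
app1 = AppServer.new(shared_store)
app2 = AppServer.new(shared_store)
app1.login("sess-1", "alice")
p app2.current_user("sess-1")   # => "alice"
```

Only the second setup lets a load balancer send any request to any server.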

Factors to be considered while designing a Scalable Web Application

While designing a Web application, one should consider many factors that affect the proper functioning of the system. These factors play a very important role in whether the web application is scalable. We'll look into a few of them here.

Performance

The term performance of a web application can mean several things. Most developers are primarily concerned with two important factors: response time and scalability.

Response Time

Response time is the time a web application takes to process a request and return a response. Applications should respond to requests within an acceptable duration; an application that takes longer than that is said to be non-performing or degraded.

Scalability and the design architecture implemented, as discussed earlier, also play a major role in determining the performance of the system.

Response Time Vs Scalability

Response time and scalability don’t always go together: an application might have acceptable response times but be unable to handle more than a certain number of requests, or it might handle an increasing number of requests but with poor or long response times. We have to strike a balance between scalability and response time to get good performance from the application.

Capacity Planning

Capacity planning is the exercise of determining the hardware required to handle the expected production load. It usually involves measuring the application's performance on a small number of boxes, projecting the number of boxes needed from the per-box performance, and finally verifying the projection with load/performance tests.
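The projection step is simple arithmetic. The helper below is a hypothetical sketch: it assumes a per-box throughput measured in a load test, a target peak load, and a headroom fraction so that boxes do not run at full utilization; every number is made up for illustration.

```ruby
# All numbers are hypothetical. measured_rps_per_box comes from a load
# test on a few boxes; headroom keeps each box below full utilization.
def boxes_needed(expected_peak_rps, measured_rps_per_box, headroom: 0.3)
  usable_rps_per_box = measured_rps_per_box * (1.0 - headroom)
  (expected_peak_rps / usable_rps_per_box).ceil
end

# e.g. a load test on 2 boxes sustained 1,000 req/s => 500 req/s per box
per_box = 1_000 / 2
puts boxes_needed(6_000, per_box)                 # => 18
puts boxes_needed(6_000, per_box, headroom: 0.0)  # => 12
```

The result assumes throughput grows linearly with the number of boxes, which is exactly what the final load test has to confirm.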

Scalable Architecture

Application architecture is scalable if each layer in the multi-layered architecture is scalable (can scale out). For example, as shown in fig 1.3 below, we should be able to scale linearly by adding boxes to the Application Layer or the Database Layer.

Scaling Load Balancer

Load balancers can be scaled out by mapping a single DNS name to multiple IP addresses and using DNS round robin for IP address lookup. Another option is to use another load balancer that distributes load to the next level of load balancers. Adding multiple load balancers is rare, because a single box running nginx or HAProxy can handle more than 20K concurrent connections, whereas a web application box can handle a few thousand concurrent requests. So a single load balancer box can serve several web application boxes.

Scaling Database

Scaling the database is one of the most common issues faced. Adding business logic to the database layer brings additional overhead and complexity. Here we'll look at a few specific kinds of databases and their scalability features.

RDBMS

An RDBMS can be scaled by running it in master-slave mode, with reads and writes going to the master database and only reads going to the slave databases. Master-slave replication scales reads only up to a point; beyond that, developers have to split the database into multiple databases.
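On the application side, master-slave replication usually means routing each query to the right server. The class below is a minimal sketch with hypothetical connection names: writes go to the master and reads rotate across the slaves. Because replication to the slaves is often asynchronous, a read that must see a value just written may still need to go to the master.

```ruby
# Read/write splitting for a master-slave database. The connection names
# are hypothetical stand-ins for real database connections.
class ReplicatedDatabase
  def initialize(master, replicas)
    @master = master
    @replicas = replicas
    @next_replica = 0
  end

  # All writes go to the master.
  def for_write
    @master
  end

  # Reads rotate across the slaves; fall back to the master if none exist.
  def for_read
    return @master if @replicas.empty?
    replica = @replicas[@next_replica]
    @next_replica = (@next_replica + 1) % @replicas.size
    replica
  end
end

db = ReplicatedDatabase.new("master", %w[slave1 slave2])
p db.for_write                  # => "master"
p 3.times.map { db.for_read }   # => ["slave1", "slave2", "slave1"]
```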

NoSQL

The CAP theorem states that a distributed data store cannot simultaneously guarantee consistency, availability, and partition tolerance. NoSQL databases usually relax consistency to achieve high availability and partition tolerance.

Scalability Issues in web applications

The major issues to be considered while scaling a web application are:

- Architecture bottlenecks

- Scaling Database

- CPU Bound Application

- IO Bound Application

Architecture bottlenecks

Scaling bottlenecks are formed by two kinds of components:

Centralised component

A component in the application architecture that cannot be scaled out puts an upper limit on the number of requests that the entire architecture or request pipeline can handle.

High latency component

A slow component in the request pipeline puts a lower limit on the response time of the application. The usual solution is to turn high-latency components into background jobs or to execute them asynchronously with queuing.
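A minimal sketch of moving a high-latency step off the request path, assuming an in-process Queue and a single worker thread; a production Rails app would normally use a dedicated job system with a persistent queue instead. The signup handler, the job format, and the simulated 50 ms delay are all hypothetical.

```ruby
# JOBS is a hypothetical in-process queue; the request path only enqueues
# work and a background worker thread performs the slow step.
JOBS = Queue.new
RESULTS = Queue.new

WORKER = Thread.new do
  loop do
    job = JOBS.pop                     # blocks until a job arrives
    break if job == :shutdown
    sleep 0.05                         # stands in for a slow call, e.g. sending email
    RESULTS << "welcome email sent to #{job[:to]}"
  end
end

# Request handler: enqueue the slow work and respond right away.
def handle_signup(email)
  JOBS << { to: email }
  "202 Accepted"
end

puts handle_signup("alice@example.com")  # returns without waiting for the email

JOBS << :shutdown                        # processed after the queued job (FIFO)
WORKER.join
message = RESULTS.pop
puts message                             # => welcome email sent to alice@example.com
```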

Scaling Database

A database can be scaled vertically (partitioning) or horizontally (sharding).

Vertical Scaling (Partitioning)

A database can be split into multiple loosely coupled sub-databases based on domain concepts, for example a Customer database, a Product database, and so on. Another way to split a database is to move some columns of an entity to one database and other columns to another database, for example a Customer database, a Customer Contact Info database, a Customer Orders database, and so on.

Horizontal Scaling (Sharding)

A database can be split horizontally into multiple databases based on some discrete attribute, for example an American Customers database and a European Customers database. Transitioning from a single database to multiple databases using partitioning or sharding is a challenging task.
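Routing a query to the right shard can be sketched as a lookup on the discrete attribute, here the customer's region, with hypothetical shard names. The second function shows hash-based sharding, a common alternative that the text does not mention; Zlib.crc32 is used because Ruby's String#hash is randomized per process and would route the same key differently after a restart.

```ruby
require "zlib"

# Shard by a discrete attribute (the customer's region), as in the text.
# Shard names are hypothetical.
SHARDS_BY_REGION = {
  "US" => "customers_america",
  "EU" => "customers_europe"
}.freeze

def shard_for_region(region)
  SHARDS_BY_REGION.fetch(region) do
    raise ArgumentError, "no shard configured for region #{region.inspect}"
  end
end

# Alternative (an addition, not from the text): hash a key into N shards.
def shard_for_customer(customer_id, shard_count)
  Zlib.crc32(customer_id.to_s) % shard_count
end

puts shard_for_region("EU")          # => customers_europe
puts shard_for_customer(12_345, 4)   # some shard number from 0 to 3
```

With modulo hashing, changing shard_count reassigns most keys to different shards, which is one reason moving to more shards is such a challenging task.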

CPU Bound Application

An application is said to be CPU bound if its throughput is limited by its CPU; increasing CPU speed reduces its response time. A few scenarios in which applications could be CPU bound are:

- Applications that compute or process data without performing IO operations (finance or trading applications)

- Applications that use caches heavily and don’t perform any IO operations

- Applications that are asynchronous (non-blocking) and don’t wait on external resources (reactive-pattern applications, NodeJS applications)

In the scenarios above, the application is working efficiently. Now consider applications written with inefficient code, such as heavy calculations or loops executed on each request, that drive up CPU usage. These kinds of issues can be fixed by:

- Profiling the application

- Caching precomputed values

- Performing the computation in a separate background job
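The first two remedies can be illustrated with a deliberately slow, hypothetical computation: naive recursive Fibonacci stands in for an inefficient CPU-bound routine, a Hash with a default block caches precomputed values, and a simple monotonic-clock timer plays the role of a (very crude) profiler. A real profiler would break the time down per method.

```ruby
# A deliberately slow, CPU-bound computation (hypothetical example):
# naive recursion recomputes the same values many times.
def slow_fib(n)
  n < 2 ? n : slow_fib(n - 1) + slow_fib(n - 2)
end

# Caching precomputed values: a Hash whose default block stores each
# result the first time it is computed.
FIB_CACHE = Hash.new do |cache, n|
  cache[n] = n < 2 ? n : cache[n - 1] + cache[n - 2]
end

# Crude profiling: time a block with a monotonic clock.
def elapsed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

printf("uncached: %.4fs\n", elapsed { slow_fib(27) })
printf("cached:   %.6fs\n", elapsed { FIB_CACHE[27] })
```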

IO Bound Application

An application is said to be IO bound if its throughput is limited by IO or network operations, so increasing CPU speed does not bring down its response times. Most applications are IO bound because of the CRUD operations they perform. Performance tuning or scaling IO-bound applications is difficult because they depend on other, downstream systems. A few scenarios in which applications could be IO bound are:

- Applications that depend on a database and perform CRUD operations

- Applications that consume downstream web services to perform their operations <ref>http://highscalability.com/blog/2014/5/12/4-architecture-issues-when-scaling-web-applications-bottlene.html</ref>
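Because IO-bound code spends most of its time waiting, overlapping the waits helps far more than a faster CPU. In the sketch below, fetch_price is a hypothetical stand-in for a downstream web service call, with sleep simulating network latency. In MRI, threads do not run Ruby code in parallel, but the interpreter lock is released while a thread sleeps or waits on IO, so the five waits overlap.

```ruby
# fetch_price is a hypothetical downstream call; sleep simulates latency,
# during which the CPU is idle.
def fetch_price(item)
  sleep 0.2
  "#{item}: ok"
end

# Returns [block result, elapsed seconds].
def timed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  result = yield
  [result, Process.clock_gettime(Process::CLOCK_MONOTONIC) - start]
end

items = %w[a b c d e]

# One call after another: the waits add up (about 5 x 0.2 s).
sequential, sequential_time = timed { items.map { |i| fetch_price(i) } }

# One thread per call: the waits overlap (about 0.2 s in total).
concurrent, concurrent_time = timed do
  items.map { |i| Thread.new { fetch_price(i) } }.map(&:value)
end

printf("sequential: %.2fs, concurrent: %.2fs\n", sequential_time, concurrent_time)
```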

Best practices to improve scalability

- Offload the database: Stay away from the database as much as possible. That means don’t open connections to it and don’t start transactions unless you have to.

- What a difference a cache makes: Caches can greatly offload the database especially for applications accessing the database in read-only mode. In-memory cache is better than an on-disk one, which is better than a remote or a relational database.

- Cache as coarse-grained objects as possible: Caching coarse-grained objects saves the CPU and time required to query n cache zones instead of a single cache zone. Furthermore, retrieving a full object graph saves the time spent assembling it.

- Don’t store transient state permanently: Avoid storing transient data, like login session data, in a database. The “state monster” is a dangerous beast. As a rule of thumb, only store actual, necessary, critical and actionable business data in permanent storage (database, disk) and nothing else.

- Location, Location: Put things close to where they are supposed to be delivered. Instead of going through a load balancer, a web server, an application server and a database, it is faster and less resource-intensive to go through the load balancer and the web server and retrieve some of the content from a Content Delivery Network (CDN).

- Constrain concurrent access to limited resources: If more than one request accesses the same resource and performs the same calculation, it is better to let the first one proceed and have the others wait until it finishes, then reuse its result. Letting all the threads access the resource at once will only slow down the process.

- Staged, asynchronous processing: Splitting a process into discrete asynchronous steps, separated by queues and each executed by a limited number of workers/threads, will quite often do wonders for both scalability and performance.

- Minimize network chatter: Keep the application's remote communication to a minimum, because network communication is considerably slower than in-memory communication. <ref>http://www.infoq.com/news/2009/05/8-Best-Practices-Scalability</ref>
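Two of these practices, caching and constraining concurrent access, combine naturally in a cache-aside lookup with "single flight" behavior: the first request for a missing key loads it, while concurrent requests for the same key wait and reuse the result. The sketch below is a minimal in-process illustration; SingleFlightCache and the simulated query are hypothetical, a Hash stands in for a shared cache such as memcached, and entries never expire.

```ruby
# Hypothetical in-process cache with one loader per missing key.
class SingleFlightCache
  def initialize(&loader)
    @loader = loader                                  # the slow lookup, e.g. a DB query
    @values = {}
    @key_locks = Hash.new { |h, k| h[k] = Mutex.new }
    @guard = Mutex.new                                # protects the two hashes
  end

  def fetch(key)
    lock = @guard.synchronize do
      return @values[key] if @values.key?(key)        # cache hit: no DB work
      @key_locks[key]
    end

    # Only one thread per key gets past this point at a time.
    lock.synchronize do
      # Re-check: another thread may have filled the entry while we waited.
      @guard.synchronize { return @values[key] if @values.key?(key) }
      value = @loader.call(key)
      @guard.synchronize { @values[key] = value }
    end
  end
end

db_calls = 0
calls_lock = Mutex.new
cache = SingleFlightCache.new do |id|
  calls_lock.synchronize { db_calls += 1 }
  sleep 0.1                                           # simulated slow query
  "product #{id}"
end

# Ten concurrent requests for the same missing key.
results = 10.times.map { Thread.new { cache.fetch(7) } }.map(&:value)
p results.uniq   # => ["product 7"]
p db_calls       # => 1
```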

Similar practices are implemented in some of the major websites such as Amazon and Google.

Improving the performance of Ruby on Rails Application

All of the scalability issues and design considerations discussed above apply to any web development framework and can improve the scalability of web apps. Let's discuss some of the important action items (design and coding principles) to consider while designing a Rails web app:

Choose right session storage

Based on your level of need, choose your session storage carefully. Rails provides the following levels of storage:

- CookieStore – Stores everything on the client.

- DRbStore – Stores the data on a DRb server.

- MemCacheStore – Stores the data in a memcache.

- ActiveRecordStore – Stores the data in a database using Active Record.
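CookieStore needs no server-side storage, so any app server can read the session, but the data travels with every request, is limited by the browser's cookie size (about 4 KB), and must be protected against tampering. The sketch below illustrates only the signing idea in plain Ruby; it is not Rails' CookieStore implementation, and SECRET and the cookie format are hypothetical.

```ruby
require "openssl"
require "json"

# SECRET and the "payload--signature" cookie format are hypothetical.
SECRET = "replace-with-a-long-random-secret"

# Compare two strings in time that does not depend on where they differ.
def secure_equal?(a, b)
  return false unless a.bytesize == b.bytesize
  a.bytes.zip(b.bytes).reduce(0) { |acc, (x, y)| acc | (x ^ y) }.zero?
end

# Serialize the session and append an HMAC-SHA256 signature.
def sign_session(session)
  payload = [JSON.generate(session)].pack("m0")       # strict Base64
  signature = OpenSSL::HMAC.hexdigest("SHA256", SECRET, payload)
  "#{payload}--#{signature}"
end

# Return the session hash, or nil if the cookie is malformed or tampered with.
def read_session(cookie)
  payload, signature = cookie.to_s.split("--", 2)
  return nil unless payload && signature
  expected = OpenSSL::HMAC.hexdigest("SHA256", SECRET, payload)
  return nil unless secure_equal?(signature, expected)
  JSON.parse(payload.unpack1("m0"))
end

cookie = sign_session({ "user_id" => 42 })
p read_session(cookie)                    # the original session hash
p read_session(cookie.sub("--", "x--"))   # tampered payload => nil
```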

DRY (Don’t repeat yourself)

This is one of the most commonly heard principles, yet programmers often don’t follow it. Here is a very basic example:

    if Player.find_by_id(1).name == "James"
      return Player.find_by_id(1)
    else
      return nil
    end

The above code should be written as:

    # look the record up once and reuse it
    player = Player.find_by_id(1)
    # guard against nil in case no player with id 1 exists
    player if player && player.name == "James"

Eager loading

Eager loading is a way to solve the classic N + 1 query performance problem caused by inefficient use of child objects. Consider the following code, which fetches the zip codes of 10 users:

    users = User.limit(10)
    users.each do |user|
      puts user.address.zip
    end

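This executes 11 queries: 1 to load the users and 1 more for each of the 10 addresses. The solution is to rewrite it to eager load the addresses, which typically reduces the work to two queries (one for the users and one for all of their addresses):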

    users = User.includes(:address).limit(10)
    users.each do |user|
      puts user.address.zip
    end
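To see where the 11 queries come from, the sketch below uses a hypothetical in-memory FakeDB that counts "queries"; it is not Active Record, but the two runs mirror loading addresses one user at a time versus loading them all at once as eager loading does. The zip codes are made-up data.

```ruby
# FakeDB is a hypothetical in-memory stand-in that counts "queries".
class FakeDB
  attr_reader :queries

  def initialize(users, zips_by_user_id)
    @users = users
    @zips = zips_by_user_id
    @queries = 0
  end

  # like SELECT * FROM users LIMIT n
  def users(limit)
    @queries += 1
    @users.first(limit)
  end

  # like SELECT * FROM addresses WHERE user_id = ? (one query per user)
  def zip_for(user_id)
    @queries += 1
    @zips[user_id]
  end

  # like SELECT * FROM addresses WHERE user_id IN (...) (one query for all)
  def zips_for(user_ids)
    @queries += 1
    @zips.select { |id, _| user_ids.include?(id) }
  end
end

users = (1..10).map { |i| { id: i } }
zips = (1..10).map { |i| [i, format("%05d", 27_600 + i)] }.to_h

# Lazy loading: 1 query for the users + 1 per user = N + 1.
lazy_db = FakeDB.new(users, zips)
lazy_db.users(10).each { |u| lazy_db.zip_for(u[:id]) }
puts lazy_db.queries    # => 11

# Eager loading: 1 query for the users + 1 for all their addresses.
eager_db = FakeDB.new(users, zips)
batch = eager_db.users(10)
zips_by_user = eager_db.zips_for(batch.map { |u| u[:id] })
batch.each { |u| zips_by_user[u[:id]] }
puts eager_db.queries   # => 2
```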

Caching

Rails makes caching easy, though the most effective caching is the kind that serves a response without involving the application at all. Page caching, which saves rendered pages as static files that the web server can serve directly, helps optimize a Rails application to a great extent. The types of caching available are:

- Page Caching

- Action Caching

- Fragment Caching

- SQL Caching

- Asset caching <ref>http://www.nascenia.com/10-tips-to-boost-up-performance-of-your-ruby-on-rails-application</ref>

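For example, page caching is enabled and expired from a controller as follows (in Rails 4 and later, page caching was moved out of Rails core into the actionpack-page_caching gem):

    # at class level: cache the rendered output of the index action
    caches_page :index
    # inside create, update, destroy, etc.: expire the cached index page
    expire_page :action => :index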

Using Database Indexes

A database index is exactly what it sounds like: much like the index at the back of a reference book, it is a quickly searchable list of pointers to the actual data. Without an index, a database query might have to look at every row in the table to find the correct result. If a SQL query is causing a performance problem and it contains a WHERE clause or a JOIN, adding an index on the columns involved will often speed it up greatly. <ref>http://rakeroutes.com/blog/increase-rails-performance-with-database-indexes</ref> For example, consider the following query:

 User.where(:email => current_user.email)

Rails creates an id column as the primary key of every table, and the database indexes that key automatically, but in this case we're asking it to find a row based on a different column. If you run such queries often, you should almost certainly add an index on that column:

 # a migration that adds an index to the users table's email column;
 # :unique => true also makes the database reject duplicate emails
 class AddEmailIndexToUsers < ActiveRecord::Migration
   def change
     add_index :users, :email, :unique => true
   end
 end
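The difference between a full table scan and an index lookup can be sketched in plain Ruby by counting how many rows each approach examines. Here a Hash from email to row stands in for the unique index; real databases typically use B-tree indexes, which also support range queries and ordering, so this is an analogy rather than how any particular database works. `Row`, `scan`, and `build_index` are hypothetical names.

```ruby
Row = Struct.new(:id, :email)

# Full table scan: examine rows one by one until a match is found.
# Returns the matching row (or nil) and the number of rows examined.
def scan(rows, email)
  examined = 0
  match = rows.find do |row|
    examined += 1
    row.email == email
  end
  [match, examined]
end

# Hypothetical unique index (email => row), built once, like
# add_index :users, :email, :unique => true; duplicates are rejected.
def build_index(rows)
  rows.each_with_object({}) do |row, index|
    raise ArgumentError, "duplicate email #{row.email}" if index.key?(row.email)
    index[row.email] = row
  end
end
```

With the index built, a lookup is a single probe (`index[email]`) no matter how many rows the table holds, while the scan's cost grows with the table.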

Use of a CDN

A CDN (content delivery network) is a system of interconnected servers on the Internet that delivers Web content rapidly to many users by replicating the content on multiple servers and serving each user from a server close to them. Offloading this content reduces the load on the application servers, which improves their performance and helps balance load across them.
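In a Rails app, the usual way to put a CDN in front of static assets is to set `config.action_controller.asset_host`, so that asset helpers generate URLs that point at the CDN instead of the application servers. The plain-Ruby sketch below imitates that URL rewriting. The host names, the `%d` shard placeholder, and the CRC32-based choice among several hostnames are assumptions for illustration; deriving the shard from the path means a given asset always maps to the same host, so CDN and browser caches stay effective.

```ruby
require "zlib"

# Hypothetical CDN hostname; "%d" (if present) is replaced by a shard number.
CDN_HOST = "https://assets%d.cdn.example.com"
SHARDS = 4

# Rewrite an asset path so the browser fetches it from the CDN.
def cdn_url(path, host: CDN_HOST, shards: SHARDS)
  path = "/#{path}" unless path.start_with?("/")
  # Same path -> same shard, so each asset is cached under one hostname.
  base = host.include?("%d") ? format(host, Zlib.crc32(path) % shards) : host
  base + path
end
```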

Scalability in Rails Apps

As with any other web application, scalability is important in Rails applications. A Rails application can be made to handle increasing traffic by distributing the load across multiple servers. To build a scalable Rails deployment, we can divide it into the following elements:

- Unicorn Rails Application Servers

- Nginx Front-facing HTTP Server/Load Balancer

Unicorn Rails Application Servers

Unicorn is an application server that runs Rails applications to process incoming requests. These application servers only handle requests that need processing, after the requests have been filtered and pre-processed by the front-facing Nginx server(s) acting as a load balancer.

Unicorn is a mature, fully featured web application server. By design, it does not try to do everything: it handles only what a web application needs and delegates the remaining responsibilities (for example, juggling processes) to the operating system.

Unicorn's master process spawns workers to serve requests. The master also monitors the workers to prevent memory- and process-related stalls. For system administrators, this means the master will kill a worker if, for example, the worker takes too much time to complete a task or runs into memory issues.
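Unicorn's timeout handling works roughly like this: each worker periodically shows it is still alive, and the master kills any worker that has been silent for longer than the `timeout` set in Unicorn's configuration file (alongside `worker_processes`), then forks a replacement. The sketch below models only that bookkeeping in plain Ruby, using an injected clock instead of real processes and signals; `Master`, `heartbeat`, and `reap` are hypothetical names, not Unicorn's API.

```ruby
# Hypothetical model of a preforking master's timeout check.
class Master
  Worker = Struct.new(:id, :last_heartbeat)

  attr_reader :workers, :killed

  def initialize(worker_count, timeout:, clock:)
    @timeout = timeout
    @clock = clock # callable returning the current time in seconds
    @next_id = 0
    @killed = []
    @workers = Array.new(worker_count) { spawn_worker }
  end

  # Called by a worker between requests to show it is still making progress.
  def heartbeat(worker)
    worker.last_heartbeat = @clock.call
  end

  # Replace every worker that has been silent for longer than the timeout.
  def reap
    now = @clock.call
    @workers.map! do |w|
      if now - w.last_heartbeat > @timeout
        @killed << w.id # a real master would send the process SIGKILL here
        spawn_worker
      else
        w
      end
    end
  end

  private

  # Stands in for fork(): a new worker starts with a fresh heartbeat.
  def spawn_worker
    @next_id += 1
    Worker.new(@next_id, @clock.call)
  end
end
```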

Nginx Front-facing HTTP Server/Load Balancer

The Nginx HTTP server is designed from the ground up to act as a multi-purpose, front-facing web server. It serves static files (e.g. images and text files) extremely well, balances connections, and deals with certain exploit attempts. It acts as the first entry point for all requests and distributes them to the web application servers running Unicorn for processing. <ref>https://www.digitalocean.com/community/tutorials/how-to-scale-ruby-on-rails-applications-across-multiple-droplets-part-1</ref>
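In Nginx, the Unicorn servers are listed in an `upstream` block, and by default Nginx distributes requests among them round-robin. The Ruby sketch below models that default policy and skips servers marked as down. The `RoundRobin` class and the backend addresses are hypothetical; real Nginx adds features such as server weights and the `max_fails` setting for taking failing servers out of rotation.

```ruby
# Hypothetical round-robin balancer over a list of backend addresses.
class RoundRobin
  def initialize(backends)
    raise ArgumentError, "no backends" if backends.empty?
    @backends = backends
    @down = []
    @next = 0
  end

  def mark_down(backend)
    @down << backend
  end

  def mark_up(backend)
    @down.delete(backend)
  end

  # Return the next healthy backend, cycling through the list in order.
  def pick
    @backends.size.times do
      backend = @backends[@next]
      @next = (@next + 1) % @backends.size
      return backend unless @down.include?(backend)
    end
    raise "all backends are down"
  end
end
```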

Testing for Scalability

Careful planning and development are necessary for any application development project. However, to make a truly scalable application, it is important to rigorously and regularly test it for scalability problems. Scalability testing is an extension of performance testing. The purpose of scalability testing is to identify major workloads and mitigate bottlenecks that can impede the scalability of the application. Use performance testing to establish a baseline against which you can compare future performance tests. As an application is scaled up or out, a comparison of performance test results will indicate the success of scaling the application. When scaling results in degraded performance, it is typically the result of a bottleneck in one or more resources. When your application does not meet performance requirements, you should analyze data from the test results to identify bottlenecks in the system and to hypothesize a cause. Sometimes the test data is not sufficient to form a hypothesis, and you must run additional tests using other performance-monitoring tools to isolate the cause of the bottleneck. You can often alleviate bottlenecks through performance tuning. The goal of tuning code, the operating system, or even the hardware on which it is running is to optimize the allocation of resources. Overcoming some bottlenecks usually requires more expense and some bottlenecks cannot be overcome or do not warrant the expense. In every application, you will always reach a limit that you cannot overcome due to time, money, or technology. <ref>http://msdn.microsoft.com/en-us/library/aa292189(v=vs.71).aspx</ref>
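Comparing a scaled run against the baseline can be reduced to a simple ratio: the throughput gain divided by the factor by which resources grew. A value near 1.0 means near-linear scaling; a value well below 1.0 suggests a bottleneck worth investigating. The sketch below computes this for a series of load-test results. The `Result` struct, the method names, the throughput numbers used to exercise it, and the 0.8 threshold are all illustrative assumptions, not measured data.

```ruby
Result = Struct.new(:servers, :requests_per_second)

# Scaling efficiency relative to a baseline run:
# (throughput gain) / (resource gain); 1.0 means perfectly linear scaling.
def scaling_efficiency(baseline, result)
  speedup = result.requests_per_second.to_f / baseline.requests_per_second
  growth = result.servers.to_f / baseline.servers
  speedup / growth
end

# Runs whose efficiency falls below the (assumed) threshold, measured
# against the first run, which serves as the baseline.
def bottleneck_suspects(results, threshold: 0.8)
  baseline = results.first
  results.drop(1).select { |r| scaling_efficiency(baseline, r) < threshold }
end
```

For example, if one server handles 200 requests per second and eight servers handle 960, the efficiency is (960 / 200) / 8 = 0.6, a sign that some shared resource is limiting the gain from each added server.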

Scalability in a Nutshell

While there are many details to consider, it is useful to keep these simple rules in mind when designing a scalable system:

- Design components that can scale linearly by adding more hardware.

- If you can’t scale linearly, figure out the return for each piece of hardware added.

- Load balance requests between clusters of components.

- Account for redundancy as a percentage of your platform, rather than a fixed number.

- Design your components to be fault-tolerant and easy to recover.

- Federate large datasets into fixed-size chunks. <ref>Cal Henderson, ''Building Scalable Web Sites: Building, Scaling, and Optimizing the Next Generation of Web Applications''</ref>
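The last rule can be illustrated with range-based federation: each shard holds a fixed-size block of user IDs, so growth is handled by adding shards rather than by growing existing ones. In this sketch the chunk size, the shard database naming, and the assumption of sequential positive integer IDs are hypothetical choices made for illustration, not details taken from the cited book.

```ruby
# Hypothetical range-based federation: each shard stores a fixed-size block of IDs.
CHUNK_SIZE = 1_000_000

# Shard number for a given (positive, sequential) user ID.
def shard_for(user_id, chunk_size: CHUNK_SIZE)
  raise ArgumentError, "user_id must be positive" unless user_id.positive?
  (user_id - 1) / chunk_size
end

# Number of shards needed to hold a given number of users (rounded up).
def shards_needed(user_count, chunk_size: CHUNK_SIZE)
  (user_count + chunk_size - 1) / chunk_size
end

# Hypothetical naming scheme for the database that holds a user's shard.
def database_for(user_id)
  "users_shard_#{shard_for(user_id)}"
end
```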

See also

References

<references/>