CSC/ECE 506 Spring 2014/4a ad: Difference between revisions

| (55 intermediate revisions by 3 users not shown) | |||

| Line 4: | Line 4: | ||

== Introduction == | == Introduction == | ||

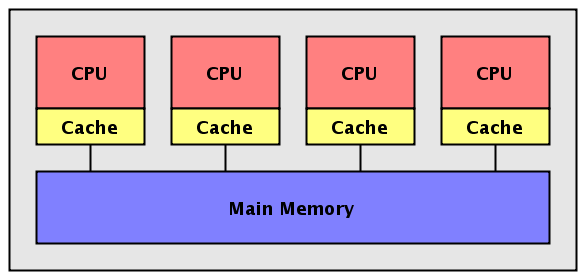

<p>With increasing transistor densities, multi-core computing systems are being touted as the most popular means of delivering performance Unless the application is parallelized efficiently, one cannot realize the potential of multi core environment.[[Image:fig03.png|1000px|thumb|right|Multi core system]]It is a challenge to efficiently parallelize sequential programs without any errors. It is widely accepted that manual parallelization of code, by expert programmers, leads to the most streamlined parallel implementation. But it is also a costly and time consuming process. If one can parallelize the compiler technology | <p>With increasing transistor densities, multi-core computing systems are being touted as the most popular means of delivering performance. Unless the application is parallelized efficiently, one cannot realize the potential of multi core environment.[[Image:fig03.png|1000px|thumb|right|Multi core system]]It is a challenge to efficiently parallelize sequential programs without any errors. It is widely accepted that manual parallelization of code, by expert programmers, leads to the most streamlined parallel implementation. But it is also a costly and time consuming process. If one can parallelize the compiler technology itself while ensuring the formal correctness of the resulting parallel code, time-to-market and cost can be greatly reduced. | ||

</p> | </p> | ||

== Approaches == | == Approaches == | ||

<p>Generally, a high fraction of a program’s execution time takes place in one form of loop or the other. So the initial emphasis of auto parallelization was on loop level parallelization. | <p>Generally, a high fraction of a program’s execution time takes place in one form of loop or the other. So the initial emphasis of auto parallelization was on loop level parallelization.</p> | ||

<p>We will first examine the two main approaches to parallelization of loops(pipelined multi-threading and cyclic multi-threading) and then continue the discussion by looking into different analysis techniques for parallellization </p> | |||

=== Cyclic multithreading === | === Cyclic multithreading === | ||

<p>A parallelizing compiler using cyclic multithreading tries to split a loop in a such a way that each iteration of a loop can be executed concurrently on a different processor.Before actually parallelizing the code, the compiler does two passes analyzing the following in each pass the code respectively.</p> | <p>A parallelizing compiler using cyclic multithreading tries to split a loop in a such a way that each iteration of a loop can be executed concurrently on a different processor. Before actually parallelizing the code, the compiler does two passes analyzing the following in each pass the code respectively.</p> | ||

*''' | *'''How safe is it to parallelize the loop?''' | ||

<p>Accurate dependency analysis and alias analysis is required to answer the above question. | <p>Accurate dependency analysis and alias analysis is required to answer the above question. | ||

The compiler needs to first determine whether each iteration of the loop can be executed independently of others. Some forms of data dependencies can be addressed but they might incur additional overhead in the form of message passing or synchronization of shared memory or some other method of processor communication. | The compiler needs to first determine whether each iteration of the loop can be executed independently of others. Some forms of data dependencies can be addressed but they might incur additional overhead in the form of message passing or synchronization of shared memory or some other method of processor communication. | ||

</p> | </p> | ||

*''' | *'''Can we gain an appreciable performance enhancement if we parallelize the loop?''' | ||

<p>In order to estimate it, the compiler compares the theoretical execution time of code after parallelization to the sequential execution time. This has to be done because sometimes the overhead of parallelization and execution over multiple processors might dominate the actual enhancement obtained due to parallelization. In such cases the actual speedup will be less than the potential speedup. | <p>In order to estimate it, the compiler compares the theoretical execution time of code after parallelization to the sequential execution time. This has to be done because sometimes the overhead of parallelization and execution over multiple processors might dominate the actual enhancement obtained due to parallelization. In such cases the actual speedup will be less than the potential speedup. | ||

</p> | </p> | ||

| Line 37: | Line 39: | ||

</pre> | </pre> | ||

<p>The above for loop cannot be auto-parallelized because z[i] depends on the previous iteration value z[i-1].But it doesn’t mean that it cannot be parallelized. The above loop is equal to the following</p> | <p>The above for loop cannot be auto-parallelized because z[i] depends on the previous iteration value z[i-1]. But it doesn’t mean that it cannot be parallelized. The above loop is equal to the following</p> | ||

<pre> | <pre> | ||

| Line 53: | Line 55: | ||

Example: | Example: | ||

<pre> | <pre> | ||

for(i=1;i<=n;i++){ | for (i=1;i<=n;i++){ | ||

x[i] = x[i-1] + y[i]; | x[i] = x[i-1] + y[i]; | ||

z[i] = z[i] + x[i]; | z[i] = z[i] + x[i]; | ||

| Line 60: | Line 62: | ||

</pre> | </pre> | ||

<p>The compiler can split the above loop into two loops(each can be executed on a different processor) and embed special instructions to forward output of one processor to another.</p> | <p>The compiler can split the above loop into two loops (each can be executed on a different processor) and embed special instructions to forward output of one processor to another.</p> | ||

<pre> | <pre> | ||

Loop 1: | Loop 1: | ||

for(i=1;i<=n;i++){ | for (i=1;i<=n;i++){ | ||

x[i] = x[i-1] + y[i]; | x[i] = x[i-1] + y[i]; | ||

send(x[i]); | send(x[i]); | ||

| Line 70: | Line 72: | ||

Loop 2: | Loop 2: | ||

for(i=1;i<=n;i++){ | for (i=1;i<=n;i++){ | ||

receive(x[i]); | receive(x[i]); | ||

z[i] = z[i] + x[i]; | z[i] = z[i] + x[i]; | ||

| Line 78: | Line 80: | ||

<p>So loop 1 can be run on one processor and the result sent to the second processor which executes loop 2.</p> | <p>So loop 1 can be run on one processor and the result sent to the second processor which executes loop 2.</p> | ||

===Scalar Analysis === | ===Scalar Analysis === | ||

<p> Scalar Analysis, Array Analysis and Commutativity Analysis techniques primarily focus on the back end of the compiler to generate the primary output that can be parallelized. These techniques deal with parallelization with specific system in mind. Scalar analysis and array analysis are two techniques often used in conjunction. Because of the nature of the analyses, they are used in imperative languages like FORTRAN and C.</p> | <p>'Scalar Analysis', 'Array Analysis' and 'Commutativity Analysis' techniques primarily focus on the back end of the compiler to generate the primary output that can be parallelized. These techniques deal with parallelization with specific system in mind. Scalar analysis and array analysis are two techniques often used in conjunction. Because of the nature of the analyses, they are used in imperative languages like FORTRAN and C.</p> | ||

<p>In the scalar analysis a program is broken down to analyze use of scalar variable and the dependencies that they have. Scalar analysis identify the loop carried dependencies | <p>In the scalar analysis a program is broken down to analyze use of scalar variable and the dependencies that they have. Scalar analysis identify the loop carried dependencies. When the memory location is written in one iteration of a loop and it is accessed (read or write) in a different iteration, there is a loop carried dependence. In addition, scalar analysis determines if parallelization may be enabled by privatization or by reduction transformations. There is a slight variation in regular scalar analysis, that is known as scalar symbolic analysis and it is used to check the dependencies on array elements.</P> | ||

<p>''Limitations of Scalar | <p>''Limitations of Scalar Analysis'': This analysis is applicable only to specific forms of program constructs. For example, if an array is accessed in a linear form via an equation that acts as if the array were multidimensional , then this form of analysis cannot parallelize the code. </P> | ||

<p>Consider the loop below:</p> | <p>Consider the loop below:</p> | ||

| Line 95: | Line 95: | ||

} | } | ||

</pre> | </pre> | ||

<p>Here the array is accessed in standard | <p>Here the array is accessed in standard linear fashion, but by a function of two variables. So this cannot be parallelized by scalar analysis.</p> | ||

===Array analysis === | ===Array analysis === | ||

<p> | <p>Array analysis is used in conjunction with the scalar analysis. It uses the method of privatization which works on array data to find privatizable arrays. Access to the array is analyzed to determine an equation of access into the array, then, if possible, that array can be privatized. Specifically this is called array dataflow analysis.</p> | ||

<p>Consider the loop below | <p>Consider the loop below. It has a data dependence and thus cannot be privatized.</p> | ||

<pre> | <pre> | ||

for i = 0; i < x.length; ++i) { | for i = 0; i < x.length; ++i) { | ||

| Line 116: | Line 116: | ||

=== Commutativity Analysis === | === Commutativity Analysis === | ||

<p>This technique is used to automatically identify and exploit commutative operations. The operations are called commutative when they still give the same results even when their order of operations is changed. Commutativity analysis is designed to work with "separable operations,” or operations that | <p>This technique is used to automatically identify and exploit commutative operations. The operations are called commutative when they still give the same results even when their order of operations is changed. Commutativity analysis is designed to work with "separable operations,” or operations that can be decomposed into object section and an invocation sections.[6]. </p> | ||

<p>Commutativity of operation can be tested by using two conditions. These conditions are as follows: </p> | <p>Commutativity of operation can be tested by using two conditions. These conditions are as follows: </p> | ||

<p> “The new value of each instance variable of the receiver objects of A and B must be the same after the execution of the object section of A followed by the object section of B as after the execution of the object section of B followed by the object section of A" [ | <p> “The new value of each instance variable of the receiver objects of A and B must be the same after the execution of the object section of A followed by the object section of B as after the execution of the object section of B followed by the object section of A" [6] </p> | ||

<p> “The multiset of operations directly invoked by either A or B under the execution order A followed by B must be the same as the multiset of operations directly invoked by either A or B under the execution order B followed by A” [ | <p> “The multiset of operations directly invoked by either A or B under the execution order A followed by B must be the same as the multiset of operations directly invoked by either A or B under the execution order B followed by A” [6]</p> | ||

<p>Consider the code below:</p> | <p>Consider the code below:</p> | ||

| Line 144: | Line 144: | ||

</pre> | </pre> | ||

<p>After analyzing the code , it is observed that there are two recursive calls to the method 'visit' which remain over the same set. There is no dependence on the 'sum'(sum is not read by any other statement) , | <p>After analyzing the code , it is observed that there are two recursive calls to the method 'visit' which remain over the same set. There is no dependence on the 'sum'(sum is not read by any other statement), which means that regardless of the execution order, the sum will retain the same value. Instance variables 'sum' marked left and right are all accessed within the method which conforms to the restriction that none of them are directly accessed. </p> | ||

| Line 151: | Line 151: | ||

=== High level parallelization technique === | === High level parallelization technique === | ||

<p> This approach focuses on building a “portable lightweight parallel run time | <p> This approach focuses on building a “portable lightweight parallel run time library”[3]. It uses following steps: </p> | ||

* Scalarization. | * Scalarization. | ||

* Transformation stage. This transformation stage optimizes loops for single processor machines. | * Transformation stage. This transformation stage optimizes loops for single processor machines. | ||

| Line 159: | Line 159: | ||

<p> This approach incorporates Inter-procedural Reduction recognition. This algorithm is simple yet powerful enough to recognize reductions to array regions, even in the presence of arbitrarily complex data dependence.This uses inter-procedural interval analysis to perform data-flow analysis over the entire program with only two passes over the code. This interval-based approach is not only more efficient than inter-procedural iterative data-flow analysis, but it also avoids propagating information along unrealizable paths.</p> | <p> This approach incorporates Inter-procedural Reduction recognition. This algorithm is simple yet powerful enough to recognize reductions to array regions, even in the presence of arbitrarily complex data dependence.This uses inter-procedural interval analysis to perform data-flow analysis over the entire program with only two passes over the code. This interval-based approach is not only more efficient than inter-procedural iterative data-flow analysis, but it also avoids propagating information along unrealizable paths.</p> | ||

<p> | <p>Inter-procedural Parallelization Technique using Reductions produces high precision without un-manageable code expansion.</p> | ||

== Limitations == | |||

*Smart, intelligent parallel programmer can definitely think from a different perspective about a program algorithm and re- arrange the algorithm to achieve parallelism yielding same results with high performance. A compiler will probably never accomplish this, due to inability of rewriting efficient algorithm. | |||

*Compiler must produce the result with parallel computing same as the results by sequential computing. When there is a risk or possibility that the same results cannot be produced, then the compiler fail to parallelize the program. | |||

*Pointer based dynamic data structure pose limitations on parallelization. | |||

*If the program size is very large, the efficient execution time may not be achieved. | |||

== Examples of Auto Parallel Compilers == | == Examples of Auto Parallel Compilers == | ||

=== MIPSpro APO Compiler === | |||

<p> The MIPSpro APO integrates automatic parallelization using interprocedural analysis (IPA) with other compiler optimizations such as loop nest optimization (LNO). | |||

<p> | It supports C++ and has improved runtime and compile-time performance.</p> | ||

=== SUIF Compiler === | |||

<p> | <p>SUIF (Stanford University Intermediate Format) is a free infrastructure designed to support collaborative research in optimizing and parallelizing compilers.[11]</p> | ||

=== Vienna Fortran Compiler === | |||

<p> | <p>It is a new source-to-source parallelization system for HPF+ (optimized version of HPF), which addresses the requirements of irregular applications.[12]</p> | ||

=== PLUTO === | |||

<p> | <p>Pluto is an automatic parallelization tool based on the polyhedral model. The polyhedral model for compiler optimization is a representation for programs that makes it convenient to perform high-level transformations such as loop nest optimizations and loop parallelization.[13]</p> | ||

== | === Par4All === | ||

<p>Par4All is an automatic parallelizing and optimizing compiler (workbench) for C and Fortran sequential programs.[10]</p> | |||

=== Omni OpenMP Compiler === | |||

<p>It translates C and Fortran programs with OpenMP pragmas into C code suitable for compiling with a native compiler linked with the Omni OpenMP runtime library.[9]</p> | |||

== References == | == References == | ||

<p>[1] "Comparative Survey Of Approaches To Automatic Parallelization" by Nicholas DiPasquale, Vijay Gehlot, Thomas Way http://www.csc.villanova.edu/~tway/publications/DiPasquale_Masplas05_Paper5.pdf </p> | |||

<p>[2] "Inter-ptocedural Parallelization Analysis: Preliminary Results" by Mary W.Hall, Saman P. Amarasinghe, Brian R.Murphy, Shih-Wei Liao http://www.researchgate.net/publication/237812210_Interprocedural_Parallelization_Analysis_Preliminary_Results </p> | |||

<p>[3] "Automatic Parallelization for Symmetric Shared Memory Multiprocessors," by J.H.Chow, L.E. Lyon, and V.Sarkar, in Proceedings of CASCON : 76 89, Toronto, ON, November 12 14, 1996.</p> | |||

<p>[4] "Toward Efficient and Robust Software Speculative Parallelization in Multiprocessors" by M. Cintra and, D. R. Llanos. In Proceedings of ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, June 2003.</p> | |||

<p>[5] "DoAcross : Beyond vectorization for multiprocessors" by Ron Cytron in Int’l.Conf. on ParallelProcessing, Aug. 1986.</p> | |||

<p>[6] "Commutativity Analysis: A New Analysis Technique for Parallelizing Compilers" by M. Diniz and P. Diniz. In ACM Transactions on Programming Languages and Systems, Vol.19, No. 6, pages 1-47, 1997. </p> | |||

<p>[7] http://en.wikipedia.org/wiki/Automatic_parallelization_tool</p> | |||

<p>[8] http://en.wikipedia.org/wiki/Automatic_parallelization</p> | |||

<p>[9] http://www.hpcs.cs.tsukuba.ac.jp/omni-compiler/</p> | |||

<p>[10] http://www.par4all.org/</p> | |||

<p>[11] http://suif.stanford.edu/suif/suif.html</p> | |||

<p>[12] http://www.deepdyve.com/lp/ios-press/vfc-the-vienna-fortran-compiler-hVsU0MEwzT</p> | |||

<p>[13] http://pluto-compiler.sourceforge.net/</p> | |||

Latest revision as of 19:50, 26 April 2014

Automatic Parallelism

Auto parallelization is the technique of automatically converting a sequential code into multi-threaded or vectorized (or even both) code. Therefore one can take advantage of a shared memory multi processor environment to run the multi threaded code and obtain a better performance. This also serves the goal of relieving programmers from the tedious and error-prone manual parallelization process.

Introduction

With increasing transistor densities, multi-core computing systems are being touted as the most popular means of delivering performance. Unless the application is parallelized efficiently, one cannot realize the potential of multi core environment.

It is a challenge to efficiently parallelize sequential programs without any errors. It is widely accepted that manual parallelization of code, by expert programmers, leads to the most streamlined parallel implementation. But it is also a costly and time consuming process. If one can parallelize the compiler technology itself while ensuring the formal correctness of the resulting parallel code, time-to-market and cost can be greatly reduced.

Approaches

Generally, a high fraction of a program’s execution time takes place in one form of loop or the other. So the initial emphasis of auto parallelization was on loop level parallelization.

We will first examine the two main approaches to parallelization of loops(pipelined multi-threading and cyclic multi-threading) and then continue the discussion by looking into different analysis techniques for parallellization

Cyclic multithreading

A parallelizing compiler using cyclic multithreading tries to split a loop in a such a way that each iteration of a loop can be executed concurrently on a different processor. Before actually parallelizing the code, the compiler does two passes analyzing the following in each pass the code respectively.

- How safe is it to parallelize the loop?

Accurate dependency analysis and alias analysis is required to answer the above question. The compiler needs to first determine whether each iteration of the loop can be executed independently of others. Some forms of data dependencies can be addressed but they might incur additional overhead in the form of message passing or synchronization of shared memory or some other method of processor communication.

- Can we gain an appreciable performance enhancement if we parallelize the loop?

In order to estimate it, the compiler compares the theoretical execution time of code after parallelization to the sequential execution time. This has to be done because sometimes the overhead of parallelization and execution over multiple processors might dominate the actual enhancement obtained due to parallelization. In such cases the actual speedup will be less than the potential speedup.

Example:

for(i=0;i<n;i++) z[i] = x[i] + y[i];

The above code exhibits DOALL parallelism because each iteration is independent of the others, and the final result of z[] will not depend on the execution order of the other iterations. Therefore it can be auto parallelized by the compiler because each iteration is independent of the others, and the final result of array z will be correct regardless of the execution order of the other iterations.

for(i=2;i<n;i++) z[i] = z[i-1]*2;

The above for loop cannot be auto-parallelized because z[i] depends on the previous iteration value z[i-1]. But it doesn’t mean that it cannot be parallelized. The above loop is equal to the following

for(i=2;i<n;i++) z[i] = z[1]*2^(i-1);

But current parallelizing compilers are not usually capable of bringing out these parallelisms automatically, and one cannot say for sure that this piece of code will benefit from parallelization.

Pipelined multithreading

A pipelined multi-threading parallelizing compiler attempts to break up the sequence of operations inside a loop into a series of code block. Each of these code blocks can be executed by separate processors concurrently. The compiler could assign each of the code blocks to different processors and insert appropriate code to forward the output of one processor to another.

Example:

for (i=1;i<=n;i++){

x[i] = x[i-1] + y[i];

z[i] = z[i] + x[i];

}

The compiler can split the above loop into two loops (each can be executed on a different processor) and embed special instructions to forward output of one processor to another.

Loop 1:

for (i=1;i<=n;i++){

x[i] = x[i-1] + y[i];

send(x[i]);

}

Loop 2:

for (i=1;i<=n;i++){

receive(x[i]);

z[i] = z[i] + x[i];

}

So loop 1 can be run on one processor and the result sent to the second processor which executes loop 2.

Scalar Analysis

'Scalar Analysis', 'Array Analysis' and 'Commutativity Analysis' techniques primarily focus on the back end of the compiler to generate the primary output that can be parallelized. These techniques deal with parallelization with specific system in mind. Scalar analysis and array analysis are two techniques often used in conjunction. Because of the nature of the analyses, they are used in imperative languages like FORTRAN and C.

In the scalar analysis a program is broken down to analyze use of scalar variable and the dependencies that they have. Scalar analysis identify the loop carried dependencies. When the memory location is written in one iteration of a loop and it is accessed (read or write) in a different iteration, there is a loop carried dependence. In addition, scalar analysis determines if parallelization may be enabled by privatization or by reduction transformations. There is a slight variation in regular scalar analysis, that is known as scalar symbolic analysis and it is used to check the dependencies on array elements.

Limitations of Scalar Analysis: This analysis is applicable only to specific forms of program constructs. For example, if an array is accessed in a linear form via an equation that acts as if the array were multidimensional , then this form of analysis cannot parallelize the code.

Consider the loop below:

for (i = 0; i < x.length; ++i) {

for (j = 0; j < i; ++j) {

x[i * x.length + j] = z[j];

}

}

Here the array is accessed in standard linear fashion, but by a function of two variables. So this cannot be parallelized by scalar analysis.

Array analysis

Array analysis is used in conjunction with the scalar analysis. It uses the method of privatization which works on array data to find privatizable arrays. Access to the array is analyzed to determine an equation of access into the array, then, if possible, that array can be privatized. Specifically this is called array dataflow analysis.

Consider the loop below. It has a data dependence and thus cannot be privatized.

for i = 0; i < x.length; ++i) {

for j = 1; j < x[i].length; ++j) {

x[i][j -1] = f(x[i][j]);

}

}

In order to parallelize the code segment the loop requires a transformation of the data, if a transformation cannot be applied then the array analysis will not be able to parallelize this segment of code.

The potential of these two techniques can be maximized if the compiler does optimization inter-procedurally. In the code above, compiler must be able to optimize across the function calls.

Limitations of Array Analysis:

These techniques of analysis can only support a subset of language features of a language like C. The compilers cannot detect dependencies if the data is dynamic and pointer based. This technique focus heavily on parallelizing loops in the source. With a richer language like C++ or Java these analysis techniques might not be that effective.

Commutativity Analysis

This technique is used to automatically identify and exploit commutative operations. The operations are called commutative when they still give the same results even when their order of operations is changed. Commutativity analysis is designed to work with "separable operations,” or operations that can be decomposed into object section and an invocation sections.[6].

Commutativity of operation can be tested by using two conditions. These conditions are as follows:

“The new value of each instance variable of the receiver objects of A and B must be the same after the execution of the object section of A followed by the object section of B as after the execution of the object section of B followed by the object section of A" [6]

“The multiset of operations directly invoked by either A or B under the execution order A followed by B must be the same as the multiset of operations directly invoked by either A or B under the execution order B followed by A” [6]

Consider the code below:

class Node {

private: bool marked;

int: value, sum;

Node*left, *right;

public: void visits(int);

void Node:: visits ( int p) {

sum = sum + p;

if (!marked)

marked = true;

if (left != NULL)

left ->visit (value);

if (right != NULL)

right ->visit (value)

}

}

After analyzing the code , it is observed that there are two recursive calls to the method 'visit' which remain over the same set. There is no dependence on the 'sum'(sum is not read by any other statement), which means that regardless of the execution order, the sum will retain the same value. Instance variables 'sum' marked left and right are all accessed within the method which conforms to the restriction that none of them are directly accessed.

Limitations of Commutativity Analysis:

Since Commutativity analysis is designed to work with "separable operations,” the codes need to be decomposed onto object and invocation sections, the object section performs any access into the receiver. The invocation section makes calls to operations, the receiver is not accessible in this section. This is a limitation on Commutativity analysis.

High level parallelization technique

This approach focuses on building a “portable lightweight parallel run time library”[3]. It uses following steps:

- Scalarization.

- Transformation stage. This transformation stage optimizes loops for single processor machines.

- This stage focuses on the parallelization optimizations.

Inter-procedural Parallelization Technique using Reductions

This approach incorporates Inter-procedural Reduction recognition. This algorithm is simple yet powerful enough to recognize reductions to array regions, even in the presence of arbitrarily complex data dependence.This uses inter-procedural interval analysis to perform data-flow analysis over the entire program with only two passes over the code. This interval-based approach is not only more efficient than inter-procedural iterative data-flow analysis, but it also avoids propagating information along unrealizable paths.

Inter-procedural Parallelization Technique using Reductions produces high precision without un-manageable code expansion.

Limitations

- Smart, intelligent parallel programmer can definitely think from a different perspective about a program algorithm and re- arrange the algorithm to achieve parallelism yielding same results with high performance. A compiler will probably never accomplish this, due to inability of rewriting efficient algorithm.

- Compiler must produce the result with parallel computing same as the results by sequential computing. When there is a risk or possibility that the same results cannot be produced, then the compiler fail to parallelize the program.

- Pointer based dynamic data structure pose limitations on parallelization.

- If the program size is very large, the efficient execution time may not be achieved.

Examples of Auto Parallel Compilers

MIPSpro APO Compiler

The MIPSpro APO integrates automatic parallelization using interprocedural analysis (IPA) with other compiler optimizations such as loop nest optimization (LNO). It supports C++ and has improved runtime and compile-time performance.

SUIF Compiler

SUIF (Stanford University Intermediate Format) is a free infrastructure designed to support collaborative research in optimizing and parallelizing compilers.[11]

Vienna Fortran Compiler

It is a new source-to-source parallelization system for HPF+ (optimized version of HPF), which addresses the requirements of irregular applications.[12]

PLUTO

Pluto is an automatic parallelization tool based on the polyhedral model. The polyhedral model for compiler optimization is a representation for programs that makes it convenient to perform high-level transformations such as loop nest optimizations and loop parallelization.[13]

Par4All

Par4All is an automatic parallelizing and optimizing compiler (workbench) for C and Fortran sequential programs.[10]

Omni OpenMP Compiler

It translates C and Fortran programs with OpenMP pragmas into C code suitable for compiling with a native compiler linked with the Omni OpenMP runtime library.[9]

References

[1] "Comparative Survey Of Approaches To Automatic Parallelization" by Nicholas DiPasquale, Vijay Gehlot, Thomas Way http://www.csc.villanova.edu/~tway/publications/DiPasquale_Masplas05_Paper5.pdf

[2] "Inter-ptocedural Parallelization Analysis: Preliminary Results" by Mary W.Hall, Saman P. Amarasinghe, Brian R.Murphy, Shih-Wei Liao http://www.researchgate.net/publication/237812210_Interprocedural_Parallelization_Analysis_Preliminary_Results

[3] "Automatic Parallelization for Symmetric Shared Memory Multiprocessors," by J.H.Chow, L.E. Lyon, and V.Sarkar, in Proceedings of CASCON : 76 89, Toronto, ON, November 12 14, 1996.

[4] "Toward Efficient and Robust Software Speculative Parallelization in Multiprocessors" by M. Cintra and, D. R. Llanos. In Proceedings of ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, June 2003.

[5] "DoAcross : Beyond vectorization for multiprocessors" by Ron Cytron in Int’l.Conf. on ParallelProcessing, Aug. 1986.

[6] "Commutativity Analysis: A New Analysis Technique for Parallelizing Compilers" by M. Diniz and P. Diniz. In ACM Transactions on Programming Languages and Systems, Vol.19, No. 6, pages 1-47, 1997.

[7] http://en.wikipedia.org/wiki/Automatic_parallelization_tool

[8] http://en.wikipedia.org/wiki/Automatic_parallelization

[9] http://www.hpcs.cs.tsukuba.ac.jp/omni-compiler/

[11] http://suif.stanford.edu/suif/suif.html

[12] http://www.deepdyve.com/lp/ios-press/vfc-the-vienna-fortran-compiler-hVsU0MEwzT