User:Ufmuhamm1: Difference between revisions

| (33 intermediate revisions by 3 users not shown) | |||

| Line 13: | Line 13: | ||

[[Image:kcomp2.jpg|thumb|right|250px|A 'K computer' rack. Each computer rack is equipped with about 100 CPUs]] | [[Image:kcomp2.jpg|thumb|right|250px|A 'K computer' rack. Each computer rack is equipped with about 100 CPUs]] | ||

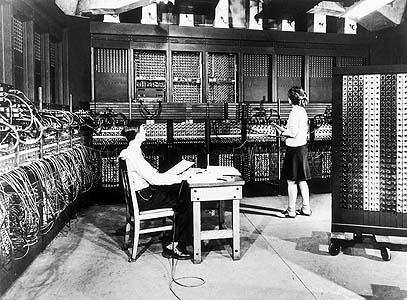

The United States government has played the key role in the development and use of supercomputers, During World War II, the US Army paid for the construction of [http://en.wikipedia.org/wiki/ENIAC Electronic Numerical Integrator And Computer(ENIAC)]in order to speed the calculations of artillery tables. In the 30 years after World War II, the US government used high-performance computers to design nuclear weapons, break codes, and perform other security-related applications. | The United States government has played the key role in the development and use of supercomputers, During World War II, the US Army paid for the construction of [http://en.wikipedia.org/wiki/ENIAC Electronic Numerical Integrator And Computer (ENIAC)]in order to speed the calculations of artillery tables. In the 30 years after World War II, the US government used high-performance computers to design nuclear weapons, break codes, and perform other security-related applications. | ||

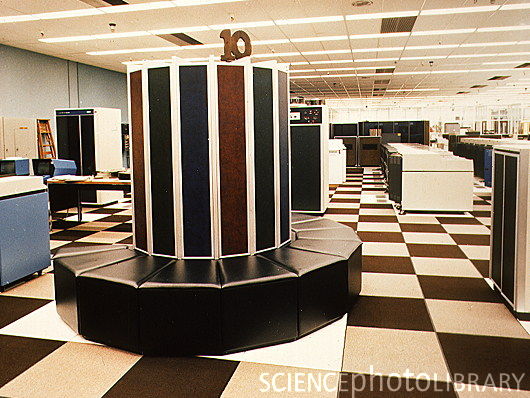

The most powerful supercomputers introduced in the 1960s were designed primarily by Seymour Cray at [http://en.wikipedia.org/wiki/Control_Data_Corporation Control Data Corporation] (CDC). They led the market into the 1970s until Cray left to form his own company,[http://en.wikipedia.org/wiki/Cray_Research Cray Research]. | The most powerful supercomputers introduced in the 1960s were designed primarily by Seymour Cray at [http://en.wikipedia.org/wiki/Control_Data_Corporation Control Data Corporation] (CDC). They led the market into the 1970s until Cray left to form his own company,[http://en.wikipedia.org/wiki/Cray_Research Cray Research]. | ||

| Line 31: | Line 31: | ||

[[Image:Eniac.jpg|thumb|right|400px|ENIAC - The World's first supercomputer]] | [[Image:Eniac.jpg|thumb|right|400px|ENIAC - The World's first supercomputer]] | ||


| Line 43: | Line 43: | ||


=== Mid-1970s Cray's Era <ref>http://www.cray.com/Assets/PDF/about/CrayTimeline.pdf</ref>=== | === Mid-1970s Cray's Era <ref>http://www.cray.com/Assets/PDF/about/CrayTimeline.pdf</ref>=== | ||

| Line 63: | Line 68: | ||

Cray Research merged with SGI (Silicon Graphics, Inc.) in February 1996. In August 1999, SGI created a separate Cray Research business unit to focus exclusively on the unique requirements of high-end supercomputing customers. Assets of this business unit were sold to Tera Computer Company in March 2000; the combined company was renamed Cray Inc. and its ticker symbol was changed to CRAY. Tera had begun software development for its Multithreaded Architecture (MTA) systems in 1988, and hardware design commenced in 1991. The company received its first order for the MTA from the San Diego Supercomputer Center; the multiprocessor system was accepted by the center in 1998 and has since been upgraded to eight processors. The Cray MTA-2 system provides scalable shared memory, in which every processor has equal access to every memory location. This greatly simplifies programming because it eliminates concerns about the layout of memory. | Cray Research merged with SGI (Silicon Graphics, Inc.) in February 1996. In August 1999, SGI created a separate Cray Research business unit to focus exclusively on the unique requirements of high-end supercomputing customers. Assets of this business unit were sold to Tera Computer Company in March 2000; the combined company was renamed Cray Inc. and its ticker symbol was changed to CRAY. Tera had begun software development for its Multithreaded Architecture (MTA) systems in 1988, and hardware design commenced in 1991. The company received its first order for the MTA from the San Diego Supercomputer Center; the multiprocessor system was accepted by the center in 1998 and has since been upgraded to eight processors. The Cray MTA-2 system provides scalable shared memory, in which every processor has equal access to every memory location. This greatly simplifies programming because it eliminates concerns about the layout of memory. | ||

Upon acquiring the Cray Research division of [http://www.sgi.com/ Silicon Graphics] in 2000, the company was renamed to Cray Inc. | Upon acquiring the Cray Research division of [http://www.sgi.com/ Silicon Graphics] in 2000, the company was renamed Cray Inc. Since 2000, Cray has collaborated with companies such as Intel Corporation to bring new products to market, integrating other technology into its designs. It has delivered several supercomputers to the Oak Ridge National Laboratory; the largest, Jaguar, was in 2009 the fastest computer in the world according to some benchmarks. To expand its cluster system offerings, Cray acquired Appro Technologies in 2012. Over the last decade, Cray has gained vendor system share, reaching 9.6% as of November 2013. | ||

=== 1980s Supercomputer History in Japan<ref>http://www.versionone.com/Agile101/Methodologies.asp </ref> === | === 1980s Supercomputer History in Japan<ref>http://www.versionone.com/Agile101/Methodologies.asp </ref> === | ||

| Line 127: | Line 132: | ||

!Vendor | !Vendor | ||

!System Count | !System Count | ||

!System Share(%) | !System Share (%) | ||

!Rmax(GFlops) | !Rmax (GFlops) | ||

!Rpeak(GFlops) | !Rpeak (GFlops) | ||

!Processor cores | !Processor cores | ||

|- | |- | ||

| Line 205: | Line 210: | ||

==== Legend ==== | ==== Legend ==== | ||

* '''Vendor''' – The manufacturer of the platform and hardware. | * '''Vendor''' – The manufacturer of the platform and hardware. | ||

* '''Rmax''' – The highest score measured using the [http://en.wikipedia.org/wiki/LINPACK LINPACK] benchmark suite. This is the number that is used to rank the computers. Measured in quadrillions of floating point operations per second, i.e. Petaflops(Pflops). | * '''Rmax''' – The highest score measured using the [http://en.wikipedia.org/wiki/LINPACK LINPACK] benchmark suite. This is the number that is used to rank the computers. Measured in quadrillions of floating point operations per second, i.e. Petaflops (Pflops). | ||

* '''Rpeak''' – This is the theoretical peak performance of the system. Measured in Pflops. | * '''Rpeak''' – This is the theoretical peak performance of the system. Measured in Pflops. | ||

* '''Processor cores''' – The number of active [http://en.wikipedia.org/wiki/Multi-core processor cores] used. | * '''Processor cores''' – The number of active [http://en.wikipedia.org/wiki/Multi-core processor cores] used. | ||
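The ratio of Rmax to Rpeak is a common way to read the two legend entries together: it shows how much of the theoretical peak a system sustains on LINPACK. The sketch below is illustrative only; the function name and the sample figures are hypothetical and are not taken from the table.

```python
def linpack_efficiency(rmax: float, rpeak: float) -> float:
    """Return Rmax / Rpeak as a fraction.

    Both arguments must be in the same unit (for example, both in Pflops).
    """
    if rpeak <= 0:
        raise ValueError("rpeak must be positive")
    return rmax / rpeak


# Hypothetical system: 8.0 Pflops measured against a 10.0 Pflops peak.
example = linpack_efficiency(8.0, 10.0)
print(f"LINPACK efficiency: {example:.0%}")
```

A value well below 100% is normal, since Rpeak assumes every floating point unit is busy on every cycle.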

===Top 10 supercomputers of today<ref>http://www.top500.org/lists/2012/11/</ref>=== | ===Top 10 supercomputers of today<ref>http://www.top500.org/lists/2012/11/</ref>=== | ||

Below are the Top 10 supercomputers in the World(as of November 2012). An effort has been made to compare the architectural features of these supercomputers. | Below are the Top 10 supercomputers in the World (as of November 2012). An effort has been made to compare the architectural features of these supercomputers. | ||

'''1.Titan - Cray XK7:''' | '''1.Titan - Cray XK7:''' | ||

| Line 251: | Line 256: | ||

*The SuperMUC will have 18,432 Intel Xeon Sandy Bridge-EP processors running in IBM System x iDataPlex servers with a total of 147,456 cores and a peak performance of about 3 petaFLOPS (3 × 10<sup>15</sup> FLOPS). | *The SuperMUC will have 18,432 Intel Xeon Sandy Bridge-EP processors running in IBM System x iDataPlex servers with a total of 147,456 cores and a peak performance of about 3 petaFLOPS (3 × 10<sup>15</sup> FLOPS). | ||

* The main memory will be 288 terabytes(288 × 10<sup>12</sup> bytes) together with 12 petabytes (12 × 10<sup>15</sup> bytes) of hard disk space based on the IBM General Parallel File System(GPFS). | * The main memory will be 288 terabytes (288 × 10<sup>12</sup> bytes) together with 12 petabytes (12 × 10<sup>15</sup> bytes) of hard disk space based on the IBM General Parallel File System (GPFS). | ||
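The SuperMUC figures quoted above can be broken down per processor and per core with simple arithmetic. This is a rough sketch that uses only the numbers stated in the two items; the variable names are illustrative, and decimal prefixes (1 TB = 10^12 bytes) are assumed, as in the text.

```python
# Figures as quoted in the SuperMUC description above.
processors = 18_432
cores = 147_456
peak_flops = 3e15        # "about 3 petaFLOPS"
memory_bytes = 288e12    # 288 terabytes of main memory

cores_per_processor = cores // processors
peak_gflops_per_core = peak_flops / cores / 1e9
memory_gb_per_core = memory_bytes / cores / 1e9

print(f"cores per processor : {cores_per_processor}")
print(f"peak per core       : {peak_gflops_per_core:.1f} GFLOPS")
print(f"memory per core     : {memory_gb_per_core:.2f} GB")
```

Because the peak figure is only given as "about 3 petaFLOPS", the per-core peak is an approximation.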

| Line 298: | Line 303: | ||

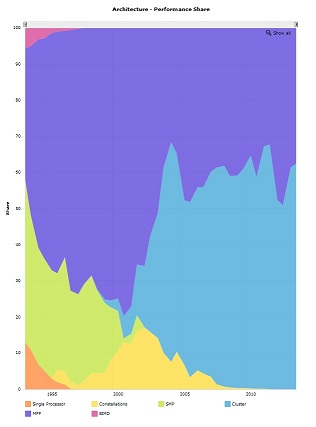

=== Supercomputer Architecture <ref>http://www.top500.org</ref>=== | === Supercomputer Architecture <ref>http://www.top500.org</ref>=== | ||

[[Image:Architecture | [[Image:Architecture share.jpg|right|Architecture Share]] | ||

[[Image:Architecture | [[Image:Architecture performance.jpg|right| Architecture Performance]] | ||

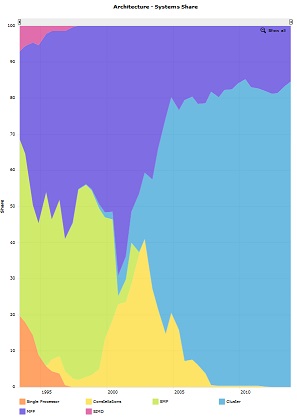

Over the years, we see the changes in supercomputer architecture. Various architectures were developed and abandoned, as computer technology progressed. | Over the years, we see the changes in supercomputer architecture. Various architectures were developed and abandoned, as computer technology progressed. | ||

| Line 343: | Line 346: | ||

b)Distributed memory systems: In this case each CPU has its own associated memory. The CPUs are connected by some network and may exchange data between their respective memories when required. In contrast to shared memory machines the user must be aware of the location of the data in the local memories and will have to move or distribute these data explicitly when needed. Again, distributed memory systems may be either SIMD or MIMD. The first class of SIMD systems mentioned which operate in lock step, all have distributed memories associated to the processors. [http://en.wikipedia.org/wiki/Distributed_memory Distributed-memory] MIMD systems exhibit a large variety in the topology of their connecting network. The details of this topology are largely hidden from the user which is quite helpful with respect to portability of applications. For the distributed-memory systems we will sometimes use DM-SIMD and DM-MIMD to indicate the two subclasses. | b)Distributed memory systems: In this case each CPU has its own associated memory. The CPUs are connected by some network and may exchange data between their respective memories when required. In contrast to shared memory machines the user must be aware of the location of the data in the local memories and will have to move or distribute these data explicitly when needed. Again, distributed memory systems may be either SIMD or MIMD. The first class of SIMD systems mentioned which operate in lock step, all have distributed memories associated to the processors. [http://en.wikipedia.org/wiki/Distributed_memory Distributed-memory] MIMD systems exhibit a large variety in the topology of their connecting network. The details of this topology are largely hidden from the user which is quite helpful with respect to portability of applications. For the distributed-memory systems we will sometimes use DM-SIMD and DM-MIMD to indicate the two subclasses. | ||

As of 2013, Massively Parallel Processor (MPP) is the second most widely deployed architecture for supercomputers, after cluster. The Goodyear MPP was one of the first machines with an MPP architecture; it was built for NASA in 1983 for the analysis of satellite images. An MPP is constructed from many smaller, less complex processing units rather than a few larger CPUs, and the processors operate in either MIMD or SIMD fashion. | |||

Cluster architectures are the most widely deployed because they offer several advantages. The machines cost less, and resources can be added or removed with relative ease to meet changing workloads. | |||
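The explicit data movement that distributed memory systems require can be sketched in a few lines. The example below is a simulation under stated assumptions, not real distributed hardware: Python threads stand in for nodes, queues stand in for the interconnect, and the function names are hypothetical. The discipline it imitates is that a node only works on data that was explicitly sent to it.

```python
import queue
import threading


def node(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Simulated node: it only sees data delivered to its own inbox."""
    local_data = inbox.get()        # explicit receive into "local memory"
    outbox.put(sum(local_data))     # explicit send of the partial result


def distributed_sum(values: list, nodes: int) -> int:
    """Scatter values across simulated nodes, then gather partial sums."""
    inboxes = [queue.Queue() for _ in range(nodes)]
    results = queue.Queue()
    workers = [
        threading.Thread(target=node, args=(inbox, results))
        for inbox in inboxes
    ]
    for worker in workers:
        worker.start()
    # Scatter: the programmer decides which slice of the data each node owns.
    for rank, inbox in enumerate(inboxes):
        inbox.put(values[rank::nodes])
    for worker in workers:
        worker.join()
    # Gather: combine one partial result per node.
    return sum(results.get() for _ in range(nodes))


print(distributed_sum(list(range(1, 101)), 4))
```

On a shared memory machine the scatter and gather steps would be unnecessary, because every processor could read the full list directly.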

==== Massively Distributed Parallel Architecture ==== | ==== Massively Distributed Parallel Architecture ==== | ||

| Line 369: | Line 378: | ||

* '''Commodity Parts'''. This is probably the most important reason. With commodity parts, design and non-recurring manufacturing costs can be spread over a larger number of chips. If a vector processor sells only a few dozen copies, then design costs can easily be the dominant overall cost. | * '''Commodity Parts'''. This is probably the most important reason. With commodity parts, design and non-recurring manufacturing costs can be spread over a larger number of chips. If a vector processor sells only a few dozen copies, then design costs can easily be the dominant overall cost. | ||

* '''Memory Cost'''. The most expensive part of a computer( whether a PC, workstation, or supercomputer) is usually the memory system. Vector processors provide high performance memory systems that sustain very large bandwidths between main memory and the vector registers. To achieve this bandwidth, vector processors rely on high-performance, highly interleaved memory systems. Moreover, for a high performance machine, latency also plays an important role. Therefore, vector supercomputers use the fastest memory technology available. | * '''Memory Cost'''. The most expensive part of a computer (whether a PC, workstation, or supercomputer) is usually the memory system. Vector processors provide high performance memory systems that sustain very large bandwidths between main memory and the vector registers. To achieve this bandwidth, vector processors rely on high-performance, highly interleaved memory systems. Moreover, for a high performance machine, latency also plays an important role. Therefore, vector supercomputers use the fastest memory technology available. | ||

* '''High Bandwidths'''. Consider a 20 GB/s memory system and a typical [http://en.wikipedia.org/wiki/Cmos CMOS] package that allows its pins to operate at 133 MHz. A back-of-the-envelope calculation indicates that 1200 pins would be needed to sustain a peak of 20 GB/s. Such numbers of pins are difficult to implement. In the past, vector manufacturers have employed multi-chip designs. These designs tend to be substantially more expensive than single-chip solutions. | * '''High Bandwidths'''. Consider a 20 GB/s memory system and a typical [http://en.wikipedia.org/wiki/Cmos CMOS] package that allows its pins to operate at 133 MHz. A back-of-the-envelope calculation indicates that 1200 pins would be needed to sustain a peak of 20 GB/s. Such numbers of pins are difficult to implement. In the past, vector manufacturers have employed multi-chip designs. These designs tend to be substantially more expensive than single-chip solutions. | ||

| Line 377: | Line 386: | ||

* '''Difficulty in Achieving Peak Performance''' on vector supercomputers. Despite high performance processors and high bandwidth memory systems, even programs that are highly vectorized fall short of theoretical peak performance. | * '''Difficulty in Achieving Peak Performance''' on vector supercomputers. Despite high performance processors and high bandwidth memory systems, even programs that are highly vectorized fall short of theoretical peak performance. | ||

* ''' | * '''Lack of Architectural Innovation'''. Finally, it is important to note that there have been relatively few architectural innovations since the CRAY-1. The top of the line CRAY T90 still has only 8 vector registers and has a relatively slow scalar microarchitecture when compared to current [http://en.wikipedia.org/wiki/Superscalar superscalar] microprocessors. Meanwhile, superscalar microprocessors have adopted many architectural features to increase performance while still retaining low cost. | ||
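The back-of-the-envelope pin count in the High Bandwidths item can be reproduced as follows. This sketch assumes that each pin transfers one bit per clock cycle and that 1 GB means 10^9 bytes; neither assumption is stated in the text, and the function name is illustrative.

```python
import math


def pins_needed(bandwidth_bytes_per_s: float,
                pin_rate_hz: float,
                bits_per_pin_per_cycle: int = 1) -> int:
    """Minimum number of data pins needed to sustain the given bandwidth."""
    bits_per_s = bandwidth_bytes_per_s * 8
    return math.ceil(bits_per_s / (pin_rate_hz * bits_per_pin_per_cycle))


# 20 GB/s memory system, pins clocked at 133 MHz.
print(pins_needed(20e9, 133e6))
```

The result is just over 1,200 data pins, which matches the rounded figure quoted above; signalling that moves two bits per pin per cycle would halve it.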

Japan's Fujitsu announced its decision to shift to scalar processors in the year 2009. [http://www.rit.edu/news/?v=47077 Read article]. | Japan's Fujitsu announced its decision to shift to scalar processors in the year 2009. [http://www.rit.edu/news/?v=47077 Read article]. | ||

| Line 383: | Line 392: | ||

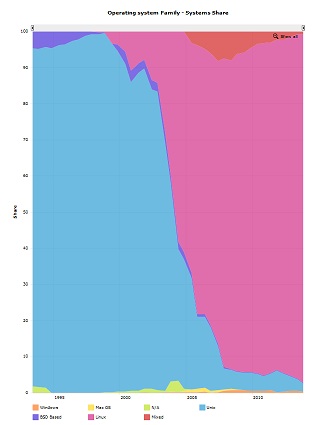

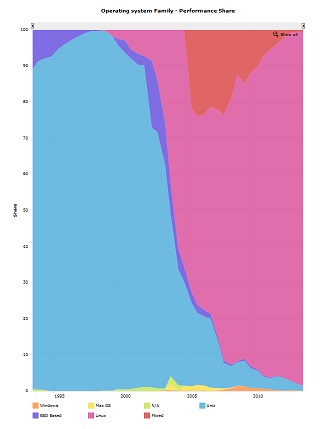

=== Supercomputer Operating Systems <ref>http://www.top500.org</ref>=== | === Supercomputer Operating Systems <ref>http://www.top500.org</ref>=== | ||

[[Image: | [[Image:OS share.jpg|right]] | ||

[[Image: | [[Image:OS performance.jpg|right]] | ||

Supercomputers use a variety of operating systems. The operating system of a specific supercomputer depends on its vendor. Until the early-to-mid-1980s, supercomputers usually sacrificed instruction-set compatibility and code portability for performance (processing and memory access speed). For the most part, supercomputers at this time (unlike high-end mainframes) had vastly different operating systems. The Cray-1 alone had at least six different proprietary OSs largely unknown to the general computing community. In a similar manner, there existed different and incompatible vectorizing and parallelizing compilers for Fortran. This trend would have continued with the ETA-10 were it not for the initial instruction set compatibility between the Cray-1 and the Cray X-MP, and the adoption of operating systems such as Cray's Unicos, or Linux. | |||

Before the beginning of the 21st century, almost all the OSs fell into the Unix family [http://top500.org/], while after the year 2000 more and more Linux versions were adopted for supercomputers. In the November 2009 list, 446 of the top 500 supercomputers were using their own distribution of Linux, and 18 of the top 20 supercomputers in the world were running some form of Linux. | |||

As of 2013, the Linux operating system family is still completely dominant on supercomputers. Several different Linux distributions are deployed, including CentOS, Red Hat Enterprise Linux and custom distros. Unix still has a small presence, while BSD and Windows systems are virtually extinct. The customizability of Linux, coupled with its performance and cost, makes it the ideal replacement for Unix as the dominant operating system for supercomputers. | |||

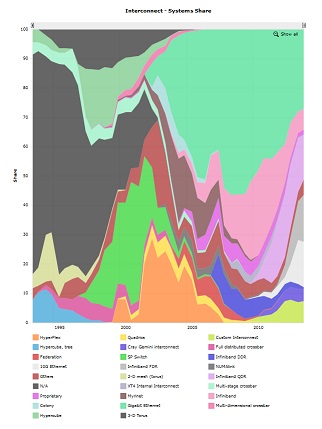

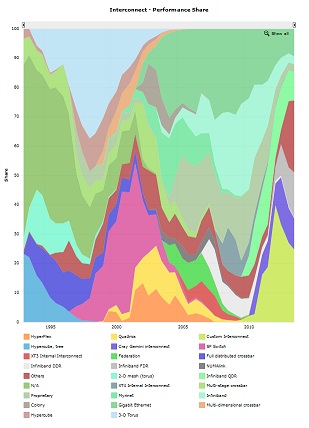

===Supercomputer Interconnects<ref>http://compnetworking.about.com/library/weekly/aa051902d.htm</ref>=== | ===Supercomputer Interconnects<ref>http://compnetworking.about.com/library/weekly/aa051902d.htm</ref>=== | ||

[[Image: | [[Image:Interconnect share.jpg|right| Interconnect Share]] | ||

[[Image: | [[Image:Interconnect performance.jpg|right| Interconnect Performance]] | ||

In order for a large number of processors to work together, supercomputers utilize specialized network interfaces. These interconnects support high bandwidth and very low latency communication. | In order for a large number of processors to work together, supercomputers utilize specialized network interfaces. These interconnects support high bandwidth and very low latency communication. | ||

| Line 430: | Line 411: | ||

Supercomputer nodes fit together into a network topology. Modern supercomputers have utilized several different specialized network topologies including hypercube, two-dimensional and three-dimensional mesh, and torus. Supercomputer network topologies can be either static (fixed) or dynamic (through the use of switches). | Supercomputer nodes fit together into a network topology. Modern supercomputers have utilized several different specialized network topologies including hypercube, two-dimensional and three-dimensional mesh, and torus. Supercomputer network topologies can be either static (fixed) or dynamic (through the use of switches). | ||
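In the static topologies named above, each node's neighbors follow directly from its address. The sketch below is illustrative rather than a description of any particular machine: in a hypercube, neighbors differ in exactly one address bit, and in a torus, neighbors are one step away along each axis with wrap-around at the edges. The function names are hypothetical.

```python
def hypercube_neighbors(node: int, dimensions: int) -> list:
    """Neighbors of a node in a hypercube: flip one address bit at a time."""
    return [node ^ (1 << bit) for bit in range(dimensions)]


def torus_neighbors(coord: tuple, shape: tuple) -> list:
    """Neighbors of a node in an n-dimensional torus (mesh with wrap-around)."""
    neighbors = []
    for axis, size in enumerate(shape):
        for step in (-1, 1):
            moved = list(coord)
            moved[axis] = (coord[axis] + step) % size  # wrap at the edges
            neighbors.append(tuple(moved))
    return neighbors


# Node 0 of a 3-dimensional hypercube (8 nodes).
print(hypercube_neighbors(0, 3))
# Corner node of a 4 x 4 x 4 three-dimensional torus.
print(torus_neighbors((0, 0, 0), (4, 4, 4)))
```

A plain mesh uses the same coordinates without the modulo step, so its edge and corner nodes have fewer neighbors.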

Supercomputer interconnects involve large quantities of network cabling. These cables can be very difficult to install as they often must fit within small spaces. Supercomputers do not utilize wireless networking internally as the bandwidth and latency properties of wireless are not suitable for high-performance communications. | Supercomputer interconnects involve large quantities of network cabling. These cables can be very difficult to install as they often must fit within small spaces. Supercomputers do not utilize wireless networking internally as the bandwidth and latency properties of wireless are not suitable for high-performance communications. | ||

The standard InfiniBand interconnect is virtually extinct in the current supercomputer market, along with InfiniBand QDR. | |||

There is an increasing shift to custom interconnect technologies, 10G Ethernet and InfiniBand FDR. 10G Ethernet differs from traditional Ethernet in both technical implementation and achievable speed: 10 Gbit/s, which eclipses traditional 1 Gbit/s Ethernet. InfiniBand FDR (fourteen data rate) has a throughput of 13.64 Gbit/s per lane and is in turn eclipsed by InfiniBand EDR (enhanced data rate), which is not yet in common use. | |||
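The 13.64 Gbit/s figure for FDR can be derived from the per-lane signalling rate and the line encoding. The values used below (14.0625 Gbit/s signalling and 64b/66b encoding) come from the InfiniBand specification rather than from this page, and the function name is illustrative.

```python
def effective_rate_gbps(signalling_gbps: float,
                        payload_bits: int,
                        line_bits: int,
                        lanes: int = 1) -> float:
    """Usable data rate after line-encoding overhead, in Gbit/s."""
    return signalling_gbps * payload_bits / line_bits * lanes


fdr_lane = effective_rate_gbps(14.0625, 64, 66)
fdr_4x = effective_rate_gbps(14.0625, 64, 66, lanes=4)

print(f"FDR, one lane : {fdr_lane:.2f} Gbit/s")
print(f"FDR, 4x link  : {fdr_4x:.2f} Gbit/s")
```

The per-lane figure is the one quoted above; links are commonly built from four lanes, which is why an FDR link is usually described as roughly 56 Gbit/s before encoding overhead.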

== Supercomputing Applications == | == Supercomputing Applications == | ||

| Line 514: | Line 423: | ||

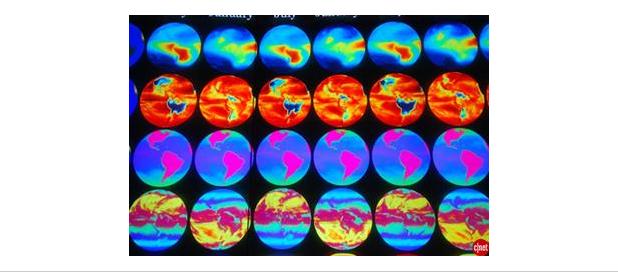

Supercomputers are used primarily for number crunching: enormous, calculation-intensive tasks on massive datasets that must be resolved in a practical amount of time. For all intents and purposes, such tasks would take general-purpose computers, even in large numbers, longer than their own service life or an average human lifetime to complete. | Supercomputers are used primarily for number crunching: enormous, calculation-intensive tasks on massive datasets that must be resolved in a practical amount of time. For all intents and purposes, such tasks would take general-purpose computers, even in large numbers, longer than their own service life or an average human lifetime to complete. | ||

The types of tasks that supercomputers are built to tackle include: | The types of tasks that supercomputers are built to tackle include: | ||

Physics | Physics (e.g. quantum mechanics, cosmology, nuclear research), weather forecasting, climate research, chemistry, biology, industry (e.g. simulating airplane dynamics), engineering design (e.g. structural simulations, bridges, earthquake tolerance) and earth sciences. | ||

Engineering Design | |||

Earth Sciences | |||

The main users of these supercomputers include: universities, military agencies, NASA, scientific research laboratories and major corporations. | The main users of these supercomputers include: universities, military agencies, NASA, scientific research laboratories and major corporations. | ||

== Supercomputers of the Future == | == Supercomputers of the Future == | ||

Latest revision as of 00:06, 30 April 2014

Introduction<ref>http://en.wikipedia.org/wiki/Supercomputer</ref>

A supercomputer is generally considered to be the front-line “cutting-edge” in terms of processing capacity (number crunching) and computational speed at the time it is built, but with the pace of development, yesterday's supercomputers have become regular servers today. A state-of-the-art supercomputer is an extremely powerful computer capable of manipulating massive amounts of data in a relatively short amount of time. Supercomputers are very expensive and are deployed for specialized scientific and engineering applications that must handle very large databases or do a great amount of computation -- among them are meteorology, animated graphics, fluid dynamic calculations, nuclear energy research, weapons simulation and petroleum exploration.

Supercomputer Evolution <ref>http://www.bukisa.com/articles/13059_supercomputer-evolution</ref>

The United States government has played a key role in the development and use of supercomputers. During World War II, the US Army paid for the construction of the Electronic Numerical Integrator And Computer (ENIAC) in order to speed the calculation of artillery tables. In the 30 years after World War II, the US government used high-performance computers to design nuclear weapons, break codes, and perform other security-related applications.

The most powerful supercomputers introduced in the 1960s were designed primarily by Seymour Cray at Control Data Corporation (CDC). They led the market into the 1970s until Cray left to form his own company, Cray Research.

With Moore’s Law still holding after more than thirty years, the rate at which future mass-market technologies overtake today’s cutting-edge super-duper wonders continues to accelerate. The effects of this are manifest in the abrupt about-face we have witnessed in the underlying philosophy of building supercomputers.

From the 1970s through the mid-1980s, supercomputers were built using specialized custom vector processors working in parallel. Typically, this meant between four and sixteen CPUs. The next phase of supercomputer evolution saw the introduction of massively parallel processing and a drift away from vector-only processors. However, the processors used in this generation of supercomputers were still primarily highly specialized, purpose-specific, custom-designed and custom-fabricated units. Nowadays, with the high level of integration available, "off-the-shelf" multi-core processors can be used to build supercomputers (e.g. IBM Roadrunner).

As of November 2011, the K computer was the world's fastest supercomputer at 10.51 petaFLOPS. K was built by the Japanese computer firm Fujitsu and is installed at the RIKEN Advanced Institute for Computational Science in Kobe. It consists of about 88,000 SPARC64 VIIIfx CPUs and spans 864 server racks. In November 2011, its power consumption was reported to be 12659.89 kW<ref>http://www.top500.org/list/2011/11/100</ref>. An earlier press report, written when K consisted of 672 cabinets filled with circuit boards and its creators planned to increase that to 800, described its performance as equivalent to one million linked desktop computers and greater than that of its five closest competitors combined. The same report stated that K uses enough energy to power nearly 10,000 homes and costs $10 million (£6.2 million) annually to run<ref>http://www.telegraph.co.uk/technology/news/8586655/Japanese-supercomputer-K-is-worlds-fastest.html</ref>.
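The performance and power figures quoted for K give a rough measure of its energy efficiency. The sketch below simply divides the two numbers from the paragraph above; the variable names are illustrative, and it assumes both figures describe the same benchmark run.

```python
performance_flops = 10.51e15   # 10.51 petaFLOPS, as quoted above
power_watts = 12659.89e3       # 12659.89 kW, as quoted above

flops_per_watt = performance_flops / power_watts
print(f"{flops_per_watt / 1e6:.0f} MFLOPS per watt")
```

This works out to roughly 830 MFLOPS per watt.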

Some of the companies which build supercomputers are Silicon Graphics, Intel, IBM, Cray, Orion, Aspen Systems etc.

Here is a list of the top 10 supercomputers [1] as of November 2012.

1940s First Supercomputer (ENIAC)

The Electronic Numerical Integrator And Computer (ENIAC) was completed in 1945 and publicly unveiled in 1946, and it took the world by storm. It was built to solve very complex problems that would otherwise take several months or years to work out. Computers today serve many everyday purposes, but ENIAC was built with a single purpose: to solve scientific problems for the entire nation. The military was the first to use it, benefiting the country's defenses. Even today, much new supercomputer technology is designed for the military first and is then redesigned for civilian uses.

The system was used to compute the firing tables for White Sands Missile Range from 1949 until it was replaced in 1957. This allowed the military to synchronize the liftoff of missiles should it be deemed necessary. It was an important milestone in United States military history, at least on a technological level.

ENIAC was a huge machine that used nineteen thousand vacuum tubes and occupied a massive fifteen thousand square feet of floor space. It weighed nearly thirty tons, making it one of the largest machines of the time. It was considered the greatest scientific invention up to that point because it took only 2 hours of computation time to do what normally took a team of one hundred engineers a year. That made it almost a miracle in some people's eyes, and people got excited about this emerging technology. ENIAC could perform five thousand additions per second. Though that seemed very fast at the time, it is extremely slow by today's standards: most computers today perform billions of additions per second.

The other nine units in ENIAC were the Initiating Unit (which started and stopped the machine), the Cycling Unit (used for synchronizing the other units), the Master Programmer (which controlled "loop" sequencing), the Reader (which controlled an IBM punched card reader), the Printer (which controlled an IBM punched card punch), the Constant Transmitter, and three Function Tables.

The ENIAC function table was a look-up table that was programmed by hand: operators turned dials to set the digits stored at each position of the table.

A number of improvements were also made to ENIAC after 1948, including a primitive read-only stored-program mechanism that used the function tables as program ROM.

Mid-1960s Control Data Corporation Machines

Control Data Corporation (CDC), a supercomputer firm founded in 1957, was a leader in the industry during the 1960s. The company was created when former members of Engineering Research Associates (ERA) left their parent company Sperry, which was known for the UNIVAC system. ERA's main source of contracts had been the US Navy, for which it developed code-breaking technology and memory solutions. When the ERA members left to form CDC, they initially started by selling these types of memory technologies.

A year after CDC was created, it was joined by Seymour Cray, who later went on to found the dominant Cray Research. His first design at CDC was a 6-bit transistor machine called the CDC Little Character, which served as a base model for later large-scale systems. Cray then set the goal of designing a machine that would be the fastest in the world and 50 times faster than CDC's previous product, the CDC 1604. It was at this time that Cray and a team of engineers formed the Chippewa Falls lab in Cray's hometown in order to focus on this goal. Using silicon rather than germanium transistors, they developed and released the CDC 6600, which became the dominant computer on the market. It had 10 times the performance of any other machine at the time and stood up to IBM's efforts to match it. Specifically, IBM funded the development of the ACS-1, but it failed to meet compatibility specifications with the company's flagship System/360 line. IBM later promised a 360/92 as a follow-up to its popular 360/91, saying that it could outperform the 6600, but was sued for antitrust violations since no such device existed.

Mid-1970s Cray's Era <ref>http://www.cray.com/Assets/PDF/about/CrayTimeline.pdf</ref>

Cray Inc. has a history that extends back to 1972, when the legendary Seymour Cray, the "father of supercomputing," founded Cray Research. R&D and manufacturing were based in his hometown of Chippewa Falls, Wisconsin and business headquarters were in Minneapolis, Minnesota.

The first Cray-1 system was installed at Los Alamos National Laboratory in 1976 for $8.8 million. It boasted a world-record speed of 160 million floating-point operations per second (160 megaflops) and an 8 megabyte (1 million word) main memory. In order to increase the speed of the system, the Cray-1 had a unique "C" shape, which allowed the integrated circuits to be placed closer together so that no wire in the system was more than four feet long. To handle the intense heat generated by the computer, Cray developed an innovative refrigeration system using Freon.

In order to concentrate more on design, Cray left the CEO position in 1980 and became an independent contractor. While he worked on the follow-on to the Cray-1, another group within the company developed the first multiprocessor supercomputer, the Cray X-MP, which was introduced in 1982. The Cray-2 system made its debut in 1985, providing a tenfold increase in performance over the Cray-1. In 1988, the Cray Y-MP was introduced, the world's first supercomputer to sustain over 1 gigaflop on many applications. Multiple 333 MFLOPS processors gave the system speeds of up to 2.3 gigaflops.

Always a visionary, Seymour Cray had been exploring the use of gallium arsenide in creating a semiconductor faster than silicon. However, the costs and complexities of this material made it difficult for the company to support both the Cray-3 and the Cray C90 development efforts. In 1989, Cray Research spun off the Cray-3 project into a separate company, Cray Computer Corporation, headed by Seymour Cray and based in Colorado Springs, Colorado. Tragically, Seymour Cray died in September 1996 at the age of 71.

The 1990s brought a number of transformations to Cray Research. The company continued its leadership in providing the most powerful supercomputers for production applications. The Cray C90 featured a new central processor which produced a performance of 1 gigaflop. Using 16 of these powerful processors and 256 million words of central memory, the system boasted of amazing total performance. The company also produced its first "mini-supercomputer," the Cray XMS system, followed by the Cray Y-MP EL series and the subsequent Cray J90. In 1993, it offered the first massively parallel processing (MPP) system, the Cray T3D supercomputer, and quickly captured MPP market leadership from early MPP companies such as Thinking Machines and MasPar. The Cray T3D proved to be exceptionally robust, reliable, sharable and easy-to-administer, compared with competing MPP systems.

Its successor, the Cray T3E supercomputer, has been the world's best-selling MPP system. The Cray T3E-1200E system was the first supercomputer to sustain one teraflop (1 trillion calculations per second) on a real-world application. In November 1998, a joint scientific team from Oak Ridge National Laboratory, the National Energy Research Scientific Computing Center (NERSC), Pittsburgh Supercomputing Center and the University of Bristol (UK) ran a magnetism application at a sustained speed of 1.02 teraflops. In another technological landmark, the Cray T90 became the world's first wireless supercomputer when it was released in 1994. The Cray J90 series, released during the same year, has become the world's most popular supercomputer, with over 400 systems sold.

Cray Research merged with SGI (Silicon Graphics, Inc.) in February 1996. In August 1999, SGI created a separate Cray Research business unit to focus completely and exclusively on the unique requirements of high-end supercomputing customers. Assets of this business unit were sold to Tera Computer Company in March 2000, after which the company was renamed Cray Inc. and the ticker symbol was changed to CRAY. Tera had begun software development for its Multithreaded Architecture (MTA) systems in 1988, and hardware design commenced in 1991. The Cray MTA-2 system provides scalable shared memory, in which every processor has equal access to every memory location; this greatly simplifies programming because it eliminates concerns about the layout of memory. The company received its first order for the MTA from the San Diego Supercomputer Center. The multiprocessor system was accepted by the center in 1998, and has since been upgraded to eight processors.

Upon acquiring the Cray Research division of Silicon Graphics in 2000, the company was renamed to Cray Inc. Since 2000, Cray has collaborated with companies such as Intel Corporation to push forward new products, integrating other technology into their designs. They have delivered several supercomputers to the Oak Ridge National Laboratory, the largest in 2009 called Jaguar, which was the fastest computer in the world at the time according to some benchmarks. In an effort to expand the company's cluster system offerings, Cray acquired Appro Technologies in 2012. Throughout the last decade, Cray has gained vendor systems share and has 9.6% share as of November 2013.

1980s Supercomputer History in Japan<ref>http://www.versionone.com/Agile101/Methodologies.asp </ref>

With the 1970s introduction of the Cray vector architectures and a good programming model based on parallelizing, vectorizing Fortran compilers, the Japanese computer companies saw a clear path forward.<ref>http://research.microsoft.com/en-us/um/people/gbell/supers/supercomputing-a_brief_history_1965_2002.htm</ref> The Fujitsu F230-75 APU was produced in 1977 and is considered to be the first supercomputer prototype developed in Japan.<ref>http://www.fujitsu.com/global/services/solutions/tc/supercomputer/history/</ref> However, Fujitsu's VP-200 and Hitachi's S-810 were the first officially announced machines. NEC announced its SX series slightly later.

The last decade has been something of a surprise. About three generations of machines have been produced by each of the domestic manufacturers. During the last ten years about 300 supercomputer systems have been shipped and installed in Japan, and a whole infrastructure of supercomputing has been established. All major universities and many of the large industrial companies and research centers have supercomputers.

Fujitsu's VP and VPP series <ref>http://www.netlib.org/benchmark/top500/reports/report94/Japan/node5.html</ref>

In 1977 Fujitsu produced its first supercomputer prototype, the F230-75 APU, which was a pipelined vector processor added to a scalar processor. This attached processor was installed at the Japan Atomic Energy Research Institute (JAERI) and the National Aerospace Laboratory (NAL).

In 1983 the company came out with the VP-200 and VP-100 systems. In 1986 the VP-400 was released with twice as many pipelines as the VP-200, and during mid-1987 the whole family became the E-series with the addition of an extra (multiply-add) pipelined floating-point unit that increased the performance potential by 50%. With the flexible range of systems in this generation (VP-30E to VP-400E), good marketing and a broad range of applications, Fujitsu became the largest domestic supplier with over 80 systems installed, many of which are named in the TOP500.

Available since 1990, the VP-2000 family can offer a peak performance of 5 Gflop/s due to a vector cycle time of 3.2 ns. The family was initially announced with four vector performance levels (model 2100, 2200, 2400, and 2600) where each level could have either one of two scalar processors, but the VP-2400/40 doubled this limit offering a peak vector performance similar to the VP-2600. Most of these models are now represented in the Japanese TOP500.

Previous machines were heavily criticized for their lack of memory throughput. The VP-400 series had only one load/store path to memory that peaked at 4.57 GB/s. This was improved in the VP-2000 series by doubling the paths so that each pipeline set can do two load/store operations per cycle, giving a total transfer rate of 20 GB/s. Fujitsu recently decided to use the label VPX-2x0 for the VP-2x00 systems adapted to their Unix system. Keio University now runs such a system.

In 1993 Fujitsu surprised the world by announcing a Vector Parallel Processor (VPP) series designed to reach performance in the range of hundreds of Gflop/s. At the core of the system is a combined Ga-As/Bi-CMOS processor, based largely on the original design of the VP-200. The gate delay of the processor chips was made as low as 60 ps in the Ga-As chips by using the most advanced hardware technology available. The resulting cycle time was 9.5 ns. The processor has four independent pipelines, each capable of executing two multiply-add instructions in parallel, resulting in a peak speed of 1.7 Gflop/s per processor. Each processor board is equipped with 256 megabytes of central memory.

The most amazing part of the VPP-500 is the capability to interconnect up to 222 processors via a cross-bar network with two independent (read/write) connections, each operating at 400 MB/s. The total memory is addressed via virtual shared memory primitives. The system is meant to be front-ended by a VP-2x00 system that handles input/output and permanent file store, and job queue logistics.

As mentioned in the introduction, an early version of this system, called the Numerical Wind Tunnel, was developed together with NAL. This early version of the VPP-500 (with 140 processors) was, at the time of the cited TOP500 report, the fastest supercomputer in the world, standing at the top of the list with a value twice that of the TMC CM-5/1024 installed at Los Alamos.

Hitachi's Supercomputers

Hitachi has been producing supercomputers since 1983 but differs from other manufacturers by not exporting them. For this reason, their supercomputers are less well known in the West. After having gone through two generations of supercomputers, the S-810 series started in 1983 and the S-820 series in 1988, Hitachi leapfrogged NEC in 1992 by announcing the most powerful vector supercomputer ever. The top S-820 model consisted of one processor operating at 4 ns and contained four vector pipelines and two independent floating-point units. This corresponded to a peak performance of 2 Gflop/s. Hitachi put great emphasis on a fast memory, although this meant limiting its size to a maximum of 512 MB. The memory bandwidth of 2 words per pipe per vector cycle, giving a peak rate of 16 GB/s, was a respectable achievement, but it was not enough to keep all functional units busy.

The S-3800 was announced in 1993 and is comparable to NEC's SX-3R in its features. It has up to four scalar processors with a vector processing unit each. These vector units have in turn up to four independent pipelines and two floating-point units that can each perform a multiply/add operation per cycle. With a cycle time of 2.0 ns, the whole system achieves a peak performance level of 32 Gflop/s.

The S-3600 systems can be seen as the design of the S-820 recast in more modern technology. The system consists of a single scalar processor with an attached vector processor. The 4 models in the range correspond to a successive reduction of the number of pipelines and floating-point units installed.

NEC Supercomputers

In 1984 NEC announced the SX-1 and SX-2 and started delivery in 1985. The first two SX-2 systems were domestic deliveries to Osaka University and the Institute for Computational Fluid Dynamics (ICFD). The SX-2 had multiple pipelines with one set of add and multiply floating-point units each. It had a cycle time of 6 nanoseconds, so each pipelined floating-point unit could peak at 167 Mflop/s. With four pipelines per unit and two floating-point units, the peak performance was about 1.3 Gflop/s. Due to limited memory bandwidth and other issues, the performance in benchmark tests was less than half the peak value. The SX-1 had a slightly longer cycle time than the SX-2 (7 ns) and had only half the number of pipelines. Its maximum execution rate was 570 Mflop/s.
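
The peak figures quoted in this section all follow from the same arithmetic: the number of floating-point results a machine can produce per clock cycle, divided by the cycle time. The following is a minimal sketch of that calculation, not a vendor formula; the function name and its parameters are hypothetical, and the pipeline and unit counts are the ones stated in the text above.

```python
def peak_gflops(cycle_time_ns, pipelines, fp_units,
                flops_per_unit=1, processors=1):
    """Theoretical peak in Gflop/s.

    One result per nanosecond equals 1 Gflop/s, so the peak is simply
    (results per cycle) / (cycle time in ns).
    """
    results_per_cycle = processors * pipelines * fp_units * flops_per_unit
    return results_per_cycle / cycle_time_ns


# NEC SX-2: 6 ns cycle, four pipelines, two floating-point units
print(round(peak_gflops(6.0, 4, 2), 2))      # about 1.33 Gflop/s
# NEC SX-1: 7 ns cycle, half the pipelines of the SX-2
print(round(peak_gflops(7.0, 2, 2), 2))      # about 0.57 Gflop/s
# Fujitsu VPP-500 processor: 9.5 ns, four pipelines, two multiply-adds each
print(round(peak_gflops(9.5, 4, 2, flops_per_unit=2), 2))
# Hitachi S-3800: 2.0 ns, four processors, four pipelines, two multiply/add units
print(round(peak_gflops(2.0, 4, 2, flops_per_unit=2, processors=4), 2))
```

A multiply-add unit is counted here as two floating-point operations per cycle, which is the assumption that reproduces the 1.7 Gflop/s and 32 Gflop/s figures given for the VPP-500 and S-3800.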

At the end of 1987, NEC improved its supercomputer family with the introduction of A-series which gave improvements to the memory and I/O bandwidth. The top model, the SX-2A, had the same theoretical peak performance as the SX-2. Several low-range models were also announced but today none of them qualify for the TOP500.

In 1989 NEC announced a rather aggressive new model, the SX-3, with several important changes. The vector cycle time was brought down to 2.9 ns and the number of pipelines was doubled, but most significantly NEC added multiprocessing capability to its new series. The SX-3 contained four independent arithmetic processors, each with a scalar and a vector processing unit, and NEC increased peak performance by more than one order of magnitude, to 22 Gflop/s from 1.33 Gflop/s on the SX-2A. The combination of these features made the SX-3 the most powerful vector processor in the world. The total memory bandwidth was subdivided into two halves, each of which featured two vector load paths and one vector store path per pipeline set, as well as one scalar load and one scalar store path. This gave a total memory bandwidth to the vector units of about 66 GB/s. Like its predecessors, the SX-3 was unable to offer the memory bandwidth needed to sustain peak performance unless most operands were contained in the vector registers.

In 1992 NEC announced the SX-3R with a couple of improvements compared to the first version. The clock cycle time was further reduced to 2.5 ns, so that the peak performance increased to 6.4 Gflop/s per processor.

Mid-1990s Rising of IBM

In the early 1950s, IBM built their first scientific computer, the IBM 701. The IBM 704 and other high-end systems appeared in the 1950s and 1960s, but by today's standards, these early machines were little more than oversized calculators. After going through a rough patch, IBM re-emerged as a leader in supercomputing research and development in the mid-1990s, creating several systems for the U.S. Government's Accelerated Strategic Computing Initiative (ASCI). These computers boast approximately 100 times as much computational power as supercomputers of just ten years ago.

Sequoia is a petascale Blue Gene/Q supercomputer constructed by IBM for the National Nuclear Security Administration as part of the Advanced Simulation and Computing Program (ASC). It was scheduled for delivery to the Lawrence Livermore National Laboratory in 2011 and full deployment in 2012.

Sequoia was revealed in February 2009; the targeted performance of 20 petaflops was more than the combined performance of the top 500 supercomputers in the world at that time and about 20 times faster than Roadrunner, the reigning champion of the time. The target was also twice as fast as the K computer, the record holder when this was written, and twice the intended future performance of Pleiades.

IBM also built a smaller prototype called "Dawn," capable of 500 teraflops and using the Blue Gene/P design, to evaluate the Sequoia design. This system was delivered in April 2009 and entered the Top500 list at 9th place in June 2009.

Supercomputer speeds are advancing rapidly as manufacturers latch on to new techniques and cheaper prices for computer chips. The first machine to break the teraflop barrier - a trillion calculations per second - was only built in 1996. Two years ago a $59m machine from Sun Microsystems, called Constellation, attempted to take the crown of world's fastest with operating speeds of 421 teraflops. Just two years later, Sequoia was able to achieve nearly 50 times the computing power.

Current Top Supercomputers<ref>http://www.top500.org</ref>

Comparison of Top Supercomputer Vendors In The World (November 2012)<ref>http://www.top500.org/statistics/list/</ref>

| Vendor | System Count | System Share (%) | Rmax (GFlops) | Rpeak (GFlops) | Processor cores |
|---|---|---|---|---|---|
| IBM | 193 | 38.6 | 66216230 | 89070758 | 6769140 |
| Hewlett-Packard | 141 | 29.2 | 18133778 | 32189810 | 2300904 |
| Cray Inc. | 31 | 6.2 | 28189811 | 40380860 | 1908880 |
| Appro | 24 | 4.8 | 5105350 | 6934803 | 373200 |
| SGI | 19 | 3.8 | 5137603 | 6449416 | 434406 |
| Appro International | 13 | 2.6 | 2371260 | 3122119.2 | 219648 |
| Bull | 18 | 3.6 | 6113556 | 7585005 | 467916 |
| Dell | 11 | 2.2 | 3990399 | 5645902 | 332618 |
| Fujitsu | 10 | 2 | 13760040 | 14866751 | 921048 |
| Oracle | 6 | 1.2 | 1424410 | 1700533 | 158880 |

Legend

- Vendor – The manufacturer of the platform and hardware.

- Rmax – The highest score measured using the LINPACK benchmark suite. This is the number that is used to rank the computers. In the table above it is given in GFlops (billions of floating-point operations per second); 1,000,000 GFlops equal 1 petaflops (Pflops).

- Rpeak – The theoretical peak performance of the system, given in the same units as Rmax.

- Processor cores – The number of active processor cores used.
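
The two performance columns are often combined into a single efficiency figure, the fraction of the theoretical peak that LINPACK actually reaches. The sketch below computes it for a few rows copied from the table above; the function names are hypothetical and the snippet is only an illustration of the ratio, not part of the TOP500 methodology.

```python
# (vendor, Rmax in GFlops, Rpeak in GFlops), copied from the vendor table
ROWS = [
    ("IBM", 66216230, 89070758),
    ("Hewlett-Packard", 18133778, 32189810),
    ("Cray Inc.", 28189811, 40380860),
    ("Fujitsu", 13760040, 14866751),
]


def efficiency(rmax, rpeak):
    """Fraction of theoretical peak achieved on LINPACK (Rmax / Rpeak)."""
    return rmax / rpeak


def gflops_to_pflops(gflops):
    """Convert GFlops to Pflops (1 Pflops = 1,000,000 GFlops)."""
    return gflops / 1e6


for vendor, rmax, rpeak in ROWS:
    print(f"{vendor:16s} {gflops_to_pflops(rmax):6.1f} Pflops "
          f"at {efficiency(rmax, rpeak):.0%} of peak")
```

Read this way, the aggregated Fujitsu systems run LINPACK much closer to their theoretical peak than the aggregated Hewlett-Packard systems do, even though the latter are far more numerous.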

Top 10 supercomputers of today<ref>http://www.top500.org/lists/2012/11/</ref>

Below are the Top 10 supercomputers in the World (as of November 2012). An effort has been made to compare the architectural features of these supercomputers.

1. Titan - Cray XK7:

- Open for science group

- Titan pairs AMD Opteron CPUs with Nvidia Tesla GPUs to maintain energy efficiency while providing a large increase in computational power over Jaguar.

2. Sequoia - BlueGene/Q:

- 98,304 computer nodes feature 1.6 million cores with 1GB of RAM per core – 1.6 petabytes of RAM total

- Parallel design is based on IBM’s 18-core PowerPC A2 processor

3. K computer:

- SPARC64 VIIIfx 2.0GHz, Tofu interconnect

- The K computer is based on a distributed memory architecture with over 80,000 compute nodes.

- The K computer comprises 88,128 2.0 GHz 8-core SPARC64 VIIIfx processors contained in 864 cabinets, for a total of 705,024 cores, manufactured by Fujitsu with 45 nm CMOS technology.

4. Mira:

- BlueGene/Q, Power BQC 16C 1.60GHz, Custom

- It has a performance of 8.16 petaflops and consumes 3.9 MW in power.

- Mira will be used for scientific research, including studies in the fields of material science, climatology, seismology, and computational chemistry

5. JUQUEEN:

- BlueGene/Q, Power BQC 16C 1.600GHz, Custom Interconnect

- Main memory is 448 TB and overall peak performance is 5.9 Petaflops

- 28 racks (7 rows of 4 racks) with 28,672 nodes (458,752 cores)

6. SuperMUC:

- The SuperMUC will have 18,432 Intel Xeon Sandy Bridge-EP processors running in IBM System x iDataPlex servers with a total of 147,456 cores and a peak performance of about 3 petaFLOPS (3 × 10^15 FLOPS).

- The main memory will be 288 terabytes (288 × 10^12 bytes) together with 12 petabytes (12 × 10^15 bytes) of hard disk space based on the IBM General Parallel File System (GPFS).

7. Stampede:

- PowerEdge C8220, Xeon E5-2680 8C 2.700GHz, Infiniband FDR, Intel Xeon Phi (vendor: Dell)

- Stampede Dell PowerEdge C8220 Cluster is configured with 6,400 Dell DCS Zeus compute nodes, each with two 2.7 GHz E5-2680 Intel Xeon (Sandy Bridge) processors

- With 32 GB of memory and 50 GB of storage per node, users have access to an aggregate of 205 TB of memory and 275+ TB of local storage.

8. Tianhe 1A:

- equipped with 14,336 Xeon X5670 processors and 7,168 Nvidia Tesla M2050 general purpose GPUs.

- The Tianhe-1A system is composed of 112 computer cabinets, 12 storage cabinets, 6 communications cabinets, and 8 I/O cabinets.

9. FERMI:

- FERMI is composed of 10,240 PowerA2 sockets running at 1.6 GHz, with 16 cores each, totaling 163,840 compute cores and a system peak performance of 2.1 PFlops.

- Each processor comes with 16 GB of RAM (1 GB per core). The BG/Q system will be equipped with a high-performance scratch storage system with a capacity of 2 PB and a bandwidth in excess of 100 GB/s.

10. DARPA Trial Subset:

- The system is an IBM Power 775 supercomputer with 63,360 cores that achieved a score of 1.5 petaflop/s on the Linpack benchmark.

- Here is a video about it: http://youtu.be/cQwqfiQEjNM
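
Several of the core and memory totals quoted in the list above can be cross-checked by simple multiplication. The snippet below is a small sanity check using only figures stated in the list; the helper names are hypothetical.

```python
def total_cores(units, cores_per_unit):
    """Total core count for a system built from identical processors or nodes."""
    return units * cores_per_unit


def aggregate_memory_tb(nodes, gb_per_node):
    """Aggregate memory in decimal terabytes (1 TB = 1000 GB)."""
    return nodes * gb_per_node / 1000


# (system, number of processors or nodes, cores in each, total stated in the list)
STATED = [
    ("K computer", 88_128, 8, 705_024),
    ("JUQUEEN", 28_672, 16, 458_752),
    ("FERMI", 10_240, 16, 163_840),
]

for name, units, cores_each, stated_total in STATED:
    computed = total_cores(units, cores_each)
    status = "matches" if computed == stated_total else "differs from"
    print(f"{name}: {computed:,} cores, {status} the stated total")

# Stampede: 6,400 nodes with 32 GB each, stated as an aggregate of 205 TB
print(f"Stampede: {aggregate_memory_tb(6_400, 32):.1f} TB of memory")
```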

Benchmarking: The benchmark figures, quoted in petaflops, are obtained using LINPACK. LINPACK is a collection of FORTRAN subroutines that analyze and solve linear equations and linear least-squares problems. For the benchmark, the computer runs a program that solves a dense system of linear equations, and the floating-point rate of execution is measured. It is the most widely used measure of how fast a supercomputer works, which has made it the benchmarking standard in the world of supercomputers.
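
To make the idea concrete, here is a toy version of such a measurement in pure Python. It is only a sketch of the principle (solve a dense linear system, time it, divide an operation count by the elapsed time) and is not the LINPACK or HPL code; the function names are hypothetical, and the operation count of about 2n³/3 + 2n² is the conventional estimate for Gaussian elimination, used here as an assumption.

```python
import random
import time


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    a = [row[:] for row in a]   # work on copies so the inputs are untouched
    b = b[:]
    for k in range(n):
        # pick the row with the largest entry in column k as the pivot row
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # eliminate column k from the rows below the pivot
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # back substitution on the resulting upper-triangular system
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def measure_flops(n=100, seed=0):
    """Time one solve of a random n x n system; return an estimated flop/s rate."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    solve(a, b)
    elapsed = time.perf_counter() - start
    operations = 2.0 * n ** 3 / 3.0 + 2.0 * n ** 2
    return operations / elapsed


if __name__ == "__main__":
    print(f"about {measure_flops() / 1e6:.1f} Mflop/s in interpreted Python")
```

An interpreted loop like this reaches only a tiny fraction of what the same hardware delivers with an optimized library, which is one reason the benchmark result depends on the software as well as on the machine.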

Supercomputer Design

There are two approaches to the design of supercomputers. One, called massively parallel processing (MPP), is to chain together thousands of commercially available microprocessors utilizing parallel processing techniques. A variant of this, called a Beowulf cluster or cluster computing, employs large numbers of personal computers interconnected by a local area network and running programs written for parallel processing. The other approach, called vector processing, is to develop specialized hardware to solve complex calculations. This technique was employed in the Earth Simulator, a Japanese supercomputer introduced in 2002 that utilizes 640 nodes composed of 5104 specialized processors to execute 35.6 trillion mathematical operations per second. It is used to analyze earthquakes, weather patterns, and climate change, including global warming.

Supercomputer Architecture <ref>http://www.top500.org</ref>

Supercomputer architecture has changed over the years. Various architectures were developed and abandoned as computer technology progressed.

Early Systems with a few processors

- Single Processor

1960s: pipelining was an innovation.

1970s: the vector processor was established (early Cray series).

- Small Number of Processors -- Shared Memory Architecture

1. Uniform Memory Access (UMA)

2. Non-uniform Memory Access (NUMA)

3. Cache-only Memory Architecture (COMA)

Main Architecture Classes<ref>http://www.linuxdocs.org/HOWTOs/CPU-Design-HOWTO-6.html</ref>

- SISD machines

These are the conventional systems that contain one CPU, so can accommodate one instruction stream that is executed serially. Nowadays many large mainframes may have more than one CPU but each of these execute instruction streams that are unrelated. Therefore, such systems still should be regarded as SISD machines acting on different data spaces. Examples of SISD machines are workstations like those of DEC, Hewlett-Packard and Sun Microsystems.

- SIMD machines

Such systems often have a large number of processing units, ranging from 1,024 to 16,384 that all may execute the same instruction on different data in lock-step. So, a single instruction manipulates many data items in parallel. Examples of SIMD machines are CPP DAP Gamma II and the Quadrics Apemille.

Another subclass of the SIMD systems is the vector processors. Vector processors act on arrays of similar data rather than on single data items, using specially structured CPUs. When data can be manipulated by these vector units, results can be delivered at a rate of one, two or three per clock cycle. So, vector processors operate on their data in an almost parallel way, but only when executing in vector mode. In this case they are several times faster than when executing in conventional scalar mode. For practical purposes vector processors are mostly regarded as SIMD machines. An example of such a system is the NEC SX-6i.

- MISD machines

Theoretically, in this type of machine multiple instructions should act on a single stream of data. As yet no practical machine in this class has been constructed, nor are such systems easy to conceive.

- MIMD machines

These machines execute several instruction streams in parallel on different data. The difference from the multi-processor SISD machines is that the instructions and data are related because they represent different parts of the same task to be executed. So, MIMD systems may run many sub-tasks in parallel in order to shorten the time-to-solution for the main task to be executed. There is a large variety of MIMD systems, and especially in this class the Flynn taxonomy proves to be not fully adequate for the classification of systems. Systems that behave very differently, such as a four-processor NEC SX-6 and a thousand-processor SGI/Cray T3E, both fall in this class. Now we will make another important distinction between classes of systems.

a) Shared memory systems: Shared memory systems have multiple CPUs, all of which share the same address space. This means that the knowledge of where data is stored is of no concern to the user, as there is only one memory accessed by all CPUs on an equal basis. Shared memory systems can be either SIMD or MIMD. Single-CPU vector processors can be regarded as an example of the former, while the multi-CPU models of these machines are examples of the latter. We will sometimes use the abbreviations SM-SIMD and SM-MIMD for the two subclasses.

b) Distributed memory systems: In this case each CPU has its own associated memory. The CPUs are connected by some network and may exchange data between their respective memories when required. In contrast to shared memory machines, the user must be aware of the location of the data in the local memories and will have to move or distribute these data explicitly when needed. Again, distributed memory systems may be either SIMD or MIMD. The first class of SIMD systems mentioned, which operate in lock step, all have distributed memories associated with the processors. Distributed-memory MIMD systems exhibit a large variety in the topology of their connecting network. The details of this topology are largely hidden from the user, which is quite helpful with respect to portability of applications. For the distributed-memory systems we will sometimes use DM-SIMD and DM-MIMD to indicate the two subclasses.
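
The naming scheme described above is mechanical enough to write down as code. The following sketch derives the Flynn class from the number of instruction and data streams and then adds the SM/DM prefix for the memory organisation; the function names and the example stream counts are hypothetical and serve only to illustrate the labels.

```python
def flynn_class(instruction_streams, data_streams):
    """Return the Flynn class (SISD, SIMD, MISD or MIMD) for the given stream counts."""
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    instr = "S" if instruction_streams == 1 else "M"
    data = "S" if data_streams == 1 else "M"
    return f"{instr}I{data}D"


def system_label(instruction_streams, data_streams, shared_memory):
    """Prefix the Flynn class with SM (shared memory) or DM (distributed memory)."""
    prefix = "SM" if shared_memory else "DM"
    return f"{prefix}-{flynn_class(instruction_streams, data_streams)}"


# a conventional single-CPU workstation
print(flynn_class(1, 1))              # SISD
# a lock-step array of 1,024 processing units, each with its own memory
print(system_label(1, 1024, False))   # DM-SIMD
# a four-processor machine whose CPUs share one address space
print(system_label(4, 4, True))       # SM-MIMD
```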

As of 2013, massively parallel processing (MPP) is the second most widely deployed architecture for supercomputers, after clusters. The Goodyear MPP was one of the first machines with an MPP architecture; it was built for NASA in 1983 for the analysis of satellite images. An MPP consists of many smaller, less complex processing units, as opposed to a few larger CPUs. The processors operate in either MIMD or SIMD fashion. Cluster architectures are the most widely deployed because they offer several advantages: the machines cost less, and resources can be added or removed with relative ease in order to meet changing workloads.

Massively Distributed Parallel Architecture

- Computer Cluster

Over time, systems came to be built from ever more units. In the early 2000s, constellation computing was widely used, and MPP reached its peak percentage. With the rise of cluster computing, the supercomputer world was transformed. In 2009, cluster computing accounted for 83.4% of the architectures in the Top 500. A cluster computer is a group of linked computers, working together closely so that in many respects they form a single computer. Compared to a single computer, clusters are deployed to improve performance and/or availability, while being more cost-effective than single computers of comparable speed or availability. Cluster computers offer a high-performance computing alternative to SMP and massively parallel computing systems. Using redundancy, cluster architectures also aim to provide reliability. From the analysis above, we can see that supercomputers are closely tied to technological change and actively driven by it.

- Grid Computing

The supercomputer of today is built on a hierarchical design where a number of clustered computers are joined by ultra high speed network optical interconnections. Grid computing uses a large number of computers in distributed, diverse administrative domains. It is an opportunistic approach which uses resources whenever they are available.<ref>Prodan, Radu; Thomas Fahringer (2007). Grid computing experiment management, tool integration, and scientific workflows. Berlin: Springer. pp. 1–4. ISBN 3-540-69261-4.</ref>

- Quasi-Opportunistic Supercomputing

The quasi-opportunistic approach coordinates computers which are often under different ownerships to achieve reliable and fault-tolerant high performance with more control than opportunistic computer grids in which computational resources are used whenever they may become available.<ref>Quasi-opportunistic supercomputing in grids by Valentin Kravtsov, David Carmeli , Werner Dubitzky , Ariel Orda , Assaf Schuster , Benny Yoshpa, in IEEE International Symposium on High Performance Distributed Computing, 2007, pages 233-244</ref>

Why have vector machines declined so fast in popularity?<ref>http://jes.ece.wisc.edu/papers/ics98.espasa.pdf</ref>

Since the early nineties, supercomputers based on the vector paradigm have lost their dominance of the supercomputing market. In June 1993, of the top 500 computers, 310 were parallel-vector processor (PVP) machines. All the machines included in the list at that time totaled a peak computing power of 1.8 Teraflops. The 310 vector systems represented roughly 43% of all that computing power. Four and a half years later, in November 1997, the same list reported that only 108 PVPs were still among the top 500 systems. Moreover, the total peak power of all systems listed had sky-rocketed to 24.2 Teraflops, but the vector machines now accounted for only 17% of this power.
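
It is worth working out what these shares mean in absolute terms. The short sketch below multiplies the list totals by the vector shares quoted above; the function name is hypothetical and the inputs are exactly the figures in the paragraph.

```python
def vector_tflops(total_tflops, vector_share):
    """Peak computing power contributed by vector machines, in Teraflops."""
    return total_tflops * vector_share


june_1993 = vector_tflops(1.8, 0.43)
november_1997 = vector_tflops(24.2, 0.17)

print(f"June 1993:     {june_1993:.2f} Teraflops from vector machines")
print(f"November 1997: {november_1997:.2f} Teraflops from vector machines")
print(f"Vector power grew {november_1997 / june_1993:.1f}x "
      f"while the whole list grew {24.2 / 1.8:.1f}x")
```

On these figures the installed vector capacity still grew in absolute terms; the decline is relative, because the rest of the list grew far faster.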

The main reason for the decline of vector machines is the cost. Why are vector supercomputers so much more expensive than MPPs or SMPs? There are several related reasons.

- Commodity Parts. This is probably the most important reason. With commodity parts, design and non-recurring manufacturing costs can be spread over a larger number of chips. If a vector machine sells only a few dozen copies, then design costs can easily be the dominant overall cost.

- Memory Cost. The most expensive part of a computer (whether a PC, workstation, or supercomputer) is usually the memory system. Vector processors provide high performance memory systems that sustain very large bandwidths between main memory and the vector registers. To achieve this bandwidth, vector processors rely on high-performance, highly interleaved memory systems. Moreover, for a high performance machine, latency also plays an important role. Therefore, vector supercomputers use the fastest memory technology available.

- High Bandwidths. Consider a 20 GB/s memory system and a typical CMOS package that allows its pins to operate at 133 MHz. A back-of-the-envelope calculation indicates that 1200 pins would be needed to sustain a peak of 20 GB/s. Such numbers of pins are difficult to implement. In the past, vector manufacturers have employed multi-chip designs. These designs tend to be substantially more expensive than single-chip solutions.

- Base Technology. Until very recently, most vector designs were based on ECL. While this choice was adequate in the 1976-1991 time frame, vector vendors apparently failed to realize the potential of CMOS implementations. Nor were they willing to shift from gate-array to custom design in order to exploit the capabilities of CMOS. In the last 8 years, CMOS chips have outperformed ECL in number of transistors, speed, and reliability. Recently, most vector vendors have introduced CMOS-based vector machines.

- Difficulty in Achieving Peak Performance. Despite high-performance processors and high-bandwidth memory systems, even highly vectorized programs fall short of theoretical peak performance on vector supercomputers.

- Lack of Architectural Innovation. Finally, it is important to note that there have been relatively few architectural innovations since the CRAY-1. The top of the line CRAY T90 still has only 8 vector registers and has a relatively slow scalar microarchitecture when compared to current superscalar microprocessors. Meanwhile, superscalar microprocessors have adopted many architectural features to increase performance while still retaining low cost.
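The back-of-the-envelope pin count in the High Bandwidths item above can be reproduced as follows. This is a rough sketch under stated assumptions that are not in the original text: 1 GB is taken as 10^9 bytes, each pin transfers one bit per clock cycle, and pins for address, control, power, and ground are ignored. The function name `pins_needed` is hypothetical.

```python
def pins_needed(bandwidth_bytes_per_s, pin_clock_hz, bits_per_pin_per_cycle=1):
    """Estimate the number of data pins needed to sustain a given memory bandwidth."""
    bits_per_s = bandwidth_bytes_per_s * 8
    return bits_per_s / (pin_clock_hz * bits_per_pin_per_cycle)


# 20 GB/s memory system, package pins operating at 133 MHz (values from the text).
pins = pins_needed(20e9, 133e6)
print(f"about {pins:.0f} data pins")
```

The result is close to the figure of 1200 pins given in the text, which suggests the original estimate used similar assumptions.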

Japan's Fujitsu announced its decision to shift to scalar processors in 2009.

Supercomputer Operating Systems<ref>http://www.top500.org</ref>

Supercomputers use a variety of operating systems. The operating system of a specific supercomputer depends on its vendor. Until the early-to-mid-1980s, supercomputers usually sacrificed instruction-set compatibility and code portability for performance (processing and memory access speed). For the most part, supercomputers of this period (unlike high-end mainframes) had vastly different operating systems. The Cray-1 alone had at least six different proprietary OSs largely unknown to the general computing community. Similarly, there were different and incompatible vectorizing and parallelizing compilers for Fortran. This trend would have continued with the ETA-10 were it not for the initial instruction-set compatibility between the Cray-1 and the Cray X-MP, and the adoption of operating systems such as Cray's UNICOS or Linux.

Before the beginning of the 21st century, almost all supercomputer OSs belonged to the Unix family[[2]], while after the year 2000 more and more Linux versions were adopted for supercomputers. In the November 2009 list, 446 of the top 500 supercomputers were using their own distribution of Linux, and 18 of the top 20 were running some form of Linux.

As of 2013, the Linux operating system family is still completely dominant on supercomputers. Several different Linux distributions are deployed, including CentOS, Red Hat Enterprise Linux, and custom distributions. Unix still has a small presence, while BSD and Windows systems are virtually extinct. The customizability of Linux, coupled with its performance and cost, makes it the ideal replacement for Unix as the dominant operating system for supercomputers.

Supercomputer Interconnects<ref>http://compnetworking.about.com/library/weekly/aa051902d.htm</ref>

In order for a large number of processors to work together, supercomputers utilize specialized network interfaces. These interconnects support high bandwidth and very low latency communication.

Interconnects join nodes inside the supercomputer together. A node is a communication endpoint running one instance of the operating system. Nodes utilize one or several processors and different types of nodes can exist within the system. Compute nodes, for example, execute the processes and threads required for raw computation. I/O nodes handle the reading and writing of data to disks within the system. Service nodes and network nodes provide the user interface into the system and also network interfaces to the outside world. Special-purpose nodes improve overall performance by segregating the system workload with hardware and system software configured to best handle that workload.

Supercomputer nodes fit together into a network topology. Modern supercomputers have utilized several different specialized network topologies including hypercube, two-dimensional and three-dimensional mesh, and torus. Supercomputer network topologies can be either static (fixed) or dynamic (through the use of switches).
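To make the static topologies named above concrete, the following sketch lists the direct neighbours of a node in a hypercube, a mesh, and a torus. The function names and the coordinate conventions (integer labels for the hypercube, coordinate tuples for the mesh and torus) are illustrative assumptions, not taken from any particular machine.

```python
def hypercube_neighbors(node, dimensions):
    """Neighbours differ from `node` in exactly one bit of its binary label."""
    return [node ^ (1 << bit) for bit in range(dimensions)]


def grid_neighbors(coord, shape, wrap):
    """Neighbours of `coord` in an n-dimensional mesh (wrap=False) or torus (wrap=True).

    Assumes every dimension has at least 3 nodes; with only 2 nodes in a
    dimension, a torus would list the same neighbour twice.
    """
    neighbors = []
    for axis, size in enumerate(shape):
        for step in (-1, 1):
            pos = coord[axis] + step
            if wrap:
                pos %= size  # torus: links wrap around the edge
            elif not 0 <= pos < size:
                continue  # mesh: no link past the edge
            neighbors.append(coord[:axis] + (pos,) + coord[axis + 1:])
    return neighbors


print(hypercube_neighbors(0, 3))                    # 3-dimensional hypercube, node 0
print(grid_neighbors((0, 0), (4, 4), wrap=False))   # corner of a 4x4 mesh
print(grid_neighbors((0, 0), (4, 4), wrap=True))    # same node in a 4x4 torus
```

A corner node of the mesh has only two links, while the same node in the torus has four. The wrap-around links are what give every torus node the same number of neighbours.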

Supercomputer interconnects involve large quantities of network cabling. These cables can be very difficult to install as they often must fit within small spaces. Supercomputers do not utilize wireless networking internally as the bandwidth and latency properties of wireless are not suitable for high-performance communications.

The standard InfiniBand interconnect is virtually extinct in the current supercomputer market, along with InfiniBand QDR. There is an increasing shift toward custom interconnect technologies, 10G Ethernet, and InfiniBand FDR. 10G Ethernet differs from traditional Ethernet in both its technical implementation and its achievable speed of 10 Gbit/s, which eclipses traditional 1 Gbit/s Ethernet. InfiniBand FDR (fourteen data rate) has a throughput of 13.64 Gbit/s per lane, and is eclipsed by InfiniBand EDR (enhanced data rate), which is not yet in common use.
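The 13.64 Gbit/s figure quoted above is consistent with a per-lane data rate after line encoding. The sketch below assumes values that are not stated in the original text: an FDR signalling rate of 14.0625 Gbit/s per lane, 64b/66b encoding, and a 4-lane (4x) link as a common configuration. The function name `effective_gbits` is hypothetical.

```python
def effective_gbits(signal_gbits_per_lane, payload_bits, coded_bits, lanes=1):
    """Usable data rate after line encoding, in Gbit/s."""
    return signal_gbits_per_lane * payload_bits / coded_bits * lanes


# Assumed FDR parameters: 14.0625 Gbit/s signalling per lane, 64b/66b encoding.
fdr_lane = effective_gbits(14.0625, 64, 66)
fdr_4x = effective_gbits(14.0625, 64, 66, lanes=4)

print(f"per lane: {fdr_lane:.2f} Gbit/s, 4x link: {fdr_4x:.2f} Gbit/s")
```

Under these assumptions a single lane carries about 13.64 Gbit/s of data, so a 4x link would carry roughly four times that.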

Supercomputing Applications

Supercomputers are used primarily for number crunching: enormously calculation-intensive tasks that involve massive datasets and require timely results. On general-purpose computers, even in large numbers, such tasks would for all intents and purposes outlast the working lifetime of the machines, or even the average human life expectancy. The types of tasks that supercomputers are built to tackle include physics (e.g. quantum mechanics, cosmology, nuclear research), weather forecasting, climate research, chemistry, biology, industry (e.g. simulating aircraft dynamics), engineering design (e.g. structural simulations, bridges, earthquake tolerance), and earth sciences.

The main users of these supercomputers include: universities, military agencies, NASA, scientific research laboratories and major corporations.

Supercomputers of the Future

Research centers are constantly delving into new applications such as data mining to explore additional uses of supercomputing. Data mining is a class of applications that looks for hidden patterns in a body of data, allowing scientists to discover previously unknown relationships within it. For instance, the Protein Data Bank at the San Diego Supercomputer Center is a collection of scientific data that provides scientists around the world with a greater understanding of biological systems. Over the years, the Protein Data Bank has developed into a web-based international repository for three-dimensional molecular structure data that contains detailed information on the atomic structure of complex molecules. The three-dimensional structures of proteins and other molecules contained in the Protein Data Bank, together with supercomputer analysis of the data, provide researchers with new insights into the causes, effects, and treatment of many diseases.

Other modern supercomputing applications involve the advancement of brain research. Researchers are beginning to use supercomputers to better understand the relationship between the structure and function of the brain, and how the brain operates. Specifically, neuroscientists use supercomputers to examine the dynamic and physiological structures of the brain. Scientists are also working toward the development of three-dimensional simulation programs that will allow them to conduct research on areas such as memory processing and cognitive recognition.

In addition to new applications, the future of supercomputing includes the assembly of the next generation of computational research infrastructure and the introduction of new supercomputing architectures. Parallel supercomputers have many processors, distributed and shared memory, and many communications parts. We have yet to explore all of the ways in which they can be assembled. Supercomputing applications and capabilities will continue to develop as institutions around the world share their discoveries and researchers become more proficient at parallel processing.

External links

3.Top500-The supercomputer website

5.Supercomputers to "see" black holes

6.Supercomputer simulates stellar evolution

7.Encyclopedia on supercomputer

10.Water-cooling System Enables Supercomputers to Heat Buildings

13.UC-Irvine Supercomputer Project Aims to Predict Earth's Environmental Future

14.Wikipedia

15.Parallel programming in C with MPI and OpenMP by Michael Jay Quinn

19.SuperComputers for One-Fifth the Price

References

<references/>