CSC/ECE 517 Fall 2013/oss ssv: Difference between revisions

No edit summary |

(→Issues) |

||

| (176 intermediate revisions by 4 users not shown) | |||

| Line 1: | Line 1: | ||

= Refactoring and testing — degree_of_relevance.rb = | |||

[[File:RelevanceJoke.png|frame|Relevance <ref>http://www.smartinsights.com/wp-content/uploads/2012/05/relevance-rankmaniac.jpg</ref>]] | |||

__TOC__ | |||

= | = Introduction = | ||

Reviews are text-based feedback provided by a reviewer to the author of a submission. Reviews are used not only in education to assess student work, but also in e-commerce applications, to assess the quality of products on sites like Amazon, e-bay etc. Since reviews play a crucial role in providing feedback to people who make assessment decisions (e.g. deciding a student’s grade, choosing to purchase a product) it is important to find out how relevant reviews are to a submission <ref>http://repository.lib.ncsu.edu/ir/handle/1840.16/8813</ref>. | |||

== Testing == | The class ''DegreeOfRelevance'' in Expertiza is used to calculate how relevant one piece of text is to another. It calculates relevance between the submitted work and reviews(or meta reviews) for an assignment. It is important to do this evaluation to ensure that if the review is not relevant to the submission, it is considered to be invalid and does not impact students' grade. | ||

== | |||

== References = | ''degree_of_relevance.rb'' presently contains long and complex methods and a lot of duplicated code. It has been assigned grade "F" by [https://codeclimate.com/ Code Climate], on metrics such as code complexity, duplication, lines of code per method etc. Since this file is important for the research on Expertiza, it should be re-factored to reduce complexity and duplication issues and introduce coding best practices. Our task for this project is to re-factor this class and test it thoroughly. | ||

= Theory of Relevance = | |||

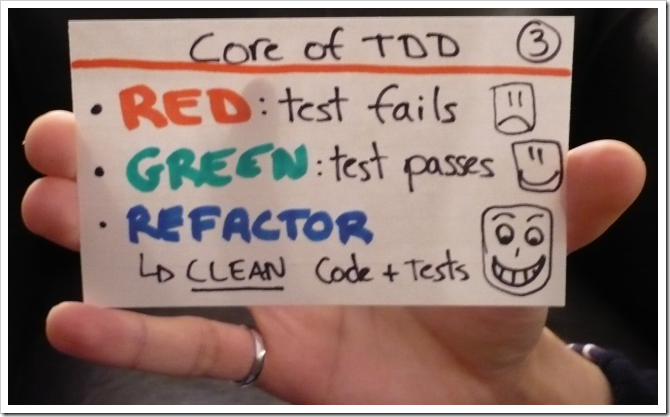

Relevance between two texts can be defined as: | |||

[[File:Relevance.png|frame|none|Definition of Relevance]] | |||

''degree_of_relevance.rb'' file calculates [http://en.wikipedia.org/wiki/Relevance relevance] between submission and review [http://en.wikipedia.org/wiki/Graph_%28data_structure%29 graphs]. The algorithm present in the file implements a variance of [http://en.wikipedia.org/wiki/Dependency_graph dependency graphs] called word-order graph to capture both governance and ordering information crucial to obtaining more accurate results. | |||

== Word-order graph generation == | |||

In word-order graph vertices represent noun, verb, adjective or adverbial words or phrases in a text, and edges represent relationships between vertices. The first step to graph generation is dividing input text into segments on the basis of a predefined punctuation list. The text is then tagged with part-of-speech information which is used to determine how to group words into phrases while still maintaining the order. [http://nlp.stanford.edu/software/tagger.shtml Stanford NLP POS tagger] is used to generate the tagged text. The vertex and edges are created using the algorithm below. Graph edges that are subsequently created are then labeled with dependency(word-modifier) information. | |||

[[File:MainAlgo.png|frame|none|Algorithm to create vertices and edges]] | |||

[http://en.wikipedia.org/wiki/Wordnet WordNet] is used to identify [http://en.wikipedia.org/wiki/Semantic_relatedness semantic relatedness] between two terms. The comparison between reviews and submissions would involve checking for relatedness across verb, adjective or adverbial forms, checking for cases of [http://en.wikipedia.org/wiki/Text_normalization normalizations] (noun form of adjectives) etc. | |||

== Lexico-Semantic Graph-based Matching == | |||

=== Phrase or token matching === | |||

In phrase or token matching, vertices containing phrases or tokens are compared across graphs. This matching succeeds in capturing [http://en.wikipedia.org/wiki/Semantic_relatedness semantic relatedness] between single or compound words. | |||

[[File:Phrase.png|frame|none|Phrase-matching]] | |||

=== Context matching === | |||

Context matching compares edges with same and different syntax, and edges of different types across two text graphs. The match is referred to as context matching since contiguous phrases, which capture additional context information, are chosen from a graph for comparison with those in another. Edge labels capture grammatical relations, and play an important role in matching. | |||

[[File:Context.png|frame|none|Context-matching]] | |||

r(e) and s(e) refer to review and submission edges. The formula calculates the average for each of the above three types of matches ordered, [http://en.wikipedia.org/wiki/Metadata_discovery#Lexical_Matching lexical] and [http://en.wikipedia.org/wiki/Nominalization nominalized]. E<sub>r</sub> and E<sub>s</sub> represent the sets of review and submission edges. | |||

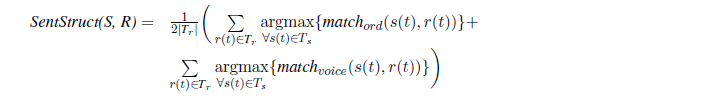

=== Sentence structure matching === | |||

Sentence structure matching compares double edges (two contiguous edges), which constitute a complete segment (e.g. subject–verb–object), across graphs. In this case, only single and double edges are considered, and not more contiguous edges (triple edges etc.), for text matching. The matching captures similarity across segments and it captures voice changes. | |||

[[File:Structurem.png|frame|none|Structure-matching]] | |||

r(t) and s(t) refer to double edges, and T<sub>r</sub> and T<sub>s</sub> are the number of double edges in the review and submission texts respectively. match<sub>ord</sub> and match<sub>voice</sub> are the averages of the best ordered and voice change matches. | |||

= Existing Design = | |||

The current design has a method ''get_relevance'' which is a single entry point to ''degree_of_relevance.rb''. It takes as input submission and review graphs in array form along with other important parameters. The algorithm is broken down into different parts each of which is handled by a different helper method. The results obtained from these methods are used in the following formula to obtain degree of relevance. | |||

[[File:RelevanceFormula.png|frame|none|Formula to calculate relevance]] | |||

The implementation in Expertiza leaves room for applying separate weights to different types of matches instead of a fixed value of 0.33 in above formula. | |||

[[File:ModifiedEq.png|frame|none|Formula used in Expertiza]] | |||

For example, a possible set of values could be: | |||

alpha = 0.55 | |||

beta = 0.35 | |||

gamma = 0.1 | |||

The rule generally followed is alpha > beta > gamma | |||

== Helper Methods == | |||

The helper methods used by ''get_relevance'' are: | |||

=== compare_vertices === | |||

This method compares the vertices from across the two graphs(submission and revision) to identify matches and quantify various metrics. Every vertex is compared with every other vertex to obtain the comparison results. | |||

=== compare_edges_non_syntax_diff === | |||

This is the method where SUBJECT-SUBJECT and VERB-VERB or VERB-VERB and OBJECT-OBJECT comparisons are done to identify matches and quantify various metrics. It compares edges of the same type ie SUBJECT-VERB edges are matched with SUBJECT-VERB matches. | |||

=== compare_edges_syntax_diff === | |||

This method handles comparisons between edges of type SUBJECT-VERB with VERB-OBJECT. It also does comparison between SUBJECT-OBJECT and VERB_VERB edges. It compares edges of the same type. | |||

=== compare_edges_diff_types === | |||

This method does comparison between edges of different types. Comparisons related to SUBJECT-VERB, VERB-SUBJECT, OBJECT-VERB, VERB-OBJECT are handled and relevant results returned. | |||

=== compare_SVO_edges === | |||

This method does comparison between edges of type SUBJECT-VERB-OBJECT. The comparison done is between edges of same type. | |||

=== compare_SVO_diff_syntax === | |||

This method also does comparison between edges of type SUBJECT-VERB-OBJECT. However, the comparison done is between edges of different types. | |||

== Program Flow == | |||

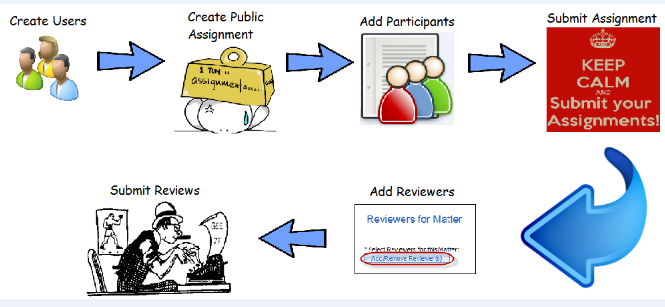

''degree_of_relevance.rb'' is buried deep inside models and to bring control to this file, the tester needs to undertake some tasks. The diagram below gives a quick look into what needs to be done. | |||

[[File:ProgramFlow1.png|frame|none|Initial setup]] | |||

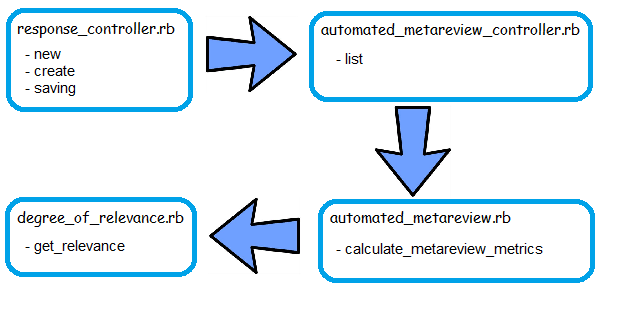

When a user tries to review an existing work, it calls ''new'' method in ''response_controller.rb'' which renders the page where user fills up the review. On submission, it calls ''create'' method of the same file, which then redirects to ''saving'' method in the same file again. ''saving'' makes a call to ''calculate_metareview_metrics'' in ''automated_metareview.rb'' which eventually calls ''get_relevance'' in ''degree_of_relevance.rb''. The figure below shows the flow in the code. | |||

[[File:ProgramFlow2.png|frame|none|Flow in the code(filename and corresponding methods called)]] | |||

= Re-factoring = | |||

=== Design Changes === | |||

---- | |||

We have taken ideas from [http://en.wikipedia.org/wiki/Template_method_pattern Template design pattern] to improve the design of the class. Although we did not directly implement this design pattern on the class, the idea of defining a skeleton of an algorithm in one class, and deferring some steps to subclasses, allowing us to come up with a similar design which segregates different functionality to different classes. The following is a brief outline of the changes made: | |||

* Divided the code into 4 classes to segregate the functionality, making a logical separation of code. | |||

* These classes are: | |||

** CompareGraphEdges | |||

** CompareGraphSVOEdges | |||

** CompareGraphVertices | |||

** DegreeOfRelevance | |||

* Extracted common code in methods that can be re-used. | |||

The main class DegreeOfRelevance calculates the scaled relevance using the formula described above. It calculates the relevance based on comparisons done between submission and review graphs. We have grouped together similar functionality in one class. Following is a brief description of the classes. | |||

* Class CompareGraphEdges: | |||

This class compares edges from across two graphs. Related methods compare_edges_non_syntax_diff and compare_edges_syntax_diff are grouped together in this class. This ensures that this class is just responsible for making comparison between graph edges and return the appropriate metric to the main class DegreeOfRelevance. | |||

* Class CompareGraphVertices: | |||

This class is responsible for comparing every vertex of submission graph with every vertex of review graph. This class contains only one method compare_vertices. Its sole responsibility is comparing vertices and calculating the appropriate metrics required by the main class(DegreeOfRelevance). | |||

* Class CompareGraphSVOEdges: | |||

This class is responsible for making comparison between edges of type SUBJECT-VERB-OBJECT.Related methods - compare_SVO_edges and compare_SVO_diff_syntax are grouped together in this class. | |||

The whole purpose of creating such a design is to make clear separation of logic in different classes. Although each of these classes contain helper methods to be used by the main method(get_relevance) to calculate relevance, yet this kind of design improves maintainability and readability of code for any programmer who is new to this code. The figure below illustrates the concept used in the design. | |||

[[File:class design.png|frame|none|Main class calling helper classes]] | |||

As shown in the figure above ,the main class degree_of_relevance.rb calls the helper methods in the classes to the right to get the appropriate metrics required for evaluating relevance. This implements delegation of functionality to helper classes making a clear segregation of role of each class. | |||

=== Types of Re-factoring === | |||

The following are the three main types of refactoring we have done in our project. | |||

* '''Move out common code in re-usable method:''' | |||

The following is a sample of the duplicated code which was present in many methods. We moved it to a separate method and just called this method with appropriate parameters wherever required. This implements nil condition check on an edge of the graph and if the id of the vertex is not invalid(negative). | |||

def edge_nil_cond_check(edge) | |||

return (!edge.nil? && edge.in_vertex.node_id != -1 && edge.out_vertex.node_id != -1) | |||

end | |||

* '''Reduce number of lines of code per method''' | |||

We ensured that each method should be short enough to fit in less than one screen length(no need to scroll). | |||

* '''Miscellaneous''' | |||

** Combined conditions together in one statement instead of nesting them inside one another. | |||

** Took out code that can be re-used or logically separated in separate methods. | |||

** Removed commented debug statements. | |||

Code sample before Refactoring: | |||

def compare_edges_diff_types(rev, subm, num_rev_edg, num_sub_edg) | |||

# puts("*****Inside compareEdgesDiffTypes :: numRevEdg :: #{num_rev_edg} numSubEdg:: #{num_sub_edg}") | |||

best_SV_VS_match = Array.new(num_rev_edg){Array.new} | |||

cum_edge_match = 0.0 | |||

count = 0 | |||

max = 0.0 | |||

flag = 0 | |||

wnet = WordnetBasedSimilarity.new | |||

for i in (0..num_rev_edg - 1) | |||

if(!rev[i].nil? and rev[i].in_vertex.node_id != -1 and rev[i].out_vertex.node_id != -1) | |||

#skipping edges with frequent words for vertices | |||

if(wnet.is_frequent_word(rev[i].in_vertex.name) and wnet.is_frequent_word(rev[i].out_vertex.name)) | |||

next | |||

end | |||

#identifying best match for edges | |||

for j in (0..num_sub_edg - 1) | |||

if(!subm[j].nil? and subm[j].in_vertex.node_id != -1 and subm[j].out_vertex.node_id != -1) | |||

#checking if the subm token is a frequent word | |||

if(wnet.is_frequent_word(subm[j].in_vertex.name) and wnet.is_frequent_word(subm[j].out_vertex.name)) | |||

next | |||

end | |||

#for S-V with S-V or V-O with V-O | |||

if(rev[i].in_vertex.type == subm[j].in_vertex.type and rev[i].out_vertex.type == subm[j].out_vertex.type) | |||

#taking each match separately because one or more of the terms may be a frequent word, for which no @vertex_match exists! | |||

sum = 0.0 | |||

cou = 0 | |||

if(!@vertex_match[rev[i].in_vertex.node_id][subm[j].out_vertex.node_id].nil?) | |||

sum = sum + @vertex_match[rev[i].in_vertex.node_id][subm[j].out_vertex.node_id] | |||

cou +=1 | |||

end | |||

if(!@vertex_match[rev[i].out_vertex.node_id][subm[j].in_vertex.node_id].nil?) | |||

sum = sum + @vertex_match[rev[i].out_vertex.node_id][subm[j].in_vertex.node_id] | |||

cou +=1 | |||

end | |||

if(cou > 0) | |||

best_SV_VS_match[i][j] = sum.to_f/cou.to_f | |||

else | |||

best_SV_VS_match[i][j] = 0.0 | |||

end | |||

#-- Vertex and SRL | |||

best_SV_VS_match[i][j] = best_SV_VS_match[i][j]/ compare_labels(rev[i], subm[j]) | |||

flag = 1 | |||

if(best_SV_VS_match[i][j] > max) | |||

max = best_SV_VS_match[i][j] | |||

end | |||

#for S-V with V-O or V-O with S-V | |||

elsif(rev[i].in_vertex.type == subm[j].out_vertex.type and rev[i].out_vertex.type == subm[j].in_vertex.type) | |||

#taking each match separately because one or more of the terms may be a frequent word, for which no @vertex_match exists! | |||

sum = 0.0 | |||

cou = 0 | |||

if(!@vertex_match[rev[i].in_vertex.node_id][subm[j].in_vertex.node_id].nil?) | |||

sum = sum + @vertex_match[rev[i].in_vertex.node_id][subm[j].in_vertex.node_id] | |||

cou +=1 | |||

end | |||

if(!@vertex_match[rev[i].out_vertex.node_id][subm[j].out_vertex.node_id].nil?) | |||

sum = sum + @vertex_match[rev[i].out_vertex.node_id][subm[j].out_vertex.node_id] | |||

cou +=1 | |||

end | |||

if(cou > 0) | |||

best_SV_VS_match[i][j] = sum.to_f/cou.to_f | |||

else | |||

best_SV_VS_match[i][j] =0.0 | |||

end | |||

flag = 1 | |||

if(best_SV_VS_match[i][j] > max) | |||

max = best_SV_VS_match[i][j] | |||

end | |||

end | |||

end #end of the if condition | |||

end #end of the for loop for submission edges | |||

if(flag != 0) #if the review edge had any submission edges with which it was matched, since not all S-V edges might have corresponding V-O edges to match with | |||

# puts("**** Best match for:: #{rev[i].in_vertex.name} - #{rev[i].out_vertex.name} -- #{max}") | |||

cum_edge_match = cum_edge_match + max | |||

count+=1 | |||

max = 0.0 #re-initialize | |||

flag = 0 | |||

end | |||

end #end of if condition | |||

end #end of for loop for review edges | |||

Code sample after Refactoring : | |||

def compare_edges_diff_types(vertex_match, rev, subm, num_rev_edg, num_sub_edg) | |||

best_SV_VS_match = Array.new(num_rev_edg){Array.new} | |||

cum_edge_match = max = 0.0 | |||

count = flag = 0 | |||

wnet = WordnetBasedSimilarity.new | |||

for i in (0..num_rev_edg - 1) | |||

if(edge_nil_cond_check(rev[i]) && !wnet_frequent_word_check(wnet, rev[i])) | |||

#identifying best match for edges | |||

for j in (0..num_sub_edg - 1) | |||

if(edge_nil_cond_check(subm[j]) && !wnet_frequent_word_check(wnet, subm[j])) | |||

#for S-V with S-V or V-O with V-O | |||

if(edge_symm_vertex_type_check(rev[i],subm[j]) || edge_asymm_vertex_type_check(rev[i],subm[j])) | |||

if(edge_symm_vertex_type_check(rev[i],subm[j])) | |||

cou, sum = sum_cou_asymm_calculation(vertex_match, rev[i], subm[j]) | |||

else | |||

cou, sum = sum_cou_symm_calculation(vertex_match, rev[i], subm[j]) | |||

end | |||

max, best_SV_VS_match[i][j] = max_calculation(cou,sum, rev[i], subm[j], max) | |||

flag = 1 | |||

end | |||

end #end of the if condition | |||

end #end of the for loop for submission edges | |||

flag_cond_var_set(:cum_edge_match, :max, :count, :flag, binding) | |||

end | |||

end #end of 'for' loop for the review's edges | |||

return calculate_avg_match(cum_edge_match, count) | |||

end #end of the method | |||

* '''Re-written some methods containing complicated logic'''. | |||

Below is a sample code that we have re-written to make the logic more simple and maintainable. | |||

Before Refactoring: | |||

def compare_labels(edge1, edge2) | |||

result = EQUAL | |||

if(!edge1.label.nil? and !edge2.label .nil?) | |||

if(edge1.label.downcase == edge2.label.downcase) | |||

result = EQUAL #divide by 1 | |||

else | |||

result = DISTINCT #divide by 2 | |||

end | |||

elsif((!edge1.label.nil? and !edge2.label.nil?) or (edge1.label.nil? and !edge2.label.nil? )) #if only one of the labels was null | |||

result = DISTINCT | |||

elsif(edge1.label.nil? and edge2.label.nil?) #if both labels were null! | |||

result = EQUAL | |||

end | |||

return result | |||

end # end of method | |||

After Refactoring: | |||

def compare_labels(edge1, edge2) | |||

if(((!edge1.label.nil? && !edge2.label.nil?) &&(edge1.label.downcase == edge2.label.downcase)) || | |||

(edge1.label.nil? and edge2.label.nil?)) | |||

result = EQUAL | |||

else | |||

result = DISTINCT | |||

end | |||

return result | |||

end # end of method | |||

We have achieved the following after refactoring: | |||

* Reduced duplication significantly. | |||

* Lines of code per method reduced from 75 to 25. This makes the code more readable and maintainable. | |||

* Improved the design of classes by making logical separation of code into different classes. | |||

* After complete re-factoring, the grade for the class DegreeOfRelevance changed from "F" to "C" according to codeclimate. | |||

= Testing = | |||

[http://en.wikipedia.org/wiki/ Ruby_on_Rails] and [http://en.wikipedia.org/wiki/Test_automation Automated Testing] go hand in hand. Rails ships in with a built-in test framework and if we want, we can replace the existing with Capybara, Rspec etc. Testing is pretty important in Rails—yet many people developing in Rails either don't test their projects at all, or at best only add a few test specs on model validations. | |||

If it's worth building, it's worth testing. | |||

If it's not worth testing, why are you wasting your time working on it?<ref>http://www.agiledata.org/essays/tdd.html</ref> | |||

There are several reasons for this. Perhaps working with Ruby or web frameworks is a novel enough concept; that adding extra work seems unimportant. Or maybe there is a perceived time constraint—spending time on writing tests takes time away from writing the features demanded by clients or bosses. Or maybe the habit of defining “test” as clicking links in the browser is too hard to break. | |||

Testing helps us find defects in our applications. But, it also ensures that the developers follow the release plan and the rule-set of necessary requirements, instead of just developing carelessly. | |||

Testing can be done in two approaches: | |||

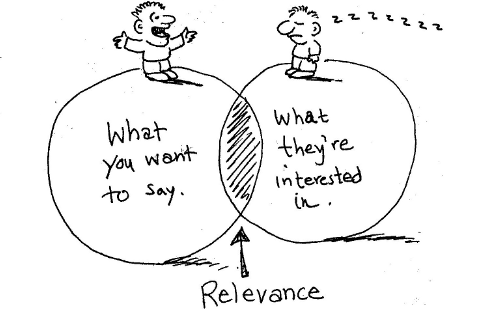

*[http://en.wikipedia.org/wiki/ Test-driven_development] is a way of driving the design of code by writing a test which expresses what you intend the code to do, making that test pass, and continuously refactoring it to keep the design as simple as possible. | |||

[[File:Test-driven development.png]] | |||

*[http://en.wikipedia.org/wiki/ Behavior-driven_development] combines the general techniques and principles of TDD to provide software developers and business analysts to collaborate on productive software development. | |||

We have used the BDD approach, in which the tests are described in a natural language, which makes them more accessible to people outside of development or testing teams. | |||

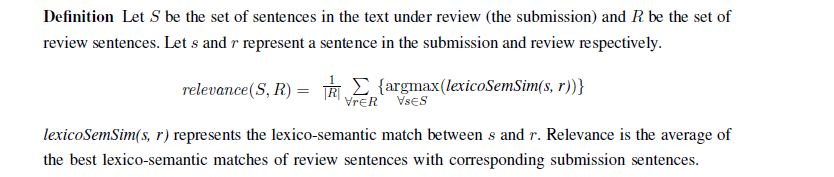

Start by writing a failing test (Red.) Implement the simplest solution that will cause the test to pass (Green.) Search for duplication and remove it (Refactor.) "RED-GREEN-REFACTOR" has become almost a mantra for many TDD practitioners. | |||

[[File:TestDrivenGameDevelopment1.png|<ref>http://agilefeedback.blogspot.com/2012/07/tdd-do-you-speak.html</ref>]] | |||

==Cucumber== | |||

Of the various tools available we have used [http://en.wikipedia.org/wiki/Cucumber_(software) Cucumber] <ref>http://net.tutsplus.com/tutorials/ruby/ruby-for-newbies-testing-web-apps-with-capybara-and-cucumber</ref>for testing. | |||

The Given, When, Then syntax used by Cucumber is designed to be intuitive. Consider the syntax elements: | |||

*'''Given''' provides context for the test scenario about to be executed, such as the point in your application that the test occurs as well as any prerequisite data. | |||

*'''When''' specifies the set of actions that triggers the test, such as user or subsystem actions. | |||

*'''Then''' specifies the expected result of the test. | |||

As Cucumber doesn’t know how to interpret the features by itself, the next step is to create step definitions explaining it what to do when it comes across that step in one of the scenarios. The step definitions are written in Ruby. This gives much flexibility on how the test steps are executed | |||

==Steps for Testing== | |||

*First of all, we have to include the required gems for our tests in the '''Gemfile'''. | |||

group :test do | |||

gem 'capybara' | |||

gem 'cucumber-rails', require: false | |||

end | |||

*Then, write the actual tests in the feature file, '''deg_rel_set.feature''' located in the features folder. | |||

The degree or relevance file that we are dealing with consists of six major functions.We have written tests for implementing all the six functions. | |||

The major feature is to test the degree of relevance by finding the comparison between the expected values and the actual values of the Submission and Review Graphs. | |||

Feature: To check degree of Relevance | |||

In order to check the various methods of the class | |||

As an Administrator | |||

I want to check if the actual and expected values match. | |||

@one | |||

Scenario: Check actual v/s expected values | |||

Given Instance of the class is created | |||

When I compare actual value and expected value of "compare_vertices" | |||

Then It will return true if both are equal. | |||

Similarly, we have tested all the six major modules of degree of relevance like comparing edges, vertices and subject-verb objects. | |||

*Cucumber feature files are written to be readable to non-programmers, so we have to implement step definitions for undefined steps, provided by Cucumber. Cucumber will load any files in the folder features/step_definitions for steps. We have created a step file, '''deg_relev_step.rb'''. | |||

Given /^Instance of the class is created$/ do | |||

@inst=DegreeOfRelevance.new | |||

end | |||

When /^I compare (\d+) and "(\S+)"$/ do |qty| | |||

actual=qty | |||

if(assert_equal(qty,@inst.compare_vertices(@vertex_match,@pos_tagger, @review_vertices, @subm_vertices, @num_rev_vert, @num_sub_vert, @speller) )) | |||

step("It will return true") | |||

else | |||

step(“It will return false”) | |||

end | |||

end | |||

Then /^It will return true$/ do | |||

#print true or false | |||

End | |||

*The dummy model/data is created in the setup function defined in the class Deg_rel_setup present in '''lib/deg_rel_setup.rb'''. It generates a graph of submissions and reviews and then executes the 6 different methods. | |||

class Deg_rel_setup | |||

def setup | |||

#set up nodes and graphs | |||

end | |||

end | |||

*Cucumber provides a number of hooks <ref>https://github.com/cucumber/cucumber/wiki/Hooks </ref>which allow us to run blocks at various points in the Cucumber test cycle. we can put them in the '''support/env.rb''' file or any other file under the support directory, for example in a file called '''support/hooks.rb'''. There is no association between where the hook is defined and which scenario/step it is run for, but you can use tagged hooks, if we want more fine grained control. | |||

All defined hooks are run whenever the relevant event occurs. | |||

Before hooks will be run before the first step of each scenario. They will run in the same order of which they are registered. | |||

Before do | |||

# Do something before each scenario. | |||

end | |||

Sometimes we may want a certain hook to run only for certain scenarios. This can be achieved by associating a Before, After,Around or AfterStep hook with one or more tags. We have used a tag named one, and hence the following code snippet will be executed everytime before the implementation of tag one. | |||

Before ('@one') do | |||

set= Deg_rel_setup.new | |||

set.setup | |||

end | |||

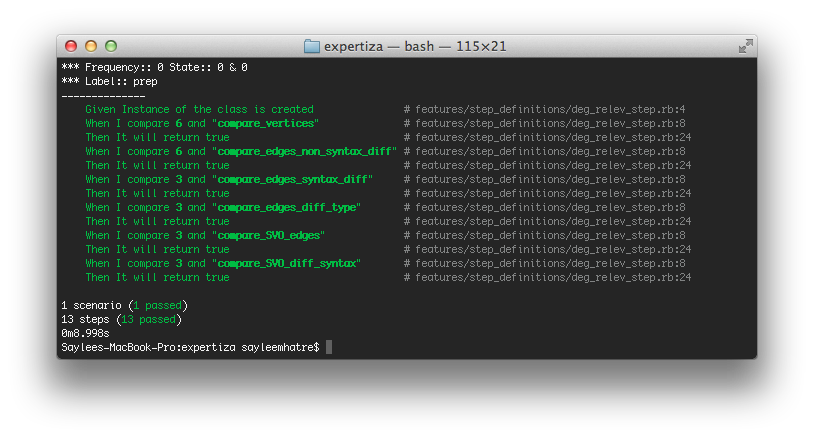

*To run the test we simply need to execute, | |||

rake db:migrate #To initialize the test database | |||

cucumber features/deg_rel.feature #This will execute the feature along with it's step definitons | |||

[[File:cucumber.png|frame|none]] | |||

In the end, though, the most important thing is that tests are reliable and understandable, even if they’re not quite as optimized as they could be, they are a great way to start an application. We should keep taking advantage of the fully automated test suites available and use tests to drive development and ferret out potential bugs in our application. | |||

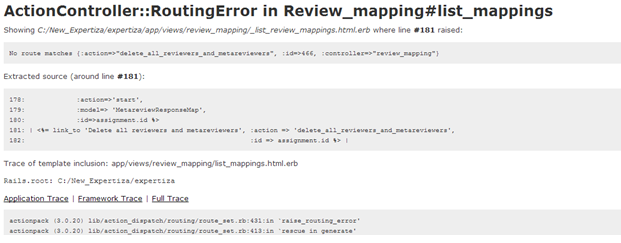

=Issues= | |||

The original Expertiza code had lot of bugs and the application was throwing a lot of errors at each step. | |||

Even simple steps like submitting an assignment and performing reviews took a lot of debugging and bug fixing in various other files not related to our class DegreeOfRelevance. | |||

Lot of routes were missing from the routes.rb file, which we fixed. Here is one of the sample routing error we faced. | |||

[[File:12.png|frame|none|Routing error]] | |||

Encountered following error when trying to give permission to user to review other's work. | |||

[[File:16.png|frame|none|Error while trying to assign review permission]] | |||

There were many errors related to Aspell gem, which is used as a spell-checker for checking relevance between the submissions and reviews. | |||

[[File:aspell-error.png|frame|none|Aspell error]] | |||

= Conclusion = | |||

We were successful in refactoring the given class of Degree of Relevance by using the ideas of Template design pattern and adapting its implementation according to our needs. | |||

According to Code-Climate the grade for the previous class was 'F', while after refactoring the class the grade is 'C'. | |||

Also, we tested the functionality of the class using Cucumber, behavior driven approach. | |||

=References= | |||

<references /> | |||

Latest revision as of 04:13, 31 October 2013

Refactoring and testing — degree_of_relevance.rb

Introduction

Reviews are text-based feedback provided by a reviewer to the author of a submission. Reviews are used not only in education to assess student work, but also in e-commerce applications, to assess the quality of products on sites like Amazon, e-bay etc. Since reviews play a crucial role in providing feedback to people who make assessment decisions (e.g. deciding a student’s grade, choosing to purchase a product) it is important to find out how relevant reviews are to a submission <ref>http://repository.lib.ncsu.edu/ir/handle/1840.16/8813</ref>.

The class DegreeOfRelevance in Expertiza is used to calculate how relevant one piece of text is to another. It calculates relevance between the submitted work and reviews(or meta reviews) for an assignment. It is important to do this evaluation to ensure that if the review is not relevant to the submission, it is considered to be invalid and does not impact students' grade.

degree_of_relevance.rb presently contains long and complex methods and a lot of duplicated code. It has been assigned grade "F" by Code Climate, on metrics such as code complexity, duplication, lines of code per method etc. Since this file is important for the research on Expertiza, it should be re-factored to reduce complexity and duplication issues and introduce coding best practices. Our task for this project is to re-factor this class and test it thoroughly.

Theory of Relevance

Relevance between two texts can be defined as:

degree_of_relevance.rb file calculates relevance between submission and review graphs. The algorithm present in the file implements a variance of dependency graphs called word-order graph to capture both governance and ordering information crucial to obtaining more accurate results.

Word-order graph generation

In word-order graph vertices represent noun, verb, adjective or adverbial words or phrases in a text, and edges represent relationships between vertices. The first step to graph generation is dividing input text into segments on the basis of a predefined punctuation list. The text is then tagged with part-of-speech information which is used to determine how to group words into phrases while still maintaining the order. Stanford NLP POS tagger is used to generate the tagged text. The vertex and edges are created using the algorithm below. Graph edges that are subsequently created are then labeled with dependency(word-modifier) information.

WordNet is used to identify semantic relatedness between two terms. The comparison between reviews and submissions would involve checking for relatedness across verb, adjective or adverbial forms, checking for cases of normalizations (noun form of adjectives) etc.

Lexico-Semantic Graph-based Matching

Phrase or token matching

In phrase or token matching, vertices containing phrases or tokens are compared across graphs. This matching succeeds in capturing semantic relatedness between single or compound words.

Context matching

Context matching compares edges with same and different syntax, and edges of different types across two text graphs. The match is referred to as context matching since contiguous phrases, which capture additional context information, are chosen from a graph for comparison with those in another. Edge labels capture grammatical relations, and play an important role in matching.

r(e) and s(e) refer to review and submission edges. The formula calculates the average for each of the above three types of matches ordered, lexical and nominalized. Er and Es represent the sets of review and submission edges.

Sentence structure matching

Sentence structure matching compares double edges (two contiguous edges), which constitute a complete segment (e.g. subject–verb–object), across graphs. In this case, only single and double edges are considered, and not more contiguous edges (triple edges etc.), for text matching. The matching captures similarity across segments and it captures voice changes.

r(t) and s(t) refer to double edges, and Tr and Ts are the number of double edges in the review and submission texts respectively. matchord and matchvoice are the averages of the best ordered and voice change matches.

Existing Design

The current design has a method get_relevance which is a single entry point to degree_of_relevance.rb. It takes as input submission and review graphs in array form along with other important parameters. The algorithm is broken down into different parts each of which is handled by a different helper method. The results obtained from these methods are used in the following formula to obtain degree of relevance.

The implementation in Expertiza leaves room for applying separate weights to different types of matches instead of a fixed value of 0.33 in above formula.

For example, a possible set of values could be:

alpha = 0.55 beta = 0.35 gamma = 0.1

The rule generally followed is alpha > beta > gamma

Helper Methods

The helper methods used by get_relevance are:

compare_vertices

This method compares the vertices from across the two graphs(submission and revision) to identify matches and quantify various metrics. Every vertex is compared with every other vertex to obtain the comparison results.

compare_edges_non_syntax_diff

This is the method where SUBJECT-SUBJECT and VERB-VERB or VERB-VERB and OBJECT-OBJECT comparisons are done to identify matches and quantify various metrics. It compares edges of the same type ie SUBJECT-VERB edges are matched with SUBJECT-VERB matches.

compare_edges_syntax_diff

This method handles comparisons between edges of type SUBJECT-VERB with VERB-OBJECT. It also does comparison between SUBJECT-OBJECT and VERB_VERB edges. It compares edges of the same type.

compare_edges_diff_types

This method does comparison between edges of different types. Comparisons related to SUBJECT-VERB, VERB-SUBJECT, OBJECT-VERB, VERB-OBJECT are handled and relevant results returned.

compare_SVO_edges

This method does comparison between edges of type SUBJECT-VERB-OBJECT. The comparison done is between edges of same type.

compare_SVO_diff_syntax

This method also does comparison between edges of type SUBJECT-VERB-OBJECT. However, the comparison done is between edges of different types.

Program Flow

degree_of_relevance.rb is buried deep inside models and to bring control to this file, the tester needs to undertake some tasks. The diagram below gives a quick look into what needs to be done.

When a user tries to review an existing work, it calls new method in response_controller.rb which renders the page where user fills up the review. On submission, it calls create method of the same file, which then redirects to saving method in the same file again. saving makes a call to calculate_metareview_metrics in automated_metareview.rb which eventually calls get_relevance in degree_of_relevance.rb. The figure below shows the flow in the code.

Re-factoring

Design Changes

We have taken ideas from Template design pattern to improve the design of the class. Although we did not directly implement this design pattern on the class, the idea of defining a skeleton of an algorithm in one class, and deferring some steps to subclasses, allowing us to come up with a similar design which segregates different functionality to different classes. The following is a brief outline of the changes made:

- Divided the code into 4 classes to segregate the functionality, making a logical separation of code.

- These classes are:

- CompareGraphEdges

- CompareGraphSVOEdges

- CompareGraphVertices

- DegreeOfRelevance

- Extracted common code in methods that can be re-used.

The main class DegreeOfRelevance calculates the scaled relevance using the formula described above. It calculates the relevance based on comparisons done between submission and review graphs. We have grouped together similar functionality in one class. Following is a brief description of the classes.

- Class CompareGraphEdges:

This class compares edges from across two graphs. Related methods compare_edges_non_syntax_diff and compare_edges_syntax_diff are grouped together in this class. This ensures that this class is just responsible for making comparison between graph edges and return the appropriate metric to the main class DegreeOfRelevance.

- Class CompareGraphVertices:

This class is responsible for comparing every vertex of submission graph with every vertex of review graph. This class contains only one method compare_vertices. Its sole responsibility is comparing vertices and calculating the appropriate metrics required by the main class(DegreeOfRelevance).

- Class CompareGraphSVOEdges:

This class is responsible for making comparison between edges of type SUBJECT-VERB-OBJECT.Related methods - compare_SVO_edges and compare_SVO_diff_syntax are grouped together in this class.

The whole purpose of creating such a design is to make clear separation of logic in different classes. Although each of these classes contain helper methods to be used by the main method(get_relevance) to calculate relevance, yet this kind of design improves maintainability and readability of code for any programmer who is new to this code. The figure below illustrates the concept used in the design.

As shown in the figure above ,the main class degree_of_relevance.rb calls the helper methods in the classes to the right to get the appropriate metrics required for evaluating relevance. This implements delegation of functionality to helper classes making a clear segregation of role of each class.

Types of Re-factoring

The following are the three main types of refactoring we have done in our project.

- Move out common code in re-usable method:

The following is a sample of the duplicated code which was present in many methods. We moved it to a separate method and just called this method with appropriate parameters wherever required. This implements nil condition check on an edge of the graph and if the id of the vertex is not invalid(negative).

def edge_nil_cond_check(edge) return (!edge.nil? && edge.in_vertex.node_id != -1 && edge.out_vertex.node_id != -1) end

- Reduce number of lines of code per method

We ensured that each method should be short enough to fit in less than one screen length(no need to scroll).

- Miscellaneous

- Combined conditions together in one statement instead of nesting them inside one another.

- Took out code that can be re-used or logically separated in separate methods.

- Removed commented debug statements.

Code sample before Refactoring:

def compare_edges_diff_types(rev, subm, num_rev_edg, num_sub_edg)

# puts("*****Inside compareEdgesDiffTypes :: numRevEdg :: #{num_rev_edg} numSubEdg:: #{num_sub_edg}")

best_SV_VS_match = Array.new(num_rev_edg){Array.new}

cum_edge_match = 0.0

count = 0

max = 0.0

flag = 0

wnet = WordnetBasedSimilarity.new

for i in (0..num_rev_edg - 1)

if(!rev[i].nil? and rev[i].in_vertex.node_id != -1 and rev[i].out_vertex.node_id != -1)

#skipping edges with frequent words for vertices

if(wnet.is_frequent_word(rev[i].in_vertex.name) and wnet.is_frequent_word(rev[i].out_vertex.name))

next

end

#identifying best match for edges

for j in (0..num_sub_edg - 1)

if(!subm[j].nil? and subm[j].in_vertex.node_id != -1 and subm[j].out_vertex.node_id != -1)

#checking if the subm token is a frequent word

if(wnet.is_frequent_word(subm[j].in_vertex.name) and wnet.is_frequent_word(subm[j].out_vertex.name))

next

end

#for S-V with S-V or V-O with V-O

if(rev[i].in_vertex.type == subm[j].in_vertex.type and rev[i].out_vertex.type == subm[j].out_vertex.type)

#taking each match separately because one or more of the terms may be a frequent word, for which no @vertex_match exists!

sum = 0.0

cou = 0

if(!@vertex_match[rev[i].in_vertex.node_id][subm[j].out_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].in_vertex.node_id][subm[j].out_vertex.node_id]

cou +=1

end

if(!@vertex_match[rev[i].out_vertex.node_id][subm[j].in_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].out_vertex.node_id][subm[j].in_vertex.node_id]

cou +=1

end

if(cou > 0)

best_SV_VS_match[i][j] = sum.to_f/cou.to_f

else

best_SV_VS_match[i][j] = 0.0

end

#-- Vertex and SRL

best_SV_VS_match[i][j] = best_SV_VS_match[i][j]/ compare_labels(rev[i], subm[j])

flag = 1

if(best_SV_VS_match[i][j] > max)

max = best_SV_VS_match[i][j]

end

#for S-V with V-O or V-O with S-V

elsif(rev[i].in_vertex.type == subm[j].out_vertex.type and rev[i].out_vertex.type == subm[j].in_vertex.type)

#taking each match separately because one or more of the terms may be a frequent word, for which no @vertex_match exists!

sum = 0.0

cou = 0

if(!@vertex_match[rev[i].in_vertex.node_id][subm[j].in_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].in_vertex.node_id][subm[j].in_vertex.node_id]

cou +=1

end

if(!@vertex_match[rev[i].out_vertex.node_id][subm[j].out_vertex.node_id].nil?)

sum = sum + @vertex_match[rev[i].out_vertex.node_id][subm[j].out_vertex.node_id]

cou +=1

end

if(cou > 0)

best_SV_VS_match[i][j] = sum.to_f/cou.to_f

else

best_SV_VS_match[i][j] =0.0

end

flag = 1

if(best_SV_VS_match[i][j] > max)

max = best_SV_VS_match[i][j]

end

end

end #end of the if condition

end #end of the for loop for submission edges

if(flag != 0) #if the review edge had any submission edges with which it was matched, since not all S-V edges might have corresponding V-O edges to match with

# puts("**** Best match for:: #{rev[i].in_vertex.name} - #{rev[i].out_vertex.name} -- #{max}")

cum_edge_match = cum_edge_match + max

count+=1

max = 0.0 #re-initialize

flag = 0

end

end #end of if condition

end #end of for loop for review edges

Code sample after Refactoring :

def compare_edges_diff_types(vertex_match, rev, subm, num_rev_edg, num_sub_edg)

best_SV_VS_match = Array.new(num_rev_edg){Array.new}

cum_edge_match = max = 0.0

count = flag = 0

wnet = WordnetBasedSimilarity.new

for i in (0..num_rev_edg - 1)

if(edge_nil_cond_check(rev[i]) && !wnet_frequent_word_check(wnet, rev[i]))

#identifying best match for edges

for j in (0..num_sub_edg - 1)

if(edge_nil_cond_check(subm[j]) && !wnet_frequent_word_check(wnet, subm[j]))

#for S-V with S-V or V-O with V-O

if(edge_symm_vertex_type_check(rev[i],subm[j]) || edge_asymm_vertex_type_check(rev[i],subm[j]))

if(edge_symm_vertex_type_check(rev[i],subm[j]))

cou, sum = sum_cou_asymm_calculation(vertex_match, rev[i], subm[j])

else

cou, sum = sum_cou_symm_calculation(vertex_match, rev[i], subm[j])

end

max, best_SV_VS_match[i][j] = max_calculation(cou,sum, rev[i], subm[j], max)

flag = 1

end

end #end of the if condition

end #end of the for loop for submission edges

flag_cond_var_set(:cum_edge_match, :max, :count, :flag, binding)

end

end #end of 'for' loop for the review's edges

return calculate_avg_match(cum_edge_match, count)

end #end of the method

- Re-written some methods containing complicated logic.

Below is a sample code that we have re-written to make the logic more simple and maintainable.

Before Refactoring:

def compare_labels(edge1, edge2)

result = EQUAL

if(!edge1.label.nil? and !edge2.label .nil?)

if(edge1.label.downcase == edge2.label.downcase)

result = EQUAL #divide by 1

else

result = DISTINCT #divide by 2

end

elsif((!edge1.label.nil? and !edge2.label.nil?) or (edge1.label.nil? and !edge2.label.nil? )) #if only one of the labels was null

result = DISTINCT

elsif(edge1.label.nil? and edge2.label.nil?) #if both labels were null!

result = EQUAL

end

return result

end # end of method

After Refactoring:

def compare_labels(edge1, edge2)

if(((!edge1.label.nil? && !edge2.label.nil?) &&(edge1.label.downcase == edge2.label.downcase)) ||

(edge1.label.nil? and edge2.label.nil?))

result = EQUAL

else

result = DISTINCT

end

return result

end # end of method

We have achieved the following after refactoring:

- Reduced duplication significantly.

- Lines of code per method reduced from 75 to 25. This makes the code more readable and maintainable.

- Improved the design of classes by making logical separation of code into different classes.

- After complete re-factoring, the grade for the class DegreeOfRelevance changed from "F" to "C" according to codeclimate.

Testing

Ruby_on_Rails and Automated Testing go hand in hand. Rails ships in with a built-in test framework and if we want, we can replace the existing with Capybara, Rspec etc. Testing is pretty important in Rails—yet many people developing in Rails either don't test their projects at all, or at best only add a few test specs on model validations.

If it's worth building, it's worth testing. If it's not worth testing, why are you wasting your time working on it?<ref>http://www.agiledata.org/essays/tdd.html</ref>

There are several reasons for this. Perhaps working with Ruby or web frameworks is a novel enough concept; that adding extra work seems unimportant. Or maybe there is a perceived time constraint—spending time on writing tests takes time away from writing the features demanded by clients or bosses. Or maybe the habit of defining “test” as clicking links in the browser is too hard to break. Testing helps us find defects in our applications. But, it also ensures that the developers follow the release plan and the rule-set of necessary requirements, instead of just developing carelessly. Testing can be done in two approaches:

- Test-driven_development is a way of driving the design of code by writing a test which expresses what you intend the code to do, making that test pass, and continuously refactoring it to keep the design as simple as possible.

- Behavior-driven_development combines the general techniques and principles of TDD to provide software developers and business analysts to collaborate on productive software development.

We have used the BDD approach, in which the tests are described in a natural language, which makes them more accessible to people outside of development or testing teams. Start by writing a failing test (Red.) Implement the simplest solution that will cause the test to pass (Green.) Search for duplication and remove it (Refactor.) "RED-GREEN-REFACTOR" has become almost a mantra for many TDD practitioners.

Cucumber

Of the various tools available we have used Cucumber <ref>http://net.tutsplus.com/tutorials/ruby/ruby-for-newbies-testing-web-apps-with-capybara-and-cucumber</ref>for testing. The Given, When, Then syntax used by Cucumber is designed to be intuitive. Consider the syntax elements:

- Given provides context for the test scenario about to be executed, such as the point in your application that the test occurs as well as any prerequisite data.

- When specifies the set of actions that triggers the test, such as user or subsystem actions.

- Then specifies the expected result of the test.

As Cucumber doesn’t know how to interpret the features by itself, the next step is to create step definitions explaining it what to do when it comes across that step in one of the scenarios. The step definitions are written in Ruby. This gives much flexibility on how the test steps are executed

Steps for Testing

- First of all, we have to include the required gems for our tests in the Gemfile.

group :test do gem 'capybara' gem 'cucumber-rails', require: false end

- Then, write the actual tests in the feature file, deg_rel_set.feature located in the features folder.

The degree or relevance file that we are dealing with consists of six major functions.We have written tests for implementing all the six functions. The major feature is to test the degree of relevance by finding the comparison between the expected values and the actual values of the Submission and Review Graphs.

Feature: To check degree of Relevance In order to check the various methods of the class As an Administrator I want to check if the actual and expected values match.

@one Scenario: Check actual v/s expected values Given Instance of the class is created When I compare actual value and expected value of "compare_vertices" Then It will return true if both are equal.

Similarly, we have tested all the six major modules of degree of relevance like comparing edges, vertices and subject-verb objects.

- Cucumber feature files are written to be readable to non-programmers, so we have to implement step definitions for undefined steps, provided by Cucumber. Cucumber will load any files in the folder features/step_definitions for steps. We have created a step file, deg_relev_step.rb.

Given /^Instance of the class is created$/ do

@inst=DegreeOfRelevance.new

end

When /^I compare (\d+) and "(\S+)"$/ do |qty|

actual=qty

if(assert_equal(qty,@inst.compare_vertices(@vertex_match,@pos_tagger, @review_vertices, @subm_vertices, @num_rev_vert, @num_sub_vert, @speller) ))

step("It will return true")

else

step(“It will return false”)

end

end

Then /^It will return true$/ do

#print true or false

End

- The dummy model/data is created in the setup function defined in the class Deg_rel_setup present in lib/deg_rel_setup.rb. It generates a graph of submissions and reviews and then executes the 6 different methods.

class Deg_rel_setup def setup #set up nodes and graphs end end

- Cucumber provides a number of hooks <ref>https://github.com/cucumber/cucumber/wiki/Hooks </ref>which allow us to run blocks at various points in the Cucumber test cycle. we can put them in the support/env.rb file or any other file under the support directory, for example in a file called support/hooks.rb. There is no association between where the hook is defined and which scenario/step it is run for, but you can use tagged hooks, if we want more fine grained control.

All defined hooks are run whenever the relevant event occurs. Before hooks will be run before the first step of each scenario. They will run in the same order of which they are registered.

Before do # Do something before each scenario. end

Sometimes we may want a certain hook to run only for certain scenarios. This can be achieved by associating a Before, After,Around or AfterStep hook with one or more tags. We have used a tag named one, and hence the following code snippet will be executed everytime before the implementation of tag one.

Before ('@one') do

set= Deg_rel_setup.new

set.setup

end

- To run the test we simply need to execute,

rake db:migrate #To initialize the test database cucumber features/deg_rel.feature #This will execute the feature along with it's step definitons

In the end, though, the most important thing is that tests are reliable and understandable, even if they’re not quite as optimized as they could be, they are a great way to start an application. We should keep taking advantage of the fully automated test suites available and use tests to drive development and ferret out potential bugs in our application.

Issues

The original Expertiza code had lot of bugs and the application was throwing a lot of errors at each step. Even simple steps like submitting an assignment and performing reviews took a lot of debugging and bug fixing in various other files not related to our class DegreeOfRelevance. Lot of routes were missing from the routes.rb file, which we fixed. Here is one of the sample routing error we faced.

Encountered following error when trying to give permission to user to review other's work.

There were many errors related to Aspell gem, which is used as a spell-checker for checking relevance between the submissions and reviews.

Conclusion

We were successful in refactoring the given class of Degree of Relevance by using the ideas of Template design pattern and adapting its implementation according to our needs. According to Code-Climate the grade for the previous class was 'F', while after refactoring the class the grade is 'C'. Also, we tested the functionality of the class using Cucumber, behavior driven approach.

References

<references />