CSC/ECE 506 Spring 2013/8c da: Difference between revisions

Latest revision as of 02:25, 21 March 2013

Snoop Filters

Introduction

One of the issues in large systems where multiple processors share memory and each processor has its own private cache is the cache coherence problem: different caches may hold non-coherent values of a single data item. A protocol that ensures a coherent view of cached values as seen by multiple processors is referred to as a cache coherence protocol.

Snooping is the process where the individual caches monitor address lines for accesses to memory locations that they have cached. When a write operation is observed to a location that a cache has a copy of, the cache controller invalidates its own copy of the snooped memory location. Many cache coherence protocols require a hardware component to monitor the bus transactions.

Hardware support for implementing cache coherence protocols at each node in a bus-based multiprocessor system can be provided using a coherence controller. The coherence controller has a component called the snooper. The role of the snooper is to snoop each bus transaction involved in the cache coherence transaction. For each snooped bus transaction, the coherence controller checks the cache tag array to see if it has the block that is involved in the transaction, checks the current state of the block (if the block is found), and reacts accordingly by responding with data or by changing the state of the block.<ref>Fundamentals of Parallel Computer Architecture by Yan Solihin</ref>

In most well-optimized programs, much data is not shared among threads, so most snooped bus transactions do not find the block in the local cache. Even in that case, the snooper has snooped the bus transaction and checked the cache tag to determine whether the cache has the block, thus incurring unnecessary work. Contention can also occur between the processor and the snooper when both need to access the cache tag. One possible solution to reduce this contention is to introduce a snoop filter, which determines whether a snooper needs to check the cache tag or not. By reducing the number of snooped transactions that need to check the cache tags, contention and power consumption can be reduced.

Why Snoop Filtering?<ref>http://www.eecg.toronto.edu/~moshovos/filter/doku.php?id=start#snoop_protocol_challenges</ref>

Modern computers are built with multiple processing cores and a shared memory programming model, so cache coherence protocols are needed to maintain coherence between the caches attached to individual processing units. Many of these coherence protocols are snoop based.

Challenges faced by Snoop-based protocols

Snoop protocols face three main challenges:

1. Request ordering:

It is necessary to ensure the relative order of snoop broadcasts. This can be achieved in small multiprocessors by relying on network ordering properties. But larger multiprocessors with arbitrary network topologies cannot rely on the network to order requests.

2. Network bandwidth requirements:

Snoop-based protocols require a snoop broadcast on every cache miss. The snoops consume large amounts of network bandwidth, and this is the main factor that limits the scalability of snoop coherence protocols.

3. Tag look-up bandwidth requirements:

In addition to network bandwidth, each broadcast consumes significant tag look-up bandwidth as each cache checks to see if it has a copy of the requested block. As the number of cores in the system grows, the rate of snoop-induced tag look-ups can cause contention that delays demand look-ups from the local core and hurts performance. In addition to the challenge of simply providing sufficient network and tag look-up bandwidth, it is important to consider the energy consumed in the network and in the tag arrays as a result of snoop broadcasts.

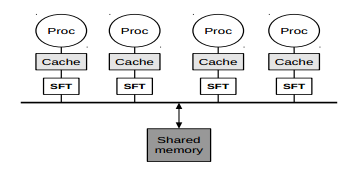

Snoop Filter as a solution

<ref>Experimental Analysis of Snoop Filters for MPSoC Embedded Systems By Aanjhan Ranganathan</ref>A snoop filter is a small cache-like structure present between the data cache and the bus as shown in figure, which filters probable data cache misses and forwards those snoop induced look-ups that might result in a hit. The snoop filters achieve filtering by either keeping track of a super-set of blocks currently present in the cache or those that are not present in the cache. The most important factor to be considered while designing these filters is that a look-up in any of these filters must consume less energy than a normal cache look-up. Several snoop filter architectures have been proposed in previous literature depending on the filtering methodology.

Each bus segment in a processing unit can be separated into different cache coherency domains using a snoop filter, with very little traffic occurring between the two. The snoop filter is most likely implemented as a large table that stores recent cache line requests, the state (MESI) of each cache line, and bits to indicate which segment the cache line is in (or both). When a cache miss occurs, the originating CPU broadcasts a snoop request on its bus. Both the snoop filter and the other CPU in the package will receive the request and take action appropriately. If the read request hits in the snoop filter, then it will check where the requested cache line is located. If the requested cache line is only available on the other bus segment, then the snoop request will be sent to the other segment. If the requested cache line is available on both buses or only on the originating CPU’s bus or only in main memory, then the snoop filter does not pass along the request, thereby saving front side bus bandwidth.
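The forwarding decision described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the table is modelled as a dictionary from a line address to the set of bus segments holding that line, the names `should_forward`, `table`, `origin` and `other` are hypothetical, and the behaviour on a filter miss (forward conservatively) is an assumption, since the text only describes the hit case.

```python
def should_forward(table, line_addr, origin, other):
    """Decide whether a snoop from segment `origin` must cross to segment `other`.

    `table` maps a line address to the set of segments that hold the line.
    An empty set means the line is only in main memory.
    """
    holders = table.get(line_addr)
    if holders is None:
        # Assumption: the filter has no entry, so it cannot rule anything out
        # and forwards the snoop to stay safe.
        return True
    # Forward only when the line lives exclusively on the other segment.
    return holders == {other}


# Hypothetical table contents for a two-segment system with segments "A" and "B".
table = {
    0x100: {"B"},        # only on the other segment: forward
    0x140: {"A", "B"},   # on both segments: do not forward
    0x180: {"A"},        # only on the originating segment: do not forward
    0x1C0: set(),        # only in main memory: do not forward
}
```

Only the first case consumes bandwidth on the other bus segment; the remaining three are the cases in which the filter saves front side bus bandwidth.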

Another way to implement the snoop filter is to duplicate the cache tag array and filter out all snoop requests that miss. But this is not practical and can result in serious performance bottlenecks. But, it has been shown that very accurate filtering can be achieved with small designs that conservatively approximate the cache contents and operate at a reasonable frequency.

Types of Snoop Filters

There are many different attributes that can be used to categorize these filters. We chose to use the point of origin as the first order attribute for classification. These filters can be grouped into three broad classes:

Destination Based Snoop Filters

Destination-based snoop filters aim to reduce the number of tag look-ups caused by snoop broadcasts. They do not reduce the number of snoop broadcasts themselves; instead, they optimize the actions taken in response to them. They achieve this by filtering the incoming snoop requests and thereby avoiding local tag look-ups, which reduces energy and tag bandwidth utilization.

On receiving a snoop request, a filter in this category may respond immediately without requiring a tag array look-up. This reduces both the energy consumption and the contention for the tag array. In large general-purpose multiprocessors, snoop-induced tag look-ups can easily outnumber demand look-ups from the local processor, leading to high contention and a loss of performance, so it is important to reduce that contention.

Atoofian and Baniasadi Filter<ref>E. Atoofian and A. Baniasadi, “Using Supplier Locality in Power-Aware Interconnects and Caches in Chip Multiprocessors”, Journal of Systems Architecture, vol. 54, no. 5, pp. 507-518, October 2007.</ref>

This filter needs very little area and energy overhead. It maintains a table of saturating counters at each cache, with one counter for each processor core in the system. When a snoop request for a read is received, the cache only performs a look-up if the counter corresponding to the requesting core is “saturated”. Otherwise, it replies with a negative acknowledgement. Another core may reply positively, or the requester may receive only negative acknowledgements. In the latter case, the requester tries again, and this time all caches are forced to do look-ups. A “saturating” counter is incremented whenever the cache supplies data in response to a snoop request from the corresponding core, and it is reset to zero when a snoop request from the corresponding core fails to find the data in the cache. This protocol works well for workloads that exhibit supplier locality, where it reduces energy and contention. However, if the workload does not exhibit supplier locality, it may suffer significant performance losses. An example of a workload with supplier locality is SPLASH-2.
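The counter behaviour described above can be illustrated with a minimal Python sketch. The class name `SupplierLocalityFilter`, the `saturation` threshold of 2, and the `forced` flag that models the requester's retry are all assumptions made for illustration; they are not taken from the cited paper.

```python
class SupplierLocalityFilter:
    """Per-cache table of saturating counters, one per requesting core."""

    def __init__(self, num_cores, saturation=2):
        self.saturation = saturation          # assumed threshold
        self.counters = [0] * num_cores

    def handle_read_snoop(self, requester, cache_has_block, forced=False):
        """Return "data" or "nack" for a read snoop from `requester`.

        `cache_has_block` stands in for the result a tag look-up would give.
        `forced` models the retry in which every cache must do a look-up.
        """
        saturated = self.counters[requester] >= self.saturation
        if not forced and not saturated:
            # No tag look-up is performed; the counter is left untouched.
            return "nack"
        if cache_has_block:
            # The cache supplied data: increment, but never past saturation.
            self.counters[requester] = min(self.counters[requester] + 1,
                                           self.saturation)
            return "data"
        # The look-up failed: reset the counter for this requester.
        self.counters[requester] = 0
        return "nack"
```

In this sketch a counter can only climb through forced look-ups, so a cache starts answering unforced snoops from a core only after it has repeatedly supplied data to that core.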

Inclusive Filters

Inclusive filters keep track of all lines that result in positive responses to snoop requests. In other words, an inclusive snoop filter keeps track of a super-set of the blocks that are cached. A miss in an inclusive filter is therefore guaranteed to miss in the cache, so there is no need to forward the request. A hit in an inclusive snoop filter, on the other hand, may or may not hit in the cache, so the request must be forwarded. The pivotal challenge here is to provide an area- and energy-efficient structure that can represent this set of blocks.

Subset Filters

Strauss et al. Filters<ref>Strauss, K., Shen, X., and Torrellas, J. 2006. Flexible Snooping: Adaptive Forwarding and Filtering of Snoops in Embedded-Ring Multiprocessors. SIGARCH Comput. Archit. News 34, 2 (May. 2006), 327-338</ref>

Strauss et al. filters focus on filtering snoops for read requests and track those blocks that are in a “supplier” state and hence could give a positive response to a snoop request. The “subset” filter keeps track of the subset of blocks in the cache that are in one of the “supplier” states, using a set-associative array that contains the tags of all supplier blocks in the cache. As a result, lines in the shared state return a negative reply to a read snoop, which at times might result in increased bandwidth utilization. Because the subset filter only tracks the lines needed to answer read snoop requests efficiently, it does not filter invalidate messages.

Superset Filters

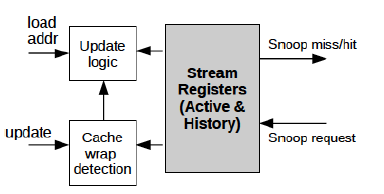

Stream Register Snoop Filters

This filter was introduced by IBM researchers and went on to be used in the Blue Gene/P supercomputer. It uses stream registers to encode the cache lines stored in the cache, as described below. Each stream register (SR) consists of a base register, a mask register, and a valid bit. The base register holds the starting address of a stream of lines, while the mask register encodes the lines that have been accessed as offsets from the base. Due to space constraints, the offsets are not represented explicitly. Rather, the mask represents a super-set of the offsets that have been accessed.
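One plausible way to realize a base/mask/valid register is sketched below in Python. The update rule (clear every mask bit in which a newly loaded address differs from the base) and the 32-bit address width are assumptions chosen to match the description of the mask as a super-set encoding; the class and method names are hypothetical.

```python
class StreamRegister:
    """Base, mask and valid bit encoding a super-set of cached line addresses."""

    def __init__(self, addr_bits=32):
        self.all_ones = (1 << addr_bits) - 1   # assumed address width
        self.base = 0
        self.mask = 0
        self.valid = False

    def record_load(self, line_addr):
        """Update the register when the cache loads `line_addr`."""
        if not self.valid:
            # First line: remember it exactly (every bit is significant).
            self.base = line_addr
            self.mask = self.all_ones
            self.valid = True
        else:
            # Clear the mask bits where the new address differs from the base,
            # so those bits become "don't care" in later comparisons.
            self.mask &= ~(self.base ^ line_addr) & self.all_ones

    def may_contain(self, line_addr):
        """True if the snoop must be forwarded; False if it can be filtered."""
        return self.valid and ((line_addr ^ self.base) & self.mask) == 0
```

After loading 0x1000, 0x1004 and 0x1008, the register also matches 0x100C, which was never loaded. Such false positives are harmless (the snoop is simply forwarded), whereas a false negative would break coherence.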

Counting Stream Register Snoop Filters

The disadvantage of the above filter is that over time, as more and more unique addresses are recorded by the filter, more and more of the bits in the mask are set to 0. Hence, the set of addresses that the stream register can filter shrinks over time. Gradually, all of the mask bits become zero, and the SR filters no further addresses. At this point, even if the lines that were accessed long ago have been evicted or invalidated, snoops to them are still not filtered by the snoop filter. This persists until a cache wrap occurs and the registers are flushed to start afresh. This flushing incurs significant overhead.

Counting Stream Register Snoop Filters overcome this by keeping a counter instead of the valid bit used in the original Stream Register based Snoop Filters. Each time a particular block offset address is accessed, in addition to setting the corresponding mask bits to 0, the filter increments the counter by 1. Every time a cache invalidation or eviction occurs, it decrements the counter by 1. With this logic, the overhead of flushing the registers on a cache wrap is not required. The filter simply checks the counter value and filters the request if the value is 0.
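The counting variant can be sketched as follows. As before, this is an illustrative Python model rather than the published design: the mask update rule, the 32-bit address width, and the choice to restart the base and mask when the counter returns to zero are assumptions.

```python
class CountingStreamRegister:
    """Stream register whose valid bit is replaced by a line counter."""

    def __init__(self, addr_bits=32):
        self.all_ones = (1 << addr_bits) - 1   # assumed address width
        self.base = 0
        self.mask = 0
        self.count = 0                         # number of lines currently covered

    def record_load(self, line_addr):
        if self.count == 0:
            # Register is empty: start a fresh stream at this address.
            self.base = line_addr
            self.mask = self.all_ones
        else:
            self.mask &= ~(self.base ^ line_addr) & self.all_ones
        self.count += 1

    def record_eviction(self):
        """Call on every eviction or invalidation of a covered line."""
        if self.count > 0:
            self.count -= 1

    def may_contain(self, line_addr):
        # A zero counter means nothing is cached, so every snoop is filtered.
        return self.count > 0 and ((line_addr ^ self.base) & self.mask) == 0
```

Once every covered line has been evicted, the counter reaches zero and the register filters all snoops again without waiting for a cache wrap.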

Exclusive Filters

In contrast to the inclusive filters mentioned above, an exclusive snoop filter maintains information about blocks that are not being cached. A hit here ensures that the cache does not contain the block, so there is no need to forward the request. However a miss must be forwarded to the cache for processing.

Exclude-Jetty Filters<ref>JETTY: Snoop Filtering for Reduced Energy Consumption in SMP Servers, Andreas Moshovos, Gokhan Memik, Babak Falsafi and Alok Choudhary, Proc. of HPCA-7 (Symposium on High Performance Computer Architecture), Jan. 2001.</ref>

This is an exclusive filter that maintains a set-associative table containing the most recently snooped addresses that returned negative responses. The technique benefits from temporal locality in snoop addresses, filtering out most unnecessary snoops for highly contended blocks. The Blue Gene/P system incorporates a variation of this, called “vector exclude Jetty”, as part of its snoop filter.
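A small Python model of such a table is given below as a sketch. The set count, associativity, modulo indexing and least-recently-used replacement are assumptions chosen for brevity, and the method names are hypothetical. The model also removes an entry when the local cache loads the block, since an exclusive filter must never claim that a cached block is absent.

```python
class ExcludeJetty:
    """Set-associative table of block addresses known NOT to be cached."""

    def __init__(self, num_sets=4, ways=2):
        self.num_sets = num_sets
        self.ways = ways
        # Each set is a list ordered from least to most recently used.
        self.sets = [[] for _ in range(num_sets)]

    def _set_for(self, block_addr):
        return self.sets[block_addr % self.num_sets]

    def filters(self, block_addr):
        """True if the snoop can be dropped without a tag look-up."""
        return block_addr in self._set_for(block_addr)

    def record_snoop_miss(self, block_addr):
        """Remember a snooped address whose tag look-up came back negative."""
        entries = self._set_for(block_addr)
        if block_addr in entries:
            entries.remove(block_addr)
        elif len(entries) >= self.ways:
            entries.pop(0)                     # evict the least recently used
        entries.append(block_addr)

    def record_local_fill(self, block_addr):
        """The cache loaded the block, so it must no longer be filtered."""
        entries = self._set_for(block_addr)
        if block_addr in entries:
            entries.remove(block_addr)
```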

Blue Gene/P Range Filter

The Blue Gene/P has a different kind of exclusive snoop filter called the “range filter”. This filter keeps track of a range of addresses that are either outside the range of the pertinent cache or not cached. Snoop requests for this range of addresses are therefore ignored. The range filter is software-controlled and performs well when multiple processors are known to be using completely distinct and contiguous portions of physical memory.

Source-based Snoop Filters

Destination-based snoop filters eliminate unnecessary tag look-ups, but the requests and their corresponding responses still consume bandwidth. If a requester can predict in advance either that no other cache has a copy of the requested block, or that only certain caches might have copies, then it can avoid sending a snoop broadcast altogether, or send a multicast instead of a full broadcast. This is the technique used by so-called source-based snoop filters. It reduces bandwidth utilization and allows snoop protocols to scale better to many cores.

Speculative Selective Requests-based Snoop Filters

Speculative selective requests (SSR) keeps a “saturating counter” and a supplier ID at each core to predict which cache to ask for a required block. The counter is incremented every time a block is provided by the same core as recorded in the supplier ID. When the counter reaches its saturating value, the cache controller sends subsequent requests directly to that supplier. Until then it uses the broadcast mechanism. For workloads that exhibit a large degree of supplier locality, such as SPLASH-2, data is often streamed from one cache to another, making SSR very effective. However, other workloads with less predictable behavior see little benefit from SSR as the counters seldom saturate, or potentially suffer performance losses if frequent mispredictions occur.
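The prediction logic can be sketched in Python as follows. The `saturation` threshold of 2 and the policy of restarting the count at 1 when a different core supplies the block are assumptions made for illustration; the class and method names are hypothetical.

```python
class SSRPredictor:
    """Per-core supplier predictor: one supplier ID plus a saturating counter."""

    def __init__(self, saturation=2):
        self.saturation = saturation   # assumed threshold
        self.supplier_id = None
        self.counter = 0

    def predict(self):
        """Return the core to query directly, or None to fall back to broadcast."""
        if self.counter >= self.saturation:
            return self.supplier_id
        return None

    def record_supplier(self, core_id):
        """Update the predictor after `core_id` supplied a block."""
        if core_id == self.supplier_id:
            self.counter = min(self.counter + 1, self.saturation)
        else:
            # Assumption: a new supplier replaces the old one and restarts the count.
            self.supplier_id = core_id
            self.counter = 1
```

With a threshold of 2, two consecutive blocks from the same core are enough to switch from broadcast to a directed request, and a single block from a different core switches back.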

Software-Assisted Filters

Various software-assisted source-based snoop filters have also been proposed that avoid broadcasting snoops for accesses where those snoops are known to fail. The first filter is based on the fact that data on the stack of each thread are private and not shared, so snoop requests for them need not be sent. Another proposed mechanism adds a “Snoop-Me-Not” bit to all instructions that access memory. Programmers, compilers and operating systems aid in setting the bits appropriately depending on the type of application. This technique requires minimal architectural support, but needs the programmer or compiler to understand the memory layout of the program, and possibly deal with issues.

Other Categories of Filters

Serial Snooping

Serial-snooping is a form of protocol-based filtering where snoop messages are exchanged between involved processors until a valid copy of the data is found. In this way, it reduces the number of messages exchanged. However this may not be the case always. The benefits of serial snooping also largely depend on the network topology in which it is being used. In some cases latency may be reduced while in other cases this may lead to unnecessary overheads.

In-Network Snoop Filtering

Consider small networks, each comprising a set of caches and interconnected by routers. The idea of In-Network Snoop Filtering is to modify the outgoing snoop broadcasts from a network according to predefined criteria. The information about which networks a request should be sent to is stored in a table at each outgoing router of a network. A RegionTracker cache is used for this purpose; it tracks which regions are present in each cache. In other words, each router uses a small table to track the sharing information for the most recently accessed regions. Hence a snoop broadcast is only sent to those regions that actually share the relevant block. The major setback in this approach is that in-network filtering apparently is not compatible with non-adaptive routing protocols.

Snoop Filtering in Virtualized Multi-Cores<ref>Virtual Snooping: Filtering Snoops in Virtualized Multi-cores Daehoon Kim, Hwanju Kim, Jaehyuk Huh (KAIST), 43rd International Symposium on Microarchitecture, Dec. 2010</ref>

In virtualized environments, sharing usually occurs only between threads and processes running in the same virtual machine. By virtue of this boundary, snoop requests can be limited to the cores running that virtual machine rather than being sent everywhere. However, such an approach requires the support of the operating system along with the hypervisor.

Example<ref>Valentina Salapura, Matthias A. Blumrich, Alan Gara: Design and implementation of the blue gene/P snoop filter. HPCA 2008</ref>

An example of a snoop filter implementation can be found in the IBM BlueGene supercomputer. The Blue Gene/P supercomputer is a scalable distributed-memory system consisting of up to 262,144 nodes. Each node is built around a single compute ASIC with 2 GB or 4 GB of external DDR2 DRAM. The compute ASIC is a highly integrated System-on-a-Chip (SoC) chip multiprocessor (CMP)<ref>Chinnakrishnan S. Ballapuram, Ahmad Sharif, and Hsien-Hsin S. Lee. “Exploiting Access Semantics and Program Behavior to Reduce Snoop Power in Chip Multiprocessors.” In Proceedings of the 13th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, pp.60-69, Seattle, WA, March, 2008.</ref>. It contains four PowerPC 450 embedded processor cores, each with private, highly-associative, 32 KB first-level instruction and data caches. Each core is coupled to a dual-pipeline SIMD floating-point unit and to a small, private, second-level cache whose principal responsibility is to prefetch streams of data. In addition, the chip integrates an 8 MB, shared third-level cache, two memory controllers, five network controllers, and a performance monitor.

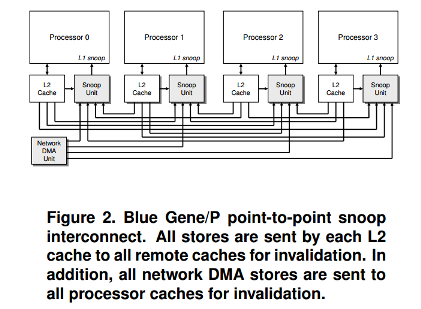

Data integrity between the BlueGene/P cores is maintained with a cache coherence protocol based on write-invalidates, with all L1 caches operating in write-through mode. Every store not only updates the L1 cache of the issuing core, but also sends the write data via the L2 write buffer to the shared L3 cache. The L2s broadcast an invalidate request for the write address to ensure that no stale copy of the same datum remains in the other L1s and L2s. A snoop filter is placed at each of the four processors, outside the L1 caches.

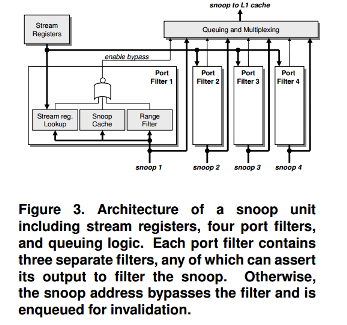

Each snoop filter receives invalidation requests from three remote cores and the network DMA by way of a point-to-point interconnect, so it must process requests from four memory writers concurrently. To handle these simultaneous requests, we implement a separate snoop filter block, or port filter, for each interconnect port. Thus, coherency requests on all ports are processed concurrently, and only a small fraction of all requests are forwarded to the processor. As shown in figure 2 and figure 3, there are four separate port filters, each of which handles requests from one remote processor.

The snoop cache is essentially a Vector-Exclusive-JETTY<ref>http://cucis.eecs.northwestern.edu/publications/pdf/MosMem01A.pdf</ref> that records blocks that have been snooped recently (and thus invalidated in the cache). It consists of a small, direct-mapped array, where an entry is created for each snoop request. A subsequent snoop request for the same block will match in the snoop cache and be filtered. If the block is loaded in the processor's L1 cache, the corresponding entry is removed from the snoop cache, and any new snoop request to the same block will miss in the snoop cache and be forwarded to the L1 cache.

Unlike the snoop cache that keeps track of what is not in the cache, the stream register filter keeps track of what is in the cache. More precisely, the stream registers keep track of the lines that are in the cache, but may assume that some lines are cached which are not actually there. The heart of the filter is the stream registers themselves, of which there are a small number. One of these registers is updated with the line address every time the cache loads a new line. A particular register is chosen for update based upon the current stream register state and the address of the new line being loaded into the cache.

Conclusion

With the advent of commodity multi-core processors, we have entered the era of the SMP-on-a-chip. These high-performance systems will generate an enormous amount of shared memory traffic, so it will be important to eliminate as much of the useless inter-processor snooping as possible. In addition, power dissipation has become a major factor with increased chip density, so mechanisms to eliminate useless coherence actions will be important.

In this wiki page we have discussed how snoop filters address the above-mentioned issues. We have also discussed the architectures of different types of snoop filters and how snoop filters are implemented in complex multi-core processors such as the IBM BlueGene supercomputer. In conclusion, snoop filters can be used as an effective solution to some of the challenges faced by snoop-based protocols, such as large bandwidth requirements and request ordering.

References

<references />

Quiz

1. Which of the following is a destination-based snoop filtering protocol? a. Stream Register based Snoop Protocol b. Speculative Selective Requests c. Software-Assisted Filters d. Serial Snooping Protocol

2. Which one of the following is a source-based snoop filtering protocol? a. Stream Register based Snoop Protocol b. Software-Assisted Filters c. Serial Snooping Protocol d. In-Network Snoop Filtering

3. Which one of the following protocols does not belong to either Destination-based or Source-based protocols? a. Stream Register based Snoop Protocol b. Speculative Selective Requests c. Software-Assisted Filters d. Serial Snooping Protocol

4. Which one of the following snoop filtering protocols reduces the number of snoop broadcasts? a. Stream Register based Snoop Protocol b. Speculative Selective Requests c. Serial Snooping Protocol d. In-Network Snoop Filtering

5. Which one of the following falls in the category of exclusive snoop filters? a. Stream Register based Snoop Protocol b. Software-Assisted Filters c. Serial Snooping Protocol d. Blue Gene/P Range Filter

6. Which of the following is/are challenges faced by snoop-based protocols? a. Request ordering b. High network/bus bandwidth requirement. c. High tag look-up bandwidth requirements d. All of the above

7. What is snooping? a. The process where the individual caches monitor address lines for accesses to memory locations that they have cached. b. The process of monitoring all bus transactions. c. The process of monitoring memory locations to see if write happens to these memory locations. d. All of the above.

8. Which one of the following is/are use(s) of snoop filters? a. To reduce bus bandwidth requirements. b. To reduce power consumption. c. To reduce contention between processor and snooper to access cache tag. d. All of the above.

9. What kind of cache is used in the snoop filter implemented in BlueGene supercomputer? a. Speculative Selective Requests-based b. Counting Stream Register based c. Stream Register based d. Vector-Exclusive-JETTY based

10. What keeps track of what is there in the cache in BlueGene supercomputer? a. Speculative Selective Requests-based snoop filter b. Counting Stream Register snoop filter c. Stream Register Snoop filter d. Vector-Exclusive-JETTY snoop filter

Answers: 1-a, 2-b, 3-d, 4-b, 5-d, 6-d, 7-a, 8-d, 9-d, 10-c