CSC/ECE 506 Spring 2013/7a bs: Difference between revisions


Previous Page: http://wiki.expertiza.ncsu.edu/index.php/CSC_456_Spring_2012/ch7_AA

Current Page: http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2013/7a_bs

===Introduction===

[[Image:sharedmem.jpg|thumbnail|right|550px|Shared Memory system with dedicated Cache for each processor<sup><span id="3body"></span></sup>]]

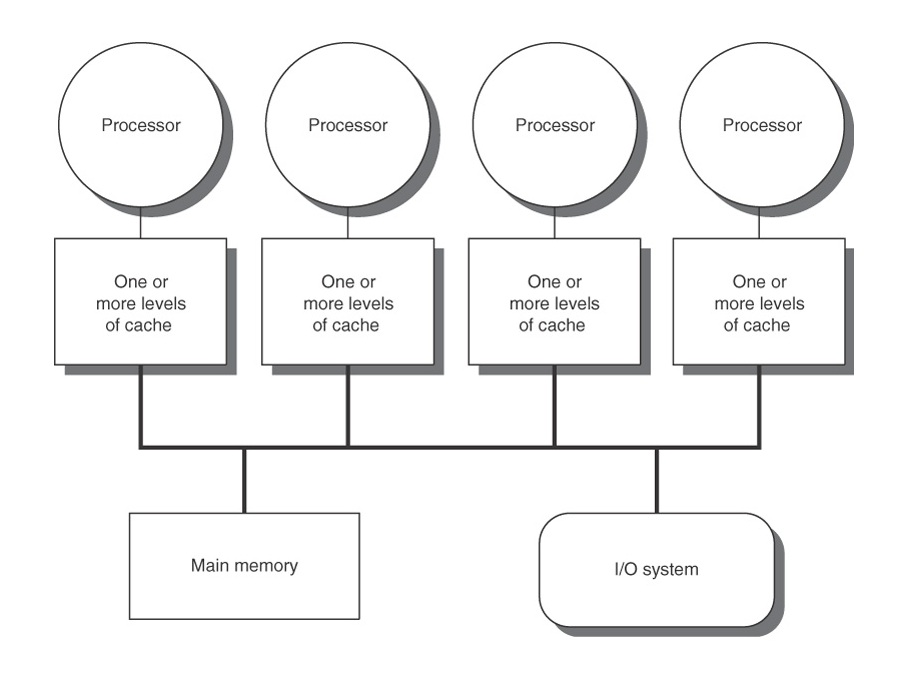

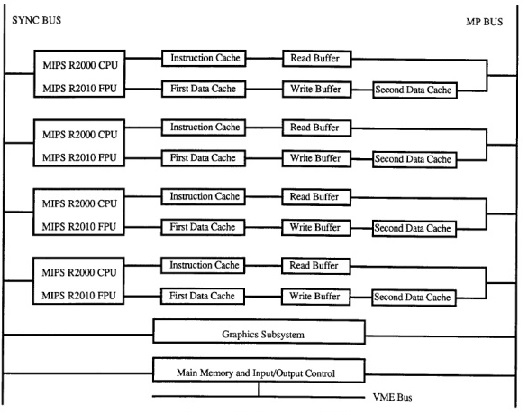

A cache is considered '''coherent''' if its read operations always return the most recently written value at the same address. In a system with a single processor (single core), maintaining cache coherence is straightforward, but in a [http://en.wikipedia.org/wiki/Multiprocessor multiprocessor] system it is not. Data can be present in any processor's cache, and a [http://en.wikipedia.org/wiki/Protocol_(object-oriented_programming) protocol] needs to ensure that the data is the same in every cache. If it cannot ensure that all the caches hold the same data, then it needs to flag a cache line to indicate that it is out of date.

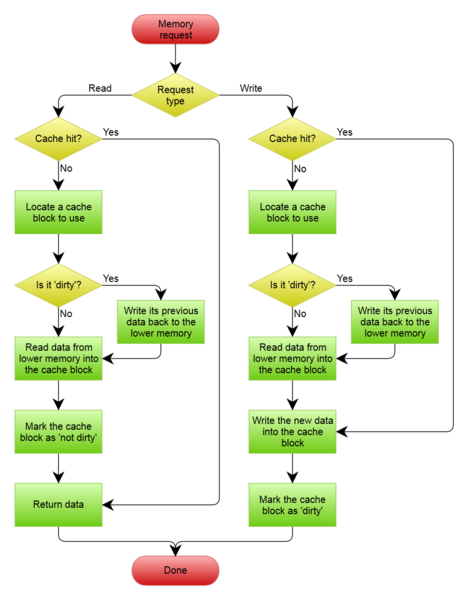

In the figure shown here, there is a 4-processor shared memory system where each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. Then processor P2 also reads M1 and stores it in its own local cache. Now, if P1 changes the value of M1, there will be two copies of the same data residing in different caches, and only the one in P1's cache will hold the new value. The problem arises when P2 operates on M1 and uses the stale value of M1 that is stored in its cache.
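The stale-read scenario can be reproduced with a small model. The following is a minimal sketch, assuming a hypothetical <code>PrivateCache</code> class with no coherence mechanism at all; the class name, the dictionary-based memory, and the values 10 and 99 are illustrative choices, not part of the original example.

```python
class PrivateCache:
    """A per-processor cache with NO coherence support (illustrative only)."""

    def __init__(self, memory):
        self.memory = memory   # shared main memory, modelled as a dict
        self.lines = {}        # locally cached copies: address -> value

    def read(self, addr):
        if addr not in self.lines:               # miss: fetch from main memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]                  # hit: return the local copy

    def write(self, addr, value):
        self.lines[addr] = value                 # only the local copy changes


memory = {"M1": 10}
p1 = PrivateCache(memory)
p2 = PrivateCache(memory)

p1.read("M1")             # P1 caches M1
p2.read("M1")             # P2 caches M1
p1.write("M1", 99)        # P1 changes M1 in its own cache

stale = p2.read("M1")     # P2 still sees its old copy
fresh = p1.read("M1")     # P1 sees the value it wrote
```

Nothing in this model tells P2 that its copy is out of date, which is exactly the gap a coherence protocol has to close.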

===Cache Coherence===

One may think that a cache write policy can provide cache coherence, but this is not true. A cache write policy only controls how a change to a value in a cache is propagated to a lower-level cache or to main memory. It is not responsible for propagating changes to other caches.

To understand what this means, we first need to understand what cache coherence is and compare it with the purpose of a cache write policy.

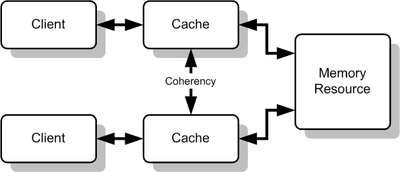

[http://en.wikipedia.org/wiki/Cache_coherency '''Cache coherence'''] refers to the consistency of data stored in local caches of a shared resource.

[[Image:Cache_Coherency_Generic.png|400px|center|Cache Coherence]]

In a shared memory multiprocessor system, each processor may have a separate cache. It is therefore possible to have multiple copies of the same operand: one copy in main memory and one in each cache. When one copy of an operand is changed, the other copies of the operand must be changed as well. Thus, cache coherence is the discipline that ensures that changes in the values of shared operands are propagated throughout the system in a timely fashion. Examples of cache coherence protocols include MSI, MESI, and MOESI.

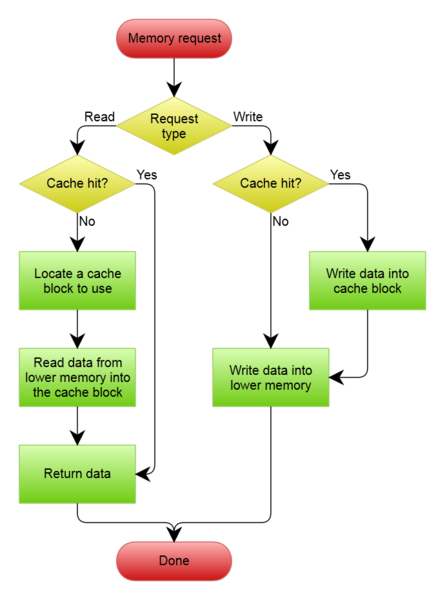

A [http://en.wikipedia.org/wiki/Cache_(computing) '''Cache write policy'''], on the other hand, determines how and when the cache propagates writes to the lower-level caches or to main memory.

There are two basic writing policies<ref>http://en.wikipedia.org/wiki/Cache_(computing)</ref>:

• '''Write-through''': the write is done synchronously, both to the cache and to the backing store, i.e. every time the processor updates a cached memory location, both the cache and the underlying memory location are updated.

• '''Write-back (or Write-behind or copy back)''': writing is initially done only to the cache. The write to the backing store is postponed until the cache blocks containing the data are about to be modified/replaced by new content<ref>http://www.pcguide.com/ref/mbsys/cache/funcWrite-c.html</ref>.

[[Image:Write_back_with_write_allocation.png|550px|Writeback with write allocation<sup><span id="3body"></span></sup>]]

[[Image:Writethrough.png|550px|Write Through Policy<sup><span id="3body"></span></sup>]]

Hence, we see that it is not the responsibility of the cache write policy to propagate changes to other caches, but only to the lower-level memory. Propagating changes to other caches is the responsibility of the coherence protocol.
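The difference between the two write policies can be sketched in a few lines. This is a simplified model under stated assumptions: the class names <code>WriteThroughCache</code> and <code>WriteBackCache</code>, the <code>evict</code> method, and the dictionary-based memory are hypothetical, and write allocation and block granularity are ignored.

```python
class WriteThroughCache:
    """Every write goes to the cache and to main memory at the same time."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}

    def write(self, addr, value):
        self.lines[addr] = value
        self.memory[addr] = value        # synchronous update of the backing store


class WriteBackCache:
    """Writes stay in the cache until the line is evicted."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        self.dirty = set()               # addresses modified since they were loaded

    def write(self, addr, value):
        self.lines[addr] = value
        self.dirty.add(addr)             # memory is NOT updated yet

    def evict(self, addr):
        if addr in self.dirty:           # write the modified line back on eviction
            self.memory[addr] = self.lines[addr]
            self.dirty.discard(addr)
        self.lines.pop(addr, None)


wt_memory = {"x": 0}
wt = WriteThroughCache(wt_memory)
wt.write("x", 1)                         # wt_memory["x"] is updated immediately

wb_memory = {"x": 0}
wb = WriteBackCache(wb_memory)
wb.write("x", 1)
before_evict = wb_memory["x"]            # memory still holds the old value
wb.evict("x")
after_evict = wb_memory["x"]             # memory updated only on eviction
```

In both classes the write reaches, at most, main memory. Neither policy touches the copies held by other caches, which is the point made above.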

Cache coherence<ref>http://en.wikipedia.org/wiki/Cache_coherency</ref> solutions are mainly classified as '''software-based''' or '''hardware-based''' solutions. Software-based solutions can be implemented using [http://en.wikipedia.org/wiki/Compiler compiler]-based or run-time system support, and some of them can also be done with hardware assistance. Hardware-based solutions can be implemented in many different ways. For example, each cache controller can listen (snoop) for traffic on the bus that would invalidate one of its cache lines. Alternatively, the solution could use directories that track which caches hold a copy of a particular cache block. Another variation of implementation is write-through versus write-back; the former writes to main memory whenever a cache is written to, while the latter waits to update main memory until the cache block is evicted.

The main concern in the case of software-based solutions is that perfect information is needed at all times when memory aliasing and explicit parallelism are required. So, the focus is more on improving hardware-based solutions, making them more common. Studies have shown that snoop-based cache coherence schemes are more sensitive to the write policy than to the specific coherence protocol. Write-back schemes are more efficient despite the increased hardware complexity involved in cache coherence support.

Hardware-based cache-coherence protocols, though more competitive in terms of performance with respect to basic architectures with no hardware support, incur significant power cost as coherence traffic grows. Thus, as power constraints become tighter and the degree of multiprocessing increases, the viability of hardware-based solutions becomes doubtful.

===Protocols===

Whenever a processor changes a word in its cache, all other caches holding the value must be notified. In order to keep their own values consistent with the freshly written cache, the other caches can be either '''updated''' or '''[http://en.wikipedia.org/wiki/Cache_invalidation invalidated]'''. In the update method, if variable 'x' is modified by core 1, core 1 has to send the updated value of 'x' onto the inter-core bus. Each cache listens to the inter-core bus, and if a cache sees a variable on the bus of which it has a copy, it reads the updated value. This ensures that all caches have the most up-to-date value of the variable.

In the case of invalidation, an invalidation message is sent onto the inter-core bus when a variable is changed. The other caches read this invalidation signal and mark their copies invalid; if one of their cores then attempts to access that variable, the access results in a cache miss and the variable is read from main memory.


The update method results in a significant amount of traffic on the inter-core bus, as the update signal is sent onto the bus every time the variable is updated. The invalidation method only requires that an invalidation signal be sent the first time a variable is altered; this is why the invalidation method is the preferred method.
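The traffic difference can be illustrated by counting bus messages for a short access trace. This is a rough sketch, assuming a single shared variable, one bus message per miss, and one bus message per update or invalidation broadcast; the function name <code>count_bus_messages</code> and the trace format are hypothetical.

```python
def count_bus_messages(trace, policy):
    """Count bus messages for one shared variable.

    trace  -- list of (core, op) pairs, where op is "r" (read) or "w" (write)
    policy -- "update" or "invalidate"
    """
    if policy not in ("update", "invalidate"):
        raise ValueError("policy must be 'update' or 'invalidate'")

    holders = set()      # cores that currently hold a valid copy
    messages = 0
    for core, op in trace:
        if core not in holders:          # miss: the line is fetched over the bus
            messages += 1
            holders.add(core)
        if op == "w" and len(holders) > 1:
            messages += 1                # broadcast an update or an invalidation
            if policy == "invalidate":
                holders = {core}         # all other copies become invalid
    return messages


# Two cores read the variable, then core 0 writes it three times in a row.
trace = [(0, "r"), (1, "r"), (0, "w"), (0, "w"), (0, "w")]
update_traffic = count_bus_messages(trace, "update")          # one message per write
invalidate_traffic = count_bus_messages(trace, "invalidate")  # one message for the first write only
```

For this trace the update policy sends a message on each of the three writes, while the invalidate policy sends one on the first write only. A trace in which the other core re-reads the variable after every write would narrow the gap, since each re-read is then a miss under invalidation.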

====Invalidate Coherence Protocols====

* '''MSI'''

MSI stands for Modified, Shared, and Invalid, which is based on the three states that a line of cache can be in. The Modified state means that a variable in the cache has been modified and therefore has a different value than that found in main memory; the cache is responsible for writing the variable back to main memory. The Shared state means that the variable exists in at least one cache and is not modified; the cache can evict the variable without writing it back to the main memory. The Invalid state means that the value of the variable has been modified by another cache and is invalid; the cache must read a new value from main memory (or another cache).

[[File:MSI.jpg|thumb|center|MSI State Diagram|500px]]
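The three MSI states can be written down as a transition table. The sketch below assumes the usual textbook event names (<code>PrRd</code>/<code>PrWr</code> for processor reads and writes, <code>BusRd</code>/<code>BusRdX</code> for snooped bus requests, <code>Flush</code> for supplying a modified line); these names do not appear in the text above and the table is a simplified model, not a full specification of the diagram.

```python
# (current state, event) -> (next state, bus action generated by this cache)
MSI = {
    ("I", "PrRd"):   ("S", "BusRd"),    # read miss: fetch a shared copy
    ("I", "PrWr"):   ("M", "BusRdX"),   # write miss: fetch an exclusive copy
    ("S", "PrRd"):   ("S", None),       # read hit
    ("S", "PrWr"):   ("M", "BusRdX"),   # write to shared line: invalidate others
    ("M", "PrRd"):   ("M", None),       # read hit
    ("M", "PrWr"):   ("M", None),       # write hit, no bus traffic
    ("I", "BusRd"):  ("I", None),       # nothing cached, nothing to do
    ("I", "BusRdX"): ("I", None),
    ("S", "BusRd"):  ("S", None),       # another cache reads: stay shared
    ("S", "BusRdX"): ("I", None),       # another cache writes: invalidate
    ("M", "BusRd"):  ("S", "Flush"),    # supply the modified data, keep a shared copy
    ("M", "BusRdX"): ("I", "Flush"),    # supply the modified data, then invalidate
}


def step(state, event):
    """Return (next_state, bus_action) for one cache line."""
    return MSI[(state, event)]


def run(events, state="I"):
    """Apply a sequence of events to a line and return its final state."""
    for event in events:
        state, _ = step(state, event)
    return state


final_state = run(["PrRd", "PrWr", "BusRdX"])   # I -> S -> M -> I
```

Reading the table row by row shows why only the first write to a shared line needs bus traffic: once the line is in M, further <code>PrWr</code> events stay local.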

*'''MESI'''

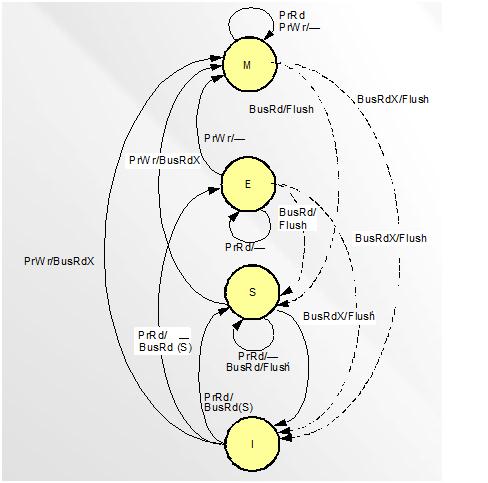

MESI stands for Modified, Exclusive, Shared, and Invalid. The Modified and Invalid states are the same for this protocol as they are for the MSI protocol. This protocol introduces a new state: the Exclusive state. The Exclusive state means that the variable is only in this cache and its value matches the value within the main memory. This now means that the Shared state indicates that the variable is contained in more than one cache.

[[File:MESI.jpg|thumb|center|MESI State Diagram|500px]]

* '''MOSI'''

The MOSI protocol is identical to the MSI protocol except that it adds an Owned state. The Owned state means that the processor "owns" the variable and will provide the current value to other caches when requested (or at least it will decide whether to provide it when asked). This is useful because another cache does not have to read the value from main memory and can receive it from the owning cache much faster.

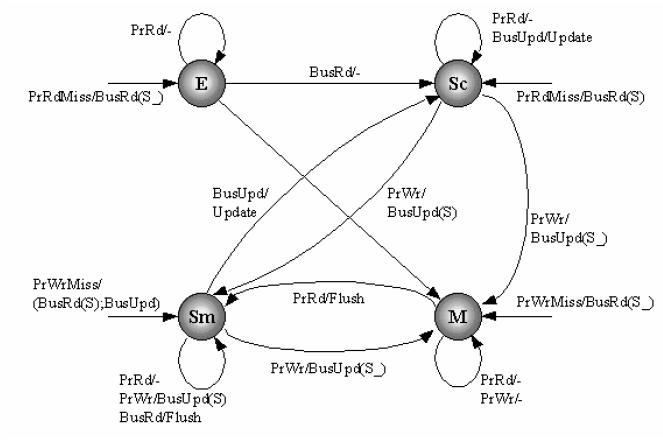

* '''MOESI'''<ref>http://en.wikipedia.org/wiki/MOESI_protocol</ref>

MOESI is a full cache [http://en.wikipedia.org/wiki/Cache_coherence coherency] protocol that encompasses all of the possible states commonly used in other protocols. In addition to the four common [http://en.wikipedia.org/wiki/MESI_protocol MESI] protocol states, there is a fifth "Owned" state representing data that is both modified and shared. This avoids the need to write modified data back to main memory before sharing it. While the data must still be written back eventually, the write-back may be deferred.

For this to work, direct cache-to-cache transfers of data must be supported, so that a cache with the data in the modified state can supply that data to another reader without transferring it to memory.

[[File:MOESI.jpg|thumb|center|MOESI State Diagram|500px]]

* '''MESIF'''<ref>http://en.wikipedia.org/wiki/MESIF_protocol</ref>

The MESIF protocol is a cache coherency and memory coherence protocol developed by Intel for cache coherent [http://en.wikipedia.org/wiki/Non-Uniform_Memory_Access non-uniform memory] architectures. The protocol consists of five states: Modified (M), Exclusive (E), Shared (S), Invalid (I) and Forward (F).

The 'M', 'E', 'S' and 'I' states are the same as in the MESI protocol. The 'F' state is a specialized form of the 'S' state, and indicates that a cache should act as a designated responder for any requests for the given line. The protocol ensures that, if any cache holds a line in the S state, at most one (other) cache holds it in the F state.

In a system of caches employing the MESI protocol, a cache line request that is received by multiple caches holding a line in the S state will be serviced inefficiently. It may either be satisfied from (slow) main memory, or all the sharing caches could respond, bombarding the requestor with redundant responses. In a system of caches employing the MESIF protocol, a cache line request will be responded to only by the cache holding the line in the F state. This allows the requestor to receive a copy at cache-to-cache speeds, while allowing the use of as few [http://en.wikipedia.org/wiki/Multicast multicast] packets as the network topology will allow.

====Update Coherence Protocols====

Update-based cache coherence protocols directly update all the cached copies in the system. This differs from invalidation-based protocols in that write propagation is achieved without having to invalidate. Hence this reduces coherence misses and also bandwidth usage. The two update-based protocols discussed below are the Dragon Protocol and the Firefly Protocol.

*'''Dragon Protocol'''

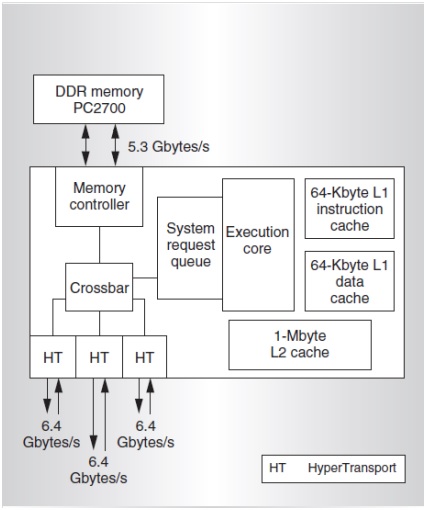

The [http://en.wikipedia.org/wiki/Dragon_protocol Dragon Protocol] is an update-based coherence protocol. It reduces [http://en.wikipedia.org/wiki/Bandwidth_(computing) bandwidth] usage because it updates only the specific words written instead of the entire block within the cache. The caches use [http://en.wikipedia.org/wiki/Cache_(computing)#Writing_policies write allocate] and write update policies. The Dragon Protocol consists of four states (Modified (M), Exclusive (E), Shared Clean (Sc), and Shared Modified (Sm)), and there is no invalid state, because every block present in the cache is valid.

[[File:Dragon.jpg|thumb|center|Dragon State Diagram|700px]]

*'''Firefly protocol'''

This is another example of an update-based cache coherence protocol. Unlike the Dragon Protocol, the caches use a [http://en.wikipedia.org/wiki/Cache_(computing)#Writing_policies write-through policy] and hence write all changes to memory. This protocol uses a special bus technique called SharedLine to allow quick detection of copies of the block in other caches. It is similar to COPIES_EXIST (C) and !COPIES_EXIST. As in the Dragon protocol, there is no invalid state in the Firefly protocol, because cache blocks are never invalidated.

====Hybrid Protocols<ref>http://wiki.expertiza.ncsu.edu/index.php/User:Stchen#Introduction_to_Update_and_Adaptive_Coherence_Protocols_on_Real_Architectures</ref>====

Even though there are clear advantages to using either update protocols or invalidate protocols, each also has disadvantages. In a write-invalidate protocol, any update/write operation in a processor invalidates the shared cache blocks of other processors. This forces the other caches to issue read requests to retrieve the new data. This tends to consume a lot of bus bandwidth and can be especially bad if a few processors frequently update the cache. Write-update protocols mitigate this issue by updating all other caches at the same time as the write is propagated. Unfortunately, this creates a different problem: there will sometimes be unnecessary updates to the caches of other processors. This tends to increase conflict and capacity cache misses.

Hybrid protocols help to mitigate these problems by reducing some of the bus traffic as well as some of the unnecessary updates. How well this works depends on how the adaptive algorithm switches between write-invalidate and write-update.

*'''Competitive update cache coherence protocol'''<ref>H. Nilsson, P. Stenström. "An adaptive update-based cache coherence protocol for reduction of miss rate and traffic." Proc. Parallel Architectures and Languages Europe (PARLE) Conf., Lecture Notes in Computer Science, Athens, Greece, 817, Springer-Verlag, Berlin (Jul. 1994)</ref>

This protocol is a hybrid between write-invalidate and write-update protocols, and for a wide range of applications it outperforms write-invalidate protocols under relaxed memory consistency models because of a lower miss rate. In this protocol, a copy of the block is updated instead of being invalidated at the first write by another processor. If the local processor does not access the copy, it is invalidated after a number of global updates determined by a competitive threshold. In competitive-update protocols, coherence is maintained by update messages rather than invalidation messages. Upon a write request, update messages are sent to all caches sharing the same memory block. In contrast to write-update protocols, a competitive threshold, C, is used to locally invalidate copies that are not accessed by the local processor between a number of updates; i.e., when a copy has been updated C times without a local access, it is invalidated and the update messages to it cease. Thus, the network traffic is reduced compared to a write-update protocol, since only those copies that are regularly accessed are updated.
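The role of the competitive threshold C can be sketched with a per-copy counter. This is a minimal model of a single cached copy, assuming that a local access resets the counter and that a local access to an invalidated copy simply refetches it; the class name <code>CompetitiveCopy</code> and its methods are hypothetical and are not taken from the cited paper.

```python
class CompetitiveCopy:
    """One cached copy of a block under a competitive-update policy (sketch)."""

    def __init__(self, threshold):
        self.threshold = threshold        # the competitive threshold C
        self.valid = True
        self.updates_since_access = 0     # remote updates since the last local access

    def remote_update(self):
        """Another processor wrote the block. Returns True if this copy took the update."""
        if not self.valid:
            return False                  # invalid copies no longer receive updates
        self.updates_since_access += 1
        if self.updates_since_access >= self.threshold:
            self.valid = False            # not used locally: invalidate the copy
        return True

    def local_access(self):
        """The local processor reads the block. Returns True on a hit."""
        hit = self.valid
        self.valid = True                 # on a miss the block is fetched again
        self.updates_since_access = 0     # the copy has proven useful: reset the counter
        return hit


copy = CompetitiveCopy(threshold=3)
copy.remote_update()
copy.remote_update()
hit_after_two = copy.local_access()       # still valid, counter reset

for _ in range(3):
    copy.remote_update()                  # three updates with no local access
valid_after_three = copy.valid            # the copy has been invalidated
took_update = copy.remote_update()        # further updates are not delivered
```

A copy that is read regularly behaves as under write-update, while a copy that is never read degrades to write-invalidate after C updates.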

*'''Cachet'''<ref>http://csg.csail.mit.edu/pubs/memos/Memo-414/memo-414.pdf</ref>

Cachet provides wide scope for adapting to changing program behaviors. It is especially suitable for large [http://en.wikipedia.org/wiki/Distributed_shared_memory Distributed Shared Memory] (DSM) systems, and applicable to a wide variety of programmer-centric memory models. Cachet allows write operations to be performed without exclusive ownership. This not only alleviates potential cache thrashing due to [http://en.wikipedia.org/wiki/False_sharing false sharing], but also reduces the average [http://en.wikipedia.org/wiki/Latency_(engineering) latency] of write operations.

==Memory Consistency==

''Memory consistency'' deals with the ordering of all memory operations - loads and stores - to different memory locations. It can also be present in systems without caches. The code below from Solihin<ref>Yan Solihin. "Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems." Solihin Publishing & Consulting LLC, 2009.</ref> shows an example of possible inconsistency:

<pre> | <pre> | ||


=== Memory Semantics in Uniprocessor Systems ===

Uniprocessor languages use simple sequential semantics for memory operations, which allow the programmer to assume that all memory operations will occur one at a time in the sequential order specified by the program. Thus, the programmer can expect a read to return the value of the most recent write to the location according to sequential program order. It is sufficient to only maintain uniprocessor data and control dependences. The compiler and hardware can freely reorder operations to different locations if the uniprocessor data and control dependences are respected. This enables compiler optimizations such as register allocation, code motion, and loop transformations, and hardware optimizations, such as pipelining, multiple issue, write buffer bypassing and forwarding, and lockup-free caches, all of which lead to overlapping and reordering of memory operations.<ref>Sarita V. Adve, Kourosh Gharachorloo. "Shared Memory Consistency Models: A Tutorial." Digital Western Research Laboratory 250 University Avenue Palo Alto. http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>

=== Memory Semantics in Multiprocessor Systems ===

Unfortunately, memory consistency is not as straightforward on multiprocessors. With regard to the example above, instead of each process running on a separate thread, each process runs on a separate processor. With multiple processors, more problems arise with respect to order of execution. While one process might execute instructions in program order, other processes may not observe those executions in the same order due to delays in communication. How processors handle this problem depends on the chosen ''consistency model''<ref>http://www-vs.informatik.uni-ulm.de/teach/ss05/dsm/arizona.pdf</ref>.

Some examples of consistency models are<ref>http://en.wikipedia.org/wiki/Consistency_model</ref>:

* [http://en.wikipedia.org/wiki/Linearizability '''Atomic Consistency''']: This is the strictest of all consistency models. With atomic consistency, operations take effect at some point in an operation interval. Atomicity is a guarantee of isolation from concurrent processes. Additionally, atomic operations commonly have a succeed-or-fail definition, i.e. they either successfully change the state of the system or have no apparent effect.

* [http://en.wikipedia.org/wiki/Causal_consistency '''Causal consistency''']: The causal consistency model represents a weakening of sequential consistency in that it makes a distinction between events that are potentially causally related and those that are not. Writes that are potentially causally related must be seen by all processes in the same order. Concurrent writes (i.e. ones that are not causally related) may be seen in a different order on different machines. Hence, this is weaker than sequential consistency, which requires that all nodes see all writes in the same order, but is stronger than [http://en.wikipedia.org/wiki/PRAM_consistency PRAM consistency], which requires only writes done by a single node to be seen in the same order from every other node.

* [http://en.wikipedia.org/wiki/Delta_consistency '''Delta consistency''']: This model gives content providers a “freshness guarantee” by ensuring that updates will propagate through the system and all replicas will be consistent after a fixed time period.

* [http://en.wikipedia.org/wiki/Entry_consistency '''Entry consistency''']: This requires the programmer to use acquire and release at the start and end of each critical section, respectively. Entry consistency requires each ordinary shared variable to be associated with some synchronization variable such as a lock or barrier. If it is desired that elements of an array be accessed independently in parallel, then different array elements must be associated with different locks.

* [http://en.wikipedia.org/wiki/Eventual_consistency '''Eventual consistency''']: This model states that given a sufficiently long period of time over which no changes are sent, all updates can be expected to propagate eventually through the system and the replicas will be consistent.

* [http://en.wikipedia.org/wiki/Fork_consistency '''Fork consistency''']: In this model, if an untrusted server hides the modifications of one writer from another, the latter will not be shown any future modifications of the former, effectively forking their views. This is the strongest consistency model that can be achieved with an untrusted server.

* [http://en.wikipedia.org/wiki/One-copy_serializability '''One-copy serializability''']: This model is the same as sequential consistency, as though there is only one copy of the file; thus, access requests are fully serialized.

* [http://en.wikipedia.org/wiki/PRAM_consistency '''PRAM consistency''']: PRAM consistency, or FIFO consistency, is subject to the condition that writes done by a single process are received by all other processes in the order in which they were issued, but writes from different processes may be seen in a different order by different processes.

* [http://en.wikipedia.org/wiki/Release_consistency '''Release consistency''']: Release consistency provides two kinds of synchronization accesses. '''Acquire''' accesses are used to tell the memory system that a critical region is about to be entered. '''Release''' accesses say that a critical region has just been exited. These accesses can be implemented either as ordinary operations on special variables or as special operations.

* [http://en.wikipedia.org/wiki/Sequential_consistency '''Sequential consistency''']: Sequential consistency is a slightly weaker model than strict consistency. Lamport defined it as the requirement that the result of any execution be the same as if the reads and writes occurred in some sequential order, with the operations of each individual processor appearing in this sequence in the order specified by its program.

* [http://en.wikipedia.org/wiki/Vector-field_consistency '''Vector-field consistency''']: This is a consistency model for replicated data. It is designed to allow bounded divergence of object replicas. In this model, replica consistency is selectively and dynamically strengthened or weakened based on the on-going game state, and the model simultaneously manages how the consistency degree is changed throughout the execution and how the consistency requirements are specified.

* [http://en.wikipedia.org/wiki/Weak_consistency '''Weak consistency''']: A memory system is weakly consistent if it enforces that accesses to synchronization variables are sequentially consistent, that no access to a synchronization variable is issued in a processor before all previous data accesses have been performed, and that no access is issued by a processor before a previous access to a synchronization variable has been performed.
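To make the sequential consistency definition concrete, the sketch below enumerates every interleaving of two small programs that preserves each processor's program order and collects the possible results. The two programs form a common "store buffering" litmus test chosen here for illustration; it is not the Solihin example referred to earlier, and the names <code>P0</code>, <code>P1</code>, <code>r0</code> and <code>r1</code> are assumptions.

```python
def interleavings(a, b):
    """Yield every merge of sequences a and b that keeps each one's internal order."""
    if not a:
        yield list(b)
        return
    if not b:
        yield list(a)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest


# Each operation is (kind, location, argument):
#   ("store", loc, value) writes value to loc
#   ("load", loc, reg)    reads loc into register reg
P0 = [("store", "x", 1), ("load", "y", "r0")]
P1 = [("store", "y", 1), ("load", "x", "r1")]


def run(schedule):
    """Execute one global order against a single shared memory."""
    memory = {"x": 0, "y": 0}
    registers = {}
    for kind, loc, arg in schedule:
        if kind == "store":
            memory[loc] = arg
        else:
            registers[arg] = memory[loc]
    return registers["r0"], registers["r1"]


# All (r0, r1) results that sequential consistency allows for this test.
outcomes = {run(schedule) for schedule in interleavings(P0, P1)}
```

Under sequential consistency at least one of the two stores must come before both loads, so the result <code>(0, 0)</code> never appears. Weaker models that let a load bypass an earlier store to a different location can produce it.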

== Memory Coherence and Shared Virtual Memory == | == Memory Coherence and Shared Virtual Memory == | ||

The memory coherence problem in a shared virtual memory system and in multicache systems are different. In a multicache multiprocessor, there are processors sharing a physical memory through their private caches. The relatively small size of a cache and the fast bus connection to the shared memory, enables using a sophisticated coherence protocol for the multicache hardware such that the time delay of conflicting writes to a memory location is small. | The memory coherence problem in a shared [http://en.wikipedia.org/wiki/Virtual_memory virtual memory] system and in [http://www.cacheopedia.com/wiki/Multi-cache multicache] systems are different. In a multicache multiprocessor, there are processors sharing a physical memory through their private caches. The relatively small size of a cache and the fast bus connection to the shared memory, enables using a sophisticated coherence protocol for the multicache hardware such that the time delay of conflicting writes to a memory location is small.<ref>Kai Li, and Paul Hudak. "Memory coherence in shared virtual memory systems". Published in Journal ACM Transactions on Computer Systems (TOCS), Volume 7 Issue 4, Nov. 1989 http://dl.acm.org.prox.lib.ncsu.edu/citation.cfm?id=75104.75105&coll=DL&dl=GUIDE&CFID=251927&CFTOKEN=71004880</ref> | ||

In contrast, in a shared virtual memory system on a loosely coupled multiprocessor which has no physical shared memory (with a nontrivial communication cost between processors), conflicts are not likely to be solved with negligible delay. These conflicts resemble a “page fault” in a traditional virtual memory system. Thus, there are two design choices that greatly influence the implementation of a shared virtual memory: the granularity of the memory units (i.e., the “page size”) and the strategy for maintaining coherence.


== Centralized Manager Algorithms ==

One way to obtain mutually exclusive access to data is to use a centralized algorithm. The first version of this algorithm is very similar to a monitor and makes use of an '''info table''' which has an entry for every page, with three fields for each of those pages. The '''owner''' field keeps track of the processor which had the latest write access to the page. The '''copy set''' field has a list of every processor with a copy of the page, which is used for broadcast-free invalidation operations. Finally, the '''[http://en.wikipedia.org/wiki/Lock_(database) lock]''' field is used when synchronizing requests for the page. Each processor in the algorithm also keeps track of page accessibility in its own page table with an '''access''' and a '''lock''' field.

By setting the lock in both of the tables, the algorithm can synchronize [http://en.wikipedia.org/wiki/Page_fault page faults] whenever there are multiple processes waiting for a page, or whenever the page is in the process of being accessed. In conjunction with this are '''confirmation''' messages that are sent to let the managing processor know when it can give page access to someone else. So, while read-page faults on the managing processor only need a message to and a message from the owner, read-page faults on the non-managing processor need an additional message to the manager and a confirmation message. For write-page faults, the message sending process is the same, except for the additional cost of an invalidation.

The second version of the algorithm improves on the first by eliminating the need for confirmation messages. To accomplish this, the responsibility of [http://en.wikipedia.org/wiki/Synchronization_(computer_science) synchronizing] page ownership is moved from the manager to the individual owners. This also means that the lock field is removed from the info table, and the copy set field is transferred to each of the page tables. Ultimately, this removes the cost of one send and one receive from the algorithm.
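The manager-side bookkeeping of the first version can be sketched as follows. This is only an illustrative model, not the published algorithm: the names <code>InfoEntry</code>, <code>CentralManager</code>, <code>read_fault</code>, <code>write_fault</code> and <code>confirm</code> are hypothetical, message passing is replaced by return values, and waiting on a held lock is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class InfoEntry:
    """One info-table entry kept by the manager (first version of the algorithm)."""
    owner: int                                  # processor with the latest write access
    copy_set: set = field(default_factory=set)  # processors holding a copy of the page
    locked: bool = False                        # set while a request for the page is in flight


class CentralManager:
    """Hypothetical manager; real systems exchange messages instead of return values."""

    def __init__(self, num_pages, initial_owner=0):
        self.info = {p: InfoEntry(owner=initial_owner) for p in range(num_pages)}

    def read_fault(self, page, requester):
        """Record the requester as a reader and return the owner that must send the page."""
        entry = self.info[page]
        entry.locked = True               # held until the requester confirms
        entry.copy_set.add(requester)
        return entry.owner

    def write_fault(self, page, requester):
        """Transfer ownership; return the old owner and the copies to invalidate."""
        entry = self.info[page]
        entry.locked = True
        to_invalidate = entry.copy_set - {requester}
        old_owner = entry.owner
        entry.copy_set = set()            # all other copies become invalid
        entry.owner = requester
        return old_owner, to_invalidate

    def confirm(self, page):
        """Confirmation message: the manager may now serve the next request."""
        self.info[page].locked = False
```

Because the copy set is stored per page, a write fault can address invalidations to exactly the processors listed there, which is what makes the invalidation broadcast-free.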

== Distributed Manager Algorithm ==

In order to fix the possible bottleneck that centralized manager algorithms may create, ''Distributed Manager Algorithms'' assign the management tasks to various individual processors. There are several methods of assigning these tasks. In a ''Fixed'' Distributed Manager Algorithm, there is a predetermined set of pages for which each processor is responsible. In a ''Broadcast'' Distributed Manager Algorithm, page faults result in [http://en.wikipedia.org/wiki/Broadcasting_(networking) broadcast] requests to the other processors. Due to the number of requests, the Broadcast Distributed Manager Algorithm does not scale well. In the case of the ''Dynamic'' Distributed Manager Algorithm, instead of using broadcast requests to find page owners, each processor holds a [http://en.wikipedia.org/wiki/Page_table page table] that stores the owner of each page. Since the processors that own each page (page owners) are not static, each entry in a processor's page table is only a "hint," or a starting point for looking for the true owner.
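The idea of following "hints" in the dynamic variant can be illustrated with a small sketch. The function <code>find_owner</code> and the <code>prob_owner</code> table are hypothetical names; the sketch assumes that the true owner's hint points to itself, and the way hints are refreshed afterwards is an illustrative choice rather than the rule used by any particular system.

```python
def find_owner(prob_owner, page, start):
    """Follow probable-owner hints from `start` until reaching the true owner.

    prob_owner[p][page] is processor p's hint for who owns `page`.
    Returns (owner, number_of_forwarding_hops).
    """
    path = [start]
    current = start
    while prob_owner[current][page] != current:
        current = prob_owner[current][page]   # forward the request along the hint
        path.append(current)
    # Illustrative choice: refresh every hint on the path to point at the owner.
    for p in path:
        prob_owner[p][page] = current
    return current, len(path) - 1


# Processor 0 believes 1 owns page 7, processor 1 believes 2 does, and 2 is the owner.
hints = {0: {7: 1}, 1: {7: 2}, 2: {7: 2}}
owner, hops = find_owner(hints, page=7, start=0)
```

A stale hint costs extra forwarding hops but never a broadcast, which is the trade-off this variant makes.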

== References ==

<References>

[1] Yan Solihin. "Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems." Solihin Publishing & Consulting LLC, 2009.

[3] Kai Li, and Paul Hudak. "Memory coherence in shared virtual memory systems". Published in Journal ACM Transactions on Computer Systems (TOCS), Volume 7 Issue 4, Nov. 1989 <http://dl.acm.org.prox.lib.ncsu.edu/citation.cfm?id=75104.75105&coll=DL&dl=GUIDE&CFID=251927&CFTOKEN=71004880>.

</References>

'''Quiz'''

1. Which of the following is true about the Broadcast Distributed Manager Algorithm? (More than one option could be correct)

a) It has a predetermined set of pages for which each processor is responsible.

b) Page faults result in broadcast requests to the other processors.

c) It does not scale well due to the number of requests.

d) None.

2. What is the major difference between One-copy Serializability and Sequential Consistency?

a) No difference

b) The One-copy Serializability model has only one copy of the file.

c) The Sequential Consistency model has only one copy of the file.

d) None.

3. Which of the following is a hybrid protocol?

a) MSI

b) MOSI

c) Cachet

d) Firefly

4. On what basis are memory consistency strategies classified? (More than one option could be correct)

a) Page faults

b) Page synchronization

c) Page ownership

d) None.

5. Which of the following are the four states in the Dragon Protocol?

a) Modified, Shared, Invalidate

b) Modified, Exclusive, Shared Clean, and Shared Modified

c) Owned, Shared, Modified, Exclusive

d) None

6. Which of the following is true about sequential consistency? (More than one option could be correct)

a) All orderings are enforced.

b) Loads and stores can exchange positions freely.

c) Performance can suffer.

d) SC is deterministic.

7. In Centralized Manager Algorithms, what does the owner field signify?

a) Used when synchronizing requests for the page.

b) Keeps track of the processor which has the latest read access to the page.

c) Keeps track of the processor which has the latest write access to the page.

d) Entry for every page.

8. Which of the following is false? (More than one option could be correct)

a) The write update method results in a significant amount of traffic.

b) In a write-through policy, the write is done only to the cache.

c) Cache coherence can be achieved by cache write policy.

d) The Broadcast Distributed Manager Algorithm doesn’t scale well.

Quiz Answers: '''1. b,c 2. b 3. c 4. b,c 5. b 6. a,c 7. c 8. b,c'''

Latest revision as of 15:03, 25 March 2013

Previous Page: http://wiki.expertiza.ncsu.edu/index.php/CSC_456_Spring_2012/ch7_AA

Current Page: http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2013/7a_bs

Introduction

A cache is considered coherent if its read operations always return the most recently written values at the same address. In a system with a single processor (single core), maintaining cache coherence is simple, but in a multiprocessor system it is not. Data can be present in any processor's cache, and a coherence protocol needs to ensure that the data is the same in every cache. If it cannot ensure that all the caches are the same, then it needs to flag a cache line to indicate that it is not up to date.

In the figure shown here, there is a four-processor shared memory system where each processor has its own cache. Suppose processor P1 reads memory location M1 and stores it in its local cache. Then, processor P2 also reads from M1 and stores it in its own local cache. Now, if P1 changes the value of M1, there will be two copies of the same data residing in different caches, but the one in P1's cache will be different. The problem arises when P2 operates on M1 and uses the stale value of M1 that was stored in its cache.

Cache Coherence

One may think that cache write policy can provide cache coherence, but it is not true. Cache write policy only controls how a change in value of a cache is propagated to a lower level cache or main memory. It is not responsible for propagating changes to other caches.

In order to understand what this means, we need to first understand what cache coherence is and compare it with the purpose of a cache write policy.

Cache coherence refers to the consistency of data stored in local caches of a shared resource.

In a shared memory multiprocessor system, each processor may have a separate cache. Hence, it is possible to have multiple copies of any one operand: one copy in main memory and one in each cache. When one copy of an operand is changed, the other copies of the operand must be changed also. Thus, cache coherence is the discipline that ensures that changes in the values of shared operands are propagated throughout the system in a timely fashion. Examples of cache coherence protocols include MSI, MESI, and MOESI.

A cache write policy, on the other hand, determines how and when the cache propagates writes to the lower-level caches or to main memory.

There are two basic writing policies<ref>http://en.wikipedia.org/wiki/Cache_(computing)</ref>:

• Write-through – here, the write is done synchronously, both to the cache and to the backing store; i.e., every time the processor updates a cached memory location, both the cache and the underlying memory location are updated.

• Write-back (or Write-behind or copy back) – here, writing is initially done only to the cache. The write to the backing store is postponed until the cache blocks containing the data are about to be modified/replaced by new content<ref>http://www.pcguide.com/ref/mbsys/cache/funcWrite-c.html</ref>.

Hence, we see that it is not the responsibility of the cache write policy to propagate changes to other caches, but only to the lower-level memory. Propagating changes to other caches is the responsibility of the coherence protocol.
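A toy model makes the distinction concrete. The sketch below is an illustration under simplifying assumptions (one value per address, no coherence protocol at all); the names <code>Cache</code> and <code>stale_read_demo</code> are hypothetical. It replays the P1/P2 scenario from the introduction under both write policies.

```python
class Cache:
    """A private cache in front of a shared backing store, with no coherence protocol."""

    def __init__(self, memory, write_back):
        self.memory = memory          # shared dict: address -> value
        self.write_back = write_back  # True: write-back, False: write-through
        self.lines = {}               # locally cached copies
        self.dirty = set()            # addresses modified but not yet written back

    def read(self, addr):
        if addr not in self.lines:    # miss: fetch from the backing store
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        if self.write_back:
            self.dirty.add(addr)      # defer the memory update until eviction
        else:
            self.memory[addr] = value # write-through: update memory immediately

    def evict(self, addr):
        if addr in self.dirty:        # write-back happens only now
            self.memory[addr] = self.lines[addr]
            self.dirty.discard(addr)
        self.lines.pop(addr, None)


def stale_read_demo(write_back):
    """P1 and P2 both cache M1, then P1 writes. Returns (memory value, value P2 sees)."""
    memory = {"M1": 0}
    p1, p2 = Cache(memory, write_back), Cache(memory, write_back)
    p1.read("M1")
    p2.read("M1")
    p1.write("M1", 42)
    return memory["M1"], p2.read("M1")
```

In this model, write-through brings main memory up to date and write-back does not, but in both cases P2 still hits on its old copy and reads the stale value. Neither policy touches the other cache.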

Cache coherence<ref>http://en.wikipedia.org/wiki/Cache_coherency</ref> solutions are mainly classified as software-based or hardware-based. Software-based solutions can be implemented using compiler-based or run-time system support, and some of them can also use hardware assistance. Hardware-based solutions can likewise be implemented in many different ways. For example, each cache controller can listen (snoop) for traffic on the bus that would invalidate one of its cache lines. Alternatively, the solution could use directories that track which caches hold a copy of a particular block. Another implementation variation is write-through versus write-back; the former writes to main memory whenever a cache is written to, while the latter waits to update main memory until the cache block is evicted.

The main concern in the case of software-based solutions is that perfect information is needed at all times when memory aliasing and explicit parallelism are required. So, the focus is more on improving hardware-based solutions, making them more common. Studies have shown that different snoop-based cache coherence schemes are more strongly sensitive toward write-policy than the specific coherence protocol. Write-back schemes are more efficient despite the increased hardware complexity involved in cache coherence support.

Hardware-based cache-coherence protocols, though more competitive in terms of performance with respect to basic architectures with no hardware support, incur significant power cost as coherence traffic grows. Thus, as power constraints become tighter and the degree of multiprocessing increases, viability of hardware-based solutions becomes doubtful.

Protocols

Whenever a processor changes a word in its cache, all other caches holding the value must be notified. In order to keep their own values consistent with the freshly written cache, the other caches can be either updated or invalidated. In the update method, if variable 'x' is modified by core 1, core 1 has to send the updated value of 'x' onto the inter-core bus. Each cache listens to the inter-core bus, and if a cache sees a variable on the bus which it has a copy of, it will read the updated value. This ensures that all caches have the most up-to-date value of the variable.

In case of invalidation, an invalidation message is sent onto the inter-core bus when a variable is changed. The other caches will read this invalidation signal; if its core attempts to access that variable, it will result in a cache miss and the variable will be read from main memory.

The update method results in significant amount of traffic on the inter-core bus as the update signal is sent onto the bus every time the variable is updated. The invalidation method only requires that an invalidation signal be sent the first time a variable is altered; this is why the invalidation method is the preferred method.
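The traffic difference between the two methods can be seen in a small simulation. This is a deliberately simplified sketch: the class name <code>SnoopyBus</code> is hypothetical, memory is kept current on every write for simplicity, and a bus message is counted only when some other cache actually holds the variable.

```python
class SnoopyBus:
    """Toy inter-core bus with per-core caches using either 'update' or 'invalidate'."""

    def __init__(self, num_cores, policy):
        if policy not in ("update", "invalidate"):
            raise ValueError(policy)
        self.policy = policy
        self.caches = [dict() for _ in range(num_cores)]  # variable -> value; absent = invalid
        self.memory = {}
        self.messages = 0                                 # coherence messages sent on the bus

    def read(self, core, var):
        cache = self.caches[core]
        if var not in cache:                              # miss: fetch from main memory
            cache[var] = self.memory.get(var, 0)
        return cache[var]

    def write(self, core, var, value):
        sharers = [c for i, c in enumerate(self.caches) if i != core and var in c]
        self.caches[core][var] = value
        self.memory[var] = value                          # simplification: memory kept current
        if sharers:
            self.messages += 1                            # one bus message reaches all sharers
            for c in sharers:
                if self.policy == "update":
                    c[var] = value                        # sharers take the new value
                else:
                    del c[var]                            # sharers drop their copy


def messages_for(policy, num_writes=5):
    bus = SnoopyBus(num_cores=2, policy=policy)
    bus.read(0, "x")
    bus.read(1, "x")
    for v in range(1, num_writes + 1):
        bus.write(0, "x", v)
    return bus.messages, bus.read(1, "x")
```

With five consecutive writes by core 0, the update policy places a message on the bus for every write, while the invalidate policy does so only for the first one; core 1 pays for that with a miss when it next reads <code>x</code>.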

Invalidate Coherence Protocols

- MSI

MSI stands for Modified, Shared, and Invalid, which is based on the three states that a line of cache can be in. The Modified state means that a variable in the cache has been modified and therefore has a different value than that found in main memory; the cache is responsible for writing the variable back to main memory. The Shared state means that the variable exists in at least one cache and is not modified; the cache can evict the variable without writing it back to the main memory. The Invalid state means that the value of the variable has been modified by another cache and is invalid; the cache must read a new value from main memory (or another cache).
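The three states can be written down as a transition table for a single cache line. The event names below (<code>PrRd</code>, <code>PrWr</code> for the local processor's reads and writes, <code>BusRd</code>, <code>BusRdX</code> for requests snooped from other caches) are an assumed naming convention, not something defined in this article, and bus actions such as flushing a Modified line are noted only as comments.

```python
# (current state, event) -> next state, for one cache line under MSI
MSI = {
    ("I", "PrRd"): "S",    # read miss: fetch the line, others may also hold it
    ("I", "PrWr"): "M",    # write miss: fetch with intent to modify
    ("I", "BusRd"): "I",
    ("I", "BusRdX"): "I",
    ("S", "PrRd"): "S",
    ("S", "PrWr"): "M",    # upgrade: other copies must be invalidated
    ("S", "BusRd"): "S",
    ("S", "BusRdX"): "I",  # another cache wants to write
    ("M", "PrRd"): "M",
    ("M", "PrWr"): "M",
    ("M", "BusRd"): "S",   # supply/write back the modified data, keep a shared copy
    ("M", "BusRdX"): "I",  # supply/write back the modified data, then invalidate
}


def run(state, events):
    """Apply a sequence of events to a line and return the states it passes through."""
    trace = []
    for event in events:
        state = MSI[(state, event)]
        trace.append(state)
    return trace


trace = run("I", ["PrRd", "PrWr", "BusRd", "BusRdX"])
```

Here the line starts Invalid, becomes Shared on a local read, Modified on a local write, drops back to Shared when another cache reads it, and ends Invalid when another cache writes it.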

- MESI

MESI stands for Modified, Exclusive, Shared, and Invalid. The Modified and Invalid states are the same for this protocol as they are for the MSI protocol. This protocol introduces a new state: the Exclusive state. The Exclusive state means that the variable is only in this cache and its value matches the value in main memory. As a consequence, the Shared state now indicates that the variable may be contained in more than one cache.

- MOSI

The MOSI protocol is identical to the MSI protocol except that it adds an Owned state. The Owned state means that the processor "owns" the variable and will provide the current value to other caches when requested (or at least it will decide whether to provide it when asked). This is useful because another cache does not have to read the value from main memory and instead receives it from the owning cache much faster.

- MOESI<ref>http://en.wikipedia.org/wiki/MOESI_protocol</ref>

MOESI is a full cache coherency protocol that encompasses all of the possible states commonly used in other protocols. In addition to the four common MESI protocol states, there is a fifth "Owned" state representing data that is both modified and shared. This avoids the need to write modified data back to main memory before sharing it. While the data must still be written back eventually, the write-back may be deferred. In order for this to be possible, direct cache-to-cache transfers of data must be possible, so a cache with the data in the modified state can supply that data to another reader without transferring it to memory.

- MESIF<ref>http://en.wikipedia.org/wiki/MESIF_protocol</ref>

The MESIF protocol is a cache coherency and memory coherence protocol developed by Intel for cache coherent non-uniform memory architectures. The protocol consists of five states: Modified (M), Exclusive (E), Shared (S), Invalid (I) and Forward (F). The 'M', 'E', 'S' and 'I' states are the same as in the MESI protocol. The 'F' state is a specialized form of the 'S' state, and indicates that a cache should act as a designated responder for any requests for the given line. The protocol ensures that, if any cache holds a line in the S state, at most one (other) cache holds it in the F state. In a system of caches employing the MESI protocol, a cache line request that is received by multiple caches holding a line in the S state will be serviced inefficiently. It may either be satisfied from (slow) main memory, or all the sharing caches could respond, bombarding the requestor with redundant responses. In a system of caches employing the MESIF protocol, a cache line request will be responded to only by the cache holding the line in the F state. This allows the requestor to receive a copy at cache-to-cache speeds, while allowing the use of as few multicast packets as the network topology will allow.

Update Coherence Protocols

Update-based cache coherence protocols directly update all the cached copies in the system. This differs from invalidation-based protocols in that write propagation is achieved without invalidating any copy, which reduces coherence misses. The two update-based protocols discussed below are the Dragon Protocol and the Firefly Protocol.

- Dragon Protocol

The Dragon Protocol is an update-based coherence protocol. It reduces bandwidth use because it updates only the specific words written instead of the entire block within the cache. The caches use write-allocate and write-update policies. The Dragon Protocol consists of four states (Modified (M), Exclusive (E), Shared Clean (Sc), and Shared Modified (Sm)), and there is no Invalid state, because every block present in the cache is valid.

- Firefly protocol

This is another example of an update-based cache coherence protocol. Unlike the Dragon Protocol, the caches use a write-through policy and hence write all changes to memory. This protocol uses a special bus technique called SharedLine to allow quick detection of copies of the block in other caches. It is similar to COPIES_EXIST (C) and !COPIES_EXIST. As in the Dragon Protocol, there is no Invalid state in the Firefly protocol, because cache blocks are never invalidated.

Hybrid Protocols<ref>http://wiki.expertiza.ncsu.edu/index.php/User:Stchen#Introduction_to_Update_and_Adaptive_Coherence_Protocols_on_Real_Architectures</ref>

Even though there are clear advantages to using either update protocols or invalidate protocols, each still has disadvantages. In a write-invalidate protocol, any update/write operation in a processor invalidates the shared cache blocks of other processors. This forces the other caches to issue read requests so that the new data is retrieved. This tends to consume a lot of bus bandwidth and can be especially bad if there are a few processors that frequently update the cache. Write-update protocols mitigate this issue: a writer updates all other caches at the same time as it propagates the update itself. Unfortunately, this creates a different problem: there will sometimes be unnecessary updates to the caches of other processors. This tends to increase conflict and capacity cache misses.

Hybrid protocols help to mitigate these problems by reducing both the high bus traffic and the unnecessary updates. How well this works, however, depends on how the adaptive algorithm switches between write-invalidate and write-update.

- Competitive update cache coherence protocol<ref>H. Nilsson, P. Stenström. An adaptive update-based cache coherence protocol for reduction of miss rate and traffic. Proc. Parallel Architectures and Languages Europe (PARLE) Conf., Lecture Notes in Computer Science, Athens, Greece, 817, Springer-Verlag, Berlin (Jul. 1994)</ref>

This protocol is a hybrid between write-invalidate and write-update protocols, and for a wide range of applications it outperforms write-invalidate protocols under relaxed memory consistency models because of a lower miss rate. In this protocol, a copy of the block is updated instead of being invalidated at the first write by another processor. Coherence is therefore maintained by update messages rather than invalidation messages: upon a write request, update messages are sent to all caches sharing the same memory block. In contrast to pure write-update protocols, a competitive threshold, C, is used to locally invalidate copies that are not accessed by the local processor between a number of updates; i.e., when a copy has been updated C times without a local access, it is invalidated and the update messages to it cease. Thus, network traffic is reduced compared to a write-update protocol, since only those copies that are regularly accessed are updated.
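The competitive threshold can be modelled with a per-copy counter. The sketch below is a minimal illustration, assuming that a local access resets the counter to zero; the class <code>CompetitiveCopy</code> and its method names are hypothetical.

```python
class CompetitiveCopy:
    """One cached copy of a block under a competitive-update policy with threshold C."""

    def __init__(self, threshold):
        self.threshold = threshold     # the competitive threshold C
        self.updates_since_access = 0  # remote updates since the last local access
        self.valid = True
        self.value = None

    def local_access(self):
        """The local processor uses the copy, which shows the updates were worthwhile."""
        if not self.valid:
            raise LookupError("miss: copy was invalidated")
        self.updates_since_access = 0
        return self.value

    def remote_update(self, value):
        """Apply an update from another processor.

        Returns True while this copy should keep receiving updates.
        """
        if not self.valid:
            return False
        self.value = value
        self.updates_since_access += 1
        if self.updates_since_access >= self.threshold:
            self.valid = False         # updated C times without a local access
        return self.valid


copy = CompetitiveCopy(threshold=3)
still_wanted = [copy.remote_update(v) for v in (10, 11)]   # two updates, still valid
latest = copy.local_access()                                # resets the counter
after_access = [copy.remote_update(v) for v in (12, 13, 14)]
```

A copy that is read regularly keeps resetting its counter and behaves as under write-update; a copy that is never read locally is dropped after C updates and stops generating traffic, as under write-invalidate.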

- Cachet<ref>http://csg.csail.mit.edu/pubs/memos/Memo-414/memo-414.pdf</ref>

Cachet provides wide scope for adapting to changing program behaviors. It is especially suitable for large Distributed Shared Memory (DSM) systems, and applicable to a wide variety of programmer-centric memory models. Cachet allows write operations to be performed without exclusive ownership. This not only alleviates potential cache thrashing due to false sharing, but also reduces the average latency of write operations.

Memory Consistency

Memory consistency deals with the ordering of all memory operations - loads and stores - to different memory locations. It can also be present in systems without caches. The code below from Solihin<ref>Yan Solihin. "Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems." Solihin Publishing & Consulting LLC, 2009.</ref> shows an example of possible inconsistency:

 P0                          P1
 S1: datum = 5               S3: while (!datumIsReady) {}
 S2: datumIsReady = 1        S4: print datum

In this example, P0 sets the values of datum and datumIsReady. Setting datumIsReady to 1 signals to P1 that datum is now ready. P1 spins in the while loop waiting for this flag to become 1 and then prints datum. In this example and in similar cases, it is important that the compiler understand what the programmer intended so that the program order is preserved. Within a uniprocessor, this problem can be solved by declaring which variables must be synchronized. For instance, when using C, this can be accomplished by declaring variables that may be susceptible to inconsistency as volatile.

Memory Semantics in Uniprocessor Systems

Uniprocessor languages use simple sequential semantics for memory operations, which allow the programmer to assume that all memory operations will occur one at a time in the sequential order specified by the program. Thus, the programmer can expect a read to return the value of the most recent write to the location according to sequential program order. It is sufficient to only maintain uniprocessor data and control dependences. The compiler and hardware can freely reorder operations to different locations if the uniprocessor data and control dependences are respected. This enables compiler optimizations such as register allocation, code motion, and loop transformations, and hardware optimizations, such as pipelining, multiple issue, write buffer bypassing and forwarding, and lockup-free caches, all of which lead to overlapping and reordering of memory operations.<ref>Sarita V. Adve, Kourosh Gharachorloo. "Shared Memory Consistency Models: A Tutorial." Digital Western Research Laboratory 250 University Avenue Palo Alto. <http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf></ref>

Memory Semantics in Multiprocessor Systems

Unfortunately, memory consistency is not as straightforward on multiprocessors. With regard to the example above, instead of each process running on a separate thread, each process runs on a separate processor. With multiple processors, more problems arise with respect to order of execution. While one process might execute instructions in program order, other processes may not observe those executions in the same order due to delays in communication. How processors handle this problem depends on the chosen consistency model<ref>http://www-vs.informatik.uni-ulm.de/teach/ss05/dsm/arizona.pdf</ref>.

Some examples of consistency models are<ref>http://en.wikipedia.org/wiki/Consistency_model</ref>:

- Atomic consistency: This is the strictest of all consistency models. With atomic consistency, operations take effect at some point in an operation interval. Atomicity is a guarantee of isolation from concurrent processes. Additionally, atomic operations commonly have a succeed-or-fail definition; i.e., they either successfully change the state of the system or have no apparent effect.

- Causal consistency: The causal consistency model represents a weakening of sequential consistency in that it makes a distinction between events that are potentially causally related and those that are not. Writes that are potentially causally related must be seen by all processes in the same order. Concurrent writes (i.e. ones that are not causally related) may be seen in a different order on different machines. Hence, this is weaker than sequential consistency, which requires that all nodes see all writes in the same order, but is stronger than PRAM consistency, which requires only writes done by a single node to be seen in the same order from every other node.

- Delta consistency: This model gives content providers a “freshness guarantee” by ensuring that updates will propagate through the system and all replicas will be consistent after a fixed time period.

- Entry consistency: This requires the programmer to use acquire and release at the start and end of each critical section, respectively. Entry consistency requires each ordinary shared variable to be associated with some synchronization variable such as a lock or barrier. If it is desired that elements of an array be accessed independently in parallel, then different array elements must be associated with different locks.

- Eventual consistency: This model states that given a sufficiently long period of time over which no changes are sent, all updates can be expected to propagate eventually through the system and the replicas will be consistent.

- Fork consistency: In this model, if an untrusted server hides the modifications of one writer from another, the latter will not be shown any future modifications of the former, effectively forking their views. This is the strongest consistency model that can be achieved with an untrusted server.

- One-copy serializability: This model is the same as sequential consistency, as though there is only one copy of the file, thus, access requests are fully serialized.

- PRAM consistency: PRAM consistency or FIFO consistency, is subject to the condition that writes done by a single process are received by all other processes in the order in which they were issued, but writes from different processes may be seen in a different order by different processes.

- Release consistency: Release consistency provides two kinds of synchronization accesses, acquire and release. Acquire accesses are used to tell the memory system that a critical region is about to be entered. Release accesses say that a critical region has just been exited. These accesses can be implemented either as ordinary operations on special variables or as special operations.

- Sequential consistency: Sequential consistency is a slightly weaker model than strict consistency. Lamport defined it as requiring that the result of any execution be the same as if the reads and writes occurred in some sequential order, with the operations of each individual processor appearing in this sequence in the order specified by its program.

- Vector-field consistency: This is a consistency model for replicated data. It is designed to allow bounded divergence of object replicas. In this model, replica consistency is selectively and dynamically strengthened or weakened based on the on-going game state, and the model simultaneously manages how the consistency degree is changed throughout the execution and how the consistency requirements are specified.

- Weak consistency: A memory system is weakly consistent if it enforces that accesses to synchronization variables are sequentially consistent, that no access to a synchronization variable is issued in a processor before all previous data accesses have been performed and that no access is issued by a processor before a previous access to a synchronization variable has been performed.
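To make the sequential consistency definition concrete, the datum/datumIsReady example from earlier in this section can be checked by brute force. The sketch below is an illustration only: it models a single pass of P1's spin loop as one read of the flag, starts both variables at 0, and enumerates every interleaving that keeps each processor's operations in the order given. The helper names <code>interleavings</code> and <code>run</code> are hypothetical.

```python
from itertools import combinations


def interleavings(a, b):
    """Yield every merge of sequences a and b that preserves the order within each."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        ia, ib = iter(a), iter(b)
        yield [next(ia) if i in slots else next(ib) for i in range(n)]


def run(schedule):
    """Execute one global order of operations; return (flag seen by P1, datum seen by P1)."""
    mem = {"datum": 0, "datumIsReady": 0}
    seen = {}
    for op, var, val in schedule:
        if op == "write":
            mem[var] = val
        else:
            seen[var] = mem[var]
    return seen["datumIsReady"], seen["datum"]


P0 = [("write", "datum", 5), ("write", "datumIsReady", 1)]        # S1, S2
P1 = [("read", "datumIsReady", None), ("read", "datum", None)]    # S3 (one pass), S4

# Sequential consistency: program order of both processors is preserved.
sc_outcomes = {run(s) for s in interleavings(P0, P1)}

# A weaker system in which P0's two writes become visible in the opposite order.
reordered_outcomes = {run(s) for s in interleavings(P0[::-1], P1)}
```

Under sequential consistency, no interleaving lets P1 see the flag set while datum is still 0, so once the loop exits P1 prints 5. If P0's writes are allowed to be reordered, the outcome (1, 0) becomes possible, which is exactly the inconsistency the example warns about.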

The memory coherence problems in a shared virtual memory system and in a multicache system are different. In a multicache multiprocessor, the processors share a physical memory through their private caches. The relatively small size of a cache and the fast bus connection to the shared memory enable the use of a sophisticated coherence protocol in the multicache hardware, such that the time delay of conflicting writes to a memory location is small.<ref>Kai Li, and Paul Hudak. "Memory coherence in shared virtual memory systems". Published in Journal ACM Transactions on Computer Systems (TOCS), Volume 7 Issue 4, Nov. 1989 http://dl.acm.org.prox.lib.ncsu.edu/citation.cfm?id=75104.75105&coll=DL&dl=GUIDE&CFID=251927&CFTOKEN=71004880</ref>

In contrast, in a shared virtual memory system on a loosely coupled multiprocessor which has no physical shared memory (with a nontrivial communication cost between processors), conflicts are not likely to be solved with negligible delay. These conflicts resemble a “page fault” in a traditional virtual memory system. Thus, there are two design choices that greatly influence the implementation of a shared virtual memory: the granularity of the memory units (i.e., the “page size”) and the strategy for maintaining coherence.

Memory coherence strategies are classified based on how they deal with page synchronization and page ownership. The algorithms for memory coherence depend on the page fault handlers, their servers, and the data structures used, so the page table becomes an important part of these protocols.

Centralized Manager Algorithms

One way to obtain mutually exclusive access to data is to use a centralized algorithm. The first version of this algorithm is very similar to a monitor and makes use of an info table, which has an entry for every page with three fields. The owner field keeps track of the processor which had the latest write access to the page. The copy set field has a list of every processor with a copy of the page, which is used for broadcast-free invalidation operations. Finally, the lock field is used when synchronizing requests for the page. Each processor in the algorithm also keeps track of page accessibility in its own page table with an access and a lock field.

By setting the lock in both of the tables, the algorithm can synchronize page faults whenever there are multiple processes waiting for a page, or whenever the page is in the process of being accessed. In conjunction with this are confirmation messages that are sent to let the managing processor know when it can give page access to someone else. So, while read-page faults on the managing processor only need a message to and a message from the owner, read-page faults on the non-managing processor need an additional message to the manager and a confirmation message. For write-page faults, the message sending process is the same, except for the additional cost of an invalidation.
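The first version described above can be sketched in Python as follows. This is a simplified model under stated assumptions, not the published algorithm: the class and method names (`InfoEntry`, `CentralManager`, `read_fault`, `write_fault`) are hypothetical, requests are served one at a time instead of being queued, and only the number of messages between distinct processors is counted.

```python
from dataclasses import dataclass, field


@dataclass
class InfoEntry:
    owner: int                                   # processor with the latest write access
    copy_set: set = field(default_factory=set)   # processors holding a read copy
    locked: bool = False                         # set while a fault on the page is served


class CentralManager:
    """Hypothetical model of the manager's info table; counts inter-processor messages."""

    def __init__(self, manager_id, initial_owner, num_pages):
        self.manager_id = manager_id
        self.info = {p: InfoEntry(owner=initial_owner) for p in range(num_pages)}
        self.messages = 0

    def _send(self, src, dst):
        # A processor talking to itself costs no message.
        if src != dst:
            self.messages += 1

    def _lock(self, entry):
        # A real manager would queue the request; this sketch only checks.
        if entry.locked:
            raise RuntimeError("page is busy")
        entry.locked = True

    def read_fault(self, requester, page):
        entry = self.info[page]
        self._send(requester, self.manager_id)     # request to the manager
        self._lock(entry)
        entry.copy_set.add(requester)
        self._send(self.manager_id, entry.owner)   # manager asks the owner
        self._send(entry.owner, requester)         # owner ships a copy of the page
        self._send(requester, self.manager_id)     # confirmation releases the lock
        entry.locked = False

    def write_fault(self, requester, page):
        entry = self.info[page]
        self._send(requester, self.manager_id)     # request to the manager
        self._lock(entry)
        invalidated = entry.copy_set - {requester}
        for holder in invalidated:                 # targeted invalidation, no broadcast
            self._send(self.manager_id, holder)
        entry.copy_set.clear()
        self._send(self.manager_id, entry.owner)   # manager asks the owner
        self._send(entry.owner, requester)         # owner ships the page
        entry.owner = requester                    # requester becomes the new owner
        self._send(requester, self.manager_id)     # confirmation releases the lock
        entry.locked = False
        return invalidated
```

In this model a read fault on the managing processor costs two messages (to and from the owner), while a read fault on any other processor costs four, which matches the extra request and confirmation messages described above.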

The second version of the algorithm improves on the first by eliminating the need for confirmation messages. To accomplish this, the responsibility of synchronizing page ownership is moved from the manager to the individual owners. This also means that the lock field is removed from the info table, and the copy set field is transferred to each of the page tables. Ultimately, this removes the cost of one send and one receive from the algorithm.

Distributed Manager Algorithm

To relieve the bottleneck that centralized manager algorithms may create, distributed manager algorithms spread the management tasks across the individual processors. There are several methods of assigning these tasks. In a Fixed Distributed Manager Algorithm, there is a predetermined set of pages for which each processor is responsible. In a Broadcast Distributed Manager Algorithm, page faults result in broadcast requests to the other processors. Due to the number of requests, the Broadcast Distributed Manager Algorithm does not scale well. In the Dynamic Distributed Manager Algorithm, instead of broadcasting requests to find page owners, each processor holds a page table that stores the probable owner of each page. Since the processors that own each page (page owners) are not static, each entry in a processor's page table is only a "hint," or a starting point for looking for the true owner.
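The idea of owner hints in the dynamic variant can be sketched as below. This is a minimal illustration under assumptions, not the published algorithm: the names `Node`, `prob_owner`, `find_owner`, and `write_fault` are hypothetical, locking and copy sets are omitted, and the rule for refreshing hints (every node on the forwarding path points to the new owner) is one simple choice.

```python
class Node:
    """One processor with a per-page hint about who owns the page."""

    def __init__(self, node_id, num_pages, initial_owner):
        self.node_id = node_id
        self.prob_owner = [initial_owner] * num_pages


def find_owner(nodes, start, page):
    """Follow hints from `start` until reaching a node that names itself as owner.

    Returns the owner's id and the list of nodes that forwarded the request.
    """
    current = start
    path = []
    while nodes[current].prob_owner[page] != current:
        path.append(current)
        current = nodes[current].prob_owner[page]
    return current, path


def write_fault(nodes, requester, page):
    """Move ownership of `page` to `requester` and refresh hints along the path."""
    old_owner, path = find_owner(nodes, requester, page)
    for node_id in path + [old_owner]:
        nodes[node_id].prob_owner[page] = requester
    nodes[requester].prob_owner[page] = requester
    # len(path) is the number of messages sent while chasing hints.
    return old_owner, len(path)


if __name__ == "__main__":
    nodes = [Node(i, num_pages=1, initial_owner=0) for i in range(4)]
    print(write_fault(nodes, requester=2, page=0))
    print(write_fault(nodes, requester=3, page=0))
```

A stale hint costs extra forwarding hops but still leads to the true owner, because each former owner remembers where it sent the page.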

References

<References> [1] Yan Solihin. "Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems." Solihin Publishing & Consulting LLC, 2009.

[2] Sarita V. Adve and Kourosh Gharachorloo. "Shared Memory Consistency Models: A Tutorial." Digital Western Research Laboratory, 250 University Avenue, Palo Alto. <http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf>

[3] Kai Li, and Paul Hudak. "Memory coherence in shared virtual memory systems". Published in Journal ACM Transactions on Computer Systems (TOCS), Volume 7 Issue 4, Nov. 1989 <http://dl.acm.org.prox.lib.ncsu.edu/citation.cfm?id=75104.75105&coll=DL&dl=GUIDE&CFID=251927&CFTOKEN=71004880>.

</References>

Quiz

1. Which of the following is true about the Broadcast Distributed Manager Algorithm? (More than one option could be correct)

a) It has a predetermined set of pages for which each processor is responsible.

b) Page faults result in broadcast requests to the other processors.

c) It does not scale well due to the number of requests.

d) None.

2. What is the major difference between one-copy serializability and sequential consistency?

a) No difference

b) The one-copy serializability model has only one copy of the file.

c) The sequential consistency model has only one copy of the file.

d) None.

3. Which of the following is a hybrid protocol?

a) MSI

b) MOSI

c) Cachet

d) Firefly

4. On what basis are memory coherence strategies classified? (More than one option could be correct)

a) Page faults

b) Page synchronization

c) Page ownership

d) None.

5. Which of the following are the four states in the Dragon protocol?

a) Modified, Shared, Invalidate

b) Modified, Exclusive, Shared Clean, and Shared Modified

c) Owned, Shared, Modified, Exclusive

d) None

6. Which of the following is true about sequential consistency? (More than one option could be correct)

a) All orderings are enforced.

b) Loads and stores can exchange positions freely.

c) Performance can suffer

d) SC is deterministic

7. In Centralized Manager Algorithms, what does the owner field signify?

a) Used when synchronizing requests for the page.

b) Keeps track of the processor which has the latest read access to the page.

c) Keeps track of the processor which has the latest write access to the page.

d) Entry for every page.

8. Which of the following is false? (More than one option could be correct)

a) Write Update method results in significant amount of traffic.

b) In a write through policy, the write is done only to the cache.

c) Cache coherence can be achieved by cache write policy.

d) Broadcast Distributed Manager Algorithm doesn’t scale well.

Quiz Answers: 1. b,c 2. b 3. c 4. b,c 5. b 6. a,c 7. c 8. b,c