CSC/ECE 506 Spring 2013/1d ks


Current Page Address: http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2013/1d_ks

Page started with: http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/1b_as

=What is Moore's Law?=

In 1965, Gordon Moore, who later co-founded Intel, forecast the rapid pace of technology innovation. His prediction, popularly known as “Moore’s Law,” states that transistor density on [http://en.wikipedia.org/wiki/Integrated_circuit integrated circuits] doubles about every two years.<ref>http://www.computerhistory.org/semiconductor/timeline/1965-Moore.html</ref> The original prediction, presented in a [http://download.intel.com/museum/Moores_Law/Articles-Press_releases/Gordon_Moore_1965_Article.pdf 1965 paper in Electronics Magazine], observed an annual doubling in the number of chip elements called transistors. He refined his view in 1975 to a two-year cycle in an [http://download.intel.com/museum/Moores_Law/Articles-Press_Releases/Gordon_Moore_1975_Speech.pdf updated paper]. Rather than giving an empirical formula for the rate of increase, Moore used prose, graphs, and images to convey his predictions and observations to a broad audience. This arguably increased the staying power of Moore's law, allowing the industry to use it both as a benchmark and as a measurable yardstick of its success. Virtually all digital devices are fundamentally linked to the growth set in motion by Moore's law.<ref>http://en.wikipedia.org/wiki/Moore's_law</ref>
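Moore gave no formula, but the trend is commonly summarized as exponential growth with a fixed doubling period. One standard way to write it (a common notation, not Moore's own) is shown below, where <math>N_0</math> is the transistor count in a reference year <math>t_0</math> and <math>T</math> is the doubling period: about one year in the 1965 paper and about two years after the 1975 revision.

:<math>N(t) = N_0 \cdot 2^{(t - t_0)/T}</math>

Taking the base-2 logarithm gives <math>\log_2 N(t) = \log_2 N_0 + (t - t_0)/T</math>, so the trend appears as a straight line with slope <math>1/T</math> on the semi-logarithmic plots commonly used to illustrate Moore's law.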

==A Common Misconception==

Moore's Law is often linked to performance improvements as measured in CPU clock speeds. In the 1980s, former Intel executive David House stated that chip performance would double every 18 months.<ref>http://news.cnet.com/Myths-of-Moores-Law/2010-1071_3-1014887.html</ref> This is a consequence of Moore's Law, but it is not what Moore's Law actually claims. In fact, because of heat dissipation limits,<ref>http://www.gotw.ca/publications/concurrency-ddj.htm</ref><ref>http://techtalk.pcpitstop.com/2007/08/06/cpu-clock-speeds/</ref> CPU clock speeds have remained roughly flat since about 2005,<ref>http://www.kmeme.com/2010/09/clock-speed-wall.html</ref> while the number of transistors on a chip continues to double roughly every two years.
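The gap between the two rules compounds quickly. As a minimal sketch, assuming both quantities grow as a clean exponential, the following compares the growth over a decade implied by a two-year doubling period (transistor counts) and an 18-month doubling period (House's performance rule). The function name `growth_factor` is purely illustrative.

```python
"""Compare growth factors implied by different doubling periods.

Assumption: growth is a clean exponential, factor = 2 ** (years / period).
The 2-year period is Moore's 1975 figure for transistor counts; the
18-month period is David House's figure for chip performance.
"""


def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times larger a quantity becomes after `years`
    if it doubles every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    horizon = 10.0  # years
    for label, period in [("transistor count (2 years)", 2.0),
                          ("House's performance rule (18 months)", 1.5)]:
        print(f"{label}: x{growth_factor(horizon, period):.1f} over {horizon:.0f} years")
```

Over ten years the two rules differ by roughly a factor of three (32x versus about 102x), which is one reason it is worth keeping the two claims apart.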

=Moore's law, past to present=

[[Image:Mooreslaw.png|right|thumb|350px|]]

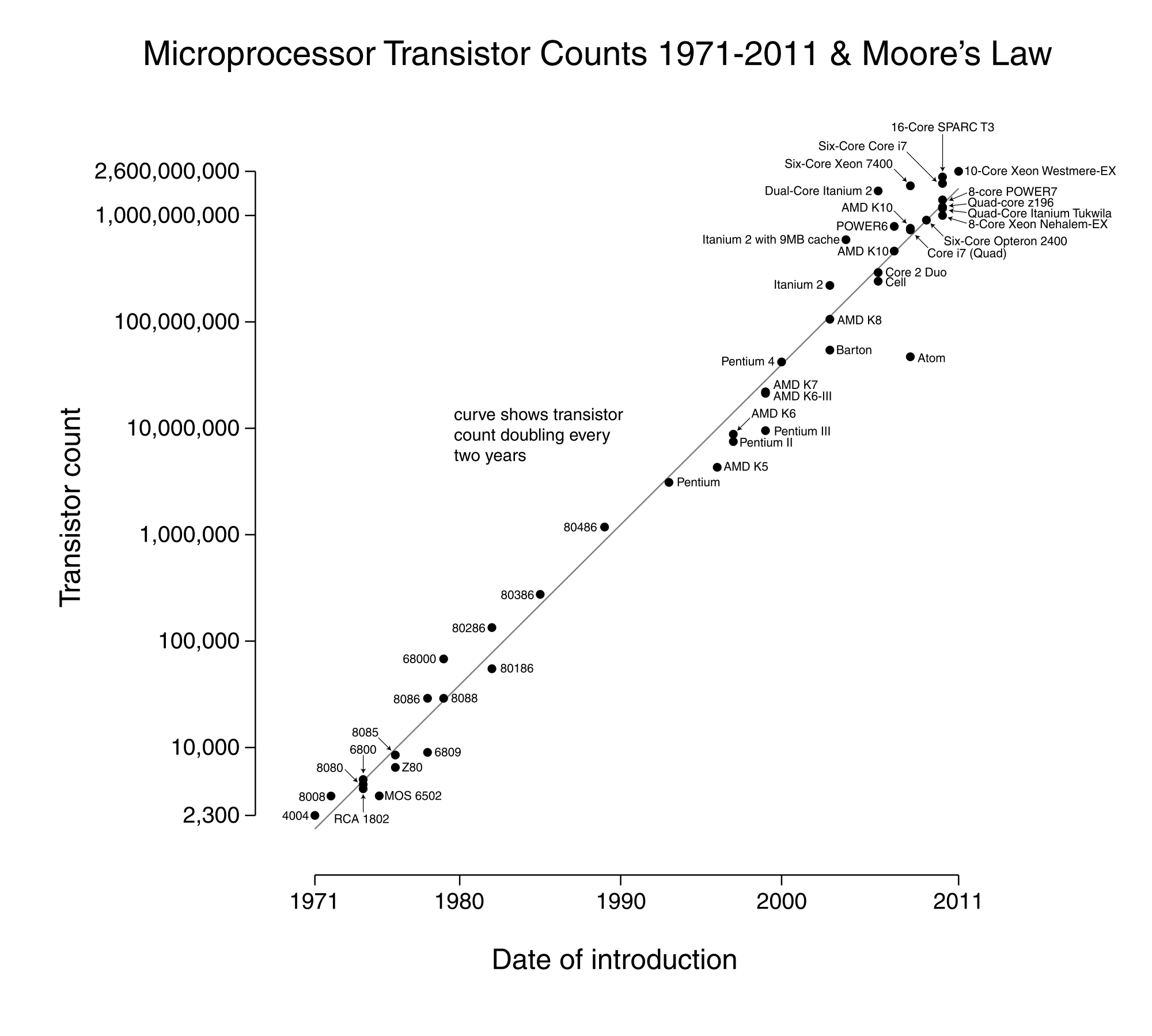

Data from the inception of Moore's law to the present show that, consistent with Moore's prediction, the number of transistors on a chip has doubled approximately every two years. Several developments, had they not occurred, could have slowed or stalled this trend. One is the invention of dynamic random-access memory ([http://en.wikipedia.org/wiki/DRAM DRAM]), a type of random-access memory that stores each bit in a separate capacitor on an integrated circuit. The main advantage of DRAM over static RAM (SRAM) is that it requires only one transistor and one capacitor per bit, compared with four or six transistors per bit for SRAM. Another is complementary metal-oxide-semiconductor ([http://en.wikipedia.org/wiki/CMOS CMOS]) technology, which allowed a higher density of logic functions on a chip while also offering low power consumption and good electrical noise immunity. Lastly, there is the invention of the integrated circuit itself. Moore's law is responsible not only for larger and faster chips, but also for smaller, cheaper, and more efficient ones.
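As a rough check of the two-year doubling claim against the 2012 entries in the transistor-count table in this section, the sketch below projects forward from the Intel 4004 and also back-solves the doubling period implied by each 2012 chip. The starting point (about 2,300 transistors in 1971) is a widely cited figure used here as an assumption; the 2012 counts are taken from the table, and the helper names are illustrative.

```python
"""Check the two-year doubling rule against 2012 transistor counts.

Assumptions (for illustration): the Intel 4004 (1971) had about 2,300
transistors; the 2012 counts are the ones listed in the transistor-count
table for the IBM zEC12, Itanium Poulson, and Xeon Phi.
"""
import math

BASE_YEAR, BASE_COUNT = 1971, 2_300  # Intel 4004 (assumed starting point)


def projected_count(year: int, doubling_period: float = 2.0) -> float:
    """Transistor count predicted by a fixed doubling period from the base chip."""
    return BASE_COUNT * 2 ** ((year - BASE_YEAR) / doubling_period)


def implied_doubling_period(year: int, count: int) -> float:
    """Doubling period (years) that would carry the base chip to `count` by `year`."""
    doublings = math.log2(count / BASE_COUNT)
    return (year - BASE_YEAR) / doublings


chips_2012 = {
    "Six-core IBM zEC12": 2_750_000_000,
    "8-Core Itanium Poulson": 3_100_000_000,
    "62-Core Xeon Phi": 5_000_000_000,
}

if __name__ == "__main__":
    print(f"2-year rule predicts ~{projected_count(2012):.2e} transistors in 2012")
    for name, count in chips_2012.items():
        print(f"{name}: implied doubling period "
              f"{implied_doubling_period(2012, count):.2f} years")
```

Under these assumptions, the two-year rule projects about 3.4 billion transistors for 2012, and each of the three 2012 chips implies a doubling period between roughly 1.95 and 2.03 years.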


|512 mm²
|-
|Six-core IBM zEC12
|2,750,000,000
|2012
|597 mm²
|-
|8-Core Itanium Poulson
|3,100,000,000
|2012
|544 mm²
|-
|62-Core Xeon Phi
|5,000,000,000
|2012
|
|-
|}<ref>http://en.wikipedia.org/wiki/Transistor_count</ref>

=A quick primer on current manufacturing techniques=

At the heart of Moore's Law is the transistor. Computer chips contain hundreds of millions, and now billions, of transistors built on a wafer of silicon. To make these chips, a “stencil” (a photomask) is first made containing the outlines of millions of transistors. The mask is then placed over a silicon wafer coated with a material that is sensitive to ultraviolet light. The light passes through the openings in the mask and exposes the coating; the wafer is then bathed in acid, carving out the outlines of the circuits and the patterns of millions of transistors. Because the wafer consists of many conducting and semiconducting layers, the acid cuts into the wafer at different depths and in different patterns, so circuits of enormous complexity can be created.

One reason Moore’s law has so relentlessly increased the power of chips is that the ultraviolet light used in this process can be tuned to ever shorter wavelengths, making it possible to etch increasingly tiny transistors onto silicon wafers. Since UV light has wavelengths as short as about 10 [http://en.wikipedia.org/wiki/Nanometre nanometers], the smallest transistor that can be etched is about thirty atoms across.<ref>http://en.wikipedia.org/wiki/Photolithography</ref> Because of physical limitations, this process cannot go on forever. At some point it will be physically impossible to etch smaller working transistors, and Moore’s law as we understand it will finally collapse.
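The link between wavelength and feature size is usually expressed with the Rayleigh resolution criterion of optical lithography, CD = k1 · λ / NA, where CD is the smallest printable feature (critical dimension), λ the wavelength, NA the numerical aperture of the projection optics, and k1 a process-dependent factor. The sketch below is illustrative only: the NA and k1 values are assumed, representative numbers, not figures from the sources cited here, and `min_feature_nm` is a hypothetical helper name.

```python
"""Rayleigh resolution criterion for optical lithography (illustrative).

    CD = k1 * wavelength / NA

All NA and k1 values below are assumed, representative numbers chosen for
illustration; real processes vary.
"""


def min_feature_nm(wavelength_nm: float, numerical_aperture: float, k1: float) -> float:
    """Smallest printable feature (critical dimension) in nanometres."""
    if numerical_aperture <= 0:
        raise ValueError("numerical aperture must be positive")
    return k1 * wavelength_nm / numerical_aperture


# (label, wavelength in nm, assumed NA, assumed k1)
SCENARIOS = [
    ("KrF deep UV", 248.0, 0.80, 0.40),
    ("ArF immersion", 193.0, 1.35, 0.30),
    ("Extreme UV", 13.5, 0.33, 0.40),
]

if __name__ == "__main__":
    for label, wl, na, k1 in SCENARIOS:
        print(f"{label:14s} lambda={wl:6.1f} nm -> CD ~ {min_feature_nm(wl, na, k1):5.1f} nm")
```

Under these assumed values the computed features shrink from about 124 nm to about 43 nm to about 16 nm. The formula also shows that wavelength is not the only lever: raising NA (for example with immersion optics) or lowering k1 shrinks the printable feature as well.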

Current estimates predict that around 2020 or soon afterward, Moore’s law will gradually cease to hold for traditional transistor technology. Transistors will be so small that quantum effects will begin to take over and electrons will "leak" out of the wires.<ref>http://computer.howstuffworks.com/small-cpu2.htm</ref><ref>http://www.monolithic3d.com/2/post/2011/09/is-there-a-fundamental-limit-to-miniaturizing-cmos-transistors1.html</ref> For example, the thinnest layer inside a chip will be only about five atoms thick. At that size, quantum effects will dominate, and transistors will not function as they do today without further technological advances.
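The “leakage” described above is largely quantum-mechanical tunneling, and its steep dependence on layer thickness can be illustrated with the textbook estimate for a rectangular barrier, T ≈ exp(-2κd) with κ = √(2m(V - E))/ħ. This is a rough, order-of-magnitude sketch only: the 3.1 eV barrier height (roughly the silicon/silicon-dioxide conduction-band offset) and the effective electron mass of 0.5 times the free-electron mass are assumed values, and real gate-leakage models are considerably more involved.

```python
"""Order-of-magnitude tunneling estimate through a thin insulating layer.

Uses the rectangular-barrier approximation T ~ exp(-2 * kappa * d).
Barrier height and effective mass are assumed, illustrative values.
"""
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # free-electron mass, kg
EV = 1.602176634e-19              # joules per electron-volt


def tunneling_probability(thickness_nm: float,
                          barrier_ev: float = 3.1,
                          mass_ratio: float = 0.5) -> float:
    """Approximate probability that an electron tunnels through the layer.

    barrier_ev: assumed barrier height above the electron energy, in eV.
    mass_ratio: assumed effective mass as a fraction of the free-electron mass.
    """
    kappa = math.sqrt(2 * mass_ratio * ELECTRON_MASS * barrier_ev * EV) / HBAR  # 1/m
    return math.exp(-2 * kappa * thickness_nm * 1e-9)


if __name__ == "__main__":
    for d in (3.0, 2.0, 1.5, 1.0):
        print(f"{d:.1f} nm layer: T ~ {tunneling_probability(d):.1e}")
```

Under these assumptions, thinning the layer from 2 nm to 1 nm raises the estimated tunneling probability by more than five orders of magnitude, which is why leakage becomes a first-order problem as layers approach a few atoms thick.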

=Why do we need Moore's law?=

Begun as a simple observation, Moore’s Law has come to represent the amazing and seemingly inexhaustible capacity for exponential growth in electronics.<ref>P. K. Bondyopadhyay, “Moore’s Law governs the silicon revolution,” Proc. IEEE, vol. 86, no. 1, pp. 78–81, Jan. 1998.</ref> The historical regularity and predictability of Moore's Law produce organizing and coordinating effects throughout the semiconductor industry that not only set the pace of innovation but also define the rules and very nature of competition. And because semiconductors make up an increasingly large share of electronic components and systems, whether used directly by consumers or built into the end products they buy, Moore's Law has led users and consumers to expect a continuous stream of faster, better, and cheaper high-technology products. As the cost of integrated circuits has fallen, they have made their way into products ranging from automobiles to greeting cards.<ref>http://download.intel.com/museum/Moores_Law/Printed_Materials/Moores_Law_Backgrounder.pdf</ref>

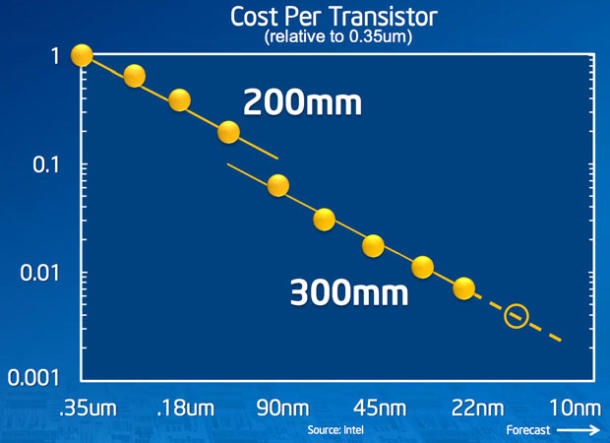

The drivers for technology development fall into two categories: push and pull. Push drivers are technology enablers, the things that make technical improvements possible. Moore described the three push drivers as increasing chip area, decreasing feature size, and design cleverness. Pull drivers are the economic incentives that create demand for those improvements, and for Moore’s Law they have been extraordinarily compelling. As the dimensions of a transistor shrank, the transistor became smaller, lighter, and faster, consumed less power, and in most cases became more reliable. All of these factors made the transistor more desirable for virtually every possible application. But there is more. Historically, the semiconductor industry has been able to manufacture silicon devices at an essentially constant cost per area of processed silicon. Thus, as devices shrank, the cost per transistor shrank with them. Each step along the roadmap of Moore’s Law virtually guaranteed economic success.<ref>http://commonsenseatheism.com/wp-content/uploads/2011/12/Mack-Fifty-Years-of-Moores-Law.pdf</ref>
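The constant-cost-per-area argument can be made concrete with a little arithmetic: if processed silicon costs the same per square centimetre at every node and each node doubles transistor density, the cost per transistor halves at every node. The sketch below uses hypothetical round numbers for the cost per area and the starting density; only the halving pattern, not the absolute values, is meaningful.

```python
"""Cost per transistor when cost per area is constant (hypothetical numbers)."""

COST_PER_CM2 = 10.0        # hypothetical cost of processed silicon, $ per cm^2
START_DENSITY = 1_000_000  # hypothetical transistors per cm^2 at the first node


def cost_per_transistor(node_index: int) -> float:
    """Dollar cost of one transistor after `node_index` density doublings,
    assuming the cost per cm^2 of processed silicon never changes."""
    density = START_DENSITY * 2 ** node_index
    return COST_PER_CM2 / density


if __name__ == "__main__":
    for node in range(6):
        print(f"node {node}: ${cost_per_transistor(node):.2e} per transistor")
```

The model deliberately ignores the rising cost of fabrication facilities, which is the concern behind Moore's Second Law.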

=Why Moore's law still holds=

For several decades Moore's law has been the guiding principle in the development of integrated circuits, the heart of the modern computer's ever-improving price-performance. Over the short term this rate can be expected to continue, if not to increase; over the longer term, the rate of increase is uncertain. Moore's observation has proven remarkably prescient and is among the most frequently cited "predictions" of the 20th century. But Moore’s law is less a prediction than an assertion. It is a good example of what systems scientists call feed-forward and lawyers call conscious parallelism.<ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref> Feed-forward is a systems concept in which signals from outside a system are predicted in some way and fed in to control the system of interest. Sometimes these are psychological predictions, as when people anticipate a gas shortage, buy ahead, and thereby cause the shortage. Conscious parallelism usually describes a kind of price-fixing among competitors that happens without any written or spoken agreement among the parties. Instead, each party reads the signals available in its environment and adjusts its behavior to achieve a desired outcome. But conscious parallelism can apply to terms other than price. It is hard to overstate the contribution that human expectations make to the functioning of our systems. Consider how, in 1962, President Kennedy motivated Americans to reach for the Moon: "We choose to go to the moon in this decade and do the other things, not because they are easy, but because they are hard, because that goal will serve to organize and measure the best of our energies and skills, because that challenge is one that we are willing to accept, one we are unwilling to postpone, and one which we intend to win, and the others, too."<ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref>

The dramatic price reductions in computing did not happen for any essential technical reason. Nor were they the result of a simple set of scientific, engineering, production, marketing, or financial forces; indeed, the forces at work have changed over the four decades in question. Carver Mead put it clearly when he pointed out that Moore's law is "not a law of physics, it's about human belief, and when people believe in something, they'll put energy behind it to make it come to pass."<ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref> Gordon Moore himself eventually summarized the many developments that had kept his observation relevant this way: "Every company knows that unless they keep moving at that pace, they are going to fall behind. It has become the driver of what happens."<ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref>

“Moore's Law is self-perpetuating,” says Daniel Grupp, manager of advanced device development at Acorn Technologies and a visiting scholar at Stanford University’s Center for Integrated Systems. “It is relevant, and continues to be, because the whole cycle of design, development, manufacture, distribution and sales is known to be sustainable if we follow Moore's Law. If we beat Moore's Law, the market cannot absorb the new products and engineers lose their jobs. If we slip behind Moore's Law, there is nothing to buy, and the distribution chain withers. For example, in planning the development strategy at Acorn Technologies, we carefully track Moore's Law to time the introduction of our devices.”<ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>

Grupp says that when it was first formulated, thirty-six years earlier, “Moore's Law was merely an observation. Now, we in the industry enforce the law. It has become a business dictum.” He adds, “Moore's Law will continue beyond our lifetimes into the new millennium.” Joe G. Xi, vice president of products and marketing at Plato Design Systems, says, “Moore’s Law is still in effect. If you are a leading-edge semiconductor company, you definitely have to observe Moore’s Law, not Moore’s Law per se, but the migration of the process technologies.” The dictum of 2x transistors every 18 months (as the popular version of Moore’s Law has it) “may not be as accurate (now) as it was five or six years ago,” Xi adds. “Does the product that people develop reflect whether they have considered Moore’s Law? Usually, they have not.” According to Xi, analog IC companies are the ones that must answer most directly to Moore’s Law. “In general, Moore’s Law is still correct,” he concludes. Jodi Shelton, executive director of the Fabless Semiconductor Association, says, “Moore’s Law ends up being pretty accurate. Look how quickly we’ve moved from 0.18-micron (device features) to 0.13 micron; it’s amazing.”<ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>

Moore's Law comes into play in forecasts, or any time someone thinks about the future of a particular device. If a new process for building smaller ICs arrives every 18 months, it is easy to see a current design becoming obsolete within three years, so Moore's Law strongly affects design cycles. “As soon as a company puts out a product, they turn right around and start to redesign partially to take advantage of new technologies. Sometimes there are instances where something isn't feasible today, but it could be down the road, if Moore's Law continues to be upheld. The smaller processes help to make advances not only in the CPU world, but also portable, low-power products like cell phones, digital cameras, etc. This allows constant turnovers and the ability for the electronics industry to put out an upgraded product every year. It is definitely in the interest of the electronics industry to make sure Moore's Law remains unbroken.” Robert O’Dell, vice president of marketing at Wintegra, observes, “What drives Moore’s Law is the ability of the fabs to produce silicon that is smaller.” Suppliers of wafer fabrication equipment, he notes, “plan on Moore’s Law; they are producing equipment and solving physics problems.” Still, he adds, “I always wonder if it’s a self-fulfilling prophecy.” According to O’Dell, Moore’s Law remains relevant and important today: “it anchors what’s given in a period of time,” he says. The number of transistors on a given IC may be doubling every 12–18 months, but other considerations are leaving Moore’s Law behind, O’Dell states. Communications bandwidths, especially in the optical component space, “can’t wait around for Moore’s Law,” he notes. “No one has built an all-optical switch that works. Something has to give; you have to put more silicon down.” At the same time, “I/O is not scaling as fast as Moore’s Law,” O’Dell says.<ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>

Largely forgotten is that Moore, in an attempt to keep his ‘law’ effective, formulated Moore's Second Law, which says that facility costs also grow exponentially (a straight line on a semi-log plot). In other words, every additional doubling of device complexity increases manufacturing costs exponentially. Ultimately, the cost of obtaining the next doubling would exceed the entire wealth of the planet, making that next step economically impossible. Economics aside, there is also a practical physical limit somewhere: it will be a while before anyone builds an entire CPU chip a tenth the size of an atom. According to Ron Gyurcsik, group director for the Datacom IC strategic business group at Tality, the design-services subsidiary of Cadence Design Systems, Moore’s Second Law is more relevant these days than Moore’s First Law. Chip design is “more cost driven than anything,” he says. “The two (laws) work together,” Gyurcsik adds. “The cost emphasis drives us to Moore’s Law. Cost is the way the industry has been driven for a long time.”<ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>
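To see why an exponentially rising facility cost eventually becomes prohibitive, the sketch below assumes, purely for illustration, that fab cost doubles every four years (the figure usually quoted for this rule, also known as Rock's law) and starts from a hypothetical $5 billion fab. It then counts the years until that cost passes a hypothetical $70 trillion ceiling standing in for annual world economic output. None of these figures come from the sources cited here.

```python
"""Years until an exponentially growing fab cost exceeds a fixed budget.

All inputs are hypothetical, illustrative assumptions.
"""
import math

FAB_COST_NOW = 5e9     # hypothetical cost of a leading-edge fab, dollars
DOUBLING_YEARS = 4.0   # assumed doubling period of fab cost
BUDGET = 70e12         # hypothetical ceiling (~ annual world output), dollars


def fab_cost(years_from_now: float) -> float:
    """Fab cost after `years_from_now` years of steady doubling."""
    return FAB_COST_NOW * 2 ** (years_from_now / DOUBLING_YEARS)


def years_until_exceeds(budget: float) -> float:
    """Solve FAB_COST_NOW * 2**(t / DOUBLING_YEARS) = budget for t."""
    return DOUBLING_YEARS * math.log2(budget / FAB_COST_NOW)


if __name__ == "__main__":
    t = years_until_exceeds(BUDGET)
    print(f"Fab cost passes the budget after about {t:.0f} years")
```

Under these assumptions the crossover comes after roughly 55 years; changing the inputs moves the date, but any fixed budget is eventually exceeded by an exponentially growing cost.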


a revolution such as quantum computing, which is already in the early stages of development. Intel already has a technology roadmap extending to 2020 with the 5 nm process node.<ref>http://www.intel.com/content/www/us/en/it-managers/peek-at-the-future-rick-white-presentation.html</ref> What happens after that remains to be seen. It should be noted that the end of Moore's law has been predicted since the 1970s, yet the law still holds today because engineers and scientists have repeatedly overcome seemingly impossible challenges with groundbreaking innovations. From an engineering perspective, it is this drive to keep pace with Moore's law that has kept the law alive. Moore's law may fail someday, since the number of transistors cannot go on doubling forever, but it will continue to play a key role in the future of the computing industry. Moreover, doubling the number of transistors is not the only route to better performance. Even if scaling stops, performance can still be improved by exploiting new architectural approaches such as multicore processors and general-purpose GPUs (GPGPUs), which serve the same basic purpose as transistor scaling: delivering more computing power.
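How much a multicore design delivers depends on how much of a workload can run in parallel. A common way to reason about this is Amdahl's law, speedup = 1 / ((1 - p) + p / n) for a parallel fraction p on n cores. The sketch below applies it with assumed parallel fractions (62 cores echoes the Xeon Phi entry in the transistor-count table); it is an idealized model that ignores communication and memory effects.

```python
"""Amdahl's law: ideal speedup of a workload on n cores (illustrative)."""


def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup when `parallel_fraction` of the work scales perfectly across
    `cores` and the remaining serial part runs on a single core."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    for p in (0.50, 0.90, 0.99):  # assumed parallel fractions
        row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):5.1f}x" for n in (2, 8, 62))
        print(f"p = {p:.2f} -> {row}")
```

Even with 62 cores, a workload that is 90% parallel speeds up by less than 9x under this model, so the benefit of extra cores depends heavily on the software running on them.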

=The Road Ahead=

==Do Transistor Counts Matter?==

Moore's Law concerns only the doubling of the number of transistors in the same die area every two years. Some emerging technologies deal directly with fitting more transistors into the same area, while others take a different approach to boosting overall computational performance. Although they do not strictly follow Moore's Law per se, these advanced designs should continue the increase in computational power that can be harnessed from hardware. They are included in this discussion to illustrate that performance does not necessarily depend on the number of transistors that can be placed on a die. Some novel approaches, such as 3-D transistor manufacturing, will allow greater densities, while others, such as quantum computing, operate differently from the traditional transistor to solve some problems more efficiently.<ref>http://www.monolithic3d.com/2/post/2011/09/is-there-a-fundamental-limit-to-miniaturizing-cmos-transistors1.html</ref><ref>http://www.iue.tuwien.ac.at/phd/wittmann/node6.html</ref>

==Intel's vision to keep Moore's law ticking==

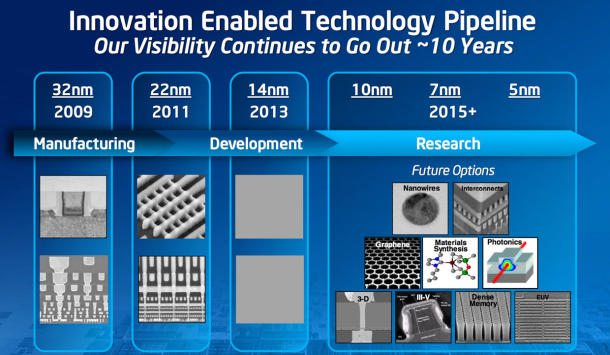

[[File:Intelrnd.jpg|thumb|right|300px|Credits: Intel Corporation]]

[[File:Cpertran.jpg|thumb|left|300px|Credits: Intel Corporation]]

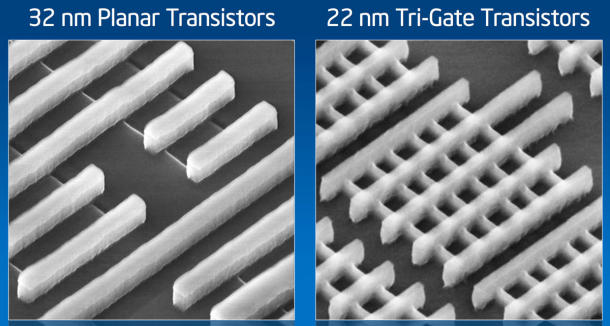

In an interview with CNET,<ref>http://news.cnet.com/8301-11386_3-57526582-76/on-the-moores-law-hot-seat-intels-mike-mayberry-q-a/</ref> Intel's Mike Mayberry says that he has been at Intel for the last 28 years and has been hearing about the end of Moore's law the whole time; what is interesting is how Intel has repeatedly overcome the challenges and kept Moore's law ticking. It was once predicted that scaling would halt at the 180 nm process node for two reasons: the limits of lithography (the ability to print ever-smaller features) and short-channel effects. These were overcome by making the channels shallower, which allowed scaling below 180 nm. The next problem was leakage through the gate dielectric, which had become only a few atomic layers thick. Researchers solved this daunting problem by introducing the high-k metal gate. As scaling continued, leakage became a problem again, and Intel introduced the first tri-gate process, which has enabled scaling to the present day and is expected to enable further scaling down to 5 nm. With existing silicon-based technology, Intel sees Moore's law continuing at least until 2020. "If you're only using the same technology, then in principle you run into limits. The truth is we've been modifying the technology every five or seven years for 40 years, and there's no end in sight for being able to do that," Mayberry says. What happens after that is unknown: many alternatives have been suggested, such as graphene-based devices, spintronics, and quantum computing, but there is no clear winner yet. [http://news.cnet.com/8301-11386_3-57526582-76/on-the-moores-law-hot-seat-intels-mike-mayberry-q-a/ Read the interview in detail here.]

===Trigate or 3-D transistor===

Tri-gate, or 3-D, transistor fabrication is used by Intel Corporation for the nonplanar transistor architecture in [http://en.wikipedia.org/wiki/Ivy_Bridge_(microarchitecture) Ivy Bridge processors]. In these transistors the gate wraps around three sides of a raised, vertical silicon fin, giving roughly three times the surface area over which the gate controls the flow of electrons. Intel reports that its tri-gate transistors reduce [http://en.wikipedia.org/wiki/Subthreshold_leakage leakage] and consume far less power than planar transistors. This allows up to 37% higher speed or, alternatively, less than 50% of the power consumption of the previous type of transistor used by Intel.<ref>http://en.wikipedia.org/wiki/Multigate_device#cite_note-naturenews6may-16.</ref> <ref>http://en.wikipedia.org/wiki/Multigate_device#cite_note-17</ref> These new transistors enable the industry to keep pursuing Moore's Law and help ensure that the pace of technology advancement consumers expect can continue for years to come.

==Alternatives to silicon==

Silicon-based process technologies are projected to hit "Moore's wall" within the next decade, so chip manufacturers will need to migrate to a new process technology to keep Moore's law ticking. Other options, such as quantum computing, are now attracting interest because they have the potential for further device scaling and ultra-low-power computing.

===Quantum Computing===

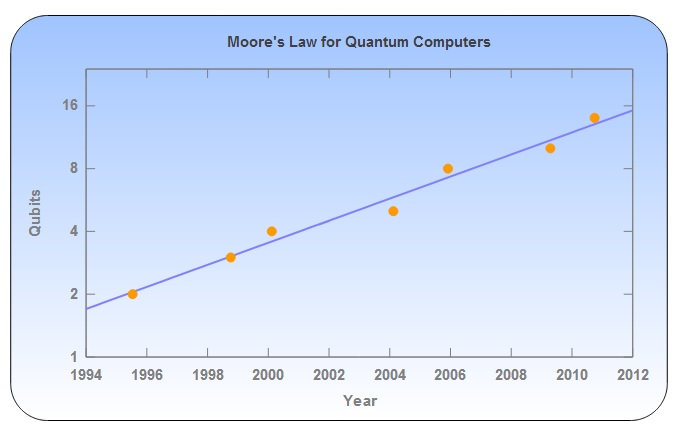

Looking at the history of quantum computing experiments, one finds an exponential increase in the number of qubits, similar to Moore's law for classical computers. The number of qubits doubles roughly every six years; if this trend continues, quantum computers for real applications could arrive within nine to twelve years. The scalability of quantum computing remains a subject of lively speculation, both within the scientific community and beyond it. Since we are interested in scalability, the natural quantity to track is the number of qubits realized in an experiment. In addition, an experiment should only count if it demonstrates controlled, coherent manipulation of individual quantum objects, such as multi-qubit gates or the generation of mutual entanglement. The first experiment fulfilling these criteria was the demonstration of the Cirac-Zoller gate for two trapped ions by Dave Wineland's group at NIST in 1995.

Since then, the number of qubits has grown rapidly, with ion-trap experiments and linear-optics implementations competing for the top spot. At the time of writing, the world record in mutual entanglement is 14 qubits, demonstrated by Rainer Blatt's ion-trap group in Innsbruck. Plotting the progression of these records over time yields the plot shown in this section.

Because the qubit axis is logarithmic, the trend corresponds to an exponential increase, similar to Moore's law for classical computers. The blue line is a fit to the data, indicating a doubling of the number of qubits every 5.7±0.4 years. This exponential fit can be used to make some interesting predictions. Of course, we would like to know when quantum computers can be expected to outperform classical computers, at least for some problems. The first real applications of quantum computers are likely to be in simulating difficult quantum many-body problems arising, for example, in high-temperature superconductivity, quark bound states such as protons and neutrons, or quantum magnets. For these problems, the record for classical simulations is currently 42 qubits. While classical computers will improve as well, a conservative estimate is that a quantum computer needs to control 50 qubits to beat classical simulations. Extrapolating from the exponential fit, this could happen between 2020 and 2023. Optimization and search problems that benefit from Grover's algorithm could become tractable somewhat later, although that depends heavily on the problem at hand. If one is bold enough to assume that the same scaling continues even further, 2048-bit RSA keys would come under attack somewhere between 2052 and 2059.<ref>http://www.quantenblog.net/physics/moores-law-quantum-computer</ref>
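The extrapolation above can be reproduced with a few lines of arithmetic. The sketch below assumes pure exponential growth with the fitted doubling time of 5.7 years (and the ±0.4-year band around it) and, as a simplifying assumption, starts from the 14-qubit record rather than from the fitted line itself, so its results are only approximate. The function name `years_until` is hypothetical.

```python
import math

def years_until(target_qubits: float, current_qubits: float, doubling_years: float) -> float:
    """Years needed to grow from current_qubits to target_qubits,
    assuming the qubit count doubles every `doubling_years` years."""
    doublings = math.log2(target_qubits / current_qubits)
    return doublings * doubling_years

# Central estimate plus the lower and upper ends of the fitted doubling time.
for t in (5.3, 5.7, 6.1):
    print(f"doubling every {t} yr -> 50 qubits in {years_until(50, 14, t):.1f} yr")
```

Starting from the 14-qubit record, this gives roughly 10 to 11 years to reach 50 qubits, which is broadly consistent with the 2020-2023 window quoted above.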

===Spintronics===

Electron spin has two possible states, “up” or “down.” Aligning spins in a material creates magnetism. Moreover, magnetic fields affect the passage of “up” and “down” electrons differently. Under normal conditions, the spins of conducting electrons are roughly half up and half down. Controlling the spin of electrons within a device can produce surprising and substantial changes in its properties. A new generation of devices based on the manipulation of spins in solids may offer entirely new functionality and could provide a foundation for new computational paradigms. For example, the first widely used spintronic device, the Giant Magnetoresistive (GMR) spin-valve head for magnetic hard-disk drives, exhibits large changes in electrical resistance due to variations in the relative magnetic orientation of the layers on either side of a spacer layer only 2-3 atoms thick. When the orientations are in the same direction (“parallel”), electrons with one type of spin pass freely while those with the opposite spin meet greater resistance. When the magnetic orientations are in opposite directions (“antiparallel”), all the electrons meet resistance, resulting in a high overall electrical resistance through the head. Because the structure is designed so that a faint external magnetic field changes the relative magnetic orientations of the key layers, the GMR head became an extraordinarily sensitive magnetic-field sensor. Pioneered by IBM in 1997, the GMR head enabled hard-disk drives to read smaller data bits, which led to a more than 40-fold increase in data-storage density over the past seven years.
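The parallel/antiparallel behaviour described above is commonly explained with a simple two-current resistor model, in which spin-up and spin-down electrons form two independent conduction channels. The sketch below assumes that model, with a hypothetical low resistance `r` for electrons whose spin matches a layer's magnetization and a hypothetical high resistance `big_r` otherwise; real spin valves are considerably more complex.

```python
def parallel(a: float, b: float) -> float:
    """Resistance of two resistors connected in parallel."""
    return a * b / (a + b)

def gmr_resistances(r: float, big_r: float) -> tuple[float, float]:
    """Return (R_parallel, R_antiparallel) for a two-layer spin valve in the
    two-current model. r: resistance for spins matching a layer's
    magnetization; big_r: resistance for the opposite spins (big_r > r)."""
    # Parallel layers: one spin channel sees r in both layers, the other sees big_r twice.
    r_p = parallel(2 * r, 2 * big_r)
    # Antiparallel layers: each spin channel sees r in one layer and big_r in the other.
    r_ap = parallel(r + big_r, r + big_r)
    return r_p, r_ap

r_p, r_ap = gmr_resistances(r=1.0, big_r=3.0)
print(f"R_P = {r_p:.2f}, R_AP = {r_ap:.2f}, GMR ratio = {(r_ap - r_p) / r_p:.0%}")
```

As long as `big_r` exceeds `r`, the antiparallel arrangement always has the higher resistance in this model, which is what lets the head turn a small change in magnetic orientation into a measurable change in current.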

Spintronic structures are also at the heart of Magnetic Random Access Memory [http://en.wikipedia.org/wiki/Magnetoresistive_random-access_memory (MRAM)], a fast non-volatile memory concept originally proposed by IBM and currently being developed by IBM, Infineon and others, and of the Racetrack Memory Project, a new storage-class memory technology. The use of electron spin for general logic design is an active area of research. Non-volatile spin-logic devices that could enable scaling beyond the year 2025<ref>http://en.wikipedia.org/wiki/Magnetoresistive_random-access_memory</ref> are being studied, and spin-transfer-torque-based logic devices that use spins and magnets for information processing have been proposed and are under extensive investigation at Intel. These devices are now part of the [http://en.wikipedia.org/wiki/Magnetoresistive_random-access_memory 2012 ITRS exploratory roadmap] and could potentially be included in future computers.

==New packaging methods==

Scaling with current manufacturing and chip-design technologies has become more difficult, partly because of power-density constraints and partly because interconnects do not get faster as transistors do. Innovations in packaging techniques enable a large number of components to be placed on a relatively small chip.

===3-D IC===

The three-dimensional integrated circuit (3-D IC) is a chip in which two or more layers of active electronic components are integrated both vertically and horizontally into a single circuit. 3-D integrated circuits were proposed to address the scaling challenge by stacking 2-D dies and connecting them in the third dimension. This promises faster communication between the layered chips than a planar layout offers.<ref>http://en.wikipedia.org/wiki/Three-dimensional_integrated_circuit#cite_note-AutoRE-7-8</ref>
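A rough way to see why stacking can speed up communication is to compare average wire lengths. The sketch below places the same 64 hypothetical functional blocks either on one 8 x 8 die or in four stacked 4 x 4 layers and computes the mean Manhattan distance between distinct blocks. It assumes, for simplicity, that one vertical hop between layers costs the same as one lateral hop; this is an illustration, not a model of any real 3-D IC.

```python
from itertools import product

def mean_manhattan(dims: tuple[int, ...]) -> float:
    """Mean Manhattan distance between distinct cells of a grid with the given
    dimensions, counting every unit step (lateral or vertical) as length 1."""
    cells = list(product(*(range(d) for d in dims)))
    total = 0
    for a in cells:
        for b in cells:
            total += sum(abs(x - y) for x, y in zip(a, b))
    n = len(cells)
    # Self-pairs contribute 0 to the total, so divide by the number of distinct ordered pairs.
    return total / (n * (n - 1))

planar = mean_manhattan((8, 8))       # 64 blocks on a single die
stacked = mean_manhattan((4, 4, 4))   # the same 64 blocks in four stacked layers
print(f"planar: {planar:.2f}, stacked: {stacked:.2f}")
```

Under these assumptions the average distance drops by roughly 30%; in a real chip the benefit depends on how long and how fast the vertical connections actually are.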

This extends Moore’s Law and enables a new generation of tiny but powerful devices.

=Conclusions=

The demise of Moore's Law has been predicted several times during the past 40 years, yet transistor counts continue to double roughly every two years on average. With the traditional transistor approach, unavoidable physical limits have been predicted around the 16 nm process because of [http://en.wikipedia.org/wiki/Quantum_tunnelling quantum tunneling].<ref>http://news.cnet.com/2100-1008-5112061.html</ref> If this is true, the current pace of innovation would hit "Moore's Wall" around 2022, or in about 10 years. This "10-year horizon" for Moore's Law has existed since the early 1990s, with new designs, processes, and breakthroughs continuing to extend the timeline.<ref>http://arxiv.org/pdf/astro-ph/0404510v2.pdf</ref><ref>http://java.sys-con.com/node/557154</ref> New technologies that leverage three-dimensional chip architecture could allow years of continued growth in transistor counts, and exotic designs could further increase the theoretical capacity of transistors in a given space. If the past is any predictor of future trends, it is safe to say that the end of Moore's Law "is about 10 years away".

On another note, if we relax the definition of Moore's Law to include computational performance gains, we open a whole new avenue by which to measure computing power. Most of the easy performance gains related to transistor counts have already been realized, but new designs for how basic computing is performed could, in theory, yield large increases in performance without doubling transistor counts or requiring extremely high power.<ref>http://abcnews.go.com/Technology/story?id=4006166&page=1#.TzAbDcVA_H4</ref><ref>http://www.gotw.ca/publications/concurrency-ddj.htm</ref> The era of the traditional transistor is not quite over yet, but the relevance of transistor counts may be nearing its end.

=References=

<references/>

=Quiz=

# What does Moore's law state?
## Doubling of transistor area every two years
## Doubling of profit in the semiconductor industry every two years
## New process technology every two years
## Doubling of transistors per unit chip area every two years
# Current-generation transistors may hit Moore's wall in the near future because (choose at least one answer):
## Quantum tunneling effects become pronounced at transistor sizes below 5 nm
## Fabrication costs increase exponentially
## The semiconductor business is not lucrative
## There is less demand for new products in the market
# Intel's current scaling roadmap extends down to which process node?
## 22 nm
## 10 nm
## 5 nm
## 2 nm
# "Moore's law drives the electronics industry."
## True
## False
# Intel's tri-gate (3-D) transistors provide which of the following advantages? (Select at least one.)
## Much lower leakage than planar transistors
## Three transistors combined in one, saving area
## Higher speed than the existing technology
## Self-cooling
# Moore's second law is about:
## Performance of a chip
## Manufacturing cost
## Chip area
## Design advancements
# Moore's law is related to semiconductor physics.
## True
## False
# Quantum computing experiments follow Moore's law.
## True
## False
# 3-D IC technology refers to:
## ICs manufactured using 3-D transistors
## Interconnect technology that enables stacking of ICs
## Three-dimensional improved conductivity
## All of these
# Moore's law has been around for:
## 80-90 years
## 40-50 years
## 20-30 years
## None of these

Latest revision as of 04:32, 5 February 2013

What is Moore's Law?

In 1965, Gordon Moore, who later co-founded Intel, forecast the rapid pace of technology innovation. His prediction, popularly known as “Moore’s Law,” states that transistor density on integrated circuits doubles about every two years.<ref>http://www.computerhistory.org/semiconductor/timeline/1965-Moore.html</ref> The original prediction, presented in a 1965 paper in Electronics Magazine, observed an annual doubling in the number of components, such as transistors, per chip. He refined his view in 1975, adopting a two-year cycle in an updated paper. Rather than giving an empirical formula for the rate of increase, Moore used prose, graphs, and images to convey his predictions and observations to a broad audience. In some ways this increased the staying power of Moore's law, allowing the industry to use it as a measurable benchmark of success. Virtually all digital devices are in some way fundamentally linked to the growth set in motion by Moore's law.<ref>http://en.wikipedia.org/wiki/Moore's_law</ref>

A Common Misconception

Moore's Law is often linked to performance improvements as measured in CPU clock speeds. In the 1980s, former Intel executive David House stated that chip performance would double every 18 months.<ref>http://news.cnet.com/Myths-of-Moores-Law/2010-1071_3-1014887.html</ref> This is a consequence of Moore's Law, but it is not what Moore's Law actually claims. In fact, because of heat-dissipation issues,<ref>http://www.gotw.ca/publications/concurrency-ddj.htm</ref><ref>http://techtalk.pcpitstop.com/2007/08/06/cpu-clock-speeds/</ref> clock speeds have remained roughly flat since 2005,<ref>http://www.kmeme.com/2010/09/clock-speed-wall.html</ref> while the number of transistors continues to double roughly every two years.
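The gap between the two claims compounds quickly. The short sketch below simply evaluates 2^(years / doubling period) to compare a doubling every 18 months (House's performance figure) with a doubling every two years (Moore's transistor figure) over a hypothetical ten-year span; the function name `growth_factor` is illustrative only.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years`, doubling every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)

span = 10  # hypothetical span in years
print(f"18-month doubling: x{growth_factor(span, 1.5):.0f}")  # House's performance claim
print(f"24-month doubling: x{growth_factor(span, 2.0):.0f}")  # Moore's transistor-count claim
```

Over ten years the 18-month figure implies roughly a 100-fold increase, while the two-year figure implies a 32-fold increase, so the two statements diverge substantially even over a single decade.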

Moore's law, past to present

Data from the inception of Moore's law to the present show that, consistent with Moore's prediction, the number of transistors on a chip has doubled approximately every two years. Several developments contributed to this; without them, Moore's law could have slowed or plateaued. One is the invention of dynamic random-access memory (DRAM), a type of random-access memory that stores each bit in a separate capacitor on an integrated circuit. The main advantage of DRAM over SRAM is that only one transistor and one capacitor are required per bit, compared with four or six transistors for SRAM. Another is complementary metal-oxide-semiconductor (CMOS) technology, which allowed a higher density of logic functions on a chip with the added benefits of low power consumption and immunity to electrical noise. Last is the invention of the integrated circuit itself. Moore's law is responsible not only for larger and faster chips, but also for smaller, cheaper, and more efficient ones.

As the examples below and to the right show, Moore's law appears to have held at a roughly constant rate of growth, consistent with Moore's prediction. Some year-to-year variation can be explained by the introduction of new technologies, in manufacturing or otherwise, that helped put Moore's law back on track. This is most evident with the Dual-Core Itanium 2 processor, which was a full four years ahead of its competitors. In the example to the right, there is a visible dip in transistor count during 1995-2003, but it is balanced by an almost equal increase over the following eight years.

| Processor | Transistor count | Date of introduction | Manufacturer | Process | Area |

|---|---|---|---|---|---|

| Intel 4004 | 2,300 | 1971 | Intel | 10 µm | 12 mm² |

| Intel 8008 | 3,500 | 1972 | Intel | 10 µm | 14 mm² |

| MOS Technology 6502 | 3,510 | 1975 | MOS Technology | 21 mm² | |

| Motorola 6800 | 4,100 | 1974 | Motorola | 16 mm² | |

| Intel 8080 | 4,500 | 1974 | Intel | 6 μm | 20 mm² |

| RCA 1802 | 5,000 | 1974 | RCA | 5 μm | 27 mm² |

| Intel 8085 | 6,500 | 1976 | Intel | 3 μm | 20 mm² |

| Zilog Z80 | 8,500 | 1976 | Zilog | 4 μm | 18 mm² |

| Motorola 6809 | 9,000 | 1978 | Motorola | 5 μm | 21 mm² |

| Intel 8086 | 29,000 | 1978 | Intel | 3 μm | 33 mm² |

| Intel 8088 | 29,000 | 1979 | Intel | 3 μm | 33 mm² |

| Intel 80186 | 55,000 | 1982 | Intel | ||

| Motorola 68000 | 68,000 | 1979 | Motorola | 4 μm | 44 mm² |

| Intel 80286 | 134,000 | 1982 | Intel | 1.5 µm | 49 mm² |

| Intel 80386 | 275,000 | 1985 | Intel | 1.5 µm | 104 mm² |

| Intel 80486 | 1,180,000 | 1989 | Intel | 1 µm | 160 mm² |

| Pentium | 3,100,000 | 1993 | Intel | 0.8 µm | 294 mm² |

| AMD K5 | 4,300,000 | 1996 | AMD | 0.5 µm | |

| Pentium II | 7,500,000 | 1997 | Intel | 0.35 µm | 195 mm² |

| AMD K6 | 8,800,000 | 1997 | AMD | 0.35 µm | |

| Pentium III | 9,500,000 | 1999 | Intel | 0.25 µm | |

| AMD K6-III | 21,300,000 | 1999 | AMD | 0.25 µm | |

| AMD K7 | 22,000,000 | 1999 | AMD | 0.25 µm | |

| Pentium 4 | 42,000,000 | 2000 | Intel | 180 nm | |

| Atom | 47,000,000 | 2008 | Intel | 45 nm | |

| Barton | 54,300,000 | 2003 | AMD | 130 nm | |

| AMD K8 | 105,900,000 | 2003 | AMD | 130 nm | |

| Itanium 2 | 220,000,000 | 2003 | Intel | 130 nm | |

| Cell | 241,000,000 | 2006 | Sony/IBM/Toshiba | 90 nm | |

| Core 2 Duo | 291,000,000 | 2006 | Intel | 65 nm | |

| AMD K10 | 463,000,000 | 2007 | AMD | 65 nm | |

| AMD K10 | 758,000,000 | 2008 | AMD | 45 nm | |

| Itanium 2 with 9MB cache | 592,000,000 | 2004 | Intel | 130 nm | |

| Core i7 (Quad) | 731,000,000 | 2008 | Intel | 45 nm | 263 mm² |

| Six-Core Xeon 7400 | 1,900,000,000 | 2008 | Intel | 45 nm | |

| POWER6 | 789,000,000 | 2007 | IBM | 65 nm | 341 mm² |

| Six-Core Opteron 2400 | 904,000,000 | 2009 | AMD | 45 nm | 346 mm² |

| 16-Core SPARC T3 | 1,000,000,000 | 2010 | Sun/Oracle Corporation|Oracle | 40 nm | 377 mm² |

| Core i7 (Gulftown) | 1,170,000,000 | 2010 | Intel | 32 nm | 240 mm² |

| 8-core POWER7 | 1,200,000,000 | 2010 | IBM | 45 nm | 567 mm² |

| z196 | 1,400,000,000 | 2010 | IBM | 45 nm | 512 mm² |

| Dual-Core Itanium 2 | 1,700,000,000 | 2006 | Intel | 90 nm | 596 mm² |

| Tukwila | 2,000,000,000 | 2010 | Intel | 65 nm | 699 mm² |

| Core i7 (Sandy Bridge-E) | 2,270,000,000 | 2011 | Intel | 32 nm | 434 mm² |

| Nehalem-EX | 2,300,000,000 | 2010 | Intel | 45 nm | 684 mm² |

| 10-Core Xeon Westmere-EX | 2,600,000,000 | 2011 | Intel | 32 nm | 512 mm² |

| Six-core IBM zEC12 | 2,750,000,000 | 2012 | IBM | 32 nm | 597 mm² |

| 8-Core Itanium Poulson | 3,100,000,000 | 2012 | Intel | 32 nm | 544 mm² |

| 62-Core Xeon Phi | 5,000,000,000 | 2012 | Intel | 22 nm | |

<ref>http://en.wikipedia.org/wiki/Transistor_count</ref>
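As a rough check of the two-year doubling period, the sketch below estimates a doubling time from rows copied out of the table above. It does this in two ways: from two endpoints, and by fitting a least-squares straight line to log2(transistor count) against year. The choice of rows is an arbitrary assumption, and this fit is only one simple estimator. It is not how Moore arrived at his figures.

```python
import math

# (name, transistor count, year) for a few rows taken from the table above.
# Which rows to include is an illustrative assumption.
CHIPS = [
    ("Motorola 68000", 68_000, 1979),
    ("Intel 80386", 275_000, 1985),
    ("Intel 80486", 1_180_000, 1989),
    ("Pentium", 3_100_000, 1993),
    ("Pentium 4", 42_000_000, 2000),
    ("Core 2 Duo", 291_000_000, 2006),
    ("Core i7 (Sandy Bridge-E)", 2_270_000_000, 2011),
    ("62-Core Xeon Phi", 5_000_000_000, 2012),
]


def doubling_time_two_points(count_a, year_a, count_b, year_b):
    """Years per doubling implied by two (count, year) data points."""
    doublings = math.log2(count_b / count_a)
    return (year_b - year_a) / doublings


def doubling_time_fit(chips):
    """Least-squares fit of log2(count) against year; returns years per doubling."""
    xs = [year for _, _, year in chips]
    ys = [math.log2(count) for _, count, _ in chips]
    n = len(chips)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx  # doublings per year
    return 1.0 / slope


if __name__ == "__main__":
    two_point = doubling_time_two_points(134_000, 1982, 5_000_000_000, 2012)
    print(f"Intel 80286 -> Xeon Phi: {two_point:.2f} years per doubling")
    print(f"Least-squares fit: {doubling_time_fit(CHIPS):.2f} years per doubling")
```

Both estimates come out close to two years per doubling, which is consistent with Moore's 1975 revision. Choosing a different set of rows will move the fitted value somewhat.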

A quick primer on current manufacturing techniques

At the heart of Moore's Law is the transistor. Computer chips contain hundreds of millions, and now billions, of transistors built on a wafer of silicon. To make these chips, a “stencil” is first made that contains the outlines of millions of transistors. The stencil is then placed over a silicon wafer coated with a material that is sensitive to ultraviolet light. The light passes through the gaps in the stencil and exposes the wafer, which is then bathed in acid. The acid carves out the outlines of the circuits and of millions of transistors. Because the wafer consists of many conducting and semiconducting layers, the acid cuts into it at different depths and in different patterns, so circuits of enormous complexity can be created.

One reason Moore’s law has relentlessly increased the power of chips is that the wavelength of the UV light used can be made shorter and shorter, making it possible to etch ever-smaller transistors onto silicon wafers. Since UV light has a wavelength as small as 10 nanometers, the smallest transistor that can be etched is about thirty atoms across.<ref>http://en.wikipedia.org/wiki/Photolithography</ref> Because of physical limitations, this process cannot go on forever. At some point it will be physically impossible to etch smaller working transistors, and Moore’s law as we understand it will finally collapse.
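Smaller features translate into more transistors, and a little arithmetic shows how. The sketch below rests on a common industry rule of thumb that is assumed here and is not stated in the text above. Under that rule, each new process node shrinks linear dimensions by about 0.7 (roughly 1/√2), so each transistor's area halves and the number that fit in a given area doubles. The sketch applies that factor starting from the 45 nm node listed in the table.

```python
import math

# Assumed rule of thumb: each process node scales linear dimensions by 1/sqrt(2) (about 0.7).
SHRINK = 1 / math.sqrt(2)


def next_nodes(start_nm, steps):
    """Return the starting feature size followed by `steps` successive 1/sqrt(2) shrinks (nm)."""
    nodes = [start_nm]
    for _ in range(steps):
        nodes.append(nodes[-1] * SHRINK)
    return nodes


def density_gain(old_nm, new_nm):
    """Transistors per unit area scale as 1 / (feature size)^2."""
    return (old_nm / new_nm) ** 2


if __name__ == "__main__":
    for nm in next_nodes(45, 3):
        print(f"{nm:5.1f} nm")
    print(f"Density gain per step: {density_gain(45, 45 * SHRINK):.2f}x")
```

The computed sequence (about 31.8 nm, then 22.5 nm) lines up closely with the 32 nm and 22 nm processes in the table. Each step doubles density, which is one route to the doubling the law describes.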

Current estimates predict that around 2020 or soon after, Moore’s law will gradually cease to hold for traditional transistor technology. Transistors will be so small that quantum effects begin to take over and electrons "leak" out of the wires.<ref>http://computer.howstuffworks.com/small-cpu2.htm</ref><ref>http://www.monolithic3d.com/2/post/2011/09/is-there-a-fundamental-limit-to-miniaturizing-cmos-transistors1.html</ref> For example, the thinnest layer inside a chip will be about five atoms across. At that size, quantum effects will dominate, and transistors will not function as they currently do without further technological advances.

Why do we need Moore's law?

Begun as a simple observation, Moore’s Law has come to represent the amazing and seemingly inexhaustible capacity for exponential growth in electronics.<ref>P. K. Bondyopadhyay, “Moore’s Law governs the silicon revolution,” Proc. IEEE, vol. 86, no. 1, pp. 78–81, Jan. 1998.</ref> The historical regularity and predictability of Moore's Law produce organizing and coordinating effects throughout the semiconductor industry that not only set the pace of innovation but also define the rules and very nature of competition. Semiconductors make up an ever larger share of electronic components and systems, whether used directly by consumers or built into end-use products they buy. As a result, Moore's Law has led users and consumers to expect a continuous stream of faster, better, and cheaper high-technology products. As integrated circuit costs have decreased, chips have made their way into modern products ranging from automobiles to greeting cards.<ref>http://download.intel.com/museum/Moores_Law/Printed_Materials/Moores_Law_Backgrounder.pdf</ref>

The drivers for technology development fall into two categories: push and pull. Push drivers are technology enablers, the things that make it possible to achieve the technical improvements. Moore described the three push drivers as increasing chip area, decreasing feature size, and design cleverness. Pull drivers are the economic reasons for making those improvements, and for Moore’s Law they have been extraordinarily compelling. As transistor dimensions shrank, transistors became smaller, lighter, and faster. They also consumed less power and were, in most cases, more reliable. All of these factors make the transistor more desirable for virtually every possible application. But there is more. Historically, the semiconductor industry has been able to manufacture silicon devices at an essentially constant cost per area of processed silicon. Thus, as the devices shrank, the cost per transistor shrank with them. Each step along the roadmap of Moore’s Law virtually guaranteed economic success. <ref>http://commonsenseatheism.com/wp-content/uploads/2011/12/Mack-Fifty-Years-of-Moores-Law.pdf</ref>
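The cost argument can be checked against the table. If processed silicon costs roughly the same per unit area from one generation to the next, then the cost of each transistor is proportional to die area divided by transistor count. The sketch below uses three table rows that list both values. The cost per mm² is a hypothetical placeholder, so only the ratios between chips are meaningful.

```python
# Hypothetical, constant cost of processed silicon per mm^2 (placeholder, arbitrary units).
COST_PER_MM2 = 0.10

# (name, transistor count, die area in mm^2) taken from the table above.
CHIPS = [
    ("Intel 80486", 1_180_000, 160),
    ("Core i7 (Quad)", 731_000_000, 263),
    ("Core i7 (Sandy Bridge-E)", 2_270_000_000, 434),
]


def cost_per_transistor(count, area_mm2, cost_per_mm2=COST_PER_MM2):
    """Cost of one transistor when silicon costs the same per unit area."""
    return cost_per_mm2 * area_mm2 / count


if __name__ == "__main__":
    _, base_count, base_area = CHIPS[0]
    baseline = cost_per_transistor(base_count, base_area)
    for name, count, area in CHIPS:
        cost = cost_per_transistor(count, area)
        # Ratio is independent of the placeholder COST_PER_MM2.
        print(f"{name}: {cost:.3e} per transistor, {cost / baseline:.5f} of the 80486's cost")
```

Under the constant-cost-per-area assumption, a transistor on the 2008 Core i7 costs a few hundred times less than one on the 1989 Intel 80486, whatever value is chosen for the cost per mm².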

Why Moore's law still holds

For several decades Moore's law has been the guiding principle in the development of integrated circuits, the heart of the modern computer's ever-improving price-performance. Over the short term this rate can be expected to continue, if not to increase; over the longer term, the rate of increase is uncertain. Moore's observation has proven remarkably prescient and is among the most frequently cited "predictions" of the 20th century. But Moore’s law is less a prediction than an assertion. It is a good example of what systems scientists call feed-forward and lawyers call conscious parallelism <ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref>. Feed-forward is a systems concept in which signals from outside a system are predicted and fed into the control of the system of interest. Sometimes these are psychological predictions, as when people anticipate a gas shortage, buy ahead, and thereby cause the very shortage they feared. Conscious parallelism usually describes a kind of price-fixing among competitors that happens without any written or spoken agreement among the parties. Instead, each party reads the signals available in its environment and adjusts its behavior to achieve a desired outcome. But conscious parallelism can apply to terms other than price. It is hard to overstate the contribution that human expectations make to the functioning of our systems. Recall how, in 1962, President Kennedy motivated Americans to reach for the moon: "We choose to go to the moon in this decade and do the other things, not because they are easy, but because they are hard, because that goal will serve to organize and measure the best of our energies and skills, because that challenge is one that we are willing to accept, one we are unwilling to postpone, and one which we intend to win, and the others, too."
<ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref>

As for the dramatic price reductions in computing, they did not happen for any essential technical reason. Nor were they the result of a simple set of scientific, engineering, production, marketing, or financial forces; indeed, the forces at work have changed over the four decades in question. Carver Mead said it clearly when he pointed out that Moore's law is "not a law of physics, it's about human belief, and when people believe in something, they'll put energy behind it to make it come to pass." <ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref> Gordon Moore himself eventually summed up the many developments that had kept his observation relevant this way: "Every company knows that unless they keep moving at that pace, they are going to fall behind. It has become the driver of what happens." <ref>http://www.fastcompany.com/1108661/where-auto-industry%E2%80%99s-moore%E2%80%99s-law</ref>

"Moore's Law is self-perpetuating," says Daniel Grupp, manager of advanced device development at Acorn Technologies and a visiting scholar at Stanford University’s Center for Integrated Systems. “It is relevant, and continues to be, because the whole cycle of design, development, manufacture, distribution and sales is known to be sustainable if we follow Moore's Law. If we beat Moore's Law, the market cannot absorb the new products and engineers lose their jobs. If we slip behind Moore's Law, there is nothing to buy, and the distribution chain withers. For example, in planning the development strategy at Acorn Technologies, we carefully track Moore's Law to time the introduction of our devices.” <ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>

“Thirty-six years ago,” Grupp states, “Moore's Law was merely an observation. Now, we in the industry enforce the law. It has become a business dictum.” He adds, “Moore's Law will continue beyond our lifetimes into the new millennium.” Joe G. Xi, vice president of products and marketing at Plato Design Systems, says, “Moore’s Law is still in effect. If you are a leading-edge semiconductor company, you definitely have to observe Moore’s Law – not Moore’s Law per se, but the migration of the process technologies.” The dictum of 2x transistors every 18 months (as the amended Moore’s Law has it) “may not be as accurate (now) as it was five or six years ago,” Xi adds. “Does the product that people develop reflect whether they have considered Moore’s Law? Usually, they have not.” According to Xi, analog IC companies are the ones that must answer more directly to Moore’s Law. “In general, Moore’s Law is still correct,” he concludes. Jodi Shelton, executive director of the Fabless Semiconductor Association, says, “Moore’s Law ends up being pretty accurate. Look how quickly we’ve moved from 0.18-micron (device features) to 0.13 micron; it’s amazing.” <ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>

Moore's Law comes into play in forecasts, or any time someone thinks about where a particular device will be in the future. If a new process for making smaller ICs arrives every 18 months, it is easy to see a current design becoming obsolete within three years, so Moore's Law very much affects design cycles. “As soon as a company puts out a product, they turn right around and start to redesign partially to take advantage of new technologies. Sometimes there are instances where something isn't feasible today, but it could be down the road, if Moore's Law continues to be upheld. The smaller processes help to make advances not only in the CPU world, but also portable, low-power products like cell phones, digital cameras, etc. This allows constant turnovers and the ability for the electronics industry to put out an upgraded product every year. It is definitely in the interest of the electronics industry to make sure Moore's Law remains unbroken.” Robert O’Dell, vice president of marketing at Wintegra, observes, “What drives Moore’s Law is the ability of the fabs to produce silicon that is smaller.” Suppliers of wafer fabrication equipment, he notes, “plan on Moore’s Law; they are producing equipment and solving physics problems.” Still, he adds, “I always wonder if it’s a self-fulfilling prophecy.” Moore’s Law is relevant and important these days, according to the marketing executive; “it anchors what’s given in a period of time,” he says. The number of transistors on a given IC may be doubling every 12-18 months, but O’Dell states that other considerations are leaving Moore’s Law behind. Communications bandwidths, especially in the optical component space, “can’t wait around for Moore’s Law,” he notes. “No one has built an all-optical switch that works. Something has to give; you have to put more silicon down.” At the same time, “I/O is not scaling as fast as Moore’s Law,” O’Dell says.
<ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>
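The doubling periods quoted above (12 or 18 months) differ from Moore's own two-year figure, and the difference compounds quickly. The short sketch below computes the growth factor in transistor count over a given span for each period, assuming pure exponential growth. Over a three-year design horizon, an 18-month period means two doublings, or four times as many transistors.

```python
def growth_factor(months, doubling_period_months):
    """Multiplicative growth after `months`, assuming one doubling per period."""
    return 2 ** (months / doubling_period_months)


if __name__ == "__main__":
    for period in (12, 18, 24):
        three_years = growth_factor(36, period)
        ten_years = growth_factor(120, period)
        print(f"doubling every {period} months: "
              f"{three_years:.1f}x in 3 years, {ten_years:.0f}x in 10 years")
```

Over a decade, an 18-month period gives roughly 100x growth, while a 24-month period gives 32x. This is why the exact period matters when a company times its product introductions.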

Largely forgotten is that Moore, in an attempt to keep his ‘law’ effective, formulated Moore's Second Law, which says that facility costs increase on a semi-log scale. In other words, every additional doubling of device complexity increases manufacturing costs exponentially. Ultimately, the cost of obtaining the next doubling would exceed the entire wealth of the planet, making that next step economically impossible. Of course, economics aside, there is a practical limit somewhere: it'll be a while before we can build entire CPU chips that are a tenth the size of an atom. According to Ron Gyurcsik, group director for the Datacom IC strategic business group at Tality, the design-services subsidiary of Cadence Design Systems, Moore’s Second Law is more relevant these days than Moore’s First Law. Chip design is “more cost driven than anything,” he says. “The two (laws) work together,” Gyurcsik adds. “The cost emphasis drives us to Moore’s Law. Cost is the way the industry has been driven for a long time.” <ref>http://cse.yeditepe.edu.tr/~sgoren/spring2011/CSE221/mooreslaw.pdf</ref>
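Costs that "increase on a semi-log scale" grow exponentially, so a plot of the logarithm of cost against process generation is a straight line. The sketch below shows how quickly such growth overruns any fixed budget. The starting cost, the growth factor per generation, and the budget are all hypothetical placeholders, not figures from the text.

```python
import math

# Hypothetical inputs; none of these numbers come from the article.
START_COST = 1.0   # facility cost for the first generation (arbitrary units)
GROWTH = 2.0       # assumed cost multiplier per process generation
BUDGET = 1_000.0   # assumed largest affordable facility cost (same units)


def facility_cost(generation, start=START_COST, growth=GROWTH):
    """Exponential cost model: cost = start * growth**generation."""
    return start * growth ** generation


def generations_until_unaffordable(budget=BUDGET, start=START_COST, growth=GROWTH):
    """Smallest generation whose facility cost exceeds the budget."""
    if growth <= 1:
        raise ValueError("growth must be > 1 for costs to eventually exceed the budget")
    generation = 0
    while facility_cost(generation, start, growth) <= budget:
        generation += 1
    return generation


if __name__ == "__main__":
    for g in range(5):
        cost = facility_cost(g)
        # log10(cost) rises by the same amount each generation: a straight line on a semi-log plot.
        print(f"generation {g}: cost {cost:g}, log10(cost) {math.log10(cost):.3f}")
    print("first unaffordable generation:", generations_until_unaffordable())
```

However large the budget, exponential cost growth exceeds it after a number of generations that grows only logarithmically with the budget. Raising the budget tenfold buys just a few more generations here.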

With transistors scaled down to the molecular level, Heisenberg's uncertainty principle comes into play: it is not possible to know both an electron's position and its momentum with arbitrary precision at the same time. At that point, for Moore's law to continue, the basic concept of semiconductors would have to give way to a revolution such as quantum computing, which is already in the early stages of development. Intel already has a technology roadmap through 2020, reaching the 5 nm process node.<ref>http://www.intel.com/content/www/us/en/it-managers/peek-at-the-future-rick-white-presentation.html </ref> What happens after that remains to be seen. It should be noted that the end of Moore's law has been predicted since the 1970s, yet it still holds today because engineers and scientists have repeatedly overcome seemingly impossible challenges with groundbreaking innovations. From an engineering perspective, it is this drive to keep pace with Moore's law that has kept the law alive. Moore's law may fail someday, since the number of transistors cannot go on doubling forever, but it will continue to play a key role in the future of the computing industry. Moreover, doubling the number of transistors is not the only route to better performance. Even if scaling stops, performance can still be improved by exploiting new architectural schemes such as multicore processors and GPGPUs, which can serve the same basic purpose as transistor scaling: steadily improving computing performance.

The Road Ahead

Do Transistor Counts Matter?