CSC/ECE 506 Spring 2012/11a ht: Difference between revisions


Protic <ref name="protic">Protic, J.; Tomasevic, M.; Milutinovic, V.; , "Distributed shared memory: concepts and systems," Parallel & Distributed Technology: Systems & Applications, IEEE , vol.4, no.2, pp.63-71, Summer 1996

doi: 10.1109/88.494605

[http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=494605&isnumber=10721 paper]</ref> defines a DSM as a system that "consists of multiple independent processing nodes with local memory modules, connected by a general interconnection network." In other words, rather than attaching every processor to a single bus, the nodes are joined by a network of links. The new issue that arises is how one node communicates with another. This is usually solved with a message passing model, whose effectiveness depends on the topology used. According to Protic, "... compared to shared-memory systems, hardware problems are easier and software problems more complex in distributed-memory systems." The takeaway is that although the hardware limitation becomes less of a factor, the software problem becomes more complex. The figure below illustrates the structure of a DSM.

<center>[[Image:DSM Protic.jpg|800px| DSM_Protic]]</center>

<center> '''Figure 2. Breakdown of a DSM''' <ref name="protic">Protic, J.; Tomasevic, M.; Milutinovic, V.; , "Distributed shared memory: concepts and systems," Parallel & Distributed Technology: Systems & Applications, IEEE , vol.4, no.2, pp.63-71, Summer 1996

doi: 10.1109/88.494605

[http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=494605&isnumber=10721 paper]</ref></center>

According to Nitzberg <ref name="nitzberg">Nitzberg, B.; Lo, V.; , "Distributed shared memory: a survey of issues and algorithms," Computer , vol.24, no.8, pp.52-60, Aug. 1991

doi: 10.1109/2.84877

[http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=84877&isnumber=2778 paper]</ref>, DSM has been researched since the 1980s. There are many reasons why DSM has been a focus of research. Uniprocessor bus-based systems suffer from hardware and software limitations that a DSM can mitigate, but these limitations have only become an issue as more and faster processors are added to a system. Three approaches have been used to implement a DSM system: hardware, operating system, and compiler implementations.

According to Shi <ref name="shi">Shi, Weisong. Performance Optimization of Software Distributed Shared Memory Systems. Beijing: Higher Education, 2004. Print. [http://www.cs.wayne.edu/~weisong/papers/shi00-jiajia.pdf paper]</ref>, "The main difficulty in building a software DSM system is solving the memory coherence problem, namely, the problem of how to make a newly written value to be visible to demanding processors on time." The two major considerations here are the memory [http://en.wikipedia.org/wiki/Consistency_model consistency model] and the [http://en.wikipedia.org/wiki/Cache_coherence cache coherence] protocol. Shi<ref name="shi"></ref> gives the clearest description: "...memory consistency model determines when the modified data should be visible to other processors and cache coherence protocol determines what values should be visible to other processors." This is already a complex problem on a shared-bus multiprocessor system, and adding a layer of abstraction makes it considerably larger. Solutions to these two problems fall into two classes: hardware support and software support.

==Software Support==

The first software-supported DSM was created in 1986. In the more than 20 years since then, the original system has been greatly improved upon. First, software support usually finds some way to relax the memory consistency model, because message passing on a DSM is much more expensive than on a single-bus system. Over the last 20 years, more than 20 different memory consistency models have been proposed <ref name="shi"></ref>. Second, cache coherence must be addressed. When multiple cached copies exist and one copy is updated, the other copies must be invalidated or updated so that stale values are not used. Traditionally there are two techniques: snoopy protocols and directory-based protocols. According to Shi <ref name="shi"></ref>, snoopy protocols are used less often because they require hardware support. Lastly, according to Shi <ref name="shi"></ref>, the major problem is the interface. For a DSM system to be competitive, it has to work for many customers. Some representative software DSM implementations are listed below.

<center>[[Image:SoftwareDSM.jpg|600px| Software DSM]]</center>

<center> '''Figure 3. Representative Software DSM Implementations''' <ref name="shi"></ref></center>

==Hardware Support==

Although much of the research has gone towards software support for DSM, some has looked at adding hardware support. Unfortunately, according to Shi <ref name="shi"></ref>, large corporations have been reluctant to adopt hardware support. The concern with hardware support is compatibility. Fortunately, the recent adoption of certain hardware standards will allow some hardware support at a mass-market level.

<br>

=Optimization Implementations=

==Shasta Protocol==

The Shasta protocol improves on ordinary software distributed shared memory systems by keeping shared data coherent at a fine granularity. It does so by inserting inline code that checks the cache state of shared data before each load or store. The protocol also allows the coherence granularity to vary across different shared data structures in a single application, alleviating the inefficiencies that arise from the fixed, large (page-size) granularity of communication typical of most software shared memory systems. As in hardware cache-coherent multiprocessors, shared data in this system has three states: Invalid, Shared, and Exclusive. The protocol provides a number of mechanisms for dealing with the long communication latencies in a workstation cluster. It minimizes extraneous coherence messages, and hence requires fewer messages to satisfy shared memory operations than protocols commonly used in hardware DSM systems. It also includes optimizations, such as non-blocking stores, that aggressively exploit a relaxed memory consistency model. Other optimizations include detection of migratory data sharing, issuing multiple load misses simultaneously, merging of load and store misses to the same cache line, and support for prefetching and home placement directives. <ref name="shasta protocol report">Scales, D.J.; Gharachorloo, K.; , "Performance of the Shasta Distributed Shared Memory Protocol" WRL Research Report 97/2 , February 1997

[http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-97-2.pdf report]</ref>
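The inline state check can be illustrated with a minimal sketch. This is not Shasta's actual implementation, which instruments compiled code; the class <code>SharedRegion</code>, the <code>fetch</code> callback, and the per-block state table are hypothetical names chosen for illustration. Each load or store first consults the state of the block it touches, and only a miss invokes the (here simulated) protocol. The <code>block_size</code> parameter stands in for the per-data-structure coherence granularity.

```python
from enum import Enum


class State(Enum):
    INVALID = 0
    SHARED = 1
    EXCLUSIVE = 2


class SharedRegion:
    """Toy shared region with a per-block state table (hypothetical)."""

    def __init__(self, size, block_size, fetch):
        self.block_size = block_size          # coherence granularity of this region
        self.data = [0] * size
        n_blocks = (size + block_size - 1) // block_size
        self.state = [State.INVALID] * n_blocks
        self.fetch = fetch                    # stand-in for the protocol's miss handler
        self.misses = 0

    def _check(self, addr, need_exclusive):
        # The "inline check": runs before every load or store.
        block = addr // self.block_size
        st = self.state[block]
        if st is State.EXCLUSIVE or (st is State.SHARED and not need_exclusive):
            return                            # hit: no protocol action needed
        self.misses += 1
        self.fetch(block, need_exclusive)     # miss: ask the protocol for the block
        self.state[block] = State.EXCLUSIVE if need_exclusive else State.SHARED

    def load(self, addr):
        self._check(addr, need_exclusive=False)
        return self.data[addr]

    def store(self, addr, value):
        self._check(addr, need_exclusive=True)
        self.data[addr] = value


requests = []
region = SharedRegion(size=64, block_size=16,
                      fetch=lambda block, excl: requests.append((block, excl)))
region.load(3)        # miss: block 0 becomes SHARED
region.load(5)        # hit: same block, already SHARED
region.store(5, 42)   # upgrade miss: block 0 becomes EXCLUSIVE
region.store(40, 7)   # miss: block 2 becomes EXCLUSIVE
```

In this sketch a smaller <code>block_size</code> means a store invalidates less neighbouring data, at the cost of a larger state table, which is the trade-off that variable granularity lets an application tune per data structure.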


<center>[[Image:Shasta1.gif|500px| Protocol representation]]</center> <br>

<center> '''Figure 4. Representation of Shasta protocol ''' <ref name="shasta protocol">Scales, D.J.; Gharachorloo, K.; , "Shasta : A system for Supporting Fine-Grain Shared Memory across Clusters" Eighth SIAM conference on Parallel Processing for Scientific Computing, March 1996

[https://docs.google.com/a/ncsu.edu/viewer?a=v&q=cache:piyKOneF8LAJ:citeseerx.ist.psu.edu/viewdoc/download?doi%3D10.1.1.36.7878%26rep%3Drep1%26type%3Dpdf+&hl=en&gl=us&pid=bl&srcid=ADGEESiE_RCkP-JIN07cLshGsVZJU4GiIJdS6tW1ZsDCbR76fsdOHdb3m9lYw-VP4crVcnLjwitvUB48WJPSYJPxRfAVHsdsKHn6nhVIeMRt0jwOOiyFpxPN8Uco1evmFUtzex0IhfcC&sig=AHIEtbRMoDKuNhNvSRcUT9Ew-4qMKHAZFw paper]</ref> </center>

===Multiple Coherence Granularity===

==Parallel File Input/Output in Cohesion system==

A parallel file I/O system that is independent of the memory consistency model can reduce file-related network traffic in page-based software DSM systems built on a network of workstations. File accesses in page-based software Distributed Shared Memory (DSM) systems are usually performed by a single node, which may lead to poor overall performance because a large amount of network traffic is generated to transfer data between this file-handling node and the other nodes. The parallel file I/O system alleviates this problem. The two main features of the design are the adaptive data distribution scheme and the delayed file access mechanism. The former distributes file blocks among the nodes according to the access pattern of the application, while the latter ensures that the data are transferred to the consumer node instead of the requester node by exploiting the memory mapping features of the virtual shared address space of the DSM system. Previously, most research had focused on speeding up the computation phase of a DSM application via relaxed memory consistency models, and the time-consuming file access operations were neglected. For ease of programming, the file accesses in a DSM application are usually performed in the sequentially executed initialization and completion phases on a single node. As a result, a large amount of network traffic is generated to distribute the file data to the other nodes during computation. This may degrade system performance, since the networks employed in many DSM systems are slow compared to other components such as the CPU or even the disk. The parallel file I/O system helps reduce this network traffic. The effectiveness of this design has been verified by implementing a prototype on a page-based software DSM system named Cohesion. <ref name="parallel file system">Ce-Kuen Shieh; Su Cheong Mac; Jyh-Chang Ueng, "Improving the Performance of Distributed Shared Memory Systems via Parallel File Input/Output" The Journal of Systems and Software 44(1998) 3-15 , August 1997

[http://www.sciencedirect.com/science/article/pii/S0164121298100390 paper]</ref>

<br>

===Design===

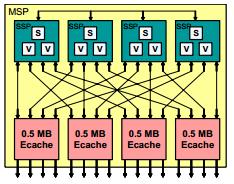

The two main goals of the design are to parallelize file accesses and reduce network traffic, and to minimize modifications to existing DSM applications. In this parallel file I/O system, every computation node also acts as a storage node. A parallel file is partitioned into file blocks, which are scattered among the nodes so that each node holds a disjoint portion of the parallel file. In the pre-run of an application, the files are placed on the root node (node 0), which is the node that initiates the DSM system and the application. The data access pattern of the application is collected, and the files are then partitioned into file blocks and redistributed among all nodes via an adaptive distribution scheme. In subsequent executions, the root node issues read requests via a delayed file access mechanism to ensure that the file blocks are placed on the consumer nodes instead of the requester node, i.e., the root node. When computation is completed, the root node issues write requests via the delayed access mechanism so that the data on each node are stored on its local disk instead of on the root node, thereby reducing network traffic.
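The redistribution step after the pre-run can be sketched as follows. This is only an illustration under stated assumptions: the function name <code>place_blocks</code>, the access-log format, and the "most frequent accessor wins" rule are hypothetical, and the scheme used in Cohesion may weigh accesses differently.

```python
from collections import Counter


def place_blocks(access_log, num_blocks, root=0):
    """Assign each file block to the node that touched it most in the pre-run.

    access_log: iterable of (node_id, block_id) pairs recorded during the pre-run.
    Blocks that were never accessed stay on the root node (an assumption).
    """
    counts = [Counter() for _ in range(num_blocks)]
    for node, block in access_log:
        counts[block][node] += 1
    placement = []
    for block_counts in counts:
        if block_counts:
            # Break ties by the lowest node id so the result is deterministic.
            best = min(block_counts, key=lambda n: (-block_counts[n], n))
        else:
            best = root
        placement.append(best)
    return placement


# Hypothetical pre-run trace: (node, block) pairs.
log = [(1, 0), (1, 0), (2, 0), (2, 1), (3, 2), (3, 2), (0, 2)]
placement = place_blocks(log, num_blocks=4)
```

Here <code>placement[b]</code> is the node whose local disk would hold block <code>b</code> in subsequent runs, so each node ends up with a disjoint set of blocks.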

<br>

<center>[[Image:Design.png|500px| Design of the system]]</center>

<center> '''Figure 5. File view of parallel I/O system''' <ref name="parallel file system">Ce-Kuen Shieh; Su Cheong Mac; Jyh-Chang Ueng, "Improving the Performance of Distributed Shared Memory Systems via Parallel File Input/Output" The Journal of Systems and Software 44(1998) 3-15 , August 1997

[http://www.sciencedirect.com/science/article/pii/S0164121298100390 paper]</ref> </center>

===Adaptive Data Distribution Scheme===

<br>

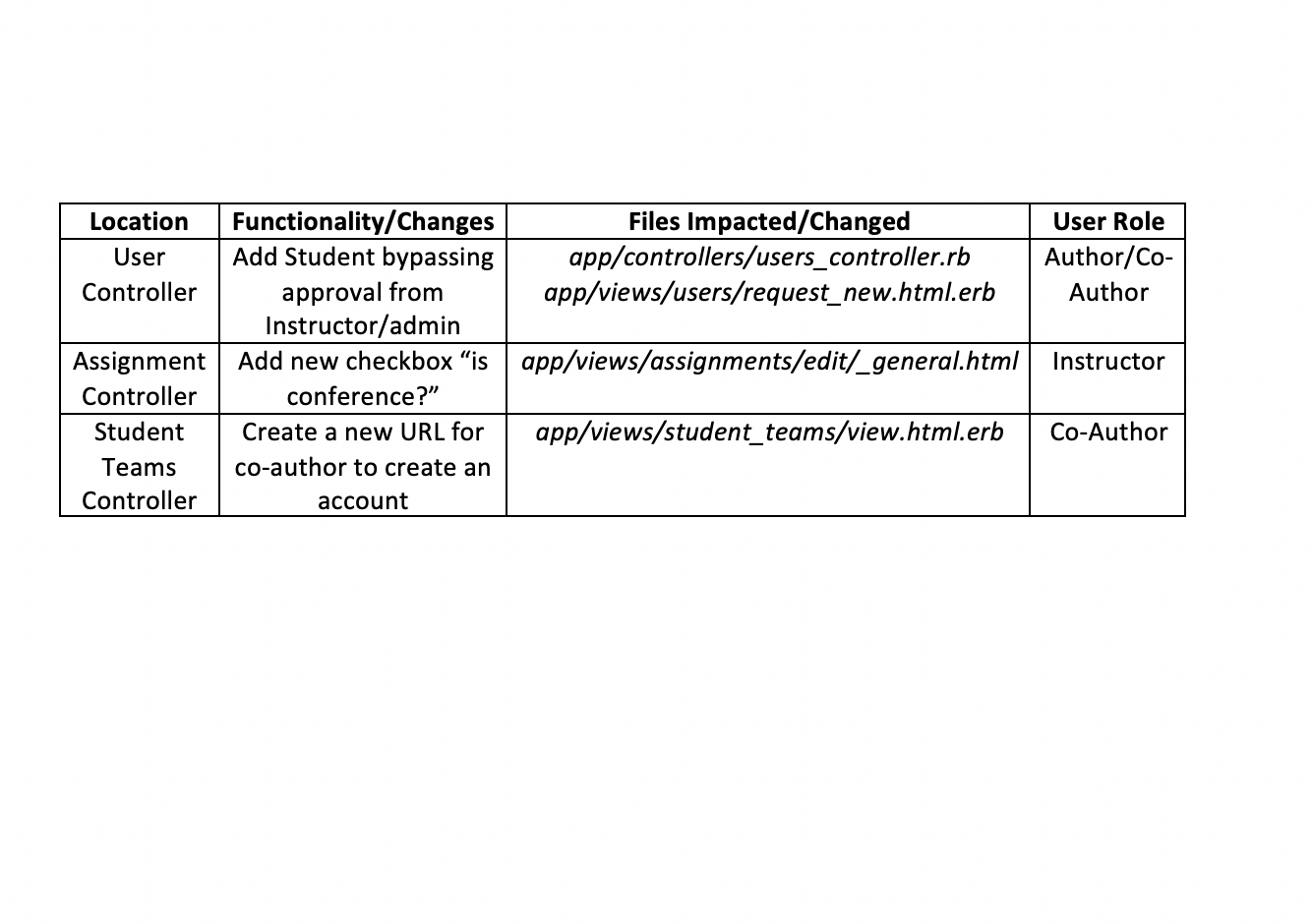

<center>[[Image:Metadata.png|450px|Representation of a data distribution scheme ]]</center>

<center> '''Figure 6. Structure of metadata head''' <ref name="parallel file system">Ce-Kuen Shieh; Su Cheong Mac; Jyh-Chang Ueng, "Improving the Performance of Distributed Shared Memory Systems via Parallel File Input/Output" The Journal of Systems and Software 44(1998) 3-15 , August 1997

[http://www.sciencedirect.com/science/article/pii/S0164121298100390 paper]</ref> </center>

===Delayed File Access Mechanism===

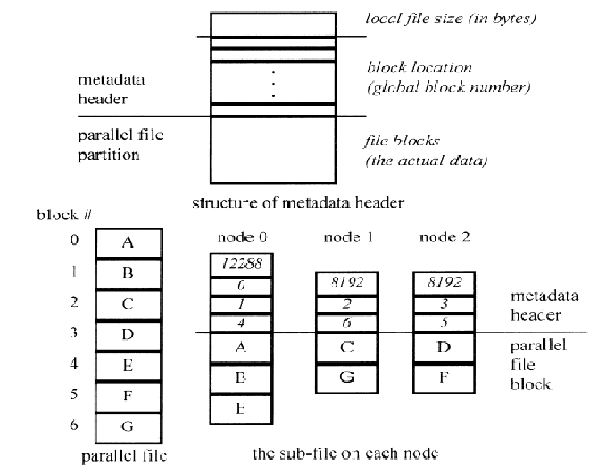

When a system employs an ordinary file access mechanism, all file data are sent to the root node when a file is read, no matter how the file blocks are distributed, because the requester node is always the root node in this system. The file data are then serialized by the network, negating the benefit of file I/O parallelization. Furthermore, the root node may use only a portion of the file data during computation. As a result, moving all file data to the root node for each file access induces unnecessary network traffic.

In the delayed file access mechanism, the requested file block is put directly into the specified memory page of the storage node instead of the requester node. When the root node issues a file read operation, a file read request carrying the memory address specified in the read operation is broadcast. Every node then reads a file block from its local disk and stores the block in a local memory page. The metadata header is checked to find the virtual shared address space to which this local memory page should be mapped, and the local memory page is then mapped to that virtual shared address space. The reading of file blocks and the address mapping are repeated until all file blocks are processed. Each node then returns the numbers of the file blocks it has handled to the root node, and the root node broadcasts the node locations of all memory pages containing the file blocks so that other nodes can locate these pages when page faults occur. In this way, the actual network transfer of file blocks is delayed until the data are actually used during computation. The number of network messages in this mechanism may not be lower than in the ordinary mechanism, but the amount of file data transferred across the network is reduced.
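The difference in transferred data between the two mechanisms can be made concrete with a small simulation. This is a sketch under simplifying assumptions, not Cohesion code: the function names, the block size, and the rule that a faulting page simply migrates to the faulting node are all hypothetical, and control messages are not counted.

```python
def ordinary_read(placement, block_size, root=0):
    """Bytes moved when every block is shipped to the root (requester) node."""
    return sum(block_size for owner in placement if owner != root)


def delayed_read(placement, block_size, accesses):
    """Bytes moved when blocks stay where they were read until first use.

    placement[b] is the node whose local disk holds block b.
    accesses is a list of (node, block) pairs in program order.
    """
    # Every node reads its local blocks into local pages; the root collects
    # and broadcasts the resulting block -> node location table.
    location = dict(enumerate(placement))
    moved = 0
    for node, block in accesses:
        if location[block] != node:
            # Page fault on a remote page: only now is the data transferred.
            moved += block_size
            location[block] = node   # simplification: the page migrates
    return moved


placement = [1, 2, 3, 0]                       # hypothetical block owners
accesses = [(1, 0), (2, 1), (3, 2), (0, 3), (0, 1)]
ordinary_bytes = ordinary_read(placement, 4096)
delayed_bytes = delayed_read(placement, 4096, accesses)
```

In this made-up trace the ordinary mechanism ships three blocks to the root before computation starts, while the delayed mechanism moves only the one block that is actually used on a node other than its owner.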

<br>

<center>[[Image:Delayedaccess.png|700px| Parallel file access mechanisms]]</center>

<center> '''Figure 7. Comparison of file access mechanisms ''' <ref name="parallel file system">Ce-Kuen Shieh; Su Cheong Mac; Jyh-Chang Ueng, "Improving the Performance of Distributed Shared Memory Systems via Parallel File Input/Output" The Journal of Systems and Software 44(1998) 3-15 , August 1997

[http://www.sciencedirect.com/science/article/pii/S0164121298100390 paper]</ref> </center>

===Implementation in Cohesion system===

Cohesion is a page-based software DSM system built on a network of Intel x86-based microcomputers running MS-DOS with a DOS extender and the iRMK Real-Time Kernel. The shared address space of a program running on Cohesion can be divided into two regions, categorized as the conventional memory and the eager release memory. A programmer can choose a region for a shared object by inheriting the corresponding base class provided by the system. Cohesion includes a kernel-level, MS-DOS-compatible file manipulation module that provides basic file services such as open/close and read/write. There are four parallel file operations in the prototype: POpen, PRead, PWrite, and PClose. POpen opens a parallel file for read or write access. An open notification is broadcast by the root node. Each node then opens its local file portions and reads the metadata headers in the case of a file read, or creates a local file in the case of a file write. Via the delayed file access mechanism, PRead reads the content of the parallel file from the disks into the virtual shared address space, and PWrite writes the content of a virtual shared memory region to the disks. Experimentally, the total execution time of the 2048 × 1024, 20-iteration Successive Over-Relaxation program on eight nodes was reduced from 196 seconds with sequential I/O to 108 seconds with parallel I/O, while that of the 512 × 512 Matrix Multiplication program on eight nodes was reduced from 100 seconds with sequential I/O to 84 seconds with parallel I/O.
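To put the reported timings in perspective, the short calculation below derives the speedup and the relative time saved from the four numbers quoted above. The helper names are hypothetical, and the derived ratios are computed here rather than taken from the paper.

```python
def speedup(sequential_s, parallel_s):
    """Ratio of execution time with sequential I/O to that with parallel I/O."""
    return sequential_s / parallel_s


def time_saved(sequential_s, parallel_s):
    """Fraction of the sequential-I/O execution time that was eliminated."""
    return (sequential_s - parallel_s) / sequential_s


sor_speedup = speedup(196, 108)        # Successive Over-Relaxation
matmul_speedup = speedup(100, 84)      # Matrix Multiplication
sor_saved = time_saved(196, 108)
matmul_saved = time_saved(100, 84)

print(f"SOR:    {sor_speedup:.2f}x faster, {sor_saved:.0%} less time")
print(f"MatMul: {matmul_speedup:.2f}x faster, {matmul_saved:.0%} less time")
```

The two programs benefit to different degrees from parallel I/O, which suggests that the gain depends on how much of an application's run time is spent on file access.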

<br>

==Cray X1==

Designed by Cray and evaluated at the Oak Ridge National Laboratory, the Cray X1 supercomputer is a DSM vector multiprocessor. According to Dunigan <ref name="dunigan">T. H. Dunigan, Jr., J. S. Vetter, J. B. White, III, and P. H. Worley, "Performance evaluation of the Cray X1 distributed shared-memory architecture," IEEE Micro, vol. 25, pp. 30–40, Jan.-Feb. 2005. [http://www.csm.ornl.gov/~dunigan/ieee-micro-cray-x1-v8.pdf paper]</ref>, "The X1's hierarchical design uses the basic building blocks of multi-streaming processor (MSP), which is capable of 12.8 GF/s for 64-bit operations." It is also scalable to 4096 processors and up to 65 terabytes of memory. This supercomputer was introduced in 2002 and shows significant performance improvements on several applications. The X1 builds on and improves upon earlier Cray supercomputers. One of the interesting aspects of this system is that it performs much like a NUMA system. The difference is that each SMP node is not cached.

===Overview===

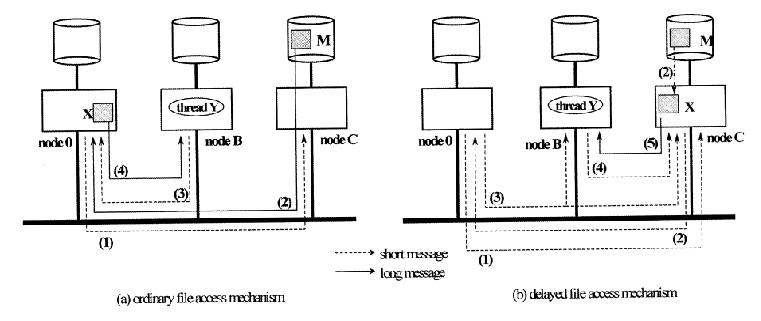

The system is an attempt to incorporate all the previous Cray vector systems into one design. One notable aspect of the X1 is its high memory bandwidth, which helps it approach its theoretical peak performance. The Cray X1 uses a Multi-Streaming Processor (MSP), and the hierarchical design of the system uses these MSPs as building blocks. Each MSP is made up of four single-streaming processors. Below is a graphical representation of an individual Cray MSP module.

<center>[[Image:MSP_Module.jpg|300px| MSP Module]]</center>

<center> '''Figure 8. Cray MSP Module''' <ref name="dunigan"></ref></center>

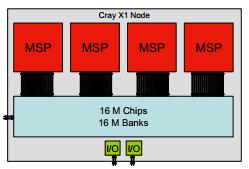

What makes these modules interesting is how they can be interconnected to create the larger Cray X1 node shown below.

<center>[[Image:CrayNode.jpg|300px| Cray Node]]</center>

<center> '''Figure 9. Cray Node''' <ref name="dunigan"></ref></center>

In this example, "...four MSPs, 16 memory controller chips (M-chips), and 32 memory daughter cards form a Cray X1 node."<ref name="dunigan"></ref> The memory banks provide 204 GB/s of bandwidth, which is enough for the processors and streams. These banks also use ECC memory, which corrects single-bit errors and detects multiple-bit errors, and provide chip-kill error detection. The modules are connected using X1 routing modules. Each node uses full-duplex links, and each memory module has an even and an odd 64-bit link. The overall topology is a 4-D hypercube, though larger configurations move towards a 2-D torus. Since this is a hardware-supported DSM, there is little more to discuss regarding the system itself, so we move on to evaluating its performance.
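As a rough check on the claim that the memory bandwidth is "enough," the figures quoted in this section can be combined in a short calculation. The variable names are hypothetical, and the per-node peak and the bytes-per-flop ratio are derived here from the quoted 12.8 GF/s per MSP, four MSPs per node, and 204 GB/s per node rather than quoted from the paper.

```python
MSP_PEAK_GFLOPS = 12.8        # per MSP, 64-bit operations (quoted above)
MSPS_PER_NODE = 4             # four MSPs form a node (quoted above)
NODE_MEM_BW_GBS = 204.0       # node memory bandwidth in GB/s (quoted above)

# Derived quantities (assumption: peak rates simply add across MSPs).
node_peak_gflops = MSP_PEAK_GFLOPS * MSPS_PER_NODE
bw_per_msp_gbs = NODE_MEM_BW_GBS / MSPS_PER_NODE
bytes_per_flop = NODE_MEM_BW_GBS / node_peak_gflops

print(f"node peak:        {node_peak_gflops:.1f} GF/s")
print(f"bandwidth per MSP: {bw_per_msp_gbs:.1f} GB/s")
print(f"bytes per flop:    {bytes_per_flop:.2f}")
```

A ratio of roughly four bytes of memory bandwidth per peak floating-point operation is what allows the vector units to stay fed on memory-bound codes.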

===Performance===

Each X1 node supports cache-coherent shared memory, and the Cray supports the OpenMP, System V shared memory, and POSIX threads shared memory programming models <ref name="dunigan"></ref>. The Cray system also supports several DSM models. Dunigan provides many benchmark graphs showing that the performance of the X1 is much greater than that of previous systems. Please refer to the paper for these graphs <ref name="dunigan"></ref>.

<br>

=Glossary=

'''Distributed Shared Memory:'''

A class of software and hardware implementations in which each node of a cluster has access to shared memory in addition to each node's non-shared private memory.

'''Non-Uniform Memory Access:'''

A multiprocessing design where the memory access time depends on the memory location relative to a processor (local memory is accessed faster).

'''Granularity:'''

In parallel computing, the amount of computation in relation to communication, i.e., the ratio of computation to the amount of communication.

'''Metadata File:'''

A file containing the data about each parallel file on each node.

'''Topology:'''

The layout pattern of interconnections of the various elements, such as links and nodes, of a network.

<br>

=Quiz=

'''Question 1 : DSMs are used for multiprocessor systems that have more than _____ processors.'''

a) 4

b) 8

c) 16

d) 32

'''Question 2 : Which two problems cause implementation slowdowns in a DSM?'''

a) Memory consistency and hardware support

b) Hardware support and cache coherence

c) Protocols and cache coherence

d) None of the above

'''Question 3 : The Cray X1 provides _____ support for DSM.'''

a) Software

b) Hardware

c) Cache Coherence

d) Memory Consistency

'''Question 4 : Which of the following is NOT true of Shasta?'''

a) Exploitation of relaxed memory models

b) Maximization of protocol messages

c) Detection of migratory sharing patterns

d) Prefetching directives

'''Question 5 : Parallel file I/O in Cohesion uses ___ .'''

a) Delayed file access

b) Instant file access

c) No file access

d) Virtual file access

'''Question 6 : The Cray X1 uses a ___ .'''

a) Module with six processing threads

b) Module with a single processing thread

c) Module with four processing threads

d) Module with eight processing threads

'''Question 7 : Hardware support is less researched due to ___ .'''

a) Cost of hardware

b) Lack of software support for new hardware

c) Lack of new hardware ideas

d) Compatibility issues

'''Question 8 : The first DSM was designed in ___ .'''

a) 1975

b) 1983

c) 1986

d) 1990

'''Question 9 : In a DSM, handling messages ___ .'''

a) Has a relatively low cost

b) Has no impact on performance

c) Has a relatively high cost

d) Has no cost, as it is hardware supported

'''Question 10 : Shasta was developed in ___ .'''

a) 1986

b) 1996

c) 2006

d) 1976

=References=

<references />

=See Also=

1. More on DSM design issues: a [http://expertiza.csc.ncsu.edu/wiki/index.php/File:PerformanceMeasures.jpg graph] and the paper it came from. <br> http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=84877&isnumber=2778

2. http://ieeexplore.ieee.org.prox.lib.ncsu.edu/stamp/stamp.jsp?arnumber=301925

3. http://catalog.hathitrust.org/Record/008308413

Latest revision as of 19:26, 23 April 2012

Introduction

According to Solihin (p. 320), a bus-based shared memory structure works well for a relatively small number of processors (8-16). Providing shared memory for many more processors than that requires a different organization, because of the physical limitations of the bus. There are two ways to build such a system: Distributed Shared Memory (DSM) and Non-Uniform Memory Access (NUMA). The benefit of DSM and NUMA is that they scale to a larger number of processors. The disadvantage is that scaling in this way may not be the most cost-effective solution (Solihin, p. 320). The remainder of this section discusses the performance of DSMs.

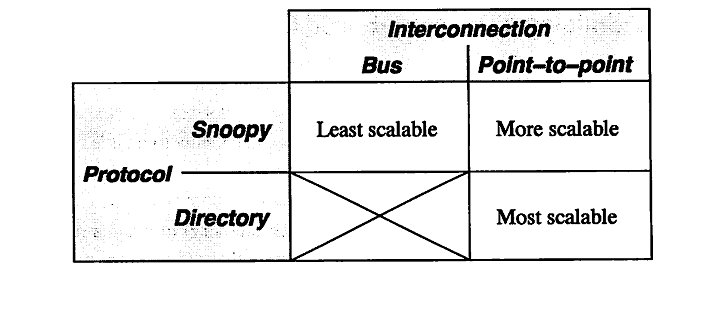

According to Solihin (p. 320), two aspects restrict the scalability of bus-based multiprocessors: the physical limitations of the interconnect and the limitations of the protocol. First, adding a processor to a bus-based system lengthens the bus wire, which reduces the speed at which the bus can run. Second, the protocol needed to maintain coherence does not scale well: as the number of processors increases, so does the amount of traffic, which risks overwhelming the available bandwidth. Solihin describes a few ways to mitigate this problem. The following is from p. 321 of the Solihin textbook.

From the table, we can see that there are three ways to scale a multiprocessor system. The first is a single-bus system. This is the least scalable because of the limitations of the bus wire itself: adding processors increases the wire length, which decreases the bus speed, and the growing traffic can overwhelm the bus. The second is a point-to-point bus system. This keeps the links relatively fast, but since traffic still scales with the number of processors, the system can still be overwhelmed by traffic. Lastly, the most scalable approach to date is a directory-based system. This keeps the links fast because of the short wires, and keeps traffic low because the directory records which caches hold each block.
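The traffic argument can be illustrated with a rough count of invalidation messages for one write. This is a simplified sketch, not a model from Solihin: the function names are hypothetical, and it assumes a broadcast protocol must notify every other processor while a directory notifies only the caches it has recorded as sharers.

```python
def broadcast_invalidations(num_processors):
    """Messages for one write when every other processor must be notified."""
    return num_processors - 1


def directory_invalidations(sharers, writer):
    """Messages for one write when only the recorded sharers are notified."""
    return len(set(sharers) - {writer})


# With 64 processors and three caches holding the block (one is the writer),
# the broadcast cost grows with the machine size; the directory cost does not.
broadcast_cost = broadcast_invalidations(64)
directory_cost = directory_invalidations({3, 17, 42}, writer=17)
```

Under these assumptions the broadcast cost depends on the number of processors, while the directory cost depends only on how widely the block is actually shared.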

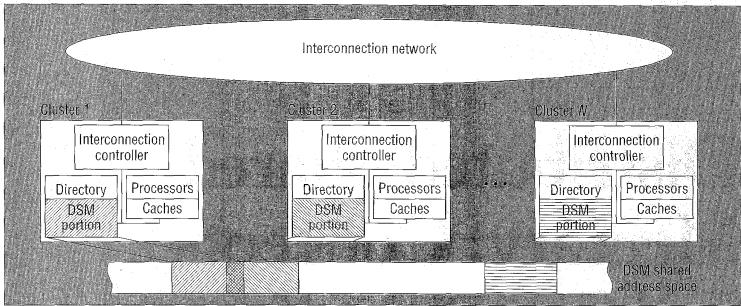

Protic <ref name="protic">Protic, J.; Tomasevic, M.; Milutinovic, V.; , "Distributed shared memory: concepts and systems," Parallel & Distributed Technology: Systems & Applications, IEEE , vol.4, no.2, pp.63-71, Summer 1996 doi: 10.1109/88.494605 paper</ref> states that a DSM "consists of multiple independent processing nodes with local memory modules, connected by a general interconnection network." In other words, rather than connecting the processors on a single bus line, there is a network of bus lines. The new issue that arises is how one node communicates with another. This is usually solved with a message passing model whose effectiveness depends on the topology used. According to Protic, "... compared to shared-memory systems, hardware problems are easier and software problems more complex in distributed-memory systems." The takeaway is that although the hardware limitation becomes less of a factor, the software problem becomes more complex. A picture of a DSM is shown below.


According to Nitzberg <ref name="nitzberg">Nitzberg, B.; Lo, V.; , "Distributed shared memory: a survey of issues and algorithms," Computer , vol.24, no.8, pp.52-60, Aug. 1991 doi: 10.1109/2.84877 paper</ref>, DSM has been researched since the 1980s. There are several reasons why DSM has been a focus of research. Bus-based systems suffer from hardware and software limitations that a DSM can mitigate, but this only became an issue once designers wanted to add more and faster processors to a system. Three approaches have been used to implement a DSM system: hardware, operating system, and compiler implementations.

According to Shi <ref name="shi">Shi, Weisong. Performance Optimization of Software Distributed Shared Memory Systems. Beijing: Higher Education, 2004. Print. paper</ref>, "The main difficulty in building a software DSM system is solving the memory coherence problem, namely, the problem of how to make a newly written value to be visible to demanding processors on time." The two major aspects of this issue are the memory consistency model and the cache coherence protocol. The best description is given by Shi<ref name="shi"></ref>: "...memory consistency model determines when the modified data should be visible to other processors and cache coherence protocol determines what values should be visible to other processors." As discussed earlier, this is already a complex problem on a shared-bus multiprocessor system. Adding a layer of abstraction makes it considerably harder. There are two classes of solutions to these problems: hardware support and software support.

Software Support

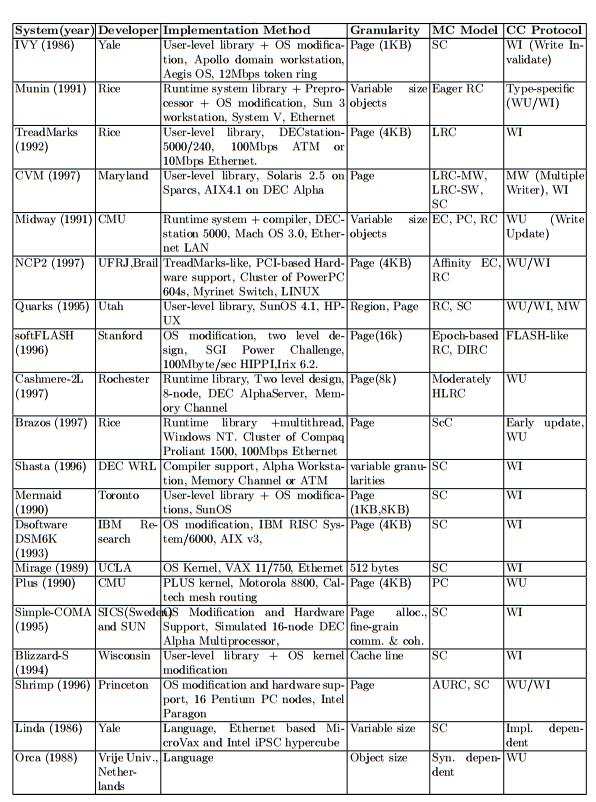

In 1986, the first software-supported DSM was created. In the more than 20 years since then, there have been great improvements on that initial system. First, software support usually finds some way to relax the memory consistency model, because message passing on a DSM is much more expensive than communication on a single-bus system. Over the last 20 years, more than 20 different memory consistency models have been proposed <ref name="shi"></ref>. Second, cache coherence must be addressed. When multiple cached copies exist and one copy is updated, the other copies must be invalidated or updated so that stale values are not used. Traditionally there are two techniques: snoopy protocols and directory-based protocols. According to Shi <ref name="shi"></ref>, snoopy protocols are used less often because they require hardware support. Lastly, according to Shi <ref name="shi"></ref>, the major problem is the interface: for a DSM system to be competitive, it has to work for many customers. Below is a listing of some representative software DSM implementations.

Hardware Support

Although much of the research has been directed towards software support for DSM, there has also been some research on adding hardware support. Unfortunately, according to Shi <ref name="shi"></ref>, large corporations have rejected hardware support, because hardware support raises compatibility issues. Fortunately, the recent adoption of certain hardware standards will allow some hardware support on a mass scale.

Optimization Implementations

Shasta Protocol

The Shasta protocol improves on the regular software distributed shared memory system in that shared data can be kept coherent at a fine granularity. This is implemented by inserting inline code that checks the cache state of shared data before each load or store. The protocol also allows the coherence granularity to vary across different shared data structures in a single application, alleviating the inefficiencies that arise from the fixed, large (page-size) communication granularity typical of most software shared memory systems. As in hardware cache-coherent multiprocessors, shared data in this system has three states: Invalid, Shared and Exclusive. The protocol provides a number of mechanisms for dealing with the long communication latencies in a workstation cluster. It minimizes extraneous coherence messages, and hence requires fewer messages to satisfy shared memory operations than protocols commonly used in hardware DSM systems. It also includes optimizations, such as non-blocking stores, that aggressively exploit a relaxed memory consistency model. Other optimizations include detection of migratory data sharing, issuing multiple load misses simultaneously, merging of load and store misses to the same cache line, and support for prefetching and home placement directives. <ref name="shasta protocol report">Scales, D.J.; Gharachorloo, K.; , "Performance of the Shasta Distributed Shared Memory Protocol" WRL Research Report 97/2 , February 1997 report</ref>
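The inline state check can be pictured with a small toy model. This is only an illustrative sketch, not Shasta's actual code: the class and method names are hypothetical, and the miss handler is a stand-in that simply changes the local state, where a real system would exchange protocol messages with the home node.

```python
from enum import Enum


class LineState(Enum):
    INVALID = 0
    SHARED = 1
    EXCLUSIVE = 2


class SharedMemory:
    """Toy model of one node's view of shared data (hypothetical names)."""

    def __init__(self, num_lines):
        self.state = [LineState.INVALID] * num_lines
        self.data = [0] * num_lines
        self.misses = 0

    def _miss(self, line, wanted):
        # Stand-in for the software protocol: a real system would contact
        # the home node here before granting the requested state.
        self.misses += 1
        self.state[line] = wanted

    def load(self, line):
        # Inline check before a load: any valid state satisfies a read.
        if self.state[line] is LineState.INVALID:
            self._miss(line, LineState.SHARED)
        return self.data[line]

    def store(self, line, value):
        # Inline check before a store: only Exclusive permits a write.
        if self.state[line] is not LineState.EXCLUSIVE:
            self._miss(line, LineState.EXCLUSIVE)
        self.data[line] = value
```

In this model a load to an Invalid line and a store to a Shared line each take one miss, while repeated accesses in a sufficient state take the fast path with no protocol involvement.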

Multiple Coherence Granularity

The Shasta protocol can support multiple granularities for communication and coherence, even within a single application. This can provide a significant performance boost in a software DSM system, since data with good spatial locality can be communicated at a coarse grain to amortize large communication overheads, while data prone to false sharing can use a finer sharing granularity. The implementation automatically chooses a block size based on the allocated size of a data structure. The basic heuristic is to choose a block size equal to the object size up to a certain threshold; the block size for objects larger than that threshold is simply set to the base Shasta line size (typically 64 bytes). The rationale for the heuristic is that small objects should be transferred as a single unit, while larger objects (e.g. large arrays) should be communicated at a fine granularity to avoid false sharing. Since the choice of block size does not affect the correctness of the program, the programmer can freely experiment with various block sizes to tune the performance of an application. Controlling the coherence granularity in this manner is significantly simpler than the approaches adopted by object-based or region-based DSM systems, since those approaches can affect correctness and typically require a more substantial change to the application.
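The block-size heuristic described above can be sketched in a few lines. The 64-byte line size comes from the text; the threshold value and the function name are assumptions made only for illustration, since the text does not give the threshold.

```python
BASE_LINE_SIZE = 64      # bytes; the typical Shasta line size cited in the text
OBJECT_THRESHOLD = 1024  # bytes; assumed value, not given in the text


def choose_block_size(object_size,
                      threshold=OBJECT_THRESHOLD,
                      line_size=BASE_LINE_SIZE):
    """Pick a coherence block size from an object's allocated size."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    if object_size <= threshold:
        # Small objects are transferred as a single unit.
        return object_size
    # Large objects fall back to the fine-grained base line size,
    # which limits false sharing.
    return line_size
```

For example, under these assumed values a 256-byte structure would be kept coherent as one 256-byte block, while a multi-megabyte array would be kept coherent in 64-byte lines.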

Minimization of Protocol Messages

The Shasta protocol is designed to minimize extraneous coherence messages, given the relatively high overheads associated with handling messages in software DSM implementations. In this protocol, the current owner node specified by the directory guarantees to service a request that is forwarded to it. This guarantee allows the protocol to complete all directory state changes when a requestor first reaches the home, which eliminates the extra messages that would otherwise be sent back to the home to confirm that the forwarded request has been satisfied. Since the protocol supports dirty sharing, it also eliminates the need to send an up-to-date copy of the line back to the home on a read transaction when the home node is remote and the data is dirty in another node. Supporting exclusive requests reduces the need to fetch data on a store if the requesting processor already has the line in the shared state. Also, the number of invalidation acknowledgements expected for an exclusive request is piggybacked on one of the invalidation acknowledgements to the requestor instead of being sent as a separate message.

Exploitation of Relaxed Memory Models

This protocol emulates the behavior of a processor with non-blocking loads and stores and a lockup-free cache, thus exploiting release consistency. Non-blocking stores are supported by issuing a read-exclusive or exclusive request, recording where the store occurred, and continuing. This information allows the protocol to merge the reply data appropriately with the newly written data that is already in memory. The protocol also exhibits a limited form of non-blocking load behavior due to the batching optimization, since batching can lead to multiple outstanding loads. Lockup-free behavior for lines that are in a pending state is also supported: writes to a pending line proceed by storing the newly written data into memory and recording the location of the stores in the miss handler invoked due to the pending state.
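The merge step for non-blocking stores can be illustrated as follows. This is a minimal sketch under simplifying assumptions: stores are tracked per byte offset, the names are hypothetical, and the reply is modeled as a plain byte string rather than a network message.

```python
class PendingLine:
    """One line with an outstanding read-exclusive request (toy model)."""

    def __init__(self, size):
        self.memory = bytearray(size)
        self.written = set()  # offsets stored to while the request is pending

    def store(self, offset, value):
        # The store proceeds immediately; only its location is recorded.
        self.memory[offset] = value
        self.written.add(offset)

    def complete(self, reply):
        # Merge: locally written bytes win, all other bytes come from the reply.
        merged = bytearray(reply)
        for offset in self.written:
            merged[offset] = self.memory[offset]
        self.memory = merged
        self.written.clear()
        return bytes(self.memory)
```

A store to offset 1 followed by a reply carrying the bytes 1, 2, 3, 4 leaves the line holding the reply data everywhere except offset 1, which keeps the newly written value.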

Detection of Migratory Sharing Patterns

The Shasta protocol provides a sophisticated mechanism for detecting data that is shared in a migratory fashion and optimizing accesses to such data. Migratory sharing occurs when data is read and modified by different processors, leading to the migration of the data from one processor to another. By keeping extra information at each directory entry, the protocol detects whether the data in each line exhibits migratory behavior. A line is designated for migratory conversion after the migratory sharing pattern has been observed a threshold number of times. A read request to a line that is designated for migratory conversion is automatically converted to a read-exclusive request at the directory. This conversion avoids the load miss followed by a store miss to the same line that is typical for migratory shared data. The protocol also provides a mechanism to revert a line from migratory conversion. The reply data for a converted read request is cached with a special caching state, called exclusive-migratory. Operations by the owner processor treat the line as exclusive, and a subsequent store by that processor changes the line to the ordinary exclusive state. The protocol detects a break in the migratory behavior if an incoming request from another processor arrives before the owner processor writes to the line, i.e., while the line is still in the exclusive-migratory state. In this case, a message is sent to the home directory to nullify or revert the migratory conversion for that line. The line may subsequently be designated for migratory conversion again if migratory behavior is observed again.
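The detection and revert logic can be sketched as a small state machine kept per directory entry. This is a deliberately simplified, hypothetical model rather than Shasta's implementation: it assumes the pattern is "a processor reads and then writes a line last written by a different processor", and it folds the owner-side exclusive-migratory state into the directory entry for brevity.

```python
class DirectoryEntry:
    """Toy per-line directory state for migratory detection (hypothetical)."""

    def __init__(self, threshold=2):
        self.threshold = threshold  # assumed value; the text gives none
        self.last_reader = None
        self.last_writer = None
        self.observed = 0           # times the migratory pattern was seen
        self.migratory = False      # designated for migratory conversion
        self.owner = None           # holder in exclusive-migratory state

    def _revert(self):
        self.migratory = False
        self.observed = 0
        self.owner = None

    def read(self, proc):
        if self.owner is not None and proc != self.owner:
            # Another request arrived before the owner wrote: pattern broken.
            self._revert()
        if self.migratory:
            self.owner = proc
            return "read-exclusive"  # converted at the directory
        self.last_reader = proc
        return "read"

    def write(self, proc):
        if self.owner is not None:
            if proc == self.owner:
                # Owner's store: line becomes ordinary exclusive.
                self.owner = None
                self.last_writer = proc
                return
            self._revert()
        if self.last_reader == proc and self.last_writer not in (None, proc):
            self.observed += 1
            if self.observed >= self.threshold:
                self.migratory = True
        self.last_writer = proc
        self.last_reader = None
```

In this model, once processors 0, 1 and 2 have each read and then written the line in turn, the next read is granted as read-exclusive; a read from a second processor before the new owner writes reverts the line to normal handling.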

Prefetching and Home Placement Directives

The Shasta protocol allows the application to explicitly specify the home processor for individual pages instead of relying on the default round-robin allocation. The protocol also supports non-binding prefetch and prefetch-exclusive directives. By keeping extra state within the protocol, the Shasta system can optionally report the source code lines that suffer the largest number of remote misses. The programmer can use this information to identify places where prefetching may be helpful.

Parallel File Input/Output in Cohesion system

File accesses in page-based software Distributed Shared Memory (DSM) systems are usually performed by a single node, which may lead to poor overall performance because a large amount of network traffic is generated to transfer data between this file-handling node and the other nodes. A parallel file I/O system that is independent of the memory consistency model alleviates this problem by reducing the file-related network traffic in page-based software DSM systems built on a network of workstations. The two main features of this design are the adaptive data distribution scheme and the delayed file access mechanism. The former distributes file blocks among the nodes according to the access pattern of the application, while the latter ensures that the data are transferred to the consumer node instead of the requesting node by exploiting the memory mapping features of the virtual shared address space of the DSM system. Previously, most research had focused on speeding up the computation phase of a DSM application via relaxed memory consistency models, and the time-consuming file access operations were neglected. For ease of programming, the file accesses in a DSM application are usually performed in the sequentially executed initialization and completion phases on a certain node. As a result, a large amount of network traffic is generated to distribute the file data to other nodes during computation. This may degrade system performance, since the networks employed in many DSM systems are usually slow compared to other components such as the CPU or even the disk. The effectiveness of this design has been verified by implementing a prototype on a page-based software DSM system named Cohesion. 
<ref name="parallel file system">Ce-Kuen Shieh; Su Cheong Mac; Jyh-Chang Ueng, "Improving the Performance of Distributed Shared Memory Systems via Parallel File Input/Output" The Journal of Systems and Software 44(1998) 3-15 , August 1997

paper</ref>

Design

The two main goals of the design are to parallelize the file accesses and reduce network traffic, and to minimize modifications to existing DSM applications. In this parallel file I/O system, every computation node also acts as a storage node. A parallel file is partitioned into file blocks, which are scattered among the nodes so that each node has a disjoint portion of the parallel file. In the pre-run of an application, the files are placed on the root node (node 0), which is the node that initiates the DSM system and the application. The data access pattern of the application is collected, and the files are then partitioned into file blocks and redistributed among all nodes via an adaptive distribution scheme. In subsequent executions, the root node issues the read requests via a delayed file access mechanism to ensure that the file blocks are placed in the consumer nodes instead of the requester node, i.e., the root node. When computation is completed, write requests are issued by the root node via the delayed access mechanism so that the data on each node are stored on its local disk instead of on the root node, thereby reducing the network traffic.

Adaptive Data Distribution Scheme

An adaptive data distribution scheme is used to reduce the amount of network transfers. In this scheme, file blocks are distributed among nodes according to the application's access pattern. The scheme has two parts: the metadata file and the information collection technique.

A metadata file for each parallel file on each node is required to keep track of the global block numbers of the file blocks in the local file portion, since the file blocks are distributed on the nodes according to access patterns, which vary across applications. Every file block stored on the disk of a node has an entry in the associated metadata file, and this entry records the global block number of that file block in the parallel file. When the parallel file is opened, a request is broadcast and the metadata files are opened. When a file read is issued, a read request is broadcast and each node reads the file blocks from its local disk into the specified shared memory locations according to the metadata file and the starting memory address given in the read function call. When a file is written, the owner node of each memory page of the result data writes that page to its local disk, and the metadata are generated by recording its block number.

The information collection technique records the access pattern of a DSM application. In the pre-run execution, the whole input file of the application is placed at the root node. During initialization, the root node reads the file into its local memory pages and maps these pages to their corresponding global virtual address spaces. During computation, page faults are generated when the file-related pages, i.e., the memory pages containing the file data, are accessed by nodes other than the root node. This page fault information is collected by the information collection module. By the end of the computation phase, each node has a list of the file-related pages it accessed, and this list is sent to the root node for parsing. In this way, the application's access pattern for the input file is ascertained and the file blocks are redistributed accordingly. After the file blocks are redistributed, a metadata file is created on each node according to this information. In the case of a file write, the owner node of a target page writes that page to the local disk, and each node creates the metadata file accordingly. With this technique, the amount of network traffic during computation is greatly reduced, except in the pre-run execution of the application.
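The redistribution step can be sketched as follows. This is an illustrative model only: the function name, the dictionary representation of the metadata, and the tie-breaking rule (the lowest-numbered node that accessed a block keeps it, and blocks nobody accessed stay on the root) are assumptions, not details from the paper.

```python
def distribute_blocks(access_lists, num_blocks, root=0):
    """Build per-node metadata from the pre-run access lists.

    access_lists: {node: [global block numbers that node faulted on]}
    returns:      {node: [global block numbers stored on that node's disk]}
    """
    owner = {}
    for node in sorted(access_lists):
        for block in access_lists[node]:
            # Assumed tie-break: the first (lowest-numbered) accessor wins.
            owner.setdefault(block, node)

    metadata = {node: [] for node in access_lists}
    metadata.setdefault(root, [])
    for block in range(num_blocks):
        # Each metadata entry records the block's global number;
        # blocks that no node accessed stay on the root node.
        metadata[owner.get(block, root)].append(block)
    return metadata


layout = distribute_blocks({0: [], 1: [0, 1], 2: [1, 3]}, num_blocks=5)
```

Here `layout` gives every node a disjoint portion of the file, matching the requirement that each node holds its own set of file blocks together with their global block numbers.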

Delayed File Access Mechanism

When a system employs an ordinary file access mechanism, all file data are sent to the root node when a file is read, no matter how the file blocks are distributed, because the requester node is always the root node in this system. The file data are then serialized by the network, negating the benefit of file I/O parallelization. Furthermore, the root node may use only a portion of the file data during computation. As a result, moving all file data to the root node for each file access induces unnecessary network traffic.

In the delayed file access mechanism, the requested file block is put directly into the specified memory page of the storage node instead of the requester node. When the root node issues a file read operation, a file read request with the memory address specified in the read operation is broadcast. Every node then reads a file block from its local disk and stores the block in a local memory page. The metadata header is checked to find the virtual shared address space to which this local memory page should be mapped, and the local memory page is then mapped to the corresponding virtual shared address space. The reading of the file blocks and the address mapping are repeated until all file blocks are processed. Each node then returns the file block numbers that it has handled to the root node, and the root node broadcasts the node locations of all memory pages containing the file blocks so that other nodes can locate these pages when page faults occur. In this way, the actual network transfer of file blocks is delayed until the data are actually used during computation. The number of network messages in this mechanism may not be smaller than in the ordinary mechanism described above, but the amount of file data transferred across the network is reduced.
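The bookkeeping behind a delayed read can be sketched as a page directory that records which node holds each file-related page. This is a hypothetical model: the names, the 4096-byte page size, the one-block-per-page layout, and the page-aligned starting address are all assumptions made for illustration.

```python
PAGE_SIZE = 4096  # assumed; one file block is taken to fill one page


def delayed_read(metadata, start_address, page_size=PAGE_SIZE):
    """Map each node's local blocks into the shared address space.

    metadata: {node: [global block numbers on that node's local disk]}
    returns:  {page address: node holding that page}
    """
    directory = {}
    for node, blocks in metadata.items():
        # Each node serves the broadcast request from its own disk only.
        for block in blocks:
            address = start_address + block * page_size
            directory[address] = node  # mapped locally; no file data is sent
    return directory


def locate(directory, address, page_size=PAGE_SIZE):
    """Find the node to contact when a page fault occurs at `address`."""
    page = address - address % page_size
    return directory[page]


pages = delayed_read({0: [2, 4], 1: [0, 1], 2: [3]}, start_address=0x10000)
```

Only the small directory is exchanged at read time in this model; a block's data would cross the network later, and only if some other node faults on its page.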

Implementation in Cohesion system

Cohesion is a page-based software DSM system built on a network of Intel x86-based microcomputers running MS-DOS with a DOS extender and the iRMK Real-Time Kernel. The shared address space of a program running on Cohesion is divided into two regions: the conventional memory and the eager release memory. A programmer can choose a region for a shared object by inheriting the corresponding base class provided by the system. Cohesion includes a kernel-level, MS-DOS-compatible file manipulation module that provides basic file services such as open/close and read/write. There are four parallel file operations in this prototype: POpen, PRead, PWrite, and PClose. POpen opens a parallel file for read or write access. An open notification is broadcast by the root node. Each node then opens its local file portions and reads the metadata headers in the case of a file read, or creates a local file in the case of a file write. Via the delayed file access mechanism, PRead reads the content of the parallel file from the disks into the virtual shared address space, and PWrite writes the content of a virtual shared memory region to the disks. Experimentally, the total execution time of the 2048 * 1024, 20-iteration Successive Over Relaxation program on eight nodes was reduced from 196 seconds with sequential I/O to 108 seconds with parallel I/O, while that of the 512 * 512 Matrix Multiplication program on eight nodes was reduced from 100 seconds with sequential I/O to 84 seconds with parallel I/O.
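To put the reported timings in perspective, the relative reduction and the speedup can be computed directly from the four numbers above. The helper names are illustrative; only the timings come from the text.

```python
def reduction(sequential_s, parallel_s):
    """Fraction of execution time saved by parallel I/O."""
    return (sequential_s - parallel_s) / sequential_s


def speedup(sequential_s, parallel_s):
    """Ratio of sequential-I/O time to parallel-I/O time."""
    return sequential_s / parallel_s


sor_reduction = reduction(196, 108)  # Successive Over Relaxation
sor_speedup = speedup(196, 108)
mm_reduction = reduction(100, 84)    # Matrix Multiplication
mm_speedup = speedup(100, 84)
```

The Successive Over Relaxation run saves roughly 45% of its execution time (a speedup of about 1.8), while Matrix Multiplication saves 16% (a speedup of about 1.2).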

Cray X1

The Cray X1 supercomputer, evaluated at the Oak Ridge National Laboratory, is a DSM vector multiprocessor. According to Dunigan <ref name="dunigan">T. H. Dunigan, Jr., J. S. Vetter, J. B. White, III, and P. H. Worley, "Performance evaluation of the Cray X1 distributed shared-memory architecture," IEEE Micro, vol. 25, pp. 30–40, Jan.-Feb. 2005. paper</ref>, "The X1's hierarchical design uses the basic building blocks of multi-streaming processor (MSP), which is capable of 12.8 GF/s for 64-bit operations." It is also scalable to 4096 processors and up to 65 terabytes of memory. This supercomputer was introduced in 2002 and shows significant performance improvements on several applications. The X1 builds on older Cray supercomputers and attempts to improve upon them. One of the interesting aspects of this system is that it performs much like a NUMA system. The difference is that each SMP node is not cached.

Overview

The system is an attempt to incorporate all the previous Cray vector systems into one design. One aspect of the X1 is its high memory bandwidth, which helps it realize its theoretical peak performance. The Cray X1 uses a Multi-Streaming Processor (MSP), and the hierarchical design of the system uses these MSPs as building blocks. Each MSP is made up of four single-streaming processors. Below is a graphical representation of an individual Cray MSP module.

What is so interesting about these modules is how they can be interconnected to create a larger Cray X1 Node shown below.

For this example, we can see "...four MSPs, 16 memory controller chips (M-chips), and 32 memory daughter cards form a Cray X1 node."<ref name="dunigan"></ref> The memory banks provide 204 GB/s of bandwidth, which is enough for the processors and streams. These banks also use ECC memory, which provides built-in correction of single-bit errors and detection of multiple-bit errors, as well as chip-kill error detection. The modules are connected using X1 routing modules. Each node uses full-duplex links, and each memory module has an even and an odd 64-bit link. The overall topology is a 4-D hypercube, though larger configurations move towards a 2-D torus. Since this is a hardware-supported DSM, there is little more to discuss regarding the system, so we move on to evaluating its performance.
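The figures quoted so far allow a rough balance estimate for one node. This is back-of-the-envelope arithmetic from the numbers in the text (12.8 GF/s per MSP, four MSPs per node, 204 GB/s of memory bandwidth), not a result reported by Dunigan; the variable names are illustrative.

```python
MSP_PEAK_GFLOPS = 12.8         # per MSP, 64-bit operations (from the text)
MSPS_PER_NODE = 4              # from the node description
NODE_MEMORY_BANDWIDTH = 204.0  # GB/s (from the text)

# Peak floating-point rate of one node.
node_peak_gflops = MSPS_PER_NODE * MSP_PEAK_GFLOPS

# Bytes of memory bandwidth available per peak floating-point operation.
bytes_per_flop = NODE_MEMORY_BANDWIDTH / node_peak_gflops
```

On these numbers a node peaks at 51.2 GF/s and has about 4 bytes of memory bandwidth per peak floating-point operation.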

Performance

With this understanding of the X1 system, we see that the X1 node supports cache-coherent shared memory and that the Cray supports the OpenMP, System V shared memory, and POSIX threads shared memory programming models <ref name="dunigan"></ref>. The Cray system also supports several DSM models. Dunigan provides many benchmark graphs to show that the performance of the X1 is much greater than that of previous systems. Please refer to the paper for these graphs <ref name="dunigan"></ref>.

Glossary

Distributed Shared Memory: A class of software and hardware implementations in which each node of a cluster has access to shared memory in addition to each node's non-shared private memory.

Non-Uniform Memory Access: A multiprocessing design where the memory access time depends on the memory location relative to a processor (local memory is accessed faster).

Granularity: In parallel computing, the amount of computation in relation to communication, i.e., the ratio of computation to the amount of communication.

Metadata File: A file containing the data about each parallel file on each node.

Topology: The layout pattern of interconnections of the various elements such as links, nodes, etc. of a network.

Quiz

Question 1 : DSMs are used for multi-processor systems that have more than _____ processors.

a) 4 b) 8 c) 16 d) 32

Question 2 : Which two problems cause implementation slowdowns in a DSM?

a) Memory consistency and hardware support b) Hardware support and cache coherence c) Protocols and cache coherence d) None of the above

Question 3 : The Cray X1 provides _____ support for DSM.

a) Software b) Hardware c) Cache Coherence d) Memory Consistency

Question 4 : Which of the following is NOT true of Shasta?

a) Exploitation of relaxed memory models b) Maximization of protocol messages c) Detection of migratory sharing patterns d) Prefetching directives

Question 5 : Parallel file I/O in Cohesion uses ___ .

a) Delayed file access b) Instant file access c) No file access d) Virtual file access

Question 6 : The Cray X1 uses a ___ .

a) Module with six processing threads b) Module with a single processing thread c) Module with four processing threads d) Module with eight processing threads

Question 7 : Hardware support is less researched due to ___ .

a) Cost of hardware b) Lack of software support for new hardware c) Lack of new hardware ideas d) Compatibility issues

Question 8 : The first DSM was designed in ___ .

a) 1975 b) 1983 c) 1986 d) 1990

Question 9 : In a DSM, handling messages ___ .

a) Has a relatively low cost b) Has no impact on performance c) Has a relatively high cost d) Has no cost, as it is hardware supported

Question 10 : Shasta was developed in ___ .

a) 1986 b) 1996 c) 2006 d) 1976

References

<references />

See Also

1. More on DSM design issues: the following is a graph, and the reference points to the paper it came from. Image.

http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=84877&isnumber=2778

2. http://ieeexplore.ieee.org.prox.lib.ncsu.edu/stamp/stamp.jsp?arnumber=301925