CSC/ECE 506 Fall 2007/wiki3 7 qaz

| (18 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

== Topic of Discussion == | |||

Wiki: True and false sharing. In Lectures 9 and 10, we covered performance results for true- and false-sharing misses. The results showed that some applications experienced degradation due to false sharing, and that this problem was greater with larger cache lines. But these data are at least 9 years old, and for multiprocessors that are smaller than those in use today. Comb the ACM Digital Library, IEEE Xplore, and the Web for more up-to-date results. What strategies have proven successful in combating false sharing? Is there any research into ways of diminishing true-sharing misses, e.g., by locating communicating processes on the same processor? Wouldn't this diminish parallelism and thus hurt performance? | Wiki: True and false sharing. In Lectures 9 and 10, we covered performance results for true- and false-sharing misses. The results showed that some applications experienced degradation due to false sharing, and that this problem was greater with larger cache lines. But these data are at least 9 years old, and for multiprocessors that are smaller than those in use today. Comb the ACM Digital Library, IEEE Xplore, and the Web for more up-to-date results. What strategies have proven successful in combating false sharing? Is there any research into ways of diminishing true-sharing misses, e.g., by locating communicating processes on the same processor? Wouldn't this diminish parallelism and thus hurt performance? | ||

'''PLEASE NOTE: THE TEXT IN BLUE ARE LINKS TO WEB-SITES. PLEASE CLICK ON THE TEXT IN BLUE TO TAKE YOU TO THE CORRESPONDING PAGE''' | |||

== False Sharing Miss == | == False Sharing Miss == | ||

| Line 15: | Line 18: | ||

Fig 2 | Fig 2 | ||

== Block Size and False Sharing Miss == | ==== Block Size and False Sharing Miss ==== | ||

The cache performance of shared data in a multiprocessor environment gets affected more because of misses due to shared data than because of any other types of misses. It has been obeserved that shared data is responsible for majority of cache misses, and there miss rate is less predictable. Generally miss rate in uniprocessor goes down with increase in block size, but in multi-processor it goes up with increase in block size (more often, but this is not a general case). The misses because of shared data is also know as coherence miss, and is further of two types. Miss due to false sharing and miss due to true sharing. | The cache performance of shared data in a multiprocessor environment gets affected more because of misses due to shared data than because of any other types of misses. It has been obeserved that shared data is responsible for majority of cache misses, and there miss rate is less predictable. Generally miss rate in uniprocessor goes down with increase in block size, but in multi-processor it goes up with increase in block size (more often, but this is not a general case). The misses because of shared data is also know as coherence miss, and is further of two types. Miss due to false sharing and miss due to true sharing. The coherence misses in general can be reduced by various techniques. Prediction is one of the many proposed methods and take up majority of research area. [http://ieeexplore.ieee.org/iel5/8608/27277/01213084.pdf Jim Nilsson, Anders Landin† and Per Stenstr¨om] proposed The Coherence Predictor Cache among many proposed theories. | ||

Coherence miss that are casued because of false sharing is a subject of speculation. As mentioned above, coherence miss increases with increase in block size is because of higher false sharing of data with larger blocks. Even though we have generalised this, but it sometimes depends on the application that is being run on system. Below it is show contribution of each types of misses for different application. | Coherence miss that are casued because of false sharing is a subject of speculation. As mentioned above, coherence miss increases with increase in block size is because of higher false sharing of data with larger blocks. Even though we have generalised this, but it sometimes depends on the application that is being run on system. Below it is show contribution of each types of misses for different application. | ||

| Line 41: | Line 44: | ||

Figure shows that false sharing increses with the increase in block size. Also, the steps of increase is very small in case of OLTP, and in fact the false sharing decreases with block size 512B. Hence, in general the false sharing increase with increase in block size but its not always true. | Figure shows that false sharing increses with the increase in block size. Also, the steps of increase is very small in case of OLTP, and in fact the false sharing decreases with block size 512B. Hence, in general the false sharing increase with increase in block size but its not always true. | ||

== Strategies to reduce False Sharing Miss == | ==== Strategies to reduce False Sharing Miss ==== | ||

Many of the proposed strategies to improve miss rate because of false sharing, revolve around data transformation. [http://ieeexplore.ieee.org/iel1/12/7122/00286299.pdf?tp=&arnumber=286299&isnumber=7122 J. Torrellas, M. Lam, and J. Hennessey] proposed methods like array padding and block alignment to reduce false sharing. S. Eggers and T. Jeremiassen proposed a method to reduce false sharing either by grouping data that is accessed by the same processor or separate individual data items that are shared. Basic techniques proposed that fall under data transformation can be listed below: | Many of the proposed strategies to improve miss rate because of false sharing, revolve around data transformation. [http://ieeexplore.ieee.org/iel1/12/7122/00286299.pdf?tp=&arnumber=286299&isnumber=7122 J. Torrellas, M. Lam, and J. Hennessey] proposed methods like array padding and block alignment to reduce false sharing. [http://coblitz.codeen.org:3125/citeseer.ist.psu.edu/cache/papers/cs/1663/ftp:zSzzSzftp.cs.washington.eduzSztrzSz1994zSz09zSzUW-CSE-94-09-05.pdf/jeremiassen94reducing.pdf S. Eggers and T. Jeremiassen] proposed a method to reduce false sharing either by grouping data that is accessed by the same processor or separate individual data items that are shared. Basic techniques proposed that fall under data transformation can be listed below: | ||

Changing loop structures : Transform program loops, e.g., by blocking, alignment, or peeling, so that iterations in a parallel loop access disjoint cache lines. | Changing loop structures : Transform program loops, e.g., by blocking, alignment, or peeling, so that iterations in a parallel loop access disjoint cache lines. | ||

| Line 56: | Line 59: | ||

Some of the transformations help improve spatial locality along with reducing false sharing, others may adversely affect locality. Hence there has to be a trade off between spatial locality and false sharing. [http://ieeexplore.ieee.org/iel5/71/26889/01195407.pdf?tp=&arnumber=1195407&isnumber=26889 Kandemir, M. Choudhary, A. Ramanujam and J. Banerjee] proposed unified compilation framework in which focus was more on structured codes which demostrated how spatial locality and false sharing can be treated in an optimizing compiler framework. | Some of the transformations help improve spatial locality along with reducing false sharing, others may adversely affect locality. Hence there has to be a trade off between spatial locality and false sharing. [http://ieeexplore.ieee.org/iel5/71/26889/01195407.pdf?tp=&arnumber=1195407&isnumber=26889 Kandemir, M. Choudhary, A. Ramanujam and J. Banerjee] proposed unified compilation framework in which focus was more on structured codes which demostrated how spatial locality and false sharing can be treated in an optimizing compiler framework. | ||

Juan C. Pichel, Dora B. Heras, Jos´e C. Cabaleiro and Francisco F. Rivera proposed a model in which, locality is established in run-time considering parameters that describe the structure of the sparse matrix which characterizes the irregular accesses. As an example of irregular code with false sharing a particular implementation of the sparse matrix vector product (SpM×V) was selected. The problem of increasing locality and decreasing false sharing for a irregular problem is formulated as a graph. An adequate distribution of the graph among processors followed by a reordering of the nodes inside each processor produces the solution. The results show important improvements in the behavior of the irregular accesses: reductions in execution time and an improved program scalability. | [http://ieeexplore.ieee.org/iel5/10528/33309/01575842.pdf?arnumber=1575842 Juan C. Pichel, Dora B. Heras, Jos´e C. Cabaleiro and Francisco F. Rivera] proposed a model in which, locality is established in run-time considering parameters that describe the structure of the sparse matrix which characterizes the irregular accesses. As an example of irregular code with false sharing a particular implementation of the sparse matrix vector product (SpM×V) was selected. The problem of increasing locality and decreasing false sharing for a irregular problem is formulated as a graph. An adequate distribution of the graph among processors followed by a reordering of the nodes inside each processor produces the solution. The results show important improvements in the behavior of the irregular accesses: reductions in execution time and an improved program scalability. | ||

At hardware level [http://ieeexplore.ieee.org/iel3/4053/11610/00528827.pdf?tp=&arnumber=528827&isnumber=11610 Murali Kadiyala and Laxmi N]. Bhuyan proposed dynamic sub-blocking to reduce false sharing. They presented a dynamic sub-block coherence protocol which minimizes false sharing by trying to dynamically locate the point of false reference. Sharing trafic is minimized by maintaining coherence on smaller blocks (sub-blocks) which are truly shared, | At hardware level [http://ieeexplore.ieee.org/iel3/4053/11610/00528827.pdf?tp=&arnumber=528827&isnumber=11610 Murali Kadiyala and Laxmi N]. Bhuyan proposed dynamic sub-blocking to reduce false sharing. They presented a dynamic sub-block coherence protocol which minimizes false sharing by trying to dynamically locate the point of false reference. Sharing trafic is minimized by maintaining coherence on smaller blocks (sub-blocks) which are truly shared, | ||

| Line 64: | Line 67: | ||

If the word accessed on the miss has been modified since (and including) the reference causing the invalidation, then it is known as True Sharing Miss. Misses due to true sharing, in most cases, are desired because this basically make sure that coherence is maintained. But there can be some simple modification to reduce these kind of misses. A simple write-through | If the word accessed on the miss has been modified since (and including) the reference causing the invalidation, then it is known as True Sharing Miss. Misses due to true sharing, in most cases, are desired because this basically make sure that coherence is maintained. But there can be some simple modification to reduce these kind of misses. A simple write-through | ||

protocol (with allocation on write misses), totally eliminates useless misses. On a store into the block, the address of the modified word is propagated to all processors with a copy of the block and is buffered in an invalidation buffe~ a local access to a word whose address is present in the buffer invalidates the block copy and triggers a PTS miss. (The invalidation buffer could be implemented by a dirty bit associated with each word in each block of the cache.) This implementation “mimics” | protocol (with allocation on write misses), totally eliminates [http://delivery.acm.org/10.1145/170000/165145/p88-dubois.pdf?key1=165145&key2=2112482911&coll=ACM&dl=ACM&CFID=3606952&CFTOKEN=81485400 useless misses]. On a store into the block, the address of the modified word is propagated to all processors with a copy of the block and is buffered in an invalidation buffe~ a local access to a word whose address is present in the buffer invalidates the block copy and triggers a PTS miss. (The invalidation buffer could be implemented by a dirty bit associated with each word in each block of the cache.) This implementation “mimics” | ||

the essential miss detection algorithm and its miss rate is the essential miss rate of the trace. Write-through caches generate an unacceptable amount of write traffic. To make the protocol write back we need to maintain ownership. Stores accessing non-owned blocks with a pending invalidation for ANY one of its words in the local invalidation buffer must trigger a miss. These additional misses are the cost of maintaining ownership. | the essential miss detection algorithm and its miss rate is the essential miss rate of the trace. Write-through caches generate an unacceptable amount of write traffic. To make the protocol write back we need to maintain ownership. Stores accessing non-owned blocks with a pending invalidation for ANY one of its words in the local invalidation buffer must trigger a miss. These additional misses are the cost of maintaining ownership. | ||

| Line 74: | Line 77: | ||

As mentioned above there has to be balance between all the performance metrics. With reduced miss rate and communication cost is it worth reducing parallelism ? well the solution is subtle orchestration of parallel program. The process which shares large address space should be executed on same processor. | As mentioned above there has to be balance between all the performance metrics. With reduced miss rate and communication cost is it worth reducing parallelism ? well the solution is subtle orchestration of parallel program. The process which shares large address space should be executed on same processor. | ||

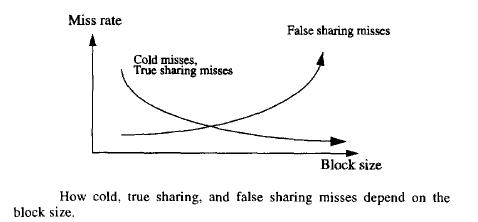

Increasing the block size have a reverse effect on true and false sharing. Below shows the relation between true sharing miss and flase sharing miss with increasing block size. True sharing misses generally decreases with increasing block size and its opposite for false sharing miss. | |||

[[Image:Fig4.jpg]] | |||

== References == | == References == | ||

* [http://www.intel.com/cd/ids/developer/asmo-na/eng/193679.htm Intel] | |||

* [http://ieeexplore.ieee.org/iel5/12/20861/00966493.pdf?arnumber=966493 Silent Stores and Store Value Locality] | |||

* [http://ieeexplore.ieee.org/iel1/12/7122/00286299.pdf?tp=&arnumber=286299&isnumber=7122 J. Torrellas, M. Lam, and J. Hennessey] | |||

* [http://coblitz.codeen.org:3125/citeseer.ist.psu.edu/cache/papers/cs/1663/ftp:zSzzSzftp.cs.washington.eduzSztrzSz1994zSz09zSzUW-CSE-94-09-05.pdf/jeremiassen94reducing.pdf S. Eggers and T. Jeremiassen] | |||

* [http://ieeexplore.ieee.org/iel5/71/26889/01195407.pdf?tp=&arnumber=1195407&isnumber=26889 Kandemir, M. Choudhary, A. Ramanujam and J. Banerjee] | |||

* [http://ieeexplore.ieee.org/iel5/10528/33309/01575842.pdf?arnumber=1575842 Juan C. Pichel, Dora B. Heras, Jos´e C. Cabaleiro and Francisco F. Rivera] | |||

* [http://ieeexplore.ieee.org/iel3/4053/11610/00528827.pdf?tp=&arnumber=528827&isnumber=11610 Murali Kadiyala and Laxmi N] | |||

* [http://delivery.acm.org/10.1145/170000/165145/p88-dubois.pdf?key1=165145&key2=2112482911&coll=ACM&dl=ACM&CFID=3606952&CFTOKEN=81485400 Michel Dubois, Jonas Skeppstedt, Livio Ricc$dli, Krishnart Ramamurthy] | |||

Latest revision as of 22:40, 24 October 2007

Topic of Discussion

Wiki: True and false sharing. In Lectures 9 and 10, we covered performance results for true- and false-sharing misses. The results showed that some applications experienced degradation due to false sharing, and that this problem was greater with larger cache lines. But these data are at least 9 years old, and for multiprocessors that are smaller than those in use today. Comb the ACM Digital Library, IEEE Xplore, and the Web for more up-to-date results. What strategies have proven successful in combating false sharing? Is there any research into ways of diminishing true-sharing misses, e.g., by locating communicating processes on the same processor? Wouldn't this diminish parallelism and thus hurt performance?


False Sharing Miss

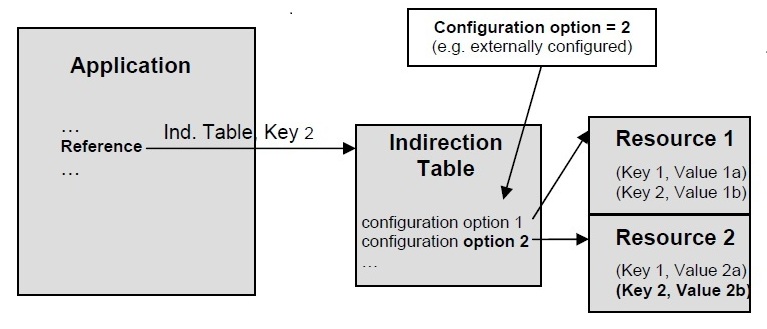

In a multiprocessor system, it is important to keep data coherent across all processors. Vendors such as Intel use the MESI protocol to maintain cache coherence. When a block is loaded into a cache for the first time and no other cache holds it, MESI places it in the Exclusive state. The block moves to the Shared state when another processor reads the same block. Any subsequent store by one processor changes its copy from Shared to Modified and invalidates the corresponding copies in the other processors' caches. Figure 1 demonstrates how two distinct variables placed next to each other in system memory can fall in the same cache line, which is then loaded into the caches of two or more processors. The whole line is marked Shared, and each store by one processor invalidates the line in the others.

Fig 1
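The state changes described above can be sketched as a small state machine. This is a simplified illustration, not Intel's implementation: it tracks a single cache line, ignores write-backs and bus timing, and the names `access` and `states` are hypothetical.

```python
M, E, S, I = "M", "E", "S", "I"   # MESI states of one line in one cache

def access(states, cpu, op):
    """Apply a read ("R") or write ("W") by `cpu` to a single cache line.

    `states[c]` is the line's MESI state in cache c and is updated in place.
    Returns True if the access missed (the local copy was Invalid).
    """
    miss = states[cpu] == I
    others = [c for c in range(len(states)) if c != cpu]
    if op == "R":
        if miss:
            shared = any(states[c] != I for c in others)
            for c in others:
                if states[c] in (M, E):
                    states[c] = S      # previous holder downgrades to Shared
            states[cpu] = S if shared else E
    else:
        for c in others:
            states[c] = I              # a store invalidates all other copies
        states[cpu] = M
    return miss

line = [I, I]
access(line, 0, "R")   # first load: CPU 0 holds the line Exclusive
access(line, 1, "R")   # second reader: both copies become Shared
access(line, 0, "W")   # store: CPU 0 Modified, CPU 1 Invalid
print(line)
```

Because the state is kept per line rather than per word, the final store invalidates CPU 1's copy regardless of which word CPU 1 was using.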

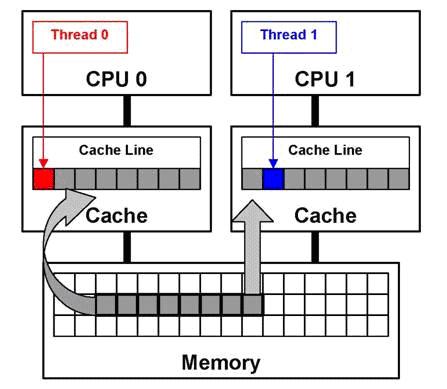

False sharing arises when multiple processes or threads share the same address space, and it is common on multiprocessor systems. MESI, being an invalidation-based coherence protocol, invalidates the copies of a shared cache line in other processors' caches whenever one processor stores to any element of that line. Figure 2 demonstrates this. Even though CPU 0 writes a different word in the cache line from the one CPU 1 is using, the entire line in CPU 1's cache is invalidated. A false sharing miss occurs when CPU 1 next accesses its word.

Fig 2
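The ping-pong effect can be reproduced with a toy trace-driven model. The sketch below assumes infinite caches, a plain write-invalidate policy, and a 64-byte line; `count_misses` and `ping_pong` are hypothetical helper names, and the addresses are arbitrary.

```python
def count_misses(trace, line_size):
    """Count misses for a trace of (cpu, op, addr) tuples under a simple
    write-invalidate scheme with infinite caches (no capacity misses)."""
    valid = {}   # cpu -> set of line numbers currently valid in that cache
    misses = 0
    for cpu, op, addr in trace:
        line = addr // line_size
        lines = valid.setdefault(cpu, set())
        if line not in lines:
            misses += 1              # cold miss or coherence miss
            lines.add(line)
        if op == "W":
            # A store invalidates the line in every other cache.
            for other, other_lines in valid.items():
                if other != cpu:
                    other_lines.discard(line)
    return misses

def ping_pong(addr0, addr1, rounds):
    """CPU 0 and CPU 1 alternately write two different words."""
    trace = []
    for _ in range(rounds):
        trace.append((0, "W", addr0))
        trace.append((1, "W", addr1))
    return trace

same_line = count_misses(ping_pong(0, 4, 100), line_size=64)
separate_lines = count_misses(ping_pong(0, 64, 100), line_size=64)
print(same_line, separate_lines)
```

In this model, every access misses when the two words share a line, while only the two cold misses remain once the words sit in different lines, even though the CPUs never touch each other's word in either case.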

Block Size and False Sharing Miss

Shared data affects cache performance in a multiprocessor environment more than any other source of misses. It has been observed that shared data is responsible for the majority of cache misses, and that its miss rate is less predictable. In a uniprocessor the miss rate generally goes down as the block size increases, but in a multiprocessor it often goes up (though not in every case). Misses caused by shared data are known as coherence misses, and they are of two types: misses due to false sharing and misses due to true sharing. Coherence misses in general can be reduced by various techniques. Prediction is one of the many proposed methods and accounts for a large share of the research. Jim Nilsson, Anders Landin, and Per Stenström proposed the Coherence Predictor Cache, one of many such proposals.

Coherence misses caused by false sharing are a subject of speculation. As mentioned above, coherence misses increase with block size because larger blocks lead to more false sharing. Although this holds in general, the effect depends on the application being run on the system. Figure 3 shows the contribution of each type of miss for different applications.

Fig 3

Source: Silent Stores and Store Value Locality

The figure shows 6 bars in each set. Each bar of a set corresponds to a cache scenario. There are 5 different kinds of scenarios:

Baseline : Basic invalidation based MESI protocol.

UFS : Update-based false sharing.

UFS-P : Measures the potential of UFS with perfect knowledge. This allows us to avoid reading followed by an upgrade (S->M).

MSFS : Message passing stochastic false sharing.

PSFS : Program structure stochastic false sharing.

The figure shows that false sharing increases with block size. The increase is very small in the case of OLTP, and false sharing actually decreases at a block size of 512B. Hence false sharing generally increases with block size, but not always.

Strategies to reduce False Sharing Miss

Many of the strategies proposed to reduce false sharing misses revolve around data transformation. J. Torrellas, M. Lam, and J. Hennessy proposed methods such as array padding and block alignment. S. Eggers and T. Jeremiassen proposed reducing false sharing either by grouping data that is accessed by the same processor or by separating individual data items that are shared. The basic techniques are listed below:

Changing loop structures : Transform program loops, e.g., by blocking, alignment, or peeling, so that iterations in a parallel loop access disjoint cache lines.

Changing data structures : Change the layout of data structures, e.g., by array alignment and padding. Array alignment is the insertion of dummy space so as to change the starting address of an array variable. Array padding is an increase in the allocated dimension size of an array variable.

Copying data : Copy the data to be updated by the loop into a temporary data structure that does not exhibit false sharing and is well suited to the data access patterns in the loop. After the parallel loop completes execution, the temporary data structure is copied back to the original structure. The copy back may exhibit false sharing, however.

Changing schedule parameters : Schedule the loop iterations so that concurrently executed iterations access disjoint cache lines.
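The effect of the data-layout and scheduling techniques listed above can be checked by computing which cache lines each thread writes. The sketch below is illustrative only: it assumes 8-byte elements, 64-byte lines, a line-aligned array, and that every thread writes all of its assigned indices. The helper names are hypothetical.

```python
def lines_touched(indices, elem_size, line_size):
    """Cache-line numbers covering the given array indices
    (the array is assumed to start at a line-aligned address)."""
    return {(i * elem_size) // line_size for i in indices}

def shared_lines(assignment, elem_size=8, line_size=64):
    """Lines written by more than one thread, given thread -> indices."""
    writers = {}
    for thread, indices in assignment.items():
        for line in lines_touched(indices, elem_size, line_size):
            writers.setdefault(line, set()).add(thread)
    return {line for line, threads in writers.items() if len(threads) > 1}

n, threads = 64, 4

# Changing schedule parameters: cyclic versus blocked iteration assignment.
cyclic = {t: range(t, n, threads) for t in range(threads)}
blocked = {t: range(t * n // threads, (t + 1) * n // threads)
           for t in range(threads)}

# Changing data structures: one counter per thread, without and with padding.
unpadded = {t: [t] for t in range(threads)}
padded = {t: [t * 8] for t in range(threads)}   # 8 elements * 8 bytes = one line

for name, plan in [("cyclic", cyclic), ("blocked", blocked),
                   ("unpadded", unpadded), ("padded", padded)]:
    print(name, len(shared_lines(plan)))
```

Under these assumptions the cyclic schedule and the unpadded counters place several writers on the same line, while the blocked schedule and the padded layout leave each line with a single writer. Padding spends memory to achieve this, which is one form of the locality trade-off.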

Some of the transformations improve spatial locality as well as reducing false sharing, while others may adversely affect locality. Hence there is a trade-off between spatial locality and false sharing. M. Kandemir, A. Choudhary, J. Ramanujam, and P. Banerjee proposed a unified compilation framework, focused on structured codes, which demonstrated how spatial locality and false sharing can be treated together in an optimizing compiler.

Juan C. Pichel, Dora B. Heras, José C. Cabaleiro, and Francisco F. Rivera proposed a model in which locality is established at run time, considering parameters that describe the structure of the sparse matrix that characterizes the irregular accesses. As an example of irregular code with false sharing, a particular implementation of the sparse matrix-vector product (SpM×V) was selected. The problem of increasing locality and decreasing false sharing for an irregular problem is formulated as a graph. An adequate distribution of the graph among processors, followed by a reordering of the nodes inside each processor, produces the solution. The results show important improvements in the behavior of the irregular accesses: reductions in execution time and improved program scalability.

At the hardware level, Murali Kadiyala and Laxmi N. Bhuyan proposed dynamic sub-blocking to reduce false sharing. They presented a dynamic sub-block coherence protocol that minimizes false sharing by trying to dynamically locate the point of false reference. Sharing traffic is minimized by maintaining coherence on smaller blocks (sub-blocks) that are truly shared, whereas larger blocks are used as the basic units of transfer. Larger blocks exploit locality, while maintaining coherence on sub-blocks minimizes bus traffic due to shared misses.
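The idea of transferring large blocks while keeping coherence on smaller units can be sketched as follows. This is a static simplification with a fixed sub-block size, not the dynamic protocol of Kadiyala and Bhuyan; the function name and the trace format are assumptions made for illustration.

```python
def count_misses_subblock(trace, block_size, sub_size):
    """Misses when data is fetched a whole block at a time but
    invalidated one sub-block at a time (infinite caches)."""
    valid = {}                          # cpu -> set of valid sub-block numbers
    per_block = block_size // sub_size
    misses = 0
    for cpu, op, addr in trace:
        sub = addr // sub_size
        subs = valid.setdefault(cpu, set())
        if sub not in subs:
            misses += 1
            first = (addr // block_size) * per_block
            # Fetch the whole block: all of its sub-blocks become valid.
            subs.update(range(first, first + per_block))
        if op == "W":
            for other, other_subs in valid.items():
                if other != cpu:
                    other_subs.discard(sub)   # only the written sub-block
    return misses

# CPU 0 writes the word at address 0, CPU 1 the word at address 4.
trace = [(0, "W", 0), (1, "W", 4)] * 100
print(count_misses_subblock(trace, block_size=64, sub_size=64))
print(count_misses_subblock(trace, block_size=64, sub_size=4))
```

With `sub_size` equal to `block_size` the model behaves like an ordinary invalidation protocol. With 4-byte sub-blocks, the two writers no longer invalidate each other, yet each miss still brings in a full 64-byte block.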

Miss Caused due to True Sharing

If the word accessed on the miss has been modified since (and including) the reference causing the invalidation, the miss is known as a true sharing miss. In most cases true sharing misses are necessary, because they are what keeps the data coherent. But some simple modifications can reduce misses of this kind. A simple write-through protocol (with allocation on write misses) totally eliminates useless misses. On a store into a block, the address of the modified word is propagated to all processors with a copy of the block and is buffered in an invalidation buffer; a local access to a word whose address is present in the buffer invalidates the block copy and triggers a PTS (pure true sharing) miss. (The invalidation buffer could be implemented by a dirty bit associated with each word in each block of the cache.) This implementation "mimics" the essential miss detection algorithm, and its miss rate is the essential miss rate of the trace. Write-through caches, however, generate an unacceptable amount of write traffic. To make the protocol write-back, ownership must be maintained. Stores accessing non-owned blocks with a pending invalidation for any one of their words in the local invalidation buffer must trigger a miss. These additional misses are the cost of maintaining ownership.
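A minimal sketch of the write-through scheme with a per-processor invalidation buffer is given below. It assumes infinite caches and 4-byte words, and it models only the miss counting, not the write traffic or the write-back variant. The function name and trace format are hypothetical.

```python
def essential_misses(trace, block_size, word_size=4):
    """Return (cold, true_sharing) miss counts for (cpu, op, addr) tuples."""
    present = {}   # cpu -> set of blocks held in that cache
    pending = {}   # cpu -> word numbers with a buffered invalidation
    words_per_block = block_size // word_size
    cold = true_sharing = 0
    for cpu, op, addr in trace:
        block, word = addr // block_size, addr // word_size
        blocks = present.setdefault(cpu, set())
        buf = pending.setdefault(cpu, set())
        if block not in blocks:
            cold += 1
            blocks.add(block)
        elif word in buf:
            # The accessed word was modified elsewhere: invalidate the copy,
            # refetch the block, and drop its buffered invalidations.
            true_sharing += 1
            first = block * words_per_block
            buf.difference_update(range(first, first + words_per_block))
        if op == "W":
            # Propagate the modified word's address to every other holder.
            for other, other_blocks in present.items():
                if other != cpu and block in other_blocks:
                    pending[other].add(word)
    return cold, true_sharing

different_words = [(0, "W", 0), (1, "W", 4)] * 100   # same block, no communication
same_word = [(0, "W", 0), (1, "W", 0)] * 100         # real communication
print(essential_misses(different_words, 64))
print(essential_misses(same_word, 64))
```

In this model, writes to different words of one block cause no misses beyond the cold ones, whereas writes to the same word still miss every time, since those misses carry real communication.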

That is one way to reduce sharing misses. Another is to locate communicating processes on the same processor, which can reduce true sharing misses. This approach has the following performance implications:

- Misses due to true sharing are reduced but not eliminated completely.

- The communicating processes execute on a single processor in an interleaved manner, which reduces parallelism. Hence overall processor utilization falls.

- Because the communicating processes execute on the same processor, communication latency is eliminated, at the cost of lower parallelism and process context-switch overhead.

As mentioned above, there has to be a balance among all the performance metrics. Is the reduced miss rate and communication cost worth the reduced parallelism? The answer lies in careful orchestration of the parallel program: processes that share a large part of their address space should be executed on the same processor.

Increasing the block size has opposite effects on true and false sharing. Figure 4 shows the relation between true sharing misses and false sharing misses as the block size increases. True sharing misses generally decrease with increasing block size, while false sharing misses increase.
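The opposite trends can be observed on a small synthetic trace. The sketch below classifies each coherence miss with the word-level rule given earlier (true sharing if the accessed word was modified since the invalidation, false sharing otherwise). The trace, the addresses, and the function names are assumptions chosen for illustration, not data from the cited studies.

```python
def classify(trace, block_size, word_size=4):
    """Return (cold, true_sharing, false_sharing) miss counts."""
    valid, ever, dirty = {}, {}, {}   # dirty: (cpu, block) -> words written by others
    cold = true_s = false_s = 0
    for cpu, op, addr in trace:
        block, word = addr // block_size, addr // word_size
        v = valid.setdefault(cpu, set())
        e = ever.setdefault(cpu, set())
        if block not in v:
            if block not in e:
                cold += 1
            elif word in dirty[(cpu, block)]:
                true_s += 1
            else:
                false_s += 1
            v.add(block)
            e.add(block)
            dirty[(cpu, block)] = set()
        if op == "W":
            for other in valid:
                if other == cpu:
                    continue
                if block in valid[other]:
                    valid[other].discard(block)      # invalidate the copy
                    dirty[(other, block)] = {word}
                elif block in ever[other]:
                    dirty[(other, block)].add(word)  # modified since invalidation
    return cold, true_s, false_s

def make_trace(rounds):
    """Producer/consumer buffer plus private counters (hypothetical layout)."""
    trace = []
    buffer = range(0, 64, 4)               # 16 shared words
    private = [(128, 144), (256, 320)]     # (CPU 0 word, CPU 1 word) pairs
    for _ in range(rounds):
        trace += [(0, "W", a) for a in buffer]   # CPU 0 fills the buffer
        trace += [(1, "R", a) for a in buffer]   # CPU 1 reads it
        for p0, p1 in private:
            for k in range(4):
                trace += [(0, "W", p0), (1, "W", p1)]
    return trace

for size in (8, 32, 128):
    print(size, classify(make_trace(10), size))
```

On this trace the true sharing count falls as the block grows, because one miss brings in more of the shared buffer, while the false sharing count rises as more private counters end up in the same block.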

References

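- Intel. http://www.intel.com/cd/ids/developer/asmo-na/eng/193679.htm

- Silent Stores and Store Value Locality. http://ieeexplore.ieee.org/iel5/12/20861/00966493.pdf?arnumber=966493

- J. Torrellas, M. Lam, and J. Hennessy. http://ieeexplore.ieee.org/iel1/12/7122/00286299.pdf?tp=&arnumber=286299&isnumber=7122

- S. Eggers and T. Jeremiassen. http://coblitz.codeen.org:3125/citeseer.ist.psu.edu/cache/papers/cs/1663/ftp:zSzzSzftp.cs.washington.eduzSztrzSz1994zSz09zSzUW-CSE-94-09-05.pdf/jeremiassen94reducing.pdf

- M. Kandemir, A. Choudhary, J. Ramanujam, and P. Banerjee. http://ieeexplore.ieee.org/iel5/71/26889/01195407.pdf?tp=&arnumber=1195407&isnumber=26889

- Juan C. Pichel, Dora B. Heras, José C. Cabaleiro, and Francisco F. Rivera. http://ieeexplore.ieee.org/iel5/10528/33309/01575842.pdf?arnumber=1575842

- Murali Kadiyala and Laxmi N. Bhuyan. http://ieeexplore.ieee.org/iel3/4053/11610/00528827.pdf?tp=&arnumber=528827&isnumber=11610

- Michel Dubois, Jonas Skeppstedt, Livio Ricciulli, and Krishnan Ramamurthy. http://delivery.acm.org/10.1145/170000/165145/p88-dubois.pdf?key1=165145&key2=2112482911&coll=ACM&dl=ACM&CFID=3606952&CFTOKEN=81485400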