ECE506 CSC/ECE 506 Spring 2012/7b na: Difference between revisions

| (67 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

== Introduction == | == '''Introduction''' == | ||

Virtual addresses are translated to absolute addresses using a hardware structure called the Translation Lookaside Buffer (TLB). A TLB accepts a Virtual Page Number and returns the corresponding Physical Page Number. The translation lookaside buffer performs other functions in addition to the basic address translation. The other functions include access control, program debugging support and operating system support for virtual memory. | Virtual addresses are translated to absolute addresses using a hardware structure called the Translation Lookaside Buffer (TLB). A TLB accepts a Virtual Page Number and returns the corresponding Physical Page Number. The translation lookaside buffer performs other functions in addition to the basic address translation. The other functions include access control, program debugging support and operating system support for virtual memory. | ||

=== What is a TLB? === | === '''What is a TLB?''' === | ||

Since all references in Level 0 systems are absolute accesses, these systems do not have TLBs. A TLB is typically not large enough to hold all the current translations. Translations for all pages in memory are stored in a memory structure called the Page Table. The TLB is organized as two parts. The instruction TLB (ITLB) is only used for instruction references, while the data TLB (DTLB) is only used for data references. A system may implement a combined TLB which is used for both instruction and data references. | Since all references in Level 0 systems are absolute accesses, these systems do not have TLBs. A TLB is typically not large enough to hold all the current translations. Translations for all pages in memory are stored in a memory structure called the Page Table. The TLB is organized as two parts. The instruction TLB (ITLB) is only used for instruction references, while the data TLB (DTLB) is only used for data references. A system may implement a combined TLB which is used for both instruction and data references. | ||

Additionally, translations are supported for large address ranges. Such translations are called block translations and are stored in a block TLB. Block translations map address ranges larger than a page. Block translations are useful in mapping virtual address ranges which do not get paged in and out. These block translations increase the virtual address range of the TLB thereby minimizing the virtual address translation overhead. | Additionally, translations are supported for large address ranges. Such translations are called block translations and are stored in a block TLB. Block translations map address ranges larger than a page. Block translations are useful in mapping virtual address ranges which do not get paged in and out. These block translations increase the virtual address range of the TLB thereby minimizing the virtual address translation overhead. | ||

=== TLB Implementation === | === '''TLB Implementation<ref>http://sequoia.ict.pwr.wroc.pl/~iro/RISC/sm/www.hp.com/acd-18.html#HEADING18-0</ref>''' === | ||

A common approach to reducing the overhead of address translation in paging systems is to use a descriptor cache, better known as the translation lookaside buffer (TLB). The TLB contains associative memory in which it stores recently used page descriptors, that is, the (virtual address, page-map table (PMT) entry) pairs used by recent address translations. | |||

(See [[#Definitions]] for PMT.) When the processor initiates a memory reference, the address-translation hardware searches the TLB associatively by the page portion of the virtual address. When a match is found, the TLB furnishes the corresponding PMT entry. The physical address stored in the PMT entry forms the physical memory address. Protection is enforced by comparing the task boundaries and access-rights bits stored in the PMT entry with the obtained address and the intended mode of memory access. The MMU (see [[#Definitions]]) flags discrepancies as protection violations and aborts the memory reference. In addition, a processor exception is raised to allow the operating system to abort the offending task. | |||

TLBs generally have high hit ratios, in excess of 90 percent, and thus complete most address translations quickly. When a virtual address is not found in the TLB (a miss), the memory management hardware has to reference the PMTs in memory to complete the translation. The root pointer to the address-translation tree of a specific task is provided by the operating system as a part of the task-control block. The root pointer provides the base address of the next-level mapping table. The Microprocessor Memory Management Unit(MMU) uses it to reference memory and to obtain the related PMT entry. The presence bit therein is inspected to establish whether the item that it points to is resident in (physical) memory. If not, the page-fault exception is raised, and the operating system fetches the missing item from secondary storage. When the target item is in main memory, the MMU checks whether it is the leaf node of the translation tree. If not, the process is repeated for each level of hierarchy until the last level is reached. Only the leaf level contains pointers to page frames in main memory. Other pointers are indirect and serve as trail markers within the address-translation tree. Once the last level of the mapping hierarchy is reached, the MMU stores the obtained PMT entry into the TLB for future reuse. If the TLB is full, it replaces an existing entry to make room for the incoming one. | |||
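The miss path described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the dictionary layout, the field names (`present`, `leaf`, `next`, `frame`) and the `PageFault` exception are hypothetical and do not correspond to any particular MMU.

```python
class PageFault(Exception):
    """Raised when the presence bit of an entry on the walk is clear."""


def walk(root, indices):
    """Follow one index per level of the translation tree, starting at root."""
    node = root
    for level, index in enumerate(indices):
        entry = node.get(index)
        if entry is None or not entry["present"]:
            # The item is not resident; the OS would fetch it from secondary storage.
            raise PageFault(f"level {level}, index {index}")
        if entry["leaf"]:
            return entry["frame"]   # only leaf entries point at page frames
        node = entry["next"]        # indirect pointer: descend one level
    raise ValueError("translation tree is deeper than the supplied indices")


# Hypothetical two-level tree provided through the task's root pointer.
root = {
    1: {"present": True, "leaf": False, "next": {
        2: {"present": True, "leaf": True, "frame": 0x5A},
        3: {"present": False, "leaf": True, "frame": None},
    }},
}

frame = walk(root, [1, 2])
try:
    walk(root, [1, 3])
    faulted = False
except PageFault:
    faulted = True
```

After a successful walk, the MMU would store the resulting entry in the TLB; that step is left out here to keep the sketch focused on the traversal.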

TLBs commonly employ the least recently used (LRU) replacement policy. Simpler alternatives, such as FIFO and random, may be cheaper to implement but have inferior performance. Because virtual addresses are not unique across different address spaces, single-task TLBs must be flushed upon every task switch. The oncoming task must usually resort to loading the TLB with its entries through a succession of TLB misses. Some designers opt to alleviate this problem by using multitasking or multicontext TLBs. A multicontext TLB removes ambiguities by tagging each entry with a unique owner identity, usually that of the enclosing task. On translation, both the address and the owner identity must match for a given entry to produce a hit. In this way, the TLB need not be flushed upon task switches, and a rescheduled task can benefit from finding some of its entries in the TLB. In principle, TLBs in systems with hierarchical mapping tables should provide comparatively higher hit ratios to offset the longer translation times incurred by TLB misses. Increasing the number of entries, which also tends to increase the price, generally yields a higher hit ratio. | |||
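A minimal sketch of a multicontext TLB with LRU replacement follows. The class name `TaggedTLB`, its methods and the tiny capacity are assumptions made for illustration; a real TLB does this matching in associative hardware, not in software.

```python
from collections import OrderedDict


class TaggedTLB:
    """Toy multicontext TLB: entries are keyed by (owner_id, virtual_page)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()  # least recently used entry comes first

    def lookup(self, owner_id, vpn):
        key = (owner_id, vpn)
        if key not in self.entries:
            return None               # miss: the caller must walk the page tables
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def insert(self, owner_id, vpn, ppn):
        key = (owner_id, vpn)
        if key in self.entries:
            self.entries.move_to_end(key)
        elif len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the LRU entry
        self.entries[key] = ppn


tlb = TaggedTLB(capacity=2)
tlb.insert(owner_id=1, vpn=0x10, ppn=0xA0)
tlb.insert(owner_id=2, vpn=0x10, ppn=0xB0)  # same page number, different task
hit_task1 = tlb.lookup(1, 0x10)             # hit; refreshes task 1's entry
tlb.insert(owner_id=2, vpn=0x11, ppn=0xB1)  # evicts (2, 0x10), now the LRU entry
```

Because the owner identity is part of the key, the two tasks can map the same virtual page number to different frames without a flush on task switch.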

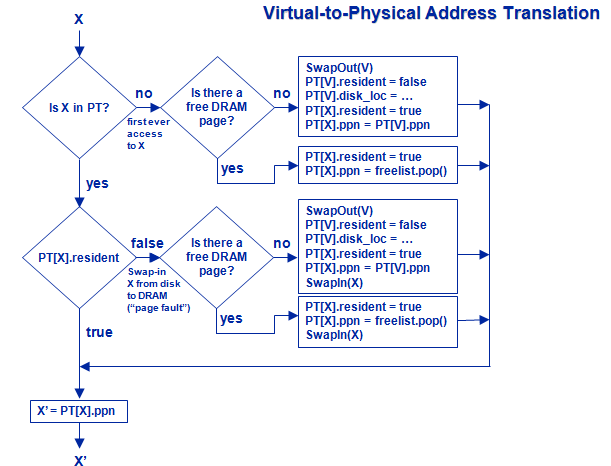

[[Image:TLB2.PNG|thumbnail|left|x300px|Figure 2:Translation Lookaside Buffer<sup><span id="3body"></span></sup>]] | |||

Here, X is the virtual page number to be translated into a physical page number, X' is the physical page number corresponding to X, and V is the virtual page number of a victim page (that is, a page to be evicted from the table). | Here, X is the virtual page number to be translated into a physical page number, X' is the physical page number corresponding to X, and V is the virtual page number of a victim page (that is, a page to be evicted from the table). | ||

=== TLB miss handling === | === '''TLB miss handling''' === | ||

Given a virtual address, the selected TLB is searched for an entry matching the Virtual Page Number. If the entry exists, the 20-bit Physical Page Number (contained in the TLB entry) is concatenated with the original 12-bit page offset to form a 32-bit absolute address. If no such entry exists, the TLB is updated by either software TLB miss handling or hardware TLB miss handling. | Given a virtual address, the selected TLB is searched for an entry matching the Virtual Page Number. If the entry exists, the 20-bit Physical Page Number (contained in the TLB entry) is concatenated with the original 12-bit page offset to form a 32-bit absolute address. If no such entry exists, the TLB is updated by either software TLB miss handling or hardware TLB miss handling. | ||
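The concatenation on a TLB hit can be written out as bit operations. The sketch below assumes the 12-bit page offset and 20-bit Physical Page Number stated above; the function names and the example values are illustrative only.

```python
PAGE_OFFSET_BITS = 12
OFFSET_MASK = (1 << PAGE_OFFSET_BITS) - 1


def split_virtual_address(va):
    """Return (virtual page number, page offset) for a virtual address."""
    return va >> PAGE_OFFSET_BITS, va & OFFSET_MASK


def form_absolute_address(ppn, offset):
    """Concatenate a 20-bit physical page number with the 12-bit offset."""
    return (ppn << PAGE_OFFSET_BITS) | offset


vpn, offset = split_virtual_address(0x12345678)
absolute = form_absolute_address(0xABCDE, offset)  # 0xABCDE is a made-up PPN
```

Here `vpn` is `0x12345`, `offset` is `0x678`, and `absolute` is `0xABCDE678`: the offset passes through translation unchanged.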

| Line 28: | Line 56: | ||

In implementations that provide hardware for TLB miss handling, the hardware attempts to find the virtual to physical page translation in the Page Table. If the hardware is successful, it inserts the translation and protection fields into the appropriate instruction or data TLB. No interruption occurs in this case. If hardware is not successful, due to a search of the Page Table that was not exhaustive or due to the appropriate translation not existing in the Page Table, an interruption occurs so that the software can complete the process. | In implementations that provide hardware for TLB miss handling, the hardware attempts to find the virtual to physical page translation in the Page Table. If the hardware is successful, it inserts the translation and protection fields into the appropriate instruction or data TLB. No interruption occurs in this case. If hardware is not successful, due to a search of the Page Table that was not exhaustive or due to the appropriate translation not existing in the Page Table, an interruption occurs so that the software can complete the process. | ||

== | == '''TLB coherence''' == | ||

The cache-coherent part of a multiprocessor system is required to behave as if there were logically a single DTLB and a single ITLB. All TLBs in a multiprocessor system are required to broadcast purges, except PDTLBE and PITLBE, to all other TLBs. The originating processor's purge instruction suspends until all target processors complete the purge. | The cache-coherent part of a multiprocessor system is required to behave as if there were logically a single DTLB and a single ITLB. All TLBs in a multiprocessor system are required to broadcast purges, except PDTLBE and PITLBE, to all other TLBs. The originating processor's purge instruction suspends until all target processors complete the purge. | ||

A processor's TLB is simply a cache of the page table entries (PTEs) used for virtual-to-physical address translation. A PTE can come to reside in the TLBs of multiple processors due to either actual sharing of data or process migration. PTEs may be modified (for example, when the page is swapped out or its protection is changed), leading to a direct analog of the cache coherence problem. A variety of solutions have been used for TLB coherence. Software solutions, through the operating system, are popular since TLB coherence operations are much less frequent than cache coherence operations. The exact solutions used depend on whether PTEs are loaded into the TLB directly by hardware or under software control as well as on several other variables in how TLBs and operating systems are implemented. Hardware solutions are also used by some systems, particularly when TLB operations are not visible to the software. The next section provides a brief overview of four approaches to TLB coherence: virtually addressed caches, software TLB shootdown, address space identifiers (ASIDs), and hardware TLB coherence. Further details can be found in (Thompson et al. 1988; Rosenburg 1989; Teller 1990) and the papers referenced therein. | A processor's TLB is simply a cache of the page table entries (PTEs) used for virtual-to-physical address translation. A PTE can come to reside in the TLBs of multiple processors due to either actual sharing of data or process migration. PTEs may be modified (for example, when the page is swapped out or its protection is changed), leading to a direct analog of the cache coherence problem. A variety of solutions have been used for TLB coherence. Software solutions, through the operating system, are popular since TLB coherence operations are much less frequent than cache coherence operations. 
The exact solutions used depend on whether PTEs are loaded into the TLB directly by hardware or under software control as well as on several other variables in how TLBs and operating systems are implemented. Hardware solutions are also used by some systems, particularly when TLB operations are not visible to the software. The next section provides a brief overview of four approaches to TLB coherence: virtually addressed caches, software TLB shootdown, address space identifiers (ASIDs), and hardware TLB coherence. Further details can be found in (Thompson et al. 1988; Rosenburg 1989; Teller 1990) and the papers referenced therein. | ||

[[Image: | [[Image:TLB3.PNG|thumbnail|left|x300px|Figure 3:Virtual Memory in modern computer systems<sup><span id="3body"></span></sup>]] | ||

=== Approaches to avoid TLB coherence problem === | |||

=== '''Approaches to avoid TLB coherence problem''' === | |||

-------------------------------------------------------------------- | -------------------------------------------------------------------- | ||

== Virtually addressed caches== | ==== '''Virtually addressed caches''' ==== | ||

-------------------------------------------------------------------- | -------------------------------------------------------------------- | ||

TLBs, and hence the TLB coherence problem, can be avoided entirely by using virtually addressed caches. Address translation is now needed only on cache misses, so particularly if the cache miss rate is small, we can use the page tables directly. PTEs are brought into the regular data cache when they are accessed and are therefore kept coherent by the cache coherence mechanism. However, when a physical page is swapped out of memory or its protection changed, this is not visible to the cache coherence hardware, so the PTE must be flushed from the virtually addressed caches of all processors by the operating system. In addition, the coherence problem for virtually addressed caches must be solved. This approach was explored in the SPUR research project (Hill et al. 1986; WOOD et al. 1986) | TLBs, and hence the TLB coherence problem, can be avoided entirely by using virtually addressed caches. Address translation is now needed only on cache misses, so particularly if the cache miss rate is small, we can use the page tables directly. PTEs are brought into the regular data cache when they are accessed and are therefore kept coherent by the cache coherence mechanism. However, when a physical page is swapped out of memory or its protection changed, this is not visible to the cache coherence hardware, so the PTE must be flushed from the virtually addressed caches of all processors by the operating system. In addition, the coherence problem for virtually addressed caches must be solved. This approach was explored in the SPUR research project (Hill et al. 1986; WOOD et al. 1986) | ||

[[Image: | [[Image:TLB4.PNG|thumbnail|left|x300px|Figure 4:Virtual Memory in modern computer systems<sup><span id="3body"></span></sup>]] | ||

== TLB shootdown == | |||

==== '''TLB shootdown''' ==== | |||

--------------------------------------------------------------------- | --------------------------------------------------------------------- | ||

| Line 51: | Line 134: | ||

<ref>http://stackoverflow.com/questions/3748384/what-is-tlb-shootdown</ref>. | <ref>http://stackoverflow.com/questions/3748384/what-is-tlb-shootdown</ref>. | ||

TLB shootdown, the process of maintaining TLB consistency by invalidating entries when pages are unmapped, is one of the simplest operations in a multiprocessor OS that requires global coordination. It is also a short, but latency-critical, operation and so represents a worst-case comparison for a multikernel. | |||

In Linux and Windows, inter-processor interrupts (IPIs) are used: a core that wishes to change a page mapping writes the operation to a well-known location and sends an interrupt to every core that might have the mapping in its TLB. Each core takes the trap, acknowledges the IPI by writing to a shared variable, invalidates the TLB entry, and resumes. The initiating core can continue immediately after every IPI has been acknowledged, since by the time each core resumes to user space the TLB entry is guaranteed to be flushed. This has low latency, but can be disruptive as each core immediately takes the cost of a trap (about 800 cycles). The TLB invalidation itself is fast, taking 95-320 cycles on a current x86-64 core. | |||
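The IPI-based sequence can be illustrated with a small sequential simulation. This is a sketch under loud assumptions: the `Core` class, the `mailbox` dictionary standing in for the well-known location, and the plain function call standing in for an interrupt are all hypothetical, and a real kernel would narrow the target set to cores that might hold the mapping rather than interrupting every other core.

```python
class Core:
    def __init__(self, core_id):
        self.core_id = core_id
        self.tlb = {}  # virtual page number -> physical page number

    def handle_ipi(self, mailbox, acks):
        acks.add(self.core_id)              # acknowledge via the shared variable
        self.tlb.pop(mailbox["vpn"], None)  # invalidate before resuming


def shootdown(initiator, cores, page_table, vpn):
    page_table.pop(vpn, None)     # change the mapping (here: unmap the page)
    mailbox = {"vpn": vpn}        # stands in for the "well-known location"
    initiator.tlb.pop(vpn, None)  # local invalidation
    targets = [c for c in cores if c is not initiator]
    acks = set()
    for core in targets:          # stands in for sending the IPIs
        core.handle_ipi(mailbox, acks)
    # The initiator may continue only once every target has acknowledged.
    return acks == {c.core_id for c in targets}


cores = [Core(i) for i in range(4)]
page_table = {0x7F: 0x123}
for core in cores[:3]:
    core.tlb[0x7F] = 0x123        # three cores have cached the mapping
all_acked = shootdown(cores[0], cores, page_table, vpn=0x7F)
stale = [c.core_id for c in cores if 0x7F in c.tlb]
```

The simulation runs the handlers one after another, so it shows the ordering of the protocol but says nothing about its latency or about the trap cost on each core.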

In Barrelfish, we use messages for shootdown. In the naive algorithm, the local monitor broadcasts invalidate messages to the others and waits for all the replies. We would expect this to show higher latency than the IPI approach, since the remote monitors handle the messages only when “convenient”. However, fortunately, the message-passing paradigm allows us to improve on this approach without significantly restructuring the code. In particular, we can exploit knowledge about the specific hardware platform, extracted from the system knowledge base at runtime, to achieve very good TLB shootdown performance.<ref>http://research.microsoft.com/pubs/101903/paper.pdf</ref>. | |||

== Address Space Identifier (ASID) == | ==== '''Address Space Identifier (ASID)''' ==== | ||

--------------------------------------------------------------------------- | --------------------------------------------------------------------------- | ||

| Line 58: | Line 146: | ||

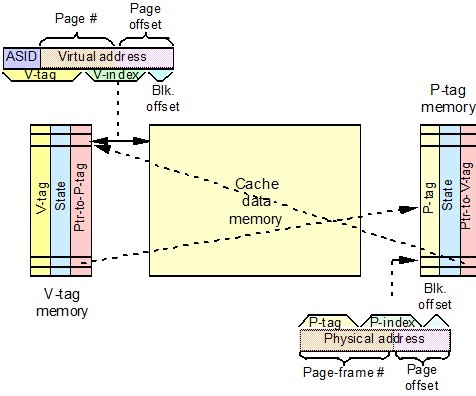

Figure shows the organization of virtually addressed caches. | Figure shows the organization of virtually addressed caches. | ||

[[File: | [[File:TLB5.jpg|left|x300px|Figure 5:Organization of Virtual Addressed Caches<ref>lec15 from NCSU ECE506</ref>]] | ||

In the figure, “ASID” means “address-space identifier,” which is called a process identifier (PID) in some books. The diagram shows the “V-index” overlapping the (virtual) page number. If it did not, we wouldn’t need a virtually addressed cache: we could do address translation in parallel with cache access using a physically addressed cache. Also, the V-index is not actually stored in the cache. Like the index (“set” or “line”) field in physically addressed caches, it just tells which line or set to look in for the data<ref>lec15 from NCSU ECE506</ref>. | In the figure, “ASID” means “address-space identifier,” which is called a process identifier (PID) in some books. The diagram shows the “V-index” overlapping the (virtual) page number. If it did not, we wouldn’t need a virtually addressed cache: we could do address translation in parallel with cache access using a physically addressed cache. Also, the V-index is not actually stored in the cache. Like the index (“set” or “line”) field in physically addressed caches, it just tells which line or set to look in for the data<ref>lec15 from NCSU ECE506</ref>. | ||

== Invalidate technique <ref>lec15 from NCSU ECE506</ref> == | ==== '''Invalidate technique <ref>lec15 from NCSU ECE506</ref>''' ==== | ||

---------------------------------------------------------------------------- | ---------------------------------------------------------------------------- | ||

Finally, some processor families provide hardware instructions to invalidate other processors' TLBs. In the PowerPC family (Weiss and Smith 1994), the "TLB invalidate entry" instruction (tlbie) broadcasts the page address on the bus so that the snooping hardware on other processors can automatically invalidate the corresponding TLB entries without interrupting the processor. The algorithm for handling changes to PTEs is simple: the operating system first makes changes to the page table and then issues a tlbie instruction for the changed PTEs. If the TLB is hardware loaded (as it is in the PowerPC), the OS does not know which other TLBs might be caching the PTE, so the invalidation must be broadcast to all processors. Broadcast is well suited to a bus but undesirable for the more scalable systems with distributed networks. | Finally, some processor families provide hardware instructions to invalidate other processors' TLBs. In the PowerPC family (Weiss and Smith 1994), the "TLB invalidate entry" instruction (tlbie) broadcasts the page address on the bus so that the snooping hardware on other processors can automatically invalidate the corresponding TLB entries without interrupting the processor. The algorithm for handling changes to PTEs is simple: the operating system first makes changes to the page table and then issues a tlbie instruction for the changed PTEs. If the TLB is hardware loaded (as it is in the PowerPC), the OS does not know which other TLBs might be caching the PTE, so the invalidation must be broadcast to all processors. Broadcast is well suited to a bus but undesirable for the more scalable systems with distributed networks. | ||

=== Multiprocessor Considerations === | === '''Multiprocessor Considerations<ref> Milan Milenkovic's IEEE paper </ref>''' === | ||

In multiprocessor systems with shared virtual memory and multiple MMUs, a mapping descriptor can be simultaneously cached in multiple TLBs. Since the contents of a mapping descriptor can vary in response to changes of state in the related page in memory, multiple copies of a given descriptor may pose a consistency problem. Keeping all copies of a mapping descriptor consistent with each other is sometimes referred to as TLB coherence. In a milder form, the coherence problem exists even in uniprocessor systems in which the TLB and memory copies of a mapping descriptor should be identical. Moreover, the updating of a memory-based descriptor should be an atomic action that makes the descriptor consistent with itself. The TLB coherence problem shares many characteristics with its better known cache-coherence counterpart. As with caches, a crude way to deal with TLB coherence is to disallow TLB buffering of shareable descriptors. Forcing all mappings to complete by using the in-memory copy of a shared descriptor avoids the coherence problem at the cost of increased mapping delays. | In multiprocessor systems with shared virtual memory and multiple MMUs, a mapping descriptor can be simultaneously cached in multiple TLBs. Since the contents of a mapping descriptor can vary in response to changes of state in the related page in memory, multiple copies of a given descriptor may pose a consistency problem. Keeping all copies of a mapping descriptor consistent with each other is sometimes referred to as TLB coherence. In a milder form, the coherence problem exists even in uniprocessor systems in which the TLB and memory copies of a mapping descriptor should be identical. Moreover, the updating of a memory-based descriptor should be an atomic action that makes the descriptor consistent with itself. The TLB coherence problem shares many characteristics with its better known cache-coherence counterpart. 
As with caches, a crude way to deal with TLB coherence is to disallow TLB buffering of shareable descriptors. Forcing all mappings to complete by using the in-memory copy of a shared descriptor avoids the coherence problem at the cost of increased mapping delays. | ||

| Line 73: | Line 187: | ||

Numerous other ways to deal with the problem require some sort of hardware assistance such as snooping TLBs, remote TLB entry invalidation, and selective disabling or flushing of TLB entries. In systems that have both caches and TLBs, the two coherence problems are interdependent in perhaps non-obvious ways. For example, disallowing placement of shareable entries into TLBs may not achieve TLB coherence if caching of the mapping descriptors can occur and cache coherence is not enforced. Consistency and coherence of shared entries in combined MMU/cache/memory systems still pose an open research problem. If the past is any indicator, some time will elapse before a few dominant solutions emerge and find their way into commercial implementations of cache and MMU units. In the meantime, designers of multi microprocessor systems will have to resort to custom solutions. | Numerous other ways to deal with the problem require some sort of hardware assistance such as snooping TLBs, remote TLB entry invalidation, and selective disabling or flushing of TLB entries. In systems that have both caches and TLBs, the two coherence problems are interdependent in perhaps non-obvious ways. For example, disallowing placement of shareable entries into TLBs may not achieve TLB coherence if caching of the mapping descriptors can occur and cache coherence is not enforced. Consistency and coherence of shared entries in combined MMU/cache/memory systems still pose an open research problem. If the past is any indicator, some time will elapse before a few dominant solutions emerge and find their way into commercial implementations of cache and MMU units. In the meantime, designers of multi microprocessor systems will have to resort to custom solutions. | ||

==Techniques for maintaining TLB Coherence in Multiprocessors== | =='''Techniques for maintaining TLB Coherence in Multiprocessors'''== | ||

===TLB Prefetchers=== | ===TLB Prefetchers<ref>Abhishek Bhattacharjee, Margaret R. Martonosi. “Inter-Core Cooperative TLB Prefetchers”</ref>=== | ||

====Introduction==== | ====Introduction==== | ||

This technique aims to exploit the inter-core TLB miss redundancy of parallel applications running on chip multiprocessors (CMPs). It is based on studies that show similarity in the miss patterns of multiple cores. This similarity takes two forms: first, TLB misses to identical virtual pages on multiple cores, and second, predictable strides between the virtual pages that cause TLB misses on different cores. As a result, there are good opportunities for eliminating the common TLB miss streams across the various cores. These techniques can reduce the misses on both I-TLBs and D-TLBs. | This technique aims to exploit the inter-core TLB miss redundancy of parallel applications running on chip multiprocessors (CMPs). It is based on studies that show similarity in the miss patterns of multiple cores. This similarity takes two forms: first, TLB misses to identical virtual pages on multiple cores, and second, predictable strides between the virtual pages that cause TLB misses on different cores. As a result, there are good opportunities for eliminating the common TLB miss streams across the various cores. These techniques can reduce the misses on both I-TLBs and D-TLBs. | ||

| Line 87: | Line 201: | ||

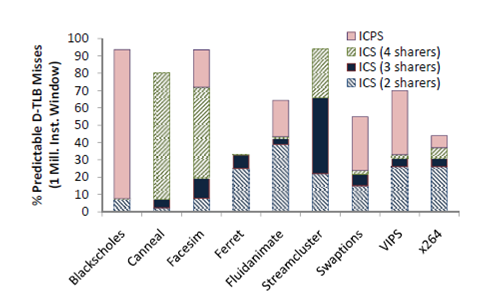

In terms of CMPs, these two prefetching techniques aim to minimize the ''Inter Core Shared(ICS) TLB misses'' and the ''Inter Core Predictable Stride TLB misses'' respectively. These are defined as: | In terms of CMPs, these two prefetching techniques aim to minimize the ''Inter Core Shared(ICS) TLB misses'' and the ''Inter Core Predictable Stride TLB misses'' respectively. These are defined as: | ||

;Inter Core Shared(ICS) TLB misses | |||

:In an N-core CMP, a TLB miss on a core is ICS if it is caused by access to a translation entry with the same virtual page, physical page, context ID (process ID), protection information, and page size as the translation accessed by a previous miss on any of the other N-1 cores, within a 1 million instruction window. The number of cores that see this translation is defined as the number of sharers. | |||

;Inter Core Predictable Stride TLB misses | |||

:In an N-core CMP, a TLB miss is ICPS with a stride of S if its virtual page V+S differs by S from the virtual page V of the preceding matching miss (context ID and page size must also match). We require this match to occur within a 1 million instruction window, and the stride S must be repetitive and prominent to be categorized as ICPS. | |||

Figure 6 shows the prevalence of these two types of misses on the studied workloads. | |||

[[Image:%MissesVsBenchmarks.png|thumb|upright=2|left|frame|Figure 6:Percentage of different type of Misses Vs Benchmarks]]<br/> | |||

====Challenges in Implementation==== | ====Challenges in Implementation==== | ||

| Line 97: | Line 242: | ||

====Algorithm==== | ====Algorithm==== | ||

=====Leader-Follower Prefetching===== | |||

The algorithm can be explained in the two example cases depicted in Figure | Assume an N-core CMP with per-core D-TLBs. The prefetched entries are not inserted directly into the TLBs but into prefetch buffers (PBs) that are looked up concurrently with the TLBs. This helps to reduce the consequences of prefetching too early and displacing useful information. Each PB entry contains a Valid bit and a Prefetch Type bit (to indicate whether the entry arose from Leader-Follower or Distance-Based Cross-Core prefetching) along with the translation entry information. On a PB hit, the entry is moved into the TLB. The PB uses a FIFO replacement policy.<br/> | ||

The algorithm can be explained in the two example cases depicted in Figure 7.<br/> | |||

Case 1: Suppose we encounter a D-TLB miss but PB hit on core 0 (step 1a). In response (step 1b), we remove the entry from core 0's PB and add it to its D-TLB. | [[Image:Leader-Follower.png|frame|Figure 7:Leader-Follower Prefetching cases]] | ||

''Case 1'': Suppose we encounter a D-TLB miss but PB hit on core 0 (step 1a). In response (step 1b), we remove the entry from core 0's PB and add it to its D-TLB.<br/> | |||

Case 2: Suppose instead that core 1 sees a D-TLB and PB miss (step 2a). In response, the page table is walked, the translation is located and refilled into the D-TLB. In step 2b, this translation is also prefetched or pushed into PBs of the other cores, with the aim of eliminating future ICS misses on the other cores. | ''Case 2'': Suppose instead that core 1 sees a D-TLB and PB miss (step 2a). In response, the page table is walked, the translation is located and refilled into the D-TLB. In step 2b, this translation is also prefetched or pushed into PBs of the other cores, with the aim of eliminating future ICS misses on the other cores.<br/> | ||
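The two cases can be traced with a short simulation. This is a sketch, not the authors' implementation: the names `CoreMMU` and `access` are hypothetical, the D-TLB is modeled as an unbounded dictionary, and the Valid and Prefetch Type bits of a PB entry are omitted.

```python
from collections import OrderedDict


class CoreMMU:
    def __init__(self, pb_capacity=4):
        self.dtlb = {}           # vpn -> ppn (unbounded here for simplicity)
        self.pb = OrderedDict()  # prefetch buffer, oldest entry first (FIFO)
        self.pb_capacity = pb_capacity

    def push_prefetch(self, vpn, ppn):
        if vpn in self.dtlb or vpn in self.pb:
            return                        # translation already available locally
        if len(self.pb) >= self.pb_capacity:
            self.pb.popitem(last=False)   # FIFO replacement
        self.pb[vpn] = ppn


def access(cores, core_id, vpn, page_table):
    core = cores[core_id]
    if vpn in core.dtlb:
        return "tlb_hit"
    if vpn in core.pb:                    # case 1: D-TLB miss, PB hit
        core.dtlb[vpn] = core.pb.pop(vpn)
        return "pb_hit"
    ppn = page_table[vpn]                 # case 2: walk the page table ...
    core.dtlb[vpn] = ppn                  # ... refill the local D-TLB ...
    for other_id, other in enumerate(cores):
        if other_id != core_id:
            other.push_prefetch(vpn, ppn)  # ... and push to the other PBs
    return "miss"


cores = [CoreMMU() for _ in range(2)]
page_table = {0x40: 0x900}
first = access(cores, 1, 0x40, page_table)   # core 1 leads
second = access(cores, 0, 0x40, page_table)  # core 0 follows
third = access(cores, 0, 0x40, page_table)
```

Core 1 takes the full miss, core 0 then finds the translation in its PB, and its next access hits in the D-TLB.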

=====Distance Based Cross Core Prefetching===== | |||

We again assume an N-core system with prefetches placed into per-core PBs. The steps of the approach are as follows: | We again assume an N-core system with prefetches placed into per-core PBs. The steps of the approach are as follows: | ||

| Line 115: | Line 259: | ||

Step 4: The core (in our example, core 0) sends the current distance, the last distance (from the Last Dist. Reg.), the CPU number, and the current context to the Distance Table (DT), which caches frequently used distance-pairs and is shared by all the cores. The scheme places the DT next to the shared L2 cache. | Step 4: The core (in our example, core 0) sends the current distance, the last distance (from the Last Dist. Reg.), the CPU number, and the current context to the Distance Table (DT), which caches frequently used distance-pairs and is shared by all the cores. The scheme places the DT next to the shared L2 cache. | ||

[[Image:Distance_Based.png|frame|Figure 8:Distance Based Prefetching cases]] | |||

Step 5: The DT uses the current distance to extract predicted future distances from the stored distance-pairs. It also updates itself using the last distance and current distance. | Step 5: The DT uses the current distance to extract predicted future distances from the stored distance-pairs. It also updates itself using the last distance and current distance. | ||

| Line 125: | Line 270: | ||

A number of options exist for the page table walk in step 7. A hardware-managed TLB could use its hardware state machine without involvement from the workload, which could execute in parallel. In contrast, a software-managed TLB may execute the page table walk within the interrupt caused by the initiating TLB miss. | A number of options exist for the page table walk in step 7. A hardware-managed TLB could use its hardware state machine without involvement from the workload, which could execute in parallel. In contrast, a software-managed TLB may execute the page table walk within the interrupt caused by the initiating TLB miss. | ||

These steps are illustrated in Figure | These steps are illustrated in Figure 8. | ||

[[Image:Flowchart.png|thumb|upright=1.5|right|Figure 9:Flowchart of the Poison Bit Method]] | |||

===Poison bit technique=== | ===Poison bit technique<ref>http://www.google.com/patents?hl=en&lr=&vid=USPAT6182195&id=u50GAAAAEBAJ&oi=fnd&dq=TLB+coherence+in+multiprocessors&printsec=abstract#v=onepage&q=TLB%20coherence%20in%20multiprocessors&f=false </ref>=== | ||

====Background==== | ====Background==== | ||

It is more natural to handle Translation updates or TLB coherence actions in software and much of the cost is the need to synchronize(i.e.interrupt) all processors who have entries in their TLB invalidated or updated. This scheme is directed to a system for maintaining coherency between virtual to physical memory translations of multiple requestors in a shared memory processor and is said to have an advantage over Teller’s TLB validation algorithm & Wisconsin Wind Tunnel (WWT) scheme as will be explained below | It is more natural to handle Translation updates or TLB coherence actions in software and much of the cost is the need to synchronize(i.e.interrupt) all processors who have entries in their TLB invalidated or updated. This scheme is directed to a system for maintaining coherency between virtual to physical memory translations of multiple requestors in a shared memory processor and is said to have an advantage over Teller’s TLB validation algorithm & Wisconsin Wind Tunnel (WWT) scheme as will be explained below | ||


*A TLB entry is shown by the designation “tlb”.

[[Image:Figure_A.png|thumb|left|200px|Figure A<sup><span id="3body"></span></sup>]]

*Figure A: On all processors, virtual address Vx is translated to physical page P0. The value of P0 is AA55.

[[Image:Figure_B.png|thumb|left|200px|Figure B<sup><span id="3body"></span></sup>]]

[[Image:Figure_C.png|thumb|left|200px|Figure C<sup><span id="3body"></span></sup>]]

[[Image:Figure_D.png|thumb|left|200px|Figure D<sup><span id="3body"></span></sup>]]

*Figure B: Proc 0 executes a copy of P0 to P1, which sets the poison bit of P0 to 1, and location P0 is purged from the caches of all three processors. After completion, Proc 0 updates its page table and its TLB entry so that Vx points to P1. Setting the poison state and reading the current value of the location must be a single atomic operation.

*Figure C: When Proc 1 and Proc 2 use their stale TLB translations, they must first go to memory, since the move invalidated their cached copies of P0. Because P0 is poisoned, the access returns a bus error; on receiving it, Proc 1 and Proc 2 update their TLB translations for Vx (asynchronously to one another).

*Figure D: When it can be guaranteed that there are no more stale entries for P0, the P0 frame can be reused by resetting the poison bit.
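
The sequence in Figures A to D can be sketched in a few lines of Python. This is only an illustrative model, not the mechanism as specified in the patent: the names <code>Memory</code>, <code>BusError</code>, <code>move</code> and <code>load</code> are hypothetical, caches are left out, and the atomic read-and-poison step is modelled as a single method call.

```python
class BusError(Exception):
    """Raised when a poisoned frame is accessed (hypothetical name)."""


class Memory:
    def __init__(self):
        self.data = {}         # frame -> value
        self.poisoned = set()  # frames whose poison bit is set

    def read(self, frame):
        if frame in self.poisoned:
            raise BusError(frame)
        return self.data[frame]

    def move(self, src, dst):
        # Reading the value and setting the poison bit is treated as one atomic step.
        value = self.data[src]
        self.poisoned.add(src)
        self.data[dst] = value


def load(mem, tlb, page_table, vpn):
    """Access a page through a possibly stale TLB entry, repairing it on a bus error."""
    try:
        return mem.read(tlb[vpn])
    except BusError:
        tlb[vpn] = page_table[vpn]  # refetch the translation lazily
        return mem.read(tlb[vpn])
```

In this model no interrupt is sent to the other processors: each one repairs its own stale entry the first time it touches the poisoned frame.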


===UNITD Coherence<ref>http://people.ee.duke.edu/~sorin/papers/hpca10_unitd.pdf</ref>===

UNITD (Unified Instruction/Translation/Data) is a hardware coherence framework that integrates translation coherence into the existing cache coherence protocol. Unlike the software TLB coherence schemes, it is one of the few hardware-based approaches to maintaining TLB coherence in multiprocessor environments. It is said to have no impact on the cache coherence protocol and not to increase its complexity.

====Benefits of Hardware Coherence====

*Reduces the performance impact of TLB coherence

*Provides a cleaner interface between the architecture and the OS

*By decoupling translation coherence from the OS, hardware TLB coherence can be used to support designs that use TLBs in non-processor components such as network cards or processing elements

====Conceptual Framework====

UNITD is a framework for unifying TLB coherence with cache coherence in one hardware protocol. TLBs, like other caches, participate in the coherence protocol in the same way as coherent, read-only instruction caches.

With respect to the coherence protocol, TLB entries are read-only (translations are never modified in the TLBs themselves), so TLB entries (cached PTEs) have only two possible coherence states: Shared (read-only) and Invalid. A new translation inserted into a TLB is marked as Shared and can be accessed by the local core as long as it is in the Shared state. The translation remains in this state until the TLB receives a coherence message invalidating the translation. The translation is then Invalid, and subsequent memory accesses depending on it will miss in the TLB and reacquire the translation from the memory system.

One of the main differences between caches and TLBs lies in the fact that cache coherence is based on physical addresses of blocks, but a translation cached in a TLB is not directly addressable by the physical address at which it resides (i.e., the physical address of the PTE defining the translation, not to be confused with the physical address to which the translation maps a virtual address). Thus it is important for UNITD to be able to perform coherence lookups in the TLB based on the physical addresses of PTEs. To overcome this key difference between TLBs and caches, UNITD must address two issues:

*Issue #1: It must discover the physical address of the PTE associated with each translation in a TLB.

*Issue #2: It must augment the TLBs such that they can be accessed with a physical address.

====Addressing UNITD issues====

In systems with flat page tables, associating a translation with its PTE’s physical address assumes there is a one-to-one association between translations and PTEs. However, this assumption is less obvious for systems using hierarchical page tables, because the translation is defined by a combination of multiple PTEs in the hierarchy. Figure 10 (Figure 4 in the UNITD paper<ref>http://people.ee.duke.edu/~sorin/papers/hpca10_unitd.pdf</ref>) illustrates the translation on an IA32 system.

[[Image:Figure_10.png|thumbnail|right|x200px|Figure 10: Translation on an IA32 system from virtual page VP1 to physical page PP1, starting from the root of the page table (i.e., CR3 register) and traversing the intermediate PTEs (i.e., PDPE and PDE)<sup><span id="3body"></span></sup>]]

Thus, translation coherence should be enforced when a modification is made to any of the PTEs on which the translation depends. Changes to mappings and changes to the metadata bits are the two types of possible PTE modifications. In the case of mapping changes, the previous memory range the PTE was mapping to must be invalidated. Also, the pages included in this space must be cleared for security reasons, to ensure that the reused memory space does not contain any previous information. With respect to the metadata bits, any unsafe changes (i.e., to the permission bits) must be propagated down to the last-level PTE. Translation coherence is therefore required only when changes are made to the last-level PTE on which the translation depends, and so, independent of the structure of the page tables, a translation is identifiable through its last-level PTE address.

To perform coherence lookups in the TLBs, UNITD needs to be able to access the TLBs with physical addresses and invalidate the translations associated with the PTEs that reside at those physical addresses. Assuming a one-to-one correspondence between translations and PTEs, a TLB translation moves to Invalid whenever the PTE defining the translation is modified.
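
A small Python model may help make the two-state behaviour and the physical-address lookup concrete. It is a sketch under the one-to-one assumption stated above, not the UNITD hardware design: the class <code>UnitdTlb</code> and its method names are hypothetical, an entry that is present stands for the Shared state, and a removed entry stands for Invalid.

```python
class UnitdTlb:
    """Toy TLB that can also be searched by the physical address of the defining PTE."""

    def __init__(self):
        self.by_vpn = {}  # vpn -> (ppn, pte_paddr); present means Shared
        self.by_pte = {}  # pte_paddr -> vpn; stands in for a physical-address tag

    def insert(self, vpn, ppn, pte_paddr):
        old = self.by_vpn.pop(vpn, None)
        if old is not None:
            self.by_pte.pop(old[1], None)
        other = self.by_pte.pop(pte_paddr, None)
        if other is not None:
            self.by_vpn.pop(other, None)  # keep the mapping one-to-one
        self.by_vpn[vpn] = (ppn, pte_paddr)
        self.by_pte[pte_paddr] = vpn

    def lookup(self, vpn):
        entry = self.by_vpn.get(vpn)
        return entry[0] if entry else None  # None models a TLB miss

    def snoop_invalidate(self, paddr):
        """Coherence message for a written block: Shared -> Invalid if a PTE matches."""
        vpn = self.by_pte.pop(paddr, None)
        if vpn is None:
            return False
        del self.by_vpn[vpn]
        return True
```

A write to an address that holds no cached PTE leaves the TLB untouched, while a write to the PTE's address removes the translation so that the next access misses and refetches it.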

=='''Definitions'''==

1) '''MMU''' - A memory management unit, sometimes called paged memory management unit (PMMU), is a computer hardware component responsible for handling accesses to memory requested by the CPU. Its functions include translation of virtual addresses to physical addresses (i.e., virtual memory management), memory protection, cache control, bus arbitration and, in simpler computer architectures (especially 8-bit systems), bank switching.

2) '''PMT''' - In operating systems that use virtual memory, every process is given the impression that it is working with large, contiguous sections of memory. In reality, each process' memory may be dispersed across different areas of physical memory, or may have been paged out to a backup storage (typically the hard disk). When a process requests access to its memory, it is the responsibility of the operating system to map the virtual address provided by the process to the physical address where that memory is stored. The page-map table (PMT) is where the operating system stores its mappings of virtual addresses to physical addresses.

=='''See Also'''==

1) http://www.cs.huji.ac.il/~etsman/papers/DiDi-PACT-2011.pdf

2) http://www.cs.utah.edu/~lepreau/osdi94/bala/abstract.html

3) http://www.helenos.org/doc/design/html.chunked/mm.html

4) http://publib.boulder.ibm.com/infocenter/zos/basics/index.jsp?topic=/com.ibm.zos.zconcepts/zconcepts_82.htm

5) http://books.google.com/books?id=g82fofiqa5IC&pg=PA440&lpg=PA440&dq=TLB+coherence+problem&source=bl&ots=CPnfetmhYl&sig=8M1TbFpDlE_YfEykNCK7LRU9p7s&hl=en&sa=X&ei=DNBzT9PmN5GJ0QHpyb3_Ag&ved=0CCsQ6AEwAQ#v=onepage&q=TLB%20coherence%20problem&f=false

=='''References'''==

<references></references>

Latest revision as of 03:27, 29 March 2012

Introduction

Virtual addresses are translated to absolute addresses using a hardware structure called the Translation Lookaside Buffer (TLB). A TLB accepts a Virtual Page Number and returns the corresponding Physical Page Number. The translation lookaside buffer performs other functions in addition to the basic address translation. The other functions include access control, program debugging support and operating system support for virtual memory.

What is a TLB?

Since all references in Level 0 systems are absolute accesses, these systems do not have TLBs. A TLB is typically not large enough to hold all the current translations. Translations for all pages in memory are stored in a memory structure called the Page Table. The TLB is organized as two parts. The instruction TLB (ITLB) is only used for instruction references, while the data TLB (DTLB) is only used for data references. A system may implement a combined TLB which is used for both instruction and data references. Additionally, translations are supported for large address ranges. Such translations are called block translations and are stored in a block TLB. Block translations map address ranges larger than a page. Block translations are useful in mapping virtual address ranges which do not get paged in and out. These block translations increase the virtual address range of the TLB thereby minimizing the virtual address translation overhead.

TLB Implementation<ref>http://sequoia.ict.pwr.wroc.pl/~iro/RISC/sm/www.hp.com/acd-18.html#HEADING18-0</ref>

A common approach to reducing the overhead of address translation in paging systems is to use a descriptor cache, better known as the translation lookaside buffer. The TLB contains associative memory in which it stores recently used page descriptors, that is, the (virtual address, page-map table (PMT) entry) pairs used by recent address translations (see #Definitions for PMT). When the processor initiates a memory reference, the address-translation hardware searches the TLB associatively by the page portion of the virtual address. When a match is found, the TLB furnishes the corresponding PMT entry. The physical address stored in the PMT entry forms the physical memory address. Protection is enforced by comparing task boundaries and access-rights bits stored in the PMT entry with the obtained address and the intended mode of memory access. The MMU (see #Definitions) flags discrepancies as protection violations and aborts the memory reference. In addition, a processor exception is raised to allow the operating system to abort the offending task.

TLBs generally have high hit ratios, in excess of 90 percent, and thus complete most address translations quickly. When a virtual address is not found in the TLB (a miss), the memory management hardware has to reference the PMTs in memory to complete the translation. The root pointer to the address-translation tree of a specific task is provided by the operating system as a part of the task-control block. The root pointer provides the base address of the next-level mapping table. The memory management unit (MMU) uses it to reference memory and to obtain the related PMT entry. The presence bit therein is inspected to establish whether the item that it points to is resident in (physical) memory. If not, a page-fault exception is raised, and the operating system fetches the missing item from secondary storage. When the target item is in main memory, the MMU checks whether it is the leaf node of the translation tree. If not, the process is repeated for each level of the hierarchy until the last level is reached. Only the leaf level contains pointers to page frames in main memory. Other pointers are indirect and serve as trail markers within the address-translation tree. Once the last level of the mapping hierarchy is reached, the MMU stores the obtained PMT entry into the TLB for future reuse. If the TLB is full, it replaces an existing entry to make room for the incoming one.
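
The walk described above can be sketched as a short loop. This is an illustrative model only: the dictionary layout (keys "present", "leaf", "frame", "next") and the names walk and PageFault are assumptions made for the example, not part of any real MMU interface.

```python
class PageFault(Exception):
    """Raised when a mapping-table entry is missing or not resident."""


def walk(root, indices):
    """Follow one index per level from the root table down to the leaf entry."""
    table = root
    for index in indices:
        entry = table.get(index)
        if entry is None or not entry["present"]:
            raise PageFault(index)  # the OS would fetch the item from secondary storage
        if entry["leaf"]:
            return entry["frame"]   # only leaf entries point at page frames
        table = entry["next"]       # indirect pointer: descend one level
    raise PageFault(indices)        # ran out of levels without reaching a leaf


# Two-level example: index 1 at the root, index 2 at the second level.
root = {1: {"present": True, "leaf": False,
            "next": {2: {"present": True, "leaf": True, "frame": 0x55}}}}
frame = walk(root, [1, 2])
```

On a real system the frame returned by the walk would then be stored in the TLB for reuse.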

TLBs commonly employ the least recently used (LRU) replacement policy. Simpler alternatives, such as FIFO and random, may be cheaper to implement but have inferior performance. Because virtual addresses are not unique across different address spaces, single-task TLBs must be flushed upon every task switch. The oncoming task must usually resort to loading the TLB with its entries through a succession of TLB misses. Some designers opt to alleviate this problem by using multitasking or multicontext TLBs. A multicontext TLB removes ambiguities by tagging each entry with a unique owner identity, usually that of the enclosing task. On translation, both the address and the owner identity must match for a given entry to produce a hit. In this way, the TLB need not be flushed upon task switches, and a rescheduled task can benefit from finding some of its entries in the TLB. In principle, TLBs in systems with hierarchical mapping tables should provide comparatively higher hit ratios to offset the longer translation times incurred by TLB misses. Increasing the number of entries, which also tends to increase the price, generally accomplishes a higher hit ratio.
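
A multicontext TLB with LRU replacement can be modelled in a few lines. The sketch below is an assumption-laden illustration (the class name TaggedTLB and its methods are hypothetical); it keys each entry by the pair (owner, virtual page) so that two tasks can hold the same virtual page without a flush.

```python
from collections import OrderedDict


class TaggedTLB:
    """Toy multicontext TLB: entries are tagged with their owner and replaced in LRU order."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()  # (owner, vpn) -> ppn, least recently used first

    def lookup(self, owner, vpn):
        key = (owner, vpn)
        if key not in self.entries:
            return None                   # miss: address or owner did not match
        self.entries.move_to_end(key)     # mark as most recently used
        return self.entries[key]

    def insert(self, owner, vpn, ppn):
        key = (owner, vpn)
        if key in self.entries:
            self.entries.move_to_end(key)
        elif len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        self.entries[key] = ppn
```

Because the owner is part of the key, a task switch needs no flush: the incoming task simply misses on entries it does not own.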

Here, X is the virtual page number to be translated into a physical page number, X' is the physical page number corresponding to X, and V is the virtual page number of a victim page (that is, a page to be evicted from the table).

TLB miss handling

Given a virtual address, the selected TLB is searched for an entry matching the Virtual Page Number. If the entry exists, the 20-bit Physical Page Number (contained in the TLB entry) is concatenated with the original 12-bit page offset to form a 32-bit absolute address. If no such entry exists, the TLB is updated by either software TLB miss handling or hardware TLB miss handling. In systems with software TLB miss handling, a TLB miss fault interruption routine performs the translation, explicitly inserts the translation and protection fields into the appropriate instruction TLB or data TLB, and restarts the interrupted instruction. To ensure the completion of instructions, the TLB must be organized to simultaneously hold all necessary translations. In implementations that provide hardware for TLB miss handling, the hardware attempts to find the virtual-to-physical page translation in the Page Table. If the hardware is successful, it inserts the translation and protection fields into the appropriate instruction or data TLB. No interruption occurs in this case. If the hardware is not successful, either because its search of the Page Table was not exhaustive or because the appropriate translation does not exist in the Page Table, an interruption occurs so that the software can complete the process.
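
The concatenation of a 20-bit Physical Page Number with a 12-bit offset amounts to a shift and an OR. The following sketch assumes a TLB modelled as a plain dictionary from virtual page number to physical page number; the function name translate is hypothetical.

```python
PAGE_OFFSET_BITS = 12
OFFSET_MASK = (1 << PAGE_OFFSET_BITS) - 1


def translate(tlb, vaddr):
    """Return the 32-bit absolute address for vaddr, or None on a TLB miss."""
    vpn = vaddr >> PAGE_OFFSET_BITS       # upper bits select the page
    offset = vaddr & OFFSET_MASK          # low 12 bits pass through unchanged
    ppn = tlb.get(vpn)
    if ppn is None:
        return None                       # miss: software or hardware handler takes over
    return (ppn << PAGE_OFFSET_BITS) | offset


tlb = {0x12345: 0xABCDE}
absolute = translate(tlb, 0x12345678)     # 0xABCDE678
```

A miss returns None here; in a real system it would trigger the software or hardware miss handling described above.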

TLB coherence

The cache-coherent part of a multiprocessor system is required to behave as if there were logically a single DTLB and a single ITLB. All TLBs in a multiprocessor system are required to broadcast purges, except PDTLBE and PITLBE, to all other TLBs. The originating processor's purge instruction suspends until all target processors complete the purge. A processor's TLB is simply a cache of the page table entries (PTEs) used for virtual-to-physical address translation. A PTE can come to reside in the TLBs of multiple processors due to either actual sharing of data or process migration. PTEs may be modified, for example when the page is swapped out or its protection is changed, leading to a direct analog of the cache coherence problem. A variety of solutions have been used for TLB coherence. Software solutions, through the operating system, are popular since TLB coherence operations are much less frequent than cache coherence operations. The exact solutions used depend on whether PTEs are loaded into the TLB directly by hardware or under software control, as well as on several other variables in how TLBs and operating systems are implemented. Hardware solutions are also used by some systems, particularly when TLB operations are not visible to the software. The next section provides a brief overview of four approaches to TLB coherence: virtually addressed caches, software TLB shootdown, address space identifiers (ASIDs), and hardware TLB coherence. Further details can be found in (Thompson et al. 1988; Rosenburg 1989; Teller 1990) and the papers referenced therein.

Approaches to avoid TLB coherence problem

Virtually addressed caches

TLBs, and hence the TLB coherence problem, can be avoided entirely by using virtually addressed caches. Address translation is now needed only on cache misses, so, particularly if the cache miss rate is small, we can use the page tables directly. PTEs are brought into the regular data cache when they are accessed and are therefore kept coherent by the cache coherence mechanism. However, when a physical page is swapped out of memory or its protection is changed, this is not visible to the cache coherence hardware, so the PTE must be flushed from the virtually addressed caches of all processors by the operating system. In addition, the coherence problem for virtually addressed caches must be solved. This approach was explored in the SPUR research project (Hill et al. 1986; Wood et al. 1986).

TLB shootdown

A second approach is called TLB shootdown. There are many variants that rely on different (but small) amounts of hardware support, usually including support for interprocessor interrupts and invalidation of individual TLB entries. The TLB coherence procedure is invoked by a processor, called the initiator, when it makes changes to PTEs that may be cached by other TLBs. Since changes to PTEs must be made by the operating system, the OS knows which PTEs are being changed, and it may also know which other processors might be caching them in their TLBs (conservatively, since entries may have been replaced). The OS kernel locks the PTEs being changed (or the relevant page table sections, depending on the granularity of locking) and sends interrupts to other processors that it thinks have copies. On being interrupted, the recipients disable interrupts, look at the list of page table entries being modified (which is in shared memory), and locally invalidate those entries from their TLBs. The initiator waits for them to finish, perhaps by polling shared memory locations, and then unlocks the page table sections. A different, somewhat more complex shootdown algorithm is used in the MACH operating system (Black et al. 1989).<ref>http://stackoverflow.com/questions/3748384/what-is-tlb-shootdown</ref>

TLB shootdown, the process of maintaining TLB consistency by invalidating entries when pages are unmapped, is one of the simplest operations in a multiprocessor OS that requires global coordination. It is also a short, but latency-critical, operation and so represents a worst-case comparison for a multikernel.
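
The basic shootdown procedure can be illustrated with a sequential Python model. It is a sketch, not an operating-system implementation: the names Cpu, shootdown and sharers are hypothetical, interrupts are modelled as direct method calls, locking is only indicated in comments, and sharers stands for the OS's conservative record of which processors may cache each PTE.

```python
class Cpu:
    def __init__(self):
        self.tlb = {}  # vpn -> ppn

    def invalidate(self, vpns):
        """Interrupt handler: drop the listed entries, then acknowledge."""
        for vpn in vpns:
            self.tlb.pop(vpn, None)
        return True


def shootdown(page_table, sharers, cpus, initiator, updates):
    """Apply updates (vpn -> new ppn) and invalidate possibly stale TLB entries."""
    targets = set()
    for vpn in updates:
        targets |= sharers.get(vpn, set())   # processors that may hold a copy
    targets.discard(initiator)
    page_table.update(updates)               # PTEs are changed while "locked"
    cpus[initiator].invalidate(updates)
    acks = {cpu_id for cpu_id in targets if cpus[cpu_id].invalidate(updates)}
    assert acks == targets                   # initiator waits for every recipient
    for vpn in updates:
        sharers[vpn] = set()                 # nobody caches the new PTE yet
    return targets                           # page table sections are then unlocked
```

Only the processors recorded as possible sharers are interrupted; a processor that never cached the entry is left undisturbed.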

In Linux and Windows, inter-processor interrupts (IPIs) are used: a core that wishes to change a page mapping writes the operation to a well-known location and sends an interrupt to every core that might have the mapping in its TLB. Each core takes the trap, acknowledges the IPI by writing to a shared variable, invalidates the TLB entry, and resumes. The initiating core can continue immediately after every IPI has been acknowledged, since by the time each core resumes to user space the TLB entry is guaranteed to be flushed. This has low latency, but can be disruptive as each core immediately takes the cost of a trap (about 800 cycles). The TLB invalidation itself is fast, taking 95-320 cycles on a current x86-64 core.

Barrelfish uses messages for shootdown. In the naive algorithm, the local monitor broadcasts invalidate messages to the others and waits for all the replies. One would expect this to show higher latency than the IPI approach, since the remote monitors handle the messages only when “convenient”. However, the message-passing paradigm makes it possible to improve on this approach without significantly restructuring the code. In particular, knowledge about the specific hardware platform, extracted from the system knowledge base at runtime, can be exploited to achieve very good TLB shootdown performance.<ref>http://research.microsoft.com/pubs/101903/paper.pdf</ref>

Address Space Identifier (ASID)

Some processor families, most notably the MIPS family from Silicon Graphics, use software-loaded rather than hardware-loaded TLBs, which means that the OS is involved not only in PTE modifications but also in loading a PTE into the TLB on a miss. In these cases, the coherence problem for process-private pages due to process migration can be solved using a third approach, that of ASIDs, which avoids interrupts and TLB shootdown. Every TLB entry has an ASID field associated with it to avoid flushing the entire TLB on a context switch (just as the process identifier is used in virtually addressed caches). In the case of the TLBs, however, ASIDs are like tags allocated dynamically by the OS on a per-processor basis, using a free pool to which they are returned when TLB entries are replaced; they are not associated with processes for their lifetime. One way to use the ASIDs, as was done in the IRIX 5.2 operating system, is as follows. The OS maintains an array for each process that tracks the ASID assigned to that process on each of the processors in the system. When a process modifies a PTE, the ASID of that process for all other processors is set to zero. This ensures that when the process is migrated to another processor, it will find its ASID to be zero there; the kernel will therefore allocate it a new one, thus preventing use of stale TLB entries. TLB coherence for pages truly shared by the processes is performed using TLB shootdown.
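
The per-process ASID array can be sketched as follows. This is a simplified illustration and not IRIX code: the class AsidManager is hypothetical, and a per-processor counter stands in for the free pool of ASIDs described above, so ASIDs are never recycled in this model.

```python
class AsidManager:
    """Toy model of per-process, per-processor ASID tracking (0 means no valid ASID)."""

    def __init__(self, num_cpus):
        self.num_cpus = num_cpus
        self.next_asid = [1] * num_cpus  # simplification: a counter, not a free pool
        self.asid_of = {}                # pid -> list of ASIDs, one per processor

    def asid_for(self, pid, cpu):
        """Called when pid is scheduled on cpu; allocates a fresh ASID if it has none."""
        table = self.asid_of.setdefault(pid, [0] * self.num_cpus)
        if table[cpu] == 0:
            table[cpu] = self.next_asid[cpu]
            self.next_asid[cpu] += 1
        return table[cpu]

    def pte_modified(self, pid, cpu):
        """pid changed a PTE while running on cpu: zero its ASID everywhere else."""
        table = self.asid_of.setdefault(pid, [0] * self.num_cpus)
        for other in range(self.num_cpus):
            if other != cpu:
                table[other] = 0
```

After a PTE change, the process keeps its ASID on the processor where it ran, but receives a new one elsewhere, so any old entries tagged with the previous ASID can no longer match.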

Figure shows the organization of virtually addressed caches.

In the figure, “ASID” means “address-space identifier,” which is called a process identifier (PID) in some books. The diagram shows the “V-index” overlapping the (virtual) page number. If it did not, we would not need a virtually addressed cache: we could do address translation in parallel with cache access using a physically addressed cache. Also, the V-index is not actually stored in the cache. Like the index (“set” or “line”) field in physically addressed caches, it is not stored, but just tells which line or set to look in for the data<ref>lec15 from NCSU ECE506</ref>.

Invalidate technique <ref>lec15 from NCSU ECE506</ref>

Finally, some processor families provide hardware instructions to invalidate other processors' TLBs. In the PowerPC family (Weiss and Smith 1994), the "TLB invalidate entry" instruction (tlbie) broadcasts the page address on the bus so that the snooping hardware on other processors can automatically invalidate the corresponding TLB entries without interrupting the processor. The algorithm for handling changes to PTEs is simple: the operating system first makes changes to the page table and then issues a tlbie instruction for the changed PTEs. If the TLB is hardware loaded (as it is in the PowerPC), the OS does not know which other TLBs might be caching the PTE, so the invalidation must be broadcast to all processors. Broadcast is well suited to a bus but undesirable for the more scalable systems with distributed networks.

Multiprocessor Considerations<ref> Milan Milenkovic's IEEE paper </ref>

In multiprocessor systems with shared virtual memory and multiple MMUs, a mapping descriptor can be simultaneously cached in multiple TLBs. Since the contents of a mapping descriptor can vary in response to changes of state in the related page in memory, multiple copies of a given descriptor may pose a consistency problem. Keeping all copies of a mapping descriptor consistent with each other is sometimes referred to as TLB coherence. In a milder form, the coherence problem exists even in uniprocessor systems in which the TLB and memory copies of a mapping descriptor should be identical. Moreover, the updating of a memory-based descriptor should be an atomic action that makes the descriptor consistent with itself. The TLB coherence problem shares many characteristics with its better known cache-coherence counterpart. As with caches, a crude way to deal with TLB coherence is to disallow TLB buffering of shareable descriptors. Forcing all mappings to complete by using the in-memory copy of a shared descriptor avoids the coherence problem at the cost of increased mapping delays.

Numerous other ways to deal with the problem require some sort of hardware assistance such as snooping TLBs, remote TLB entry invalidation, and selective disabling or flushing of TLB entries. In systems that have both caches and TLBs, the two coherence problems are interdependent in perhaps non-obvious ways. For example, disallowing placement of shareable entries into TLBs may not achieve TLB coherence if caching of the mapping descriptors can occur and cache coherence is not enforced. Consistency and coherence of shared entries in combined MMU/cache/memory systems still pose an open research problem. If the past is any indicator, some time will elapse before a few dominant solutions emerge and find their way into commercial implementations of cache and MMU units. In the meantime, designers of multi microprocessor systems will have to resort to custom solutions.

Techniques for maintaining TLB Coherence in Multiprocessors

TLB Prefetchers<ref>Abhishek Bhattacharjee, Margaret R. Martonosi. “Inter-Core Cooperative TLB Prefetchers”</ref>

Introduction

This technique aims to exploit the inter-core TLB miss redundancy in parallel applications running on chip multiprocessors (CMPs). It is based on studies that show the similarity in the miss patterns that exist among multiple cores. This similarity takes two forms: first, the same virtual pages missing on multiple cores, and second, predictable strides between the virtual pages causing TLB misses on different cores. As a result, there are good opportunities for eliminating the common TLB miss streams across the various cores. These techniques can reduce the misses on both I-TLBs and D-TLBs.

The inter-core cooperative TLB prefetchers aim to improve performance by exploiting the common TLB miss patterns among the cores. These prefetchers employ two techniques:

(i) Leader-Follower prefetching: Exploits common TLB miss virtual pages among cores by pushing TLB mappings from the leader to the other cores, reducing TLB misses. This technique is said to eliminate almost 57% of the TLB misses across emerging parallel workloads. The key is to identify the miss patterns and to avoid pushing the mapping onto uninterested cores.

(ii) Distance Based prefetching: Exploits stride-predictable TLB misses across CMP cores. This technique tracks the distance (difference) between successive TLB miss virtual pages and attempts to catch the repetitions. On every TLB miss, the distance between the last miss virtual page and the current miss virtual page is used to predict the next expected distance, and the prefetch is then executed for that virtual page. This technique is said to eliminate almost 89% of the TLB misses across emerging parallel workloads.
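
The idea of predicting the next distance from the current one can be sketched with a small single-core model. It is an illustration under stated assumptions rather than the design from the paper: the class DistancePredictor is hypothetical, the distance is taken as the current miss page minus the last miss page (a sign convention chosen for this sketch), and the table is unbounded and private instead of a shared, fixed-size structure.

```python
from collections import defaultdict


class DistancePredictor:
    """Remembers which distances followed each distance and predicts pages from them."""

    def __init__(self):
        self.table = defaultdict(list)  # distance -> distances seen right after it
        self.last_vp = None
        self.last_dist = None

    def on_miss(self, vp):
        """Record a TLB miss on virtual page vp and return pages worth prefetching."""
        predictions = []
        if self.last_vp is not None:
            dist = vp - self.last_vp
            if self.last_dist is not None and dist not in self.table[self.last_dist]:
                self.table[self.last_dist].append(dist)   # learn the pair (last, current)
            predictions = [vp + d for d in self.table.get(dist, [])]
            self.last_dist = dist
        self.last_vp = vp
        return predictions


predictor = DistancePredictor()
history = [predictor.on_miss(vp) for vp in (10, 12, 15, 17, 20)]
```

With misses on pages 10, 12, 15, 17 and 20, the distances alternate between 2 and 3, and from the fourth miss onwards the model predicts the next page correctly (20, then 22).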

In terms of CMPs, these two prefetching techniques aim to minimize the Inter Core Shared (ICS) TLB misses and the Inter Core Predictable Stride (ICPS) TLB misses respectively. These are defined as:

- Inter Core Shared (ICS) TLB misses

- In an N-core CMP, a TLB miss on a core is ICS if it is caused by access to a translation entry with the same virtual page, physical page, context ID (process ID), protection information, and page size as the translation accessed by a previous miss on any of the other N-1 cores, within a 1 million instruction window. The number of cores that see this translation is defined as the number of sharers.

- Inter Core Predictable Stride (ICPS) TLB misses

- In an N-core CMP, a TLB miss is ICPS with a stride of S if its virtual page V+S differs by S from the virtual page V of the preceding matching miss (context ID and page size must also match). We require this match to occur within a 1 million instruction window, and the stride S must be repetitive and prominent to be categorized as ICPS.

Figure 6 shows the prevalence of these two types of misses on the studied workloads.

Challenges in Implementation

With respect to CMPs, several complications arise in implementing schemes that dynamically adapt to diverse TLB miss patterns. For instance, some benchmarks may see a dominance of ICS misses while others may experience more strided ICPS misses. Moreover, the actual strides among the benchmarks may vary significantly. The other challenge lies in the timeliness of the prefetching, which must be early enough to hide the latency of the TLB misses.

Algorithm

Leader-Follower Prefetching

Assume an N-core CMP with a per-core D-TLB. The prefetched entries are not inserted directly into the TLBs but into prefetch buffers (PBs) that are looked up concurrently with the TLBs. This limits the damage done by prefetching too early, which could otherwise evict useful TLB entries. Each PB entry contains a Valid bit and a Prefetch Type bit (to indicate whether the entry arose from Leader-Follower or Distance Based cross-core prefetching) along with the translation entry information. On a PB hit, the entry is moved into the TLB. The PB uses a FIFO replacement policy.

The algorithm can be explained in the two example cases depicted in Figure 7.

Case 1: Suppose we encounter a D-TLB miss but PB hit on core 0 (step 1a). In response (step 1b), we remove the entry from core 0's PB and add it to its D-TLB.

Case 2: Suppose instead that core 1 sees a D-TLB and PB miss (step 2a). In response, the page table is walked, and the translation is located and refilled into the D-TLB. In step 2b, this translation is also prefetched, or pushed, into the PBs of the other cores, with the aim of eliminating future ICS misses on the other cores.
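
The two cases can be modelled with a short Python sketch. It is a simplification made for illustration: the names Core and access are hypothetical, the page table is a plain dictionary, the Valid and Prefetch Type bits are omitted, and every miss is pushed to all other cores without the filtering of uninterested cores mentioned earlier.

```python
from collections import OrderedDict


class Core:
    def __init__(self, pb_size):
        self.tlb = {}            # vpn -> ppn
        self.pb = OrderedDict()  # FIFO prefetch buffer: vpn -> ppn
        self.pb_size = pb_size

    def pb_insert(self, vpn, ppn):
        if vpn in self.pb:
            return
        if len(self.pb) >= self.pb_size:
            self.pb.popitem(last=False)  # FIFO: drop the oldest prefetch
        self.pb[vpn] = ppn


def access(cores, core_id, vpn, page_table):
    """Simulate one D-TLB access and report what happened."""
    core = cores[core_id]
    if vpn in core.tlb:
        return "tlb_hit"
    if vpn in core.pb:                       # Case 1: D-TLB miss, PB hit
        core.tlb[vpn] = core.pb.pop(vpn)
        return "pb_hit"
    ppn = page_table[vpn]                    # Case 2: walk the page table
    core.tlb[vpn] = ppn
    for other_id, other in enumerate(cores):
        if other_id != core_id:
            other.pb_insert(vpn, ppn)        # push to the followers' PBs
    return "miss"
```

A miss on one core turns the same access on another core into a PB hit, which is the ICS miss the scheme aims to remove.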

Distance Based Cross Core Prefetching

We again assume an N-core system with prefetches placed into per-core PBs. The steps of the approach are as follows:

Step 1: On a D-TLB access, the PB is scanned concurrently to check for the entry. If there is a PB hit, we go to step 2, otherwise we skip directly to step 3.

Step 2: On a PB hit, the entry is removed from the PB and inserted into the D-TLB (in our example, for core 0). We then move to step 3 and follow the same steps as the PB miss case.

Step 3: We now check if the context ID of the current TLB miss is equal to the context ID of the last TLB miss (held in the Last Context Reg.). If so, the current distance is calculated by subtracting the current TLB miss virtual page from the last TLB miss virtual page (held in the Last VP Reg.) and we move to step 4. If there is no match, we skip directly to step 8.

Step 4: The core (in our example, core 0) sends the current distance, the last distance (from the Last Dist. Reg.), the CPU number, and the current context to the Distance Table (DT), which caches frequently used distance-pairs and is shared by all the cores. The scheme places the DT next to the shared L2 cache.

Step 5: The DT uses the current distance to extract predicted future distances from the stored distance-pairs. It also updates itself using the last distance and current distance.

Step 6: A maximum of P predicted distances (the current distance may match with multiple distance-pairs) are sent from the DT back to the requesting core (core 0 in our example), where they are entered into the Distance Buffer (DB). The DB is a FIFO structure with size P to hold all newly predicted distances.

Step 7: The predicted distances in the DB are now used by the core (core 0 in our case) to calculate the corresponding virtual pages and walk the page table. When these prefetched translations are found, they are inserted or pulled into the PB (unlike the Leader-Follower case, this is a pull mechanism since the core with the TLB miss prefetches further items to itself rather than the others).

Step 8: The Last Ctxt., Last VP, and Last Dist. Regs are updated with the current context, current virtual page, and current distance.

A number of options exist for the page table walk in step 7. A hardware-managed TLB could use its hardware state machine without involvement from the workload, which could continue executing in parallel. In contrast, a software-managed TLB may execute the page table walk within the interrupt caused by the initiating TLB miss. These steps are illustrated in Figure 8.
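Steps 3 through 8 can be sketched as follows. This is a minimal model, not the scheme's actual hardware: the class names, the value of `P`, and the dictionary-based Distance Table are assumptions. The sketch takes the distance as the current page minus the last page so that a predicted distance can be added to the current page; this sign convention is also an assumption. The PB lookup of steps 1 and 2, the CPU number, and the context tag sent to the DT are omitted.

```python
from collections import defaultdict, deque

P = 2  # maximum predicted distances per lookup (value assumed)


class DistanceTable:
    """Shared table of distance-pairs: distance -> distances seen after it."""

    def __init__(self):
        self.pairs = defaultdict(list)

    def lookup_and_update(self, last_dist, cur_dist):
        # Step 5: predict future distances from the current distance.
        predicted = list(self.pairs[cur_dist][:P])
        # Step 5: record that cur_dist followed last_dist.
        if last_dist is not None and cur_dist not in self.pairs[last_dist]:
            self.pairs[last_dist].append(cur_dist)
        return predicted


class Core:
    """Per-core registers and prefetch buffer (hypothetical model)."""

    def __init__(self):
        self.last_ctx = None   # Last Context Reg.
        self.last_vp = None    # Last VP Reg.
        self.last_dist = None  # Last Dist. Reg.
        self.pb = {}           # prefetch buffer: vpage -> ppage

    def on_tlb_miss(self, ctx, vpage, dt, page_table):
        cur_dist = None
        # Step 3: only proceed if the context matches the last miss.
        if ctx == self.last_ctx and self.last_vp is not None:
            cur_dist = vpage - self.last_vp
            # Steps 4-6: query the shared DT and fill the Distance Buffer.
            db = deque(dt.lookup_and_update(self.last_dist, cur_dist), maxlen=P)
            # Step 7: pull the predicted translations into this core's PB.
            for dist in db:
                target = vpage + dist
                if target in page_table:
                    self.pb[target] = page_table[target]
        # Step 8: update the registers (distance is unknown after a mismatch).
        self.last_ctx, self.last_vp, self.last_dist = ctx, vpage, cur_dist
```

Because the DT is shared, a distance pattern learned from one core's misses can trigger prefetches for another core that shows the same distance.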

Poison bit technique<ref>http://www.google.com/patents?hl=en&lr=&vid=USPAT6182195&id=u50GAAAAEBAJ&oi=fnd&dq=TLB+coherence+in+multiprocessors&printsec=abstract#v=onepage&q=TLB%20coherence%20in%20multiprocessors&f=false </ref>

Background

Translation updates, or TLB coherence actions, are most naturally handled in software, and much of the cost comes from the need to synchronize (i.e., interrupt) all processors that have entries in their TLBs invalidated or updated. This scheme is a system for maintaining coherency between the virtual-to-physical memory translations of multiple requestors in a shared-memory multiprocessor. It is said to have advantages over Teller's TLB validation algorithm and the Wisconsin Wind Tunnel (WWT) scheme, as explained below.

Summary of Invention

Each memory block is associated with a Poison bit that is set when the virtual-to-physical address translation of that memory block is stale. The memory controller associated with that memory block generates an exception when any requestor accesses the block while its Poison bit is set. A requestor may be a processor, an input/output device, or the like. In response to the exception, the requestor updates its memory translation for that block with the virtual address translation corresponding to the new physical location of the block. This scheme can be applied to a cached or a non-cached system. In a cached system with a cache in each processor, all the cached copies are invalidated when the Poison bit is set. The system generally supports a poisoned read operation that returns the current copy of the block while setting the block's Poison bit. The advantage over Teller's method is reduced memory overhead: this scheme needs a single state encoding, whereas Teller's method needs generation counts that must also be sent to the memory. The advantage over WWT is that WWT does not address TLB consistency at all. Translation coherence in this method is maintained lazily, meaning that each processor updates its translation independently, and only if it has accessed a stale translation.

Flowchart & Pictorial description

- Proc 0, Proc 1 and Proc 2 designate three nodes with three corresponding memory locations and an interconnection network.

- A TLB entry is shown by the designation “tlb”.

- Figure A: In all processors, virtual address Vx is translated to physical page P0. The value of P0 is AA55.

- Figure B: Proc 0 copies P0 to P1, which sets the Poison bit of P0 to 1 and purges location P0 from the caches of all three processors. After completion, Proc 0 updates its page table and its TLB entry so that Vx points to P1. Setting the Poison state and reading the current value of the location must be a single atomic operation.

- Figure C: When Proc 1 and Proc 2 use their stale TLB translations, they must first go to memory, because the move invalidated their cached copies of P0. On receiving a bus error, Proc 1 and Proc 2 update their TLB translations for Vx (asynchronously to one another).

- Figure D: When it can be guaranteed that there are no more stale entries for P0, the P0 frame can be reused by resetting the Poison bit.
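The sequence in Figures A through C can be sketched as a small simulation. This is an illustrative model only: the `Memory`, `Proc`, and `migrate` names are assumptions, caches are not modeled, and a Python exception stands in for the bus error raised by the memory controller.

```python
class BusError(Exception):
    """Stands in for the exception raised on access to a poisoned block."""


class Memory:
    def __init__(self):
        self.data = {}       # physical page -> value
        self.poison = set()  # physical pages whose Poison bit is set

    def read(self, ppage):
        if ppage in self.poison:
            raise BusError(ppage)
        return self.data[ppage]

    def poisoned_read(self, ppage):
        """Return the current value and set the Poison bit.

        In hardware this must be one atomic operation.
        """
        value = self.data[ppage]
        self.poison.add(ppage)
        return value


class Proc:
    def __init__(self, mem, page_table):
        self.mem = mem
        self.page_table = page_table  # shared page table: vaddr -> ppage
        self.tlb = {}

    def load(self, vaddr):
        if vaddr not in self.tlb:
            self.tlb[vaddr] = self.page_table[vaddr]
        try:
            return self.mem.read(self.tlb[vaddr])
        except BusError:
            # Lazy update: refresh the stale translation only when it is used.
            self.tlb[vaddr] = self.page_table[vaddr]
            return self.mem.read(self.tlb[vaddr])


def migrate(proc, vaddr, new_ppage):
    """Figure B: copy the page, poison the old frame, update the mover."""
    old_ppage = proc.page_table[vaddr]
    proc.mem.data[new_ppage] = proc.mem.poisoned_read(old_ppage)
    proc.page_table[vaddr] = new_ppage
    proc.tlb[vaddr] = new_ppage
```

In this model the other processors keep their stale entries until their next access to the page, at which point each one refreshes its own translation without any interrupt from the mover.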

UNITD Coherence<ref>http://people.ee.duke.edu/~sorin/papers/hpca10_unitd.pdf</ref>

UNITD (Unified Instruction/Translation/Data) is a hardware coherence framework that integrates translation coherence into the existing cache coherence protocol. Unlike the software TLB coherence schemes described above, it is one of the few hardware-based approaches to maintaining TLB coherence in multiprocessor environments. It is said to have no impact on the cache coherence protocol and not to increase its complexity.

Benefits of Hardware Coherence

- Reduces the performance impact of TLB coherence

- Provides a cleaner interface between the architecture and the OS

- By decoupling translation coherence from the OS, hardware TLB coherence can be used to support designs that use TLBs in non-processor components such as network cards or processing elements

Conceptual Framework

UNITD is a framework for unifying TLB coherence with cache coherence in one hardware protocol. TLBs, like other caches, participate in the coherence protocol in the same way as coherent, read-only instruction caches. With respect to the coherence protocol, TLB entries are read-only (translations are never modified in the TLBs themselves), and thus TLB entries need only two coherence states: Shared (read-only) and Invalid. A new translation inserted into a TLB is marked as Shared and can be accessed by the local core as long as it is in the Shared state. The translation remains in this state until the TLB receives a coherence message invalidating it. The translation is then Invalid, and subsequent memory accesses that depend on it will miss in the TLB and reacquire the translation from the memory system.

One of the main differences between caches and TLBs is that cache coherence is based on the physical addresses of blocks, but a translation cached in a TLB is not directly addressable by the physical address at which it resides (i.e., the physical address of the PTE defining the translation, not to be confused with the physical address to which the translation maps a virtual address). UNITD must therefore be able to perform coherence lookups in the TLB based on the physical addresses of PTEs. To overcome this key difference between TLBs and caches, UNITD must address two issues:

- Issue #1: For each translation in a TLB, it must discover the physical address of the PTE associated with that translation.

- Issue #2: It must augment the TLBs such that they can be accessed with a physical address.

Addressing UNITD issues

In systems with flat page tables, associating a translation with its PTE’s physical address assumes there is a one-to-one association between translations and PTEs. However, this assumption is less obvious for systems using hierarchical page tables, because the translation is defined by a combination of multiple PTEs in the hierarchy. Figure 4<ref>http://people.ee.duke.edu/~sorin/papers/hpca10_unitd.pdf</ref> illustrates the translation on an IA32 system.

Thus, translation coherence should be enforced when a modification is made to any of the PTEs on which the translation depends. Changes to mappings and changes to the metadata bits are the two types of possible PTE modifications. In the case of mapping changes, the memory range that the PTE previously mapped must be invalidated. The pages in this range must also be cleared for security reasons, so that reused memory does not expose any previous contents. With respect to the metadata bits, any unsafe changes (i.e., to the permission bits) must be propagated down to the last-level PTE. Thus, translation coherence only needs to detect changes to the last-level PTE that the translation depends on, and so, independent of the structure of the page tables, a translation is identifiable through its last-level PTE address.
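As an illustration of what "last-level PTE address" means in a hierarchical table, the sketch below computes it for a two-level IA-32 walk with 4 KB pages and 4-byte entries (non-PAE paging). The function name and the dictionary standing in for physical memory are assumptions made for the example, and present-bit and large-page checks are left out.

```python
PAGE_SHIFT = 12   # 4 KB pages
INDEX_MASK = 0x3FF  # 10-bit index at each level
ENTRY_SIZE = 4    # bytes per page-directory or page-table entry


def last_level_pte_paddr(vaddr, page_dir_base, memory):
    """Return the physical address of the last-level PTE that maps `vaddr`.

    `memory` is a dict from physical address to 32-bit entry value,
    standing in for physical memory (hypothetical model).
    """
    pde_index = (vaddr >> 22) & INDEX_MASK
    pte_index = (vaddr >> PAGE_SHIFT) & INDEX_MASK
    pde = memory[page_dir_base + pde_index * ENTRY_SIZE]
    page_table_base = pde & ~0xFFF  # drop the flag bits in the low 12 bits
    return page_table_base + pte_index * ENTRY_SIZE
```

The returned address is the tag that a TLB entry would need to carry so that a coherence message for that address can find the translation.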

To perform coherence lookups in the TLBs, UNITD needs to access the TLBs with physical addresses and invalidate the translations associated with the PTEs that reside at those physical addresses. Assuming a one-to-one correspondence between translations and PTEs, a TLB translation moves to Invalid whenever the PTE defining the translation is modified.
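A TLB that can be searched by PTE physical address, with the two coherence states Shared and Invalid, might be modeled as below. This is a software sketch under assumptions: the class and method names are hypothetical, the linear search stands in for an associative hardware lookup, and capacity and replacement are not modeled.

```python
from dataclasses import dataclass


@dataclass
class TlbEntry:
    vpage: int
    ppage: int
    pte_paddr: int       # physical address of the defining last-level PTE
    shared: bool = True  # True = Shared, False = Invalid


class UnitdTlb:
    """TLB searchable by virtual page and by PTE physical address."""

    def __init__(self):
        self.entries = []

    def fill(self, vpage, ppage, pte_paddr):
        # A newly inserted translation starts in the Shared state.
        self.entries.append(TlbEntry(vpage, ppage, pte_paddr))

    def translate(self, vpage):
        for entry in self.entries:
            if entry.shared and entry.vpage == vpage:
                return entry.ppage
        return None  # TLB miss: reacquire from the memory system

    def snoop_invalidate(self, paddr):
        """Coherence lookup: invalidate translations whose PTE is at `paddr`."""
        for entry in self.entries:
            if entry.pte_paddr == paddr:
                entry.shared = False
```

A write to a PTE would then appear to the TLB as an ordinary invalidation for that physical address, with no software shootdown involved.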

Definitions

1) MMU - A memory management unit, sometimes called paged memory management unit (PMMU), is a computer hardware component responsible for handling accesses to memory requested by the CPU. Its functions include translation of virtual addresses to physical addresses (i.e., virtual memory management), memory protection, cache control, bus arbitration and in simpler computer architectures (especially 8-bit systems) bank switching.

2) PMT - In operating systems that use virtual memory, every process is given the impression that it is working with large, contiguous sections of memory. In reality, each process' memory may be dispersed across different areas of physical memory, or may have been paged out to a backup storage (typically the hard disk). When a process requests access to its memory, it is the responsibility of the operating system to map the virtual address provided by the process to the physical address where that memory is stored. The page-map table (PMT) is where the operating system stores its mappings of virtual addresses to physical addresses.

See Also

1) http://www.cs.huji.ac.il/~etsman/papers/DiDi-PACT-2011.pdf

2) http://www.cs.utah.edu/~lepreau/osdi94/bala/abstract.html

3) http://www.helenos.org/doc/design/html.chunked/mm.html

References

<references></references>