CSC/ECE 506 Spring 2012/8a fu: Difference between revisions


With the simple rules of the '''[http://en.wikipedia.org/wiki/MSI_protocol MSI]''' protocol enforced by hardware, efficient synchronization and efficient data sharing are achieved in a simple shared memory model of parallel processing in the 4D-MP graphics superworkstation.

===Implementation Complexities===

The MSI protocol has one serious drawback when programs execute read-then-write sequences. Each read-then-write involves two bus transactions: a BusRd followed by a BusRdX. The second transaction is issued even when no other cache holds the block, which wastes a large amount of bandwidth. Therefore, most new machines do not implement the MSI protocol. Instead, they use variants of it, such as the MOSI and MESI protocols, to reduce the amount of traffic on the coherence interconnect.

<br/>

<br/>

==Synapse protocol==

In the Synapse protocol, the '''M''' state is called the '''D''' (Dirty) state. The following is the state transition diagram for the Synapse protocol:

<center>[[Image:Synapse699.jpeg]]</center>

<br/>

===Real Architectures using Synapse===

===Implementation Complexities===

In the Synapse system, memory contention is reduced by designing a processor cache that employs a non-write-through algorithm to minimize bandwidth between cache and shared memory. The Synapse Expansion Bus includes an ownership-level protocol between processor caches.[[#References|<sup>[24]</sup>]]

<br/>

<br/>

==[http://en.wikipedia.org/wiki/MESI_protocol MESI]==

===Real Architectures using MESI===

====CMP Implementation in Intel Architecture====

Intel's [http://en.wikipedia.org/wiki/Pentium_Pro Pentium Pro] microprocessor, introduced in 1995, was the first Intel architecture microprocessor to support symmetric multiprocessing in various multiprocessor configurations. SMP with the MESI protocol was used consistently until the introduction of the 45-nm Hi-k Core microarchitecture in Intel's quad-core x86-64 Nehalem-EP. The 45-nm Hi-k Intel Core microarchitecture uses a new interconnect framework called the [http://en.wikipedia.org/wiki/Intel_QuickPath_Interconnect QuickPath Interconnect], a point-to-point interconnection technology based on a distributed shared memory architecture. It uses a modified version of the MESI protocol called [http://en.wikipedia.org/wiki/MESIF_protocol MESIF], which introduces the additional Forward state.[[#References|<sup>[3]</sup>]] (The state diagram of the MESI transitions that occur within the Pentium's data cache can be found on page 63 of [http://books.google.com/books?id=TVzjEZg1--YC&printsec=frontcover&source=gbs_ge_summary_r&cad=0#v=onepage&q&f=false Pentium Processor System Architecture: Second Edition] by Don Anderson, Tom Shanley, MindShare, Inc.)

<br/>

<br/>

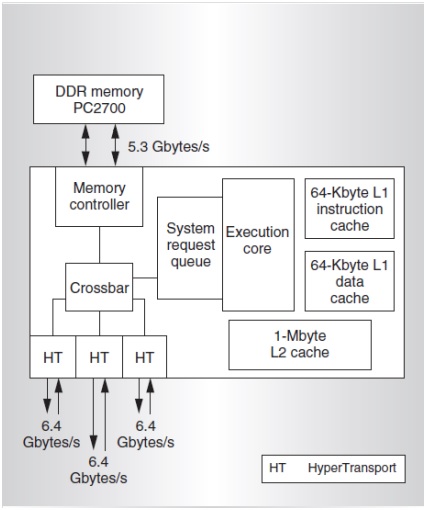

The CMP implementation on the Intel Pentium M processor contains a unified on-chip L1 cache with the processor, a Memory/L2 access control unit, a prefetch unit, and a [http://en.wikipedia.org/wiki/Front-side_bus Front Side Bus (FSB)]. Processor requests are first sought in the L2 cache. On a miss, they are forwarded to main memory via the FSB. The Memory/L2 access control unit serves as a central point for maintaining coherence within the core and with the external world. It contains a snoop control unit that receives snoop requests from the bus and performs the required operations on each cache (and internal buffers) in parallel. It also handles RFO requests (BusUpgr) and ensures the operation continues only after it guarantees that no other version of the cache line exists in any other cache in the system.[[#References|<sup>[3]</sup>]] The CMP implementation on the [http://en.wikipedia.org/wiki/Intel_Core Intel Core Duo] contains duplicated processors with on-chip L1 caches, an L2 controller to handle all L2 cache requests and snoop requests, a bus controller to handle data and I/O requests to and from the FSB, a prefetching unit, and a logical unit to maintain fairness between requests coming from each processor to the L2 cache.[[#References|<sup>[3]</sup>]]

<br/>

<br/>

The Intel bus architecture has been evolving to accommodate the demands of scalability while using the same MESI protocol: from a single shared bus to '''dual independent buses (DIB)''', which doubled the available bandwidth, and then to the logical conclusion of DIB, '''dedicated high-speed interconnects (DHSI)'''. The DHSI-based platforms use four FSBs, one for each processor in the platform. In both DIB and DHSI, a snoop filter in the chipset caches snoop information, thereby significantly reducing the broadcasting needed for snoop traffic on the buses. With the production of processors based on the next-generation 45-nm Hi-k Intel Core microarchitecture, the [http://en.wikipedia.org/wiki/Xeon Intel Xeon] processor fabric will transition from DHSI, with the memory controller in the chipset, to a distributed shared memory architecture using Intel QuickPath Interconnects and the MESIF protocol.[[#References|<sup>[3]</sup>]]

====ARM MPCore====

The [http://www.arm.com/products/processors/classic/arm11/arm11-mpcore.php ARM11 MPCore] and [http://en.wikipedia.org/wiki/ARM_Cortex-A9_MPCore Cortex-A9 MPCore] processors support the MESI cache coherency protocol.[[#References|<sup>[19]</sup>]] ARM MPCore defines the states of the MESI protocol it implements as:

===Implementation Complexities===

One implementation complexity already mentioned is the inefficiency of task migration in MESI that the ARM MPCore addresses.[[#References|<sup>[19]</sup>]] Another possible implementation complexity is found during the replacement of a cache line: one possible MESI implementation requires a message to be sent to main memory when a cache line is flushed (i.e. an E to I transition), as the line was exclusively in one cache before it was removed. It is possible to avoid this replacement message if the system is designed so that the flush of a modified (exclusive) line requires an acknowledgment from main memory. However, this requires the flush to be stored in a 'write-back' buffer until the reply arrives (to ensure the change is successfully propagated to memory).[[#References|<sup>[23]</sup>]]

<br/>

<br/>

==MESIF==

Invented by Intel, the MESIF protocol consists of five states: Modified (M), Exclusive (E), Shared (S), Invalid (I) and Forward (F). On a read request, only the cache holding the line in the F state responds, while all caches holding it in the S state remain dormant. Hence, by designating a single cache line to '''respond to requests''', coherency traffic is substantially reduced when multiple copies of the data exist. Also, on a read request, the responding line transitions from the F state to the S state. That is, when a cache line in the '''F''' state is '''copied''', the F state '''migrates''' to the '''newer copy''', while the '''older''' one drops back to '''S'''. Moving the F state to the new copy '''exploits''' both '''temporal and spatial locality'''. Because the newest copy of the cache line is always the one in the F state, the line in the F state is very unlikely to be evicted from the caches. This takes advantage of the temporal locality of the request. The second advantage is that if a particular cache line is in high demand due to spatial locality, the bandwidth used to transmit that data is spread across several nodes.

All M to S and E to S state transitions now become '''M to F''' and '''E to F''' transitions.

The '''F state''' is '''different''' from the '''Owned state''' of the MOESI protocol in that it is '''not''' a unique copy: a valid copy is also stored in memory. Thus, unlike the Owned state of the MOESI protocol, in which the data in the O state is the only valid copy of the data, the data in the F state can be evicted or converted to the S state, if desired.

The state transition table is shown below.

{| class="wikitable" border="1"
|-
| '''Cache Line State:'''
| '''Clean / Dirty'''
| '''May Write?'''
| '''May Forward?'''
| '''May Transition To?'''
|-
| M - Modified
| Dirty
| Yes
| Yes
| -
|-
| E - Exclusive
| Clean
| Yes
| Yes
| MSIF
|-
| S - Shared
| Clean
| No
| No
| I
|-
| I - Invalid
| -
| No
| No
| -
|-
| F - Forward
| Clean
| No
| Yes
| SI
|}
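The read path described in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Intel's implementation: `State`, `FORWARDERS`, and `read_miss` are hypothetical names, a dirty line is assumed to be written back to memory when its holder drops to S, and a requester that finds only S copies is assumed to receive its data from memory and take the F state.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"
    F = "Forward"


# States that the table marks as "May Forward? Yes".
FORWARDERS = (State.M, State.E, State.F)


def read_miss(states, requester):
    """Apply a read miss by `requester` to `states` (cache id -> State).

    Returns the id of the cache that forwards the line, or None when
    memory has to supply it.
    """
    others = {c: s for c, s in states.items() if c != requester}
    # Only one cache may respond; caches holding the line in S stay silent.
    responder = next((c for c, s in others.items() if s in FORWARDERS), None)
    if responder is not None:
        # The older copy drops back to S (assumption: a dirty line is
        # written back first) and the F state migrates to the newer copy.
        states[responder] = State.S
        states[requester] = State.F
    elif any(s is State.S for s in others.values()):
        # Assumption of this sketch: with no forwarder left, memory
        # supplies the data and the requester becomes the new forwarder.
        states[requester] = State.F
    else:
        # No other cache holds the line.
        states[requester] = State.E
    return responder
```

Starting from `{0: State.E, 1: State.I, 2: State.I}`, a read miss by cache 1 is served by cache 0, which drops to S while cache 1 takes F; a later read miss by cache 2 is served by cache 1, so at most one cache holds the line in F at any time.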

===Real Architectures using MESIF===

The MESIF protocol is implemented in the Intel QuickPath Interconnect (QPI), a high-speed, packetized, point-to-point interconnect used in Intel's Core i series processors starting in 2008. The narrow high-speed links stitch together processors in a distributed shared memory-style platform architecture. Compared with front-side buses, it offers much higher bandwidth with low latency. QPI has an efficient architecture allowing more interconnect performance to be achieved in real systems. It has a snoop protocol optimized for low latency and high scalability, as well as packet and lane structures enabling quick completions of transactions.[[#References|<sup>[2]</sup>]]

More information on the QuickPath Interconnect and MESIF protocol can be found at

'''[http://www.intel.com/technology/quickpath/introduction.pdf Introduction to QuickPath Interconnect]'''

===Implementation Complexities===

In the MESIF protocol, only one agent can have a cache line in the F state at any given time; the other agents are allowed to have copies in the S state. Even when a cache line has been forwarded in this state, the home agent still needs to respond with a completion to allow retirement of the resources tracking the transaction.

<br/>

<br/>

==MOESI==

[[File:MOESI.jpg|500px|thumb|center|MOESI state transition diagram]]

===Real Architectures using MOESI===

The following systems implement the MOESI coherence protocol:

1. AMD64 architecture, including Opteron, Athlon 64, and Phenom processors.

2. HP AlphaServer GS320, which is based on Alpha EV68 microprocessors.

===Implementation Complexities===

When the MOESI protocol is implemented on a real architecture such as the AMD K10 series, some modifications or optimizations are made to the protocol to allow more efficient operation for specific program patterns. For example, the [http://en.wikipedia.org/wiki/AMD_Phenom AMD Phenom] family of microprocessors (Family 0x10), which is AMD's first generation to incorporate 4 distinct cores on a single die and the first to have a cache that all the cores share, uses the MOESI protocol with some optimization techniques incorporated.

It focuses on a small subset of compute problems which behave like Producer and Consumer programs. In such a computing problem, a thread of a program running on a single core produces data, which is consumed by a thread that is running on a separate core. With such programs, it is desirable to get the two distinct cores to communicate through the shared cache, to avoid round trips to/from main memory. The '''MOESI''' protocol that the [http://en.wikipedia.org/wiki/AMD_Phenom AMD Phenom] cache uses for cache coherence can also limit bandwidth. Hence, by keeping the cache line in the '''‘M’''' state for such computing problems, we can achieve better performance.

You can find more information on how this is implemented, and on various other optimizations, in the manual [http://www.amd.com/us-en/assets/content_type/white_papers_and_tech_docs/40546.pdf Software Optimization guide for AMD 10h Processors]

<br/>

<br/>

==Dragon Protocol==

The Dragon protocol implements snoopy caches that provide the appearance of a uniform memory space to multiple processors. Here, each cache listens to 2 buses: the processor bus and the memory bus. The caches are also responsible for address translation, so the processor bus carries virtual addresses and the memory bus carries physical addresses. The Dragon system was designed to support 4 to 8 Dragon processors.

<br/>

<br/>

=[http://en.wikipedia.org/wiki/Instruction_prefetch Instruction Prefetching]=

In standard [http://en.wikipedia.org/wiki/Pipeline_(computing) CPU pipelining], the optimization of instruction prefetching is used to minimize the time the CPU spends waiting on instructions being fetched from main memory. Prefetching works perfectly on completely sequential programs; but on programs with branches, other optimizations (like [http://en.wikipedia.org/wiki/Branch_predictor branch prediction]) have to be implemented, and the cost of branching the wrong way has to be considered and optimized.[[#References|<sup>[26]</sup>]]

<br/>

<br/>

Solihin[[#References|<sup>[27]</sup>]] states that many processors rely on software to implement prefetching through a special prefetch instruction. Solihin[[#References|<sup>[27]</sup>]] also mentions that effective prefetching is characterized by '''coverage''' (the fraction of original cache misses that prefetching has turned into cache hits), '''accuracy''' (the fraction of prefetches that generated cache hits), and '''timeliness''' (how early the prefetch arrives). [http://en.wikipedia.org/wiki/Prefetch_buffer Prefetch buffers] help prefetching obtain the desired characteristics. [http://publib.boulder.ibm.com/infocenter/db2luw/v8/index.jsp?topic=/com.ibm.db2.udb.doc/admin/c0005397.htm Sequential prefetching] detects and prefetches for accesses to contiguous locations, while [http://www.ics.uci.edu/~amrm/slides/amrm_structure/pta/tsld049.htm stride prefetching] detects and prefetches accesses that are s cache blocks apart between consecutive accesses.[[#References|<sup>[27]</sup>]]
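The two ratio-based characteristics and the stride test can be made concrete with a short Python sketch. It is illustrative only: the function names `prefetch_metrics` and `detect_stride`, their parameters, and the requirement of at least three accesses before a stride is reported are assumptions of this example and are not taken from the cited text.

```python
def prefetch_metrics(baseline_misses, prefetches_issued, useful_prefetches):
    """Return (coverage, accuracy) as fractions between 0 and 1.

    baseline_misses:   cache misses observed without prefetching
    prefetches_issued: total number of prefetches sent to memory
    useful_prefetches: prefetches that turned a miss into a hit
    """
    coverage = useful_prefetches / baseline_misses if baseline_misses else 0.0
    accuracy = useful_prefetches / prefetches_issued if prefetches_issued else 0.0
    return coverage, accuracy


def detect_stride(block_addresses):
    """Return the constant stride (in cache blocks) between consecutive
    accesses, or None if the accesses do not follow a single stride.

    A stride of 1 corresponds to sequential prefetching.
    """
    if len(block_addresses) < 3:
        return None  # assumption: too few accesses to trust a pattern
    stride = block_addresses[1] - block_addresses[0]
    for prev, cur in zip(block_addresses, block_addresses[1:]):
        if cur - prev != stride:
            return None
    return stride if stride != 0 else None
```

For instance, if 80 of 100 issued prefetches remove 80 of the 200 misses seen without prefetching, `prefetch_metrics(200, 100, 80)` gives a coverage of 0.4 and an accuracy of 0.8. Timeliness is not modeled here because it depends on when each prefetch arrives relative to the demand access.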

<br/>

<br/>

Prefetching's optimization is that it brings into the cache additional blocks that surround the blocks currently being accessed by the processor. [[#References|[28]]] states that simply increasing the hit ratio does not automatically improve overall performance in multiprocessor computation. Prefetching can actually produce stalls on individual CPUs of multiprocessor systems, as the hit ratio benefit applies only to the thread currently benefiting from the prefetch, which then has to wait for other threads to catch up. As one approach to implementing prefetching within multiprocessor systems, [[#References|[28]]] suggests overlapping prefetching decision-making time with user process time as much as possible, to minimize the impact on the overall execution sequence.

<br/>

<br/>

Due to prefetching in multiprocessor systems, data can be modified in such a way that the memory coherence protocol is not able to handle the effects, and software must use serializing or cache-invalidation instructions to guarantee that subsequent data accesses are coherent. Take for example a [http://en.wikipedia.org/wiki/Page_table page-table] update followed by accesses to the physical pages referenced by the updated page tables. The following is a sequence in which software changes the translation of virtual-page A from physical-page M to physical-page N, which can lead to prefetching of incorrect data: 1) The tables that translate virtual-page A to physical-page M are now held only in main memory, and the copies in the cache are invalidated. 2) Software changes the page-table entry for virtual-page A in main memory to point to physical-page N rather than physical-page M. 3) Data in virtual-page A is accessed.

<br/>

<br/>

Software expects the processor to access the data from physical-page N after the update. However, it is possible for the processor to prefetch the data from physical-page M before the page table for virtual-page A is updated, because the processor is unable to recognize that coherence checking is needed. Without coherence checking, it will be assumed that it is safe to prefetch the data. Similar behavior can occur when instructions are prefetched from beyond the page-table update instruction. Special instructions are provided by prefetching software (executed immediately after the page-table update) to ensure that subsequent instruction fetches and data accesses use the correct virtual-page-to-physical-page translation. It is not necessary to perform a [http://en.wikipedia.org/wiki/Translation_lookaside_buffer TLB] invalidation operation preceding the table update.

<br/>

<br/>

Another optimization considered for bus-based multiprocessors is cache-injection. In cache-injection, a cache uses prefetching to predict its future needs by placing the address of the expected data in an injection table instead of initiating a read bus transaction. Once the data is produced, a write-back bus transaction is initiated, and all other caches snoop the bus for an injection table hit to determine if they should store the data.[[#References|<sup>[29]</sup>]] Cache-injection can be implemented on any bus-based cache coherency protocol, providing support for cache prefetching and [http://docs.lib.purdue.edu/cgi/viewcontent.cgi?article=1160&context=ecetr data forwarding]. Section 4.2 of [[#References|[29]]] walks through an in-depth example of using cache-injection on a MESI write-back invalidation cache.
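The snooping step of cache-injection can be pictured with a toy Python model. This is only a sketch under assumptions: the class `InjectingCache`, its method names, and the choice to clear a table entry once the data has been captured are all hypothetical and are not taken from [29].

```python
class InjectingCache:
    """Toy model of one cache that supports cache-injection."""

    def __init__(self):
        self.injection_table = set()  # addresses this cache expects to need
        self.lines = {}               # address -> data currently cached

    def expect(self, address):
        """Record a predicted future need in the injection table."""
        self.injection_table.add(address)

    def snoop_write_back(self, address, data):
        """Called for every write-back seen on the bus.

        Returns True if the data was injected into this cache.
        """
        if address not in self.injection_table:
            return False
        self.lines[address] = data
        # Assumption of this sketch: the entry is consumed on a hit.
        self.injection_table.discard(address)
        return True


consumer = InjectingCache()
bystander = InjectingCache()
consumer.expect(0x40)

# The producer writes back the block; every other cache snoops the bus.
injected = [c.snoop_write_back(0x40, "payload") for c in (consumer, bystander)]
```

Only the cache that registered the address captures the write-back, so the consumer's later read of `0x40` hits in its own cache without a separate read bus transaction.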

<br/>

<br/>

=Word Invalidation=

In essence, the solution proposed here is to advance the MOESI protocol with word invalidation and specific treatment of temporal and spatial data, so that the block is not invalidated.

<br/>

<br/>

=Performance: MOESI vs MEI/MESI=

Source: [http://www.lexisnexis.com.www.lib.ncsu.edu:2048/hottopics/lnacademic/?shr=t&csi=155278&sr=HLEAD%28Architects+wrestle+with+multiprocessor+options%29+and+date+is+August%2C%202001 Edwards, Chris (08/06/2001). "Architects wrestle with multiprocessor options". Electronic engineering times (0192-1541), (1178), p. 48.]

<br/>

<br/>

=References=

# [http://rsim.cs.illinois.edu/rsim/Manual/node109.html RSIM]
# [http://dl.acm.org/citation.cfm?id=1499317 Synapse tightly coupled multiprocessors]
# [http://dictionary.reference.com/browse/instruction+prefetch prefetching]
# Yan Solihin, "Fundamentals of Parallel Computer Architecture," Solihin Publishing & Consulting LLC, 2008, pp. 168-173.
# David F. Kotz and Carla Schlatter Ellis, "Prefetching in File Systems for MIMD Multiprocessors," in IEEE Transactions on Parallel and Distributed Systems, Vol. 1, No. 2, April 1990, pp. 218-230.
# [http://www.ece.uah.edu/~milenka/docs/apads98.pdf cache-injection 1998]

Latest revision as of 22:21, 25 March 2012

Introduction to bus-based cache coherence in real machines

SMP Architecture

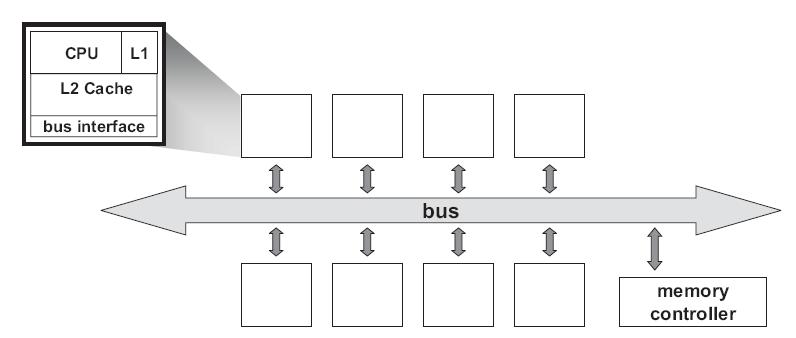

Most parallel software in the commercial market relies on the shared-memory programming model, in which all processors access the same physical address space. The most common multiprocessors today use the SMP architecture, which uses a common bus as the interconnect. In the case of multicore processors ("chip multiprocessors," or CMP), the SMP architecture applies to the cores, treating them as separate processors. The key problem of shared-memory multiprocessors is providing a consistent view of memory across the various cache hierarchies. This is called the cache coherence problem. Cache coherence is both critical for correctness and a performance-sensitive design point in supporting the shared-memory model. The cache coherence mechanisms not only govern communication in a shared-memory multiprocessor, but also typically determine how the memory system transfers data between processors, caches, and memory.

At any point in logical time, the permissions for a cache block can allow either a single writer or multiple readers. The coherence protocol ensures the invariants of the states are maintained. The different coherent states used by most of the cache coherence protocols are as shown in Table 1:

| States | Access Type | Invariant |
| --- | --- | --- |
| Modified | read, write | all other caches in I state |
| Exclusive | read | all other caches in I state |
| Owned | read | all other caches in I or S state |
| Shared | read | no other cache in M or E state |
| Invalid | - | - |
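The invariants in Table 1 can be checked mechanically. The following Python sketch is one possible encoding, given only as an illustration: the function name `invariants_hold` and the use of one-letter strings for the states are assumptions of this example.

```python
def invariants_hold(states):
    """Check the Table 1 invariants for one cache block.

    `states` lists the state of the block in each cache, using the
    letters "M", "O", "E", "S" and "I".
    """
    for i, state in enumerate(states):
        others = states[:i] + states[i + 1:]
        if state in ("M", "E"):
            # Modified / Exclusive: all other caches in I state.
            if any(o != "I" for o in others):
                return False
        elif state == "O":
            # Owned: all other caches in I or S state.
            if any(o not in ("I", "S") for o in others):
                return False
        elif state == "S":
            # Shared: no other cache in M or E state.
            if any(o in ("M", "E") for o in others):
                return False
    return True
```

With this encoding, `["M", "I", "I"]` and `["O", "S", "S"]` are legal combinations, while `["M", "S", "I"]` is not, which matches the single-writer or multiple-reader rule stated above.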

Snooping Protocols

MSI Protocol

MSI is a three-state write-back invalidation protocol and one of the earliest snooping-based cache coherence protocols. It marks each cache line as Modified (M), Shared (S), or Invalid (I). Invalid means the cache line is either not present or is in the invalid state. If the cache line is clean and is shared by more than one processor, it is marked Shared. If the cache line is dirty and the processor has exclusive ownership of it, it is in the Modified state. A BusRdX causes the other caches to invalidate (demote) their copies to the I state; a cache that holds the line in the M state flushes it. A write that hits in the S state still issues a BusRdX, after which the line is promoted (upgraded) to the M state.
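The transitions described above can be written down as two lookup tables, one for processor-side requests and one for snooped bus transactions. This is a minimal sketch: the event names `PrRd` and `PrWr` for processor reads and writes follow common textbook usage and are assumptions here, as are the table and function names.

```python
# (current state, processor request) -> (next state, bus transaction issued)
PROCESSOR_SIDE = {
    ("I", "PrRd"): ("S", "BusRd"),
    ("I", "PrWr"): ("M", "BusRdX"),
    ("S", "PrRd"): ("S", None),
    ("S", "PrWr"): ("M", "BusRdX"),  # a write hit in S still goes to the bus
    ("M", "PrRd"): ("M", None),
    ("M", "PrWr"): ("M", None),
}

# (current state, snooped transaction) -> (next state, action taken)
SNOOPER_SIDE = {
    ("M", "BusRd"): ("S", "Flush"),
    ("M", "BusRdX"): ("I", "Flush"),
    ("S", "BusRd"): ("S", None),
    ("S", "BusRdX"): ("I", None),
    ("I", "BusRd"): ("I", None),
    ("I", "BusRdX"): ("I", None),
}


def processor_request(state, request):
    """Return (next_state, bus_transaction) for a local read or write."""
    return PROCESSOR_SIDE[(state, request)]


def snoop(state, transaction):
    """Return (next_state, action) for a transaction seen on the bus."""
    return SNOOPER_SIDE[(state, transaction)]
```

For example, `processor_request("S", "PrWr")` yields `("M", "BusRdX")`, and `snoop("M", "BusRdX")` yields `("I", "Flush")`: the writer is promoted to M while the previous owner flushes its dirty copy and invalidates it.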

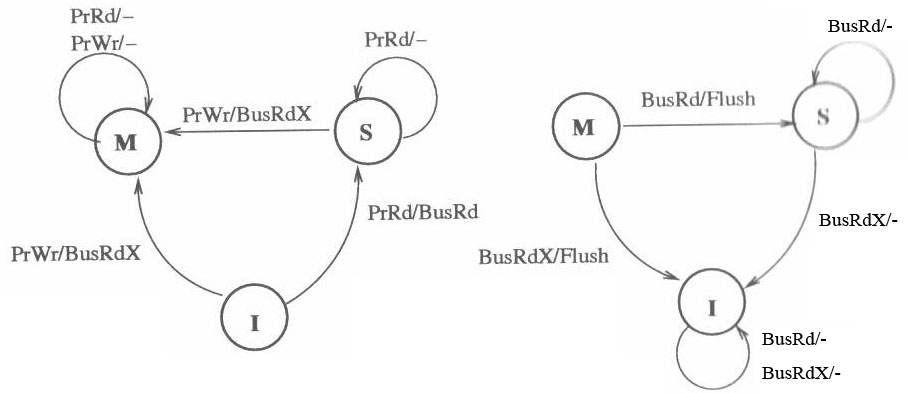

The figure shows the state diagram of the MSI protocol. The left part shows the response to processor-side requests, and the right part shows the response to snooper-side requests.[21]

Real Architectures using MSI

The MSI protocol was first used in the SGI IRIS 4D series. SGI produced a broad range of MIPS-based (Microprocessor without Interlocked Pipeline Stages) workstations and servers during the 1990s, running SGI's version of UNIX System V, now called IRIX. The 4D-MP graphics superworkstation brought 40 MIPS (million instructions per second) of computing performance to a graphics superworkstation. The unprecedented level of computing and graphics processing in an office-environment workstation was made possible by the fastest available RISC microprocessors in a single shared memory multiprocessor design driving a tightly coupled, highly parallel graphics system. Aggregate sustained data rates of over one gigabyte per second were achieved by a hierarchy of buses in a balanced system designed to avoid bottlenecks.

The multiprocessor bus used in the 4D-MP graphics superworkstation is a pipelined, block transfer bus that supports the cache coherence protocol and provides 64 megabytes of sustained data bandwidth between the processors, the memory and I/O system, and the graphics subsystem. Because the sync bus provides for efficient synchronization between processors, the cache coherence protocol was designed to support efficient data sharing between processors. If a cache coherence protocol has to support synchronization as well as sharing, a compromise in the efficiency of the data sharing protocol may be necessary to improve the efficiency of the synchronization operations. Hence the system uses a simple cache coherence protocol, the MSI protocol.

With the simple rules of the MSI protocol enforced by hardware, efficient synchronization and efficient data sharing are achieved in a simple shared memory model of parallel processing in the 4D-MP graphics superworkstation.

Implementation Complexities

The MSI protocol has one serious drawback when programs execute read-then-write sequences. Each read-then-write involves two bus transactions: a BusRd followed by a BusRdX. The second transaction is issued even when no other cache holds the block, which wastes a large amount of bandwidth. Therefore, most new machines do not implement the MSI protocol. Instead, they use variants of it, such as the MOSI and MESI protocols, to reduce the amount of traffic on the coherence interconnect.
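The cost difference can be illustrated by listing the bus transactions that one read-then-write generates. The Python sketch below is a simplification under stated assumptions: the block starts out Invalid in the requesting cache, MESI (described later in this document) installs the block in the Exclusive state when no other cache holds it, and the function name `read_then_write_transactions` is hypothetical.

```python
def read_then_write_transactions(protocol, other_sharers):
    """List the bus transactions for one read followed by one write
    to a block that starts out Invalid in the requesting cache.

    other_sharers: True if some other cache already holds the block.
    """
    if protocol == "MSI":
        # The read installs the block in S; the write must go to the bus
        # again, whether or not anyone else has a copy.
        return ["BusRd", "BusRdX"]
    if protocol == "MESI":
        if other_sharers:
            # The read installs the block in S; the write needs an upgrade.
            return ["BusRd", "BusUpgr"]
        # The read installs the block in E; the write is a silent E -> M.
        return ["BusRd"]
    raise ValueError(f"unknown protocol: {protocol}")


# Private data touched by a single processor, e.g. 1000 distinct blocks.
msi_total = sum(len(read_then_write_transactions("MSI", False)) for _ in range(1000))
mesi_total = sum(len(read_then_write_transactions("MESI", False)) for _ in range(1000))
```

Under these assumptions the unshared workload costs 2000 bus transactions with MSI and 1000 with MESI, which is why programs with little data sharing benefit most from the variant protocols.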

Synapse protocol

From the state transition diagram of MSI, we observe that there is a transition from state M to state S when a BusRd is observed for that block. The contents of the block are flushed to the bus before going to the S state. In certain cases it would be more appropriate to move to the I state, thus giving up the block entirely. The choice between moving to S or I reflects the designer's assertion about whether the original processor is more likely to continue reading the block or the new processor is more likely to write to it. The Synapse protocol, used in the early Synapse multiprocessor, made the alternate choice of going directly from the M state to the I state on a BusRd, assuming the migratory pattern would be more frequent. More details about this protocol can be found in these papers published in the 1980s: "Coherence protocols: evaluation using a multiprocessor simulation model" and "Synapse tightly coupled multiprocessors: a new approach to solve old problems".

In Synapse protocol M state is called D (Dirty) state. The following is the state transition diagram for Synapse protocol:

Real Architectures using Synapse

Nothing can be found on any actual architecture using the Synapse cache-coherence protocol. Judging from the abstract of the 1984 paper by Steve Frank and Armond Inselberg, "Synapse Tightly Coupled Multiprocessors: A New Approach to Solve Old Problems", Synapse appears to have been theoretical.

The BABYLON Project implemented one example of a Synapse cache coherence protocol with atomic synchronization actions.

Implementation Complexities

In the Synapse system, memory contention is reduced by designing a processor cache that employs a non-write-through algorithm to minimize the bandwidth used between cache and shared memory. The Synapse Expansion Bus includes an ownership-level protocol between processor caches.[24]

MESI

The main drawback of MSI is that each read-then-write sequence incurs two bus transactions, irrespective of whether the cache line is stored in only one cache or not. Highly parallel programs that have little data sharing suffer the most from this. The MESI protocol solves this problem by introducing the Exclusive state to distinguish between a cache line stored in multiple caches and a line stored in a single cache. Let us briefly see how the MESI protocol works. For a more detailed version, refer to the Solihin textbook, pg. 215.

The MESI coherence protocol marks each cache line as being in one of the Modified, Exclusive, Shared, or Invalid states.

- Invalid : The cache line is either not present or is invalid

- Exclusive : The cache line is clean and is owned by this core/processor only

- Modified : The cache line is dirty and the core/processor has exclusive ownership of it; not even main memory holds a valid copy.

- Shared : The cache line is clean and is shared by more than one core/processor

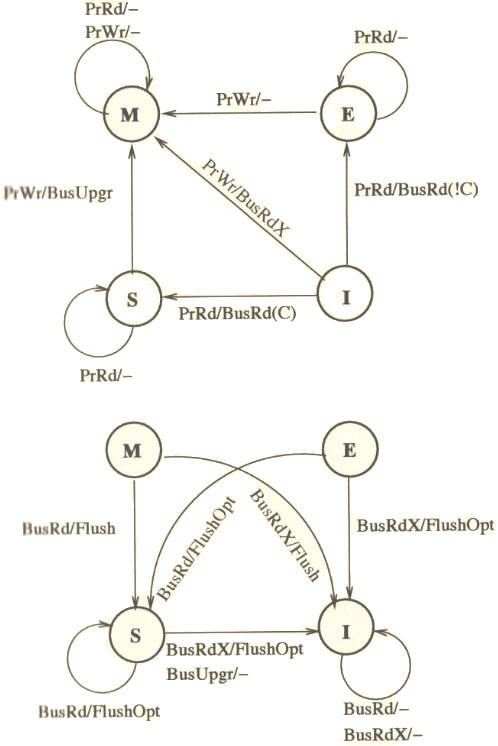

The MESI protocol works as follows: a line that is fetched receives the E or S state, depending on whether it exists in other processors in the system. A cache line gets the M state when a processor writes to it. If the line is not in the E or M state prior to the write, the cache sends a Bus Upgrade (BusUpgr) signal or, as the Intel manuals term it, a “Read-For-Ownership (RFO) request”, which ensures that the line exists in this cache and is in the I state in all other processors on the bus (if any). The table below summarizes the MESI protocol.

| Cache Line State: | Modified | Exclusive | Shared | Invalid |
| --- | --- | --- | --- | --- |
| This cache line is valid? | Yes | Yes | Yes | No |
| The memory copy is… | out of date | valid | valid | - |
| Copies exist in caches of other processors? | No | No | Maybe | Maybe |
| A write to this line | does not go to bus | does not go to bus | goes to bus and updates cache | goes directly to bus |
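The rules summarized in the table can be sketched as a small processor-side state function. This is only an illustration: the name `mesi_processor`, the `others_have_copy` flag, and the use of BusRdX for a write to an Invalid line (textbook naming, which the text above folds into the RFO request) are assumptions rather than details from any vendor manual.

```python
# Processor-side MESI transitions for one cache line (illustrative sketch).
# `others_have_copy` models the shared signal sampled on a read miss.
def mesi_processor(state, op, others_have_copy=False):
    if op == "read":
        if state == "I":
            return ("S" if others_have_copy else "E"), "BusRd"
        return state, None               # M, E, S: read hit, no bus traffic
    if op == "write":
        if state in ("M", "E"):
            return "M", None             # silent upgrade: does not go to bus
        if state == "S":
            return "M", "BusUpgr"        # invalidate the other copies
        return "M", "BusRdX"             # I: fetch the line with intent to write
    raise ValueError(f"unknown operation: {op}")


# Read-then-write on an unshared line costs a single bus transaction.
state, first = mesi_processor("I", "read")
state, second = mesi_processor(state, "write")
```

Here `first` is a BusRd and `second` is `None`, in contrast with the two transactions MSI needs for the same sequence.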

State transition diagram for MESI protocol is shown below.[21]

Real Architectures using MESI

CMP Implementation in Intel Architecture

Intel's Pentium Pro microprocessor, introduced in 1995, was the first Intel architecture microprocessor to support symmetric multiprocessing (SMP) in various multiprocessor configurations. SMP with the MESI protocol was used consistently until the introduction of the 45-nm Hi-k Core microarchitecture in Intel's quad-core x86-64 processors (Nehalem-EP). The 45-nm Hi-k Intel Core microarchitecture uses a new system framework called the QuickPath Interconnect, which uses point-to-point interconnection technology based on a distributed shared memory architecture. It uses a modified version of the MESI protocol called MESIF, which introduces the additional Forward state.[3] (The state diagram of MESI transitions that occur within the Pentium's data cache can be found on page 63 of Pentium Processor System Architecture: Second Edition by Don Anderson, Tom Shanley, MindShare, Inc.)

The CMP implementation on the Intel Pentium M processor contains a unified on-chip L1 cache with the processor, a Memory/L2 access control unit, a prefetch unit, and a Front Side Bus (FSB). Processor requests are first sought in the L2 cache. On a miss, they are forwarded to main memory via the FSB. The Memory/L2 access control unit serves as a central point for maintaining coherence within the core and with the external world. It contains a snoop control unit that receives snoop requests from the bus and performs the required operations on each cache (and internal buffers) in parallel. It also handles RFO requests (BusUpgr) and ensures the operation continues only after it guarantees that no other version of the cache line exists in any other cache in the system.[3] The CMP implementation on the Intel Core Duo contains duplicated processors with on-chip L1 caches, an L2 controller to handle all L2 cache requests and snoop requests, a bus controller to handle data and I/O requests to and from the FSB, a prefetching unit, and a logical unit to maintain fairness between requests coming from each processor to the L2 cache.[3]

The Intel bus architecture has been evolving in order to accommodate the demands of scalability while using the same MESI protocol: from a single shared bus, to dual independent buses (DIB), which doubled the available bandwidth, and finally to the logical conclusion of DIB, dedicated high-speed interconnects (DHSI). The DHSI-based platforms use four FSBs, one for each processor in the platform. In both DIB and DHSI, a snoop filter was used in the chipset to cache snoop information, thereby significantly reducing the broadcasting needed for the snoop traffic on the buses. With the production of processors based on the next-generation 45-nm Hi-k Intel Core microarchitecture, the Intel Xeon processor fabric transitions from DHSI, with the memory controller in the chipset, to a distributed shared memory architecture using Intel QuickPath Interconnects and the MESIF protocol.[3]

ARM MPCore

The ARM11 MPCore and Cortex-A9 MPCore processors support the MESI cache coherency protocol.[19] ARM MPCore defines the states of the MESI protocol it implements as:

| Cache Line State: | Modified | Exclusive | Shared | Invalid |
| --- | --- | --- | --- | --- |
| Copies in other caches | NO | NO | YES | - |
| Clean or Dirty | DIRTY | CLEAN | CLEAN | - |

The coherency protocol is implemented and managed by the Snoop Control Unit (SCU) in the ARM MPCore, which monitors the traffic between local L1 data caches and the next level of the memory hierarchy. At boot time, each core can choose to partake in the coherency domain or not. Unless explicit system calls bind a task to a specific core (processor affinity), there is a high chance that a task will at some point migrate to a different core, along with its data as it is used. Migration of tasks is not handled efficiently by a literal MESI implementation, so the ARM MPCore offers two optimizations that allow for both MESI compliance and migration of tasks. With Direct Data Intervention (DDI), the SCU keeps a copy of the tag RAMs of all cores' caches, which enables it to efficiently detect whether a cache line requested by one core is held by another core in the coherency domain before looking for it in the next level of the memory hierarchy. With Cache-to-cache Migration, if the SCU finds that the cache line requested by one CPU is present in another core, it will either copy it (if clean) or move it (if dirty) from the other CPU directly into the requesting one, without interacting with external memory. These optimizations reduce memory traffic in and out of the L1 cache subsystem by eliminating interaction with external memories, which in effect reduces the overall load on the interconnect and the overall power consumption.[19]
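The two optimizations can be pictured with a toy model of the duplicate tags. The class `SnoopControlUnit`, its `tags` layout, and the returned strings are hypothetical and only mirror the copy-if-clean, move-if-dirty rule described above; they do not reflect the real SCU interface.

```python
class SnoopControlUnit:
    """Toy model of an SCU holding duplicate tags for every core's L1 cache."""

    def __init__(self, n_cores):
        # tags[core] maps a line address to "clean" or "dirty"
        self.tags = [dict() for _ in range(n_cores)]

    def request(self, core, addr):
        """Serve a line request from `core`; return where the data came from."""
        for other, lines in enumerate(self.tags):
            if other == core or addr not in lines:
                continue
            if lines[addr] == "dirty":
                del lines[addr]                     # move: the dirty line migrates
                self.tags[core][addr] = "dirty"
                return f"moved from core {other}"
            self.tags[core][addr] = "clean"         # copy: both cores keep the line
            return f"copied from core {other}"
        self.tags[core][addr] = "clean"             # no core holds it
        return "fetched from next memory level"


scu = SnoopControlUnit(n_cores=2)
scu.tags[0][0x40] = "dirty"          # core 0 holds a modified line
source = scu.request(1, 0x40)        # the task has migrated to core 1
```

In this run `source` reports that the line was moved from core 0, so external memory is never touched.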

Other architectures that use the MESI cache-coherence protocol include the L2 cache of the IBM POWER4 processor[20], the L2 cache of the Intel Itanium 2 processor[21], and the Intel Xeon[22].

Implementation Complexities

One implementation complexity already mentioned is the inefficiency of task migration in MESI that the ARM MPCore addresses.[19] Another possible implementation complexity is found during the replacement of a cache line: one possible MESI implementation requires a message to be sent to main memory when a cache line is flushed (i.e. an E to I transition), as the line was exclusively in one cache before it was removed. It is possible to avoid this replacement message if the system is designed so that the flush of a modified (exclusive) line requires an acknowledgment from main memory. However, this requires the flush to be stored in a 'write-back' buffer until the reply arrives (to ensure the change is successfully propagated to memory).[23]

MESIF

Invented by Intel, the MESIF protocol consists of five states: Modified (M), Exclusive (E), Shared (S), Invalid (I) and Forward (F). Whenever there is a read request, only the cache holding the line in the F state responds, while all caches holding it in the S state remain dormant. By designating a single copy to respond to requests, coherency traffic is substantially reduced when multiple copies of the data exist. The F state also migrates on a read request: when a cache line in the F state is copied, the newer copy takes the F state, while the older one drops back to S. Moving the F state to the new copy exploits both temporal and spatial locality. Because the newest copy of the cache line is always the one in the F state, the line in the F state is very unlikely to be evicted from the caches; this takes advantage of the temporal locality of the request. The second advantage is that if a particular cache line is in high demand due to spatial locality, the bandwidth used to transmit that data is spread across several nodes. All M to S and E to S state transitions now become M to F and E to F. The F state differs from the Owned state of the MOESI protocol in that it is not a unique copy: a valid copy is also stored in memory. Thus, unlike data in the O state of the MOESI protocol, which is the only valid copy of the data, data in the F state can be evicted or converted to the S state, if desired.

The state transition table is shown below.

| Cache Line State: | Clean / Dirty | May Write? | May Forward? | May Transition To? |
| --- | --- | --- | --- | --- |
| M - Modified | Dirty | Yes | Yes | - |
| E - Exclusive | Clean | Yes | Yes | MSIF |
| S - Shared | Clean | No | No | I |
| I - Invalid | - | No | No | - |
| F - Forward | Clean | No | Yes | SI |
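The migration of the F state on a read can be sketched for a line that already has clean sharers. The function `mesif_read` and the dictionary layout are illustrative assumptions; in particular, giving the requester the F state when memory supplies the data is an assumption of this sketch, and dirty (M) holders are not modelled.

```python
def mesif_read(states, requester):
    """Handle a read miss by `requester` for one line with clean sharers.

    `states` maps a cache id to its MESIF state for the line.
    Returns the id of the agent that supplies the data.
    """
    responder = "memory"
    for cache, state in states.items():
        if state == "F":             # only the Forward copy answers;
            responder = cache        # Shared copies stay silent
            states[cache] = "S"      # the old forwarder drops back to S
            break
    # Assumption: the newest copy always takes the F state,
    # even when memory had to supply the data.
    states[requester] = "F"
    return responder


states = {"A": "F", "B": "S", "C": "I"}
responder = mesif_read(states, "C")
```

After the call, cache A has answered and dropped to S, cache C holds the single F copy, and cache B never put a response on the interconnect.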

Real Architectures using MESIF

The MESIF protocol is implemented in the Intel QuickPath Interconnect (QPI), a high-speed, packetized, point-to-point interconnect used in Intel's Core i series processors starting in 2008. The narrow high-speed links stitch together processors in a distributed shared memory-style platform architecture. Compared with front-side buses, it offers much higher bandwidth with low latency. QPI has an efficient architecture that allows more interconnect performance to be achieved in real systems. It has a snoop protocol optimized for low latency and high scalability, as well as packet and lane structures that enable quick completion of transactions.[2]

More information on the QuickPath Interconnect and MESIF protocol can be found at Introduction to QuickPath Interconnect

Implementation Complexities

In the MESIF protocol, only one agent can have a cache line in the F state at any given time; the other agents are allowed to have copies in the S state. Even when a cache line has been forwarded in this state, the home agent still needs to respond with a completion to allow retirement of the resources tracking the transaction.

MOESI

The AMD Opteron was AMD’s first-generation dual-core processor, with two distinct K8 cores together on a single die. Cache coherence becomes a bigger problem on such multiprocessors, and an appropriate coherence protocol was needed to address it. The Intel Xeon, the competing product from Intel, used the MESI protocol to handle cache coherence. MESI has the drawback of consuming considerable time and bandwidth in certain situations.

MOESI was AMD’s answer to this problem. MOESI adds a fifth state, “Owned”, to the MESI protocol. It addresses the bandwidth problem that arises in MESI when a processor holding invalid data in its cache wants to access data that another processor has modified: the processor seeking access has to wait for the modifying processor to write the data back to main memory, which takes time and bandwidth. MOESI removes this drawback by allowing dirty sharing. When the data is held by a processor in the new “Owned” state, it can provide the modified data to other processors without, or even before, writing it to main memory. The processor holding the data in the “Owned” state remains responsible for updating main memory later, when the cache line is evicted.

MOESI has become one of the most popular snoop-based protocols supported in the AMD64 architecture. The AMD dual-core Opteron can maintain cache coherence in systems up to 8 processors using this protocol.

The five different states of the MOESI protocol are:

- Modified (M) : The most recent copy of the data is present in the cache line. But it is not present in any other processor cache.

- Owned (O) : The cache line has the most recent, correct copy of the data, which may be shared by other processors. The processor holding the line in this state is responsible for writing the correct value to main memory before the line is evicted.

- Exclusive (E) : A cache line holds the most recent, correct copy of the data, which is exclusively present on this processor and a copy is present in the main memory.

- Shared (S) : A cache line in the shared state holds the most recent, correct copy of the data, which may be shared by other processors.

- Invalid (I) : A cache line does not hold a valid copy of the data.
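Dirty sharing can be contrasted with MESI in a short sketch of how the current holder of a line reacts when another cache reads it. The function `snooped_read` is a hypothetical helper, and the model only tracks whether main memory is written as part of the transaction.

```python
def snooped_read(protocol, state):
    """Reaction of the cache holding a line when another cache reads it.

    Returns (new_state, memory_written).
    """
    if protocol == "MESI":
        if state == "M":
            return "S", True         # dirty data must reach memory first
        if state == "E":
            return "S", False        # clean line: memory is already valid
        return state, False
    if protocol == "MOESI":
        if state in ("M", "O"):
            return "O", False        # dirty sharing: write-back is deferred
        if state == "E":
            return "S", False
        return state, False
    raise ValueError(f"unknown protocol: {protocol}")
```

The only difference lies in the dirty cases: MOESI keeps the holder responsible for the data in the O state instead of paying for a memory write on every first remote read.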

A detailed explanation of this protocol implementation on AMD processor can be found in the manual Architecture of the AMD 64-bit core

The following table summarizes the MOESI protocol:

| Cache Line State: | Modified | Owned | Exclusive | Shared | Invalid |
| --- | --- | --- | --- | --- | --- |
| This cache line is valid? | Yes | Yes | Yes | Yes | No |
| The memory copy is… | out of date | out of date | valid | valid | - |
| Copies exist in caches of other processors? | No | Maybe | No | Maybe | Maybe |
| A write to this line | does not go to bus | goes to bus and updates cache | does not go to bus | goes to bus and updates cache | goes directly to bus |

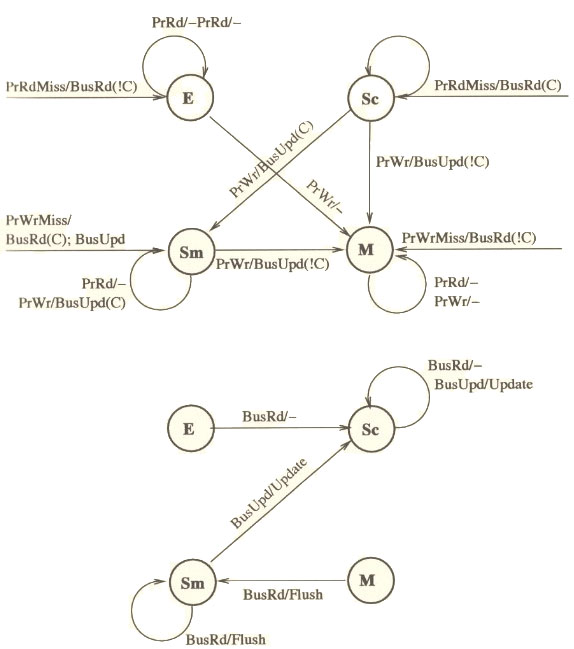

State transition diagram for MOESI protocol is shown below. The top part shows the response to processor-side requests and the bottom part is the response to snooper-side requests. [21]

Real Architectures using MOESI

The following systems implement MOESI coherence protocol:

1. AMD64 architecture, including Opteron, Athlon 64, and Phenom processors.

2. HP AlphaServer GS320, which is based on Alpha EV68 microprocessors.

Implementation Complexities

When the MOESI protocol is implemented on a real architecture like the AMD K10 series, some modifications or optimizations are made to the protocol to allow more efficient operation for specific program patterns. For example, the AMD Phenom family of microprocessors (Family 0x10), which is AMD’s first generation to incorporate 4 distinct cores on a single die and the first to have a cache that all the cores share, uses the MOESI protocol with some optimization techniques incorporated.

The optimization focuses on a small subset of compute problems which behave like Producer and Consumer programs. In such a problem, a thread running on one core produces data, which is consumed by a thread running on a separate core. With such programs, it is desirable to get the two distinct cores to communicate through the shared cache, to avoid round trips to and from main memory. The MOESI protocol that the AMD Phenom cache uses for cache coherence can also limit bandwidth. Hence, by keeping the cache line in the ‘M’ state for such computing problems, we can achieve better performance.

When the producer thread writes a new entry, it allocates cache lines in the modified (M) state. Eventually, these M-marked cache lines will start to fill the L3 cache. When the consumer reads the cache line, the MOESI protocol changes the state of the cache line to owned (O) in the L3 cache and pulls down a shared (S) copy for its own use. Now, the producer thread circles the ring buffer to arrive back at the same cache line it had previously written. However, when the producer attempts to write new data to the owned (marked ‘O’) cache line, it finds that it cannot, since a cache line marked ‘O’ by the previous consumer read does not have sufficient permission for a write request (in the MOESI protocol). To maintain coherence, the memory controller must initiate probes in the other caches (to handle any other S copies that may exist). This slows down the process.

Thus, it is preferable to keep the cache line in the ‘M’ state in the L3 cache. In such a situation, when the producer comes back around the ring buffer, it finds the previously written cache line still marked ‘M’, to which it is safe to write without coherence concerns. Thus better performance can be achieved by such optimization techniques to standard protocols when implemented in real machines.

You can find more information on how this is implemented and various other ways of optimizations in this manual Software Optimization guide for AMD 10h Processors

Dragon Protocol

The Dragon Protocol is an update-based coherence protocol: unlike the coherence protocols seen so far, it does not invalidate other cached copies. Write propagation is achieved by updating the cached copies instead of invalidating them. The Dragon Protocol does not update memory on a cache-to-cache transfer; it delays making memory consistent with the cache until the data is evicted and written back, which saves time and lowers the memory access requirements. Moreover, only the written byte or word is communicated to the other caches instead of the whole block, which further reduces bandwidth usage. The protocol can dynamically detect the sharing status of a block and use a write-through policy for shared blocks and a write-back policy for currently non-shared blocks. The Dragon Protocol employs the following four states for cache blocks: Shared Clean, Shared Modified, Exclusive and Modified.

- Modified (M) and Exclusive (E) - these states have the same meaning as explained in the protocols above.

- Shared Modified (Sm) - Only one cache line in the system can be in the Shared Modified state. Potentially two or more caches have this block and memory may or may not be up to date and this processor's cache had modified the block.

- Shared Clean (Sc) - Potentially two or more caches have this block and memory may or may not be up to date (if no other cache has it in Sm state, memory will be up to date else it is not).

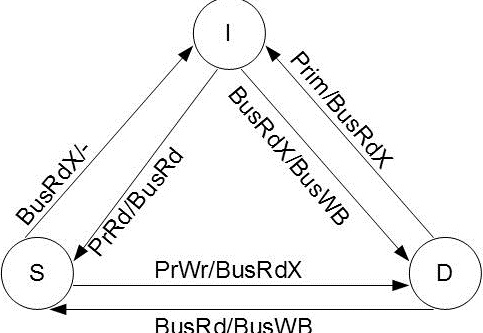

Only when a Shared Modified line is evicted from the cache on a cache miss is the block written back to main memory, in order to keep memory consistent. For more information on the Dragon protocol, refer to the Solihin textbook, page 229. The state transition diagram is given for reference.[21]

The Dragon protocol implements snoopy caches that provide the appearance of a uniform memory space to multiple processors. Here, each cache listens to 2 buses: the processor bus and the memory bus. The caches are also responsible for address translation, so the processor bus carries virtual addresses and the memory bus carries physical addresses. The Dragon system was designed to support 4 to 8 Dragon processors.
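The update-based behaviour of the four states described above can be sketched with two small functions, one for a processor write hit and one for a snooped update. The names `dragon_write` and `dragon_snoop_update`, the `others_have_copy` flag, and the transaction name BusUpd follow common textbook usage and are assumptions of this sketch.

```python
def dragon_write(state, others_have_copy):
    """Processor write hit in the Dragon protocol; returns (new_state, bus_txn)."""
    if state in ("E", "M"):
        return "M", None             # sole copy: write locally, no bus traffic
    # Sc or Sm: broadcast the written word so other caches update in place
    if others_have_copy:
        return "Sm", "BusUpd"        # sharers remain: this cache becomes the owner
    return "M", "BusUpd"             # no sharer answered: line is now private


def dragon_snoop_update(state):
    """Another cache broadcast an update for this block; no copy is invalidated."""
    if state in ("Sc", "Sm"):
        return "Sc"                  # the writer took over ownership (Sm)
    return state
```

A write to a shared block therefore leaves every other copy valid and refreshed, which is the key difference from the invalidation-based protocols earlier on this page.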

Instruction Prefetching

In standard CPU pipelining, the optimization of instruction prefetching is used to minimize the time the CPU spends waiting on instructions being fetched from main memory. Prefetching works perfectly on completely sequential programs; but on programs with branches, other optimizations (like branch prediction) have to be implemented, and the cost of branching the wrong way has to be considered and optimized.[26]

Solihin[27] states that many processors rely on software to issue a special prefetch instruction. Solihin[27] also mentions that effective prefetching is characterized by coverage (the fraction of original cache misses that prefetching has turned into cache hits), accuracy (the fraction of prefetches that resulted in cache hits), and timeliness (how early the prefetch arrives). Prefetch buffers help prefetching obtain the desired characteristics. Sequential prefetching detects and prefetches accesses to contiguous locations, while stride prefetching detects and prefetches accesses that are s cache blocks apart between consecutive accesses.[27]
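The coverage and accuracy definitions, and the idea of stride detection, can be made concrete with a short sketch. The function names and the three-access detection window are assumptions chosen for illustration, not a description of any particular hardware prefetcher.

```python
def prefetch_metrics(original_misses, useful_prefetches, total_prefetches):
    """Return (coverage, accuracy) as defined in the paragraph above."""
    coverage = useful_prefetches / original_misses   # misses turned into hits
    accuracy = useful_prefetches / total_prefetches  # prefetches that were used
    return coverage, accuracy


def detect_stride(block_addresses):
    """Return the stride s (in cache blocks) shared by the last three accesses.

    Returns None when there is no constant, non-zero stride yet.
    A stride of 1 corresponds to sequential prefetching.
    """
    if len(block_addresses) < 3:
        return None
    a, b, c = block_addresses[-3:]
    if b - a == c - b and b != a:
        return b - a
    return None


coverage, accuracy = prefetch_metrics(original_misses=100,
                                      useful_prefetches=40,
                                      total_prefetches=50)
next_block = 18 + detect_stride([10, 14, 18])   # candidate block to prefetch
```

With these example numbers the prefetcher removes 40% of the misses while 80% of its prefetches are useful, and the next candidate block is 22.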

The benefit of prefetching is that it brings into the cache additional blocks surrounding the blocks currently being hit by the processor. However, [28] states that simply increasing the hit ratio does not automatically improve overall performance in multiprocessor computation. Prefetching can actually produce stalls on individual CPUs of multiprocessor systems, as the hit ratio benefit applies only to the thread currently benefiting from the prefetch, which then has to wait for other threads to catch up. As one way to implement prefetching within multiprocessor systems, [28] suggests overlapping prefetching decision-making time with user process time as much as possible, to minimize the impact on the overall execution sequence.

Due to prefetching in multiprocessor systems, data can be modified in such a way that the memory coherence protocol is not able to handle the effects, and software must use serializing or cache-invalidation instructions to guarantee that subsequent data accesses are coherent. Take for example a page-table update followed by accesses to the physical pages referenced by the updated page tables. The following is a sequence in which software changes the translation of virtual-page A from physical-page M to physical-page N, which can lead to prefetching of incorrect data:

1. The tables that translate virtual-page A to physical-page M are held only in main memory; the copies in the cache are invalidated.

2. Software changes the page-table entry for virtual-page A in main memory to point to physical-page N rather than physical-page M.

3. Data in virtual-page A is accessed.

Software expects the processor to access the data from physical-page N after the update. However, it is possible for the processor to prefetch the data from physical-page M before the page table for virtual-page A is updated, because the processor is unable to recognize that coherence checking is needed. Without coherence checking, it assumes that it is safe to prefetch the data. Similar behavior can occur when instructions are prefetched from beyond the page-table update instruction. Software must therefore execute special instructions immediately after the page-table update to ensure that subsequent instruction fetches and data accesses use the correct virtual-page-to-physical-page translation. It is not necessary to perform a TLB invalidation operation preceding the table update.

Another optimization considered for bus-based multiprocessors is cache-injection. In cache-injection, a cache uses prefetching to predict its future needs by placing the address of the expected data in an injection table to initiate the read bus transaction. Once the data is produced, a write-back bus transaction is initiated, and all other caches snoop the bus for an injection table hit to determine whether they should store the data.[29] Cache-injection can be implemented on any bus-based cache coherency protocol, providing support for cache prefetching and data forwarding. Section 4.2 of [29] walks through an in-depth example of using cache-injection on a MESI write-back invalidation cache.
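The role of the injection table can be sketched with a toy consumer-side cache. The class `InjectingCache` and its method names are hypothetical and reduce the mechanism to its core idea: an address registered in advance is captured when its write-back appears on the bus.

```python
class InjectingCache:
    """Toy cache that captures snooped write-backs it has announced interest in."""

    def __init__(self):
        self.injection_table = set()   # addresses this cache expects to need
        self.lines = {}                # address -> cached data

    def expect(self, addr):
        """Register a future need for `addr` in the injection table."""
        self.injection_table.add(addr)

    def snoop_write_back(self, addr, data):
        """Called for every write-back seen on the bus; True means injected."""
        if addr in self.injection_table:
            self.lines[addr] = data              # injection table hit: store it
            self.injection_table.discard(addr)
            return True
        return False                             # not expected: ignore


consumer = InjectingCache()
consumer.expect(0x100)
ignored = consumer.snoop_write_back(0x200, "other data")
injected = consumer.snoop_write_back(0x100, "produced data")
```

The consumer ends up holding the produced data without ever issuing its own read miss for address 0x100.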

Word Invalidation

One complexity problem applying to a number of the protocols deals with invalidation. In newer protocols, individual words may be modified in a cache line, as opposed to the entirety of the line. The other processors will thus have a mostly correct cache line, with only a word difference. This leads to potential complexity, because to 'correct' the error the other processors must transition from shared to invalid, where they then can read the correct word from the bus and place it back into the line, transitioning back into shared. This transition is potentially unnecessary, as the second processor may never access the specific word changed, but it may access other words in the cache line. One potential solution to this is being researched at the present time, advancing the protocols so that a cache line is "not invalidated on the first dirty word, but after the number of dirty words crosses some predetermined value, which is data type and application dependent." In other words, if the application can possibly have multiple words 'incorrect,' several transitions to and from the invalid state may be avoided.

Source: http://tab.computer.org/tcca/NEWS/sept96/dsmideas.ps

In essence, the solution proposed here is to advance the MOESI protocol with word invalidation and specific treatment of temporal and spatial data, so that the block is not invalidated.
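The threshold idea quoted above can be sketched as a cache line that tracks stale words individually. The class `WordTrackedLine` and the way the threshold is compared are assumptions made for illustration; the source only states that the limit is data type and application dependent.

```python
class WordTrackedLine:
    """Cache line that tolerates a limited number of remotely written words."""

    def __init__(self, n_words, threshold):
        self.stale = [False] * n_words   # words overwritten by other processors
        self.threshold = threshold       # application-dependent limit
        self.valid = True

    def remote_write(self, word):
        """Another processor wrote `word`; invalidate only past the threshold."""
        self.stale[word] = True
        if sum(self.stale) > self.threshold:
            self.valid = False           # too many stale words: drop the line
        return self.valid

    def can_read(self, word):
        """A local read hits only if the line is valid and the word is fresh."""
        return self.valid and not self.stale[word]


line = WordTrackedLine(n_words=4, threshold=2)
still_valid = line.remote_write(0)       # one stale word: line survives
```

Words 1 to 3 remain readable after this single remote write, which avoids the shared-to-invalid-to-shared round trip described above.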

Performance: MOESI vs MEI/MESI

Early multiprocessors (such as the PowerPC processors) were designed to work with three states (Modified, Exclusive, Invalid). This is similar to the MSI protocol discussed earlier; the term MEI is used to remain consistent with the sources used for reference. As time progressed, more multiprocessors transitioned to the MESI protocol. This is most likely due to the characteristics of MEI: it is "easy to implement but leads to inefficiencies in the way that memory bus bandwidth is used" source. As a result, systems with two or more MEI processors will most likely not see the 'full' potential of the extra processors, due to the inefficient bus transactions.

In contrast, the five-state MOESI protocol is more complex to implement than MESI and MEI/MSI. However, advantages arise from using it. As Andy Keane (VP of Marketing for PMC-Sierra) put it, "this [fifth] state allows shared data that is dirty to remain in the cache. Without this state, any shared line would be written back to memory to change the original state from modified. Since we have a dedicated, fast path from CPU to CPU, this state maximizes the use of this path rather than the path to memory, which is inherently slower" source. This advantage can be realized by allowing MOESI to make some processor-to-processor transfers from level 2 caches.

References

- Cache coherence

- Introduction to QuickPath Interconnect

- CMP Implementation in Intel Core Duo Processors

- Common System Interface in Intel Processors

- Cache consistency with MESI on Intel processor

- AMD dual core Architecture

- AMD64 Architecture Programmer's manual

- Software Optimization guide for AMD 10h Processors

- Architecture of AMD 64 bit core

- Silicon Graphics Computer Systems

- Parallel computer architecture: a hardware/software approach By David E. Culler, Jaswinder Pal Singh, Anoop Gupta

- Three state invalidation protocols

- Synapse tightly coupled multiprocessors: a new approach to solve old problems

- Coherence protocols: evaluation using a multiprocessor simulation model

- Dragon Protocol

- Xerox Dragon

- Coherence Protocols

- XDBus

- ARM

- IBM POWER4

- Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems. Solihin, Yan

- Intel Itanium 2

- MESI-MESI-MOESI Banana-fana...

- RSIM

- Synapse tightly coupled multiprocessors

- prefetching

- Yan Solihin, "Fundamentals of Parallel Computer Architecture," Solihin Publishing & Consulting LLC, 2008, pp. 168-173.

- David F. Kotz and Carla Schlatter Ellis, "Prefetching in File Systems for MIMD Multiprocessors," in IEEE Transactions on Parallel and Distributed Systems, Vol. 1, No. 2, April 1990, pp. 218-230.

- cache-injection 1998