CSC/ECE 506 Spring 2012/3a kp: Difference between revisions

| (117 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

'''Patterns of parallel programming''' | |||

== Overview == | == '''Overview''' == | ||

A | A [http://en.wikipedia.org/wiki/Design_pattern '''Design Pattern'''] is a description of a solution to a frequently occurring problem in some domain. The description makes it easy for the reader to quickly understand both the problem and the proposed solution and, because the pattern has a name, a collection of patterns provides a vocabulary with which to talk about these solutions. They were first postulated for City Planning applications, but they promptly extended to Computer Science and other [http://en.wikipedia.org/wiki/Software_design_pattern '''disciplines'''] The idea would always be to have a library of reusable resources that would help us in the development of our product. | ||

As an example – | As an example – '''Dense Linear Algebra''' is a '''Computational''' design pattern for parallel programming. Under this pattern, a computation is organized as a sequence of arithmetic expressions acting on dense arrays of data. The operations and data access patterns are well defined mathematically, so data can be prefetched and CPUs execute close to their theoretically allowed peak performance. | ||

theoretically allowed peak performance. | |||

Parallel programming design patterns have been identified at various levels of software development from high-level patterns that describe how an application is organized to | Parallel programming design patterns have been identified at various levels of software development, from high-level patterns that describe how an application is organized, to mid-level patterns about specific classes of computations; and low level patterns describing specific execution strategies. Related to these classifications, there are also available “pattern languages” from several researchers, that guide the software developer through hierarchy of parallel programming design development. | ||

In this article we first cover three | In this article we intend to first cover three '''pattern languages''' for parallel programming in some detail. These are attempts to classify these patterns for us then to dwell on commonalities between these approaches. Thereafter, we cover a broad range of parallel programming design patterns, highlighting the commonalities and differences. | ||

It is important to appreciate that many design problems do not lend themselves to a top-down or bottom-up analysis. In many cases, the pathway through our patterns will be bounce around between layers with the designer working at whichever layer is most productive at a given time. | It is important to appreciate that many design problems do not lend themselves to a top-down or bottom-up analysis. In many cases, the pathway through our patterns will be bounce around between layers with the designer working at whichever layer is most productive at a given time. | ||

== Parallel Pattern Programming Languages == | == '''Parallel Pattern Programming Languages''' == | ||

Following is a review of "Parallel Pattern Programming Languages" from various researchers and a discussion about how they group in the different parts of the development process. These programming languages are the basis where the design patterns are organized and used, and several proposals have been postulated. | |||

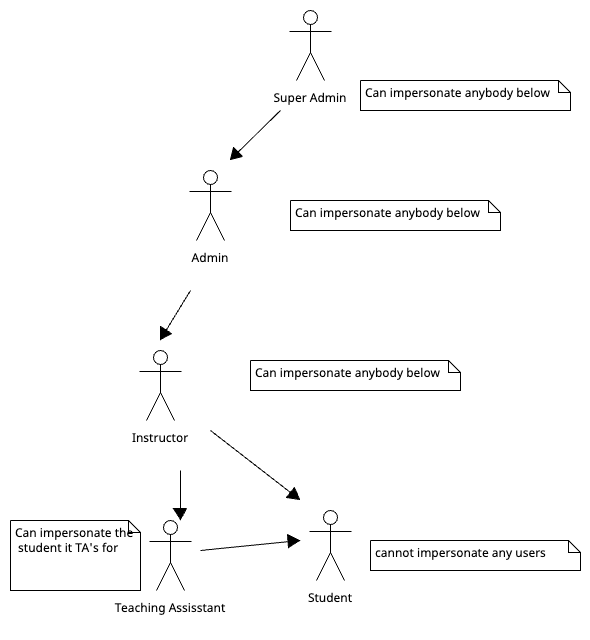

[[File:flow.png|400px|thumb|right|Flow of the Parallel Programming]] | [[File:flow.png|400px|thumb|right|Flow of the Parallel Programming]] | ||

=== [http://www.cise.ufl.edu/research/ParallelPatterns/overview.htm '''Classification by B.L.Massingell'''] === | |||

This considers four levels of patterns design - | This language considers four levels of patterns design - | ||

A) Finding Concurrency | A) Finding Concurrency | ||

B) Algorithm Structure | B) Algorithm Structure | ||

C) Supporting Structures | C) Supporting Structures | ||

D) Implementation Mechanisms | D) Implementation Mechanisms | ||

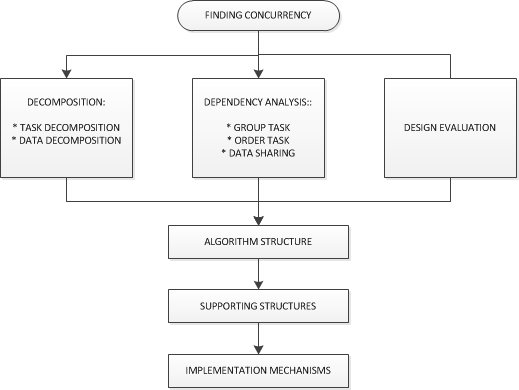

[[File:concurrency.png|300px|thumb|right|Finding Concurrency]] | [[File:concurrency.png|300px|thumb|right|Finding Concurrency]] | ||

A) ''' | A) '''Finding Concurrency''' - considers high-level issues to expose potential concurrency | ||

There are three major design patterns at this level – | There are three major design patterns at this level – | ||

* Decomposition Strategy pattern - focuses on decomposing the problem into parts that can be run simultaneously and also includes “Task Decomposition pattern” (decomposing into concurrent tasks) and “Data Decomposition pattern” (decomposing into data units that can be run independently). | |||

* Dependency Analysis – After using "Decomposing Strategy Pattern" to break into entities, the dependency analysis pattern help the programmer understand how they depend on each other. These dependencies include “Grouping Tasks” (to simplify dependency), “Ordering Tasks” (to satisfy constraints) and “Data Sharing” (among tasks). | |||

* Design Evaluation – This pattern is a consolidation pattern; it is used to evaluate the results of the other patterns in this design space. | |||

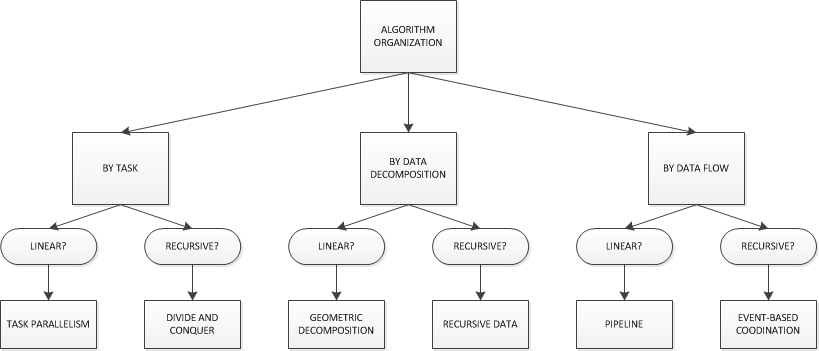

[[File:Algorthm.png|300px|thumb|right|Algorithm Structure]] | [[File:Algorthm.png|300px|thumb|right|Algorithm Structure]] | ||

B) '''Algorithm Structure''' | B) '''Algorithm Structure''' - This steps moves close to the final program and has six Patterns in three sub-groups - | ||

* Organize by flow of data – Pipeline Processing Pattern (decomposing into ordered tasks groups connected by data dependencies) and Asynchronous Decomposition Pattern(decomposed into task groups that interact through asynchronous events). | |||

* Organize by tasks – Embarrassingly Parallel Pattern (decomposing into a set of independent tasks) and Divide-and-conquer Pattern (break the problem into subproblems that are solved separately and then recombined later). | |||

* Organize by data – Geometric Decomposition Pattern (decompose and solve problem into discrete subspaces with the solution for each subspace typically requiring data from a small number of other subspaces) and Recursive Data Pattern (problem is defined in terms of following links through a recursive data structure). | |||

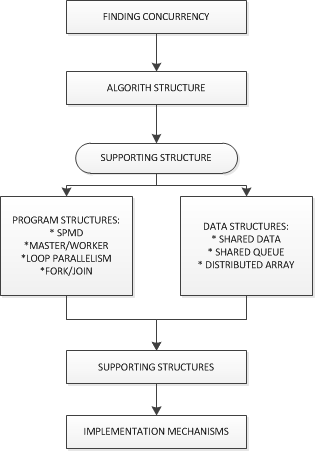

[[File:structure.png|200px|thumb|right|Supporting Structures]] | [[File:structure.png|200px|thumb|right|Supporting Structures]] | ||

The intermediate stage between algorithm structure design space and the machine-oriented “patterns” of the implementation mechanisms space has two types of patterns i) program-structuring constructs and ii) shared data structures. Program-structuring constructs include master/worker (a master process managing worker processes)/ SPMD (Single Program Multiple Data - similar to master/worker with no explicit master process), loop parallelism (distributing parallel loops processing) and Fork/Join (distribution of dynamically created tasks among processing units). Shared data patterns include - ‘shared data’ (explicit shared data among concurrent tasks), ‘shared queue’ (sharing of queue data structure among concurrent tasks), distributed array (distribution of arrays among multiple processing units). | C) '''The Supporting Structures design space''' - The intermediate stage between algorithm structure design space and the machine-oriented “patterns” of the implementation mechanisms space. It has two types of patterns i) program-structuring constructs and ii) shared data structures. Program-structuring constructs include master/worker (a master process managing worker processes)/ SPMD (Single Program Multiple Data - similar to master/worker with no explicit master process), loop parallelism (distributing parallel loops processing) and Fork/Join (distribution of dynamically created tasks among processing units). Shared data patterns include - ‘shared data’ (explicit shared data among concurrent tasks), ‘shared queue’ (sharing of queue data structure among concurrent tasks), distributed array (distribution of arrays among multiple processing units). | ||

D) '''The Implementation Mechanisms design space''' - These patterns deal with common mechanisms for process or thread management (e.g., creating or destroying processes or threads), synchronization to enforce order of events (memory fences, barriers, mutual exclusion, monitors, semaphores) and communication (e.g., message-passing and collective communication). | |||

=== [http://parlab.eecs.berkeley.edu/wiki/patterns/patterns '''Classification by Keutzer and Mattson '''] === | |||

[http://parlab.eecs.berkeley.edu/wiki/patterns/patterns] | |||

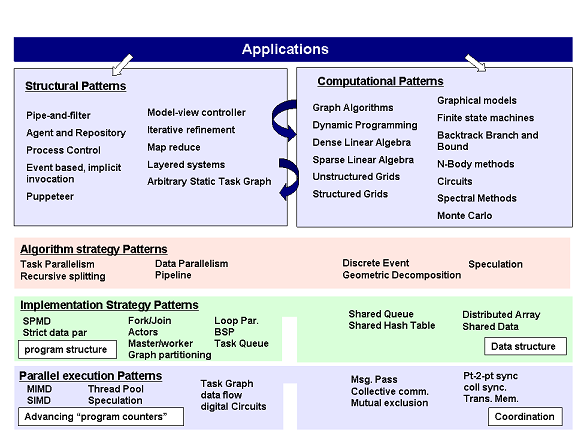

[[File:DesignPatterns.png|400px|thumb|left|Flow of the Parallel Programming]] | [[File:DesignPatterns.png|400px|thumb|left|Flow of the Parallel Programming]] | ||

They define the application in terms of structural | They define the application in terms of structural patterns (that define overall organization) and computational patterns at the top to low level details of parallel algorithm at the bottom. | ||

A) The "structural and computational patterns" at the top are tightly coupled and a designer may iterate among them. In other words, a designer thinks about his or her problem, chooses a structure pattern, then considers the computational patterns required to solve the problem. The selection of computational patterns may suggest a different overall structure for the architecture and force a reconsideration of the appropriate structural patterns. This process, moving between structural and computational patterns, continues until the designer settles on a high level design for the problem. | A) The '''"structural and computational patterns"''' at the top are tightly coupled and a designer may iterate among them. In other words, a designer thinks about his or her problem, chooses a structure pattern, then considers the computational patterns required to solve the problem. The selection of computational patterns may suggest a different overall structure for the architecture and force a reconsideration of the appropriate structural patterns. This process, moving between structural and computational patterns, continues until the designer settles on a high level design for the problem. | ||

The top level “Structural Pattern” and “Computational Pattern” | The top level “Structural Pattern” and “Computational Pattern” corresponds to the “Finding Concurrency” in B.L. Massingell’s classification. The emphasis is on strategically overviewing the problem to find concurrency. | ||

B) The algorithm strategy pattern at the next level is also very similar to Massingell's "Algorithm Structure". These deal with organizing by tasks (Tasks Parallelism, Recursive Splitting), data (Data Parallelism, Geometric Decomposition) and flow of data (Pipeline, Speculation, Discrete Event). | B) The '''algorithm strategy pattern''' at the next level is also very similar to Massingell's "Algorithm Structure". These deal with organizing by tasks (Tasks Parallelism, Recursive Splitting), data (Data Parallelism, Geometric Decomposition) and flow of data (Pipeline, Speculation, Discrete Event). | ||

C) At the bottom two levels “Implementation Strategy” and D) “Parallel Execution Strategy” correspond very closely to the last two design patterns levels in B.L.Massingell. The “Implementation Strategy” deals with “Program Structure” and “Data Structure” patterns, while the “Parallel Execution Strategy” deals with task/creation process and management of communication between tasks. | C) At the bottom two levels '''“Implementation Strategy”''' and D) '''“Parallel Execution Strategy”''' correspond very closely to the last two design patterns levels in B.L.Massingell. The “Implementation Strategy” deals with “Program Structure” and “Data Structure” patterns, while the “Parallel Execution Strategy” deals with task/creation process and management of communication between tasks. | ||

In summary, there appears a commonality | In summary, there appears a commonality when thinking in classifying design patterns for parallel programming. | ||

=== [http://www.cs.uiuc.edu/homes/snir/PPP/skeleton/dpndp.pdf '''Classification by Siu et al '''] === | |||

[http://www.cs.uiuc.edu/homes/snir/PPP/skeleton/dpndp.pdf] | |||

[[File:skeletons.png|300px|thumb|right|Flow of the Parallel Programming]] | [[File:skeletons.png|300px|thumb|right|Flow of the Parallel Programming]] | ||

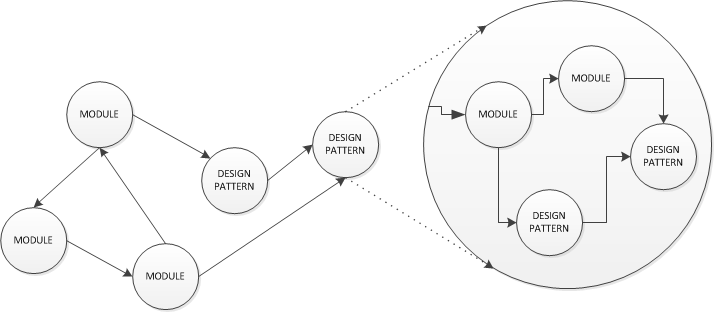

Another line of parallel programming proposed by Siu et | Another line of parallel programming proposed by Siu et al implies the use of so-called skeletons as the building blocks for fast and reliable parallel code. The system provides interfaces to connect patterns that can be reused and interchanged, so the programmer not only has a library of resources, but can experiment with different methods with more ease and time savings. The technique exploits the fact that the patterns have common structures but lack the procedures that are specifics to the applications. | ||

The model represents applications with graphs with the nodes being either a sequential computation or a design pattern. Each of these nodes could be or have a subgrapgh itself. It has the following components: | The model represents applications with graphs, with the nodes being either a sequential computation or a design pattern. Each of these nodes could be or have a subgrapgh itself. It has the following components: | ||

A) '''User Interface''': where the user introduces and specifies the graphs to be used. | |||

B) '''Design Pattern libraries''': where the design patterns are located, typically as classes. | |||

C) '''Code Skeleton''' generator and supporting libraries. | |||

- | == '''The First Level - Decomposition Strategy Patterns'''== | ||

In this section we cover the decomposition strategy using Structural and Computational Design pattern. While many of these patterns may appear familiar, we emphasize, however, the relevance of these paradigms in the parallel programming context, i.e., in splitting the software design into sub-tasks that can be run in parallel. | |||

- | *'''Structural Pattern''' is a high level view of program design. They represent the "box and arcs" that a software architect would draw on a whiteboard when describing an application. The "boxes" of the diagram represent the "computational" kernels. The problem may be viewed in terms of any of the structures patterns described in the glossary in section 8. Identifying the pattern of the problem automatically points to parallelization that is possible. For example - | ||

** pipe-and-filter views a problem as a series of processing boxes with inputs and outputs. Each of the processing box may be run concurrently/in parallel. ('''Examples''' - Vector Processing (loop level pipelining), processing signal through stages, graphics processing of images through stages, shell programming) | |||

** agent-and-repository - views a problem as many independent agents/processes acting on a central data repository. Each of the agents/processes may be run in parallel under the control of a manager. ('''Examples''' - database management system where several users can access/update data, shared file systems). | |||

** process control - views a state being monitored continuously and actions being taken based on the monitor results. The actions may be run concurrently/in parallel. ('''Example''' - auto-pilot navigation systems for airplanes, dynamic range compression software system in audio applications (e.g. hearing aid) that modify signal based on parallel processing of recent history) | |||

** event based implicit invocation - this pattern involves agents reacting to events and generating events. This is quite easily amenable to be handled by parallel processors, since handling many events may be viewed as parallel tasks. | |||

** model-view-controller - the roles of data processing, controlling the data processing and interaction with the user may be easily separated among different processing functions that may be run in parallel. ('''Example''' - typicaly used in GUI displays of data where the data displayed (i.e. the model) is manipulated separately from the view that renders the display and a controller is used to handle multiple requests for display. The model, view and controller tasks may run parallely.) | |||

** iterative refinement - An '''example''' of this is the ocean problem covered in the class. A process to refine the solution iteratively may be broken out among the parallel tasks e.g. red and black dot and anti-parallel processing for the ocean problem. | |||

** map-reduce - involves mapping a single function to independent data sets and reducing these execution to solution. The task of running and reducing may be run in parallel. ('''Examples''' - used by Google to regenerate index of the World Wide Web, counting of word frequency in a text, distributed grep to test every line of the input files to match a given pattern defined by a regular expression) | |||

** puppeteer - involves several agents running in parallel and managed by a puppeteer. This differs from "Agent and Repository" pattern to the extent that the puppeteer is mainly involved in managing interaction among agents rather than access to shared data. ('''Example''' - Simulation of atmospheric conditions by an "Atmospheric Pupeteer" that handles interaction between "air", "cloud" and "ground" agents). | |||

** arbitrary static task graph - is the most general pattern (when nothing else fits) with a general break-up of problem into tasks that may potentially be run in parallel. | |||

- | *'''Computational Patterns''' They are the structural patterns that describe and represent our application and the operations that it comprehends, that is, the building blocks we can divide it upon to be implemented. They can normally be split into smaller patterns in a hierarchical fashion and be adapted to particular hardware and software architectures. At times, the definition of the computational patterns takes to further iterations of other levels of patterns, most commonly the structural ones, as the software architects start pealing through the layers of the application. Some '''examples''' of this - | ||

** Backtrack, branch and bound: these are decision patterns that look for values in the history of a group of variable and act according to their value. They will become ours if, switch or threads. | |||

** Circuits, where the components are Boolean in fashion; like memory, or flip-flops. | |||

** Dynamic programming, which are the typical problems of "size N". It is important to define these structures well to get the best parallelisms. These cases are normally traverses or searches. (Wavefront pattern refers to dynamic programming. It is called as such because it resembles a wave moving across a diagonal of a square with computation points increasing initially till the middle of the diagonal and then decreasing as the opposite vertex is reached. Typical '''examples''' of dynamic programming problems that are very amenable to parallel programming are a) shortest path from two vertices of graph using Bellman-Ford/Floyd-Warshall/Dijkstra algorithms, b) string algorithms for finding longest common/increasing subsequence between two strings) | |||

** Linear algebras, where the problems can be organized in mathematical structures, like for instance matrices, and arithmetic expressions. They can be spares or dense, depending if their elements are mostly zeroes or not. Their operations are typically well defined and data can be prefetch, which means normally many opportunities for finding ways to repetition, iteration and parallelization. | |||

** Automatas and Finite State Machines, where the inputs are treated with machines that can be virtual or not. Associated often to hardware, like FPGAs, they are also common in software, like regular expressions, or simulations. Parallelization will strongly depend on the architecture of the machine that manages the application. | |||

** [https://engineering.purdue.edu/~milind/docs/tr-09-05.pdf, Graphical Algorithms and Models], that comprehend all cases where the applications are better represented as graphs. These graphs nodes, vertices and edges and can be object of a number of operations, like traversing, that can be easily parallelized. | |||

** Approximations, like Montecarlo, where we use sampling or other approximation techniques, that can allow us to analyze a problem with a reduce complexity. | |||

** Transformations: where the data is transformed so it can be analyzed or processed in a better way using some mathematical techniques, like, for instance, using a Fourier transform. This process presents lots of opportunities to find parallelization. (Multidomain patterns use transformations to change the domain of the problem where it may be easier to solve it. A common '''example''' of this is in modeling earth's atmospheric conditions using Navier Stokes differential equations. The problem formulation in latitude/longitude dimensions requires very small grid size for better stability and does not capture spherical nature (of earth) accurately. The original problem in latitude/logitude dimension is transformed first to latitude/wave number pair using Fast-Fourier transformation and then to Spectral domain using Legendre transformation. The reverse transformation is used to go back to the original dimensions). | |||

** Meshes, which can be structured or not, depending on the geometrical coupling between their components. Meshes are systems that are defined geometrically and are represented by a system of equations. Most typical '''example''' of Meshes is in solving Partial Differential Equations (PDE) with boundary conditions using Finite Difference Methods. PDE based problems are common in physical phenomena (Navier Stokes Equations for fluid dynamics, Maxwell's equations for electromagnetic field, Linear elasticity equations) and financial derivatives modeling (Black Scholes Equations). The following patterns are variants of Meshes - | |||

*** Multigrid pattern - Solves a system of equations by varying the granularity of the grid from course to fine, and vice-versa, at various steps in solution. This avoids oscillatory behavior of the solution | |||

*** Adaptive mesh refinement - Varies the size of the mesh at specific areas depending upon the accuracy requirement in each specific area. | |||

*** Odd-Even Communication Group - Sequentially dependent points in an algorithm are broken down into odd-even groups and parallelized. '''Example:''' Red-Black Dots in Ocean Problem (Gauss-Seidel) | |||

*** Transpositional Communication Group - Rows and columns of solution points are transposed at different steps in computation with the row dimension split up for parallelization | |||

- | == '''The Second level - Algorithm Strategy Patterns''' == | ||

Once a structural/computational pattern has been identified there may be a variety of algorithms that may be used. The concurrences are more directly identified at this stage. | |||

- | The most common algorithm strategy patterns include - | ||

*Task parallelism - Simply means running independent tasks parallely. An '''example''' of this is the embarrassingly parallel pattern. | |||

*Pipeline - Running serial operations on a series of data set in parallel because one data set item does not need to wait for the operations to finish on the data item ahead. | |||

*Discrete event - Multiple tasks interacting via an event handler with the handling of those event run in parallel by each task. | |||

*Speculation - Speculatively and parallely run a series of tasks ahead and commit some of the parallel task as required by the outcome of previous step. | |||

*Data parallelism -A single stream of instructions is applied to each element of data structure. | |||

*Recursive splitting - Recursively generated task can be optimized by assigning these to parallel processing, using a balanced data structure/fork-join or task queue implementation and improving locality. | |||

**Geometric decomposition - Breaks the problem into data chunks with each each data chunk processed parallely and data chunks exchanging data at boundaries. | |||

== The Third Level - Implementation Strategy Patterns == | == '''The Third Level - Implementation Strategy Patterns''' == | ||

Here is where the system adapts to the application, and vice-versa. It is in plain words the source code and includes its organization and that of the structures that we need to carry it on. This level is where we define how the threads of our code are going to be executed, and it is where we may map and implement the concurrences that we have found in the upper levels in software procedures. We will have a choice of software kind and language that will determine how our concurrency gets implemented. There are two main groups, depending on what the scope of the pattern is: program structure patterns and data structure patterns. | Here is where the system adapts to the application, and vice-versa. It is in plain words the source code and includes its organization and that of the structures that we need to carry it on. This level is where we define how the threads of our code are going to be executed, and it is where we may map and implement the concurrences that we have found in the upper levels in software procedures. We will have a choice of software kind and language that will determine how our concurrency gets implemented. There are two main groups, depending on what the scope of the pattern is: program structure patterns and data structure patterns. | ||

Program structured: | *'''Program structured:''' | ||

** Single Program Multiple Data, where a single operation is performed over many sets of data and the programs have many instances. These are the patterns to define process and threads IDs and ranks. | |||

** Data Parallel Strictly comprehends a big set of patterns and algorithms for concurrent operations with data. | |||

** Fork/Join are thread mechanisms, where tasks are created in parallel, executed and them put together with a joining function. | |||

** Actors are objects, which include variables and their operations and normally interact with message passing. | |||

** Master-workers is an architecture where a central process would be assigning the tasks to its "workers", parallel processing elements, that would return the results to the master when finished, and be assigned a new task. | |||

** Task Queues, where the tasks that are running in parallel are independent and can be organized in queues and taken by the scheduler. Similar to master-workers, but with no "master" overhead or limited to a single "master" thread. | |||

** Graph partitioning, oriented to exploit concurrency in graphs. | |||

** Loop-level parallelism, or patterns that deal with computing intensive loops and how to take advantage of the application particularities to optimize parallelism. OpenMP falls in this bucket. | |||

** Computational structures, like BSP, where the system is organize in steps, each one of it with local data access. | |||

*'''Data structures:''' | |||

** Shared queues that manages data streams which tasks are executed at the same time. | |||

** Distributed Arrays are a common structure and presents a large number of possibilities for concurrency.The drawback is that managing and maintaining these structures could be cumbersome and require lots of work, in particular when they are shared by a large number of processes. | |||

** Shared hash tables are also able to perform parallelism efficiently, but also present a similar problem as with arrays about the managing of locality and indexing. | |||

** Shared data and concurrent access between processes, that are normally managed by libraries or plain APIs, to better manage its complexity. Often, to improve parallelism we want to locate the data in a shared address space and use synchronization. | |||

- | == '''The Fourth Level - Parallel Execution Patterns''' == | ||

These patterns deal with how a parallel algorithm is translated into software elements to fully exploit the specific hardware elements. . They are the strategies and facilities to build, support and synchronize our tasks. These include - (1) Process and thread control patterns (2) Coordination pattern | |||

- | *'''Process and thread controls patterns that advance a program counter -''' | ||

** MIMD pattern - Multiple process with each one acting on their own data and coordinating communication through discrete events | |||

** Data flow - Computation steps are viewed as direct acylice graph of thread that are mapped to parallel computers | |||

** SIMD pattern - Single Stream of instructions is applied to multiple data on different processing elements | |||

** Thread pool - Rather than creating and destroying threads (which are expensive operations), a pool of threads is maintained. A thread is returned to a pool after use and steals works to ensure a balanced work load | |||

** Speculative execution - Compiler or runtime system is enabled to do speculative executions and to roll back those executions that are not required | |||

** Digital circuits - Functionality is hard wired for concurrency in digital circuits and available as separate instruction set. | |||

- | *'''Patterns that deal with coordination of threads or processes (all well known)-''' | ||

** Message passing - Passing messages between process that need to be synchronized | |||

** Collective Communication - Message is passed to a group of (rather than individual) threads. Example reductions, broadcasts, prefix sums, and scatter/gather | |||

** Mutual exclusion - Block of code or memory that can be exeucted by one process at a time | |||

** Point-to-point and collective synchronization - Mutex locks to enforce order of events for two threads or a collection of threads. | |||

** Transactional memory - Folds into memory to detect access conflicts and roll back transactions when a conflict occurs. This is speculative parallelism and effective when conflicts are rare | |||

== '''Examples''' == | |||

In this section we will go over some examples of implementations of these patterns. | |||

=== '''Master/Workers: The calculation of pi''' === | |||

As stated above, master/workers is an examples of Implementation Strategic Patterns where a main process controls or uses a number of other processes in parallel, the workers, to finish to divide a task and then obtains a results out of those workers. | |||

An example of its use can be seen when trying to calculate an approximation to the number pi. The strategy is well- known: We have a circle inside a square and we can calculate the number pi by relating the areas: | |||

pi = 4 * (circle area/square area) | |||

The way this calculation gets parallelized is by following this algorithm: | |||

* Generate random numbers | |||

* Check if the number is located in the circle (and the square), or only the square. | |||

* Calculate pi as 4*(points in the circle and the square/number of points in the square) | |||

The bigger the number of points, the better the approximation. Below, we can see a pseudo-code | |||

points = 100000; // number of points. | |||

p = WORKERS; // number of workers | |||

nWorkers = points / p; //number of points per worker | |||

countinCircle = 0; // one per worker | |||

// worker routine: | |||

for (i = 0; i < nWorkers; i++) { | |||

// generation of 2 random numbers inside the square; | |||

a = first random number; | |||

b = second random number; | |||

if (a, b) lies inside the circle | |||

countinCircle++; | |||

} | |||

// master routine: | |||

gets countinCircle values from the workers | |||

computes PI as PI = 4.0 * countinCircle/points; | |||

== The | === '''Branch and Bound Aplication: The Knapsack Problem''' === | ||

[http://en.wikipedia.org/wiki/Branch_and_bound, Branch and bound] is a technique for problem optimization. It lists all the possibly solutions to the problem, splits and organizes them in branches and then discards the non-suitable branches according to particular bounding criteria, normally upper and lower bounding. | |||

An example of this algorithm is the [http://en.wikipedia.org/wiki/Knapsack_problem, knapsack problem], where we have a set of things, characterized by their weight and value, and we want to know which combination of them has the closest value to a given threshold, and that value is less or equal the threshold. The problem could be one-dimensional (just weight) or mufti-dimensional (weight, size, value...) and items can be repeated or not. | |||

One would think that the fact that it is organized as a tree gives us opportunities to find and exploit parallelism. The truth is that many times the dependencies among the data that makes the set are too high to do it, hence why we chose this example. | |||

Kamil et al, propose a [http://www.cs.berkeley.edu/~ejr/GSI/cs267-s04/homework-3-results/knap/hw3.pdf, '''study and solution'''] to one common formulation of the problem, the 0-1 knapsack problem, where the items are restricted to the values 0 and 1. | |||

=== '''MapReduce''' === | |||

As mentioned before, [http://en.wikipedia.org/wiki/MapReduce, MapReduce] is a Google tool oriented to work with clusters of computers, as it adapts very well to distributed systems. It is another example of Master/workers and it has two steps: '''Map''' , where our problem is divides into sub-problems that are assigned to workers; and '''Reduce''', where the master node processes the results coming from the workers. | |||

Here is an example of the two functions, in pseudocode, that would count the number of times a particular picture 'pic' shows up in a set of documents: | |||

MAP(pic, setDocuments): // pic is the picture, setDocuments is a group of documents, where we are looking | |||

for each pic in setDocument: | |||

send(pic, TRUE); | |||

REDUCE(pic, picCount): //pic is a picture and picCount is the count. | |||

sum = 0; | |||

for each pc in partialCounts: | |||

sum += pc; | |||

send(pic, sum); | |||

== '''Glossary Of Patterns'''== | |||

==='''Structural Patterns'''=== | |||

== | |||

'''Structural Patterns''' | |||

i) Pipe-and-filter | i) Pipe-and-filter | ||

| Line 242: | Line 232: | ||

x) Arbitrary static task graph | x) Arbitrary static task graph | ||

'''Computational Patterns''' | ==='''Computational Patterns'''=== | ||

i) Backtrack, branch and bound | i) Backtrack, branch and bound | ||

| Line 258: | Line 248: | ||

xiii) Unstructured mesh | xiii) Unstructured mesh | ||

'''Algorithm Strategy Patterns''' | ==='''Algorithm Strategy Patterns'''=== | ||

i) Task parallelism | i) Task parallelism | ||

| Line 268: | Line 258: | ||

vii)Geometic decomposition | vii)Geometic decomposition | ||

'''Implementation | ==='''Implementation Strategy Patterns'''=== | ||

Structured as a Program: | Structured as a Program: | ||

| Line 287: | Line 277: | ||

ii) Distributed array | ii) Distributed array | ||

iii) Shared hash table | iii) Shared hash table | ||

iv) Shared data | iv) Shared data | ||

'''Parallel Execution Patterns''' | ==='''Parallel Execution Patterns'''=== | ||

With program counter advance: | With program counter advance: | ||

| Line 309: | Line 299: | ||

vi) Transactional memory | vi) Transactional memory | ||

== References == | == '''References and External Links''' == | ||

1."Parallel Programming with a Pattern Language", Berna Massingill, et al. | 1."Parallel Programming with a Pattern Language", Berna Massingill, et al. | ||

| Line 320: | Line 310: | ||

5. "Building Parallel Applications using Design Patterns", Dhrubajyoti Goswami, et al. | 5. "Building Parallel Applications using Design Patterns", Dhrubajyoti Goswami, et al. | ||

6. "Patterns for parallel programming", Timothy G. Mattson, Beverly A. Sanders, Berna Massingill, Pearson Education, 2005 | |||

7. [http://www.cs.uiuc.edu/homes/snir/PPP/ Resources on Parallel Pattern] | |||

8 [http://www.cise.ufl.edu/research/ParallelPatterns/overview.htm Massingill-A pattern language of parallel programming] | |||

9. [http://parlab.eecs.berkeley.edu/wiki/patterns/patterns Keutzer and Mattson - A Pattern Language for Parallel Programming ver2.0 | |||

] | ] | ||

10. [http://msdn.microsoft.com/en-us/library/dd492418.aspx/Link Parallel Patterns Library (PPL) | |||

] | ] | ||

11 [http://www.cs.uiuc.edu/homes/snir/PPP/skeleton/dpndp.pdf Design Patterns For Parallel Programming] | |||

12. [https://wiki.engr.illinois.edu/display/transformation/Pattern+Catalog] Pattern Catalog (Illinois University) | |||

13. [http://www.cs.uiuc.edu/homes/snir/PPP/patterns/patterns.ppt] Parallel Programming Patterns, Eun-Gyu Kim, June 10, 2004 | |||

14. [http://www.upcrc.illinois.edu/patterns.html] UPCRC Illinois University | |||

15. [https://engineering.purdue.edu/~milind/docs/tr-09-05.pdf] Amorphous Data-parallelism in Irregular Algorithms, Pingali et al. | |||

16. [http://code.google.com/edu/parallel/mapreduce-tutorial.html, MapReduce Tutorial'] | |||

17. [http://en.wikipedia.org/wiki/Parallel_computing, Parallel Computing] | |||

18. [http://en.wikipedia.org/wiki/Branch_and_bound, Branch and Bound] | |||

19. [http://www.cs.berkeley.edu/~ejr/GSI/cs267-s04/homework-3-results/knap/hw3.pdf, Knapsack Problem] | |||

20. [http://www.cs.wustl.edu/~schmidt/patterns-ace.html, Patterns for Concurrent, Parallel and Distributed Systems] | |||

== '''Quiz''' == | |||

A design pattern is ... | |||

# A description of a solution to a frequently occurring problem in some domain. | |||

# A routine that is repeated often. | |||

# A hierarchical approach to software architecture. | |||

Which of the following does not belong to the Massingell's Classification of patterns. | |||

# Finding Concurrency | |||

# Parallel Circulation | |||

# Implementation Mechanism | |||

# Algorithm Structure | |||

Task parallelism consists of | |||

# Having tasks that come in parallel to the processor. | |||

# Having tasks that are concurrent. | |||

# Running independent tasks in parallel | |||

Recursive splitting is an example of | |||

# Computational Patterns | |||

# Structural Patterns | |||

# Algorithm Strategy Patterns | |||

Forks and joins are used mainly when | |||

# Using Implementation Strategy patterns | |||

# Using Algorithm Strategy Patterns | |||

# Using Computational Patterns | |||

The application design can iterate between structural and computational patterns, | |||

# Never, they are completely separate tasks. | |||

# At times, if the number of processes is more than 1000. | |||

# All the times. Both patterns frequently complement each other. | |||

According to the Sui et al model, | |||

# There can be a sub-design pattern embedded in a module. | |||

# A module is a non divisible structure. | |||

# Design patterns and modules are normally used in parallel. | |||

In a Discrete event pattern multiple tasks can be run interacting via an event handler with the handling of the events by each tasks running parallely. | |||

# True | |||

# False | |||

Graph structures can be parallelized when: | |||

# When the graphs have been done with a parallel computer. | |||

# Only in the number of vortexes is a power of 2. | |||

# At times, when their structures allow it. | |||

'''Answers''' | |||

* 1 | |||

* 2 | |||

* 3 | |||

* 3 | |||

* 1 | |||

* 3 | |||

* 1 | |||

* True | |||

* 3 | |||

Latest revision as of 16:28, 20 February 2012

Patterns of parallel programming

Overview

A Design Pattern is a description of a solution to a frequently occurring problem in some domain. The description makes it easy for the reader to quickly understand both the problem and the proposed solution and, because the pattern has a name, a collection of patterns provides a vocabulary with which to talk about these solutions. They were first postulated for City Planning applications, but they promptly extended to Computer Science and other disciplines The idea would always be to have a library of reusable resources that would help us in the development of our product.

As an example – Dense Linear Algebra is a Computational design pattern for parallel programming. Under this pattern, a computation is organized as a sequence of arithmetic expressions acting on dense arrays of data. The operations and data access patterns are well defined mathematically, so data can be prefetched and CPUs execute close to their theoretically allowed peak performance.

Parallel programming design patterns have been identified at various levels of software development, from high-level patterns that describe how an application is organized, to mid-level patterns about specific classes of computations; and low level patterns describing specific execution strategies. Related to these classifications, there are also available “pattern languages” from several researchers, that guide the software developer through hierarchy of parallel programming design development.

In this article we intend to first cover three pattern languages for parallel programming in some detail. These are attempts to classify these patterns for us then to dwell on commonalities between these approaches. Thereafter, we cover a broad range of parallel programming design patterns, highlighting the commonalities and differences.

It is important to appreciate that many design problems do not lend themselves to a top-down or bottom-up analysis. In many cases, the pathway through our patterns will be bounce around between layers with the designer working at whichever layer is most productive at a given time.

Parallel Pattern Programming Languages

Following is a review of "Parallel Pattern Programming Languages" from various researchers and a discussion about how they group in the different parts of the development process. These programming languages are the basis where the design patterns are organized and used, and several proposals have been postulated.

Classification by B.L.Massingell

This language considers four levels of patterns design - A) Finding Concurrency B) Algorithm Structure C) Supporting Structures D) Implementation Mechanisms

A) Finding Concurrency - considers high-level issues to expose potential concurrency There are three major design patterns at this level –

- Decomposition Strategy pattern - focuses on decomposing the problem into parts that can be run simultaneously and also includes “Task Decomposition pattern” (decomposing into concurrent tasks) and “Data Decomposition pattern” (decomposing into data units that can be run independently).

- Dependency Analysis – After using "Decomposing Strategy Pattern" to break into entities, the dependency analysis pattern help the programmer understand how they depend on each other. These dependencies include “Grouping Tasks” (to simplify dependency), “Ordering Tasks” (to satisfy constraints) and “Data Sharing” (among tasks).

- Design Evaluation – This pattern is a consolidation pattern; it is used to evaluate the results of the other patterns in this design space.

B) Algorithm Structure - This steps moves close to the final program and has six Patterns in three sub-groups -

- Organize by flow of data – Pipeline Processing Pattern (decomposing into ordered tasks groups connected by data dependencies) and Asynchronous Decomposition Pattern(decomposed into task groups that interact through asynchronous events).

- Organize by tasks – Embarrassingly Parallel Pattern (decomposing into a set of independent tasks) and Divide-and-conquer Pattern (break the problem into subproblems that are solved separately and then recombined later).

- Organize by data – Geometric Decomposition Pattern (decompose and solve problem into discrete subspaces with the solution for each subspace typically requiring data from a small number of other subspaces) and Recursive Data Pattern (problem is defined in terms of following links through a recursive data structure).

C) The Supporting Structures design space - The intermediate stage between algorithm structure design space and the machine-oriented “patterns” of the implementation mechanisms space. It has two types of patterns i) program-structuring constructs and ii) shared data structures. Program-structuring constructs include master/worker (a master process managing worker processes)/ SPMD (Single Program Multiple Data - similar to master/worker with no explicit master process), loop parallelism (distributing parallel loops processing) and Fork/Join (distribution of dynamically created tasks among processing units). Shared data patterns include - ‘shared data’ (explicit shared data among concurrent tasks), ‘shared queue’ (sharing of queue data structure among concurrent tasks), distributed array (distribution of arrays among multiple processing units).

D) The Implementation Mechanisms design space - These patterns deal with common mechanisms for process or thread management (e.g., creating or destroying processes or threads), synchronization to enforce order of events (memory fences, barriers, mutual exclusion, monitors, semaphores) and communication (e.g., message-passing and collective communication).

Classification by Keutzer and Mattson

They define the application in terms of structural patterns (that define overall organization) and computational patterns at the top to low level details of parallel algorithm at the bottom.

A) The "structural and computational patterns" at the top are tightly coupled and a designer may iterate among them. In other words, a designer thinks about his or her problem, chooses a structure pattern, then considers the computational patterns required to solve the problem. The selection of computational patterns may suggest a different overall structure for the architecture and force a reconsideration of the appropriate structural patterns. This process, moving between structural and computational patterns, continues until the designer settles on a high level design for the problem.

The top level “Structural Pattern” and “Computational Pattern” corresponds to the “Finding Concurrency” in B.L. Massingell’s classification. The emphasis is on strategically overviewing the problem to find concurrency.

B) The algorithm strategy pattern at the next level is also very similar to Massingell's "Algorithm Structure". These deal with organizing by tasks (Tasks Parallelism, Recursive Splitting), data (Data Parallelism, Geometric Decomposition) and flow of data (Pipeline, Speculation, Discrete Event).

C) At the bottom two levels “Implementation Strategy” and D) “Parallel Execution Strategy” correspond very closely to the last two design patterns levels in B.L.Massingell. The “Implementation Strategy” deals with “Program Structure” and “Data Structure” patterns, while the “Parallel Execution Strategy” deals with task/creation process and management of communication between tasks.

In summary, there appears a commonality when thinking in classifying design patterns for parallel programming.

Classification by Siu et al

Another line of parallel programming proposed by Siu et al implies the use of so-called skeletons as the building blocks for fast and reliable parallel code. The system provides interfaces to connect patterns that can be reused and interchanged, so the programmer not only has a library of resources, but can experiment with different methods with more ease and time savings. The technique exploits the fact that the patterns have common structures but lack the procedures that are specifics to the applications. The model represents applications with graphs, with the nodes being either a sequential computation or a design pattern. Each of these nodes could be or have a subgrapgh itself. It has the following components:

A) User Interface: where the user introduces and specifies the graphs to be used.

B) Design Pattern libraries: where the design patterns are located, typically as classes.

C) Code Skeleton generator and supporting libraries.

The First Level - Decomposition Strategy Patterns

In this section we cover the decomposition strategy using Structural and Computational Design pattern. While many of these patterns may appear familiar, we emphasize, however, the relevance of these paradigms in the parallel programming context, i.e., in splitting the software design into sub-tasks that can be run in parallel.

- Structural Pattern is a high level view of program design. They represent the "box and arcs" that a software architect would draw on a whiteboard when describing an application. The "boxes" of the diagram represent the "computational" kernels. The problem may be viewed in terms of any of the structures patterns described in the glossary in section 8. Identifying the pattern of the problem automatically points to parallelization that is possible. For example -

- pipe-and-filter views a problem as a series of processing boxes with inputs and outputs. Each of the processing box may be run concurrently/in parallel. (Examples - Vector Processing (loop level pipelining), processing signal through stages, graphics processing of images through stages, shell programming)

- agent-and-repository - views a problem as many independent agents/processes acting on a central data repository. Each of the agents/processes may be run in parallel under the control of a manager. (Examples - database management system where several users can access/update data, shared file systems).

- process control - views a state being monitored continuously and actions being taken based on the monitor results. The actions may be run concurrently/in parallel. (Example - auto-pilot navigation systems for airplanes, dynamic range compression software system in audio applications (e.g. hearing aid) that modify signal based on parallel processing of recent history)

- event based implicit invocation - this pattern involves agents reacting to events and generating events. This is quite easily amenable to be handled by parallel processors, since handling many events may be viewed as parallel tasks.

- model-view-controller - the roles of data processing, controlling the data processing and interaction with the user may be easily separated among different processing functions that may be run in parallel. (Example - typicaly used in GUI displays of data where the data displayed (i.e. the model) is manipulated separately from the view that renders the display and a controller is used to handle multiple requests for display. The model, view and controller tasks may run parallely.)

- iterative refinement - An example of this is the ocean problem covered in the class. A process to refine the solution iteratively may be broken out among the parallel tasks e.g. red and black dot and anti-parallel processing for the ocean problem.

- map-reduce - involves mapping a single function to independent data sets and reducing these execution to solution. The task of running and reducing may be run in parallel. (Examples - used by Google to regenerate index of the World Wide Web, counting of word frequency in a text, distributed grep to test every line of the input files to match a given pattern defined by a regular expression)

- puppeteer - involves several agents running in parallel and managed by a puppeteer. This differs from "Agent and Repository" pattern to the extent that the puppeteer is mainly involved in managing interaction among agents rather than access to shared data. (Example - Simulation of atmospheric conditions by an "Atmospheric Pupeteer" that handles interaction between "air", "cloud" and "ground" agents).

- arbitrary static task graph - is the most general pattern (when nothing else fits) with a general break-up of problem into tasks that may potentially be run in parallel.

- Computational Patterns They are the structural patterns that describe and represent our application and the operations that it comprehends, that is, the building blocks we can divide it upon to be implemented. They can normally be split into smaller patterns in a hierarchical fashion and be adapted to particular hardware and software architectures. At times, the definition of the computational patterns takes to further iterations of other levels of patterns, most commonly the structural ones, as the software architects start pealing through the layers of the application. Some examples of this -

- Backtrack, branch and bound: these are decision patterns that look for values in the history of a group of variable and act according to their value. They will become ours if, switch or threads.

- Circuits, where the components are Boolean in fashion; like memory, or flip-flops.

- Dynamic programming, which are the typical problems of "size N". It is important to define these structures well to get the best parallelisms. These cases are normally traverses or searches. (Wavefront pattern refers to dynamic programming. It is called as such because it resembles a wave moving across a diagonal of a square with computation points increasing initially till the middle of the diagonal and then decreasing as the opposite vertex is reached. Typical examples of dynamic programming problems that are very amenable to parallel programming are a) shortest path from two vertices of graph using Bellman-Ford/Floyd-Warshall/Dijkstra algorithms, b) string algorithms for finding longest common/increasing subsequence between two strings)

- Linear algebras, where the problems can be organized in mathematical structures, like for instance matrices, and arithmetic expressions. They can be spares or dense, depending if their elements are mostly zeroes or not. Their operations are typically well defined and data can be prefetch, which means normally many opportunities for finding ways to repetition, iteration and parallelization.

- Automatas and Finite State Machines, where the inputs are treated with machines that can be virtual or not. Associated often to hardware, like FPGAs, they are also common in software, like regular expressions, or simulations. Parallelization will strongly depend on the architecture of the machine that manages the application.

- Graphical Algorithms and Models, that comprehend all cases where the applications are better represented as graphs. These graphs nodes, vertices and edges and can be object of a number of operations, like traversing, that can be easily parallelized.

- Approximations, like Montecarlo, where we use sampling or other approximation techniques, that can allow us to analyze a problem with a reduce complexity.

- Transformations: where the data is transformed so it can be analyzed or processed in a better way using some mathematical techniques, like, for instance, using a Fourier transform. This process presents lots of opportunities to find parallelization. (Multidomain patterns use transformations to change the domain of the problem where it may be easier to solve it. A common example of this is in modeling earth's atmospheric conditions using Navier Stokes differential equations. The problem formulation in latitude/longitude dimensions requires very small grid size for better stability and does not capture spherical nature (of earth) accurately. The original problem in latitude/logitude dimension is transformed first to latitude/wave number pair using Fast-Fourier transformation and then to Spectral domain using Legendre transformation. The reverse transformation is used to go back to the original dimensions).

- Meshes, which can be structured or not, depending on the geometrical coupling between their components. Meshes are systems that are defined geometrically and are represented by a system of equations. Most typical example of Meshes is in solving Partial Differential Equations (PDE) with boundary conditions using Finite Difference Methods. PDE based problems are common in physical phenomena (Navier Stokes Equations for fluid dynamics, Maxwell's equations for electromagnetic field, Linear elasticity equations) and financial derivatives modeling (Black Scholes Equations). The following patterns are variants of Meshes -

- Multigrid pattern - Solves a system of equations by varying the granularity of the grid from course to fine, and vice-versa, at various steps in solution. This avoids oscillatory behavior of the solution

- Adaptive mesh refinement - Varies the size of the mesh at specific areas depending upon the accuracy requirement in each specific area.

- Odd-Even Communication Group - Sequentially dependent points in an algorithm are broken down into odd-even groups and parallelized. Example: Red-Black Dots in Ocean Problem (Gauss-Seidel)

- Transpositional Communication Group - Rows and columns of solution points are transposed at different steps in computation with the row dimension split up for parallelization

The Second level - Algorithm Strategy Patterns

Once a structural/computational pattern has been identified there may be a variety of algorithms that may be used. The concurrences are more directly identified at this stage.

The most common algorithm strategy patterns include -

- Task parallelism - Simply means running independent tasks parallely. An example of this is the embarrassingly parallel pattern.

- Pipeline - Running serial operations on a series of data set in parallel because one data set item does not need to wait for the operations to finish on the data item ahead.

- Discrete event - Multiple tasks interacting via an event handler with the handling of those event run in parallel by each task.

- Speculation - Speculatively and parallely run a series of tasks ahead and commit some of the parallel task as required by the outcome of previous step.

- Data parallelism -A single stream of instructions is applied to each element of data structure.

- Recursive splitting - Recursively generated task can be optimized by assigning these to parallel processing, using a balanced data structure/fork-join or task queue implementation and improving locality.

- Geometric decomposition - Breaks the problem into data chunks with each each data chunk processed parallely and data chunks exchanging data at boundaries.

The Third Level - Implementation Strategy Patterns

Here is where the system adapts to the application, and vice-versa. It is in plain words the source code and includes its organization and that of the structures that we need to carry it on. This level is where we define how the threads of our code are going to be executed, and it is where we may map and implement the concurrences that we have found in the upper levels in software procedures. We will have a choice of software kind and language that will determine how our concurrency gets implemented. There are two main groups, depending on what the scope of the pattern is: program structure patterns and data structure patterns.

- Program structured:

- Single Program Multiple Data, where a single operation is performed over many sets of data and the programs have many instances. These are the patterns to define process and threads IDs and ranks.

- Data Parallel Strictly comprehends a big set of patterns and algorithms for concurrent operations with data.

- Fork/Join are thread mechanisms, where tasks are created in parallel, executed and them put together with a joining function.

- Actors are objects, which include variables and their operations and normally interact with message passing.

- Master-workers is an architecture where a central process would be assigning the tasks to its "workers", parallel processing elements, that would return the results to the master when finished, and be assigned a new task.

- Task Queues, where the tasks that are running in parallel are independent and can be organized in queues and taken by the scheduler. Similar to master-workers, but with no "master" overhead or limited to a single "master" thread.

- Graph partitioning, oriented to exploit concurrency in graphs.

- Loop-level parallelism, or patterns that deal with computing intensive loops and how to take advantage of the application particularities to optimize parallelism. OpenMP falls in this bucket.

- Computational structures, like BSP, where the system is organize in steps, each one of it with local data access.

- Data structures:

- Shared queues that manages data streams which tasks are executed at the same time.

- Distributed Arrays are a common structure and presents a large number of possibilities for concurrency.The drawback is that managing and maintaining these structures could be cumbersome and require lots of work, in particular when they are shared by a large number of processes.

- Shared hash tables are also able to perform parallelism efficiently, but also present a similar problem as with arrays about the managing of locality and indexing.

- Shared data and concurrent access between processes, that are normally managed by libraries or plain APIs, to better manage its complexity. Often, to improve parallelism we want to locate the data in a shared address space and use synchronization.

The Fourth Level - Parallel Execution Patterns

These patterns deal with how a parallel algorithm is translated into software elements to fully exploit the specific hardware elements. . They are the strategies and facilities to build, support and synchronize our tasks. These include - (1) Process and thread control patterns (2) Coordination pattern

- Process and thread controls patterns that advance a program counter -

- MIMD pattern - Multiple process with each one acting on their own data and coordinating communication through discrete events

- Data flow - Computation steps are viewed as direct acylice graph of thread that are mapped to parallel computers

- SIMD pattern - Single Stream of instructions is applied to multiple data on different processing elements

- Thread pool - Rather than creating and destroying threads (which are expensive operations), a pool of threads is maintained. A thread is returned to a pool after use and steals works to ensure a balanced work load

- Speculative execution - Compiler or runtime system is enabled to do speculative executions and to roll back those executions that are not required

- Digital circuits - Functionality is hard wired for concurrency in digital circuits and available as separate instruction set.

- Patterns that deal with coordination of threads or processes (all well known)-

- Message passing - Passing messages between process that need to be synchronized

- Collective Communication - Message is passed to a group of (rather than individual) threads. Example reductions, broadcasts, prefix sums, and scatter/gather

- Mutual exclusion - Block of code or memory that can be exeucted by one process at a time

- Point-to-point and collective synchronization - Mutex locks to enforce order of events for two threads or a collection of threads.

- Transactional memory - Folds into memory to detect access conflicts and roll back transactions when a conflict occurs. This is speculative parallelism and effective when conflicts are rare

Examples

In this section we will go over some examples of implementations of these patterns.

Master/Workers: The calculation of pi

As stated above, master/workers is an examples of Implementation Strategic Patterns where a main process controls or uses a number of other processes in parallel, the workers, to finish to divide a task and then obtains a results out of those workers. An example of its use can be seen when trying to calculate an approximation to the number pi. The strategy is well- known: We have a circle inside a square and we can calculate the number pi by relating the areas:

pi = 4 * (circle area/square area)

The way this calculation gets parallelized is by following this algorithm:

- Generate random numbers

- Check if the number is located in the circle (and the square), or only the square.

- Calculate pi as 4*(points in the circle and the square/number of points in the square)

The bigger the number of points, the better the approximation. Below, we can see a pseudo-code

points = 100000; // number of points. p = WORKERS; // number of workers nWorkers = points / p; //number of points per worker countinCircle = 0; // one per worker

// worker routine:

for (i = 0; i < nWorkers; i++) {

// generation of 2 random numbers inside the square;

a = first random number;

b = second random number;

if (a, b) lies inside the circle

countinCircle++;

}

// master routine:

gets countinCircle values from the workers

computes PI as PI = 4.0 * countinCircle/points;

Branch and Bound Aplication: The Knapsack Problem

Branch and bound is a technique for problem optimization. It lists all the possibly solutions to the problem, splits and organizes them in branches and then discards the non-suitable branches according to particular bounding criteria, normally upper and lower bounding. An example of this algorithm is the knapsack problem, where we have a set of things, characterized by their weight and value, and we want to know which combination of them has the closest value to a given threshold, and that value is less or equal the threshold. The problem could be one-dimensional (just weight) or mufti-dimensional (weight, size, value...) and items can be repeated or not. One would think that the fact that it is organized as a tree gives us opportunities to find and exploit parallelism. The truth is that many times the dependencies among the data that makes the set are too high to do it, hence why we chose this example. Kamil et al, propose a study and solution to one common formulation of the problem, the 0-1 knapsack problem, where the items are restricted to the values 0 and 1.

MapReduce

As mentioned before, MapReduce is a Google tool oriented to work with clusters of computers, as it adapts very well to distributed systems. It is another example of Master/workers and it has two steps: Map , where our problem is divides into sub-problems that are assigned to workers; and Reduce, where the master node processes the results coming from the workers. Here is an example of the two functions, in pseudocode, that would count the number of times a particular picture 'pic' shows up in a set of documents:

MAP(pic, setDocuments): // pic is the picture, setDocuments is a group of documents, where we are looking

for each pic in setDocument:

send(pic, TRUE);

REDUCE(pic, picCount): //pic is a picture and picCount is the count.

sum = 0;

for each pc in partialCounts:

sum += pc;

send(pic, sum);

Glossary Of Patterns

Structural Patterns

i) Pipe-and-filter ii) Agent-and-repository iii) Process control iv) Event-based-implicit invocation v) Model-view-controller vi) Iterative refinement vii) MapReduce viii) Layered systems ix) Puppeter x) Arbitrary static task graph

Computational Patterns

i) Backtrack, branch and bound ii) Circuits iii) Dynamic programming iv) Dense linear algebra v) Sparse Linear Algebra vi) Finite state machine vii) Graph algorithms viii) Graphical models ix) Monte Carlo x) N-body xi) Spectral methods xii) Structured mesh xiii) Unstructured mesh

Algorithm Strategy Patterns

i) Task parallelism ii) Pipeline iii) Discrete event iv) Speculation v) Data parallelism vi) Recursive splitting vii)Geometic decomposition

Implementation Strategy Patterns

Structured as a Program:

i) Single-Program Multiple Data (SPMD) ii) Strict data parallel iii) Fork/join iv) Actors v) Master-worker vi) Task queue vii) Graph Partitioning viii) Loop-level parallelism ix) BSP

Structured as Data:

i) Shared queue ii) Distributed array iii) Shared hash table iv) Shared data

Parallel Execution Patterns

With program counter advance:

i) MIMD ii) Data flow iii) SIMD iv) Thread pool v) Speculative execution vi) Digital circuits

Thread coordinated:

i) Message passing ii) Collective communication iii) Mutual exclusion iv) Point to point synchronization v) Collective synchronization vi) Transactional memory

References and External Links

1."Parallel Programming with a Pattern Language", Berna Massingill, et al.

2. "Design Patterns for Parallel Programming" - S. Siu, M De Simone, D Goswami, A Singh

3. "Parallel Numerical Methods - Multigrid", Michael Heath

4. "Distributed Dynamic Data-Structures for Parallel Adaptive Mesh-Refinement", Manish Parashar, et al

5. "Building Parallel Applications using Design Patterns", Dhrubajyoti Goswami, et al.

6. "Patterns for parallel programming", Timothy G. Mattson, Beverly A. Sanders, Berna Massingill, Pearson Education, 2005

7. Resources on Parallel Pattern

8 Massingill-A pattern language of parallel programming

9. [http://parlab.eecs.berkeley.edu/wiki/patterns/patterns Keutzer and Mattson - A Pattern Language for Parallel Programming ver2.0 ]

10. [http://msdn.microsoft.com/en-us/library/dd492418.aspx/Link Parallel Patterns Library (PPL) ]

11 Design Patterns For Parallel Programming

12. [1] Pattern Catalog (Illinois University)

13. [2] Parallel Programming Patterns, Eun-Gyu Kim, June 10, 2004

14. [3] UPCRC Illinois University

15. [4] Amorphous Data-parallelism in Irregular Algorithms, Pingali et al.

18. Branch and Bound

19. Knapsack Problem

20. Patterns for Concurrent, Parallel and Distributed Systems

Quiz

A design pattern is ...

- A description of a solution to a frequently occurring problem in some domain.

- A routine that is repeated often.

- A hierarchical approach to software architecture.

Which of the following does not belong to the Massingell's Classification of patterns.

- Finding Concurrency

- Parallel Circulation

- Implementation Mechanism

- Algorithm Structure

Task parallelism consists of

- Having tasks that come in parallel to the processor.

- Having tasks that are concurrent.

- Running independent tasks in parallel

Recursive splitting is an example of

- Computational Patterns

- Structural Patterns

- Algorithm Strategy Patterns

Forks and joins are used mainly when

- Using Implementation Strategy patterns

- Using Algorithm Strategy Patterns

- Using Computational Patterns

The application design can iterate between structural and computational patterns,

- Never, they are completely separate tasks.

- At times, if the number of processes is more than 1000.

- All the times. Both patterns frequently complement each other.

According to the Sui et al model,

- There can be a sub-design pattern embedded in a module.

- A module is a non divisible structure.

- Design patterns and modules are normally used in parallel.

In a Discrete event pattern multiple tasks can be run interacting via an event handler with the handling of the events by each tasks running parallely.

- True

- False

Graph structures can be parallelized when:

- When the graphs have been done with a parallel computer.

- Only in the number of vortexes is a power of 2.

- At times, when their structures allow it.

Answers

- 1

- 2

- 3

- 3

- 1

- 3

- 1

- True

- 3