Chapter 6: Joshua Mohundro, Patrick Wong

== Sectored Cache ==

===History===

One of the first commercially available computers to use a cache, the IBM 360/85, used a sectored cache. The main reason for choosing a sectored design was that, with the technology of the IBM 360/85's time, it was easier to build than a non-sectored design. However, the sectored design proved to be much less efficient than the non-sectored designs of the time, and it largely disappeared. Interest in sectored caches revived in the 1990s, because a sector cache can tag a large number of bytes with relatively few tag bits while still transferring only a small block when a miss occurs. This makes sector caches attractive for multilevel cache designs, in which the cache tags can be placed at a higher level (e.g., on the processor chip) and the cache data array at a lower level (e.g., off-chip).
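The tag-bit saving can be made concrete with a small worked calculation. The numbers are hypothetical (a 1 MiB direct-mapped cache, 32-bit addresses, 32-byte transfer units and 1 KiB sectors) and `tag_array_bits` is an illustrative helper, not something taken from the sources; it is a sketch of the arithmetic, not a model of any real machine.

```python
import math

# Hypothetical parameters chosen only for illustration:
# a 1 MiB direct-mapped cache and 32-bit addresses.
ADDRESS_BITS = 32
CAPACITY_BYTES = 1 << 20


def tag_array_bits(bytes_per_tag: int) -> int:
    """Bits of tag storage when each address tag covers `bytes_per_tag` bytes
    (one line in a conventional cache, one sector in a sectored cache).
    Valid bits are not counted."""
    entries = CAPACITY_BYTES // bytes_per_tag
    index_bits = int(math.log2(entries))
    offset_bits = int(math.log2(bytes_per_tag))
    bits_per_tag = ADDRESS_BITS - index_bits - offset_bits
    return entries * bits_per_tag


# Conventional cache with 32-byte lines: one tag per 32 bytes.
conventional = tag_array_bits(32)
# Sectored cache with 1 KiB sectors split into 32-byte subsectors: one tag per
# 1 KiB, yet a miss still transfers only the referenced 32-byte subsector.
sectored = tag_array_bits(1024)

print(conventional, sectored, conventional // sectored)  # 393216 12288 32
```

Both designs still need one valid bit per 32-byte unit (32 per sector in the sectored case), so valid bits do not change the comparison. A conventional cache with 1 KiB lines would need the same 12288 tag bits, but every miss would then move a full kilobyte.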

===How they work===

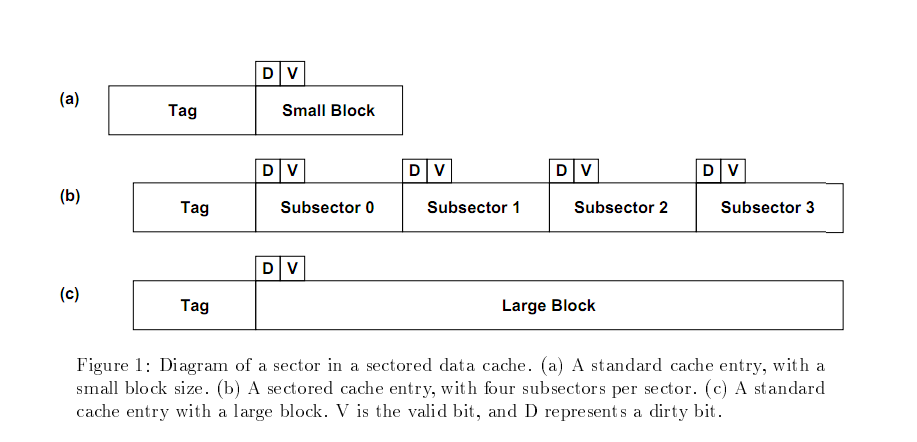

This is a diagram of a sectored cache, taken from Jeffrey B. Rothman (reference 1).

A sectored cache is divided into sectors (hence the name), each of which has its own address tag. Each sector is further divided into subsectors, each with its own "valid" bit, so some subsectors of a resident sector can be empty while others hold data. When there is a miss to a sector, a resident sector is evicted, its address tag is set to point to the missed sector, and a single subsector is fetched. When a subsector is missing but the sector containing it is present, only that subsector needs to be fetched.

As mentioned in the history section, sectored caches were all but abandoned because they performed worse than other designs. The main cause was that a sector was often evicted before all of its subsectors had been loaded, so at any given time much of the cache was not being used.

Sector caches do, however, have one important advantage. In a normal (non-sectored) cache, the only way to get a very large cache capacity with a relatively small number of tag bits is to make the cache blocks (lines) very large; the problem in that case is that every miss requires a large block to be fetched in its entirety. With a sector cache, only a portion of a block (or sector) needs to be fetched, so both the time to handle a miss and the bus traffic can be significantly reduced. Thus, although sector caches are likely to have higher miss ratios than normal caches, they may still perform better once timing is taken into account.
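The mechanism described above can be sketched as a small Python model. It is a sketch under stated assumptions, not a description of any particular machine: the cache is assumed to be direct-mapped, the geometry (4 sectors of four 16-byte subsectors) is hypothetical, and the names `SectorFrame` and `SectoredCache` are illustrative. The trace at the end shows a sector being evicted before all of its subsectors are loaded.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SectorFrame:
    tag: Optional[int] = None                          # address tag for the whole sector
    valid: List[bool] = field(default_factory=list)    # one valid bit per subsector


class SectoredCache:
    """Direct-mapped sectored cache (a simplifying assumption): one tag per
    sector, one valid bit per subsector, one subsector fetched per miss."""

    def __init__(self, num_sectors: int, subsectors: int, subsector_bytes: int):
        self.num_sectors = num_sectors
        self.subsectors = subsectors
        self.subsector_bytes = subsector_bytes
        self.sector_bytes = subsectors * subsector_bytes
        self.frames = [SectorFrame(None, [False] * subsectors) for _ in range(num_sectors)]
        self.misses = 0
        self.bytes_fetched = 0

    def access(self, addr: int) -> bool:
        """Return True on a hit. On a miss, fetch only the referenced subsector."""
        sector_number = addr // self.sector_bytes
        frame = self.frames[sector_number % self.num_sectors]
        tag = sector_number // self.num_sectors
        sub = (addr % self.sector_bytes) // self.subsector_bytes

        if frame.tag != tag:
            # Sector miss: evict the resident sector, point the tag at the new
            # sector, and mark all of its subsectors empty.
            frame.tag = tag
            frame.valid = [False] * self.subsectors
        if frame.valid[sub]:
            return True
        # Subsector miss: fetch just this subsector.
        frame.valid[sub] = True
        self.misses += 1
        self.bytes_fetched += self.subsector_bytes
        return False


# Hypothetical geometry: 4 sectors of 4 x 16-byte subsectors (64-byte sectors).
cache = SectoredCache(num_sectors=4, subsectors=4, subsector_bytes=16)
# Address 256 maps to the same frame as address 0, so it evicts that sector
# before its remaining subsectors are ever loaded.
results = [cache.access(a) for a in (0, 16, 0, 256, 0, 16)]
print(results, cache.misses, cache.bytes_fetched)
```

The second access (address 16) fetches only one 16-byte subsector because its sector is already resident; after address 256 evicts the sector, both subsectors must be fetched again.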

===Examples===

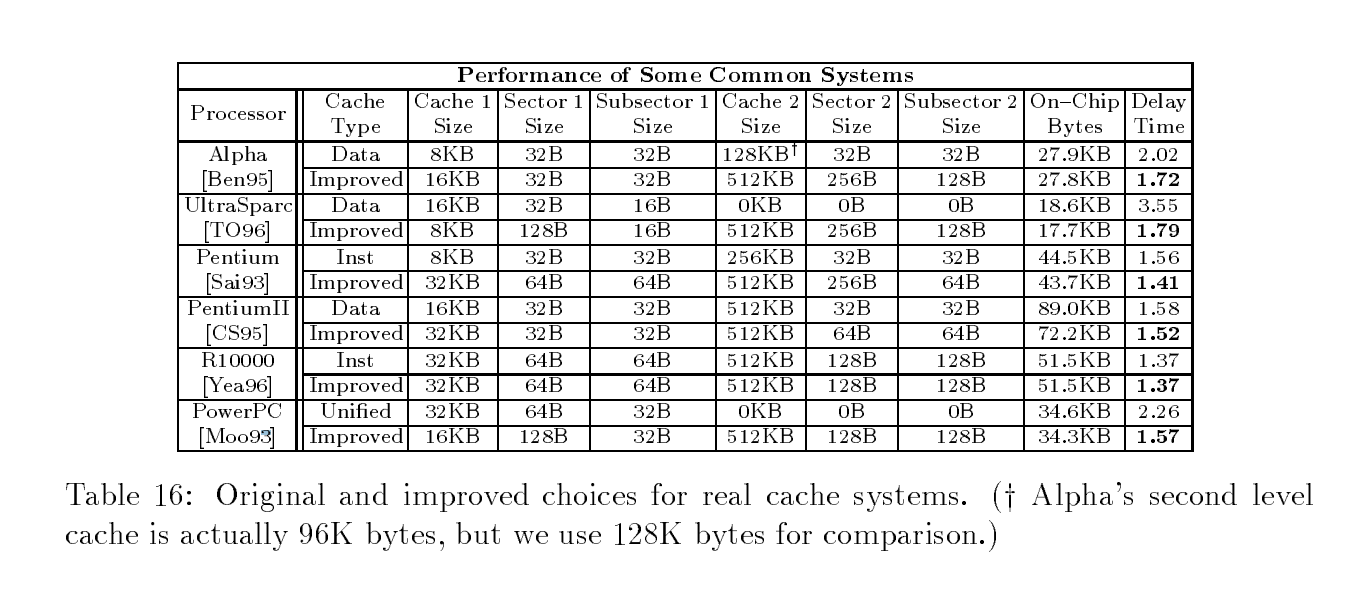

Image taken from reference 1.

== Victim Cache ==

In architectures that have one, a victim cache stores lines that were just evicted from another level of cache. A victim cache is usually highly associative and has very few entries, but it fixes one of the pathological cases for direct-mapped caches: an access pattern that alternates between addresses that map to the same cache line. Such patterns cause conflict misses, which occur when successive accesses keep evicting one another from the same cache line.

In general, the solution to conflict misses is a cache of higher associativity. However, associative caches require more logic than direct-mapped caches and therefore tend to run slower; direct-mapped caches are faster but suffer more conflict misses. Making the whole cache more associative gives every line higher associativity, which is expensive in cache logic. In actual workloads, however, it was found that high associativity is really needed for only a few cache lines. A victim cache extends the associativity of just the lines that need it: the associativity of a conflicting direct-mapped cache line can increase by up to the number of entries in the victim cache. Traditional victim caches usually have between 4 and 16 entries and, because they are fully associative, run a little slower than the parent cache.
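The alternating-conflict case can be sketched in Python. This is a minimal model under stated assumptions: addresses are line numbers, the victim cache uses LRU replacement, and a victim-cache hit swaps the two lines; `DirectMappedWithVictimCache` is a hypothetical name, not taken from any of the designs discussed here.

```python
from collections import OrderedDict
from typing import List, Optional


class DirectMappedWithVictimCache:
    """Direct-mapped cache plus a small fully associative victim cache.
    Addresses are line numbers. LRU replacement in the victim cache and
    swapping on a victim hit are assumptions of this sketch."""

    def __init__(self, num_lines: int, victim_entries: int):
        self.num_lines = num_lines
        self.lines: List[Optional[int]] = [None] * num_lines
        self.victim_entries = victim_entries
        self.victim = OrderedDict()  # line number -> None, oldest first
        self.stats = {"hit": 0, "victim_hit": 0, "miss": 0}

    def _store_victim(self, line: Optional[int]) -> None:
        """Place a line just evicted from the main cache into the victim cache."""
        if line is None or self.victim_entries == 0:
            return
        self.victim[line] = None
        if len(self.victim) > self.victim_entries:
            # Drop the oldest victim; this equals LRU here because a victim
            # that is hit leaves the victim cache immediately.
            self.victim.popitem(last=False)

    def access(self, line: int) -> str:
        slot = line % self.num_lines
        if self.lines[slot] == line:
            result = "hit"
        elif line in self.victim:
            # Swap: the line returns to the main cache and the line it
            # displaces moves into the victim cache.
            del self.victim[line]
            self._store_victim(self.lines[slot])
            self.lines[slot] = line
            result = "victim_hit"
        else:
            # Miss everywhere: fetch from the next level; the displaced line
            # becomes a victim.
            self._store_victim(self.lines[slot])
            self.lines[slot] = line
            result = "miss"
        self.stats[result] += 1
        return result


# Lines 0 and 8 both map to slot 0 of an 8-line direct-mapped cache, so
# alternating between them is the pathological conflict pattern.
pattern = [0, 8] * 5
without_vc = DirectMappedWithVictimCache(num_lines=8, victim_entries=0)
with_vc = DirectMappedWithVictimCache(num_lines=8, victim_entries=4)
for line in pattern:
    without_vc.access(line)
    with_vc.access(line)
print(without_vc.stats)  # {'hit': 0, 'victim_hit': 0, 'miss': 10}
print(with_vc.stats)     # {'hit': 0, 'victim_hit': 8, 'miss': 2}
```

Without the victim cache every access misses; with it, only the two compulsory misses remain, because slot 0 behaves as if it were associative.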

[[File:ch6_pzwong.png|thumb|768px|A graphical representation of how a victim cache works]]

x86 architectures that implement a victim cache include the Transmeta Efficeon, AMD K7, AMD K8, AMD K10, and the Intel Core i7 series. The AMD and Intel implementations are examined in the next section.

===Implementations===

AMD has traditionally implemented an exclusive cache hierarchy, which avoids duplicating data by ensuring that each line is held at only one cache level. When a line is evicted from a cache level closer to the processor (such as L1), it is added to the next level out (such as L2). A victim cache, acting as a buffer between levels of cache, is therefore a natural development of an exclusive hierarchy.
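The exclusive policy can be sketched in Python. This is an illustration under stated assumptions, not AMD's implementation: both levels are modelled as fully associative LRU caches, sizes are hypothetical, and `ExclusiveTwoLevelCache` is an illustrative name. The point it shows is that L1 evictions are placed in L2 and an L2 hit moves the line up rather than copying it.

```python
from collections import OrderedDict


class ExclusiveTwoLevelCache:
    """Two-level exclusive hierarchy: a line is held in L1 or in L2, never both.
    Both levels are modelled as fully associative LRU caches (an assumption)."""

    def __init__(self, l1_lines: int, l2_lines: int):
        self.l1: OrderedDict = OrderedDict()   # least recently used first
        self.l2: OrderedDict = OrderedDict()
        self.l1_lines = l1_lines
        self.l2_lines = l2_lines

    def _fill_l2(self, line) -> None:
        self.l2[line] = None
        if len(self.l2) > self.l2_lines:
            self.l2.popitem(last=False)        # leaves the hierarchy entirely

    def _fill_l1(self, line) -> None:
        self.l1[line] = None
        if len(self.l1) > self.l1_lines:
            evicted, _ = self.l1.popitem(last=False)
            self._fill_l2(evicted)             # an L1 eviction is added to L2

    def access(self, line) -> str:
        if line in self.l1:
            self.l1.move_to_end(line)
            return "L1"
        if line in self.l2:
            del self.l2[line]                  # moved up into L1, not copied
            self._fill_l1(line)
            return "L2"
        self._fill_l1(line)                    # memory fills go to L1 only
        return "memory"


cache = ExclusiveTwoLevelCache(l1_lines=2, l2_lines=4)
results = [cache.access(line) for line in (1, 2, 3, 1, 4)]
print(results)                         # ['memory', 'memory', 'memory', 'L2', 'memory']
print(list(cache.l1), list(cache.l2))  # [1, 4] [2, 3]
```

Because no line is duplicated, the hierarchy can hold up to six distinct lines here (L1 plus L2 capacity), which is the property that makes a victim buffer between levels a natural fit.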

Traditional victim caches are implemented in AMD's older architectures, such as the K7 and K8. In the K7, the L2 cache was located on a very slow external bus, and the victim cache acted as a buffer between lines evicted from the L1 cache and the slow L2 cache. In the K8 and later iterations of the K7, the L2 cache was fast because it was integrated on-chip, but transferring data between the L1 and L2 caches was still expensive in terms of power, which the victim cache helped mitigate. In addition, the hardware was already present from the K7 core. The victim caches on these CPUs were very small: 8 entries (512 bytes at 64 bytes per line).

With the increasing focus on multiprocessing, AMD evolved the victim cache idea into a large victim cache capable of serving more than two CPUs. AMD's K10 architecture used a three-level cache hierarchy, with the new victim cache at the third level. A smaller, faster, and more associative L1 and L2 cache was dedicated to each CPU, coupled to a large, slower L3 victim cache that was no longer fully associative. This victim cache was shared by all of the CPUs on the package and was 2-6 MB, more than three orders of magnitude larger than the 512-byte victim caches of the K7 and K8. The higher associativity of the lower-level caches reduces the effectiveness of a traditional victim cache, so evolving the victim cache to meet the demands of multiprocessing was a natural step.

Intel's Core i7 uses an L3 "victim cache" together with non-shared L2 caches to improve cache performance for multiple CPUs. Because Intel's cache hierarchy is inclusive, no earlier Intel CPU implemented this feature: on a uniprocessor system there is little reason to add a victim cache to an inclusive hierarchy, since a line evicted from one level is still present in the next cache level and is simply re-fetched from there. The Core i7 therefore marks the first time an Intel chip has implemented a victim cache.
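The re-fetch argument can be sketched in Python, assuming a two-level inclusive hierarchy with fully associative LRU levels. Both the structure and the name `InclusiveTwoLevelCache` are illustrative assumptions, not details of any Intel design; the sketch only shows why an inclusive hierarchy already "catches" L1 victims without a separate victim cache.

```python
from collections import OrderedDict


class InclusiveTwoLevelCache:
    """Two-level inclusive hierarchy: every line in L1 also has a copy in L2.
    Fully associative LRU levels are an assumption of this sketch, and L1
    hits are not reported to L2 (L2 only sees L1 misses)."""

    def __init__(self, l1_lines: int, l2_lines: int):
        self.l1: OrderedDict = OrderedDict()   # least recently used first
        self.l2: OrderedDict = OrderedDict()
        self.l1_lines = l1_lines
        self.l2_lines = l2_lines

    def access(self, line) -> str:
        if line in self.l1:
            self.l1.move_to_end(line)
            return "L1"
        source = "L2" if line in self.l2 else "memory"
        # Fill (or refresh) the line in L2 first to preserve inclusion.
        self.l2[line] = None
        self.l2.move_to_end(line)
        if len(self.l2) > self.l2_lines:
            gone, _ = self.l2.popitem(last=False)
            self.l1.pop(gone, None)            # back-invalidate any L1 copy
        # Then fill L1; its victim is simply dropped because L2 keeps a copy.
        self.l1[line] = None
        if len(self.l1) > self.l1_lines:
            self.l1.popitem(last=False)
        return source


cache = InclusiveTwoLevelCache(l1_lines=2, l2_lines=4)
results = [cache.access(line) for line in (1, 2, 3, 1)]
print(results)  # ['memory', 'memory', 'memory', 'L2']
```

Line 1, evicted from L1 by the third access, is served from L2 on its next use with no victim cache involved. The cost of inclusion is duplication: the hierarchy holds at most as many distinct lines as L2 alone.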

[[File:Yarr.png|thumb|800px|A diagram of old and new victim cache structures]]


== References == | |||

1. http://www.eecs.berkeley.edu/Pubs/TechRpts/1999/CSD-99-1034.pdf

2. http://delivery.acm.org/10.1145/200000/192072/p384-seznec.pdf

Latest revision as of 16:56, 14 March 2012

Sectored Cache

History

One of the first commercially available PCs that used a cache, IBM 360/85, used a sectored cache. The primary reason for a sectored cache is that during the time of the IBM 360/85 it was easier to build than current non-sectored designs. However, the sectored design proved to be much less efficient thAn the non-sectored designs (of the time) and thus largely disappeared. In the 1990's there was a resurgence of interest in sectored caches. This is because sector caches have the feature that large numbers of bytes can be tagged using relatively small numbers of tag bits, while still only transferring small blocks when a miss occurs. This suggests the use of sector caches for multilevel cache designs. In such a design, the cache tags can be placed at a higher level (e.g., on the processor chip) and the cache data array can be placed at a lower level (e.g., off-chip).

How they work

A sectored cache is broken up into sectors (hence the name) each of which has an address tag associated with it. Each sector is further broken down into subsectors, each of which has a "valid" bit allowing for some subsectors to remain empty while others are full.

When there is a miss to a sector, a resident sector is evicted, an address tag is set to point to the missed sector and a single subsector is fetched. When a subsector is missing but the sector "containing" it is present then only the subsector needs to be fetched. As mentioned in the history section, sectored caches were all but abandoned due to the inferiority to other designs; this inferiority came mainly to the design flaw that a sector would often be evicted before all subsectors had been loaded thus at any given time much of the cache was not utilized.Sector caches do have, however, one important advantage. In a normal, (non-sectored) cache, the only way to have a very large cache capacity with a relatively small numb er of tag bits is to make the cache blocks (lines) very large; the problem in that case is that every miss requires that a large block be fetched in its entirety. With a sector cache, it is possible to fetch only a portion of a block (or sector), and thus the time to handle a miss, and the bus traffic, can both be significantly reduced. Thus, although it is likely that sector caches will have higher miss ratios than normal caches, there is the possibility that when timing is considered, the sector cache will be found to have better performance.

Examples

Victim Cache

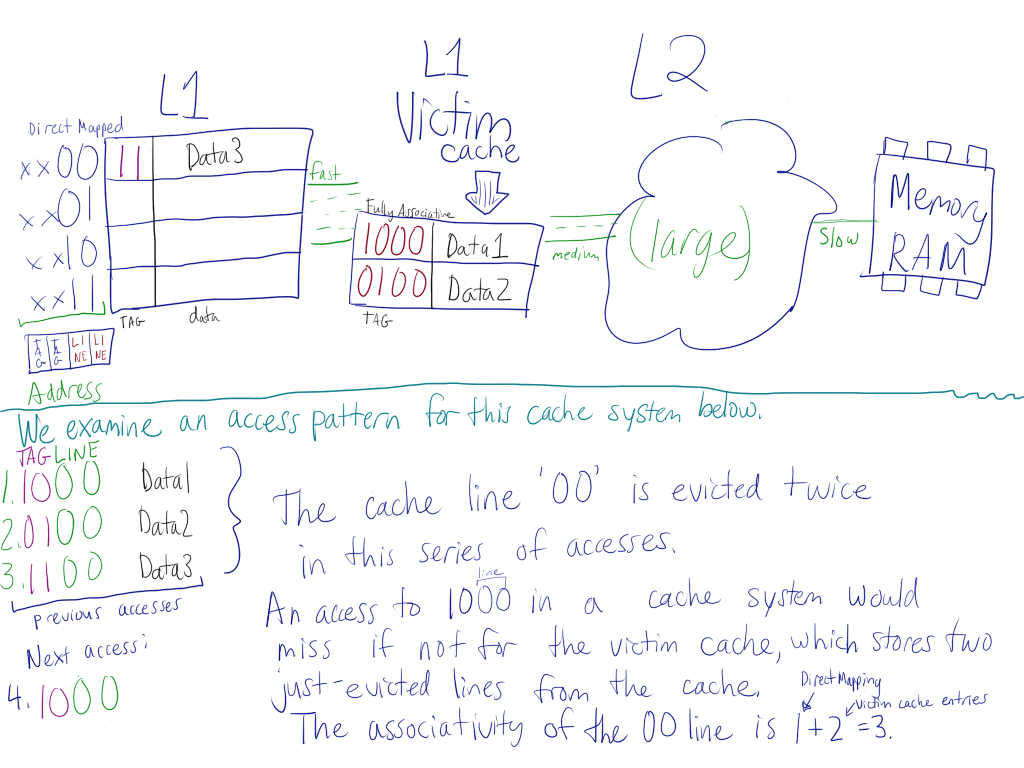

The Victim Cache, in architectures with them, stores just-evicted lines from another level of cache. This cache is usually highly associative and has very few entries, but solves one of the pathological cases for direct-mapped caches, the alternating memory access pattern where a cache line conflict occurs. These types of memory patterns cause conflict misses, a cache miss caused by subsequent accesses which use and evict the same cache line.

In general, the solution to conflict misses is a cache of higher associativity. However, associative caches require more logic (ie. run slower) than direct mapped caches; direct mapped caches are faster but less efficient. One solution is to use a more associative cache, giving each line higher associativity, but this is expensive in cache logic. In actual workloads, it was discovered that high associativity is only really needed for very few cache lines. The victim cache's effect is to extend the associativity of cache lines which need them. The associativity of that particular direct mapped cache line increases by the number of entries in the victim cache. Traditional victim caches are usually between 4 and 16 entries and run a little slower than the parent cache due to the fully associative feature of a victim cache.

Architectures implementing victim cache for x86 include the Transmeta Efficeon, AMD K7, AMD K8, AMD K10, and the Intel Core i7 series. The AMD and Intel implementations will be examined in the next section.

Implementations

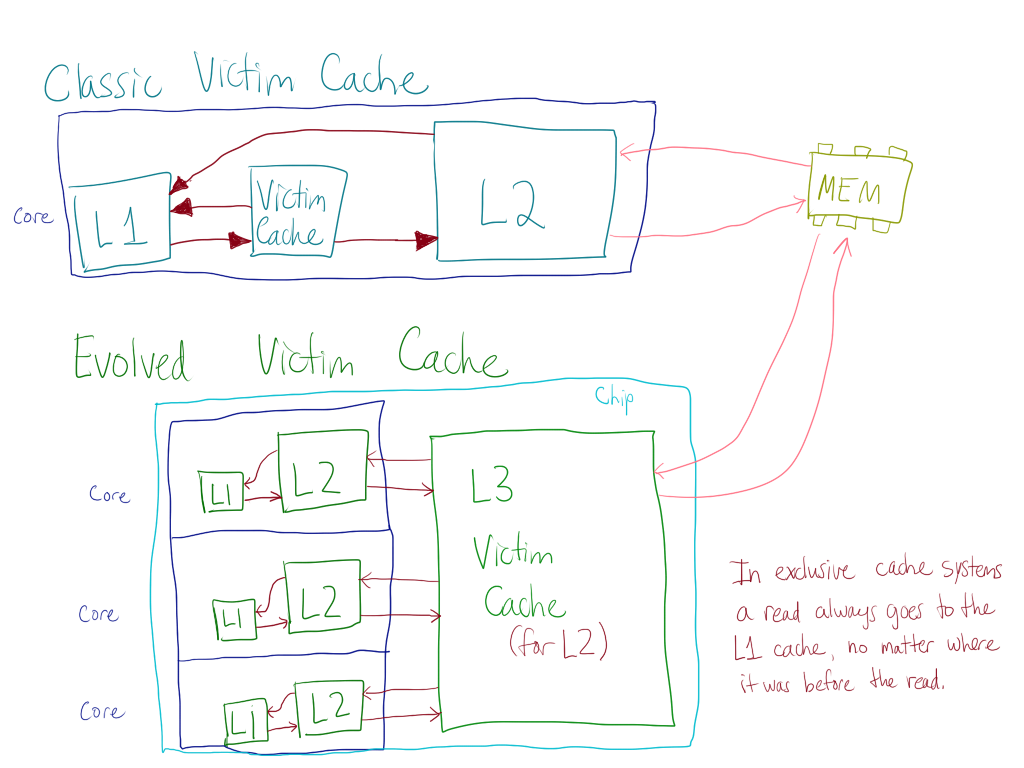

AMD has traditionally implemented an exclusive cache hierarchy, a form of cache that avoids duplication of data by ensuring that data is only available at one cache level. When a line of cache is evicted from a lower level cache, it is added to a higher level cache. Therefore, a victim cache is a natural development from implementation of an exclusive cache as a buffer between levels of cache.

Traditional Victim Caches are implemented in AMD's older architectures, such as the K7 and K8. In K7, the cache was located on a very slow external bus, and the victim cache acted as a buffer between evicted lines from L1 cache and slow L2 cache. In K8 and later iterations of K7, the L2 cache was fast because it was integrated on-chip, but transferring data between the L1 and L2 cache was still an expensive (powerwise) operation that the victim cache helped mitigate. In addition, the hardware is already there from the K7 core. The victim caches on these CPUs was very small: 8 entries (512 bytes at 64 bytes per line).

With the increasing focus on multiprocessing, AMD used the "victim cache" idea and evolved it into a large "victim cache" capable of serving more than two CPUs. In AMD's K10 architecture, a three-level cache hierarchy was used, with the new victim cache at the third level. A smaller, faster, and more associative L1 and L2 cache was dedicated to each CPU, coupled to a large, slow L3 victim cache, which was no longer fully associative. This victim cache was shared between all of the CPUs on the package and was 2-6 MB, an order of magnitude larger than earlier victim caches. The higher associativities of lower level caches reduces the effectiveness of the traditional victim cache. Thus, the evolution of the victim cache to fulfill the demands of multiprocessing is natural.

Intel's Core i7 uses the L3 "victim cache" with non-shared L2 caches to improve cache performance for multiple CPUs. Intel's cache hierarchy is inclusive, so no prior Intel CPU has implemented this feature as there is no good reason to use a victim cache for a inclusive cache on a uniprocessor system (the cache line is just re-fetched from the lower level cache). It marks the first time that an Intel chip has implemented a victim cache.

Notes

References

1. http://www.eecs.berkeley.edu/Pubs/TechRpts/1999/CSD-99-1034.pdf

2. http://delivery.acm.org/10.1145/200000/192072/p384-seznec.pdf?ip=152.14.244.199&acc=ACTIVE%20SERVICE&CFID=83457235&CFTOKEN=35168855&__acm__=1328554043_50f74be9f1a9f6d72939aa9190e069e7