CSC/ECE 506 Spring 2012/1b as


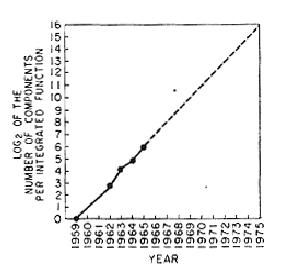


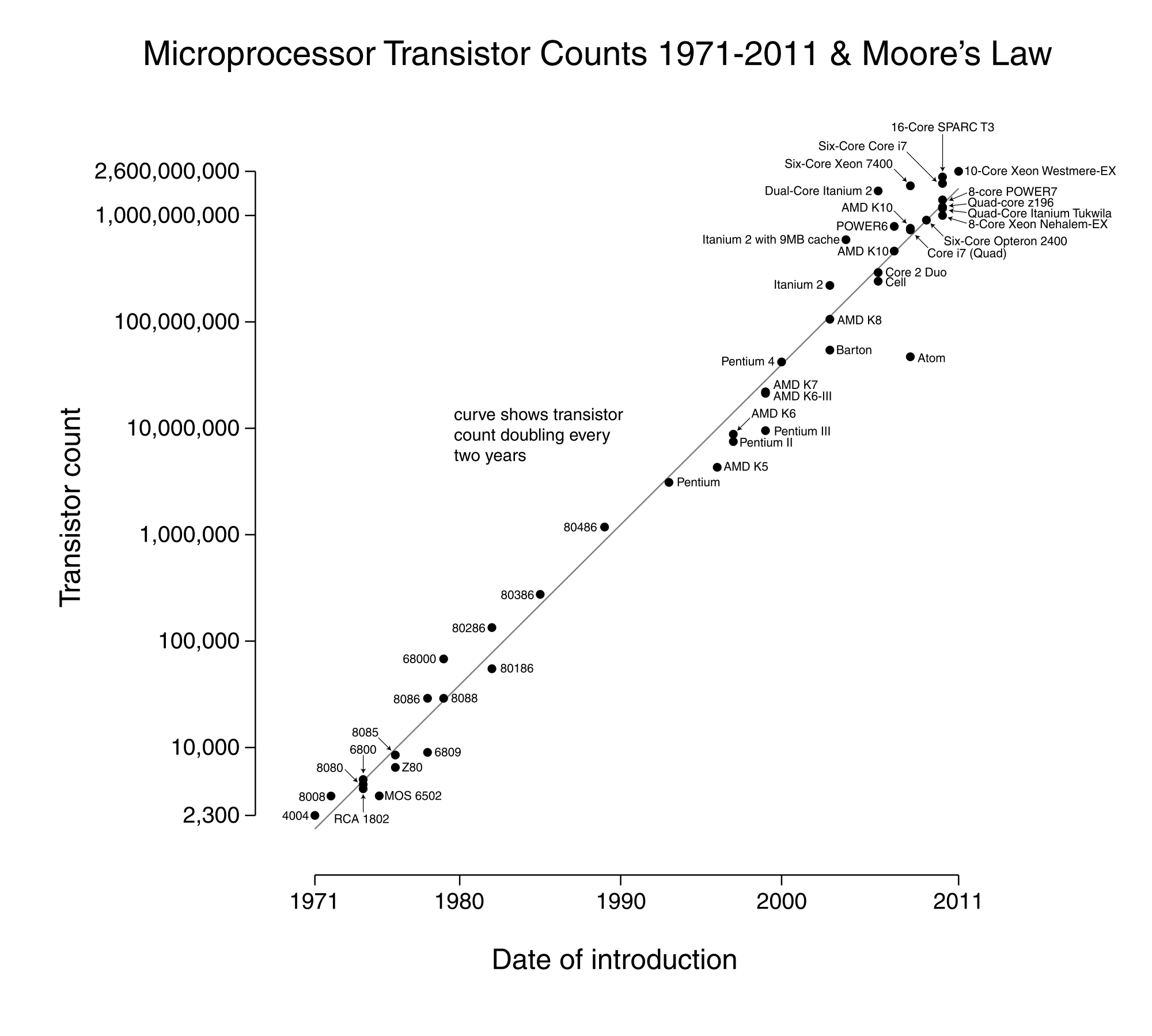

Latest revision as of 03:55, 22 January 2014

What is Moore's Law?

Moore's law, named after Gordon Moore, co-founder of Intel, states that the number of transistors that can be placed on an integrated circuit will double approximately every two years<ref>http://www.computerhistory.org/semiconductor/timeline/1965-Moore.html</ref>. The original 1965 prediction was a doubling every 12 months, but in 1975, after less dense microprocessors had been introduced, Moore slowed the rate of doubling to the current two years<ref>http://arstechnica.com/hardware/news/2008/09/moore.ars</ref>. Rather than giving an empirical formula for the rate of increase, Moore used prose, graphs, and images to convey these predictions and observations to the masses. This in some ways increased the staying power of Moore's law, allowing the industry to use it as a measurable benchmark of its success. Virtually all digital devices are in some way fundamentally linked to the growth set in place by Moore's law.<ref>http://en.wikipedia.org/wiki/Moore's_law</ref>
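Although Moore himself gave no formula, the doubling rule is commonly written today as N(t) = N0 * 2^((t - t0) / T), where T is the doubling period. The sketch below is one such formalization and not Moore's own; the function name and the choice of the Intel 4004 (2,300 transistors, 1971) as a starting point are assumptions made for illustration.

```python
def projected_transistors(n0, year0, year, doubling_years=2.0):
    """Project a transistor count forward, assuming a fixed doubling period.

    n0             -- transistor count in the reference year (assumed input)
    year0, year    -- reference year and target year
    doubling_years -- doubling period T; 2.0 matches the 1975 revision
    """
    return n0 * 2 ** ((year - year0) / doubling_years)


# Illustrative starting point: 2,300 transistors in 1971.
two_year_rate = projected_transistors(2300, 1971, 2011)        # 1975 revision
one_year_rate = projected_transistors(2300, 1971, 2011, 1.0)   # original 1965 rate

print(f"2-year doubling:  {two_year_rate:,.0f}")
print(f"12-month doubling: {one_year_rate:,.0f}")
```

Under the two-year period, forty years gives twenty doublings, which lands in the low billions. The original 12-month period would give forty doublings over the same span, which shows how much the 1975 revision changed the long-term projection.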

A quick primer on current manufacturing techniques

At the heart of Moore's Law is the transistor. Computer chips contain hundreds of millions of transistors embedded on a wafer of silicon. To make these chips, first a “stencil” is made containing the outlines of millions of transistors. The stencil is then placed over a silicon wafer that has been made sensitive to ultraviolet light. The light passes through the gaps of the stencil and exposes the silicon wafer, which is then bathed in acid, carving the outlines of the circuits and the design of millions of transistors. Since the wafer consists of many conducting and semiconducting layers, the acid cuts into the wafer at different depths and patterns, so one can create circuits of enormous complexity.

One reason Moore’s law has relentlessly increased the power of chips is that UV light can be tuned to smaller and smaller wavelengths, making it possible to etch increasingly tiny transistors onto silicon wafers. Since UV light has a wavelength as small as 10 nanometers, the smallest transistor that can be etched is about thirty atoms across.<ref>http://en.wikipedia.org/wiki/Photolithography</ref> Due to physical limitations, this process cannot go on forever. At some point, it will be physically impossible to etch smaller effective transistors, and Moore’s law as we understand it will finally collapse.

Current estimates predict that around 2020 or soon afterward, Moore’s law will gradually cease to hold true for traditional transistor technology. Transistors will be so small that quantum effects will begin to take over and electrons will "leak" out of the wires.<ref>http://computer.howstuffworks.com/small-cpu2.htm</ref><ref>http://www.monolithic3d.com/2/post/2011/09/is-there-a-fundamental-limit-to-miniaturizing-cmos-transistors1.html</ref> For example, the thinnest layer inside a computer will be about five atoms across. At that size, quantum effects will become dominant, and without other technological advances transistors will not function as they currently do.

A Common Misconception

Moore's Law is often linked to performance improvements as measured in CPU clock speeds. In the 1980s, former Intel executive David House stated that chip performance would double every 18 months.<ref>http://news.cnet.com/Myths-of-Moores-Law/2010-1071_3-1014887.html</ref> This is a consequence of Moore's Law, but it is not what Moore's Law actually claims. In fact, due to heat dissipation issues<ref>http://www.gotw.ca/publications/concurrency-ddj.htm</ref><ref>http://techtalk.pcpitstop.com/2007/08/06/cpu-clock-speeds/</ref>, performance as measured in clock speed has remained flat since 2005<ref>http://www.kmeme.com/2010/09/clock-speed-wall.html</ref>, while the number of transistors continues to double roughly every 2 years.

Moore's law, past to present

Reviewing data from the inception of Moore's law to the present shows that, consistent with Moore's prediction, the number of transistors on a chip has doubled approximately every 2 years. Several contributing factors, had they not been developed, could have slowed or plateaued Moore's law. One of these is the invention of dynamic random access memory (DRAM). This is a type of random access memory that stores each bit in a separate capacitor on an integrated circuit. The main advantage of DRAM over its predecessor, SRAM, is that only one transistor and a capacitor are required per bit, compared to four or six transistors with SRAM. Another is most certainly the complementary metal-oxide-semiconductor (CMOS). This allowed a higher density of logic functions on a chip with the added benefit of low power consumption and electrical noise immunity. Last is the invention of the integrated circuit itself. Moore's law is responsible not only for larger and faster chips, but also for smaller, cheaper, and more efficient ones.

As visible in the examples below and to the right, Moore's law has seemed to hold at an overall constant rate of growth consistent with what Moore predicted. There is some variation from year to year that can be explained by the introduction of new technology, in manufacturing or otherwise, that helped put Moore's law back on track. This is most evident with the "Dual-Core Itanium 2" processor, which was ahead of its competitors by a full four years. Looking at the example to the right, we can see a dip in transistor count during 1995-2003, but this is balanced out by an almost equal increase in the following eight years.

| Processor | Transistor count | Date of introduction | Manufacturer | Process | Area |
|---|---|---|---|---|---|
| Intel 4004 | 2,300 | 1971 | Intel | 10 µm | 12 mm² |
| Intel 8008 | 3,500 | 1972 | Intel | 10 µm | 14 mm² |
| MOS Technology 6502 | 3,510 | 1975 | MOS Technology | | 21 mm² |
| Motorola 6800 | 4,100 | 1974 | Motorola | | 16 mm² |
| Intel 8080 | 4,500 | 1974 | Intel | 6 µm | 20 mm² |
| RCA 1802 | 5,000 | 1974 | RCA | 5 µm | 27 mm² |
| Intel 8085 | 6,500 | 1976 | Intel | 3 µm | 20 mm² |
| Zilog Z80 | 8,500 | 1976 | Zilog | 4 µm | 18 mm² |
| Motorola 6809 | 9,000 | 1978 | Motorola | 5 µm | 21 mm² |
| Intel 8086 | 29,000 | 1978 | Intel | 3 µm | 33 mm² |
| Intel 8088 | 29,000 | 1979 | Intel | 3 µm | 33 mm² |
| Intel 80186 | 55,000 | 1982 | Intel | | |
| Motorola 68000 | 68,000 | 1979 | Motorola | 4 µm | 44 mm² |
| Intel 80286 | 134,000 | 1982 | Intel | 1.5 µm | 49 mm² |
| Intel 80386 | 275,000 | 1985 | Intel | 1.5 µm | 104 mm² |
| Intel 80486 | 1,180,000 | 1989 | Intel | 1 µm | 160 mm² |
| Pentium | 3,100,000 | 1993 | Intel | 0.8 µm | 294 mm² |
| AMD K5 | 4,300,000 | 1996 | AMD | 0.5 µm | |
| Pentium II | 7,500,000 | 1997 | Intel | 0.35 µm | 195 mm² |
| AMD K6 | 8,800,000 | 1997 | AMD | 0.35 µm | |
| Pentium III | 9,500,000 | 1999 | Intel | 0.25 µm | |
| AMD K6-III | 21,300,000 | 1999 | AMD | 0.25 µm | |
| AMD K7 | 22,000,000 | 1999 | AMD | 0.25 µm | |
| Pentium 4 | 42,000,000 | 2000 | Intel | 180 nm | |
| Atom | 47,000,000 | 2008 | Intel | 45 nm | |
| Barton | 54,300,000 | 2003 | AMD | 130 nm | |
| AMD K8 | 105,900,000 | 2003 | AMD | 130 nm | |
| Itanium 2 | 220,000,000 | 2003 | Intel | 130 nm | |
| Cell | 241,000,000 | 2006 | Sony/IBM/Toshiba | 90 nm | |
| Core 2 Duo | 291,000,000 | 2006 | Intel | 65 nm | |
| AMD K10 | 463,000,000 | 2007 | AMD | 65 nm | |
| AMD K10 | 758,000,000 | 2008 | AMD | 45 nm | |
| Itanium 2 with 9MB cache | 592,000,000 | 2004 | Intel | 130 nm | |
| Core i7 (Quad) | 731,000,000 | 2008 | Intel | 45 nm | 263 mm² |
| Six-Core Xeon 7400 | 1,900,000,000 | 2008 | Intel | 45 nm | |
| POWER6 | 789,000,000 | 2007 | IBM | 65 nm | 341 mm² |
| Six-Core Opteron 2400 | 904,000,000 | 2009 | AMD | 45 nm | 346 mm² |
| 16-Core SPARC T3 | 1,000,000,000 | 2010 | Sun/Oracle | 40 nm | 377 mm² |
| Core i7 (Gulftown) | 1,170,000,000 | 2010 | Intel | 32 nm | 240 mm² |
| 8-core POWER7 | 1,200,000,000 | 2010 | IBM | 45 nm | 567 mm² |
| z196 | 1,400,000,000 | 2010 | IBM | 45 nm | 512 mm² |
| Dual-Core Itanium 2 | 1,700,000,000 | 2006 | Intel | 90 nm | 596 mm² |
| Tukwila | 2,000,000,000 | 2010 | Intel | 65 nm | 699 mm² |
| Core i7 (Sandy Bridge-E) | 2,270,000,000 | 2011 | Intel | 32 nm | 434 mm² |
| Nehalem-EX | 2,300,000,000 | 2010 | Intel | 45 nm | 684 mm² |
| 10-Core Xeon Westmere-EX | 2,600,000,000 | 2011 | Intel | 32 nm | 512 mm² |
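
The table's first and last rows can be used to check the doubling rate empirically. The sketch below is an illustration rather than part of the original analysis: it takes the Intel 4004 and the 10-Core Xeon Westmere-EX figures from the table and solves for the average doubling period between them. The function name is an assumption, and using only two endpoints ignores the year-to-year variation discussed above.

```python
import math


def doubling_time(n_start, year_start, n_end, year_end):
    """Average years per doubling between two (count, year) data points."""
    doublings = math.log2(n_end / n_start)
    return (year_end - year_start) / doublings


# Endpoints taken from the table: Intel 4004 (1971) and
# 10-Core Xeon Westmere-EX (2011).
t = doubling_time(2_300, 1971, 2_600_000_000, 2011)
print(f"{t:.2f} years per doubling")
```

For these two endpoints the result comes out very close to two years, in line with the revised prediction.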

The lesser known second law

There is another fundamental principle known as Rock's law. This law is a direct consequence of Moore's law: although the cost to produce each transistor on a chip may go down, costs instead flow towards manufacturing, testing, and research and development. The law states that the cost of a semiconductor chip fabrication plant doubles every four years. Simply put, in order for Moore's law to hold, Rock's law must also hold.<ref>http://en.wikipedia.org/wiki/Rock%27s_law</ref>

Due to current economic downturns, Moore's law may see troubling times ahead. Because of the increased investment in research and development and rising fabrication costs, it may soon be difficult to achieve an adequate return on investment given current production standards. New and innovative methods will have to be developed.<ref>http://ieeexplore.ieee.org.prox.lib.ncsu.edu/stamp/stamp.jsp?tp=&arnumber=5227172</ref>
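To make the relationship between the two laws concrete, the sketch below compares growth factors over the same time span, assuming both laws hold exactly (a four-year doubling for plant cost and a two-year doubling for transistor count). The function names are hypothetical and the values are relative multipliers, not real cost figures.

```python
def fab_cost_multiplier(years_elapsed, doubling_years=4.0):
    """Growth factor for fabrication-plant cost under Rock's law."""
    return 2 ** (years_elapsed / doubling_years)


def transistor_multiplier(years_elapsed, doubling_years=2.0):
    """Growth factor for transistor count under Moore's law."""
    return 2 ** (years_elapsed / doubling_years)


years = 8
print(fab_cost_multiplier(years))    # 4.0
print(transistor_multiplier(years))  # 16.0
```

Under these assumptions, plant cost grows more slowly than transistor count, but it still grows exponentially, which is the source of the return-on-investment concern.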

Beyond Moore's Law

There are a few new technologies that have the potential to change the underlying architecture of processors and extend performance gains past the theoretical limits of traditional transistors.

Do Transistor Counts Matter?

Moore's Law concerns only the doubling of transistors on the same die space every 2 years. While some of these new technologies deal directly with adding more transistors into the same amount of space, others take a different approach to boost overall computational performance. While not strictly following Moore's Law, these advanced designs will continue the increase in computational power that can be harnessed from hardware. They are included in the discussion to illustrate that performance is not necessarily dependent on the number of transistors that can be placed on a die. Novel approaches such as 3-D transistor manufacturing will allow for greater densities, but other approaches, such as quantum computing, operate in a different way than the traditional transistor to solve the same problem more efficiently.<ref>http://www.monolithic3d.com/2/post/2011/09/is-there-a-fundamental-limit-to-miniaturizing-cmos-transistors1.html</ref><ref>http://www.iue.tuwien.ac.at/phd/wittmann/node6.html</ref>

The Memristor

Currently being developed by Hewlett Packard, the memristor is a new type of circuit element that links electrical charge and magnetic flux. As current flows in one direction through the circuit, resistance increases. Reversing the flow decreases the resistance, and stopping the flow leaves the resistance in its current state. This type of structure allows for both data storage and data processing (logic gate construction). It is currently postulated that memristors could be layered in three dimensions on silicon, yielding data and transistor densities up to 1000 times greater than currently available. HP has reported the ability to fit 100 GB in a square centimeter<ref>http://www.eetimes.com/electronics-news/4076910/-Missing-link-memristor-created-Rewrite-the-textbooks</ref>, and with the ability to layer memristors, this could lead to pocket devices with a capacity of over 1 petabyte.

One advanced theoretical capability of memristors is the ability to store a range of states rather than a single binary value, which can lead to analog computing. Memristor technology may also provide an excellent architecture for synaptic modeling and self-learning systems.
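
The resistance behaviour described above (rising with current in one direction, falling when the current reverses, and holding when it stops) can be mimicked with a toy model. This is only a sketch: the class name, the linear update rule, and every numeric parameter are assumptions chosen for illustration and do not describe HP's device.

```python
class ToyMemristor:
    """Toy model: resistance tracks the net charge that has flowed through."""

    def __init__(self, resistance=1000.0, r_min=100.0, r_max=16000.0, rate=50.0):
        # All values are hypothetical, in arbitrary ohm-like units.
        self.resistance = resistance
        self.r_min = r_min
        self.r_max = r_max
        self.rate = rate  # resistance change per unit of current per time step

    def apply_current(self, current, dt=1.0):
        """Positive current raises resistance, negative lowers it, zero holds it."""
        self.resistance += self.rate * current * dt
        # Clamp to the device's allowed range.
        self.resistance = max(self.r_min, min(self.r_max, self.resistance))
        return self.resistance


m = ToyMemristor()
print(m.apply_current(1.0))   # resistance goes up
print(m.apply_current(0.0))   # no current: state is remembered
print(m.apply_current(-1.0))  # reversed current: resistance goes back down
```

Because the state persists with no current applied, the same element can act as storage, and because the resistance can take many intermediate values, it hints at the analog behaviour mentioned above.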

Quantum Computing

Quantum computing works by essentially allowing all available bits to enter into superposition. Using this superposition, each quantum bit ("qubit") can be entangled with other qubits to represent multiple states at once. By using quantum logic gates, the qubits can be manipulated to find the desired state among the superposition of states. This has great potential for drastically shortening the time necessary to solve several important problems, including integer factorization and the discrete logarithm problem, upon which much current encryption is based. Quantum computing faces several technical issues, including decoherence, which makes quantum computers difficult to construct and maintain.
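
Superposition and entanglement can be illustrated on a classical machine by tracking the amplitudes of a two-qubit state directly. The sketch below is a plain-Python simulation, not a quantum program: it applies a Hadamard gate to the first qubit and then a controlled-NOT, which produces an entangled state. The function names and the amplitude ordering [|00>, |01>, |10>, |11>] are conventions assumed for this example.

```python
import math


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of [a00, a01, a10, a11]."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]


def cnot(state):
    """Flip the second qubit when the first is 1 (swap |10> and |11>)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


state = [1.0, 0.0, 0.0, 0.0]             # start in |00>
state = cnot(hadamard_on_first(state))   # superposition, then entanglement

# Measurement probabilities are the squared magnitudes of the amplitudes.
probabilities = [abs(a) ** 2 for a in state]
print(probabilities)
```

The resulting state has probability only on |00> and |11>, each about one half, so measuring one qubit determines the other. Simulating n qubits this way needs 2^n amplitudes, which is one way to see why quantum hardware could outpace classical machines on certain problems.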

Ballistic Deflection Transistors

Another promising avenue is a re-design of the traditional transistor. Essentially, single electrons are passed through a transistor and deflected into one path or the other, thus delivering a 0 or a 1. The theoretical speed of these transistors is in the terahertz range.<ref>http://www.rochester.edu/news/show.php?id=2585</ref>

Other Technologies

The arena of research to produce an alternative to the traditional transistor includes many novel approaches. They include (but are not limited to):

- Optical Computing

- DNA Computing

- Molecular Electronics

- Spintronics

- Chemical Computing

- Artificial Neural Networks

- Unconventional Computing

Conclusions

The demise of Moore's Law has been predicted several times during the past 40 years, but transistor counts continue to follow a two-year doubling on average. With the traditional transistor approach, inevitable physical limits will be reached around the 16 nm process, due to quantum tunneling<ref>http://news.cnet.com/2100-1008-5112061.html</ref>. If this is true, the current pace of innovation would lead to hitting "Moore's Wall" around 2022, or in about 10 years. This "10-year horizon" for Moore's Law has existed since the early 1990s, with new designs, processes, and breakthroughs continuing to extend the timeline.<ref>http://arxiv.org/pdf/astro-ph/0404510v2.pdf</ref><ref>http://java.sys-con.com/node/557154</ref> New technologies that leverage three-dimensional chip architecture would allow for years of continued growth in transistor counts, and exotic designs could further increase the theoretical capacity of transistors in a particular space. If the past is used as a predictor for future trends, it is safe to say that the end of Moore's Law "is about 10 years away".

On another note, if we relax the definition of Moore's Law to include computational performance gains, we open a whole new avenue by which to measure computing power. Most of the easy gains in performance related to transistor counts have been realized, but new designs of how basic computing is performed can theoretically yield large increases in performance without doubling of transistor counts or extremely high power requirements.<ref>http://abcnews.go.com/Technology/story?id=4006166&page=1#.TzAbDcVA_H4</ref><ref>http://www.gotw.ca/publications/concurrency-ddj.htm</ref> The era of the traditional transistor is not quite over yet, but the relevance of transistor counts may be nearing its end.

References

<references/>