CSC/ECE 506 Spring 2012/2a va: Difference between revisions

No edit summary |

No edit summary |

||

| (20 intermediate revisions by 2 users not shown) | |||

| Line 3: | Line 3: | ||

===Introduction=== | ===Introduction=== | ||

Decades ago, shared memory programming model was always implemented on shared memory hardware, while message-passing programming model was adopted on distributed memory hardware. However, nowadays the boundary has been broken. With the support of a shared virtual memory layer, we can program in shared memory fashion on distributed | Decades ago, [http://en.wikipedia.org/wiki/Shared_memory shared memory] programming model was always implemented on shared memory hardware, while [http://en.wikipedia.org/wiki/Message_passing message-passing] programming model was adopted on [http://en.wikipedia.org/wiki/Distributed_memory distributed memory] hardware. However, nowadays the boundary has been broken. With the support of a shared [http://en.wikipedia.org/wiki/Virtual_memory virtual memory] layer, we can program in shared memory fashion on distributed memory hardware. | ||

This article discusses how the shared-memory programming model is implemented on distributed-memory hardware. It provides some basic definitions of the memory models available as well as their strengths and weaknesses. It then goes into more depth on DSM systems, discussing the various ways in which communication takes place between [http://en.wikipedia.org/wiki/Central_processing_unit processors] as well as examples of how these are implemented in various DSM systems. The article also covers the transfer size of messages, the relative performance of SAS programming versus the message passing model on distributed systems, and finishes up with algorithms used in SAS programming. | |||

=== | ===Memory Model Definitions=== | ||

[http://en.wikipedia.org/wiki/Parallel_programming_model Parallel Programming models] traditionally break down into two main types: | |||

* SAS Programming - Shared Address Space Programming, also referred to as the Shared Memory Model, is a style of [http://en.wikipedia.org/wiki/Parallel_computing parallel programming] in which all the processors in the system have read and write access to the same memory. Information private to a single processor can be written to a designated memory location, but anything that is to be shared should be written to the shared location.<ref>http://www-public.int-evry.fr/~gibson/Teaching/CSC5021/ReadingMaterial/DagumMenon98.pdf</ref> | |||

* Distributed-Memory Machine - In the Distributed Memory Model, each processor has access only to its own memory. Information is shared between processors through a message passing system.<ref>http://www.cparity.com/projects/AcmClassification/samples/201065.pdf</ref> | |||

=====Distributed-memory | ===Strengths and Weakness of Memory Models=== | ||

In order to be effective, parallel processing must be supported on both a software level and a hardware level, with the appropriate systems in between. Different memory models have been developed which handle parallel processing in distinct ways. | |||

====Shared-memory==== | |||

This model is widely considered to be the most straightforward to program for. The main shortcoming of this model, however, is that it does not scale well due to the fact that the processors must share a common [http://en.wikipedia.org/wiki/Bus_(computing) bus] which [http://en.wikipedia.org/wiki/Synchronization_(computer_science)#Thread_or_process_synchronization serializes] a significant portion of communication. | |||

====Distributed-memory==== | |||

This model has the opposite strengths and weaknesses of the shared-memory model. It scales well as long as the machines are on a highspeed network, but the message-passing model associated with distributed-memory systems is much more difficult to program for. | This model has the opposite strengths and weaknesses of the shared-memory model. It scales well as long as the machines are on a highspeed network, but the message-passing model associated with distributed-memory systems is much more difficult to program for. | ||

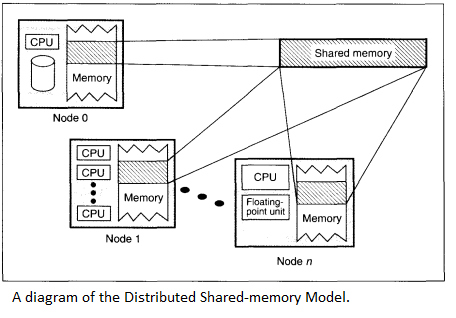

==== | ====Distributed Shared-memory==== | ||

=== | SAS programming on distributed-memory machines is an attempt to give us the best of both the shared-memory model and the distributed memory model. Frequently referred to as the [http://en.wikipedia.org/wiki/Distributed_shared_memory distributed shared-memory] model or DSM, it uses a virtual address space or similar abstraction which gives the impression that the memory is shared even though it isn’t.<ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.17.7084&rep=rep1&type=pdf</ref> | ||

DSMs are less efficient than a straight message passing system. The reason for this is that under the message passing model, the programmer is aware of when messages need to be passed and can program for those situations. With the DSM model, the programmer programs as if no messages need to be shared, but the DSM system must handle and attempt to anticipate when messages will need to be sent without knowing what applications will be running on it or how those applications have been programmed. <ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.52.4066&rep=rep1&type=pdf</ref> | DSMs are less efficient than a straight message passing system. The reason for this is that under the message passing model, the programmer is aware of when messages need to be passed and can program for those situations. With the DSM model, the programmer programs as if no messages need to be shared, but the DSM system must handle and attempt to anticipate when messages will need to be sent without knowing what applications will be running on it or how those applications have been programmed.<ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.52.4066&rep=rep1&type=pdf</ref> | ||

[[File: | [[File:dsm2.png|left|A diagram of the Distributed Shared-memory Model.]] | ||

=== | ===Communication between processors=== | ||

In the DSM, the system has to do a lookup for each reference to figure out if the data is local. If it’s not, a protocol action is invoked to acquire the data locally. This is referred to as access control.<ref>http://ftp.cs.wisc.edu/pub/techreports/1994/TR1238.pdf</ref> This is implemented in a variety of ways, including both hardware and software only solutions, as well solutions that include both hardware and software elements. All of these efforts are made in an attempt to bring the efficiency of the DSM model closer to that of a well-programmed message passing model. The efficiency issue with DSM boils down to how remote data is both stored and retrieved. There have been several different approaches to this problem | In the DSM, the system has to do a lookup for each reference to figure out if the data is local. If it’s not, a [http://en.wikipedia.org/wiki/Communications_protocol protocol] action is invoked to acquire the data locally. This is referred to as access control.<ref>http://ftp.cs.wisc.edu/pub/techreports/1994/TR1238.pdf</ref> This is implemented in a variety of ways, including both hardware and software only solutions, as well solutions that include both hardware and software elements. All of these efforts are made in an attempt to bring the efficiency of the DSM model closer to that of a well-programmed message passing model. The efficiency issue with DSM boils down to how remote data is both stored and retrieved. There have been several different approaches to this communication problem. | ||

====Static Data==== | ====Static Data==== | ||

Simply making the location of data within the overall system static solves the problem of how to find the data. This approach, however, creates a new problem of making sure that the data is properly distributed throughout the system. If all of the data ends up on a single node, this will create problems as multiple processes try to access the same data. | Simply making the location of data within the overall system static solves the problem of how to find the data. This approach, however, creates a new problem of making sure that the data is properly distributed throughout the system. If all of the data ends up on a single [http://en.wikipedia.org/wiki/Node_(networking) node], this will create problems as multiple processes try to access the same data. | ||

====Dynamic Data==== | ====Dynamic Data==== | ||

| Line 47: | Line 53: | ||

=====Broadcasting===== | =====Broadcasting===== | ||

With the [http://en.wikipedia.org/wiki/Broadcasting_(networking) broadcasting] approach, the node needing the data sends a message out to all the nodes in the system. Each of the nodes processes the message to determine if it has the data the sending node needs. If one of the nodes has the data, it return the data to the sending node. The problem with this approach is that it creates a lot of overhead as every node has to process the broadcast. | |||

=====Owner based distribution===== | =====Owner based distribution===== | ||

| Line 53: | Line 59: | ||

This system keeps track of the original owner of each piece of data. A node which needs the data then queries the original owner of the data, which passes the request off to the new owner if it has moved. This can result in excessive forwarding.<ref>http://www.cdf.toronto.edu/~csc469h/fall/handouts/nitzberg91.pdf</ref> | This system keeps track of the original owner of each piece of data. A node which needs the data then queries the original owner of the data, which passes the request off to the new owner if it has moved. This can result in excessive forwarding.<ref>http://www.cdf.toronto.edu/~csc469h/fall/handouts/nitzberg91.pdf</ref> | ||

===Examples of DSM Systems=== | |||

====Dash==== | |||

Developed at Stanford, this is a hardware-based system that uses [http://en.wikipedia.org/wiki/Point-to-point_protocol point-to-point] messaging instead of a broadcast system to share messages. This is known as the Directory-Based Cache Coherence Protocol.<ref>http://web.cecs.pdx.edu/~alaa/ece588/papers/lenoski_isca_1990.pdf</ref> | |||

====Munin==== | |||

This DSM system was aimed at providing programmers a way to program using the Share-memory model without having to cater to the idiosyncrasies of the different flavors of DSM systems available at the time. It did this by utilizing multiple consistency protocols based on the type of variable being shared and multiple concurrent writers to reduce [http://en.wikipedia.org/wiki/False_sharing false sharing].<ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.144.8558&rep=rep1&type=pdf</ref> | |||

====TreadMarks==== | |||

TreadMarks is a software-based system that runs on standard Unix systems. It features a relaxed memory model and uses a virtual memory hardware for detecting when data has been accessed.<ref>http://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=25B1BF76E5CCA72AFC4F6F9A524F233B?doi=10.1.1.50.6377&rep=rep1&type=pdf</ref> | |||

===Transfer=== | |||

The class of DSM machines is undoubtedly the fastest growing part in the family of high-performance computers. Although this DSM of machines is more difficult to deal with than shared-memory machines and DM-SIMD machines, the advantages of DSM-MIMD systems are clear: the bandwidth problem that haunts shared-memory systems is avoided because the bandwidth scales up automatically with the number of processors. Furthermore, the speed of the memory which is another critical issue with shared-memory systems (to get a peak performance that is comparable to that of DSM-MIMD systems, the processors of the shared-memory machines should be very fast and the speed of the memory should match it) is less important for the DSM-MIMD machines, because more processors can be configured without the afore mentioned bandwidth problems. | |||

<ref>http://www.netlib.org/utk/papers/advanced-computers/dm-mimd.html</ref> | <ref>http://www.netlib.org/utk/papers/advanced-computers/dm-mimd.html</ref> | ||

| Line 63: | Line 82: | ||

<ref>http://sslab.cs.nthu.edu.tw/~jimmy/present_2004/02_26/Introduction%20to%20Software%20Distributed%20Shared%20Memory%20Systems.ppt</ref> | <ref>http://sslab.cs.nthu.edu.tw/~jimmy/present_2004/02_26/Introduction%20to%20Software%20Distributed%20Shared%20Memory%20Systems.ppt</ref> | ||

===Performance=== | |||

Currently, message passing (MP) and shared address space (SAS) are the two leading programming paradigms for these systems. MP has been standardized with MPI, and is the most common and mature parallel programming approach. However, MP code development can be extremely difficult, especially for irregularly structured computations. SAS offers substantial ease of programming, but may suffer from performance limitations due to poor spatial locality and high protocol overhead. | |||

Approaches to support SAS in software across clusters differ not only in the specialization and efficiencies of networks but also in the granularities at which they provide coherence. | |||

Fine-grained software coherence uses either code instrumentation for access control or commodity oriented hardware support with the protocol implemented in software. Page-grained software coherence takes advantage of the virtual memory management facilities to provide replication and coherence at page granularity. | |||

Much research has been done in the design and implementation of shared address space (SAS) for clustered architectures, both at page and at finer fixed granularities through code instrumentation. Among the most popular ways to support a coherent SAS in software on clusters is page-based shared virtual memory (SVM). SVM provides replication and coherence at the page granularity by taking advantage of virtual memory management facilities. | |||

It is possible to achieve high scalability on commodity clusters using both MPI and SAS programming approaches, and for certain applications, however, MPI significantly outperforms the SAS implementation, since the latter has higher RMEM and SYNC. These costs are due to the expensive protocol overheads of performing all-to-all communications | |||

The difference in these two approaches stems from the relatively high latency and low bandwidth of the cluster, where it is more efficient to send fewer messages in exchange for increased computational requirements of assembling the scattered data chunks. | |||

<ref>http:// | <ref>http://crd-legacy.lbl.gov/~oliker/papers/ipdps01.pdf</ref> | ||

===Algorithm=== | |||

Recently, clusters of workstations or multiprocessors have become widely and cheaply available to high-end application users as well. Since they are capable of delivering high computational performance, they are important and viable platforms for next-generation supercomputing. As a result, the coherent shared address space model (like message passing) has been supported on a much greater variety of systems with both different granularities as well as varying levels of hardware support in the communication architecture. The focus is on using more commodity-oriented communication architectures, either by relaxing the integration and specialization of the communication controller or by leveraging the virtual memory mechanism to produce coherence at page granularity (shared virtual memory), or by providing access control in software, in almost all cases running its protocol in software. For the shared address space model to truly deliver on its ease of programming advantages for complex, irregular applications such as tree-based N-body applications to end users—for whom it may be important that their codes run well across a range of available platforms—it is very important that performance not only be good on hardware cache-coherent systems but also port well across these important platforms. | |||

, | |||

, | |||

, | |||

, | |||

, | |||

In algorithms, particles are loaded directly into a single shared tree. As a result, whenever a cell is actually modified (e.g. particles or new children added) a lock is needed to implement the necessary mutual exclusion. This causes a lot of synchronization, contention and remote access, which are usually expensive in these systems. | |||

The following is the code skeleton to build the global tree: | |||

<pre> | <pre> | ||

MakeGlobalTree() | |||

{ | |||

cell *Local_Tree; | |||

Local_Tree = InitRoot(); | |||

InsertParticlesInTree(Local_Particles, Local_Tree); | |||

MergeLocalTrees (Local_Tree, Global_Tree); | |||

BARRIER; | |||

} | |||

</pre> | </pre> | ||

In this algorithm, The function InsertParticlesInTree is responsible for building a local tree ( Local Tree ) for each processor using only those particles (Local Particles) that are currently assigned to it (from the previous time step). | |||

<ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3761&rep=rep1&type=pdf</ref> | |||

==References== | ==References== | ||

<references></references> | <references></references> | ||

Latest revision as of 18:03, 7 February 2012

SAS programming on distributed-memory machines

Introduction

Decades ago, the shared-memory programming model was typically implemented on shared-memory hardware, while the message-passing programming model was adopted on distributed-memory hardware. Today that boundary has been broken: with the support of a shared virtual memory layer, we can program in a shared-memory fashion on distributed-memory hardware.

This article discusses how the shared-memory programming model is implemented on distributed-memory hardware. It provides some basic definitions of the memory models available as well as their strengths and weaknesses. It then goes into more depth on DSM systems, discussing the various ways in which communication takes place between processors as well as examples of how these are implemented in various DSM systems. The article also covers the transfer size of messages, the relative performance of SAS programming versus the message passing model on distributed systems, and finishes up with algorithms used in SAS programming.

Memory Model Definitions

Parallel programming models traditionally break down into two main types:

- SAS Programming - Shared Address Space Programming, also referred to as the Shared Memory Model, is a style of parallel programming in which all the processors in the system have read and write access to the same memory. Information private to a single processor can be written to a designated memory location, but anything that is to be shared should be written to the shared location.<ref>http://www-public.int-evry.fr/~gibson/Teaching/CSC5021/ReadingMaterial/DagumMenon98.pdf</ref>

- Distributed-Memory Machine - In the Distributed Memory Model, each processor has access only to its own memory. Information is shared between processors through a message passing system.<ref>http://www.cparity.com/projects/AcmClassification/samples/201065.pdf</ref>

Strengths and Weaknesses of Memory Models

In order to be effective, parallel processing must be supported on both a software level and a hardware level, with the appropriate systems in between. Different memory models have been developed which handle parallel processing in distinct ways.

Shared-memory

The shared-memory model is widely considered to be the most straightforward to program for. Its main shortcoming, however, is that it does not scale well, because the processors must share a common bus, which serializes a significant portion of communication.

Distributed-memory

This model has the opposite strengths and weaknesses of the shared-memory model. It scales well as long as the machines are on a high-speed network, but the message-passing model associated with distributed-memory systems is much more difficult to program for.

Distributed Shared-memory

SAS programming on distributed-memory machines is an attempt to combine the best of the shared-memory model and the distributed-memory model. Frequently referred to as the distributed shared-memory model, or DSM, it uses a virtual address space or a similar abstraction that gives the impression that the memory is shared even though it is not.<ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.17.7084&rep=rep1&type=pdf</ref>

DSMs are generally less efficient than a straight message-passing system. Under the message-passing model, the programmer knows when messages need to be passed and can program for those situations. Under the DSM model, the programmer writes code as if no messages need to be sent, so the DSM system must handle, and attempt to anticipate, when messages will be needed without knowing what applications will be running on it or how those applications have been programmed.<ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.52.4066&rep=rep1&type=pdf</ref>

Communication between processors

In a DSM, the system has to do a lookup for each reference to determine whether the data is local. If it is not, a protocol action is invoked to acquire the data locally. This is referred to as access control.<ref>http://ftp.cs.wisc.edu/pub/techreports/1994/TR1238.pdf</ref> Access control is implemented in a variety of ways, including hardware-only solutions, software-only solutions, and solutions that combine hardware and software elements. All of these efforts attempt to bring the efficiency of the DSM model closer to that of a well-programmed message-passing model. The efficiency issue with DSM boils down to how remote data is both stored and retrieved. There have been several different approaches to this communication problem.
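The lookup-then-fetch step can be sketched in C as follows. This is a minimal illustration and not the mechanism of any particular DSM system: the block table, the `fetch_remote` stand-in and all other names are hypothetical, and a real system would send a request over the network where this sketch simply copies in a value supplied by the caller.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_BLOCKS 8          /* hypothetical number of shared blocks */

/* Per-node access-control table: is each shared block present locally? */
typedef struct {
    bool present[NUM_BLOCKS];
    int  data[NUM_BLOCKS];
    int  protocol_actions;    /* how many times remote data had to be fetched */
} dsm_node;

/* Stand-in for the protocol action; a real DSM would message the remote node.
 * remote_value simulates the value currently held elsewhere in the system. */
static void fetch_remote(dsm_node *n, size_t block, int remote_value)
{
    n->data[block] = remote_value;
    n->present[block] = true;
    n->protocol_actions++;
}

/* Every shared read goes through the access check first. */
static int dsm_read(dsm_node *n, size_t block, int remote_value)
{
    assert(block < NUM_BLOCKS);
    if (!n->present[block])            /* access control: local lookup */
        fetch_remote(n, block, remote_value);
    return n->data[block];             /* served from local memory */
}
```

The first read of a block triggers one protocol action; later reads of the same block are served locally. The check itself still runs on every reference, which is the overhead that hardware and software schemes try to reduce.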

Static Data

Simply making the location of data within the overall system static solves the problem of how to find the data. This approach, however, creates a new problem of making sure that the data is properly distributed throughout the system. If all of the data ends up on a single node, this will create problems as multiple processes try to access the same data.
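With static placement, the home node of a piece of data can be computed from its address alone. The sketch below assumes a simple block-cyclic mapping; the mapping, the block size and the function names are illustrative assumptions rather than anything prescribed by a specific system. The counting helper makes the skew visible when most accesses fall on one node.

```c
#include <assert.h>
#include <stddef.h>

#define BLOCK_SIZE 1024u   /* hypothetical block size in bytes */

/* Home node of an address under a fixed block-cyclic distribution. */
static unsigned home_node(size_t addr, unsigned num_nodes)
{
    assert(num_nodes > 0);
    return (unsigned)((addr / BLOCK_SIZE) % num_nodes);
}

/* Count how many of the given accesses land on each node. */
static void count_accesses(const size_t *addrs, size_t n,
                           unsigned num_nodes, unsigned *hits)
{
    for (unsigned i = 0; i < num_nodes; i++)
        hits[i] = 0;
    for (size_t i = 0; i < n; i++)
        hits[home_node(addrs[i], num_nodes)]++;
}
```

No lookup messages are needed to find the data, but if the addresses an application touches all map to one node, that node serves every request.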

Dynamic Data

All other approaches to accessing remote data assume that data will move throughout the system as needed, and thus involve some way of keeping track of this data.

Centralized Server

This approach greatly simplifies the task of finding remote data by having a single server keep track of data as it moves around the system. The problem with this approach is that it serializes the task of finding remote memory locations, which all but defeats the purpose of having a parallel system in the first place.

Broadcasting

With the broadcasting approach, the node needing the data sends a message out to all the nodes in the system. Each node processes the message to determine whether it has the data the sending node needs. If one of the nodes has the data, it returns the data to the sending node. The problem with this approach is that it creates a lot of overhead, as every node has to process the broadcast.

Owner based distribution

This system keeps track of the original owner of each piece of data. A node which needs the data then queries the original owner of the data, which passes the request off to the new owner if it has moved. This can result in excessive forwarding.<ref>http://www.cdf.toronto.edu/~csc469h/fall/handouts/nitzberg91.pdf</ref>
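The forwarding behaviour can be illustrated with a small simulation. In this sketch each node records only where it last sent a block (a hypothetical `next_hint` array), and a request follows those hints from the original owner until it reaches the node that currently owns the block. The names and the hop-counting interface are assumptions made for illustration.

```c
#include <assert.h>

#define NUM_NODES 6   /* hypothetical system size */

/* next_hint[i] is the node that node i believes holds the block;
 * the current owner points to itself. */
typedef struct {
    int next_hint[NUM_NODES];
} block_dir;

/* Follow forwarding hints from the original owner to the current owner.
 * Returns the current owner and stores the number of forwards in *hops. */
static int locate_owner(const block_dir *d, int original_owner, int *hops)
{
    int node = original_owner;
    *hops = 0;
    while (d->next_hint[node] != node) {   /* not the owner: forward */
        node = d->next_hint[node];
        (*hops)++;
        assert(*hops < NUM_NODES);         /* a hint cycle would be a bug */
    }
    return node;
}

/* Move ownership from the current owner to new_owner, leaving a hint behind. */
static void migrate(block_dir *d, int current_owner, int new_owner)
{
    d->next_hint[current_owner] = new_owner;
    d->next_hint[new_owner] = new_owner;
}
```

Each migration adds one more hop to the chain that a request starting at the original owner must follow, which is the excessive forwarding described above.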

Examples of DSM Systems

Dash

Developed at Stanford, this is a hardware-based system that uses point-to-point messaging instead of a broadcast system to share messages. This is known as the Directory-Based Cache Coherence Protocol.<ref>http://web.cecs.pdx.edu/~alaa/ece588/papers/lenoski_isca_1990.pdf</ref>

Munin

This DSM system aimed to give programmers a way to program using the shared-memory model without having to cater to the idiosyncrasies of the different flavors of DSM systems available at the time. It did this by using multiple consistency protocols, chosen according to the type of variable being shared, and by allowing multiple concurrent writers to reduce false sharing.<ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.144.8558&rep=rep1&type=pdf</ref>
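One common way to let several nodes write to the same page at once is a twin-and-diff scheme: a writer copies the page before its first write, and later sends only the words that differ from that copy. The sketch below illustrates the idea under simplifying assumptions (a tiny fixed-size page of `int` words, and hypothetical function names); it is not Munin's actual code.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define PAGE_WORDS 8   /* hypothetical, tiny page for illustration */

/* Copy the page before the first local write so changes can be found later. */
static void make_twin(const int *page, int *twin)
{
    memcpy(twin, page, PAGE_WORDS * sizeof(int));
}

/* Apply to `home` only the words this writer changed relative to its twin.
 * Returns the number of words written. */
static size_t apply_diff(const int *page, const int *twin, int *home)
{
    size_t changed = 0;
    for (size_t i = 0; i < PAGE_WORDS; i++) {
        if (page[i] != twin[i]) {
            home[i] = page[i];
            changed++;
        }
    }
    return changed;
}
```

Two writers that modify different words of the same page can both merge their changes without overwriting each other, so the page does not have to bounce between them on every write.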

TreadMarks

TreadMarks is a software-based system that runs on standard Unix systems. It features a relaxed memory model and uses virtual memory hardware to detect when data has been accessed.<ref>http://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=25B1BF76E5CCA72AFC4F6F9A524F233B?doi=10.1.1.50.6377&rep=rep1&type=pdf</ref>

Transfer

The class of DSM machines has been described as the fastest-growing part of the family of high-performance computers. Although this class of machines is more difficult to deal with than shared-memory machines and DM-SIMD machines, the advantages of DSM-MIMD systems are clear. The bandwidth problem that haunts shared-memory systems is avoided because the bandwidth scales up automatically with the number of processors. Memory speed, another critical issue for shared-memory systems, is also less important for DSM-MIMD machines: to reach a peak performance comparable to that of DSM-MIMD systems, the processors of a shared-memory machine must be very fast and the memory speed must match, whereas a DSM-MIMD machine can be configured with more processors without the aforementioned bandwidth problems. <ref>http://www.netlib.org/utk/papers/advanced-computers/dm-mimd.html</ref>

A distributed-memory system, often called a multicomputer, consists of multiple independent processing nodes with local memory modules, connected by a general interconnection network. The choice of block size for transfers depends on several factors, including the cost of communication (for example, sending a 1-byte message versus a 1024-byte message) and the locality of reference in the application. Most DSM systems use a page-based granularity of 1 KB to 8 KB. A larger page size can make better use of locality of reference. <ref>http://sslab.cs.nthu.edu.tw/~jimmy/present_2004/02_26/Introduction%20to%20Software%20Distributed%20Shared%20Memory%20Systems.ppt</ref>
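The trade-off between a 1-byte and a 1024-byte message can be made concrete with a simple linear cost model, in which a transfer costs a fixed latency plus a per-byte term. The model, the function names and any numbers plugged into it are assumptions for illustration; real networks have more complicated behaviour.

```c
#include <assert.h>
#include <stddef.h>

/* Time to move `bytes` in one message: fixed start-up latency plus
 * a bandwidth-limited term. Units: microseconds and bytes per microsecond. */
static double transfer_time_us(size_t bytes, double latency_us,
                               double bytes_per_us)
{
    assert(bytes_per_us > 0.0);
    return latency_us + (double)bytes / bytes_per_us;
}

/* Effective cost of each byte moved when sending `bytes` at once. */
static double cost_per_byte_us(size_t bytes, double latency_us,
                               double bytes_per_us)
{
    assert(bytes > 0);
    return transfer_time_us(bytes, latency_us, bytes_per_us) / (double)bytes;
}
```

With a hypothetical latency of 100 microseconds and a bandwidth of 100 bytes per microsecond, a 1024-byte message takes only slightly longer than a 1-byte message, so the cost per byte drops sharply as the block grows. That benefit only materializes if the application actually uses the extra bytes, which is where locality of reference comes in.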

Performance

Currently, message passing (MP) and shared address space (SAS) are the two leading programming paradigms for these systems. MP has been standardized with MPI, and is the most common and mature parallel programming approach. However, MP code development can be extremely difficult, especially for irregularly structured computations. SAS offers substantial ease of programming, but may suffer from performance limitations due to poor spatial locality and high protocol overhead.

Approaches to supporting SAS in software across clusters differ not only in the specialization and efficiency of their networks but also in the granularity at which they provide coherence. Fine-grained software coherence uses either code instrumentation for access control or commodity-oriented hardware support with the protocol implemented in software. Page-grained software coherence takes advantage of the virtual memory management facilities to provide replication and coherence at page granularity.

Much research has been done in the design and implementation of shared address space (SAS) for clustered architectures, both at page and at finer fixed granularities through code instrumentation. Among the most popular ways to support a coherent SAS in software on clusters is page-based shared virtual memory (SVM). SVM provides replication and coherence at the page granularity by taking advantage of virtual memory management facilities.

It is possible to achieve high scalability on commodity clusters using both MPI and SAS programming approaches. For certain applications, however, MPI significantly outperforms the SAS implementation, since the latter spends more time on remote memory access (RMEM) and synchronization (SYNC). These costs are due to the expensive protocol overheads of performing all-to-all communications.

The difference in these two approaches stems from the relatively high latency and low bandwidth of the cluster, where it is more efficient to send fewer messages in exchange for increased computational requirements of assembling the scattered data chunks. <ref>http://crd-legacy.lbl.gov/~oliker/papers/ipdps01.pdf</ref>

Algorithm

Clusters of workstations or multiprocessors have become widely and cheaply available to high-end application users. Since they are capable of delivering high computational performance, they are important and viable platforms for next-generation supercomputing. As a result, the coherent shared address space model (like message passing) is now supported on a much greater variety of systems, with different granularities of coherence and varying levels of hardware support in the communication architecture. The focus is on using more commodity-oriented communication architectures: relaxing the integration and specialization of the communication controller, leveraging the virtual memory mechanism to provide coherence at page granularity (shared virtual memory), or providing access control in software. In almost all of these cases the protocol runs in software. For the shared address space model to truly deliver on its ease-of-programming advantages for complex, irregular applications such as tree-based N-body applications, performance must not only be good on hardware cache-coherent systems but also port well across these platforms, since end users may need their codes to run well across a range of available platforms.

In some tree-building algorithms, particles are loaded directly into a single shared tree. As a result, whenever a cell is modified (e.g. particles or new children are added), a lock is needed to implement the necessary mutual exclusion. This causes a lot of synchronization, contention and remote access, all of which are usually expensive in these systems.

The following code skeleton builds the global tree by first building a local tree on each processor and then merging the local trees:

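<pre>
MakeGlobalTree()
{
    cell *Local_Tree;

    /* Start with an empty root for this processor's private tree. */
    Local_Tree = InitRoot();

    /* Insert only this processor's particles; the tree is private, so no locks are needed. */
    InsertParticlesInTree(Local_Particles, Local_Tree);

    /* Combine the private tree into the shared global tree. */
    MergeLocalTrees(Local_Tree, Global_Tree);

    /* Wait until every processor has finished merging. */
    BARRIER;
}
</pre>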

In this algorithm, the function InsertParticlesInTree is responsible for building a local tree (Local_Tree) for each processor using only those particles (Local_Particles) that are currently assigned to it (from the previous time step). <ref>http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3761&rep=rep1&type=pdf</ref>

References

<references></references>