CSC/ECE 506 Spring 2012/1c dm: Difference between revisions

| (68 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

==Overview== | ==Overview== | ||

This wiki article explores the Multiple Instruction Single Data architecture of multiprocessors as classified by Flynn’s Taxonomy. The article starts with a description of Flynn’s Taxonomy and its classification followed by the MISD architecture and its implementation. It also talks about the authors' and researchers' comments about the real-world examples of | This wiki article explores the Multiple Instruction Single Data architecture of multiprocessors as classified by Flynn’s Taxonomy. The article starts with a description of Flynn’s Taxonomy and its classification followed by the [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Multiple_Instructions.2C_Single_Data_stream_.28MISD.29 MISD architecture] and its implementation. It also talks about the authors' and researchers' comments about the real-world examples of [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Multiple_Instructions.2C_Single_Data_stream_.28MISD.29 MISD architecture] and ends by providing examples of the architecture. | ||

==Multi Processor Systems== | ==Multi Processor Systems== | ||

The performance of a single processor system is generally limited by the frequency at which it operates and the amount of Instruction Level Parallelism (ILP) it can exploit. The slowdown in the rate of increase in uniprocessor performance arose due to the difficulty in running the processors at higher frequencies and diminishing returns from exploiting ILP. Thus, multiprocessor systems started becoming popular in applications such as servers, graphics-intensive tasks, and supercomputers. | The performance of a single processor system is generally limited by the frequency at which it operates and the amount of [http://en.wikipedia.org/wiki/Instruction-level_parallelism Instruction Level Parallelism (ILP)] it can exploit. The slowdown in the rate of increase in uniprocessor performance arose due to the difficulty in running the processors at higher frequencies and diminishing returns from exploiting ILP. Thus, multiprocessor systems started becoming popular in applications such as servers, graphics-intensive tasks, and supercomputers. | ||

A multiprocessor system uses two or more processing elements within a single system. Multiple tasks can be executed in parallel on these processing elements depending on the type of the system. The system can have the same kind of processing elements (Homogeneous System) or different kinds of processing elements supporting different types of tasks (Heterogeneous System). | A multiprocessor system uses two or more processing elements within a single system. Multiple tasks can be executed in parallel on these processing elements depending on the type of the system. The system can have the same kind of processing elements (Homogeneous System) or different kinds of processing elements supporting different types of tasks ([http://en.wikipedia.org/wiki/Heterogeneous_computing Heterogeneous System]). | ||

Multiprocessor systems are characterized by the number of instruction streams and the number of data streams the system has. Flynn’s Taxonomy gives the characterization of multiprocessor systems. | Multiprocessor systems are characterized by the number of instruction streams and the number of data streams the system has. [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Flynn.E2.80.99s_Taxonomy_of_Parallel_Computers.5B1.5D.5B2.5D Flynn’s Taxonomy] gives the characterization of multiprocessor systems. | ||

==Flynn’s Taxonomy of Parallel Computers<ref>http://en.wikipedia.org/wiki/Flynn's_taxonomy</ref><ref>http://www.phy.ornl.gov/csep/ca/node11.html</ref>== | ==Flynn’s Taxonomy of Parallel Computers<ref>http://en.wikipedia.org/wiki/Flynn's_taxonomy</ref><ref>http://www.phy.ornl.gov/csep/ca/node11.html</ref>== | ||

Flynn defined the taxonomy of parallel computers [Flynn, 1972] based on the number of instruction streams and data streams. | Flynn defined the taxonomy of parallel computers [[http://en.wikipedia.org/wiki/Michael_J._Flynn Flynn], 1972] based on the number of instruction streams and data streams. | ||

• An Instruction stream is a sequence of instructions followed from a single program counter | • An Instruction stream is a sequence of instructions followed from a single program counter | ||

| Line 21: | Line 21: | ||

A control unit fetches instructions from a single program counter, decodes them, and issues them to the processing element. The processing element is assumed to be a functional unit. Instruction and data are both supplied from the memory. | A control unit fetches instructions from a single program counter, decodes them, and issues them to the processing element. The processing element is assumed to be a functional unit. Instruction and data are both supplied from the memory. | ||

The four classifications defined by Flynn, based upon the number of concurrent instruction (or control) and data streams available in the architecture, are: | The four classifications defined by Flynn, based upon the number of concurrent instruction (or control) and data streams available in the architecture, are:<ref>https://computing.llnl.gov/tutorials/parallel_comp/#Flynn</ref> | ||

[[Image:Flynn's Taxonomy.PNG|thumb|center|400px|Figure 1. Flynn's Taxonomy]] | [[Image:Flynn's Taxonomy.PNG|thumb|center|400px|Figure 1. [http://en.wikipedia.org/wiki/Michael_J._Flynn Flynn]'s Taxonomy [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-0 1]]]] | ||

===Single Instruction, Single Data stream (SISD)=== | ===Single Instruction, Single Data stream (SISD)=== | ||

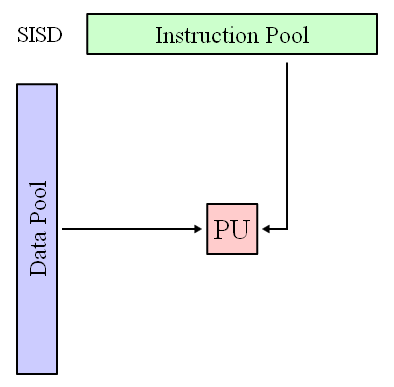

[[Image:SISD.PNG|thumb|right|100px|Figure 2. SISD]] | [[Image:SISD.PNG|thumb|right|100px|Figure 2. SISD [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-0 1]]]] | ||

SISD (single instruction, single data) is a term referring to a computer architecture in which a single processor, a uniprocessor, executes a single instruction stream, to operate on data stored in a single memory. Even though there is only one stream of instructions, parallelism between the instructions from the stream can be exploited when the instructions are independent from one another. This corresponds to the von Neumann architecture. | SISD (single instruction, single data) is a term referring to a computer architecture in which a single processor, a uniprocessor, executes a single instruction stream, to operate on data stored in a single memory. Even though there is only one stream of instructions, parallelism between the instructions from the stream can be exploited when the instructions are independent from one another. This corresponds to the [http://en.wikipedia.org/wiki/Von_Neumann_model von Neumann architecture]. | ||

It is a type of sequential computer which exploits no parallelism in either the instruction or data streams. A single control unit (CU) fetches a single Instruction Stream (IS) from memory. The CU then generates the appropriate control signals to direct a single processing element (PE) to operate on a single Data Stream (DS), i.e. one operation at a time. | It is a type of sequential computer which exploits no parallelism in either the instruction or data streams. A single control unit (CU) fetches a single Instruction Stream (IS) from memory. The CU then generates the appropriate control signals to direct a single processing element (PE) to operate on a single Data Stream (DS), i.e. one operation at a time. | ||

| Line 35: | Line 35: | ||

===Single Instruction, Multiple Data streams (SIMD)=== | ===Single Instruction, Multiple Data streams (SIMD)=== | ||

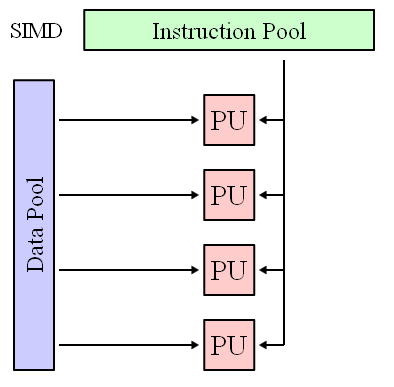

[[Image:SIMD.PNG|thumb|right|100px|Figure 3. SIMD Architecture]] | [[Image:SIMD.PNG|thumb|right|100px|Figure 3. SIMD Architecture [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-0 1]]]] | ||

SIMD is a parallel architecture in which a single instruction operates on multiple data. An example of SIMD architectures can be found in vector processors. SIMD is known for its efficiency in terms of the instruction count needed to perform a computation task. | SIMD is a parallel architecture in which a single instruction operates on multiple data. An example of SIMD architectures can be found in vector processors. SIMD is known for its efficiency in terms of the instruction count needed to perform a computation task. | ||

| Line 42: | Line 42: | ||

===Multiple Instructions, Single Data stream (MISD)=== | ===Multiple Instructions, Single Data stream (MISD)=== | ||

[[Image:MISD.PNG|thumb|right|100px|Figure 4. MISD Architecture]] | [[Image:MISD.PNG|thumb|right|100px|Figure 4. MISD Architecture [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-0 1]]]] | ||

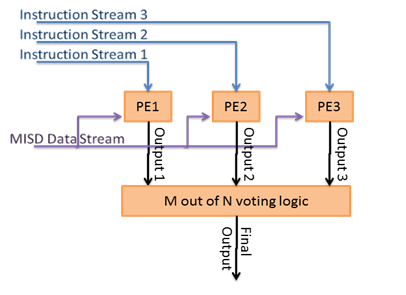

MISD (multiple instruction, single data) is an architecture in which multiple processing elements execute from different instruction streams, and data is passed from one processing element to the next. It is a type of parallel computing architecture where many functional units perform different operations on the same data. | MISD (multiple instruction, single data) is an architecture in which multiple processing elements execute from different instruction streams, and data is passed from one processing element to the next. It is a type of parallel computing architecture where many functional units perform different operations on the same data. | ||

Pipeline architectures belong to this type, though a purist might say that the data is different after processing by each stage in the pipeline. Fault-tolerant computers executing the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may be considered to belong to this type. Not many instances of this architecture exist, as MIMD and SIMD are often more appropriate for common data parallel techniques. Specifically, they allow better scaling and use of computational resources than MISD does. | Pipeline architectures belong to this type, though a purist might say that the data is different after processing by each stage in the pipeline. Fault-tolerant computers executing the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may be considered to belong to this type. Not many instances of this architecture exist, as MIMD and SIMD are often more appropriate for common data parallel techniques. Specifically, they allow better scaling and use of computational resources than MISD does. | ||

However, one prominent example of MISD in computing is the Space Shuttle flight control computers. Another example of this machine is the systolic array, such as the CMU iWarp [BORKAR et al., 1990]. All the elements in this array are controlled by a global clock. On each cycle, an element will read a piece of data from one of its neighbors, perform a simple operation (e.g. add the incoming element to a stored value), and prepare a value to be written to a neighbor on the next step. | However, one prominent example of MISD in computing is the Space Shuttle flight control computers. Another example of this machine is the systolic array, such as the [http://www.cs.cmu.edu/~iwarp/ CMU iWarp] [BORKAR et al., 1990]. All the elements in this array are controlled by a global clock. On each cycle, an element will read a piece of data from one of its neighbors, perform a simple operation (e.g. add the incoming element to a stored value), and prepare a value to be written to a neighbor on the next step. | ||

===Multiple Instruction, Multiple Data streams (MIMD)=== | ===Multiple Instruction, Multiple Data streams (MIMD)=== | ||

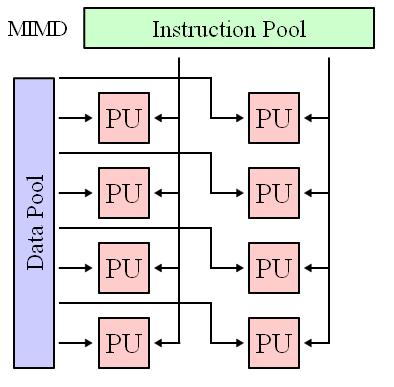

[[Image:MIMD.PNG|thumb|right|100px|Figure 5. MIMD Architecture]] | [[Image:MIMD.PNG|thumb|right|100px|Figure 5. MIMD Architecture [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-0 1]]]] | ||

MIMD (multiple instructions, multiple data) is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. MIMD architectures may be used in a number of application areas such as computer-aided design/computer-aided manufacturing, simulation, modeling, and as communication switches. MIMD machines can be of either shared memory or distributed memory categories. Shared memory machines may be of the bus-based, extended, or hierarchical type. Distributed memory machines may have hypercube or mesh interconnection schemes. | MIMD (multiple instructions, multiple data) is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. MIMD architectures may be used in a number of application areas such as computer-aided design/computer-aided manufacturing, simulation, modeling, and as communication switches. MIMD machines can be of either shared memory or distributed memory categories. Shared memory machines may be of the bus-based, extended, or hierarchical type. Distributed memory machines may have hypercube or mesh interconnection schemes. | ||

| Line 60: | Line 60: | ||

A systolic array is an arrangement of processors in an array where data flows synchronously across the array between neighbors, usually with different data flowing in different directions. At each step, each processor takes in data from one or more neighbors, processes it and, in the next step, outputs results in the opposite direction. | A systolic array is an arrangement of processors in an array where data flows synchronously across the array between neighbors, usually with different data flowing in different directions. At each step, each processor takes in data from one or more neighbors, processes it and, in the next step, outputs results in the opposite direction. | ||

The systolic array paradigm, data-stream-driven by data counters, is the counterpart of the [http://en.wikipedia.org/wiki/Von_Neumann_model von Neumann paradigm], instruction-stream-driven by a program counter. Because a systolic array usually sends and receives multiple data streams, and multiple data counters are needed to generate these data streams, it supports data parallelism. The name derives from analogy with the regular pumping of blood by the heart.<ref>http://en.wikipedia.org/wiki/Systolic_array</ref> | |||

====Type of Systolic Arrays<ref>http://home.engineering.iastate.edu/~zambreno/classes/cpre583/documents/JohHur93A.pdf General Purpose Systolic Arrays </ref>==== | |||

====Type of Systolic Arrays==== | |||

=====Special-purpose systolic array===== | =====Special-purpose systolic array===== | ||

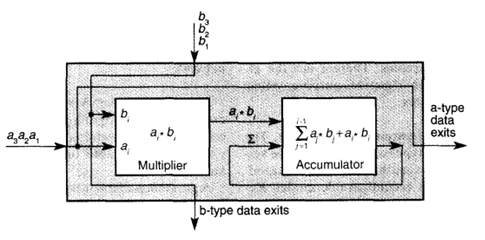

[[Image:systolic_1.png|thumb|right| | [[Image:systolic_1.png|thumb|right|250px|Figure 6: The algorithm for the sum of a scalar product, computed in systolic element [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-4 5]]]] | ||

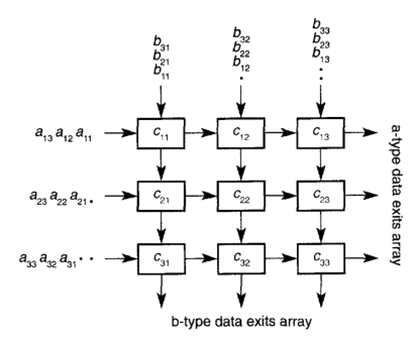

[[Image:systolic_2.png|thumb|right| | [[Image:systolic_2.png|thumb|right|250px|Figure 7: The systolic product of two 3x3 matrices [[http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-4 5]]]] | ||

A special-purpose systolic array is an array of hardwired systolic processing elements tailored for a specific application. Typically, many tens or hundreds of cells fit on a single chip. One of the major applications of special-purpose systolic arrays is in matrix operations. Figure 6 illustrates the algorithm for the sum of a scalar product, computed in a single systolic element. | A special-purpose systolic array is an array of hardwired systolic processing elements tailored for a specific application. Typically, many tens or hundreds of cells fit on a single chip. One of the major applications of special-purpose systolic arrays is in matrix operations. [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Systolic_1.png Figure 6] illustrates the algorithm for the sum of a scalar product, computed in a single systolic element. Here, the a’s and b’s are synchronously shifted through the processing element so that they are available for the next element. These data synchronously exit the processing element unmodified for the next element. The sum of the products is then shifted out of the accumulator. | ||
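The behaviour of the single element in Figure 6 can be sketched in a few lines of Python. This is only an illustrative software model, not taken from the cited paper: the names `SystolicCell`, `step`, `drain` and `scalar_product` are hypothetical, and one loop iteration stands in for one clock cycle.

```python
class SystolicCell:
    """Hypothetical model of one multiply-accumulate systolic element."""

    def __init__(self):
        self.acc = 0  # accumulator holding the running sum of products

    def step(self, a, b):
        """One clock cycle: accumulate a*b and pass both operands on unmodified."""
        self.acc += a * b
        return a, b  # these would be shifted to the neighbouring elements

    def drain(self):
        """Shift the accumulated sum out of the cell and clear the accumulator."""
        result, self.acc = self.acc, 0
        return result


def scalar_product(a_stream, b_stream):
    """Feed two operand streams through a single cell, one pair per cycle."""
    cell = SystolicCell()
    for a, b in zip(a_stream, b_stream):
        cell.step(a, b)
    return cell.drain()


print(scalar_product([1, 2, 3], [4, 5, 6]))  # 1*4 + 2*5 + 3*6
```

The operands returned by `step` are ignored here because there is only one cell; in an array they would become the inputs of the neighbouring cells.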

This principle easily extends to a matrix product as shown in Figure | This principle easily extends to a matrix product as shown in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Systolic_1.png Figure 7] and [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Systolic-array-for-matrix-multiplication.gif the animation] created with the help from the [http://www.iti.fh-flensburg.de/lang/papers/isa/isa2.htm link]. The only difference between single-element processing and array processing is that the latter delays each additional column and row by one cycle so that the columns and rows line up for a matrix multiply. The product matrix is shifted out after completion of processing. | ||
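The one-cycle delay per additional row and column can be made concrete with a small cycle-by-cycle simulation. The sketch below assumes square matrices and an arrangement in which each cell keeps its own accumulator while the a's flow to the right and the b's flow downward; the function name `systolic_matmul` and the register arrays are hypothetical and are not taken from the figure or the linked animation.

```python
def systolic_matmul(A, B):
    """Simulate an n x n systolic array computing A x B (square matrices assumed)."""
    n = len(A)
    acc = [[0] * n for _ in range(n)]    # one accumulator per cell
    a_reg = [[0] * n for _ in range(n)]  # a operand currently held by each cell
    b_reg = [[0] * n for _ in range(n)]  # b operand currently held by each cell

    # The last product reaches cell (n-1, n-1) at cycle 3n - 3.
    for t in range(3 * n - 2):
        # a's move one cell to the right; row i enters i cycles late.
        for i in range(n):
            for j in range(n - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
            k = t - i
            a_reg[i][0] = A[i][k] if 0 <= k < n else 0
        # b's move one cell down; column j enters j cycles late.
        for j in range(n):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
            k = t - j
            b_reg[0][j] = B[k][j] if 0 <= k < n else 0
        # Every cell performs the same multiply-accumulate on what it holds.
        for i in range(n):
            for j in range(n):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Because of the skewed entry times, cell (i, j) sees `A[i][k]` and `B[k][j]` in the same cycle for each k, which is exactly the lining-up of rows and columns that the delay provides.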

=====General-purpose systolic array===== | =====General-purpose systolic array===== | ||

| Line 80: | Line 78: | ||

A programmable systolic architecture is a collection of interconnected, general-purpose systolic cells, each of which is either programmable or reconfigurable. Programmable systolic cells are flexible processing elements specially designed to meet the computational and I/O requirements of systolic arrays. Programmable systolic architectures can be classified according to their cell inter-connection topologies: fixed or programmable. | A programmable systolic architecture is a collection of interconnected, general-purpose systolic cells, each of which is either programmable or reconfigurable. Programmable systolic cells are flexible processing elements specially designed to meet the computational and I/O requirements of systolic arrays. Programmable systolic architectures can be classified according to their cell inter-connection topologies: fixed or programmable. | ||

Reconfigurable systolic architectures capitalize on FPGA technology, which allows the user to configure a low-level logic circuit for each cell. Reconfigurable arrays also have either fixed or reconfigurable cell interconnections. The user configures an array’s topology by means of a switch lattice. Any general-purpose array that is not conventionally programmable is usually considered reconfigurable. All FPGA | Reconfigurable systolic architectures capitalize on [http://en.wikipedia.org/wiki/Field-programmable_gate_array FPGA] technology, which allows the user to configure a low-level logic circuit for each cell. Reconfigurable arrays also have either fixed or reconfigurable cell interconnections. The user configures an array’s topology by means of a switch lattice. Any general-purpose array that is not conventionally programmable is usually considered reconfigurable. All [http://en.wikipedia.org/wiki/Field-programmable_gate_array FPGA] re-configuring is static due to technology limitations. | ||

Hybrid models make use of both VLSI and FPGA technology. They usually consist of VLSI circuits embedded in an FPGA-reconfigurable interconnection network. | Hybrid models make use of both [http://en.wikipedia.org/wiki/Very-large-scale_integration VLSI] and [http://en.wikipedia.org/wiki/Field-programmable_gate_array FPGA] technology. They usually consist of VLSI circuits embedded in an FPGA-reconfigurable interconnection network. | ||

=====Programmable Systolic Array===== | =====Programmable Systolic Array===== | ||

| Line 90: | Line 88: | ||

* '''SIMD (Single Instruction Multiple Data)''' | * '''SIMD (Single Instruction Multiple Data)''' | ||

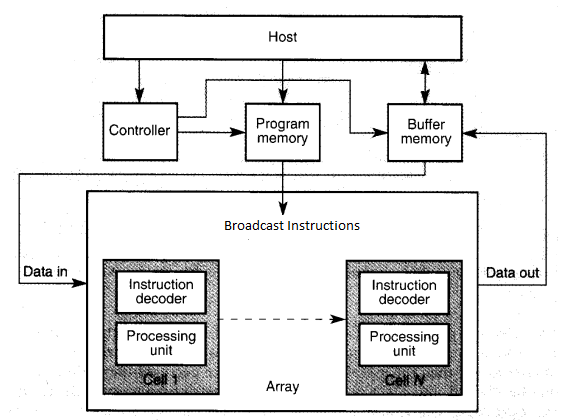

[[Image:systolic_3.png|thumb|right| | [[Image:systolic_3.png|thumb|right|250px|Figure 8: General organization of SIMD programmable linear systolic arrays [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-4 5]]] | ||

In SIMD systolic machines (Figure 8) the host workstation preloads a controller and a memory, which are external to the array, with the instructions and data for the application. The systolic cells store no programs or instructions | In SIMD systolic machines ([http://expertiza.csc.ncsu.edu/wiki/index.php/File:Systolic_3.png Figure 8]) the host workstation preloads a controller and a memory, which are external to the array, with the instructions and data for the application. The systolic cells store no programs or instructions. As soon as the workstation enables execution, the controller sequences through the external memory, thereby delivering instructions and data to the systolic array. Within the array, instructions are broadcast and all cells perform the same operation on different data. Adjacent cells may share memory, but generally no memory is shared by the entire array. After exiting the array, data is collected in the external buffer memory. | ||

This architecture can also be classified based on the number of instruction and data streams as Single Instruction Single Data (SISD) architecture as all the PEs are fed from the same instruction stream and the single data stream passes through all the PEs. | This architecture can also be classified based on the number of instruction and data streams as Single Instruction Single Data (SISD) architecture as all the PEs are fed from the same instruction stream and the single data stream passes through all the PEs. | ||
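The broadcast behaviour of the SIMD organization described above can be sketched as follows. This is a simplified software analogy under stated assumptions: the instruction set (`add`, `mul`), the `(op_name, operand)` instruction format and the function name `run_simd` are all hypothetical, and the neighbour-to-neighbour data movement of a real systolic array is left out.

```python
import operator

# Hypothetical two-instruction set understood by every cell.
OPS = {"add": operator.add, "mul": operator.mul}


def run_simd(program, cell_data):
    """Controller loop: broadcast each instruction to all cells in turn.

    program   -- list of (op_name, operand) pairs held outside the array
    cell_data -- one datum per systolic cell
    """
    data = list(cell_data)  # the cells store data only, never instructions
    for op_name, operand in program:
        op = OPS[op_name]
        # Same instruction in every cell, applied to that cell's own datum.
        data = [op(x, operand) for x in data]
    return data


print(run_simd([("add", 1), ("mul", 2)], [1, 2, 3]))
```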

* '''MISD (Multiple Instruction Single Data)''' | * '''MISD (Multiple Instruction Single Data)''' | ||

[[Image:systolic_4.png|thumb|right| | [[Image:systolic_4.png|thumb|right|250px|Figure 9: General organization of MIMD programmable linear systolic arrays [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-4 5]]] | ||

The workstation downloads a program to each MISD (Figure 9) systolic cell. Each cell may be loaded with a different program, or all the cells in the array may be loaded with the same program. Each cell's architecture is somewhat similar to the conventional von Neumann architecture: It contains a control unit, an ALU, and local memory. MIMD systolic cells have more local memory than their SIMD counterparts to support the von Neumann-style organization. | The workstation downloads a program to each MISD ([http://expertiza.csc.ncsu.edu/wiki/index.php/File:Systolic_4.png Figure 9]) systolic cell. Each cell may be loaded with a different program, or all the cells in the array may be loaded with the same program. Each cell's architecture is somewhat similar to the conventional [http://en.wikipedia.org/wiki/Von_Neumann_model von Neumann architecture]: It contains a control unit, an ALU, and local memory. MIMD systolic cells have more local memory than their SIMD counterparts to support the von Neumann-style organization. | ||

This architecture is defined as Multiple Instruction Multiple Data (MIMD) architecture in [ | This architecture is defined as Multiple Instruction Multiple Data (MIMD) architecture in [http://home.engineering.iastate.edu/~zambreno/classes/cpre583/documents/JohHur93A.pdf 5]. The architecture has multiple instruction streams for the PEs and a single data stream passing through all the PEs. Thus, it can also be defined as Multiple Instruction Single Data (MISD) architecture. The classification of systolic array configurations is controversial, as explained in [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Architecture_of_systolic_arrays_as_against_MISD_architecture section 4.1.2.] | ||

=====Reconfigurable Systolic Array===== | =====Reconfigurable Systolic Array===== | ||

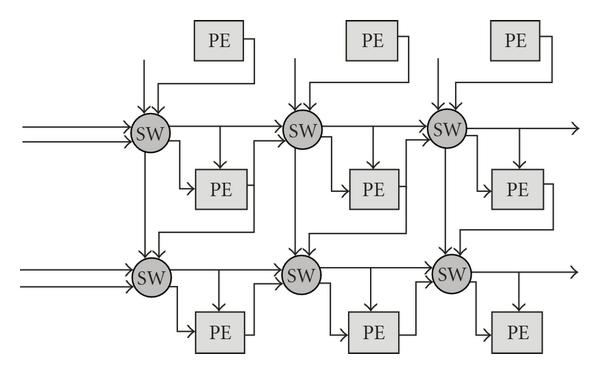

[[Image:reconfig.jpg|thumb|right| | [[Image:reconfig.jpg|thumb|right|250px|Figure 10: Block Diagram of the RSA Architecture [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-4 5]]] | ||

It is an array of systolic elements that can be programmed at the lowest level. Recent gate density advances in FPGA technology have produced a low-level, reconfigurable systolic array architecture that bridges the gap between special-purpose arrays and the more versatile, programmable general-purpose arrays. The FPGA architecture is unusual because a single hardware platform can be logically reconfigured as an exact duplicate of a special-purpose systolic array. | It is an array of systolic elements that can be programmed at the lowest level. Recent gate density advances in FPGA technology have produced a low-level, reconfigurable systolic array architecture that bridges the gap between special-purpose arrays and the more versatile, programmable general-purpose arrays. The FPGA architecture is unusual because a single hardware platform can be logically reconfigured as an exact duplicate of a special-purpose systolic array. | ||

The RSA circuit design is based on systolic array architecture consisting of PEs interconnected via SWs as depicted in Figure 10. The homogeneous characteristic of the Reconfigurable Systolic Array (RSA) architecture, where each reconfigurable processing element (PE) cell is connected to its nearest neighbors via configurable switch (SW) elements, enables array expansion for parallel processing and facilitates time sharing computation of high-throughput data by individual PEs. Both the PEs and SWs can be reconfigured dynamically, with the former acting as an arithmetic processor and the latter as a flexible router linking the neighboring PE cells. The RSA shifts reconfiguration and input signals into the PEs and SWs on a separate data bus, which enables the circuit to continue its operation while the reconfiguration is in process. | The RSA circuit design is based on systolic array architecture consisting of PEs interconnected via SWs as depicted in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Reconfig.jpg Figure 10]. The homogeneous characteristic of the Reconfigurable Systolic Array (RSA) architecture, where each reconfigurable processing element (PE) cell is connected to its nearest neighbors via configurable switch (SW) elements, enables array expansion for parallel processing and facilitates time sharing computation of high-throughput data by individual PEs. Both the PEs and SWs can be reconfigured dynamically, with the former acting as an arithmetic processor and the latter as a flexible router linking the neighboring PE cells. The RSA shifts reconfiguration and input signals into the PEs and SWs on a separate data bus, which enables the circuit to continue its operation while the reconfiguration is in process. | ||

====Architecture of systolic arrays as against MISD architecture==== | |||

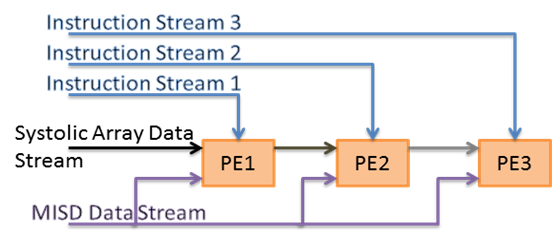

[[Image:comp.png|thumb|right|250px|Figure 11.Comparison between Architecture of systolic arrays and MISD]] | |||

[[Image:comp.png|thumb|right| | |||

From the systolic array configurations described above, it is seen that the configurations generally have multiple processing elements executing different instructions from dedicated instruction streams for each processing element. There is a single data stream that connects the adjacent PEs. Thus, a systolic array can be defined as an MISD architecture. | From the systolic array configurations described above, it is seen that the configurations generally have multiple processing elements executing different instructions from dedicated instruction streams for each processing element. There is a single data stream that connects the adjacent PEs. Thus, a systolic array can be defined as an MISD architecture. | ||

Many authors argue that, because the data read as input by one processing element is the processed output of the adjacent PE, the data stream cannot be considered single: the data paths do not carry the same data to all the PEs. Figure 11 shows the difference between the Data Stream for Systolic Arrays and the MISD architecture. Thus the systolic array should be considered a “Multiple Data” architecture and not a Single Data architecture. | Many authors argue that, because the data read as input by one processing element is the processed output of the adjacent PE, the data stream cannot be considered single: the data paths do not carry the same data to all the PEs. [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Comp.png Figure 11] shows the difference between the Data Stream for Systolic Arrays and the MISD architecture. Thus the systolic array should be considered a “Multiple Data” architecture and not a Single Data architecture. | ||
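The distinction drawn in this section can be illustrated with a short sketch. It is only an analogy in software: each processing element is modelled as a Python function, and the names `misd_broadcast` and `systolic_chain` are hypothetical.

```python
def misd_broadcast(programs, datum):
    """Strict MISD view: every PE runs its own program on the same datum."""
    return [program(datum) for program in programs]


def systolic_chain(programs, datum):
    """Systolic view: each PE reads what the previous PE produced."""
    inputs_seen = []
    for program in programs:
        inputs_seen.append(datum)  # the value this PE actually reads
        datum = program(datum)     # its output feeds the next PE
    return inputs_seen, datum


# Three PEs, each with a different instruction stream.
programs = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]

print(misd_broadcast(programs, 5))  # every PE read the value 5
print(systolic_chain(programs, 5))  # the PEs read 5, 6 and 12 in turn
```

In the first function all PEs read an identical input, while in the second the inputs differ from PE to PE, which is the basis of the "Multiple Data" argument.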

===Fault Tolerant Systems<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#Types_of_fault_tolerance</ref>=== | ===Fault Tolerant Systems<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#Types_of_fault_tolerance</ref>=== | ||

| Line 122: | Line 119: | ||

====History:<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#History</ref>==== | ====History:<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#History</ref>==== | ||

[[Image:fault.png|thumb|right| | [[Image:fault.png|thumb|right|250px|Figure 12 MISD as fault tolerant architecture]] | ||

The first known fault-tolerant computer was SAPO, built in 1951 in Czechoslovakia by Antonin Svoboda. | The first known fault-tolerant computer was [http://en.wikipedia.org/wiki/SAPO_(computer) SAPO], built in 1951 in [http://en.wikipedia.org/wiki/Czechoslovakia Czechoslovakia] by [http://en.wikipedia.org/wiki/Anton%C3%ADn_Svoboda Antonin Svoboda]. Its basic design was magnetic drums connected via relays, with a voting method of memory error detection. | ||

They separated into three distinct categories: | They separated into three distinct categories: | ||

| Line 134: | Line 131: | ||

Historically, the trend has been to move away from the N-model and toward M out of N, because of the complexity of systems and the difficulty of ensuring that the transition from fault-negative to fault-positive does not disrupt operations. | Historically, the trend has been to move away from the N-model and toward M out of N, because of the complexity of systems and the difficulty of ensuring that the transition from fault-negative to fault-positive does not disrupt operations. | ||

In computer systems, the SIMD, MISD and MIMD architectures facilitate the implementation of the fault tolerance systems by multiple instruction streams or multiple data streams or both. Fault tolerance on computations can be implemented by multiple processors (likely with different architectures) executing the algorithms on the same set of data. The output of each processor is compared with that of the others and M out of N majority voting method is used to determine the faulty processor. Thus MISD architecture is utilized to get the fault tolerance on critical computations. | In computer systems, the [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Single_Instruction.2C_Multiple_Data_streams_.28SIMD.29 SIMD], [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Multiple_Instructions.2C_Single_Data_stream_.28MISD.29 MISD] and [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Multiple_Instruction.2C_Multiple_Data_streams_.28MIMD.29 MIMD] architectures facilitate the implementation of the fault tolerance systems by multiple instruction streams or multiple data streams or both. Fault tolerance on computations can be implemented by multiple processors (likely with different architectures) executing the algorithms on the same set of data. The output of each processor is compared with that of the others and M out of N majority voting method is used to determine the faulty processor. Thus MISD architecture is utilized to get the fault tolerance on critical computations. | ||
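A minimal sketch of M out of N majority voting is given below. It assumes the processor outputs are directly comparable values (real systems typically compare within a tolerance), and the function name `vote` and its return format are hypothetical rather than taken from any cited system.

```python
from collections import Counter


def vote(outputs, m):
    """Return (agreed_value, faulty_indices) if at least m outputs agree.

    outputs -- results computed by the N redundant processors on the same data
    m       -- minimum number of matching outputs required to accept a value
    """
    value, count = Counter(outputs).most_common(1)[0]
    if count < m:
        raise RuntimeError("no value reached the required agreement")
    # Any processor that disagrees with the accepted value is flagged as faulty.
    faulty = [i for i, out in enumerate(outputs) if out != value]
    return value, faulty


print(vote([42, 42, 41], m=2))  # the third processor disagrees with the majority
```

With N = 3 and M = 2, one faulty processor can be masked and identified; if all three disagree, the sketch raises an error instead of guessing.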

There are various examples of the [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Multiple_Instructions.2C_Single_Data_stream_.28MISD.29 MISD architecture] being used as a fault-tolerant architecture. The major examples are flight control systems, nuclear power plants, satellite systems, super collider experiment systems, etc. Here, the flight control system is explained as an example of the [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#Multiple_Instructions.2C_Single_Data_stream_.28MISD.29 MISD architecture].

====The Flight Control System – MISD Example for fault tolerance====

A [http://en.wikipedia.org/wiki/Fly-by-wire Fly-By-Wire] system replaces manual flight control with an electronic control interface. The movements of the flight controls in the cockpit are converted to electronic signals and transmitted to the actuators by wires. The control computers use feedback from the sensors to compute and control the movement of the actuators so that they provide the expected response. These computers also stabilize the aircraft and perform other tasks without the knowledge of the pilot. Flight control systems must meet extremely high levels of accuracy and functional integrity.

The flight control system contains redundant flight control computers. If one of the flight control computers crashes, gets damaged or is affected by electromagnetic pulses, the other computers can overrule the faulty one, and hence the flight of the aircraft is unharmed. [[Image:fig13.png|thumb|right|250px|Figure 13: Architecture of triple redundant 777 primary flight computer [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1c_dm#cite_note-7 6]]]The number of redundant flight control computers is generally more than two, so that any computer whose results disagree with the others is judged to be faulty and is either ignored or rebooted.[[Image:fig14.png|thumb|right|250px|Figure 14: PFC with instruction and data streams]]

====Multiple Processors Implementation in Boeing 777<ref>http://www.citemaster.net/getdoc/8767/R8.pdf Y.C. (Bob) Yeh, Boeing Commercial Airplane Group, "Triple-Triple Redundant 777 Primary Flight Computer" </ref>====

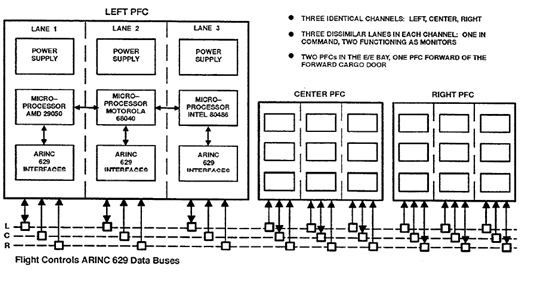

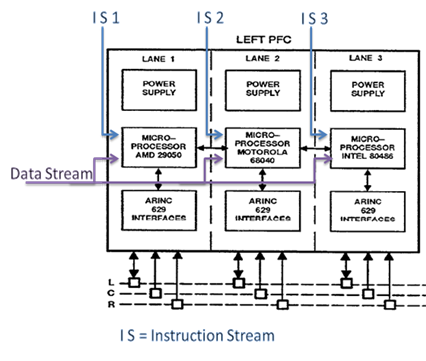

In modern flight control systems, the redundant flight control computations are carried out by multiprocessor systems. The triple redundant 777 primary flight computer has the architecture shown in [http://expertiza.csc.ncsu.edu/wiki/index.php/File:Fig13.png Figure 13].


The system has three primary flight control computers, each of them having three lanes with different processors. The flight control program is compiled for each of the processors, which get their input data from the same data bus but drive their output on individual control buses. Each processor therefore executes different instructions, but all of them process the same data. This makes the system a well-suited example of the Multiple Instruction Single Data (MISD) architecture.

The three processors selected for the flight control system of the [http://en.wikipedia.org/wiki/Boeing_777 Boeing 777] were the [http://en.wikipedia.org/wiki/Intel_80486 Intel 80486], [http://en.wikipedia.org/wiki/Motorola_68040 Motorola 68040] and [http://en.wikipedia.org/wiki/AMD_Am29000 AMD 29050]. The dissimilar processors lead to dissimilar interface hardware circuits and compilers. Each lane of the flight control computer is data synchronized with the other lanes so that all of the lanes read the same frame of data from the flight sensors. As the outputs of the lanes can differ, the median value of the outputs is used to select the lane output to be considered. The lane whose median-value-select hardware is selected is said to be in “command mode”, whereas the other lanes are said to be in “monitoring mode”. The command lane receives the data from the other Primary Flight Computer (PFC) lanes and performs a median select of the outputs. This provides a fault-blocking mechanism that acts before fault detection and identification by the cross-lane monitoring system. Thus, the MISD based multi-computer architecture is capable of detecting generic errors in compilers or in complex hardware devices, providing assurance beyond reasonable doubt of the dependability of the Fly-By-Wire system.

The system described above clearly has individual instruction streams: the architecture of each processor is different, so the instruction sets and the instruction streams differ as well. The processors have frame-synchronized input data, which means they work on the same set of data, fed from a single data stream. Thus the flight control system can be classified under the MISD architecture.


=='''References'''==

<references/>

=='''Glossary'''==

*'''CMU''': Carnegie Mellon University
*'''CU''': Control Unit
*'''DS''': Data Stream
*'''Fly-By-Wire''': System that replaces the conventional manual flight controls of an aircraft with an electronic interface
*'''FPGA''': Field Programmable Gate Array
*'''Heterogeneous System''': A multiprocessor system with different kinds of processors
*'''Homogeneous System''': A multiprocessor system with the same kind of processors
*'''ILP''': Instruction Level Parallelism
*'''IS''': Instruction Stream
*'''MIMD''': Multiple Instruction Multiple Data
*'''MISD''': Multiple Instruction Single Data
*'''PE''': Processing Element
*'''PFC''': Primary Flight Computer
*'''SAPO''': Short for Samočinný počítač
*'''SIMD''': Single Instruction Multiple Data
*'''SISD''': Single Instruction Single Data
*'''VLSI''': Very Large Scale Integration

Latest revision as of 02:51, 7 February 2012

Overview

This wiki article explores the Multiple Instruction Single Data (MISD) architecture of multiprocessors as classified by Flynn’s Taxonomy. The article starts with a description of Flynn’s Taxonomy and its classification, followed by the MISD architecture and its implementations. It also discusses authors' and researchers' comments on real-world examples of the MISD architecture and ends by providing examples of the architecture.

Multi Processor Systems

The performance of a single-processor system is generally limited by the frequency at which it operates and the amount of Instruction Level Parallelism (ILP) it can exploit. Uniprocessor performance gains slowed because of the difficulty of running processors at higher frequencies and the diminishing returns from exploiting ILP. Thus, multiprocessor systems became popular in applications such as servers, graphics-intensive tasks and supercomputers.

A multiprocessor system uses two or more processing elements within a single system. Multiple tasks can be executed in parallel on these processing elements, depending on the type of the system. The system can have the same kind of processing elements (Homogeneous System) or different kinds of processing elements supporting different types of tasks (Heterogeneous System).

Multiprocessor systems are characterized by the number of instruction streams and the number of data streams the system has. Flynn’s Taxonomy gives the characterization of multiprocessor systems.

Flynn’s Taxonomy of Parallel Computers<ref>http://en.wikipedia.org/wiki/Flynn's_taxonomy</ref><ref>http://www.phy.ornl.gov/csep/ca/node11.html</ref>

Flynn defined the taxonomy of parallel computers [Flynn, 1972] based on the number of instruction streams and data streams.

• An Instruction stream is a sequence of instructions followed from a single program counter

• A Data stream is an address in memory which the instruction operates on.

A control unit fetches instructions from a single program counter, decodes them, and issues them to the processing element. The processing element is assumed to be a functional unit. Instruction and data are both supplied from the memory.

Flynn defined four classifications, based upon the number of concurrent instruction (or control) and data streams available in the architecture:<ref>https://computing.llnl.gov/tutorials/parallel_comp/#Flynn</ref>

Single Instruction, Single Data stream (SISD)

SISD (single instruction, single data) is a term referring to a computer architecture in which a single processor, a uniprocessor, executes a single instruction stream, to operate on data stored in a single memory. Even though there is only one stream of instructions, parallelism between the instructions from the stream can be exploited when the instructions are independent from one another. This corresponds to the von Neumann architecture.

At the architectural level, it is a sequential computer that exploits no parallelism across instruction or data streams. A single control unit (CU) fetches a single Instruction Stream (IS) from memory. The CU then generates the appropriate control signals to direct a single processing element (PE) to operate on a single Data Stream (DS), i.e. one operation at a time.

Single Instruction, Multiple Data streams (SIMD)

SIMD is a parallel architecture in which a single instruction operates on multiple data. An example of SIMD architectures can be found in vector processors. SIMD is known for its efficiency in terms of the instruction count needed to perform a computation task.

A major advantage of SIMD systems is that they typically include only those instructions that can be applied to all of the data in one operation. In other words, if the SIMD system works by loading eight data points at once, the add operation applied to the data happens to all eight values at the same time. Although the same is true for any superscalar processor design, the level of parallelism in a SIMD system is typically much higher. The major drawback is that SIMD systems have large register files, which increase power consumption and chip area.

Multiple Instructions, Single Data stream (MISD)

MISD (multiple instruction, single data) is an architecture in which multiple processing elements execute from different instruction streams, and data is passed from one processing element to the next. It is a type of parallel computing architecture where many functional units perform different operations on the same data.

Pipeline architectures belong to this type, though a purist might say that the data is different after processing by each stage in the pipeline. Fault-tolerant computers executing the same instructions redundantly in order to detect and mask errors, in a manner known as task replication, may be considered to belong to this type. Not many instances of this architecture exist, as MIMD and SIMD are often more appropriate for common data parallel techniques. Specifically, they allow better scaling and use of computational resources than MISD does.

However, one prominent example of MISD in computing is the Space Shuttle flight control computers. Another example of an MISD machine is the systolic array, such as the CMU iWarp [BORKAR et al., 1990]. All the elements in this array are controlled by a global clock. On each cycle, an element reads a piece of data from one of its neighbors, performs a simple operation (e.g. adds the incoming element to a stored value), and prepares a value to be written to a neighbor on the next step.

Multiple Instruction, Multiple Data streams (MIMD)

MIMD (multiple instructions, multiple data) is a technique employed to achieve parallelism. Machines using MIMD have a number of processors that function asynchronously and independently. At any time, different processors may be executing different instructions on different pieces of data. MIMD architectures may be used in a number of application areas such as computer-aided design/computer-aided manufacturing, simulation, modeling, and as communication switches. MIMD machines can be of either shared memory or distributed memory categories. Shared memory machines may be of the bus-based, extended, or hierarchical type. Distributed memory machines may have hypercube or mesh interconnection schemes.
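The four classes described above differ only in whether the machine has one or many instruction streams and one or many data streams. A minimal sketch of that mapping follows; the function name `flynn_class` and its integer parameters are illustrative assumptions, not part of Flynn's paper.

```python
def flynn_class(instruction_streams: int, data_streams: int) -> str:
    """Return the Flynn class name for the given stream counts.

    Illustrative helper (hypothetical name): one stream maps to "S",
    more than one stream maps to "M".
    """
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    instr = "S" if instruction_streams == 1 else "M"
    data = "S" if data_streams == 1 else "M"
    return f"{instr}I{data}D"


if __name__ == "__main__":
    # A uniprocessor, a vector unit, three dissimilar lanes on one
    # data bus, and a four-core multiprocessor, respectively.
    for counts in [(1, 1), (1, 8), (3, 1), (4, 4)]:
        print(counts, flynn_class(*counts))
```

The sketch deliberately ignores the borderline cases discussed later in the article, such as whether a systolic array carries one data stream or several.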

Implementations of MISD architecture

Systolic Array

A systolic array is an arrangement of processors in an array where data flows synchronously across the array between neighbors, usually with different data flowing in different directions. Each Processor at each step takes in data from one or more neighbors, processes it and, in the next step, outputs results in the opposite direction.

The systolic array paradigm, data-stream-driven by data counters, is the counterpart of the von Neumann paradigm, instruction-stream-driven by a program counter. Because a systolic array usually sends and receives multiple data streams, and multiple data counters are needed to generate these data streams, it supports data parallelism. The name derives from analogy with the regular pumping of blood by the heart.<ref>http://en.wikipedia.org/wiki/Systolic_array</ref>

Type of Systolic Arrays<ref>http://home.engineering.iastate.edu/~zambreno/classes/cpre583/documents/JohHur93A.pdf General Purpose Systolic Arrays </ref>

Special-purpose systolic array

A special-purpose systolic array is an array of hardwired systolic processing elements tailored for a specific application. Typically, many tens or hundreds of cells fit on a single chip. One of the major applications of special-purpose systolic arrays is matrix operations. Figure 6 illustrates the algorithm for the sum of a scalar product, computed in a single systolic element. Here, the a’s and b’s are synchronously shifted through the processing element and exit it unmodified, so that they are available to the next element. The sum of the products is then shifted out of the accumulator.
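The behaviour of the single systolic element described above can be sketched in a few lines. This is a software model under simple assumptions (one pair of operands per clock step, integer data); the class name `SystolicCell` and the helper `scalar_product` are hypothetical names chosen for illustration.

```python
class SystolicCell:
    """Model of one multiply-accumulate systolic element."""

    def __init__(self):
        self.acc = 0  # running sum of products held in the accumulator

    def step(self, a, b):
        """Consume one (a, b) pair on a clock step.

        The product is added to the accumulator, and a and b are
        returned unmodified so a neighbouring cell could consume them.
        """
        self.acc += a * b
        return a, b


def scalar_product(a_values, b_values):
    """Shift two equal-length sequences through a single cell."""
    if len(a_values) != len(b_values):
        raise ValueError("sequences must have the same length")
    cell = SystolicCell()
    for a, b in zip(a_values, b_values):
        cell.step(a, b)
    return cell.acc  # the sum of products shifted out at the end


if __name__ == "__main__":
    print(scalar_product([1, 2, 3], [4, 5, 6]))
```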

This principle easily extends to a matrix product as shown in Figure 7 and the animation created with the help from the link. The only difference between single-element processing and array processing is that the latter delays each additional column and row by one cycle so that the columns and rows line up for a matrix multiply. The product matrix is shifted out after completion of processing.
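The one-cycle delay per additional row and column can be made concrete with a small simulation. The sketch below assumes an output-stationary arrangement, in which cell (i, j) accumulates element (i, j) of the product, rows of A enter from the left and columns of B enter from the top. That arrangement and the name `systolic_matmul` are assumptions made for illustration, not a description of any specific hardware.

```python
def systolic_matmul(A, B):
    """Simulate an n x m grid of multiply-accumulate cells computing A @ B.

    Row i of A is delayed by i cycles and column j of B by j cycles,
    so matching operands meet in cell (i, j) on the same cycle.
    """
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]    # accumulator of each cell
    a_reg = [[0] * m for _ in range(n)]  # a value currently held by each cell
    b_reg = [[0] * m for _ in range(n)]  # b value currently held by each cell

    for t in range(n + m + k - 2):       # cycles until the last product is formed
        # Visit cells from the far corner so each cell still sees the
        # previous-cycle value of its left and upper neighbours.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:               # left edge: skewed input from A
                    idx = t - i
                    a_in = A[i][idx] if 0 <= idx < k else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:               # top edge: skewed input from B
                    idx = t - j
                    b_in = B[idx][j] if 0 <= idx < k else 0
                else:
                    b_in = b_reg[i - 1][j]
                a_reg[i][j], b_reg[i][j] = a_in, b_in
                acc[i][j] += a_in * b_in
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Zeros are fed in before and after the real operands, which is how the skew is modelled; they contribute nothing to the accumulators.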

General-purpose systolic array

It is an array of systolic processing elements, which gets adapted to a variety of applications via programming or reconfiguration. Array topologies can be either programmable or reconfigurable. Likewise, array cells are either programmable or reconfigurable. This is referred to as Systolic topologies.

A programmable systolic architecture is a collection of interconnected, general-purpose systolic cells, each of which is either programmable or reconfigurable. Programmable systolic cells are flexible processing elements specially designed to meet the computational and I/O requirements of systolic arrays. Programmable systolic architectures can be classified according to their cell inter-connection topologies: fixed or programmable.

Reconfigurable systolic architectures capitalize on FPGA technology, which allows the user to configure a low-level logic circuit for each cell. Reconfigurable arrays also have either fixed or reconfigurable cell interconnections. The user configures an array’s topology by means of a switch lattice. Any general-purpose array that is not conventionally programmable is usually considered reconfigurable. All FPGA re-configuring is static due to technology limitations.

Hybrid models make use of both VLSI and FPGA technology. They usually consist of VLSI circuits embedded in an FPGA-reconfigurable interconnection network.

Programmable Systolic Array

It is an array of programmable systolic elements that operates in either SIMD or MIMD fashion. Either the array's interconnect or each processing unit is programmable, and a program controls the dataflow through the elements. Programmable systolic arrays are programmable at either a high level or a low level. At either level, programmable arrays can be categorized as either SIMD or MIMD machines.

- SIMD (Single Instruction Multiple Data)

In SIMD systolic machines (Figure 8) the host workstation preloads a controller and a memory, which are external to the array, with the instructions and data for the application. The systolic cells store no programs or instructions. As soon as the workstation enables execution, the controller sequences through the external memory, thereby delivering instructions and data to the systolic array. Within the array, instructions are broadcast and all cells perform the same operation on different data. Adjacent cells may share memory, but generally no memory is shared by the entire array. After exiting the array, data is collected in the external buffer memory.

This architecture can also be classified based on the number of instruction and data streams as Single Instruction Single Data (SISD) architecture as all the PEs are fed from the same instruction stream and the single data stream passes through all the PEs.

- MISD (Multiple Instruction Single Data)

The workstation downloads a program to each MISD (Figure 9) systolic cell. Each cell may be loaded with a different program, or all the cells in the array may be loaded with the same program. Each cell's architecture is somewhat similar to the conventional von Neumann architecture: It contains a control unit, an ALU, and local memory. MIMD systolic cells have more local memory than their SIMD counterparts to support the von Neumann-style organization.

This architecture is defined as a Multiple Instruction Multiple Data (MIMD) architecture in reference 5. The architecture has multiple instruction streams for the PEs and a single data stream passing through all the PEs. Thus, it can also be defined as a Multiple Instruction Single Data (MISD) architecture. The classification of systolic array configurations is controversial, as explained in section 4.1.2.

Reconfigurable Systolic Array

It is an array of systolic elements that can be programmed at the lowest level. Recent gate density advances in FPGA technology have produced a low-level, reconfigurable systolic array architecture that bridges the gap between special-purpose arrays and the more versatile, programmable general-purpose arrays. The FPGA architecture is unusual because a single hardware platform can be logically reconfigured as an exact duplicate of a special-purpose systolic array.

The RSA circuit design is based on systolic array architecture consisting of PEs interconnected via SWs as depicted in Figure 10. The homogeneous characteristic of the Reconfigurable Systolic Array (RSA) architecture, where each reconfigurable processing element (PE) cell is connected to its nearest neighbors via configurable switch (SW) elements, enables array expansion for parallel processing and facilitates time sharing computation of high-throughput data by individual PEs. Both the PEs and SWs can be reconfigured dynamically with the former as an arithmetic processor and the latter as a flexible router linking the neighboring PE cells. The RSA shifts reconfiguration and input signals into the PEs and SWs on separate data bus which enables the circuit to continue its operation while the reconfiguration is in process.

Architecture of systolic arrays as against MISD architecture

From the systolic array configurations described above, it can be seen that they generally have multiple processing elements, each executing different instructions from its own dedicated instruction stream. A single data stream connects the adjacent PEs. Thus, a systolic array can be defined as an MISD architecture.

Many authors argue, however, that the data read as input by one processing element is the processed output of the adjacent PE, so the data stream cannot be considered single: the data paths do not carry the same data to all the PEs. Figure 11 shows the difference between the data stream for systolic arrays and for the MISD architecture. On this view, the systolic array should be considered a “Multiple Data” architecture and not a Single Data architecture.

Fault Tolerant Systems<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#Types_of_fault_tolerance</ref>

Fault-tolerant systems are designed to handle possible failures in software, hardware or interfaces. Hardware faults include hard disk failures, input or output device failures, etc., and software and interface faults include driver failures, operator errors, installation of unexpected software, etc. Hardware faults can be detected and identified by implementing redundant hardware and multiple backups. Software faults can be tolerated by removing program errors, by executing the software redundantly, or by implementing small programs that take over the tasks that crash or generate errors.

History:<ref>http://en.wikipedia.org/wiki/Fault-tolerant_computer_system#History</ref>

The first known fault-tolerant computer was SAPO, built in 1951 in Czechoslovakia by Antonin Svoboda. Its basic design was magnetic drums connected via relays, with a voting method of memory error detection.

Fault-tolerant computers separated into three distinct categories:

- machines that would last a long time without any maintenance

- computers that were very dependable but required constant monitoring

- computers with a high amount of runtime which would be under heavy use

Voting was another initial method with multiple redundant backups operating constantly and checking each other's results and reporting the component with non-matching result as faulty. This is called M out of N majority voting.
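A minimal sketch of M out of N majority voting follows. It assumes that the redundant units return exactly comparable (hashable) results and that M is a strict majority of N; the function name `m_out_of_n_vote` is hypothetical.

```python
from collections import Counter


def m_out_of_n_vote(results, m):
    """Return (agreed_value, faulty_indices) for N redundant results.

    Requires m to be a strict majority of N so that at most one value
    can reach the threshold. Raises RuntimeError if no value does.
    """
    n = len(results)
    if 2 * m <= n or m > n:
        raise ValueError("m must satisfy n/2 < m <= n")
    value, votes = Counter(results).most_common(1)[0]
    if votes < m:
        raise RuntimeError("no value reached the required agreement")
    # Any unit that disagrees with the agreed value is reported as faulty.
    faulty = [i for i, r in enumerate(results) if r != value]
    return value, faulty


if __name__ == "__main__":
    # Three units, two must agree; the third unit is flagged.
    print(m_out_of_n_vote([42, 42, 41], m=2))
```

Real voters often compare analog or floating-point values within a tolerance rather than by equality; that refinement is left out here.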

Historically, designs have moved away from the N-model and toward M out of N voting, because the growing complexity of systems made it difficult to ensure that the transition from a fault-negative to a fault-positive state did not disrupt operations.

In computer systems, the SIMD, MISD and MIMD architectures facilitate the implementation of fault-tolerant systems through multiple instruction streams, multiple data streams, or both. Fault tolerance on computations can be implemented by multiple processors (likely with different architectures) executing the algorithms on the same set of data. The output of each processor is compared with that of the others, and the M out of N majority voting method is used to determine the faulty processor. Thus the MISD architecture is used to provide fault tolerance for critical computations.
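The idea of several dissimilar units processing the same data can be sketched in software. In the example below, three differently written routines stand in for processors with different instruction streams; all three functions, including the deliberately faulty one, and the name `run_redundant` are hypothetical and serve only as an illustration.

```python
from collections import Counter


def sum_squares_loop(data):
    """First unit: explicit loop."""
    total = 0
    for x in data:
        total += x * x
    return total


def sum_squares_builtin(data):
    """Second unit: same function, different instruction sequence."""
    return sum(x * x for x in data)


def sum_squares_faulty(data):
    """Third unit: deliberately wrong, modelling a faulty processor."""
    return sum(x * x for x in data) + 1


def run_redundant(units, data):
    """Feed the same data to every unit and majority-vote the outputs."""
    outputs = [unit(data) for unit in units]
    value, votes = Counter(outputs).most_common(1)[0]
    if 2 * votes <= len(outputs):
        raise RuntimeError("no majority among the units")
    faulty = [u.__name__ for u, out in zip(units, outputs) if out != value]
    return value, faulty


if __name__ == "__main__":
    units = [sum_squares_loop, sum_squares_builtin, sum_squares_faulty]
    print(run_redundant(units, [1, 2, 3]))
```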

There are various examples of the MISD architecture being used as a fault-tolerant architecture. The major examples are flight control systems, nuclear power plants, satellite systems, super collider experiment systems, etc. Here, the flight control system is explained as an example of the MISD architecture.

The Flight Control System – MISD Example for fault tolerance

A Fly-By-Wire system replaces manual flight control with an electronic control interface. The movements of the flight controls in the cockpit are converted to electronic signals and transmitted to the actuators by wires. The control computers use feedback from the sensors to compute and control the movement of the actuators so that they provide the expected response. These computers also stabilize the aircraft and perform other tasks without the knowledge of the pilot. Flight control systems must meet extremely high levels of accuracy and functional integrity.

The flight control system contains redundant flight control computers. If one of the flight control computers crashes, gets damaged or is affected by electromagnetic pulses, the other computers can overrule the faulty one, and hence the flight of the aircraft is unharmed.

The number of redundant flight control computers is generally more than two, so that any computer whose results disagree with the others is judged to be faulty and is either ignored or rebooted.

Multiple Processors Implementation in Boeing 777<ref>http://www.citemaster.net/getdoc/8767/R8.pdf Y.C. (Bob) Yeh, Boeing Commercial Airplane Group, "Triple-Triple Redundant 777 Primary Flight Computer" </ref>

In modern flight control systems, the redundant flight control computations are carried out by multiprocessor systems. The triple redundant 777 primary flight computer has the architecture shown in Figure 13.

The system has three primary flight control computers, each of them having three lanes with different processors. The flight control program is compiled for each of the processors, which get their input data from the same data bus but drive their output on individual control buses. Each processor therefore executes different instructions, but all of them process the same data. This makes the system a well-suited example of the Multiple Instruction Single Data (MISD) architecture.

The three processors selected for the flight control system of the Boeing 777 were the Intel 80486, Motorola 68040 and AMD 29050. The dissimilar processors lead to dissimilar interface hardware circuits and compilers. Each lane of the flight control computer is data synchronized with the other lanes so that all of the lanes read the same frame of data from the flight sensors. As the outputs of the lanes can differ, the median value of the outputs is used to select the lane output to be considered. The lane whose median-value-select hardware is selected is said to be in “command mode”, whereas the other lanes are said to be in “monitoring mode”. The command lane receives the data from the other Primary Flight Computer (PFC) lanes and performs a median select of the outputs. This provides a fault-blocking mechanism that acts before fault detection and identification by the cross-lane monitoring system. Thus, the MISD based multi-computer architecture is capable of detecting generic errors in compilers or in complex hardware devices, providing assurance beyond reasonable doubt of the dependability of the Fly-By-Wire system.
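Median value selection can be illustrated with a short sketch. It is a simplified software model, not the 777 implementation: the function names, the sample lane outputs and the `tolerance` threshold used for cross-lane monitoring are all assumptions made for the example.

```python
import statistics


def median_select(lane_outputs):
    """Pick the middle value of the lane outputs.

    With three lanes, a single wildly wrong output can never be the
    median, so it is blocked before any fault is formally identified.
    """
    return statistics.median_low(lane_outputs)


def cross_lane_monitor(lane_outputs, tolerance):
    """Return (selected_value, suspect_lane_indices).

    A lane is flagged when its output differs from the selected value
    by more than the (hypothetical) tolerance.
    """
    selected = median_select(lane_outputs)
    suspects = [
        i for i, value in enumerate(lane_outputs)
        if abs(value - selected) > tolerance
    ]
    return selected, suspects


if __name__ == "__main__":
    # Lanes 0 and 1 roughly agree; lane 2 has produced a bad command.
    print(cross_lane_monitor([10.0, 10.1, 55.0], tolerance=0.5))
```

Using `median_low` means the selected value is always one that a lane actually produced, rather than an average of two outputs.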

The system described above clearly has individual instruction streams: the architecture of each processor is different, so the instruction sets and the instruction streams differ as well. The processors have frame-synchronized input data, which means they work on the same set of data, fed from a single data stream. Thus the flight control system can be classified under the MISD architecture.

References

<references/>

Glossary

- CMU: Carnegie Mellon University

- CU: Control Unit

- DS: Data Stream

- Fly-By-Wire: System that replaces the conventional manual flight controls of an aircraft with an electronic interface

- FPGA: Field Programmable Gate Array

- Heterogeneous System: A multiprocessor system with different kinds of processors

- Homogeneous System: A multiprocessor system with the same kind of processors

- ILP: Instruction Level Parallelism

- IS: Instruction Stream

- MIMD: Multiple Instruction Multiple Data

- MISD: Multiple Instruction Single Data

- PE: Processing Element

- PFC: Primary Flight Computer

- SAPO: Short for Samočinný počítač

- SIMD: Single Instruction Multiple Data

- SISD: Single Instruction Single Data

- VLSI: Very Large Scale Integration